Abstract

Additive manufacturing (AM) applications are rapidly expanding across multiple domains and are not limited to prototyping purposes. However, achieving flawless parts in medical, aerospace, and automotive applications is critical for the widespread adoption of AM in these industries. Since AM is a complex process consisting of multiple interdependent factors, deep learning (DL) approaches are adopted widely to correlate the AM process physics to the part quality. Typically, in AM processes, computer vision-based DL is performed by extracting the machine’s sensor data and layer-wise images through camera-based systems. This paper presents an overview of computer vision-assisted patch-wise defect localization and pixel-wise segmentation methods reported for AM processes to achieve error-free parts. In particular, these deep learning methods localize and segment defects in each layer, such as porosity, melt-pool regions, and spattering, during in situ processes. Further, knowledge of these defects can provide an in-depth understanding of fine-tuning optimal process parameters and part quality through real-time feedback. In addition to DL architectures to identify defects, we report on applications of DL extended to adjust the AM process variables in closed-loop feedback systems. Although several studies have investigated deploying closed-loop systems in AM for defect mitigation, specific challenges exist due to the relationship between inter-dependent process parameters and hardware constraints. We discuss potential opportunities to mitigate these challenges, including advanced segmentation algorithms, vision transformers, data diversity for improved performance, and predictive feedback approaches.


1 Introduction

Additive manufacturing (AM) is a layered material addition process with widespread applications in the aerospace, automotive, and medical industries. AM processes such as laser powder bed fusion (PBF) [1], directed energy deposition (DED) [2], binder jetting [3], and fused filament fabrication [4] are adopted widely in these industries. In AM, the CAD model of the part is first converted to a B-rep file format and sliced as per the user-specified slice thickness. The sliced geometries serve as inputs to generate the G-codes for the additive manufacturing process. A specific AM modality is chosen based on a part’s material or functional requirements. As AM is gaining more traction in commercial manufacturing environments and highly specialized applications, there is a growing need to formulate methods that improve AM part quality with reduced lead time and costs.

In laser-based additive manufacturing processes such as PBF and DED, the laser energy fuses and binds the metal powder to build the part. The continuous heating–cooling cycles result in non-uniform temperature distributions, residual stresses, inconsistent ultimate tensile strength, and geometrical distortions [5,6,7]. The balling and keyholing phenomena also affect the overall part density, resulting in porosity issues [8]. Balling occurs due to incomplete sintering of powder particles or powder coalescing into larger droplets [9]. Keyhole pores form when bubbles are trapped by the solidification front and have been shown to be sensitive to AM process parameters such as laser scanning speed [10, 11]. Eventually, the cavities in such regions promote crack propagation, affecting the part’s quality. Because these defects are inherent to the process, monitoring them becomes a critical aspect of AM. Although conventional non-destructive defect inspection strategies such as infrared testing [12], ultrasonic evaluation based on acoustic vibrations [13], and penetrant testing [14] exist, computer vision (CV) inspection approaches have been widely reported in the AM community in recent years. This trend is attributed to improved computational algorithms, ease of hardware access, and the ability of CV systems to be deployed directly on the machines and provide real-time feedback to the user.

In AM, computer vision and image processing methods are applied for segmenting in situ infrared (IR), X-ray computed tomography (XCT), high-dynamic range (HDR), or ultrasonic images. In [15], the authors developed segmentation approaches for the melt pool region using IR images. They incorporate thresholding and connected components algorithms to find the defects’ region of interest (ROI) within the plume, laser-heated zone, or spatters. The segmented region is used to correlate the pixel area with the mean temperature intensity of the ROI to address unstable melting conditions in laser PBF (LPBF). In this case, the IR thermal images are the input from which the mean intensity is calculated. In contrast, the authors of [16] implemented an adaptive thresholding algorithm to segment XCT scan images for porosity analysis in the LPBF process. This work addresses the cupping and streaking artifacts from XCT beam hardening, especially in high-density metal AM parts. XCT is a non-destructive inspection technique in which a relaxed state of non-ionized electrons from radiation represents defects [17]. This element composition information is extracted to analyze pore variations resulting from the lack of fusion. XCT also supports volume-based measurements and can integrate seamlessly with 3D segmentation models. In general, XCT and ultrasonic imaging techniques are utilized for porosity detection, while IR images are used for segmenting melt-pool regions. Selecting the correct imaging modality is critical for CV-based defect detection.
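The thresholding and connected-components step described above can be sketched as follows. This is a minimal illustration and not the implementation used in [15]: the function name `segment_hot_regions`, the 4-connectivity choice, the toy `frame`, and the threshold value are all assumptions made for the example.

```python
from collections import deque


def segment_hot_regions(image, threshold):
    """Label 4-connected regions of pixels brighter than `threshold`.

    `image` is a list of rows of intensities (a stand-in for one IR frame).
    Returns one dict per region with its pixel area and mean intensity.
    """
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    regions = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] <= threshold:
                continue
            # Breadth-first flood fill over one connected component.
            queue = deque([(r, c)])
            seen[r][c] = True
            values = []
            while queue:
                y, x = queue.popleft()
                values.append(image[y][x])
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx]
                            and image[ny][nx] > threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            regions.append({
                "area": len(values),
                "mean_intensity": sum(values) / len(values),
            })
    return regions


# Hypothetical 4 x 5 frame with one three-pixel hot zone and one isolated pixel.
frame = [
    [10, 10, 10, 10, 10],
    [10, 90, 80, 10, 10],
    [10, 70, 10, 10, 60],
    [10, 10, 10, 10, 10],
]
regions = segment_hot_regions(frame, threshold=50)
```

Each returned region carries the two quantities the text correlates, pixel area and mean intensity, so a monitoring routine could track them layer by layer.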

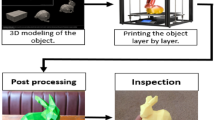

Traditional CV-based inspection methods, such as thresholding [18] or region-growing techniques [19], assume significant user expertise in image processing concepts, which is not viable in all industrial settings. In contrast, deep learning CV methods, mainly supervised DL for surface defect classification, have constant learning and automation capabilities [20]. In supervised deep learning, objects are initially labeled by highlighting them with a bounding box or a free-form shape, and the labeled images serve as the input for training DL networks. Next, the intermediate layers extract feature information (such as shapes, straight lines, corners, and edges) or pixel intensity from these images. Finally, the pooling layers retain the most relevant information, from which the network predicts the defect class and location on previously unseen images. Figure 1 shows applications of deep learning in different stages of AM. The convolutional neural network (CNN) is a popular DL architecture that automatically evaluates features using supervised learning for in situ defect detection and monitoring. Such applications of DL for anomaly segmentation have been implemented in a wide range of AM surface defect classification tasks since the introduction of AlexNet in 2012 [21]. Segmentation primarily highlights bounding boxes or pixels in an image corresponding to a user-defined category. In AM, image segmentation models detect porosities, hotspots, spatter, and melt-pool defects. The model’s performance is evaluated based on the number of pixels classified correctly in comparison to the ground truth labels. Appendix Table 6 lists some of the performance indicators for segmentation. The mean average precision, \(\text{mAP}\), is a key metric for patch-wise localization, and the mean Intersection over Union, \(\text{IoU}\), is a key metric for pixel-wise segmentation tasks. Patch-wise localization outputs the bounding box of the defect, while pixel-wise segmentation outputs the exact segmented contour or localized boundary.
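To make the two evaluation settings concrete, the sketch below computes Intersection over Union for a pair of bounding boxes (the overlap measure underlying mAP) and for a pair of binary masks (the pixel-wise case), using the standard definition IoU = |A ∩ B| / |A ∪ B|. The function names, the box format `(x_min, y_min, x_max, y_max)`, and the example values are assumptions for illustration; the notation in Appendix Table 6 may differ.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    overlap_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    overlap_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = overlap_w * overlap_h
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1])
             - intersection)
    return intersection / union if union > 0 else 0.0


def mask_iou(pred, truth):
    """IoU of two binary masks given as equally sized lists of 0/1 rows."""
    intersection = union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            intersection += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # Two empty masks agree perfectly by convention.
    return intersection / union if union else 1.0


# Hypothetical predicted and ground-truth defect boxes and masks.
patch_score = box_iou((0, 0, 2, 2), (1, 1, 3, 3))
pixel_score = mask_iou([[1, 1, 0], [0, 1, 0]],
                       [[1, 0, 0], [0, 1, 1]])
```

Averaging `mask_iou` over classes gives a mean IoU, while mAP additionally requires ranking detections by confidence and thresholding `box_iou`, which is omitted here.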

In addition to obtaining segmentation maps, correlating these results with the input design and back to the process variables can optimize the process parameters and minimize future defects. Figure 2 shows the high-level overview of the process flow for DL segmentation in closed-loop feedback for AM discussed in this paper. This concept serves as a basis for several AM process advisory systems since it incorporates design and manufacturing process variables, quality metrics, and data-driven DL recommendations. In this paper, we provide a comprehensive overview of research conducted in applying DL segmentation for in situ AM process defect detection and its extension to a feedback loop for optimizing the AM process parameters.

The paper is arranged as follows: Sect. 2 presents a survey of existing research on DL-based image segmentation in AM. We discuss its classification based on the type of algorithm (patch-wise and pixel-wise). Next, an overview of applications of DL beyond classification for a closed-loop system in AM is reported in Sect. 3. Section 4 discusses the future opportunities for advances in AM segmentation models, such as generative modeling and data augmentation strategies. Lastly, the conclusion is outlined in Sect. 5.

2 Deep learning-based defect localization and segmentation

Typically, in deep learning methods, defect localization aims to find the relative location of the defect in an input image through in situ analysis and is followed by defect segmentation that extracts the defect regions from the input image. As shown in Fig. 2, the bottom-right box describes two methods for DL segmentation: patch-wise (box-type) and pixel-wise segmentation (ROI-type). From an AM defect detection perspective, pixel-level segmentation provides a more accurate result for images captured against a stable background. In contrast, the bounding box prediction method provides good results for images captured against noisy backgrounds where pixel-level segmentation fails. Both methods originate from CNNs but have different neural network architectures. In general, a CNN contains three major elements:

i. A kernel box (2D or 3D) is a window that moves in a specific pattern across the image; the kernel box consists of a set of pixels that extracts key features for training
ii. Pooling that downsizes images by retaining key features
iii. An activation function that acts as a filter and maps the weighted sum of input nodes to output probabilistic distribution
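The three elements listed above can be sketched in plain Python as follows. This is a toy illustration under stated assumptions, not a trainable network: the kernel is a fixed, hand-picked vertical-edge filter rather than a learned one, softmax is chosen as the activation that yields a probability distribution, and all names and values are hypothetical.

```python
import math


def conv2d_valid(image, kernel):
    """Slide the kernel window over the image (stride 1, no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(len(image[0]) - kw + 1)]
            for r in range(len(image) - kh + 1)]


def max_pool2d(feature_map, size=2):
    """Downsize by keeping the maximum of each non-overlapping window."""
    return [[max(feature_map[r + i][c + j]
                 for i in range(size) for j in range(size))
             for c in range(0, len(feature_map[0]) - size + 1, size)]
            for r in range(0, len(feature_map) - size + 1, size)]


def softmax(scores):
    """Map raw scores to a probability distribution."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical 5 x 5 layer image with a vertical intensity edge.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
edge_kernel = [[-1, 1], [-1, 1]]

features = conv2d_valid(image, edge_kernel)   # 4 x 4 feature map
pooled = max_pool2d(features, size=2)         # 2 x 2 after pooling
probabilities = softmax(pooled[0])            # two scores -> probabilities
```

In a trained CNN the kernel weights are learned from labeled images, and many kernels and layers are stacked; the sketch only shows how each element transforms the data.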

The subsequent sections discuss reported studies on AM defect detection, such as porosity and keyholes, using four prominent DL localization and segmentation methods—bounding box detection (two-stage, one-stage) and pixel-level detection (semantic, instance).

2.1 Two-stage detection: faster R-CNN

Faster R-CNN is an advanced two-stage detection model popular for AM defect detection. In a two-stage approach, a set of regions of interest (ROIs) is proposed in the first stage and then passed to the CNN to classify and localize the defects in the second stage. Earlier R-CNN variants generate these proposals with a selective search algorithm. In faster R-CNN, the ROIs, also called region proposals, are instead obtained from a convolutional region proposal network, and overlapping proposals are pruned with greedy non-maximum suppression. The proposals are then processed together in the second stage for feature classification.

Shang et al. [22] have developed an improved faster R-CNN. Their weighted multi-scale fusion approach captures surface-level defects as well as the depth of keyhole defects for the SLM process, detecting defects such as insufficient powder, sag, scratch, and vibration fringe. Scime and Beuth [23] have proposed an advanced version, MsCNN, that prevents redundant grayscale images from being processed for CNN training through all the channels. Instead, they introduce multi-scale patches in RGB color channels that allow learning of contextual relationships related to powder bed disturbances and anomalies. Figure 3 shows the results obtained on a particular layer using the MsCNN approach. The accuracy of faster R-CNN is comparable to or even higher than that of one-stage defect detection models, such as the Single Shot Detection (SSD) model. However, the computational time for training is higher due to the two-stage process. For example, in [24], although faster R-CNN showed better accuracy than SSD in detecting a tray, a nozzle, and multiple AM parts in the camera’s field of view, its computational performance was only average.

Fig. 3 Multi-scale CNN (MsCNN) approach for identifying defects in a layer for powder-based AM process [23]

Although few studies focus on implementing only faster R-CNN, it has been utilized widely for comparative analysis with one-stage defect detection models such as You Only Look Once (YOLO) or SSD [25,26,27], discussed in the following subsection.

2.2 One-stage detection

One-stage detection performs the classification and localization of defects in a single forward pass. This DL framework combines the base CNN architecture and a separate detection framework to obtain patch-wise or region-wise defect classification and localization results. The base CNN architectures such as VGGNet [28], ResNet [29], or Darknet-53 [30] extract critical features to perform classification, and these feature vectors are passed to the detection framework, such as YOLO or SSD, to identify the object or defect location. From an AM defect identification standpoint, the single-stage methods eliminate the need for region proposals, saving computational time. Notable studies in AM that report one-stage defect recognition include algorithms that identify misalignment errors [25] and spatters observed near the melt-pool regions [31].

2.2.1 You only look once (YOLO)-based defect detection

The YOLO method divides an input image into a grid of subimage cells, each with its own output vector that contains an array of object classes and bounding box dimensions. These arrays are further passed on to the convolution layers to extract features. Due to the single-stage detection approach, YOLO (shown in Fig. 4a) performs faster classification than R-CNN (shown in Fig. 4c). YOLO has improved since its inception in 2015, with YOLO v9 [32] being the most advanced architecture in this category at the time of writing this paper. As shown in Fig. 4a, feature vectors are obtained using a base CNN network in the YOLO v4 architecture and further passed to the classifier to detect bounding regions. Lu et al. [25] have compared YOLO v4, SSD, and faster R-CNN algorithms for robot-based AM processes to detect abrasion and misalignment defects in continuous fiber reinforcement composites. YOLO v4 was selected as the final model due to its accuracy and efficiency. This model was further extended to closed-loop feedback and is discussed later in Sect. 3.
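The grid-cell idea can be illustrated with a short sketch that assigns a labeled defect box to the cell containing its center and builds that cell's target vector. The layout used here (center offsets, normalized width and height, an objectness flag, then a one-hot class array) follows the general style of the original YOLO formulation and is an assumption; the exact encoding differs between YOLO versions, and the function name and numbers are hypothetical.

```python
def encode_box_to_grid(box, image_size, grid_size, class_id, num_classes):
    """Assign a box to the grid cell holding its center.

    box: (x_center, y_center, width, height) in pixels.
    image_size: (image_width, image_height) in pixels.
    Returns (row, col, vector) where vector is
    [x_offset, y_offset, width, height, objectness, class one-hot...].
    """
    image_w, image_h = image_size
    x, y, w, h = box
    # Position of the center measured in grid-cell units.
    grid_x = x / image_w * grid_size
    grid_y = y / image_h * grid_size
    col = min(int(grid_x), grid_size - 1)
    row = min(int(grid_y), grid_size - 1)
    one_hot = [1.0 if k == class_id else 0.0 for k in range(num_classes)]
    vector = [grid_x - col, grid_y - row, w / image_w, h / image_h, 1.0]
    return row, col, vector + one_hot


# Hypothetical 448 x 448 layer image, 7 x 7 grid, three defect classes.
row, col, vector = encode_box_to_grid(
    box=(224, 100, 64, 32), image_size=(448, 448),
    grid_size=7, class_id=1, num_classes=3)
```

Because every cell predicts its vector in the same forward pass, no separate region-proposal stage is needed, which is the source of the speed advantage noted above.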

Fig. 4 YOLO v4, SSD, and faster R-CNN network architectures implemented for assessing defects in composite materials for robot-based AM process [25]

YOLO is also a popular approach to segment defects in Wire Arc AM (WAAM), also known as the wire arc-based DED process. WAAM uses metal wire as feedstock instead of metal powder. This popularity can be attributed to the following reasons:

i. YOLO architecture performed well for many small-sized defects on a near-similar colored background usually present in the WAAM process [31, 33].
ii. Compared to freeform labeling, drawing a bounding box would be a less complicated task for small-sized defects.
iii. Pixel-wise labeling may not provide a significant advantage for segmenting small-sized defects.

Wu et al. [30] have developed an improved YOLO v3 that classifies melt pool regions in WAAM. The advances in this work include a bounding box clustering algorithm, improved loss functions, and overall network architecture. In another WAAM process, Li et al. [33] have implemented a YOLO-attention mechanism based on YOLOv4 to effectively localize welds, surface pores, grooves, and slag inclusion in the similar-colored background. The work is validated for single-pass and multi-pass welds with its potential application in real-time defect detection in industrial settings.

2.2.2 Single shot multibox detection (SSD)

The SSD method performs similarly to YOLO; the differences lie in the base model and the way the bounding box regressor functions. In YOLO v3, the base model is Darknet-53, and the non-maximum suppression (NMS) technique is used to highlight the object of interest. NMS selects the best ROI proposal from several overlapping bounding boxes. However, in SSD, the base models may vary and can be shallow with a lightweight architecture. Figure 4b shows the SSD architecture, which classifies the features using a bounding box regressor and classifier. Chen et al. [34] have used ECANet-Mobilenet architecture as the base model for SSD and a novel feature contrast enhancement algorithm to detect defects in critical regions of the ceramic AM parts. The regions include curved surfaces with poor contrast between the defect and ceramic background.
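The suppression step can be sketched as the usual greedy procedure: sort candidate boxes by confidence, keep the best one, and discard any remaining box that overlaps a kept box beyond an IoU threshold. This is a minimal sketch; the 0.5 threshold, the box format `(x_min, y_min, x_max, y_max)`, and the example detections are assumptions, not values from the cited studies.

```python
def iou(a, b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    overlap_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    overlap_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = overlap_w * overlap_h
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1])
             - intersection)
    return intersection / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Return indices of the boxes kept by greedy NMS, best score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept = []
    for i in order:
        # Keep a box only if it does not heavily overlap a better one.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in kept):
            kept.append(i)
    return kept


# Two overlapping detections of one hypothetical pore plus a separate one.
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
kept = non_max_suppression(boxes, scores)
```

In practice NMS is applied per defect class, so that a spatter box does not suppress an overlapping pore box.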

SSDs can extract multi-scale features, leading to a quick output but at the cost of reduced accuracy. Thus, only a few articles have been reported on using SSD for AM defect detection since compromising accuracy may significantly impact quality, primarily when implemented in a closed-loop process. The bounding-box approach generally provides suboptimal defect maps, especially for powder-based AM processes such as LPBF and binder jetting. Pixel-wise methods such as semantic and instance segmentation provide near-accurate segmented regions at a pixel-level. The subsequent section discusses the segmentation-based DL methods and reported studies for AM defect detection.

2.3 Semantic segmentation

Semantic segmentation provides a near-accurate pixel map of a segmented region, giving an in-depth understanding of a defect. An ideal output from a binary-class segmented porosity model is a background containing a non-porous region and a foreground representing a porous region. This section discusses studies reported for three popular semantic segmentation algorithms for defect detection in AM: FCN, U-Net, and dilated convolution models.

2.3.1 Fully convolutional network-based defect detection

Fully convolutional networks (FCNs) are end-to-end convolutional architectures that provide a class label and its location in the image, retaining the spatial context of the defects. While fully connected layers [35] are incorporated in a traditional CNN, an FCN implements decoders that assist in learning the location of the relevant feature. Figure 5 shows the differences between traditional CNN [36] and FCN [37] architectures for two AM processes. In Fig. 5a (CNN), the fully connected layers (shown in purple) classify the LPBF process features into one of the output classes (good quality, gas porosity, crack, or lack of fusion). Still, the locations of these defects in each layer are unknown.

Fig. 5 Network architectures for convolutional neural network and fully convolutional network. In a, the CNN classifies the input image into one of the four labels for laser metal deposition [36], while in b, the encoder-decoder network segments the image into background and foregrounds for the plasma arc AM process [37]

In contrast, Fig. 5b (FCN) extends the classification results from the encoder to the decoder to determine the exact location of the pool and plasma arc for a plasma arc additive manufacturing (PAAM) process. The FCN model is used widely to segment melt-pool images, especially for plasma arc AM processes, because of its ability to segment critical melt-pool areas with higher accuracy [37, 38]. In the plasma-arc-based process, Zhang et al. [37] applied the FCN model with dilated spatial pyramid pooling (DSPP) to segment melt pools and morphologies. In DSPP, dilation (a digital image processing operation) enlarges the area covered by each kernel, so that features are captured at several scales without increasing the total parameter count. A DSPP-based CNN would be beneficial in AM defect detection because the size of different defects varies according to the process parameter selection. The grayscale images in the DSPP-FCN model [38] were obtained from a high-speed camera monitoring system. The authors have analyzed the relationship between segmented regions and process parameters such as current intensity and scanning speed. In another work, Zhu et al. [38] predicted the segmented regions of the melt pool in a PAAM process using FCN. The authors have implemented a video super-resolution algorithm using an AI-edge board to reconstruct images generated from a high-speed camera video, which are subsequently used for image segmentation.

Minnema et al. [39] segmented additively manufactured bone implants using four layers of deep CNN for medical diagnosis. Since FCNs require many training images, the authors have implemented bounding box-based CNN to classify the voxels from the DICOM data. However, bounding box implementations have two drawbacks: reduced speed due to the redundancy of overlapping boxes and localization accuracy variation with the patch size. A convolutional design-based encoder-decoder segmentation model called a “U-Net” [40] is used to address these limitations. This particular network architecture is gaining popularity in AM defect detection and biomedical applications such as cell/tissue anomaly detection. Unlike FCNs, where the deconvolution results in semantic loss, U-Net has multiple feature channels to yield a higher-resolution image and can predict the defect map close to the original image resolution.

2.3.2 U-Net for defect detection

A typical architecture utilized in AM for dense pixel-level defect segmentation is the U-Net architecture for 2D images and 3D volumetric images. As shown in Fig. 6, U-Net consists of an encoder and a symmetric decoder. The encoder encodes the pixel data and extracts the critical features through several layers of convolution-pooling while reducing the spatial dimensions (downsampling), resulting in a compact latent representation vector. Further, in the decoder, the critical features from the encoder are combined with expanding spatial dimensions that restore the original image dimensions and predict the final defect probability maps (upsampling) [41]. In summary, the U-Nets preserve input images’ context and, at the same time, highlight predicted semantic segmentation regions of interest. They are widely used in multiple AM studies to identify defects, including porosity [42,43,44,45], spatter detection [46], cracks [47], melt-pool [48], keyhole-induced bubbles [27], and print quality [49, 50]. Table 1 summarizes encoder-decoder-based variations in U-Net architecture reported for AM defect analysis. From the summary, it can be observed that U-Nets perform well in identifying in situ defects in metal AM. Figure 6 shows an example of defect probability maps for the melt pool regions segmented through U-Net [48].
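The downsampling and upsampling path can be made concrete by tracing tensor shapes through a U-Net-like network. The sketch below is bookkeeping only (no convolutions are computed) and rests on assumptions that are common but not stated in the cited works: padded convolutions that preserve spatial size, 2 × 2 pooling that halves it, channel counts that double per encoder level, and skip connections that require matching sizes. The function name and default values are hypothetical.

```python
def unet_shape_trace(height, width, base_channels=64, depth=4):
    """List (stage, height, width, channels) for a U-Net-like network."""
    if height % (2 ** depth) or width % (2 ** depth):
        raise ValueError("height and width must be divisible by 2**depth")
    trace = []
    skips = []
    h, w, ch = height, width, base_channels
    # Encoder: extract features, then halve the spatial dimensions.
    for level in range(1, depth + 1):
        trace.append((f"encoder{level}", h, w, ch))
        skips.append((h, w))
        h, w, ch = h // 2, w // 2, ch * 2
    trace.append(("bottleneck", h, w, ch))
    # Decoder: double the spatial dimensions and merge the matching skip.
    for level in range(depth, 0, -1):
        h, w, ch = h * 2, w * 2, ch // 2
        if (h, w) != skips.pop():
            raise RuntimeError("skip connection size mismatch")
        trace.append((f"decoder{level}", h, w, ch))
    return trace


# Hypothetical 256 x 256 layer image.
trace = unet_shape_trace(256, 256)
```

The last decoder stage returns to the input resolution, which is why the predicted defect probability map can be overlaid directly on the layer image.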

Fig. 6 U-Net implemented for semantic segmentation [48]. The background and defect probability maps give additional insights into the melt-pool zones

One of the popular works in AM segmentation is the hybrid U-Net architecture developed by Scime et al. [51]. In this work, the authors have implemented a dynamic segmentation convolutional neural network (DSCNN) in which U-Net and CNN work in parallel. The authors proposed a segmentation methodology for detecting defects and capturing variations for LPBF, electron beam PBF, and binder jetting processes. The authors have extended this network to rapidly identify defects using an unsupervised encoder to improve the data diversity pool and reduce manual data labeling efforts [52]. Some of the critical impacts of the presented research include the following:

- No need for image augmentation for training
- Knowledge transfer between different AM machines
- Real-time performance evaluation

Wong et al. [54] presented an AM defect segmentation approach using two-dimensional CNNs for layer-wise data and three-dimensional CNNs for volumetric data. The melt pool maps detected through U-Net are discussed in this work. Among the different variations of the U-Net architecture, the 2D U-Net showed the highest dice coefficient. Additional details on U-Net metrics, including dice coefficient, are included in the Appendix Table 6.
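For reference, the Dice coefficient between a predicted mask A and a ground-truth mask B is commonly defined as 2|A ∩ B| / (|A| + |B|), and it relates to IoU through Dice = 2·IoU / (1 + IoU). The sketch below uses this standard definition, which may differ in notation from Appendix Table 6; the function name and the example masks are hypothetical.

```python
def dice_coefficient(pred, truth):
    """Dice score of two binary masks given as equally sized 0/1 rows."""
    intersection = pred_area = truth_area = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            intersection += 1 if (p and t) else 0
            pred_area += 1 if p else 0
            truth_area += 1 if t else 0
    total = pred_area + truth_area
    # Two empty masks agree perfectly by convention.
    return 2 * intersection / total if total else 1.0


# Hypothetical predicted and ground-truth melt-pool masks.
predicted = [[1, 1, 0], [0, 1, 0]]
ground_truth = [[1, 0, 0], [0, 1, 1]]
score = dice_coefficient(predicted, ground_truth)
```

For these masks the IoU is 0.5, and the Dice score of 2/3 matches the conversion 2·0.5 / 1.5.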

As for other defect types, Zamiela et al. [55] proposed a deep image fusion approach that combines data from in situ thermal images and post-processed ex situ ultrasonic images to predict porosity. The authors have implemented two modified versions of U-Net: inception blocks for locating defects of varying sizes and Long Short-Term Memory (LSTM) recurrent networks to capture spatial–temporal relations that provide frame-to-frame distinctions. The prediction accuracy improved when using the inception blocks. The research reported by Zamiela et al. [55] is the first work in AM segmentation that considers both in situ and post-process results for porosity predictions.

2.3.3 Dilated convolution models for defect detection

Along with U-Net, dilated convolution models, particularly DeepLab v3 and spatial pyramid pooling, have been implemented for identifying extrusion defects [56], fatigue lifetime [57], and spatter-melt pool defects [58] for FDM, SLM, and DED processes, respectively. An advantage of dilated convolution models over U-Net is the atrous convolution, an alternative approach to the downsampling step of the encoder stage. Atrous convolution overcomes the pooling layer’s information loss through multi-scale input feature maps. In other words, U-Net input features are commonly extracted with a fixed kernel size, whereas in dilated convolutions, features can be combined from kernels with variable dilation rates. This property can be important for AM to extract defects of multiple sizes and shapes for accurate process recommendations. For example, Jin et al. [56] implemented a DeepLab v3 architecture for extrusion defects in an FDM process. The atrous convolutions can rescale the feature-rich map to the resolution of the original image due to multiple sampling processes. Figure 7 shows the segmented maps for “background,” “previous-layer,” “good quality,” “over-extrusion,” and “under-extrusion” classes [56] obtained through DeepLab v3. The authors have also implemented a modified YOLO network to detect bounding boxes for the same dataset, showing an excellent mean average precision. Pan et al. [57] have characterized small-target defects using DeepLab v3+ for synchrotron radiation computed tomography (SRCT) specimen images obtained for the SLM Ti-6Al-4V process to forecast the fatigue lifetime. In [58], the authors have segmented the spatter regions in DED using atrous convolutions and correlated the number of spatters and the melt pool area to laser scanning speed and power.
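A one-dimensional sketch shows how dilation widens the span covered by a kernel without adding weights: a kernel of size k with dilation rate r covers k + (k − 1)(r − 1) input samples. The code is a simplified illustration rather than the DeepLab implementation; the function names, the difference kernel, and the ramp signal are assumptions.

```python
def effective_kernel_size(kernel_size, dilation):
    """Input span covered by a dilated kernel: k + (k - 1)(r - 1)."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def dilated_conv1d(signal, kernel, dilation=1):
    """1D atrous convolution (stride 1, no padding, no kernel flip)."""
    span = effective_kernel_size(len(kernel), dilation)
    return [sum(kernel[k] * signal[i + k * dilation]
                for k in range(len(kernel)))
            for i in range(len(signal) - span + 1)]


# The same three-weight kernel applied at two dilation rates.
signal = [1, 2, 3, 4, 5, 6, 7]
kernel = [1, 0, -1]
dense = dilated_conv1d(signal, kernel, dilation=1)    # spans 3 samples
atrous = dilated_conv1d(signal, kernel, dilation=2)   # spans 5 samples
```

Running several dilation rates in parallel over the same feature map and combining the results is the idea behind atrous spatial pyramid pooling, which suits defects whose size varies with the process parameters.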

Fig. 7 Segmented regions for an FDM process obtained using DeepLab v3 [56]

Although semantic segmentation approaches help segment the defects, such as porosity and spatters, there could be inaccuracies in quantifying the total number of defects in each layer in case of overlapping defects. Instance segmentation can overcome these limitations by separating each segmented region as a separate instance of defect.

2.4 Instance segmentation: mask R-CNN

In semantic segmentation, the objects of the same class are classified under a unique label. However, in instance segmentation, objects of the same class can have different labels. The latter provides a near-accurate segmentation mapping but involves a detailed object labeling phase. In this section, we focus on AM studies utilizing mask R-CNN—one of the popular instance segmentation techniques.

Mask R-CNN is an extension of faster R-CNN discussed in Sect. 2.1. Each overlapped bounding box output detected using mask R-CNN is treated as a separate instance of segmentation. This network can segment occluded objects effectively. Klippstein et al. [59] have developed an online monitoring system for LPBF to track the powder spread in each layer through mask R-CNN and trigger a “severity alarm” if the defects exceed a certain threshold. Figure 8a shows another work where the mask R-CNN architecture is employed for defect detection [60]. In this figure, the backbone CNN-based architecture extracts multi-scale features to generate several possible region proposals of the defect. These ROIs are mapped back through the decoder to gradually restore the original image through the “alignment” layer. As shown in Fig. 8b, the mask R-CNN segments several powder-based defects, such as uneven powder, uncovered powder, and scratches.

Fig. 8 a Mask R-CNN architecture for predicting powder spreading defects for magnetic materials in AM. b Corresponding powder defect results and their comparison with the ground truth [60]

Variations of mask R-CNN architectures have been reported for identifying different AM defects, including powder-based defects [61, 62], melt-pool segmentation [63], pore detection [64], warpages [65], and detection of powdered AM parts from the post-process build tray [66]. Table 2 summarizes studies that predict different types of AM defects using mask R-CNN. Being a two-stage network, mask R-CNN can be slower for predictions. In addition, several models are initially pre-trained using public datasets such as COCO [67] to ensure they can localize common objects before being trained on the defects dataset [68]. This reuse of pre-trained models is known as transfer learning, in which trained weights are transferred to another model.

Depending on the scale of training segmentation algorithms in AM, the predominant challenge in applying such models for a real-time closed-loop feedback system is the latency in detection and the subsequent action policy to either stop the build process or compensate for the detected errors. The next section reports advancements in extending these DL techniques for closed-loop feedback in AM.

3 Extension of DL for closed-loop feedback in AM

While previously presented DL techniques are equipped to detect defects in AM, their extension to quality forecasting and predictive analytics can be used to tailor the process parameters to improve the overall build quality. This allows the users to achieve a zero-defect additive manufacturing practice [69] and circumvent the currently available passive feedback and non-adaptive in-process control [70]. This section explores the reported work in DL classification and segmentation with a provision for closed-loop feedback in AM. Some of the commonly reported DL-based feedback processes in AM include adjusting voltage/current levels of the laser [71, 72], adapting the process variables [73,74,75], and/or compensating for geometrical errors [76].

3.1 Closed-loop feedback using non-DL approaches in AM

Non-computer vision-based traditional approaches, such as the research by Yao et al. [77], show how a Markov decision process-based model can be designed to develop an optimal control policy. This mathematical model characterizes layer-wise irregular and non-homogenous patterns in AM and solves the defect-state signals for subsequent build layers. In the case of computer vision-based in situ feedback algorithms that utilize a non-DL approach, some reported studies include the following:

- A diagnosis for under-fill or over-fill using Proportional-Integral-Derivative (PID) to automatically correct the process parameters, such as turning the cooling fan ON or OFF [78]
- An intelligent adaptive control framework based on the probabilities of out-of-control processes in a simulated AM extrusion process [79]
- Laser power feedback for the next layer based on thermal history and process features for LPBF [80]
- The feedback system for the electron beam melting process using an in situ infrared (IR) thermography [81]
- An open-source Additive Manufacturing Autonomous Research System (AM ARES) for automated image analysis through machine learning and closed-loop feedback for the material extrusion process. This work [82] implements a cloud-based feedback cycle to correct prime delay, print speed, and x and y-offset correction through Bayesian optimization techniques
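As a rough illustration of the PID-based correction mentioned in the first item, the sketch below drives a measured fill ratio toward a target by adjusting a flow multiplier once per layer. It is not the controller of [78]: the linear toy plant (`fill_ratio = gain * flow`), the gains, the disabled derivative term, and every name are assumptions chosen so the example is easy to follow.

```python
class PIDController:
    """Textbook discrete PID controller acting on a scalar measurement."""

    def __init__(self, kp, ki, kd, setpoint):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.setpoint = setpoint
        self.integral = 0.0
        self.previous_error = None

    def update(self, measurement, dt=1.0):
        error = self.setpoint - measurement
        self.integral += error * dt
        if self.previous_error is None:
            derivative = 0.0  # no history on the first call
        else:
            derivative = (error - self.previous_error) / dt
        self.previous_error = error
        return (self.kp * error
                + self.ki * self.integral
                + self.kd * derivative)


def simulate_fill_control(layers=30, gain=0.8):
    """Toy loop: a vision system reports the fill ratio of each layer."""
    controller = PIDController(kp=0.5, ki=0.5, kd=0.0, setpoint=1.0)
    flow = 1.0  # nominal flow multiplier
    history = []
    for _ in range(layers):
        fill_ratio = gain * flow  # hypothetical under-filling process
        history.append(fill_ratio)
        # Correct the nominal flow by the controller output for the next layer.
        flow = 1.0 + controller.update(fill_ratio)
    return history


history = simulate_fill_control()
```

The first layer is under-filled (0.8), and the integral term removes the steady-state offset over subsequent layers. A real process would need gains tuned to its dynamics and limits on the actuator range.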

These methods can be adapted and implemented through DL, a subset of machine learning, for further improvements in accuracy. Figure 9 shows a concept for implementing multiple levels of feedback using machine learning in AM. For example, melt-pool-level feedback can assist in altering the laser parameters, while layer-level feedback can assist in adjusting the recoating parameters [83]. The closed-loop mechanism is achieved by providing process signature inputs to a machine learning model that is trained offline. This model is later deployed online to provide corrective actions. Although several studies have been reported for ML-based feedback in AM, we focus on techniques where the results from DL image analysis (classification and segmentation) are employed for closed-loop feedback.

Fig. 9 Machine learning-based feedback architecture in AM; this can extend to the DL segmentation framework [83]

3.2 Closed-loop feedback using “classification-only” DL in AM

A closed-loop feedback network employed in a complex, rapid manufacturing setting such as AM requires near-instantaneous decision-making to mitigate defects. A classification framework is suited to this task: if a particular set of parameters yields defects within a specific class based on their size, shape, and location, preventative measures can be applied immediately to rectify those defects. Additionally, classification-only DL methods (equivalent to the traditional CNN discussed in Sect. 2.3.1) have become significantly more robust than earlier networks based on shallow multi-layer perceptrons [84]. For these reasons, a classification-only DL model is a natural choice for a closed-loop feedback system in AM. This section discusses studies performed on closed-loop feedback networks using these types of DL networks.

Yang et al. [85] trained a CNN on LPBF melt pool images acquired with an island serpentine scan strategy to classify regions based on melt pool size. The authors proposed its potential application in closed-loop feedback and feedforward control to adjust the instantaneous laser power and maintain a consistent melt pool. As for other AM modalities, closed-loop feedback strategies are reported mainly for fused filament fabrication machines, primarily due to the ease of machine access, deployment, and cost-effectiveness. Table 3 lists the literature summary reported for CNN-classification-based closed-loop feedback in AM. Many studies in this table share a common goal: to detect layer-wise defects with DL and pause the print if the layer deviates from the nominal print strategy [86,87,88]. Although pausing and restarting is effective in specific scenarios, providing real-time feedback during the process can reduce and correct errors more accurately. In such cases, DL segmentation can resolve the location, size, and shape of each defect and thereby support real-time defect suppression. The following section discusses reported studies in which DL segmentation is implemented in the feedback loop.
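The detect-and-pause strategy shared by many of the classification-based studies can be sketched as a simple decision rule over per-layer classifier outputs. This is an illustrative sketch only: the class names, the confidence threshold, and the `patience` parameter (number of consecutive defective layers tolerated) are assumptions and are not taken from any cited study.

```python
from typing import Dict, Iterable, Sequence


def pause_layer(layer_probs: Iterable[Dict[str, float]],
                defect_classes: Sequence[str] = ("under_extrusion", "warping"),
                threshold: float = 0.8,
                patience: int = 3) -> int:
    """Return the index of the layer at which to pause, or -1 if never."""
    streak = 0
    for index, probs in enumerate(layer_probs):
        label = max(probs, key=probs.get)  # most likely class for this layer
        if label in defect_classes and probs[label] >= threshold:
            streak += 1                    # another confident defect in a row
        else:
            streak = 0                     # a clean layer resets the count
        if streak >= patience:
            return index
    return -1
```

Requiring several consecutive defective layers before pausing is one way to avoid stopping the print on a single misclassified image; the trade-off is a delay of a few layers before the machine reacts.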

3.3 Closed-loop feedback using segmentation DL in AM

Studies performed for some traditional manufacturing applications [89] utilize the results from DL-segmentation algorithms to develop a forecasting model and change the process parameters intelligently. These models can predict defects over time by accurately tracking their region of interest using digital image pre-processing approaches. Similar strategies can extend to AM applications.

For example, Lu et al. [25] implemented closed-loop feedback for correcting process parameters, including layer thickness and filament feed rate, in a robot-based AM process. In their work, misalignment and abrasion defects are detected using DL segmentation algorithms, and the printing strategy is updated intelligently and in real time with a new combination of process variables. In another study, Cho et al. [90] proposed a three-phase research framework to identify and rectify Wire Arc Additive Manufacturing (WAAM) process anomalies, using a MobileNet CNN model with computer vision to classify the process as normal or abnormal. The model knowledge and data are then fed to an inference engine to obtain optimal process parameters through a closed-loop feedback system, as shown in Fig. 10. Table 4 lists the literature summary for DL-segmentation-based closed-loop feedback in AM.

Fig. 10 Closed-loop feedback control and generative process planning monitoring through DL-based anomaly detection in Wire Arc Additive Manufacturing (WAAM) proposed by Cho et al. [90]

As detailed in Table 4, several studies have reported implementing patch-wise defect segmentation CNN models (YOLO, SSD, and Faster R-CNN) for misalignment correction [25] and extrusion defect correction [75], and a semantic segmentation model for melt-pool width control [72].
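As an illustration of how a segmentation result can drive a process variable, the sketch below measures the melt-pool width from a binary mask and applies a clamped proportional update to the laser power. It is a hypothetical example and not the controller of [72]: the pixel size, the gain, and the power limits are all assumed values.

```python
from typing import Sequence, Tuple


def melt_pool_width(mask: Sequence[Sequence[int]], pixel_size_um: float) -> float:
    """Widest horizontal extent of foreground pixels, in micrometres."""
    widest = 0
    for row in mask:
        columns = [j for j, value in enumerate(row) if value]
        if columns:
            widest = max(widest, columns[-1] - columns[0] + 1)
    return widest * pixel_size_um


def next_laser_power(power_w: float, width_um: float, target_um: float,
                     gain_w_per_um: float = 0.5,
                     limits: Tuple[float, float] = (100.0, 400.0)) -> float:
    """Proportional correction, clamped to an assumed safe power range."""
    proposed = power_w + gain_w_per_um * (target_um - width_um)
    return min(max(proposed, limits[0]), limits[1])


# Example: a 4-pixel-wide pool at 10 um per pixel against a 30 um target.
mask = [[0, 1, 1, 0],
        [1, 1, 1, 1],
        [0, 0, 1, 0]]
width = melt_pool_width(mask, pixel_size_um=10.0)
power = next_laser_power(200.0, width, target_um=30.0)
```

Here the pool is wider than the target, so the update lowers the laser power. The clamp keeps a noisy mask from commanding a power outside the range the machine can deliver.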

3.4 Implementation challenges of closed-loop feedback in AM

CNNs, FCNs, U-Nets, and other DL models aid in detecting a defect or, in some cases, predicting the existence of defects based on the training data. However, there is limited literature that focuses on a closed-loop feedback approach to modify the input build parameters. Traditional prediction models are fast, but their computational speed still cannot match the laser travel speed or the rate of heat dissipation around the melt pool. This disparity makes it challenging to change the parameters in real time such that defects are either prevented or compensated.

Furthermore, most of the reviewed research focuses on simple, idealized geometries. The effects of combining different geometric features in one or more slice layers on the build quality are not well researched, owing to the cost and complexity of such experimentation. As a result, a model trained or constructed using the present DL models may prove insufficient when applied to real-life parts with complex geometry. Finally, a feedback loop needs to update the models in real time based on their predictions and potential issues from the previous iteration. This cyclical feedback loop and online learning are computationally expensive and thus pose a significant implementation challenge. The final section discusses potential opportunities to address these challenges.

4 Discussion and future scope

While the applications of DL for defect detection in AM are increasing, several opportunities exist in implementing and adapting variations of object detection, localization, and segmentation DL architectures. The sources for these proposed opportunities are of various levels of sophistication, ranging from graph-based methods with DL to an AM-friendly closed-loop intelligent PLM framework. The latter involves a feedback loop to provide real-time information to the prediction models that users can leverage to make process adjustments for optimum part quality. This section reports ongoing advancements in segmentation, closed-loop feedback, and possible areas for improving overall accuracy.

4.1 Advanced DL models for defect detection in AM

Several advances and variations in segmentation algorithms beyond FCN and U-Net are being reported for improved results. For example, the Image Cascade Network (ICN) [91] shows a good trade-off between speed and accuracy for segmenting real-time images. UNet++ [92] is another advanced architecture that performs a fusion of semantically similar feature maps. Compared to the traditional U-Net, where dissimilar feature maps correlate through skip connections, UNet++ utilizes a standalone CNN to train the skip pathways, eventually improving the feature transfer process from the encoder to the decoder. UNet++ can handle a large volume of data in a reasonable time. Another advancement is the design of segmentation networks that combine classification and segmentation. Tan et al. [93] developed a novel DL-based segmentation method that combines CNN-based block selection for classification with a Thresholding Neural Network (TNN) for segmenting binarized maps for spatter extraction. They aimed to capture and extract the spatter signatures connected to the molten pool of an LPBF process, yielding better accuracy than U-Nets.

Additionally, anchor-free models, such as CenterNet [94], are being developed for AM to extend the ‘one-stage patch-wise’ segmentation networks discussed in Sect. 2.2. The authors in [94] propose a CenterNet architecture that simultaneously classifies defects based on their type, location, and count. Compared to the multiple bounding boxes predicted in YOLO [95] or SSD [34], CenterNet trains with a single reference bounding box and is computationally less expensive. Other segmentation models, such as the Automated Computed Tomography Segmenter (ACTS) [96], modified DeepLabV3+ [97], Class Activation Map Guided U-Net [98], and segmentation with transfer learning [68], have provided improved accuracy and can be adapted for AM defect detection.
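One common way anchor-free, center-based detectors turn network output into detections is to read object centers off a predicted heatmap as local peaks, instead of scoring many anchor boxes. The sketch below shows that decoding step on a single-class heatmap. It is a simplified illustration under that assumption and does not reproduce the architecture of [94]; the threshold and the example heatmap are invented for the demonstration.

```python
from typing import List, Sequence, Tuple


def heatmap_peaks(heatmap: Sequence[Sequence[float]],
                  threshold: float = 0.5) -> List[Tuple[int, int, float]]:
    """Return (row, col, score) for cells that exceed all 8 neighbours."""
    rows, cols = len(heatmap), len(heatmap[0])
    peaks = []
    for r in range(rows):
        for c in range(cols):
            score = heatmap[r][c]
            if score < threshold:
                continue
            neighbours = [heatmap[rr][cc]
                          for rr in range(max(0, r - 1), min(rows, r + 2))
                          for cc in range(max(0, c - 1), min(cols, c + 2))
                          if (rr, cc) != (r, c)]
            if all(score > n for n in neighbours):
                peaks.append((r, c, score))
    return peaks


# Hypothetical 4x4 center heatmap containing two defect centers.
demo = [[0.1, 0.2, 0.1, 0.0],
        [0.2, 0.9, 0.2, 0.0],
        [0.1, 0.2, 0.1, 0.6],
        [0.0, 0.0, 0.3, 0.2]]
centers = heatmap_peaks(demo)
```

Each peak yields one detection, so the number of peaks directly gives the defect count, and a per-class heatmap would give the defect type.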

4.2 Vision transformers in additive manufacturing

While traditional CNNs provide both bounding-box and pixel-wise segmented regions of interest, vision transformers (ViT), which build on the transformer architecture popular in natural language processing, have recently received attention for image processing tasks [99]. Vision transformers differ from CNN architectures in the way they process input images for training. Unlike a CNN, which extracts features with a small moving window, a ViT splits each image into fixed-size patches. These patches are then passed through a transformer encoder (built around a multi-head attention mechanism) to perform classification. For AM applications, recent ViT studies include the following:

- Multimodal sensor and image data to classify FDM print quality (rough, normal printing, overfill, regular pattern, underfill, and warping). For image analysis, the authors implemented a regular multi-head attention vision transformer in combination with a novel shifted-window feature extraction technique [100]. Multiple heads can process multiple image features in parallel
- A super frame feature pyramid transformer (SFFPT) that learns spatiotemporal relations from high-speed camera videos of metal 3D printing (M3DP) to classify plumes, spatter, and melt pools [101]
- Incorporating the Swin Transformer [102] within YOLOv7 to classify defects (curve, warped, and break) in industrial CT scans of 3D-printed lattice structures [103]
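The patch-splitting step that distinguishes a ViT from a CNN can be illustrated in a few lines. The sketch below cuts a single-channel image into non-overlapping fixed-size patches and flattens each one into a token vector, which is the form a transformer encoder would then embed and attend over. The function name and the toy 4x4 image are assumptions for illustration; the encoder itself is omitted.

```python
from typing import List, Sequence


def split_into_patches(image: Sequence[Sequence[float]],
                       patch: int) -> List[List[float]]:
    """Split an H x W image into flattened, non-overlapping patch tokens."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image size must be a multiple of the patch size")
    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            tokens.append([image[r][c]
                           for r in range(top, top + patch)
                           for c in range(left, left + patch)])
    return tokens


# A toy 4x4 "layer image" with pixel values 0..15, split into 2x2 patches.
image = [[4 * r + c for c in range(4)] for r in range(4)]
tokens = split_into_patches(image, patch=2)
```

A 4x4 image with 2x2 patches produces four tokens of four values each. Because every token can attend to every other token, relationships between distant regions of a layer image are available from the first encoder block.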

In general, ViTs have the potential to capture complex global relationships among defects in an image; however, they require a significant amount of training data to outperform CNNs, which may be a bottleneck to their implementation. One approach to improve training data diversity is to use a Generative Adversarial Network (GAN) to generate synthetic images.

4.3 Training data diversity to improve DL performance

One of the most common bottlenecks for DL applications in AM is the lack of sufficient training data, compounded by limited open-source datasets and the prohibitive time and cost associated with data collection. Generative Adversarial Networks (GANs) [104] are popular for creating synthetic data. In cases where the training data is too complex for GANs to generate, a pre-processing step of adding noise to the limited training data can be employed to induce data diversity. As shown in Fig. 11, a GAN architecture consists of a generator and a discriminator. The generator produces “fake data” from random inputs, and the discriminator penalizes the “fake” generated data against the ground truth obtained from the training/test data. The core idea behind a GAN is to train the two networks against each other until the generator produces images (or data points) that resemble the ground truth closely enough to fool the discriminator. Cannizzaro et al. [46] proposed an AM defect segmentation algorithm where a Concurrent-Single-Image-GAN (ConSinGAN) [104] was used to generate synthetic training data (images of defects). Liu et al. [105] studied melt pool detection using GANs for directed energy deposition. They performed segmentation on the thermal images using an Image Enhancement Generative Adversarial Network (IEGAN).

Fig. 11 Image Enhancement Generative Adversarial Network (IEGAN) architecture [105]

Their focus was to enhance the contrast ratio of the training dataset, which eventually helped in the defect segmentation process. Petrik et al. [106] developed MeltPoolGAN to generate melt-pool images and obtain optimized laser power, scan speed, and scan direction. Training was performed using the open-source metal AM NIST dataset [107] and in-house ETH data. The XCT scan images in the open-source dataset were obtained on cylindrical samples printed with cobalt-chromium alloys using the LPBF AM process.
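The adversarial interplay between generator and discriminator can be made concrete by writing out the standard binary cross-entropy objectives. The sketch below is a generic illustration, not the loss of ConSinGAN, IEGAN, or MeltPoolGAN, each of which adds its own terms. The inputs are the discriminator's probabilities that a sample is real, and the generator term uses the widely used non-saturating form.

```python
import math
from typing import Sequence


def bce(pred: float, target: float) -> float:
    """Binary cross-entropy for one probability; eps avoids log(0)."""
    eps = 1e-12
    return -(target * math.log(pred + eps)
             + (1.0 - target) * math.log(1.0 - pred + eps))


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Penalize calling real images fake and fake images real."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: Sequence[float]) -> float:
    """Non-saturating form: reward fakes that the discriminator rates as real."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)
```

When the discriminator outputs 0.5 for every sample it can no longer tell real from synthetic images, and its loss settles at 2 ln 2. That is the equilibrium the training procedure aims for.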

However, this approach is not directly suited to an AM feedback-loop model that predicts and changes the process parameters in real time. Since correlating the synthetic data points to an input parameter set is difficult, a method is needed to map the input parameters to the GAN-generated data. One approach would be to obtain discrete data points that produce real images and perform curve fitting to get a continuous parameterization between the process parameters and the images. However, this requires a significant number of discrete points, and finding the mapping can become a neural network-based problem in itself.

Other synthetic data generation techniques, such as mosaic augmentation [33], which recombines multiple images into a new image, have been investigated for training segmentation models with limited datasets. Additionally, few-shot learning (FSL) methods, such as prototypical networks [108], are also receiving attention for training with sparsely available data. FSL is based on the concept that the network is trained on only a few standard images and learns enough feature points to classify an unseen image. However, since this approach was developed recently, only a few AM defect detection applications using FSL are available in published literature. The most relevant research article in this area is the application of one-shot learning to fault diagnosis in 3D printers [109]. In this work, a one-shot learning network is trained on the signal collected from the 3D printer. Before training this network, a feature space comprising unique labels for healthy and faulty machine conditions is created. Subsequently, a bidirectional GAN maps the input signal to the feature space. This approach is reported to outperform other fault diagnostic systems in this domain. In another study, Wang [110] performed semantic segmentation using cLass-aware Semantic Contrast and Attention amalgamation (LSCA) to detect defects in unbalanced data. In this work, the author used an open-source FDM defect dataset [111] for in situ stratified defect detection (empty, under, normal, and over). Unlike common supervised CNN models, contrastive learning is a self-supervised approach that learns rich semantics and improves feature representation in imbalanced labeled training data.
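The classification rule behind prototypical networks is simple enough to sketch directly: each class is represented by the mean of its few labeled support embeddings, and a query is assigned to the nearest prototype. The example below assumes the embeddings have already been produced by some trained encoder; the two-dimensional vectors and the class names are invented for illustration.

```python
from typing import Dict, List, Sequence


def class_prototypes(support: Dict[str, List[Sequence[float]]]) -> Dict[str, List[float]]:
    """Average the support embeddings of each class into one prototype."""
    prototypes = {}
    for label, vectors in support.items():
        dim = len(vectors[0])
        prototypes[label] = [sum(v[d] for v in vectors) / len(vectors)
                             for d in range(dim)]
    return prototypes


def classify(query: Sequence[float], prototypes: Dict[str, List[float]]) -> str:
    """Assign the query to the class with the closest prototype."""
    def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(prototypes, key=lambda label: squared_distance(query, prototypes[label]))


# Two support embeddings per class (hypothetical values).
support = {
    "normal": [[0.0, 0.0], [0.2, 0.0]],
    "underfill": [[1.0, 1.0], [1.0, 0.8]],
}
protos = class_prototypes(support)
label = classify([0.9, 1.0], protos)
```

Adding a new defect class only requires a handful of labeled examples to compute one more prototype, which is what makes the approach attractive when AM defect images are scarce.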

Further, on the hardware side, several studies have explored ways to reduce the computational processing time, including parallel processing on AI edge computing boards with graphics processing units (GPUs) [38] and real-time embedded systems based on Field Programmable Gate Arrays (FPGAs). FPGAs integrate well with an embedded AM machine and offer scalability for training neural networks. For example, the authors in [112] developed an FPGA-based binarized neural network to classify defects in metal AM in real time.
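Binarized neural networks map well to FPGAs because constraining weights and activations to +1 or -1 turns each dot product into an XNOR followed by a bit count. The sketch below illustrates that equivalence in plain Python. It is a generic illustration and not the network of [112]; the function names and the bit-packing convention (+1 stored as bit 1, most significant bit first) are assumptions.

```python
from typing import List, Sequence


def binarize(values: Sequence[float]) -> List[int]:
    """Map each value to +1 (non-negative) or -1 (negative)."""
    return [1 if v >= 0 else -1 for v in values]


def binary_dense(inputs: Sequence[float],
                 weights: Sequence[Sequence[float]]) -> List[int]:
    """Dense layer with binarized inputs and weights (pre-activation sums)."""
    x = binarize(inputs)
    return [sum(a * b for a, b in zip(x, binarize(row))) for row in weights]


def xnor_popcount_dot(x_bits: int, w_bits: int, n: int) -> int:
    """Same dot product on packed bits: 2 * (matching bits) - n."""
    mask = (1 << n) - 1
    matches = bin(~(x_bits ^ w_bits) & mask).count("1")
    return 2 * matches - n


# [0.3, -1.2, 0.0, 2.0] binarizes to [+1, -1, +1, +1], packed as 0b1011.
outputs = binary_dense([0.3, -1.2, 0.0, 2.0],
                       [[0.5, 0.5, -0.1, 0.2], [-1.0, -1.0, -1.0, -1.0]])
packed = xnor_popcount_dot(0b1011, 0b1101, 4)
```

The arithmetic and bitwise versions give the same result for the first weight row. On an FPGA the bitwise form needs no multipliers, which is the source of the speed and power advantage.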

4.4 Predictive feedback for process parameter optimization and quality control

Combining defect detection results from semantic segmentation with probabilistic techniques such as Bayesian networks [113,114,115], ontology-based knowledge graphs [116], fuzzy clustering, or stochastic models can improve the accuracy of the final predictions. Hybrid DL models inspired by U-Net and VGG16 have combined CNNs and traditional neural networks to predict part printability in the LPBF process [23]. The authors of this work highlighted the importance of not only microstructural defects but also machine-level defects, such as recoater arm errors in metal AM or nozzle defects in extrusion-based AM, which also impact the overall part quality. An overall summary of the deep learning models, their advantages and disadvantages, suitable AM processes, complexity level, and cost of industrial implementation is presented in Table 5.
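As a minimal illustration of pairing segmentation output with a probabilistic model, the sketch below applies Bayes' rule layer by layer to update the belief that a build is defective, given whether the segmentation network flagged each layer. This is a single-variable simplification rather than a full Bayesian network as in [113,114,115]. The detector's true-positive and false-positive rates are assumed values, and the layers are treated as independent observations.

```python
from typing import Iterable


def bayes_update(prior: float, detected: bool,
                 tpr: float = 0.9, fpr: float = 0.1) -> float:
    """Posterior P(defective | observation) for one layer's detection flag."""
    if detected:
        like_defective, like_ok = tpr, fpr
    else:
        like_defective, like_ok = 1.0 - tpr, 1.0 - fpr
    evidence = like_defective * prior + like_ok * (1.0 - prior)
    return like_defective * prior / evidence


def belief_after_layers(prior: float, detections: Iterable[bool]) -> float:
    """Fold a sequence of per-layer flags into one running belief."""
    belief = prior
    for flag in detections:
        belief = bayes_update(belief, flag)
    return belief
```

Starting from an even prior, one flagged layer raises the belief to 0.9 and a second consecutive flag raises it further, whereas a single unflagged layer lowers it to 0.1. A feedback controller could act only once the belief crosses a chosen threshold, rather than on any single detection.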

5 Conclusion

Defect detection in AM is correlated to multiple factors, including part geometry, materials interaction, in situ physics, and process parameter optimization. In such cases, process monitoring becomes vital for real-time defect inspection. Deep learning can assess these complex associations and provide near-accurate predictions to minimize these defects. Several reported computer vision-based DL methods, such as YOLO, Faster R-CNN, U-Net, and dilated convolution, can localize and segment defects precisely. In particular, semantic segmentation methods such as U-Net are implemented widely to identify layer-wise porosity defects for the metal AM process. However, fine-tuning and extending this architecture to recognize multiple AM defects, such as spatter and keyhole defects, will improve generalization. Further, localization methods such as YOLO are commonly deployed in Wire Arc and Plasma Arc Additive Manufacturing to detect small-sized defects where semantic segmentation exhibits certain limitations.

Several studies have presented extensions of DL to update the process parameters or recommend an array of actions in real time. While numerous reported research studies suggest pausing the process in case of any errors, particularly for plastic-based 3D printing processes, deploying them for laser-based processes may present specific challenges due to their inherent process speed and complex physical phenomena. In such cases, integrating the system’s surrogate models or physics domain knowledge in the segmentation architectures would aid in associating image data with input process parameters. Surrogate modeling is a popular technique for achieving a reduced-order, computationally lightweight, and physics-accurate representation of high-fidelity calculations. While these techniques rely heavily on data, DL algorithms such as few-shot learning (FSL) and hybrid DL segmentation models are also emerging to predict features from limited datasets.

In addition to segmentation models such as FCN, U-Net, dilated convolution and pyramid networks, we report several advanced architectures developed for AM defect detection and address methods to improve training data diversity. Methods such as GANs, DL-based vision transformers, and diffusion-based models are receiving wide attention for image classification, synthetic data generation, and defect detection in AM.

References

King WE, Anderson AT, Ferencz RM et al (2015) Laser powder bed fusion additive manufacturing of metals; physics, computational, and materials challenges. Appl Phys Rev 2:041304. https://doi.org/10.1063/1.4937809

Gibson I, Rosen D, Stucker B (2015) Additive manufacturing technologies: 3D printing, rapid prototyping, and direct digital manufacturing, 2nd edn. Springer, New York, pp 1–498. https://doi.org/10.1007/978-1-4939-2113-3

Ziaee M, Crane NB (2019) Binder jetting: a review of process, materials, and methods. Addit Manuf 28:781–801. https://doi.org/10.1016/J.ADDMA.2019.05.031

Fu Y, Downey A, Yuan L et al (2021) In situ monitoring for fused filament fabrication process: A review. Addit Manuf 38:101749. https://doi.org/10.1016/J.ADDMA.2020.101749

Bartlett JL, Li X (2019) An overview of residual stresses in metal powder bed fusion. Addit Manuf 27:131–149. https://doi.org/10.1016/J.ADDMA.2019.02.020

Ye C, Zhang C, Zhao J, Dong Y (2021) Effects of post-processing on the surface finish, porosity, residual stresses, and fatigue performance of additive manufactured metals: a review. J Mater Eng Perform 30:6407–6425. https://doi.org/10.1007/S11665-021-06021-7

Ngo TD, Kashani A, Imbalzano G et al (2018) Additive manufacturing (3D printing): a review of materials, methods, applications and challenges. Compos Part B Eng 143:172–196. https://doi.org/10.1016/J.COMPOSITESB.2018.02.012

Teng C, Gong H, Szabo A et al (2017) Simulating melt pool shape and lack of fusion porosity for selective laser melting of cobalt chromium components. J Manuf Sci Eng Trans ASME 139. https://doi.org/10.1115/1.4034137

Boutaous M, Liu X, Siginer DA, Xin S (2021) Balling phenomenon in metallic laser based 3D printing process. Int J Therm Sci 167:107011. https://doi.org/10.1016/J.IJTHERMALSCI.2021.107011

Wang L, Zhang Y, Chia HY, Yan W (2022) Mechanism of keyhole pore formation in metal additive manufacturing. npj Comput Mater 8:1–11. https://doi.org/10.1038/s41524-022-00699-6

Huang Y, Fleming TG, Clark SJ et al (2022) Keyhole fluctuation and pore formation mechanisms during laser powder bed fusion additive manufacturing. Nat Commun 13:1–11. https://doi.org/10.1038/s41467-022-28694-x

Vavilov VP, Pawar SS (2015) A novel approach for one-sided thermal nondestructive testing of composites by using infrared thermography. Polym Test 44:224–233. https://doi.org/10.1016/J.POLYMERTESTING.2015.04.013

Jaber A, SattarpanahKarganroudi S, Meiabadi MS et al (2022) On smart geometric non-destructive evaluation: inspection methods, overview, and challenges. Mater 15:7187. https://doi.org/10.3390/MA15207187

Waller JM, Saulsberry RL, Parker BH et al (2015) Summary of NDE of additive manufacturing efforts in NASA. AIP Conf Proc 1650:51–62. https://doi.org/10.1063/1.4914594

Grasso M, Demir AG, Previtali B, Colosimo BM (2018) In situ monitoring of selective laser melting of zinc powder via infrared imaging of the process plume. Robot Comput Integr Manuf 49:229–239. https://doi.org/10.1016/J.RCIM.2017.07.001

Lifton J, Liu T (2021) An adaptive thresholding algorithm for porosity measurement of additively manufactured metal test samples via X-ray computed tomography. Addit Manuf 39:101899. https://doi.org/10.1016/J.ADDMA.2021.101899

Minaee S, Boykov Y, Porikli F et al (2022) Image segmentation using deep learning: a survey. IEEE Trans Pattern Anal Mach Intell 44:3523–3542. https://doi.org/10.1109/TPAMI.2021.3059968

Xu X, Xu S, Jin L, Song E (2011) Characteristic analysis of Otsu threshold and its applications. Pattern Recognit Lett 32:956–961. https://doi.org/10.1016/J.PATREC.2011.01.021

Hojjatoleslami SA, Kittler J (1998) Region growing: a new approach. IEEE Trans Image Process 7:1079–1084. https://doi.org/10.1109/83.701170

Chen Y, Peng X, Kong L et al (2021) Defect inspection technologies for additive manufacturing. Int J Extrem Manuf 3:022002. https://doi.org/10.1088/2631-7990/ABE0D0

Krizhevsky A, Sutskever I, Hinton GE (2017) ImageNet classification with deep convolutional neural networks. Commun ACM 60:84–90. https://doi.org/10.1145/3065386

Shang Y, Xiao C, Pan K, Xue L (2022) Research on defect detection algorithm of additive manufacturing powder spreading based on improved Faster R CNN. International Conference on Advanced Manufacturing Technology and Manufacturing Systems (ICAMTMS 2022). Shijiazhuang, China, 12309:586–591. https://doi.org/10.1117/12.2645471

Scime L, Beuth J (2018) A multi-scale convolutional neural network for autonomous anomaly detection and classification in a laser powder bed fusion additive manufacturing process. Addit Manuf 24:273–286. https://doi.org/10.1016/J.ADDMA.2018.09.034

Lemos CB, Farias PCMA, Filho EFS, Conceicao AGS (2019) Convolutional neural network based object detection for additive manufacturing. In 2019 19th International Conference on Advanced Robotics (ICAR). Belo Horizonte, Brazil, pp 420–425. https://doi.org/10.1109/ICAR46387.2019.8981618

Lu L, Hou J, Yuan S et al (2023) Deep learning-assisted real-time defect detection and closed-loop adjustment for additive manufacturing of continuous fiber-reinforced polymer composites. Robot Comput Integr Manuf 79:102431. https://doi.org/10.1016/J.RCIM.2022.102431

Li Y, Mu H, Polden J et al (2022) Towards intelligent monitoring system in wire arc additive manufacturing: a surface anomaly detector on a small dataset. Int J Adv Manuf Technol 120:5225–5242. https://doi.org/10.1007/S00170-022-09076-5

Zhang J, Lyu T, Hua Y et al (2022) Image segmentation for defect analysis in laser powder bed fusion: deep data mining of X-ray photography from recent literature. Integr Mater Manuf Innov 11:418–432. https://doi.org/10.1007/S40192-022-00272-5

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. Proc IEEE Computer Vision and Pattern Recognition 2016 (CVPR). Las Vegas, NV, USA, pp 770–778. https://doi.org/10.1109/CVPR.2016.90

Redmon J, Farhadi A (2018) YOLOv3: an incremental improvement. Preprint at https://arxiv.org/abs/1804.02767

Wu J, Huang C, Li Z et al (2023) An in situ surface defect detection method based on improved you only look once algorithm for wire and arc additive manufacturing. Rapid Prototyp J 29:910–920. https://doi.org/10.1108/RPJ-06-2022-0211

Patel S, Mekavibul J, Park J et al (2019) Using machine learning to analyze image data from advanced manufacturing processes. In 2019 systems and information engineering design symposium SIEDS 2019. Charlottesville, VA, USA, pp 1–5. https://doi.org/10.1109/SIEDS.2019.8735603

Wang C-Y, Yeh I-H, Liao H-YM (2024) YOLOv9: learning what you want to learn using programmable gradient information. Preprint at https://arxiv.org/abs/2402.13616

Li W, Zhang H, Wang G et al (2023) Deep learning based online metallic surface defect detection method for wire and arc additive manufacturing. Robot Comput Integr Manuf 80:102470. https://doi.org/10.1016/J.RCIM.2022.102470

Chen W, Zou B, Huang C et al (2023) The defect detection of 3D-printed ceramic curved surface parts with low contrast based on deep learning. Ceram Int 49:2881–2893. https://doi.org/10.1016/J.CERAMINT.2022.09.272

Shiri P, Baniasadi A (2022) Convolutional fully-connected capsule network (CFC-CapsNet): a novel and fast capsule network. J Signal Process Syst 94:645–658. https://doi.org/10.1007/S11265-021-01731-6

Cui W, Zhang Y, Zhang X et al (2020) Metal additive manufacturing parts inspection using convolutional neural network. Appl Sci 10:545. https://doi.org/10.3390/APP10020545

Zhang Y, Mi J, Li H et al (2022) In situ monitoring plasma arc additive manufacturing process with a fully convolutional network. Int J Adv Manuf Technol 120:2247–2257. https://doi.org/10.1007/S00170-022-08929-3

Zhu W, Li H, Shen S et al (2024) In-situ monitoring additive manufacturing process with AI edge computing. Opt Laser Technol 171:110423. https://doi.org/10.1016/J.OPTLASTEC.2023.110423

Minnema J, van Eijnatten M, Kouw W et al (2018) CT image segmentation of bone for medical additive manufacturing using a convolutional neural network. Comput Biol Med 103:130–139. https://doi.org/10.1016/J.COMPBIOMED.2018.10.012

Ronneberger O, Fischer P, Brox T (2015) U-net: convolutional networks for biomedical image segmentation. Lect Notes Comput Sci (including Subser Lect Notes Artif Intell Lect Notes Bioinformatics) 9351:234–241. https://doi.org/10.1007/978-3-319-24574-4_28

Jiang R (2023) Analysis of laser melting and resolidification related to additive manufacturing. Carnegie Mellon University, Thesis. Pittsburgh, PA. https://doi.org/10.1184/R1/24123495.V1

Acharya P, Chu TP, Ahmed KR, Kharel S (2022) A deep learning approach for defect detection and segmentation in X-ray computed tomography slices of additively manufactured components. Int J Artif Intell Appl 13. https://doi.org/10.5121/ijaia.2022.13401

Mutiargo B, Pavlovic M, Malcolm AA et al (2019) Evaluation of X-ray computed tomography (CT) images of additively manufactured components using deep learning. In Proceedings of the 3rd Singapore International Non-Destructive Testing Conference and Exhibition, (SINCE2019). Singapore, p 5. https://doi.org/10.3850/978-981-11-2719-9

Bellens S, Vandewalle P, Dewulf W (2021) Deep learning based porosity segmentation in X-ray CT measurements of polymer additive manufacturing parts. Procedia CIRP 96:336–341. https://doi.org/10.1016/J.PROCIR.2021.01.157

Croom BP, Berkson M, Mueller RK et al (2022) Deep learning prediction of stress fields in additively manufactured metals with intricate defect networks. Mech Mater 165:104191. https://doi.org/10.1016/J.MECHMAT.2021.104191

Cannizzaro D, Varrella AG, Paradiso S et al (2022) In-situ defect detection of metal additive manufacturing: an integrated framework. IEEE Trans Emerg Top Comput 10:74–86. https://doi.org/10.1109/TETC.2021.3108844

Iyer N, Raghavan S, Zhang Y et al (2021) Attention-based 3D neural architectures for predicting cracks in designs. In: Farkaš I, Masulli P, Otte S, Wermter S (eds) Artificial Neural Networks and Machine Learning – ICANN 2021. Lecture Notes in Computer Science (including Subser Lect Notes Artif Intell Lect Notes Bioinformatics), LNCS. 12891:179–190. https://doi.org/10.1007/978-3-030-86362-3_15

Fang Q, Tan Z, Li H et al (2021) In-situ capture of melt pool signature in selective laser melting using U-Net-based convolutional neural network. J Manuf Process 68:347–355. https://doi.org/10.1016/J.JMAPRO.2021.05.052

Zhang Y, Zhao YF (2022) Hybrid sparse convolutional neural networks for predicting manufacturability of visual defects of laser powder bed fusion processes. J Manuf Syst 62:835–845. https://doi.org/10.1016/J.JMSY.2021.07.002

Mehta M, Shao C (2022) Federated learning-based semantic segmentation for pixel-wise defect detection in additive manufacturing. J Manuf Syst 64:197–210. https://doi.org/10.1016/J.JMSY.2022.06.010

Scime L, Siddel D, Baird S, Paquit V (2020) Layer-wise anomaly detection and classification for powder bed additive manufacturing processes: a machine-agnostic algorithm for real-time pixel-wise semantic segmentation. Addit Manuf 36:101453. https://doi.org/10.1016/J.ADDMA.2020.101453

Scime L, Goldsby D, Paquit V (2023) Methods for rapid identification of anomalous layers in laser powder bed fusion. Manuf Lett 36:35–39. https://doi.org/10.1016/J.MFGLET.2023.01.003

Hu J, Shen L, Sun G (2018) Squeeze-and-excitation networks. Proc IEEE Comput Soc Conf Comput Vis Pattern Recognit 7132–7141. https://doi.org/10.1109/CVPR.2018.00745

Wong VWH, Ferguson M, Law KH et al (2022) Segmentation of additive manufacturing defects using U-Net. J Comput Inf Sci Eng 22. https://doi.org/10.1115/1.4053078

Zamiela C, Jiang Z, Stokes R et al (2023) Deep multi-modal U-Net fusion methodology of thermal and ultrasonic images for porosity detection in additive manufacturing. J Manuf Sci Eng 145. https://doi.org/10.1115/1.4056873

Jin Z, Zhang Z, Ott J, Gu GX (2021) Precise localization and semantic segmentation detection of printing conditions in fused filament fabrication technologies using machine learning. Addit Manuf 37:101696. https://doi.org/10.1016/J.ADDMA.2020.101696

Pan J, Hu D, Zhou L et al (2024) Semantic segmentation of defects based on DCNN and its application on fatigue lifetime prediction for SLM Ti-6Al-4V alloy. Philos Trans R Soc A 382. https://doi.org/10.1098/RSTA.2022.0396

Mi J, Zhang Y, Li H et al (2023) In-situ monitoring laser based directed energy deposition process with deep convolutional neural network. J Intell Manuf 34:683–693. https://doi.org/10.1007/S10845-021-01820-0

Klippstein SH, Heiny F, Pashikanti N et al (2022) Powder spread process monitoring in polymer laser sintering and its influences on part properties. JOM 74:1149–1157. https://doi.org/10.1007/S11837-021-05042-W

Chen HY, Lin CC, Horng MH et al (2022) Deep Learning applied to defect detection in powder spreading process of magnetic material additive manufacturing. Mater 15:5662. https://doi.org/10.3390/MA15165662

Cohn R, Anderson I, Prost T et al (2021) Instance segmentation for direct measurements of satellites in metal powders and automated microstructural characterization from image data. JOM 73:2159–2172. https://doi.org/10.1007/S11837-021-04713-Y

Bakas G, Dimitriadis S, Deligiannis S et al (2022) A tool for rapid analysis using image processing and artificial intelligence: automated interoperable characterization data of metal powder for additive manufacturing with SEM case. Met 12:1816. https://doi.org/10.3390/MET12111816

Xia C, Pan Z, Zhang S et al (2020) Mask R-CNN-based welding image object detection and dynamic modelling for WAAM. Trans Intell Weld Manuf 57–73. https://doi.org/10.1007/978-981-15-7215-9_4

Han F, Liu S, Liu S et al (2020) Defect detection: defect classification and localization for additive manufacturing using deep learning method. In 21st International Conference on Electronic Packaging Technology ICEPT 2020. Guangzhou, China, pp 1–4. https://doi.org/10.1109/ICEPT50128.2020.9202566

Xiao L, Lu M, Huang H (2020) Detection of powder bed defects in selective laser sintering using convolutional neural network. Int J Adv Manuf Technol 107:2485–2496. https://doi.org/10.1007/S00170-020-05205-0

Lim JXY, Pham QC (2021) Automated post-processing of 3D-printed parts: artificial powdering for deep classification and localisation. Virtual Phys Prototyp 16:333–346. https://doi.org/10.1080/17452759.2021.1927762

Lin TY, Maire M, Belongie S et al (2014) Microsoft COCO: common objects in context. In: Fleet D, Pajdla T, Schiele B, Tuytelaars T (eds) Computer Vision – ECCV 2014. LNCS, vol 8693. Springer, Cham, pp 740–755. https://doi.org/10.1007/978-3-319-10602-1_48

Ferguson M, Ak R, Lee YTT, Law KH (2018) Detection and segmentation of manufacturing defects with convolutional neural networks and transfer learning. Preprint at https://arxiv.org/abs/1808.02518

Caiazzo B, Di Nardo M, Murino T et al (2022) Towards zero defect manufacturing paradigm: a review of the state-of-the-art methods and open challenges. Comput Ind 134:103548. https://doi.org/10.1016/J.COMPIND.2021.103548

Moretti M, Rossi A, Senin N (2021) In-process monitoring of part geometry in fused filament fabrication using computer vision and digital twins. Addit Manuf 37:101609. https://doi.org/10.1016/J.ADDMA.2020.101609

Wang T, Kwok TH, Zhou C, Vader S (2018) In-situ droplet inspection and closed-loop control system using machine learning for liquid metal jet printing. J Manuf Syst 47:83–92. https://doi.org/10.1016/J.JMSY.2018.04.003

Wang Y, Lu J, Zhao Z et al (2021) Active disturbance rejection control of layer width in wire arc additive manufacturing based on deep learning. J Manuf Process 67:364–375. https://doi.org/10.1016/J.JMAPRO.2021.05.005

Jin Z, Zhang Z, Gu GX (2019) Autonomous in-situ correction of fused deposition modeling printers using computer vision and deep learning. Manuf Lett 22:11–15. https://doi.org/10.1016/J.MFGLET.2019.09.005

Charles A, Salem M, Moshiri M et al (2021) In-process digital monitoring of additive manufacturing: proposed machine learning approach and potential implications on sustainability. Smart Innov Syst Technol 200:297–306. https://doi.org/10.1007/978-981-15-8131-1_27

Goh GD, Bin Hamzah NM, Yeong WY (2022) Anomaly detection in fused filament fabrication using machine learning. 3D Print Addit Manuf 10:428–437. https://doi.org/10.1089/3DP.2021.0231

Lyu J, Manoochehri S (2021) Online convolutional neural network-based anomaly detection and quality control for fused filament fabrication process. Virtual Phys Prototyp 16:160–177. https://doi.org/10.1080/17452759.2021.1905858

Yao B, Imani F, Yang H (2018) Markov decision process for image-guided additive manufacturing. IEEE Robot Autom Lett 3:2792–2798. https://doi.org/10.1109/LRA.2018.2839973

Liu C, Law ACC, Roberson D, Kong Z (James) (2019) Image analysis-based closed loop quality control for additive manufacturing with fused filament fabrication. J Manuf Syst 51:75–86. https://doi.org/10.1016/J.JMSY.2019.04.002

Singer G, Cohen Y (2021) A framework for smart control using machine-learning modeling for processes with closed-loop control in Industry 4.0. Eng Appl Artif Intell 102:104236. https://doi.org/10.1016/J.ENGAPPAI.2021.104236

Zhong Q, Tian X, Huang X et al (2021) Using feedback control of thermal history to improve quality consistency of parts fabricated via large-scale powder bed fusion. Addit Manuf 42:101986. https://doi.org/10.1016/J.ADDMA.2021.101986

Mireles J, Ridwan S, Morton PA et al (2015) Analysis and correction of defects within parts fabricated using powder bed fusion technology. Surf Topogr Metrol Prop 3:034002. https://doi.org/10.1088/2051-672X/3/3/034002

Deneault JR, Chang J, Myung J et al (2021) Toward autonomous additive manufacturing: Bayesian optimization on a 3D printer. MRS Bull 46:566–575. https://doi.org/10.1557/S43577-021-00051-1

Liu C, Le Roux L, Ji Z et al (2020) Machine learning-enabled feedback loops for metal powder bed fusion additive manufacturing. Procedia Comput Sci 176:2586–2595. https://doi.org/10.1016/J.PROCS.2020.09.314

Baumann FW, Sekulla A, Hassler M et al (2018) Trends of machine learning in additive manufacturing. Int J Rapid Manuf 7:310. https://doi.org/10.1504/IJRAPIDM.2018.095788

Yang Z, Lu Y, Yeung H, Krishnamurty S (2019) Investigation of deep learning for real-time melt pool classification in additive manufacturing. IEEE International Conference on Automation Science and Engineering (CASE), Vancouver, BC, Canada, pp 640–647. https://doi.org/10.1109/COASE.2019.8843291

Saluja A, Xie J, Fayazbakhsh K (2020) A closed-loop in-process warping detection system for fused filament fabrication using convolutional neural networks. J Manuf Process 58:407–415. https://doi.org/10.1016/J.JMAPRO.2020.08.036

Prakash E, Subramaniyan M, Naveen Sankar AK, Chandra Kumar K (2021) Additive manufacturing parameter optimization with automated post-printing flaw detection using convolutional neural networks. Springer Proc Mater 5:127–135. https://doi.org/10.1007/978-981-15-8319-3_14

Xie J, Saluja A, Rahimizadeh A, Fayazbakhsh K (2022) Development of automated feature extraction and convolutional neural network optimization for real-time warping monitoring in 3D printing. Int J Comput Integr Manuf 35:813–830. https://doi.org/10.1080/0951192X.2022.2025621

Schlagenhauf T, Burghardt N (2021) Intelligent vision based wear forecasting on surfaces of machine tool elements. SN Appl Sci 3:1–13. https://doi.org/10.1007/S42452-021-04839-3

Cho HW, Shin SJ, Seo GJ et al (2022) Real-time anomaly detection using convolutional neural network in wire arc additive manufacturing: Molybdenum material. J Mater Process Technol 302:117495. https://doi.org/10.1016/J.JMATPROTEC.2022.117495

Zhao H, Qi X, Shen X et al (2018) ICNet for real-time semantic segmentation on high-resolution images. In: Ferrari V, Hebert M, Sminchisescu C, Weiss Y (eds) Computer Vision – ECCV 2018. Lect Notes Comput Sci 11207:405–420. https://doi.org/10.1007/978-3-030-01219-9_25

Zhou Z, Rahman Siddiquee MM, Tajbakhsh N, Liang J (2018) UNet++: a nested U-Net architecture for medical image segmentation. In: Stoyanov D et al (eds) Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support. DLMIA ML-CDS 2018. Lect Notes Comput Sci, vol 11045. Springer, Cham. https://doi.org/10.1007/978-3-030-00889-5_1

Tan Z, Fang Q, Li H et al (2020) Neural network based image segmentation for spatter extraction during laser-based powder bed fusion processing. Opt Laser Technol 130:106347. https://doi.org/10.1016/J.OPTLASTEC.2020.106347

Wang R, Cheung CF (2022) CenterNet-based defect detection for additive manufacturing. Expert Syst Appl 188:116000. https://doi.org/10.1016/J.ESWA.2021.116000

Wang C-Y, Bochkovskiy A, Liao H-YM (2023) YOLOv7: trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, pp 7464–7475. https://doi.org/10.1109/CVPR52729.2023.00721

Gobert C, Kudzal A, Sietins J et al (2020) Porosity segmentation in X-ray computed tomography scans of metal additively manufactured specimens with machine learning. Addit Manuf 36:101460. https://doi.org/10.1016/J.ADDMA.2020.101460

Jiang R, Hu J, Lou P (2021) A DeepLab-based segmentation network for screw images. In Proceedings of the 2021 5th International Conference on Machine Learning and Soft Computing (ICMLSC '21). ACM Int Conf Proceeding Ser, New York, NY, USA, pp 84–89. https://doi.org/10.1145/3453800.3453816

Lin D, Li Y, Prasad S et al (2020) CAM-UNET: class activation MAP guided UNET with feedback refinement for defect segmentation. In: 2020 IEEE International Conference on Image Processing (ICIP). IEEE, Abu Dhabi, United Arab Emirates, 25–28 October 2020, pp 2131–2135

Dosovitskiy A, Beyer L, Kolesnikov A et al (2020) An image is worth 16x16 words: transformers for image recognition at scale. ICLR 2021 - 9th International Conference on Learning Representations. https://doi.org/10.48550/arXiv.2010.11929

Li XY, Liu FL, Zhang MN et al (2023) A combination of vision- and sensor-based defect classifications in extrusion-based additive manufacturing. J Sensors. https://doi.org/10.1155/2023/1441936

Zhang W, Wang J, Tang M et al (2024) 2-D transformer-based approach for process monitoring of metal 3-D printing via coaxial high-speed imaging. IEEE Trans Ind Informatics 20:3767–3777. https://doi.org/10.1109/TII.2023.3314071

Liu Z, Lin Y, Cao Y et al (2021) Swin transformer: hierarchical vision transformer using shifted windows. Proc IEEE Int Conf Comput Vis 9992–10002. https://doi.org/10.1109/ICCV48922.2021.00986

Wen Y, Cheng J, Feng Y et al (2024) Application of improved YOLOv7 based on Swin Transformer in defect detection of 3D printed lattice structures. Proc SPIE 13071:15–19. https://doi.org/10.1117/12.3025552

Hinz T, Fisher M, Wang O, Wermter S (2021) Improved techniques for training single-image GANs. Proc - 2021 Winter Conference on Applications of Computer Vision (WACV), Waikoloa, Hawaii, USA. pp 1299–1308. https://doi.org/10.1109/WACV48630.2021.00134

Liu W, Wang Z, Tian L et al (2021) Melt pool segmentation for additive manufacturing: a generative adversarial network approach. Comput Electr Eng 92:107183. https://doi.org/10.1016/J.COMPELECENG.2021.107183

Petrik J, Kavas B, Bambach M (2023) MeltPoolGAN: auxiliary classifier generative adversarial network for melt pool classification and generation of laser power, scan speed and scan direction in Laser Powder Bed Fusion. Addit Manuf 78:103868. https://doi.org/10.1016/J.ADDMA.2023.103868

Kim F, Garboczi E, Moylan S, Slotwinski J (2017) CoCr AM XCT data | NIST. https://www.nist.gov/el/intelligent-systems-division-73500/cocr-am-xct-data. Accessed 26 July 2024

Snell J, Swersky K, Zemel R (2017) Prototypical networks for few-shot learning. Adv Neural Inf Process Syst 30. Curran Associates, Inc. https://proceedings.neurips.cc/paper_files/paper/2017/file/cb8da6767461f2812ae4290eac7cbc42-Paper.pdf

Li C, Cabrera D, Sancho F et al (2021) One-shot fault diagnosis of three-dimensional printers through improved feature space learning. IEEE Trans Ind Electron 68:8768–8776. https://doi.org/10.1109/TIE.2020.3013546

Wang K (2023) Contrastive learning-based semantic segmentation for in-situ stratified defect detection in additive manufacturing. J Manuf Syst 68:465–476. https://doi.org/10.1016/J.JMSY.2023.05.001

Lyu J, Akhavan J, Manoochehri S (2022) Image-based dataset of artifact surfaces fabricated by additive manufacturing with applications in machine learning. Data Br 41:107852. https://doi.org/10.1016/J.DIB.2022.107852

Luo Y, Chen Y, Wang J-G, Xu K (2021) FPGA-based acceleration on additive manufacturing defects inspection. Sensors 21:2123. https://doi.org/10.3390/S21062123

Hu Z, Mahadevan S (2017) Uncertainty quantification and management in additive manufacturing: current status, needs, and opportunities. Int J Adv Manuf Technol 93:2855–2874. https://doi.org/10.1007/S00170-017-0703-5

Wang Y, Lin Y, Zhong RY, Xu X (2019) IoT-enabled cloud-based additive manufacturing platform to support rapid product development. Int J Prod Res 57:3975–3991. https://doi.org/10.1080/00207543.2018.1516905

Hertlein N, Deshpande S, Venugopal V et al (2020) Prediction of selective laser melting part quality using hybrid Bayesian network. Addit Manuf 32:101089. https://doi.org/10.1016/J.ADDMA.2020.101089

Ko H, Witherell P, Lu Y et al (2021) Machine learning and knowledge graph based design rule construction for additive manufacturing. Addit Manuf 37:101620. https://doi.org/10.1016/J.ADDMA.2020.101620

Ethics declarations

Consent to participate

All co-authors agree to participate in the presented manuscript.

Consent for publication

This work has been approved by all co-authors for publication.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Deshpande, S., Venugopal, V., Kumar, M. et al. Deep learning-based image segmentation for defect detection in additive manufacturing: an overview. Int J Adv Manuf Technol 134, 2081–2105 (2024). https://doi.org/10.1007/s00170-024-14191-6
