Abstract

Although the raison d’être of the brain is the survival of the body, there are relatively few theoretical studies of closed-loop rhythmic motor control systems. In this paper we provide a unified framework, based on variational analysis, for investigating the dual goals of performance and robustness in powerstroke–recovery systems. To demonstrate our variational method, we augment two previously published closed-loop motor control models by equipping each model with a performance measure based on the rate of progress of the system relative to a spatially extended external substrate, such as a long strip of seaweed for a feeding task, or the ground for a locomotor task. The sensitivity measure quantifies the ability of the system to maintain performance in response to external perturbations, such as an applied load. Motivated by a search for optimal design principles for feedback control achieving the complementary requirements of efficiency and robustness, we discuss the performance–sensitivity patterns of the systems featuring different sensory feedback architectures. In a paradigmatic half-center oscillator-motor system, we observe that the excitation–inhibition property of feedback mechanisms determines the sensitivity pattern while the activation–inactivation property determines the performance pattern. Moreover, we show that the nonlinearity of the sigmoid activation of feedback signals allows the existence of optimal combinations of performance and sensitivity. In a detailed hindlimb locomotor system, we find that force-dependent feedback can simultaneously optimize both performance and robustness, while length-dependent feedback variations result in significant performance-versus-sensitivity tradeoffs. Thus, this work provides an analytical framework for studying feedback control of oscillations in nonlinear dynamical systems, leading to several insights that have the potential to inform the design of control or rehabilitation systems.


1 Introduction

Physiological systems underlying vital behaviors such as breathing, walking, crawling, and feeding, must generate motor rhythms that are not only efficient, but also robust against changes in operating conditions. Although central neural circuits have been shown to be capable of producing rhythmic motor outputs in isolation from the periphery (Brown 1911, 1914; Harris-Warrick and Cohen 1985; Pearson 1985; Smith et al. 1991), the role of sensory feedback should not be underestimated. Sensory feedback can play a crucial role in stabilizing motor activity in response to unexpected conditions. For example, modeling work suggests that walking movements can be stably restored after spinal cord injury by enhancing the strengths of the afferent feedback pathways to the spinal central pattern generator (CPG) (Markin et al. 2010; Spardy et al. 2011). Feedback control can also improve the performance and efficiency of movements. For instance, in a model of feeding motor patterns in the marine mollusk Aplysia californica, seaweed intake can be increased by strengthening the gain of sensory feedback to a specific motor neural pool (Wang et al. 2022).

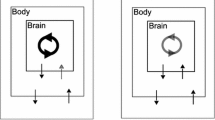

We are interested in understanding how sensory feedback contributes to control and stabilization within a specific class of rhythmic motor behaviors, namely, behaviors in which an animal (or robot) repeatedly engages and disengages with the outside world (see Fig. 1, top). We refer to the phase of the motion during which the animal is in contact with an external substrate as the power stroke, and the phase during which the animal is disengaged as the recovery phase. The decomposition of a repetitive movement into powerstroke and recovery applies naturally to many motor control systems, including locomotion (Jahn and Votta 1972) and swallowing (Shaw et al. 2015); a similar dynamical structure also appears in mechanical stick–slip systems (Galvanetto and Bishop 1999) as well as abstract two-stroke relaxation oscillators (Jelbart and Wechselberger 2020). In the motor control context, when the animal is in contact with an external substrate or load opposing the motion, we say the animal makes “progress” (food is consumed, distance is traveled, oxygen is absorbed) relative to the outside world. During the recovery phase, the animal disconnects from the external component and repositions relative to the substrate in order to prepare for the next power stroke. Consider, for example, the ingestive behavior of Aplysia (Shaw et al. 2015; Lyttle et al. 2017; Wang et al. 2022). When the animal’s grasper is closed on a stipe of seaweed, it drags the food into the buccal cavity; meanwhile, the food applies a mechanical load on the grasper. Then the grasper opens, releasing its grip on the food. The grasper moves in the absence of the force exerted by the seaweed and returns to the original position to begin the next swallowing cycle.

Fig. 1 Schematic of dynamics and analysis of a powerstroke–recovery system. Top left: The system trajectory sketched in a 3D space, for illustration. The vertical axis represents a body variable (e.g., foot) and the xy-plane represents brain variables. A specific model system can have multiple brain and body variables. The system trajectory makes an entry to a constraint surface (blue shaded parallelogram) at the landing point A, followed by motion confined to move along the surface (red wave) and then a liftoff at point B back into the unconstrained space. The motion of the system is thereby partitioned into a powerstroke phase and a recovery phase. Top right: A system of differential equations governs the dynamics of the powerstroke–recovery system. The brain and body variables are denoted by \(\textbf{a}\) and \(\textbf{x}\), respectively. During recovery the body variable is unconstrained, while it is confined to a lower-dimensional manifold during powerstroke. An external load, represented by \(\kappa \), is imposed on the system when the body is in contact with the hard surface. Bottom: Two objectives in the control of the system’s response to a sustained external perturbation away from a default value \(\kappa _0\). Performance, \(Q_0\), measures the average rate of task progress of the system (e.g., food consumed, distance traveled), defined to be the total progress \(y_0\) over a cycle divided by the cycle period \(T_0\), when \(\kappa =\kappa _0\) (see Eq. (2)). Sensitivity, \(S_0\), measures the (infinitesimal) robustness of the system performance against the perturbation, defined to be the derivative of the performance with respect to the perturbation parameter evaluated at \(\kappa _0\). It accounts for the interaction of both the shape and timing effects of the perturbation on the trajectory: \(y_1\) is the linearized shift in the shape of the trajectory while \(T_1\) is the linearized shift in the trajectory timing (see Eq. (12)). The two quantities can be captured by recently developed variational analysis tools, the infinitesimal shape response curve (iSRC) and the local timing response curve (lTRC), respectively (Wang et al. 2021).

In this paper, we present a novel analysis of feedback control for powerstroke–recovery systems. To quantitatively evaluate the behavior of a system controlled by different feedback mechanisms, we measure the sensitivity (or robustness) and performance (or efficiency) (see Fig. 1, bottom). The complementary objectives of sensitivity and performance have been studied in a variety of motor control systems, from both empirical and theoretical perspectives (Lee and Tomizuka 1996; Yao et al. 1997; Ronsse et al. 2008; Hutter et al. 2014; Lyttle et al. 2017; Sharbafi et al. 2020; Mo et al. 2023). Engineers, biologists, neuroscientists, and applied mathematicians interpret performance and robustness in myriad ways. Here we define the performance of a powerstroke–recovery system to be the total progress divided by the period of the rhythm (i.e., the average rate of progress), and the sensitivity to be the ability of the system to maintain performance in response to some specific external perturbation, such as an increased mechanical resistance while pulling on a load, or increased slope while walking. That is, we take the sensitivity to be the derivative of the performance with respect to the external perturbation parameter. As a step towards first-principles-based design of sensory feedback mechanisms, we aim to understand what aspects of sensory feedback contribute to the coexistence of high performance and low sensitivity.

The ubiquity of powerstroke–recovery systems, and the importance of the dual goals of robustness and efficiency, motivate us to develop analytical tools for systematically studying both quantities simultaneously. In this work we apply mathematical tools based on variational analysis to evaluate the two objectives applicable for any powerstroke–recovery system. The key quantities in our analysis are the infinitesimal shape response curve (iSRC) and local timing response curve (lTRC) recently established and validated by Wang et al. (2021, 2022) and generalized in Yu and Thomas (2022); Yu et al. (2023). The iSRC describes, to first order, the distortion of an oscillator trajectory—the shape response—under a sustained perturbation. In contrast, the lTRC captures the effect of the perturbation on the timing of the oscillator trajectory within any defined segments of the trajectory (such as the powerstroke and recovery phases). Both the iSRC and the lTRC complement the more widely known infinitesimal phase response curve (iPRC), which quantifies the effect of a transient perturbation on the global limit cycle timing (Brown et al. 2004; Izhikevich and Ermentrout 2008; Ermentrout and Terman 2010; Schultheiss et al. 2012; Zhang and Lewis 2013).

Based on the iSRC and lTRC approach, we propose a general framework to investigate robust and efficient control through diverse feedback architectures. We apply our method to two neuro-mechanical models, each possessing a natural powerstroke–recovery structure but different in their levels of details and perturbations. The first model is based on an abstract CPG-feedback-biomechanics system introduced in Yu and Thomas (2021) which studied the relative contributions of feedforward and feedback control (an idea going back to Kuo 2002). We extend this model to incorporate an externally applied load, enabling us to define quantitative measures of both performance and robustness, as the system alternately grasps and releases the external substrate. The second model, due to Markin et al. (Markin et al. 2010; Spardy et al. 2011), represents a locomotor system with a single-joint limb, and features more detailed CPG circuitry as well as more realistic afferent feedback pathways. We modify the Markin model so that the limb “walks” up an “incline”; modifying the angle of the incline introduces a parametric perturbation that allows us to define performance and robustness.

The activity of sensory feedback pathways is difficult to measure in many physiological systems; for this reason sensory feedback is the “missing link” for understanding the design and function of many biological motor systems. Specifically, in many experimental biological systems, the dynamics of the isolated CPG and the form of the muscle activation in response to descending motor signals are well characterized, while the precise form of the sensory feedback remains unknown. From a practical perspective, descending motor signals are generally carried by large-diameter axons from which it is easier to record high-quality (high signal-to-noise) traces, relative to ascending sensory signals, which are generally carried by much smaller diameter axons with poor signal-to-noise properties.

Given a particular specification of central neural circuit, descending output, and biomechanical response elements, but with the precise form of sensory feedback unknown, we can think of the pursuit of performance and/or robustness as an optimization (or dual optimization) problem on the space of sensory feedback functions. However, this is an infinite dimensional space of inputs to a highly nonlinear system. That is, the mapping from sensory feedback function to system trajectories to system performance and/or robustness is highly nonlinear and possibly non-convex. It may have multiple nonequivalent optima. Therefore, to restrict the problem to a manageable scope, in the specific cases studied here we restrict attention to sensory feedback functions with a prescribed, but plausible form, such as a sigmoid specified by a threshold and slope parameters, or multiple channels with different relative gain parameters, and study the restricted optimization problem there. Moreover, for simplicity, in the models we consider here, we restrict attention to sensory feedback that either monotonically increases or monotonically decreases with variables such as muscle length, tension, and/or velocity.

Our analysis of these two systems (one more abstract, the other more realistic) illustrates a technical framework for studying performance and sensitivity of powerstroke–recovery motor systems, leading to several insights that have the potential to inform the design of control or rehabilitation systems. For example, (i) the excitation–inhibition property of feedback signals determines the sensitivity pattern while the activation–inactivation property determines the performance pattern; (ii) the strong nonlinearity of feedback activation with respect to biomechanical variables may contribute to achievable performance–sensitivity optima; (iii) force-dependent feedback can prevent the performance/robustness tradeoffs that commonly occur with length-dependent feedback. These findings may yield important information for future work modeling biphasic rhythm generation, in that they provide insights that could guide the design of feedback systems to accomplish well-balanced efficient and robust powerstroke–recovery activities in biological and robotic experiments.

The broad aim of this paper is to establish variational methods for multi-objective optimization of powerstroke–recovery systems. To this end, we organize the paper as follows.

- We introduce and give a general definition of a “powerstroke–recovery” motor control system at the beginning of Sect. 2. Although such systems are ubiquitous in the physiology of motor control, to the best of our knowledge they have not previously been systematically defined or studied.

- We illustrate the particular features of recently established variational tools (such as the local timing response curve) as applied to powerstroke–recovery systems, in the rest of Sect. 2. For these systems, the quantities of interest (performance and sensitivity) take on specific forms that we describe in detail.

- To show the breadth of applicability of the general analysis, we apply it to example model systems embodying a variety of feedback mechanisms, namely the HCO model with an external load (Sect. 3) and the hindlimb locomotor model of Markin et al. (Sect. 4).

- In Sect. 5 we summarize the framework, as well as the main observations and insights that we obtain from the models; we also discuss limitations, connections to previous literature, and possible implications of our results for biology and engineering as well as future directions.

2 Mathematical formulation

The motor systems we consider integrate central neural circuitry, biomechanics, and sensory inputs from the periphery to form a closed-loop control system. The model systems we study fall within the following general framework (Shaw et al. 2015; Lyttle et al. 2017; Yu and Thomas 2021):
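A form of system (1) consistent with the term-by-term description that follows is sketched here. The additive arrangement of the four vector fields is an assumption inferred from that description, not a verbatim copy of the published display.

```latex
% Eq. (1): closed-loop neuromechanical system (reconstructed form)
\begin{aligned}
\frac{d\textbf{a}}{dt} &= \textbf{f}(\textbf{a}) + \textbf{g}(\textbf{a},\textbf{x}),\\
\frac{d\textbf{x}}{dt} &= \textbf{h}(\textbf{a},\textbf{x}) + \textbf{j}(\textbf{x},\kappa).
\end{aligned}
```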

Here, \(\textbf{a}\) and \(\textbf{x}\) are vectors representing the neural activity variables and mechanical state variables, respectively; the vector field \(\textbf{f}(\textbf{a})\) represents the intrinsic neural dynamics of the central pattern generator when isolated from the rest of the body; \(\textbf{h}(\textbf{a},\textbf{x})\) captures the biomechanical dynamics driven by the central inputs; \(\textbf{g}(\textbf{a},\textbf{x})\) carries the sensory feedback from the periphery, which modulates the neural dynamics; \(\textbf{j}(\textbf{x},\kappa )\) is an externally applied load to the mechanical variables controlled by a load parameter \(\kappa \).

In the powerstroke–recovery systems, we assume the load interacts with the mechanics only during the powerstroke phase. The portion of the trajectory comprising the powerstroke phase is specified separately for each model. For the purposes of this paper we assume the vector fields \(\textbf{f},\textbf{g},\textbf{h},\textbf{j}\) are sufficiently smooth (e.g., twice differentiable). Specifically, we assume that any nonsmoothness in the vector fields is limited to a finite number of transition surfaces—for example at points marking the powerstroke–recovery transitions—and that the limit cycle trajectories are themselves piecewise differentiable, with at most a finite number of points of nondifferentiability.

For many naturally occurring control systems, the mechanical variables \(\textbf{x}\) may include both the position and velocity of different body components, as well as muscle activation variables. The sensory feedback function \(\textbf{g}\) may be difficult to ascertain experimentally. For example, the feedback could have an excitatory effect on the neural dynamics, or an inhibitory effect, or a mixture at different points within a single movement; it may depend not only on neural outputs but also the length, velocity, or tension of the mechanical components; it may arise from multiple channels each with different gain. Given the broad varieties of the possible feedback functions, we restrict the scope of our investigation to some biologically plausible forms for the specific models we consider in Sects. 3 and 4.

2.1 Performance and sensitivity

Suppose for \(\kappa \in \mathcal {I}\subset \mathbb {R}\), system (1) has an asymptotically stable limit cycle solution \(\gamma _\kappa (t)\) with period \(T_\kappa \). Let \(q_\kappa \) represent the rate at which the system advances relative to the outside world, and let \(y_\kappa \) represent the total progress achieved over one limit cycle, i.e.,
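Under the assumption that progress accumulates as the time integral of the rate \(q_\kappa \) along the limit cycle, the total progress per cycle can be written as follows (the argument \(\gamma _\kappa (t)\) of the integrand is notation introduced here for illustration).

```latex
% Total progress over one limit cycle (reconstructed form)
y_\kappa = \int_0^{T_\kappa} q_\kappa\big(\gamma_\kappa(t)\big)\,dt .
```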

We note that in general, the instantaneous performance measure q may depend both on the system variables \(\textbf{x}\) and on the control parameter \(\kappa \).

We consider the task performance of the system, denoted by Q, to be the progress divided by the limit-cycle period, or equivalently, the mean value of the rate of progress averaged around the limit cycle, defined as follows
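The two equivalent descriptions in the preceding sentence (mean rate of progress, and progress divided by period) suggest the following form for Eq. (2). It is a sketch assembled from those verbal definitions.

```latex
% Eq. (2): task performance (reconstructed form)
Q(\kappa) = \frac{1}{T_\kappa}\int_0^{T_\kappa} q_\kappa\big(\gamma_\kappa(t)\big)\,dt
          = \frac{y_\kappa}{T_\kappa}.
```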

For a powerstroke–recovery system, we choose the time coordinate so that \(t=0\) coincides with the beginning of the powerstroke phase, and write \(T_\kappa ^\text {ps}\) for the duration of the power stroke. We adopt the convention that during the recovery phase, the position with respect to the outside world is held fixed (\(q_\kappa \equiv 0\)), and write \(T_\kappa ^\text {re}\) for the recovery phase duration (\(T_\kappa ^\text {ps}+T_\kappa ^\text {re}=T_\kappa \)). In such a system, we write the performance (2) as
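Because \(q_\kappa \equiv 0\) during recovery, the integral over the full period collapses to the powerstroke interval. Assuming the mean-rate form of the performance, Eq. (3) would read:

```latex
% Eq. (3): performance restricted to the power stroke (reconstructed form)
Q(\kappa) = \frac{1}{T_\kappa}\int_0^{T_\kappa^{\text{ps}}} q_\kappa\big(\gamma_\kappa(t)\big)\,dt .
```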

Assume that \(\kappa _0\in \mathcal {I}\), which represents the unperturbed load. When the system is subjected to a small static perturbation on the load, \(\kappa _0\rightarrow \kappa _\epsilon =\kappa _0+\epsilon \in \mathcal {I}\), the original limit cycle trajectory \(\gamma _{\kappa _0}\) is shifted to a new trajectory \(\gamma _{\kappa _\epsilon }\), and its ability to resist the external change to maintain the performance is considered as a measure of robustness for the system. Since the sensory feedback pathways regulate the system dynamics, it would be desirable to obtain a feedback function \(\textbf{g}\) so that the system is most robust against the load change, i.e.,
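One way to state the robustness objective (4), assuming robustness is measured by the absolute change in performance under the load shift, is:

```latex
% Eq. (4): robustness as a minimization over feedback functions (assumed form)
\min_{\textbf{g}} \big|\, Q(\kappa_\epsilon) - Q(\kappa_0) \,\big| .
```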

Suppose we can expand \(Q(\kappa _\epsilon )\) around \(\kappa _0\):
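The expansion meant here is presumably the standard first-order Taylor series in \(\epsilon =\kappa _\epsilon -\kappa _0\):

```latex
% First-order Taylor expansion of the performance about the unperturbed load
Q(\kappa_\epsilon) = Q(\kappa_0) + \epsilon\,\frac{\partial Q}{\partial \kappa}(\kappa_0) + O(\epsilon^2).
```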

Then the minimization problem (4) to the first order reduces to
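Dropping the \(O(\epsilon ^2)\) remainder and the constant factor \(|\epsilon |\) from the difference \(Q(\kappa _\epsilon )-Q(\kappa _0)\) gives the first-order problem below, a sketch that assumes the absolute-value form of the objective.

```latex
% First-order reduction of the robustness objective (assumed form)
\min_{\textbf{g}} \left| \frac{\partial Q}{\partial \kappa}(\kappa_0) \right| .
```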

We quantify the sensitivity of the original system to be \(S=\left| \frac{\partial Q}{\partial \kappa }(\kappa _0)\right| \), which describes the (infinitesimal) response of the task performance to the external perturbation on the load. When \(S=0\) with a certain feedback function, \(Q(\kappa _\epsilon )\approx Q(\kappa _0)\), which implies a strong ability of the system to maintain performance homeostasis. Define a (linear) functional \(J: q\rightarrow S\); the problem then becomes a functional minimization problem, \(\min _\textbf{g}J[\textbf{g}]\), which attains its minimum at some feedback function \(\textbf{g}^*\) when the functional derivative \(\partial J/\partial \textbf{g}^*=0\). Finding \(\textbf{g}^*\) when the underlying system has a limit cycle (as opposed to the more often studied case of fixed-point homeostasis) is an open problem that we do not attempt to solve here. Instead, we focus on a limited range of \(\textbf{g}\) functions with practical significance and investigate the constrained optimization problem for the optimal feedback structure within that range.

2.2 Variational analysis

Variational analysis using the infinitesimal shape response curve (iSRC) and the local timing response curve (lTRC) is the key tool in our derivation of the performance sensitivity \(S=\left| \frac{\partial Q}{\partial \kappa }(\kappa _0)\right| \). In this section, we present a brief review of the theory and then provide two analytical methods to calculate the sensitivity. More mathematical details are given in Appendix C.

Consider system (1) written in the form
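With the stacked state \(\textbf{z}\) and vector field \(\textbf{F}_\kappa \) defined in the next sentence, the compact form is presumably:

```latex
% Compact form of system (1) (reconstructed)
\frac{d\textbf{z}}{dt} = \textbf{F}_\kappa(\textbf{z}).
```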

where \(\textbf{z}=(\textbf{a},\textbf{x})^\intercal \) and \(\textbf{F}_\kappa (\textbf{z})\) is the corresponding vector field parameterized by the load \(\kappa \in \mathcal {I}\). For convenience, we write trajectories \(\gamma _{\kappa _\epsilon }\) as \(\gamma _{\epsilon }\) and \(\gamma _{\kappa _0}\) as \(\gamma _{0}\); we write other quantities similarly. Expanding the perturbed trajectory yields
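A form of the series (6) consistent with the description of \(\tau _\epsilon \) and \(\gamma _1\) that follows is given below. Placing the time rescaling on the perturbed trajectory is an assumption based on the sentence that the timing function "rescales the perturbed trajectory to match the unperturbed trajectory".

```latex
% Eq. (6): expansion of the time-rescaled perturbed trajectory (reconstructed form)
\gamma_\epsilon\big(\tau_\epsilon(t)\big) = \gamma_0(t) + \epsilon\,\gamma_1(t) + O(\epsilon^2).
```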

The timing function \(\tau _\epsilon (t)\) rescales the perturbed trajectory to match the unperturbed trajectory, so that the series (6) is uniform with respect to the time coordinate. As discussed in Wang et al. (2021), \(\tau _\epsilon (t)\) can be any smooth, monotonically increasing function mapping the interval \([0,T_0)\) to the interval \([0,T_\epsilon )\). We discuss the choice of \(\tau _\epsilon \) further below. The linear shift in the shape of the unperturbed trajectory, \(\gamma _1(t)\), is referred to as the iSRC. It satisfies a nonhomogeneous variational equation (Wang et al. 2021; Yu and Thomas 2022)
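The variational equation can be recovered by differentiating \(\frac{d}{dt}\gamma _\epsilon (\tau _\epsilon (t))=\tau _\epsilon '(t)\,\textbf{F}_\epsilon (\gamma _\epsilon (\tau _\epsilon (t)))\) with respect to \(\epsilon \) at \(\epsilon =0\), using \(\tau _0(t)=t\). The result below is a derivation sketch under that assumption; its term ordering may differ from the published Eq. (7).

```latex
% Eq. (7): nonhomogeneous variational equation for the iSRC (derivation sketch)
\frac{d\gamma_1(t)}{dt}
  = D\textbf{F}_0\big(\gamma_0(t)\big)\,\gamma_1(t)
  + \nu_1(t)\,\textbf{F}_0\big(\gamma_0(t)\big)
  + \frac{\partial \textbf{F}_\kappa}{\partial \kappa}\big(\gamma_0(t)\big)\Big|_{\kappa=\kappa_0}.
```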

where \(\nu _1(t)=\frac{\partial ^2\tau _\epsilon (t)}{\partial \epsilon \partial t}\big |_{\epsilon =0}\) measures the local timing sensitivity to the perturbation. The initial condition for the iSRC Eq. (7) is
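Reading the phrase "linear displacement of the unperturbed intersection point" as a derivative with respect to \(\epsilon \), the initial condition would take the following form (an assumed reconstruction):

```latex
% Initial condition for the iSRC on the Poincare section (assumed form)
\gamma_1(0) = \lim_{\epsilon \to 0} \frac{\textbf{p}_\epsilon - \textbf{p}_0}{\epsilon}.
```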

where \(\textbf{p}_\epsilon \) and \(\textbf{p}_0\) represent the intersection points of the trajectories with a smooth Poincaré section transverse to both the perturbed and unperturbed limit cycles, so that \(\gamma _1(0)\) indicates the linear displacement of the unperturbed intersection point. For more details about the iSRC, see Appendix C.2, Wang et al. (2021) and Yu and Thomas (2022).

To solve the iSRC Eq. (7), the lTRC is built to yield the timing sensitivity \(\nu _1\) local to each phase of the motion. Approximate the perturbed phase durations by
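The approximation intended here is presumably the first-order expansion of each phase duration, with \(T_1^i\) denoting the linear shift computed next:

```latex
% First-order expansion of the phase durations (assumed form)
T_\epsilon^{i} = T_0^{i} + \epsilon\,T_1^{i} + O(\epsilon^2), \qquad i \in \{\text{ps},\text{re}\}.
```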

Wang et al. (2021) and Yu et al. (2023) developed a formula to calculate the first-order approximation for the duration change in phase \(i\in \{\text {ps},\text {re}\}\), given by
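A form of Eq. (9) that follows from the definition of \(\eta ^i\) as the gradient of the remaining time in phase \(i\) is sketched below. The symbols \(t^\text {in}\), \(t^\text {out}\) (entry and exit times) and \(\textbf{z}_\epsilon ^\text {in}\) (perturbed entry point) are notation assumed here, and the sketch further assumes that the exit boundary itself does not move with \(\epsilon \); the published display may arrange the terms differently.

```latex
% Eq. (9): linear shift in the duration of phase i (reconstructed under stated assumptions)
T_1^{i} = \eta^{i}\big(\textbf{z}_0^{\text{in}}\big)\cdot
          \frac{\partial \textbf{z}_\epsilon^{\text{in}}}{\partial \epsilon}\Big|_{\epsilon=0}
        + \int_{t^{\text{in}}}^{t^{\text{out}}}
          \eta^{i}\big(\gamma_0(t)\big)\cdot
          \frac{\partial \textbf{F}_\kappa}{\partial \kappa}\big(\gamma_0(t)\big)\Big|_{\kappa=\kappa_0}\,dt .
```

The first term accounts for the shift in where the trajectory enters the phase, and the integral accumulates the effect of the perturbed vector field along the unperturbed path.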

Here, \(\textbf{z}_0^\text {in}\) and \(\textbf{z}_0^\text {out}\) denote the unperturbed entry point to, and exit point from, the specific phase, respectively. Vector \(\eta ^i\), defined to be the gradient of the remaining time of the trajectory until exiting phase i, is referred to as the lTRC for phase i. It satisfies the adjoint equation
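Since \(\eta ^i\) is the gradient of a function that decreases at unit rate along trajectories, the usual adjoint equation applies. The display below is the standard form and is assumed to match the one intended here.

```latex
% Adjoint equation for the lTRC along the unperturbed trajectory (standard form)
\frac{d\eta^{i}}{dt} = -\,D\textbf{F}_0\big(\gamma_0(t)\big)^{\intercal}\,\eta^{i}.
```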

with a boundary condition
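The boundary condition can be derived from two facts: the remaining time vanishes on the exit surface, so \(\eta ^i\) is parallel to the normal there, and the remaining time decreases at unit rate along the flow, so \(\textbf{F}_0\cdot \eta ^i=-1\). Combining them gives the sketch below, which holds for either orientation of the normal.

```latex
% Boundary condition for the lTRC at the exit point (derivation sketch)
\eta^{i}\big(\textbf{z}_0^{\text{out}}\big)
  = -\,\frac{n^{\text{out}}}{\,{n^{\text{out}}}^{\intercal}\,\textbf{F}_0\big(\textbf{z}_0^{\text{out}}\big)} .
```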

where \(n^\text {out}\) is a normal vector of the exit boundary surface at \(\textbf{z}_0^\text {out}\). When the vector field \(\textbf{F}\) changes discontinuously across the surface defining the boundary between two regions, the Jacobian \(D\textbf{F}\) should be evaluated as a one-sided limit, taken from the interior of the local region. With a linear time scaling for (6) (and setting \(t=0\) to be the start of the power stroke), i.e.,
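Interpreting "linear time scaling" as linear within each phase (an assumption, chosen so that the phase boundaries of the two trajectories are matched), the timing function would be:

```latex
% Piecewise-linear time rescaling (assumed form)
\tau_\epsilon(t) =
\begin{cases}
\dfrac{T_\epsilon^{\text{ps}}}{T_0^{\text{ps}}}\,t, & t \in [0,\,T_0^{\text{ps}}),\\[2ex]
T_\epsilon^{\text{ps}} + \dfrac{T_\epsilon^{\text{re}}}{T_0^{\text{re}}}\,\big(t - T_0^{\text{ps}}\big), & t \in [T_0^{\text{ps}},\,T_0).
\end{cases}
```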

the local timing sensitivity function in (7) reduces to
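For a time rescaling that is linear within each phase, differentiating \(\partial \tau _\epsilon /\partial t\) with respect to \(\epsilon \) at \(\epsilon =0\) gives a piecewise-constant timing sensitivity. The display below assumes that piecewise-linear choice.

```latex
% Local timing sensitivity under piecewise-linear rescaling (derivation sketch)
\nu_1(t) =
\begin{cases}
T_1^{\text{ps}} / T_0^{\text{ps}}, & t \in [0,\,T_0^{\text{ps}}),\\[1ex]
T_1^{\text{re}} / T_0^{\text{re}}, & t \in [T_0^{\text{ps}},\,T_0).
\end{cases}
```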

which can be obtained by using Eq. (9) for each phase. See Appendix C.1, Wang et al. (2021), and Yu et al. (2023) for more details about the lTRC formulation.

The variational analysis above allows us to analyze the performance sensitivity of powerstroke–recovery systems. In Yu and Thomas (2022) we provided a formula for the sensitivity of any averaged quantity with respect to an arbitrary control parameter, as long as the quantity of interest does not have explicit dependence on the control parameter. We generalize the approach of Yu and Thomas (2022) to allow for dependence of the instantaneous performance \(q_\kappa (\textbf{x})\) on both the state \(\textbf{x}\) and the parameter \(\kappa \), and obtain
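A formula matching the two-term description that follows can be derived by substituting \(u=\tau _\epsilon (t)\), with \(\tau _\epsilon \) linear on the power stroke, into \(Q(\kappa _\epsilon )=\frac{1}{T_\epsilon }\int _0^{T_\epsilon ^\text {ps}}q_\epsilon (\gamma _\epsilon (u))\,du\) and differentiating at \(\epsilon =0\). This is a derivation sketch under those assumptions and is not guaranteed to coincide symbol for symbol with the published Eq. (10); \(\nabla q_0\) denotes the gradient of \(q_{\kappa _0}\) with respect to the state.

```latex
% Eq. (10): performance sensitivity via the iSRC (derivation sketch)
\frac{\partial Q}{\partial \kappa}(\kappa_0)
  = \frac{1}{T_0^{\text{ps}}}\int_0^{T_0^{\text{ps}}}
    \left[
      \beta_0\left(\nabla q_0\big(\gamma_0(t)\big)\cdot\gamma_1(t)
      + \frac{\partial q_\kappa}{\partial \kappa}\big(\gamma_0(t)\big)\Big|_{\kappa=\kappa_0}\right)
      + \beta_1\,q_0\big(\gamma_0(t)\big)
    \right] dt .
```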

The first term in the integral arises from the impact of the perturbation on the shape of the trajectory (\(\gamma _1\)) as well as directly on the quantity of interest (\(\partial q_\kappa /\partial \kappa )\). Here \(\beta _0\) denotes the proportion of the powerstroke duration within the period (\(\beta _0=T_0^\text {ps}/T_0\)). The second term indicates the impact of the perturbation on the timing of the trajectory, in that \(\beta _1\) represents the linear shift in \(\beta _0\) in response to the perturbation, which can be analytically evaluated by
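Since \(\beta =T^\text {ps}/T\), the quotient rule gives the linear shift directly, with \(T_1=T_1^\text {ps}+T_1^\text {re}\). The second equality below is a rearrangement added here for illustration.

```latex
% Linear shift in the powerstroke duty fraction (quotient rule)
\beta_1 = \frac{T_1^{\text{ps}}\,T_0 - T_0^{\text{ps}}\,T_1}{T_0^{2}}
        = \frac{T_1^{\text{ps}}\,T_0^{\text{re}} - T_0^{\text{ps}}\,T_1^{\text{re}}}{T_0^{2}} .
```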

The derivation of formula (10) is given in Appendix C.3.

Given the special structure of powerstroke–recovery systems, we can derive a more succinct expression for \(\partial Q/\partial \kappa \). For any value of \(\kappa ,\) the second definition in (2) gives
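Taking the relevant definition to be \(Q=y_\kappa /T_\kappa \) (an assumption about which of the two forms is meant), the quotient rule yields:

```latex
% Eq. (11): derivative of Q = y / T with respect to the load (quotient rule)
\frac{\partial Q}{\partial \kappa}
  = \frac{1}{T_\kappa}\,\frac{\partial y_\kappa}{\partial \kappa}
  - \frac{y_\kappa}{T_\kappa^{2}}\,\frac{\partial T_\kappa}{\partial \kappa}.
```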

Recall at \(\kappa =\kappa _\epsilon \), we write \(y_\epsilon \) and \(T_\epsilon \) as shorthand for \(y_{\kappa _\epsilon }\) and \(T_{\kappa _\epsilon }\). We can expand the perturbed progress \(y_\epsilon \) and period \(T_\epsilon \) around \(\epsilon =0\) as
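The expansions referred to are presumably the first-order series that define \(y_1\) and \(T_1\):

```latex
% First-order expansions of progress and period
y_\epsilon = y_0 + \epsilon\,y_1 + O(\epsilon^2), \qquad
T_\epsilon = T_0 + \epsilon\,T_1 + O(\epsilon^2).
```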

where \(y_1\) is approximately given by the net change of the mechanical component of the iSRC \(\gamma _1\) (cf. Eqs. (7) and (8)) within the powerstroke phase, and \(T_1\) is the linear shift in the total period, readily given by \(T_1=T_1^\text {ps}+T_1^\text {re}\) (cf. Eq. (9)). Therefore, Eq. (11) at \(\kappa =\kappa _0\) becomes
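Substituting \(\partial y_\kappa /\partial \kappa =y_1\) and \(\partial T_\kappa /\partial \kappa =T_1\) at \(\kappa _0\) into the quotient-rule derivative of \(Q=y/T\) gives the form below. The factored version on the right, with \(Q_0=y_0/T_0\), is a rearrangement added here to make the shape and timing contributions explicit.

```latex
% Eq. (12): sensitivity from first-order shape and timing shifts (reconstructed form)
\frac{\partial Q}{\partial \kappa}(\kappa_0)
  = \frac{y_1}{T_0} - \frac{y_0\,T_1}{T_0^{2}}
  = Q_0\left(\frac{y_1}{y_0} - \frac{T_1}{T_0}\right),
```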

which, like (10), incorporates both the shape and timing effects of the perturbation in two distinct terms. Equation (12) also suggests that the sensitivity can be directly given by the absolute difference between the first-order timing change and shape change induced by the perturbation. When the two effects completely offset each other, the system achieves “perfect” robustness.
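The cancellation between shape and timing effects can be illustrated numerically. The sketch below assumes the quotient-rule reading of the sensitivity, \(S=|y_1/T_0-y_0T_1/T_0^2|\), and checks it against a finite difference on a toy load dependence. The functions `toy_progress` and `toy_period` and all numerical values are hypothetical and are not outputs of either model in this paper.

```python
def sensitivity_from_shifts(y0, y1, t0, t1):
    """First-order sensitivity S = |y1/T0 - y0*T1/T0**2| of Q = y/T."""
    return abs(y1 / t0 - y0 * t1 / t0 ** 2)


# Hypothetical toy dependence of progress and period on the load (not model output).
def toy_progress(kappa):
    return 4.0 - 0.5 * kappa


def toy_period(kappa):
    return 2.0 + 0.25 * kappa


def toy_performance(kappa):
    return toy_progress(kappa) / toy_period(kappa)


kappa0 = 1.0
# For the linear toy functions, y1 and T1 are simply their slopes.
s_formula = sensitivity_from_shifts(
    y0=toy_progress(kappa0), y1=-0.5, t0=toy_period(kappa0), t1=0.25
)

# Central finite difference of Q as an independent check.
eps = 1e-6
s_finite_diff = abs(
    (toy_performance(kappa0 + eps) - toy_performance(kappa0 - eps)) / (2 * eps)
)

# Shape and timing effects cancel when y1/y0 == T1/T0.
s_balanced = sensitivity_from_shifts(y0=2.0, y1=1.0, t0=4.0, t1=2.0)
```

In the balanced case the relative change in progress equals the relative change in period, so the performance is unchanged to first order even though both the trajectory shape and its timing respond to the load.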

The two expressions given by (10) and (12) for calculating the sensitivity of the task performance for powerstroke–recovery systems allow us to compare different sensory feedback mechanisms in pursuit of an efficient and robust motor pattern. In the following sections we develop two illustrative examples: an abstract CPG-motor model introduced in Yu and Thomas (2021), and an unrelated realistic locomotor model studied in Markin et al. (2010) and Spardy et al. (2011). The two examples show a variety of differences in their model construction, but our analytic framework is broad enough to address both and give useful insights. Simulation codes required to produce each figure are available at https://github.com/zhuojunyu-appliedmath/Powerstroke-recovery. Instructions for reproducing each figure in the paper are provided (see the README file at the GitHub site). Figures for this paper were produced using MATLAB release R2023b.

3 Application: HCO model with external load

In Yu and Thomas (2021), we studied a simple closed-loop model combining neural dynamics and biomechanics, as sketched in Fig. 2. The CPG system comprises a half-center oscillator (HCO) with two conductance-based Morris–Lecar neurons (Morris and Lecar 1981; Skinner et al. 1994). Outputs from the HCO drive a simple biomechanical system, which follows a Hill-type kinetic model based on experimental data from the marine mollusk Aplysia californica (Yu et al. 1999). Sensory feedback from the periphery couples the body and brain dynamics, allowing the system to interact with the changing outside world, and to modulate the central neural activities adaptively. However, the previous study of this model did not explore the performance with respect to a physical task; rather, the CPG followed an autonomous clocklike pattern. To perform a more meaningful analysis for understanding principles of closed-loop motor control, here we augment the model from Yu and Thomas (2021) by incorporating a mechanical load exerted in a specific direction with recurrent engagement and disengagement with the system, which enables us to apply our quantitative measures of progress and sensitivity of the system.

Fig. 2 Schematic of components and basic behavior of the HCO model system, adapted from Yu and Thomas (2021). The CPG circuit of the system comprises a half-center oscillator, represented by mutually inhibitory cell 1 and cell 2. Output from each neuron drives its ipsilateral muscle pulling a limb. The muscle stretch and contraction in turn produce reflex commands to a feedback receptor, which sends an inhibitory signal to its contralateral neuron. The limb interacts with an external substrate, which imposes a mechanical load opposing the limb movement. Inhibitory connections end with a round ball, and excitatory connections end with a triangle. In a single movement cycle, the powerstroke phase (panel A to panel B) occurs when the body (red dashed rectangle) moves forward while the foot is fixed, subjected to the load \(F_\ell \) opposite to the movement direction (blue arrow). The recovery phase follows (panel B to panel C), during which the body is fixed and the foot is lifted off the substrate and repositions for the next power stroke (green arrow).

3.1 The equations of the HCO model

The model equations we consider are as follows. For \(i,j=1,2\) and \(j\ne i\),

Variable \(V_i\) denotes the membrane voltage for HCO neuron cell i, and \(N_i\) is the gating variable for the potassium current in cell i. The two neuron cells are coupled by fast inhibitory synapses, and the coupling function is given by

which closely approximates a Heaviside step function with \(E_\text {thresh}\) denoting the synaptic threshold.

In the third equation of (13), \(A_i\ge 0\) represents the activation of the ith muscle. The neural outputs from the HCO drive the associated muscle, modeled as

The biomechanics is represented by the movement of an object (nominally, a pendulum or limb), with each side connected to one of the two muscles. The object position relative to the center of mass of the organism, denoted as x, is controlled by the muscle forces \(F_1\) and \(F_2\) acting on it. An external load \(F_\ell \) is exerted on the object only during the powerstroke phase, as specified by the indicator variable r defined to be
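From the description in the next paragraph (load present while \(V_1\) is above the synaptic threshold, absent otherwise), the indicator would take the following form. Treating it as a sharp 0/1 switch is an assumption; the published definition may use a smooth approximation.

```latex
% Load indicator for the powerstroke phase (assumed sharp-switch form)
r =
\begin{cases}
1, & V_1 > E_{\text{thresh}} \quad \text{(power stroke)},\\
0, & V_1 \le E_{\text{thresh}} \quad \text{(recovery)}.
\end{cases}
```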

We assume that the powerstroke phase is at work when \(V_1\) is in the active state (\(V_1> E_\text {thresh}\)), whereas the load is absent from the system when \(V_1\) is inhibited (\(V_1\le E_\text {thresh}\)). Parameter \(\kappa \) describes the strength of the load, which is considered as the perturbation parameter for this model.

The system completes an intact closed loop through the sensory feedback induced by the biomechanics on the CPG in the form of feedback currents, e.g.,

where \(L_j\) is the length of muscle j, and the function \(S_\infty ^\text {FB}(L_j)\) describes the feedback synaptic activation. We assume that the feedback conductance has fast dynamics, following a sigmoid function. As discussed in Yu and Thomas (2021), the feedback synaptic architecture affords eight variations, depending on whether the feedback is (i) inhibitory or excitatory, (ii) activated by muscle contraction or muscle stretch, and (iii) modulatory on the contralateral or ipsilateral neuron. For example, when the feedback current is inhibitory to its contralateral neuron and activated when the muscle is contracted, then for the \(V_i\)-equation we set \(E_\text {syn}^\text {FB}=-80\) mV and

Fig. 2 illustrates the system controlled by the inhibitory-contralateral-decreasing feedback mechanism, and Fig. 3 shows a typical solution for the system. In contrast, setting \(E_\text {syn}^\text {FB}=80\) mV for the excitatory feedback current, or setting the sigmoid function to be increasing for the muscle-stretch activated case, or changing \(L_j\)-dependence to \(L_i\)-dependence for the ipsilateral mechanism, would specify other possible mechanisms. We compare the performance and sensitivity of all different realizations of each of the eight variations of the feedback control scheme below. The force terms \(F_{1,2}\), as well as additional details about the functions in system (13), parameter values used for simulations, and simulation codes are given in Appendix A.1.
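The eight feedback variations described above can be enumerated with a short sketch. It is illustrative only and rests on assumptions not taken from the paper: the sigmoid is given a tanh form, the current is written in the conductance-based form `g_fb * S * (E_syn - V)`, the conductance `g_fb` is a placeholder value, and the function names are hypothetical. The exact functional forms are those of Appendix A.1.

```python
import math
from itertools import product


def s_inf_fb(length, l0=10.0, l_slope=1.0, increasing=False):
    """Sigmoidal feedback activation in [0, 1].

    ASSUMPTION: a tanh-shaped sigmoid stands in for the paper's S_inf^FB.
    increasing=False -> activated by muscle contraction (short length),
    increasing=True  -> activated by muscle stretch (long length).
    """
    sign = 1.0 if increasing else -1.0
    return 0.5 * (1.0 + sign * math.tanh((length - l0) / l_slope))


def feedback_current(v_i, lengths, i, *, excitatory, increasing, contralateral,
                     g_fb=0.001, l0=10.0, l_slope=1.0):
    """Feedback current into neuron i (0 or 1); positive values depolarize.

    ASSUMPTION: conductance-based form with a hypothetical conductance g_fb.
    """
    e_syn = 80.0 if excitatory else -80.0  # mV, reversal potentials quoted in the text
    j = 1 - i if contralateral else i      # index of the muscle whose length is sensed
    s = s_inf_fb(lengths[j], l0, l_slope, increasing)
    return g_fb * s * (e_syn - v_i)


# The eight architectures: every combination of the three binary choices.
ARCHITECTURES = [
    dict(excitatory=e, increasing=a, contralateral=c)
    for e, a, c in product([False, True], repeat=3)
]
```

For the inhibitory-contralateral-decreasing case, `feedback_current(0.0, (10.5, 9.5), 0, excitatory=False, increasing=False, contralateral=True)` is negative and becomes more negative as muscle 2 shortens. A very large `l_slope` recovers the constant-feedback case with activation 0.5.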

A typical solution for the system (13) in the absence of perturbation (\(\kappa _0=1\)), plotted over two periods with \(T_0=3055\) ms. The system is governed by the inhibitory-contralateral feedback mechanism, with a decreasing sigmoid activation function \(S_\infty ^\text {FB}\) given by (14), and threshold \(L_0=10\) (panel E). Blue trace: cell/muscle 1. Red trace: cell/muscle 2. The gray shaded regions represent the powerstroke phase, defined by the active state of cell 1 (\(V_1>E_\text {thresh}\), panel A), with duration \(T_0^\text {ps}=1544\) ms; the white regions represent the recovery phase with duration \(T_0^\text {re}=1511\) ms. The system makes progress during the powerstroke phase at rate \(q=-dx/dt\), while it maintains its position during the recovery phase (compare panels D and F)

3.2 Analysis of the HCO model

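To establish measures of performance and sensitivity, we assume that the system advances only during the powerstroke phase. That is, the rate at which the system makes progress is given by

\[ q(t) = \begin{cases} -\dfrac{dx}{dt}, & V_1 > E_\text {thresh} \ \text{(powerstroke)},\\ 0, & V_1 \le E_\text {thresh} \ \text{(recovery)}, \end{cases} \]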

as indicated in Fig. 3D, F. Therefore, by (3), the performance (i.e., the average rate of progress) is

When a sustained small perturbation is applied to the load, \(\kappa _0\rightarrow \kappa _0+\epsilon \), the solution trajectory shifts in both its shape and timing, and the performance of the perturbed system is consequently different from the performance of the unperturbed system. As a reference, Fig. 4A, B, E, F compare the trajectories of the unperturbed solution with \(\kappa _0=1\) for the system shown in Fig. 3 and the perturbed solution with \(\kappa _\epsilon =2\), and Fig. 4C, D, G, H illustrate the iSRC \(\gamma _1\) of the unperturbed trajectory, specified by Poincaré section \(\{V_1=0,\,dV_1/dt>0\}\). Note that for visual convenience, the large perturbation (\(\epsilon =1\)) is applied here, but in our actual analysis the perturbation magnitude should be small (\(|\epsilon |\ll 1\)). Our analysis yields several observations about the role of the inhibition-contralateral-decreasing sensory feedback in regulating the system’s response to the perturbation, as discussed in detail below.

The iSRC analysis for system (13). A, B, E, F: Time series of the unperturbed trajectory (solid, same as Fig. 3) and perturbed trajectory with load \(\kappa _\epsilon =2\) (dashed), both of which are initiated at the start of their respective powerstroke phase. The perturbed trajectory is uniformly time-rescaled by \(t_\epsilon =\frac{T_0}{T_\epsilon }t\) to compare with the unperturbed trajectory, where \(T_0=3055\) and \(T_\epsilon =2831\). The shaded regions represent the powerstroke phase for the unperturbed case, whereas the vertical green dotted lines represent the time at which the phase for the perturbed case switches from power stroke to recovery, which is advanced due to the perturbation. C, D, G, H: The components for the iSRC \(\gamma _1(t)\) of the unperturbed trajectory, with the initial condition defined by the Poincaré section \(\{V_1=0,\,dV_1/dt>0\}\). The negative responses in the \(V_1, N_1, A_1\) directions at the end of power stroke are consistent with the earlier transition to the recovery phase shown in panels A, B, C. The accumulating positive response of x results from the direct effect of perturbation, which shows a stronger resistance to the limb movement

With the perturbation (increased load), the transition from the power stroke to recovery is advanced, i.e., the powerstroke phase is shorter. As indicated in Fig. 4F, the immediate effect of the perturbation is the positive displacement and slower change rate in the x-variable, which occurs because the object is being pulled by the stronger load opposite to its movement direction. Correspondingly, muscle 2, whose length is \(L_2=10-x\), is more contracted than it is in the unperturbed case. The sensory feedback current injected to neuron 1, with synaptic activation given by (14), is therefore larger and gives more inhibition to the active neuron 1. As a result, the active \(V_1\) crosses the synaptic threshold and terminates the powerstroke phase at an earlier time, as indicated in Fig. 4A. The iSRC in each direction is consistent with the associated trajectory comparison. The significant negative peak in the \(V_1\)-component at the end of powerstroke phase (panel C) suggests that \(V_1\) of the perturbed solution at the rescaled time already decreases to the synaptic threshold and jumps down to the inhibited state, indicating the transition out of the power stroke is advanced. Since \(\kappa \) directly impacts dx/dt, we see a different effect on x (panel H) than the other variables.

Both efficiency (high performance) and robustness (low sensitivity) are important features of motor control systems interacting with the outside world. The performance of the perturbed system in Fig. 4 is smaller than that of the unperturbed system (\(Q_\epsilon =0.87\times 10^{-3}\), \(Q_0=1.23\times 10^{-3}\)), because the relative decrease in progress per cycle exceeds the relative decrease in period. To measure the ability of the system to maintain its performance, we quantify the sensitivity of the original system in response to an infinitesimal sustained perturbation, following (10), to be

One can also estimate the sensitivity by (12), where \(y_1\) corresponds to the x-component of \(\gamma _1\). That is,

To evaluate the joint goals of high performance and low sensitivity, and to investigate how they are affected by sensory feedback, we will simultaneously study the two measures plotted together, while manipulating the shape of the feedback activation function. Specifically, we will vary the steepness parameter \(L_\text {slope}\) and position/half-threshold parameter \(L_0\) of the sigmoid synaptic feedback activation function \(S_\infty ^\text {FB}\). Figure 5 shows the results for all eight sensory feedback mechanisms.
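The numbers quoted for Fig. 4 can be cross-checked with a few lines of arithmetic. The sketch below assumes only that performance is progress per period, \(Q=y/T\), and uses the quoted values of \(Q_0\), \(Q_\epsilon \), \(T_0\), and \(T_\epsilon \). The variable names are ours, and the finite difference at the end is a crude stand-in for the infinitesimal sensitivity, since \(\epsilon =1\) is not small.

```python
def relative_change(new, old):
    """Fractional change from old to new."""
    return (new - old) / old


# Values quoted in the text and in the Fig. 4 caption.
T0, T_eps = 3055.0, 2831.0      # periods in ms (unperturbed, perturbed)
Q0, Q_eps = 1.23e-3, 0.87e-3    # performance (unperturbed, perturbed)
eps = 1.0                       # load changed from kappa = 1 to kappa = 2

# Progress per cycle implied by Q = y / T.
y0 = Q0 * T0
y_eps = Q_eps * T_eps

dT = relative_change(T_eps, T0)    # relative change in period
dy = relative_change(y_eps, y0)    # relative change in progress
dQ = relative_change(Q_eps, Q0)    # relative change in performance

# Crude finite-difference estimate of dQ/dkappa (NOT the infinitesimal value).
fd_sensitivity = (Q_eps - Q0) / eps

print(f"period: {dT:+.3f}, progress: {dy:+.3f}, performance: {dQ:+.3f}")
```

The progress per cycle drops by roughly a third while the period shortens by less than a tenth, which is why the performance falls under the heavier load.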

Performance–sensitivity patterns of all eight sensory feedback architectures in the HCO model. A Contralateral feedback. B Ipsilateral feedback. Each panel includes four subarchitectures: excitatory feedback (upper ensembles) versus inhibitory feedback (lower ensembles), and inactivating feedback (dots) versus activating feedback (+ signs). Specifically, the dot trace indicates decreasing synaptic feedback activation \(S_\infty ^\text {FB}\) (muscle-contraction activated feedback), while the plus trace indicates increasing activation \(S_\infty ^\text {FB}\) (muscle-stretch activated feedback). The half-threshold position parameter \(L_0\) of \(S_\infty ^\text {FB}\) is varied over \(\{9, 10, 11\}\), colored by blue, black, and red, respectively. A green dot at the center of each ensemble indicates the system with constant feedback (\(S_\infty ^\text {FB}\equiv 0.5\)). Starting from this constant feedback case, the performance–sensitivity curve of each mechanism becomes distinct as the shape of \(S_\infty ^\text {FB}\) becomes steeper (i.e., \(L_\text {slope}\) decreases) until it approaches a Heaviside step function (\(L_\text {slope}\rightarrow 0\)). The contralateral feedback mechanism with increasing (resp. decreasing) sigmoidal activation \(S_\infty ^\text {FB}\) with \(L_0=10+\theta \) is functionally equivalent to the ipsilateral mechanism with decreasing (resp. increasing) sigmoidal activation \(S_\infty ^\text {FB}\) with \(L_0=10-\theta \), reducing the eight feedback architectures to four fundamentally different classes. Blue arrow (panel A top) marks the most advantageous configuration among those tested, and black arrow marks the sub-optimal configuration with high performance and low sensitivity

The eight superficially distinct feedback architectures can be reduced to four fundamentally different mechanisms in terms of their performance and sensitivity. The contralateral mechanism of muscle-stretch activated (increasing) current with threshold \(L_0=10+\theta \) (\(\theta \in \mathbb {R}\)), is equivalent to the ipsilateral mechanism of muscle-contraction activated (decreasing) current with threshold \(L_0=10-\theta \). Specifically, substituting the contralateral-increasing feedback activation with \(L_0=10+\theta \) to the \(V_1\)-equation of system (13) yields

where the last equation is exactly the case for the ipsilateral-decreasing feedback with \(L_0=10-\theta \). Note that for the unloaded model in Yu and Thomas (2021), only the inhibition–excitation property of the feedback makes a fundamental difference regarding the stability and robustness of the system. However, when we incorporate mechanical interactions with an external substrate, the activating property of the feedback must be taken into account. In the following, we will discuss the performance and sensitivity for the four contralateral feedback mechanisms, which we call inhibition-increasing (II), inhibition-decreasing (ID), excitation-increasing (EI), and excitation-decreasing (ED). The other four ipsilateral feedback mechanisms can be applied accordingly.
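The equivalence can be verified in one line under two assumptions that are ours rather than the paper's. First, the muscle lengths are taken to be \(L_1=10+x\) and \(L_2=10-x\) (the second is quoted in the text, the first is assumed by symmetry). Second, the increasing and decreasing activations are taken to be mirror images of a common sigmoid \(\sigma \), i.e., \(S^\text {inc}_\infty (L;L_0)=\sigma ((L-L_0)/L_\text {slope})\) and \(S^\text {dec}_\infty (L;L_0)=\sigma (-(L-L_0)/L_\text {slope})\).

```latex
\begin{aligned}
S^{\text{inc}}_\infty(L_2;\,10+\theta)
  &= \sigma\!\left(\frac{L_2-(10+\theta)}{L_\text{slope}}\right)
   = \sigma\!\left(\frac{-x-\theta}{L_\text{slope}}\right) \\
  &= \sigma\!\left(-\frac{L_1-(10-\theta)}{L_\text{slope}}\right)
   = S^{\text{dec}}_\infty(L_1;\,10-\theta).
\end{aligned}
```

Under these assumptions, the contralateral signal sensed from muscle 2 and the ipsilateral signal sensed from muscle 1 deliver the same activation to neuron 1 at every limb position x.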

3.3 Performance and sensitivity of the HCO model

Our analysis of the HCO model, subject to an applied external load, leads to the following observations from Fig. 5:

1. Excitatory feedback is advantageous over inhibitory feedback in terms of performance.
2. The qualitative patterns of performance are reversed with respect to the steepness of synaptic activation function in the activating and inactivating feedback mechanisms.
3. The qualitative patterns of sensitivity are reversed with respect to the steepness of synaptic activation function in the excitatory and inhibitory feedback mechanisms.
4. When the sigmoid activation function \(S_\infty ^\text {FB}\) is approximately linear over the working range of the limb, the performance–sensitivity changes approximately linearly with the slope of \(S_\infty ^\text {FB}\).
5. As the working range of the limb extends beyond the linear regime of \(S_\infty ^\text {FB}\), the performance–sensitivity curve can become strongly nonlinear and even non-monotonic, leading to well-defined simultaneous optima in both performance and sensitivity.

We discuss each of these points in turn below.

Excitatory sensory feedback outperforms inhibitory feedback. This conclusion is evident from Fig. 5, in the higher location of the performance–sensitivity pattern for each of the excitation mechanisms relative to that for all of the inhibition mechanisms. To understand the advantage of excitatory over inhibitory feedback in this model system, Fig. 6 compares the trajectories of two systems, one with excitatory constant feedback and the other with inhibitory constant feedback. These systems correspond respectively to the two green dots in Fig. 5A. In the excitatory system, the extra excitation to \(V_i\) due to the feedback has two opposing effects. On the one hand, it advances the time at which the active neuron “jumps down” to the inhibited state and thus shortens both the powerstroke phase and the total period T, relative to the system with constant inhibitory feedback. Panel F of Fig. 6 shows the projection of the trajectory on the \((V_1, N_1)\) plane with points plotted at constant time intervals. Note the rapid change in the voltage component relative to the slower gating variable. The significant timing change results from the exponential deceleration of the dynamics of the active neuron when approaching the jump-down point, as shown by the contraction of points before jumping in panel F. Although the \((V_1, N_1)\) projection of the two trajectories during the active state does not differ much spatially, the difference in time needed to cover the small spatial difference is significant. On the other hand, the constant excitatory drive also reduces the net progress y of the system per cycle. In particular, the progress y of the system declines due to the shorter movement time (panel E). The net performance (\(Q=y/T\), Eq. (2)) is determined by both the timing T and shape y of the trajectory. For the excitatory system, the resulting performance is in fact larger than for the inhibitory system, because the relative decrease in period is larger than the relative decrease in progress. Therefore, regardless of the structure of the feedback pathway, any system equipped with excitatory sensory feedback outperforms the corresponding system with inhibitory sensory feedback.
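The comparison of relative changes can be made explicit. As an illustration (the fractions a and b are our notation, not the paper's), suppose the excitatory system has progress \(y_E=(1-a)\,y_I\) and period \(T_E=(1-b)\,T_I\) relative to the inhibitory system, with \(0<a,b<1\). Then

```latex
\frac{Q_E}{Q_I} = \frac{y_E/T_E}{y_I/T_I} = \frac{1-a}{1-b},
\qquad\text{so}\qquad
Q_E > Q_I \iff b > a .
```

That is, the excitatory system performs better exactly when its period shrinks by a larger fraction than its progress per cycle.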

Comparison of trajectories for the constant inhibitory feedback system (solid lines) and constant excitatory feedback system (dashed lines), plotted over one period. The only difference in the setting for the two systems is the synaptic feedback reversal potential, \(E_\text {syn}^\text {FB}=-80\) or \(+80\) mV, respectively. The gray shaded region represents the powerstroke phase of the inhibitory system; the vertical green dotted line denotes the transition time of the excitatory system out of the power stroke, and the vertical green dashed line denotes the end of its recovery phase. Panel F shows the projection of the trajectories to the \((V_1, N_1)\) phase plane, where the successive dots are equally spaced in time. The inhibitory trajectory is in dark blue (generally passing through more negative voltages) and the excitatory trajectory in light blue (generally higher voltages). The excitatory feedback current raises the voltage of the active neuron and greatly shortens both the powerstroke and recovery phases (panels A, F). The limb makes smaller progress due to the shorter powerstroke duration (panels D, E), but the decrease in magnitude is smaller than the decrease in the period. Hence, the performance of the excitatory-feedback case is larger than that of the inhibitory-feedback case

The performance patterns in the decreasing and increasing feedback mechanisms are qualitatively reversed with respect to the steepness of \(S_\infty ^\text {FB}\). For example, as \(S_\infty ^\text {FB}\) changes from constant to approaching a Heaviside step function, the performance of the II mechanism with \(L_0=11\) (red + in Fig. 5A bottom) monotonically increases while the performance of the corresponding ID mechanism (red dots in Fig. 5A bottom) decreases monotonically. Figure 7 illustrates two systems controlled by the ID mechanism with \(L_0=11\) but different \(L_\text {slope}\) values. When \(S_\infty ^\text {FB}\) is shallower (dashed), the inhibitory feedback current to cell 1 is less intense, due to the smaller synaptic activation over the contraction regime of muscle 2 (panel E). This makes neuron 1 (the neuron driving the powerstroke) more active, switching earlier to the inhibited state, and thus giving a shorter duration power stroke (panel A). The progress of the limb over the shorter powerstroke phase is however larger, which is opposite to the excitatory situation in Fig. 6. This outcome occurs because the effect of the shorter duration is outweighed by the effect of the faster velocity around the end of power stroke. The progress velocity over the powerstroke phase is controlled by the force generated by muscle 1, which becomes stronger due to the increased muscle activation \(A_1\) (panel C). Therefore, by decreasing the steepness of \(S_\infty ^\text {FB}\) for the ID mechanism, the muscles act on the limb in a stronger and faster fashion, leading to the enhanced performance. In contrast, by applying a similar analysis, we observe that the corresponding II mechanism, for which the muscle-stretch activated feedback current gives more inhibition to the active neuron 1, has the opposite effect (not shown). 
This example illustrates how two mechanisms with the opposite activating property of the sensory feedback can have qualitatively distinct performance changes when the sensitivity of the feedback pathway to the biomechanics is varied.

Comparison of the dynamics of two systems controlled by the ID mechanism with \(L_0=11\) and \(L_\text {slope}=0.5\) (solid traces) versus \(L_\text {slope}=2\) (dashed traces). The gray shaded region denotes the powerstroke phase of the first (steeper slope) system (\(T_0^\text {ps}=1547\)); the green vertical dotted line represents the end of the powerstroke phase for the second system (\(T_0^\text {ps}=1511\)), and the green vertical dashed line represents the end of its full cycle. During the powerstroke phase, the less steep synaptic activation \(S^\text {FB}_\infty \) (panel E) reduces the feedback inhibition to \(V_1\), leading to greater activation of the neuron driving the powerstroke (“neuron 1”), and earlier transition into the recovery phase (panel A). The activation of muscle 1 becomes stronger due to the larger \(V_1\) (panel C), which results in a stronger force being generated by muscle 1. This enhanced force pulls the object forward more quickly, especially around the end of power stroke (panel D). Due to the faster movement, the object makes greater progress in an even shorter time (panel F), thereby outperforming the system with the more steep \(S_\infty ^\text {FB}\) curve

The sensitivity profiles of the excitatory and inhibitory systems to an applied load are qualitatively reversed with respect to the steepness of the synaptic activation function. Consider the two contralateral-decreasing mechanisms with \(L_0=9\) (see the two blue dot curves in Fig. 5A). As \(L_\text {slope}\) becomes smaller, the sensitivity of the ED mechanism first decreases to almost 0 (perfect robustness) and then increases, whereas the sensitivity of the corresponding ID mechanism first increases to the maximum and then decreases. Recall that following Eq. (12), the sensitivity can be written as

This expression contains two terms—\(y_1/y_0\) accounts for the effect of the perturbation on the system progress (shape), while \(T_1/T_0\) accounts for the effect on the period (timing). To the extent that the two effects are large and of opposite signs, the system becomes more sensitive; in contrast, the cancellation of the two effects leads to a robust system. Figure 8 shows the two effects in the ED mechanism (red) and ID mechanism (blue) with \(L_0=9\) and \(L_\text {slope}\) varied over (0.6, 40). We observe that the stronger mechanical load exerts positive effects on both the timing and shape for the system with the excitatory sensory feedback mechanism, by prolonging the limit cycle period and increasing the progress of the limb (positive red curves), but the shape effect is more profound. As \(L_\text {slope}\) decreases, the improvement in the progress becomes less significant, while the timing changes more dramatically, which leads to a reduction in the sensitivity. The sensitivity minimum is attained when the two effects completely offset each other at \(L_\text {slope}\approx 0.6\), where the system is most robust against the perturbation. In contrast, the perturbation allows the system with the inhibitory feedback to make larger progress in a shorter time (negative blue solid and positive blue dashed); this possibility was mentioned in the discussion of Fig. 7. Although making greater progress in a shorter time in response to perturbation improves performance, it is not beneficial to the robustness of the system in terms of maintaining performance homeostasis. Moreover, as \(L_\text {slope}\) decreases, both effects become stronger, inducing the system to be even more sensitive. Apart from this example, the excitation–inhibition property of the sensory feedback in this model always offers qualitatively reversed sensitivity patterns when we consider the steepness variation of the feedback activation curve. 
The variational analysis serves as a tool to examine the coordinated effects of perturbation on the trajectory geometry and timing, and to identify mechanisms with superior robustness.
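The two-term structure of the sensitivity follows from a first-order expansion of \(Q=y/T\). The derivation below is a sketch under the assumption that the perturbed progress and period admit expansions \(y_\epsilon =y_0+\epsilon y_1+O(\epsilon ^2)\) and \(T_\epsilon =T_0+\epsilon T_1+O(\epsilon ^2)\). Any normalization or absolute value used in Eq. (12) itself is not reproduced here.

```latex
\begin{aligned}
Q_\epsilon = \frac{y_\epsilon}{T_\epsilon}
  &= \frac{y_0 + \epsilon y_1}{T_0 + \epsilon T_1} + O(\epsilon^2)
   = \frac{y_0}{T_0}\left[1 + \epsilon\left(\frac{y_1}{y_0} - \frac{T_1}{T_0}\right)\right] + O(\epsilon^2), \\
\left.\frac{dQ_\epsilon}{d\epsilon}\right|_{\epsilon=0}
  &= Q_0\left(\frac{y_1}{y_0} - \frac{T_1}{T_0}\right).
\end{aligned}
```

The bracketed difference vanishes when the relative change in progress equals the relative change in period, which corresponds to the crossing of the two red curves described for Fig. 8.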

Effects of the increased load on the period (\(T_1/T_0\), solid) and on the progress (\(y_1/y_0\), dashed) of the trajectories for the ED systems (red) and ID systems (blue) with \(L_0\) fixed at \(L_0=9\) and \(L_\text {slope}\) varied over (0.6, 40). (Note this is a subset of the ranges plotted in Fig. 5, limited to the region in which sensitivity varies monotonically.) The absolute difference between the two effects accounts for the sensitivity of the system. In the excitatory system, the positive \(T_1/T_0\) and \(y_1/y_0\) indicate that the perturbed system spends longer time to make larger progress. The decrease of \(L_\text {slope}\) reduces \(y_1/y_0\) while \(T_1/T_0\) is increased, so the sensitivity decreases, until perfect robustness is attained at \(L_\text {slope}\approx 0.6\), i.e., where the two red curves meet each other. In the inhibitory system, \(T_1/T_0\) is negative, indicating that the perturbation shortens the working period. The effects on the progress and period both become stronger with the decrease of \(L_\text {slope}\), so the system becomes more sensitive to the perturbation

When \(S_\infty ^\text {FB}\) is approximately linear over the working range of the muscles and limb, the performance–sensitivity curve changes approximately linearly with \(L_\text {slope}\). As an illustration, Fig. 9 zooms in on the patterns shown in Fig. 5A with \(L_\text {slope}\) varied over \((2,\infty )\), over which \(S_\infty ^\text {FB}\) is approximately linear within the possible range of muscle length. On this scale, a fundamental ambiguity arises on account of the dual goals of performance and robustness. Without specifying a relative weighting between these two quantities, there is no well-justified way to choose which of the three traces in the upper ensemble, all moving up and to the left, is preferred. The red, black, and blue traces all simultaneously increase performance while decreasing sensitivity, relative to the constant feedback case (green dot). Among the lower ensemble, the blue curve can be rejected as its components exhibit a tradeoff: the robustness is enhanced with decreased performance. Moreover, within the linear regime, the performance and sensitivity can both be improved indefinitely by increasing the feedback gain. The possibility of disambiguating the two effects and finding a globally optimal solution arises only when the parameters are varied enough for nonlinear effects to come into play, as shown next.

The nonlinearity of the synaptic feedback activation function allows the existence of optimal combinations of performance and sensitivity. As the linear regime of \(S_\infty ^\text {FB}\) extends beyond the working range of muscle length and the curvature of the sigmoid \(S_\infty ^\text {FB}\) becomes more pronounced, some sharp turning points arise in the performance–sensitivity curve of each feedback mechanism, either in terms of performance or sensitivity or both. In general, along a continuous curve in the (sensitivity S, performance Q) plane, indexed by a parameter \(\mu \) (here, the sigmoid steepness parameter \(L_\text {slope}\)), there will be an optimal region marked at one end by the condition \((\partial S/\partial \mu =0, \partial Q/\partial \mu \not =0)\) and at the other end by \((\partial S/\partial \mu \not =0, \partial Q/\partial \mu =0)\). Depending on the relative weight given to S and Q, the optimal value of \(\mu \) will place the system somewhere within this segment of the \((S(\mu ), Q(\mu ))\) curve. In the case of the HCO system, the optimal curve among those tested (\(L_0=9\), top blue dots in Fig. 5A) makes a hairpin turn in the upper left corner of the plot (blue arrow), leading to relatively unambiguous identification of the optimal value of the slope parameter \(L_\text {slope}\). In such cases, these optimal points, or narrowly identified optimal regions, indicate the possibility of well-defined simultaneous optima in both of the performance and sensitivity patterns. Thus our results suggest the possibility to realize these joint goals through adjusting the structure of the sensory feedback mechanisms more broadly. Note that the nearby black trace with \(L_0=10\) shows a qualitatively different result on the sensitivity, which gives rise to a sub-optimal mechanism (black arrow). 
In Appendix A.2 we show the higher-resolution pattern by considering smaller increments of the position parameter \(L_0\) and slope parameter \(L_\text {slope}\) around the optimal and sub-optimal points.
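The idea of an optimal segment along a parameterized \((S(\mu ), Q(\mu ))\) curve can be illustrated numerically. The sketch below is not the paper's procedure: it uses synthetic quadratic curves for the sensitivity magnitude S and the performance Q, hypothetical function names, and a simple linear weighting \(Q-wS\) to pick a point within the segment.

```python
def optimal_segment(mu, S, Q):
    """Return the parameter interval bounded by argmin S and argmax Q.

    On a sampled curve these stand in for the stationarity conditions
    dS/dmu = 0 and dQ/dmu = 0 that mark the two ends of the segment.
    """
    i_s = min(range(len(mu)), key=lambda i: S[i])
    i_q = max(range(len(mu)), key=lambda i: Q[i])
    return tuple(sorted((mu[i_s], mu[i_q])))


def weighted_optimum(mu, S, Q, weight):
    """Parameter value maximizing Q - weight * S (ASSUMED linear weighting)."""
    i = max(range(len(mu)), key=lambda i: Q[i] - weight * S[i])
    return mu[i]


# Synthetic curves (hypothetical, for illustration only).
mu = [0.01 * k for k in range(301)]           # parameter grid on [0, 3]
S = [(m - 1.0) ** 2 + 0.1 for m in mu]        # sensitivity magnitude, minimal at mu = 1
Q = [1.0 - (m - 2.0) ** 2 for m in mu]        # performance, maximal at mu = 2

segment = optimal_segment(mu, S, Q)
balanced = weighted_optimum(mu, S, Q, weight=1.0)
```

For these synthetic curves, every nonnegative weight places the optimum inside the segment between the sensitivity minimum and the performance maximum. A heavier weight on S moves it toward the sensitivity minimum.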

Detail from Fig. 5A, expanded to show the region of approximate linearity. Colors as in Fig. 5A. The steepness parameter \(L_\text {slope}\) of the synaptic feedback activation function \(S_\infty ^\text {FB}\) is varied over \((2,\infty )\). Correspondingly, the gain of the sigmoid ranges from zero to 0.5. With the small gain, the sigmoid \(S_\infty ^\text {FB}\) is almost linear over the working range of the muscle length, and the performance–sensitivity curve for each case changes approximately linearly with respect to \(L_\text {slope}\)

The preceding observations have provided several insights that could support the design of sensory feedback pathways, such as the selection of excitatory versus inhibitory feedback currents, and activation versus inactivation with muscle contraction, as well as the shape of the feedback activation curve, to promote efficiency and robustness in other more realistic HCO-motor models with analogous configurations.

4 Application: Markin hindlimb model with imposed slope

The second closed-loop powerstroke–recovery example we consider is based on a neuromechanical model proposed by Markin et al. (2010), as sketched in Fig. 10. The model consists of a spinal central pattern generator controlling the movement of a single-joint limb. The CPG sends output via efferent activation of two antagonist (flexor and extensor) muscles to a mechanical limb segment, which in turn generates afferent feedback signals to the CPG. The model system performs rhythmic locomotion, comprising a stance phase, during which the limb is in contact with the ground, and a swing phase, during which the limb moves without ground contact. Thus the system falls within the class of powerstroke–recovery systems.

Schematic of Markin hindlimb model with imposed slope, adapted from Markin et al. (2010). The spinal CPG, consisting of Rhythm Generator (RG) and Pattern Formation (PF) half-center oscillators, as well as Interneurons (In-E/F), receives tonic supra-spinal drive, and generates alternating activation driving corresponding flexor and extensor Motor neurons (Mn). An additional circuit including more interneurons (Int and Inab-E) is incorporated to provide disynaptic excitation to Mn-E. The Mn cells activate antagonistic flexor and extensor muscles, which control the motion of a single-joint limb. Sensory feedback from muscle activation, which comes in three types (Ia, Ib, and II), provides excitation to the ipsilateral neurons of the CPG. Inhibitory connections end with a round ball, and excitatory connections end with a black arrow. The limb stands on the ground with slope specified by \(\kappa \), which we take as our perturbation parameter. During a single locomotion cycle, the ground reaction force is active on the limb in the stance phase where the limb velocity \(\dot{q}\) is positive (red arrow) but not in the swing phase where \(\dot{q}<0\) (blue arrow)

Everyday experience teaches us that normal walking movements are robust against gradual changes in the slope of the terrain, although the detailed shape and timing of the limb trajectory change as one ascends (or descends) a steeper or shallower incline. As a proxy for this form of parametric perturbation of rhythmic walking movements, we extend the model of Markin et al. (2010) to include an incline parameter, simulating the effects of a change in the ground slope. We use this parameter in our analysis of performance and sensitivity, to define the sensitivity of the system’s progress relative to the external substrate (the ground) in response to this environmental change.

4.1 The equations of the Markin model

Following Markin et al. (2010), we model the central pattern generator as a multi-layer circuit, consisting of a half-center rhythm generator (RG) containing flexor neurons (RG-F) and extensor neurons (RG-E). These RG neurons project to pattern formation (PF) neurons (PF-F and PF-E, respectively) and to inhibitory interneurons (In-F and In-E) which mediate reciprocal inhibition between the flexor and extensor half-centers. Given sufficient tonic supra-spinal drive, the CPG generates rhythms of alternating activation of flexor and extensor neurons, and the output of PF neurons induces alternating activity in flexor and extensor motor neurons (Mn-F and Mn-E). An additional circuit of interneurons (Int and Inab-E) provides a disynaptic pathway from the extensor side to Mn-E.

The dynamics of the RG, PF, and Mn neurons are each described by two first-order ordinary differential equations, governing each cell’s membrane potential \(V_i\) and the slow inactivation gate \(h_i\) of a persistent sodium current:

Here, \(I_\text {NaP}\), \(I_\text {K}\), and \(I_\text {Leak}\) refer to the persistent sodium current, potassium current, and leak current, respectively, described by

Excitatory and inhibitory currents to neuron i are respectively represented by \(I_\text {SynE}(V_i)\) and \(I_\text {SynI}(V_i)\), given by

The nonlinear function f describes the output activity of neuron j, defined to be

where \(V_\text {th}\) is the synaptic threshold. Parameter \(a_{ji}\) defines the weight of the excitatory synaptic input from neuron j to neuron i, while \(b_{ji}\) defines the weight of the inhibitory input from j to i; \(c_i\) represents the weight of the excitatory drive d to neuron i; \(w_{ki}\) defines the synaptic weight of afferent feedback \(\text {fb}_k\) to neuron i, with the feedback strength \(s_k\). Details on the feedback terms \(\text {fb}_k\) will be provided at the end of this section.
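The way the weights combine can be sketched in code. The block below is a sketch under stated assumptions, not the model's definition: the output function is assumed to be a logistic curve that is cut to zero below \(V_\text {th}\), all numerical values are placeholders rather than parameters from Appendix B, and the function names are hypothetical. Only the structure of the excitatory sum (synaptic inputs weighted by \(a_{ji}\), drive weighted by \(c_i\), and feedback terms weighted by \(w_{ki}s_k\)) follows the description in the text.

```python
import math


def output_activity(v, v_th=-50.0, v_half=-30.0, k=3.0):
    """Neuron output f(V): zero below threshold, sigmoidal above it.

    ASSUMPTION: thresholded logistic form with placeholder parameters (mV).
    """
    if v < v_th:
        return 0.0
    return 1.0 / (1.0 + math.exp(-(v - v_half) / k))


def excitatory_drive(i, outputs, a, c, d, w, s, fb):
    """Total excitatory input to neuron i.

    outputs[j] = f(V_j); a[j][i] = excitatory weight from neuron j to i;
    c[i] = weight of the supra-spinal drive d;
    w[k][i] = weight of feedback fb[k] onto neuron i, with strength s[k].
    """
    synaptic = sum(a[j][i] * outputs[j] for j in range(len(outputs)))
    afferent = sum(w[k][i] * s[k] * fb[k] for k in range(len(fb)))
    return synaptic + c[i] * d + afferent


# Toy two-neuron example with one feedback channel (hypothetical numbers).
total = excitatory_drive(
    0,
    outputs=[0.5, 1.0],
    a=[[0.0, 0.2], [0.4, 0.0]],
    c=[0.1, 0.3],
    d=2.0,
    w=[[1.0, 0.0]],
    s=[0.5],
    fb=[0.8],
)
```

In the toy example, neuron 0 receives 0.4 from neuron 1, 0.2 from the tonic drive, and 0.4 from the single feedback channel.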

The dynamics of the interneurons, In-F, In-E, Int, and Inab-E, are each described by a single first-order equation:

where the currents are in the same form as above. As shown in Fig. 10, the source of excitatory inputs \(I_\text {SynE}\) to these interneurons comes from RG, supra-spinal drive, and sensory feedback. Note that In-E and In-F in particular do not receive any inhibitory input or excitatory supra-spinal drive, so the right-hand side of their voltage equation has \(I_\text {SynI}=0\) and \(c_i=0\).

The motor neurons, Mn-F and Mn-E, respectively activate two antagonistic muscles, the flexor (F) and extensor (E), controlling a simple single-joint limb. The limb motion is described by a second-order differential equation:

where q represents the angle of the limb with respect to the horizontal. The first term accounts for the moment of the gravitational force; \(M_F\) and \(M_E\) are the moments of the muscle forces; \(M_\text {GR}\) denotes the moment of the ground reaction force which is active only during the stance phase, given by

Note that the stance (powerstroke) phase is defined when the limb angular velocity \(v=\dot{q}\) is nonnegative, while the swing (recovery) phase occurs when the velocity is negative. We expand on the original model from Markin et al. (2010) by introducing parameter \(\kappa \) to describe the slope of the ground whereon the limb stands. Thus \(\kappa \) will play the role of the load parameter subjected to perturbations for this model.
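The phase definition translates directly into a classification of sampled trajectories by the sign of the angular velocity. The sketch below uses synthetic data and hypothetical helper names. It does not reproduce the functional form of \(M_\text {GR}\), only the gating by phase.

```python
import math


def gated_moment(velocity, moment):
    """Return the ground-reaction moment only in stance (velocity >= 0)."""
    return moment if velocity >= 0.0 else 0.0


def phase_durations(times, velocities):
    """Split the sampled time span into (stance, swing) durations.

    Each sample interval is assigned to a phase by the sign of the angular
    velocity at its left endpoint.
    """
    stance = swing = 0.0
    for k in range(len(times) - 1):
        dt = times[k + 1] - times[k]
        if velocities[k] >= 0.0:
            stance += dt
        else:
            swing += dt
    return stance, swing


# Synthetic example: one cycle of a sinusoidal angular velocity (hypothetical data).
n = 1000
times = [k / n for k in range(n + 1)]
velocities = [math.cos(2.0 * math.pi * t) for t in times]
stance, swing = phase_durations(times, velocities)
```

For this symmetric synthetic velocity, stance and swing each occupy about half of the cycle. In the model, the two durations generally differ and shift with \(\kappa \).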

A typical solution for the Markin model, showing A RG output, B PF output, C In output, D Mn output, E Int (blue) and Inab-E (red) output, F limb angle, G Ia-F feedback activity, H Ia-E feedback activity, I II-F feedback activity, and J Ib-E feedback activity. The flexor side is colored blue and the extensor side is colored red. The gray shaded region indicates the stance phase (the powerstroke), during which the limb angular velocity is positive (panel F), and the white region indicates the swing phase. The stance and swing phases are slightly shifted relative to the extensor and flexor active phases in the CPG. Note that for rat hindlimb walking, the extensor muscle dominates the stance/powerstroke phase (Markin et al. 2010)

The feedback signals from the extensor and flexor muscle afferents provide excitatory inputs to the RG, PF, In, and Inab-E neurons. Muscle afferents provide both length-dependent feedback (type Ia from both muscles and type II from the flexor) and force-dependent feedback (type Ib from the extensor). Linear combinations of feedback terms \(\text {fb}_k\in \{\text {Ia-F, II-F, Ia-E, Ib-E}\}\), written as \(\sum _{k}w_{ki}s_k\text {fb}_k\) in (18), are fed into each side of the model—Ia-F and II-F feedback go to the flexor neurons and Ia-E and Ib-E to the extensor neurons. The feedback terms are in the form

For more details about the mathematical formulations, functional forms, and parameter values of the entire model, see Appendix B.
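As a minimal sketch of the weighted feedback input \(\sum _{k}w_{ki}s_k\text {fb}_k\) described above, the following code evaluates the sum for a single target neuron. The weights and feedback values are hypothetical placeholders rather than the parameter values of the model (for those, see Appendix B); only the pathway names and the default strength \(s_k=1\) are taken from the text.

```python
def feedback_input(weights, strengths, fb):
    """Return sum_k w_ki * s_k * fb_k for one target neuron i.

    weights   : dict pathway -> w_ki (pathways absent from the dict do not
                project to this neuron)
    strengths : dict pathway -> s_k
    fb        : dict pathway -> current feedback activity fb_k
    """
    return sum(weights[k] * strengths[k] * fb[k] for k in weights)


# Default feedback strengths (all s_k = 1, as in the default system).
strengths = {"Ia-F": 1.0, "II-F": 1.0, "Ia-E": 1.0, "Ib-E": 1.0}

# Hypothetical weights onto an extensor-side neuron and hypothetical
# feedback activities, for illustration only.
w_extensor = {"Ia-E": 0.5, "Ib-E": 0.25}
fb_now = {"Ia-F": 0.1, "II-F": 0.2, "Ia-E": 0.4, "Ib-E": 0.8}

drive_default = feedback_input(w_extensor, strengths, fb_now)

# Cutting off the Ib-E pathway corresponds to setting s_Ib-E = 0.
strengths_cut = {**strengths, "Ib-E": 0.0}
drive_cut = feedback_input(w_extensor, strengths_cut, fb_now)
```

Keeping the strength \(s_k\) separate from the weight \(w_{ki}\) lets a single parameter scale one pathway across all of its target neurons.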

4.2 Analysis of the Markin model

Figure 11 shows the time courses of the output of neurons \(f(V_i)\), limb angle q, and feedback activity \(\text {fb}_k\) in the default flat-ground system where \(\kappa =0\). Unlike the HCO model, where the active states of neurons overlap with the powerstroke and recovery phases, here the extensor and flexor active phases are slightly shifted relative to the stance and swing phases (Spardy et al. 2011). The excitatory feedback signals of types Ia and II increase during the silent phase of the neuron receiving the signal, reaching a peak just before the target neuron becomes active; these feedback signals then decrease during the active phase of the neuron. In contrast, the Ib-E feedback signal, which depends solely on the extensor muscle force, is active only when the extensor neurons are active. It remains low until the onset of activation in the extensor units, at which point it jumps up to a high level and then recedes. The different types of feedback pathways induce distinct performance–sensitivity patterns, as we will discuss in Sect. 4.3.

For this model, we define the performance to be the average distance the limb moves along the ground during the stance phase, given by

Here \(T_\kappa ^\text {st}\) denotes the stance duration and \(l_s\) is the limb length. Figure 12 compares the time series of the perturbed system subjected to a small change in the ground slope (\(\kappa _\epsilon =\epsilon =0.01\)) with the unperturbed system. As indicated by the green dotted vertical line, the perturbation prolongs the stance phase and delays the transition to the swing phase, because the system decelerates on the steeper ground slope (panel B). Consequently, the progress becomes smaller (panel D), and the performance deteriorates further because the period is longer (\(Q_0=0.1506\), \(Q(\epsilon )=0.1375\)). Note that this model and the HCO model have different conditions for transitioning between the powerstroke and recovery phases. In the HCO model the transition is determined by the neuron activation, while in the biophysically grounded Markin model the transition is determined by the mechanical condition \(\dot{q}=0\). This difference accounts for the opposite responses of the transition timing to analogous environmental challenges: when the difficulty of the task increases, the HCO powerstroke-to-recovery transition moves earlier, while the Markin model’s transition is delayed. Put another way, the powerstroke of the perturbed HCO system contracts relative to the net period, while in the Markin model it expands (cf. Fig. 4).
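To make the two contributions to the performance loss concrete, the following sketch splits the change in performance into a progress ("shape") part and a period ("timing") part. It assumes that the performance is the progress per cycle divided by the period, \(Q=y/T\), and uses the periods \(T_0=1035\) and \(T_\epsilon =1104\) reported for the same pair of trajectories. The finite-difference slope at the end is only a crude illustration and is not the sensitivity measure defined in (10) or (12).

```python
import math

# Values quoted in the text for the unperturbed and perturbed systems.
Q0, Q_eps = 0.1506, 0.1375   # performance
T0, T_eps = 1035.0, 1104.0   # period
eps = 0.01                   # perturbation of the ground slope kappa

# Assumption: Q = y / T, so the progress per cycle is y = Q * T.
y0, y_eps = Q0 * T0, Q_eps * T_eps

# Logarithmic decomposition: log(Q_eps/Q0) = log(y_eps/y0) - log(T_eps/T0).
shape_term = math.log(y_eps / y0)    # change due to progress per cycle
timing_term = math.log(T_eps / T0)   # change due to the period
total = math.log(Q_eps / Q0)

# Crude finite-difference slope of Q with respect to kappa (illustrative).
fd_slope = (Q_eps - Q0) / eps

print(f"shape: {shape_term:.4f}, timing: {timing_term:.4f}, total: {total:.4f}")
print(f"finite-difference slope: {fd_slope:.3f}")
```

Under this assumption both terms lower the performance: the progress per cycle shrinks and the period grows, with the period change accounting for the larger share of the decline.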

Comparison of time series of A output of RG neurons, B limb angle, C output of Mn neurons, and D progress, for the unperturbed trajectory (solid, same as Fig. 11) and perturbed trajectory (dashed) with ground slope \(\kappa _\epsilon =0.01\). Both are initiated at the start of their respective stance phase. The perturbed trajectory is uniformly time-rescaled by \(t_\epsilon =\frac{T_0}{T_\epsilon }t\), where \(T_0=1035\) and \(T_\epsilon =1104\). The shaded regions represent the stance phase for the unperturbed case, whereas the vertical green dotted lines represent the onset of the swing phase for the perturbed case, which is delayed due to the steeper ground. Compare Fig. 4
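The uniform time rescaling \(t_\epsilon =\frac{T_0}{T_\epsilon }t\) used to overlay the perturbed trajectory on the unperturbed one can be sketched as follows. The function name and the sample times are hypothetical; only the two periods are taken from the text.

```python
def rescale_time(t, T0, T_eps):
    """Map perturbed-trajectory times onto the unperturbed period.

    Applies t -> (T0 / T_eps) * t, so that one full perturbed cycle of
    length T_eps is displayed over the interval [0, T0].
    """
    factor = T0 / T_eps
    return [factor * ti for ti in t]


T0, T_eps = 1035.0, 1104.0

# Hypothetical sample times spanning one perturbed cycle.
t_perturbed = [0.0, 276.0, 552.0, 828.0, 1104.0]
t_rescaled = rescale_time(t_perturbed, T0, T_eps)
```

After rescaling, both trajectories share the same period, so differences in the timing of events within the cycle (such as the onset of swing) can be read directly off the common time axis.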

In the following, we study how the performance–sensitivity pattern is affected by the strength of each afferent feedback pathway, represented by \(s_k\) where \(k\in \{\text {Ia-F, II-F, Ia-E, Ib-E}\}\) in (18). Figure 13 shows the patterns as one of the four feedback strengths is varied, with the other three strengths fixed at the normal strength \(s_k=1\). Before we discuss the patterns in Sect. 4.3, note that the possible range of each strength allowing for stable progressive locomotor oscillations is remarkably different:

In particular, the system can maintain stable oscillations upon cutting off the II-F or Ib-E feedback pathway, but cannot sustain oscillations without the Ia-type feedback. We finish this subsection by examining the mechanism underlying the failure of movement when a strength parameter falls outside its allowable range.

Performance–sensitivity patterns of the Markin model for different feedback strengths. The black dot represents the default system with the normal feedback strength (all \(s_k=1\)). In each curve, the strength for only one feedback pathway is varied, with the other three strengths fixed at one. The number at each end indicates the maximal or minimal strength allowing for stable progressive locomotor oscillations. The purple arrow marks the most advantageous configuration among those tested

The Markin model system features fast–slow dynamics (Rubin and Terman 2002): the persistent sodium inactivation time constant \(\tau _h(V_i)\) in (17) is large over the relevant voltage range, so \(h_i\) evolves on a slower timescale than \(V_i\). The activity of neuron i can therefore be determined from the location of the intersection of its nullclines in the \((V_i,h_i)\) phase plane. To illustrate the mechanism underlying the transition between phases, Fig. 14 shows the nullcline configuration and the corresponding positions of RG and In neurons in the default system around the CPG transition. Starting from the extensor-inhibited state (panel A), because the V-nullcline and h-nullcline of RG-E intersect at a silent stable fixed point, RG-E cannot escape from the silent state and trade dominance on its own. However, In-E, due to the increasing excitatory inputs from Ia-E and Ib-E feedback (Fig. 11), is able to cross the synaptic threshold first and begin to inhibit RG-F (panel B). This inhibition raises the V-nullcline of RG-F, which is followed by a decrease of the RG-F voltage, weakening its excitation of the downstream In-F. This reduction of excitation results in a decrease of the In-F voltage. Consequently, RG-E receives less inhibition from In-F, which lowers the V-nullcline of RG-E such that the critical point moves to the middle branch of its V-nullcline; hence, RG-E can reach the left knee and jump to the right branch. This transfer of active and silent states indicates that the In cells and excitatory sensory feedback dominate the transitions in the CPG. Without sufficiently strong feedback inputs to the silent In cell, the ipsilateral RG neuron will become deadlocked in silence, and thus the locomotion will fail.

Escape of In triggers CPG transitions. Top: Nullclines and positions of RG neurons (dots) in the \((V_\text {RG}, h_\text {RG})\) phase plane. Bottom: Voltages of In neurons (diamonds) at corresponding times. Blue indicates flexor V-nullcline, voltage and/or h values. Red indicates extensor nullcline and values. The h-nullcline is shown in green. The vertical magenta line represents the synaptic voltage threshold \(V_\text {th}\). Arrows mark the direction in which the neurons are moving. A When the extensor neurons are in the inhibited state, the h-nullcline intersects the inhibited V-nullcline of RG-E on the left branch. Excitation from feedback allows In-E to reach the threshold first, independently of RG-E. B When In-E jumps above the threshold it begins to inhibit RG-F, which raises the V-nullcline of RG-F. The downstream In-F, receiving less excitation from RG-F, thus reduces its voltage and gives less excitation to RG-E, which lowers the V-nullcline of RG-E. As a result, RG-E lies above the left knee of its V-nullcline, so it jumps across the threshold and becomes active, switching the dominance in the CPG (not shown)

Disentangling the effects of changing the strength of an afferent feedback pathway is nontrivial, because of the multiplicity of pathways impacting the activities of many neurons and the limb within the system. For instance, Fig. 15 shows the feedback and neuron dynamics in the cases \(s_\text {Ia-F}=0.63\) (dashed) and \(s_\text {Ia-F}=0.6\) (solid), the latter of which approaches the minimal allowable strength of Ia-F. Varying the strength of the Ia-F pathway alone leads to a chain of changes in all system components, including the extensor feedback, and significantly impacts the transition timing. Although the magnitude of the feedback signals does not differ much between the two systems, the system with smaller \(s_\text {Ia-F}\) accumulates feedback excitation more slowly. This circumstance is due to the interplay of spatial and timing measures of distance in fast–slow dynamical systems (Terman et al. 1998; Rubin and Terman 2002; Yu et al. 2023), in which a small spatial difference translates into a significant temporal extension. Decreasing the feedback strength \(s_\text {Ia-F}\) further (below 0.59) makes it impossible for the extensor feedback to jump up; consequently the In-E neuron will not be facilitated to escape from the inhibited state (not shown) and the movement will stall. This outcome may seem counterintuitive, since reducing the flexor feedback strength should make it more difficult for the flexor In cell to escape; however, its direct effect on the mechanics in turn influences the extensor feedback to an analogous (Ia-E) or even more significant (Ib-E) extent (see Eq. (23)). We observe similar situations beyond the nonzero extrema of the other feedback strengths, in which either In-F or In-E fails to escape from the silent state and compromises the whole locomotor oscillation.

Effects of reduced sensory feedback gain \(s_\text {Ia-F}\) to In cells near the collapse point. Dashed curves: \(s_\text {Ia-F}=0.63\). Solid curves: \(s_\text {Ia-F}=0.6\). For all plots, red traces indicate extensor and blue traces indicate flexor quantities. The vertical green dotted and dashed lines indicate the end of stance and swing phases for the case \(s_\text {Ia-F}=0.63\). The shaded and white regions represent the stance and swing phases, respectively, for the case \(s_\text {Ia-F}=0.6\), both of which are significantly prolonged. Further reduction of \(s_\text {Ia-F}\) below 0.59 leads to stalling (convergence to a stable fixed point). Panels G and H plot the weighted feedback inputs (\(\sum _{k}w_{ki}s_{k}\text {fb}_k\)) fed into each In cell. Although the feedback magnitude does not differ much between the two cases, the time to reach the peak is significantly different, which affects the extensor-flexor transition and the following stance-swing transition

4.3 Performance and sensitivity of the Markin model

When analyzing the abstract HCO model, we considered both ipsilateral and contralateral feedback, both excitatory and inhibitory. In contrast, each of the three types of sensory feedback pathway in the Markin model (Ia, II, and Ib) is ipsilateral and excitatory. Although the two models are quite different in their level of detail, feedback variations, and the type of perturbation, we compare and contrast the two models as far as we can in the Discussion. In this section, by varying the strength of each feedback pathway, we study the performance–sensitivity patterns of the Markin model as shown in Fig. 13 and make the following observations.

Trade-offs between performance and sensitivity often occur as the afferent feedback strength changes. As the strength parameter is varied, the system shows a performance–sensitivity tradeoff when the curve either moves up and to the right (performance improves while robustness decreases) or moves down and to the left (robustness increases while performance declines). Specifically, the system is not able to generate simultaneously efficient and robust movement with the whole range of flexor-feedback (Ia-F and II-F) variations, as well as with the large Ia-E and small Ib-E variations. For example, Fig. 16 compares the performance of the default system to the system with a stronger Ia-F pathway (\(s_\text {Ia-F}=1.1\)). Consistent with Fig. 15, the increase in the Ia-F strength advances the stance-swing transition, leading to a shortened working period and reduced progress. Since the change in the shape is more significant than the change in the timing, the performance (\(Q_0=y_0/T_0\)) declines. Note that in the HCO systems featuring either excitatory feedback or inhibitory feedback, the dominance between the shape and timing is reversed (cf. Fig. 6). These examples indicate the interplay of temporal and spatial effects in the performance measure. Likewise, the sensitivity measure, given by (10) or (12), also consists of two factors: the timing response to the perturbation and the shape response. Although the system with strengthened Ia-F feedback is inferior to the default system in terms of performance, it benefits from enhanced robustness. In contrast, weakening the Ia-F strength contributes to an improved performance at the cost of responding more sensitively to external perturbations. With such a performance–sensitivity tradeoff, the system cannot simultaneously achieve the dual goals of efficiency and robustness, especially when varying the flexor-feedback pathway(s) alone.
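The notion of a tradeoff used above can be summarized in a small sketch that classifies a step along a performance–sensitivity curve. It assumes that sensitivity is measured by its magnitude, so that a smaller value means a more robust system; the function name and the numerical increments are hypothetical and are not read from Fig. 13.

```python
def classify_step(d_performance, d_sensitivity):
    """Classify a change in (performance, sensitivity magnitude).

    Assumption: lower sensitivity magnitude means higher robustness.
    """
    if d_performance > 0 and d_sensitivity > 0:
        return "tradeoff: performance improves, robustness decreases"
    if d_performance < 0 and d_sensitivity < 0:
        return "tradeoff: robustness increases, performance declines"
    if d_performance > 0 and d_sensitivity < 0:
        return "both improve"
    if d_performance < 0 and d_sensitivity > 0:
        return "both worsen"
    return "no change in at least one measure"


# Hypothetical increments, chosen only to match the qualitative behavior
# described for the Ia-F pathway.
stronger_ia_f = classify_step(-0.01, -0.2)   # performance down, sensitivity down
weaker_ia_f = classify_step(+0.01, +0.2)     # performance up, sensitivity up
```

In this classification, the Ia-F variations described above fall into the two tradeoff cases, whereas a step labeled "both improve" corresponds to a feedback change that benefits efficiency and robustness at once.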