Abstract

A disease is a distinct abnormal state that significantly affects the functioning of all or part of an individual and is not caused by external harm. Diseases are frequently understood as medical conditions associated with distinct signs and symptoms. Under a broad categorization, diseases can be classified as mental disorders, deficiency diseases, genetic diseases, degenerative diseases, self-inflicted diseases, infectious diseases, non-infectious diseases, social diseases, and physical diseases. Disease prevention operates at several levels. Primary prevention seeks to prevent illness or injury before it ever occurs. Secondary prevention tries to lessen the effect of an illness or injury that has already occurred. This is done by diagnosing and treating the illness or injury as early as feasible to stop or delay its course, supporting personal strategies to avoid recurrence or reinjury, and implementing programs that restore individuals to their previous health and function to prevent long-term difficulties. Tertiary prevention tries to lessen the impact of an ongoing illness or injury with lasting consequences. Diagnosing a disease at an early stage is therefore important for its treatment. In this study, transfer learning with deep learning models, namely VGG16, VGG19, ResNet, InceptionV3, and EfficientNetB4, is used to diagnose diseases such as Alzheimer's disease, brain tumors, skin diseases, and lung diseases from chest X-rays, MRI scans, CT scans, and skin lesion images. EfficientNetB4 with learning rate annealing achieved an accuracy of 94.04% on the test dataset. We also observed that each network has distinct strengths on the multi-disease dataset, which includes chest X-rays, MRI scans, and other imaging modalities.


1 Introduction

This study helps diagnose various diseases, including lung, skin, brain, and eye diseases. The eye diseases classified are diabetic retinopathy, cataract, hypertensive retinopathy, glaucoma, and age-related macular degeneration, using retinal fundus images. The brain diseases are Alzheimer's disease and brain tumors, diagnosed from CT and MRI images. The skin diseases include carcinoma, melanoma, and naevus, identified from images of skin lesions. The lung diseases are COVID-19, lung opacity, and pneumonia, classified from chest X-rays. The deep learning algorithms used in this study, including VGG16, EfficientNetB4, and ResNet, were chosen for their demonstrated ability to extract complex features from medical images, which is crucial for the secondary prevention of diseases. By diagnosing conditions such as Alzheimer's disease, brain tumors, and various lung and skin diseases at an early stage, these algorithms facilitate timely intervention and thus play an important role in the multilevel prevention framework. The use of diverse diagnostic images, including chest X-rays, MRI scans, CT scans, and skin lesions, highlights the versatility and robustness of these deep learning models in handling heterogeneous medical data.

1.1 Eye diseases

Figure 1 shows a retinal fundus image depicting the different components of the retina. Because these structures are visible in a single noninvasive photograph, retinal fundus images can be used to predict or detect eye disorders with high accuracy.

1.1.1 Age-related macular degeneration

Age-related macular degeneration (AMD) occurs in two forms: wet and dry. Wet macular degeneration is a chronic, progressive disease that causes blurred vision or a blind spot in the visual field. It occurs when abnormal blood vessels leak fluid or blood into the macula. Around 20 percent of people with age-related macular degeneration have the wet form. With severe loss of vision, people may experience visual hallucinations. Dry macular degeneration is a common eye condition among adults over 50. It causes blurred or reduced central vision due to thinning of the macula, the area of the retina responsible for clear vision directly ahead. Dry macular degeneration may begin in one or both eyes and eventually affect both. Over time, vision may deteriorate and impair activities such as reading, driving, and recognizing faces.

1.1.2 Diabetic retinopathy

Diabetic retinopathy (DR) is an eye disorder caused by diabetes. It results from damage to the blood vessels of the light-sensitive tissue at the back of the eye (the retina). DR may cause no symptoms or only mild vision problems at first, but it can eventually lead to blindness. Mild DR is the initial stage, characterized by small areas of swelling in the retina's blood vessels, known as microaneurysms. Small amounts of fluid can leak into the retina at this stage, causing swelling of the macula, a region near the center of the retina. In moderate DR, increased swelling of the small blood vessels obstructs blood flow to the retina and deprives it of nourishment, and blood and other fluids accumulate in the macula. In severe DR, a larger portion of the retinal blood vessels becomes blocked, significantly reducing blood flow to the retina; in response, the body is signaled to grow new blood vessels. The advanced stage of the disease is proliferative diabetic retinopathy, in which these new blood vessels form in the retina. Because the new vessels are fragile, they are prone to leaking fluid and blood, which causes visual problems such as blurriness, a reduced field of vision, and even blindness.

1.1.3 Cataract

A cataract occurs when the normally clear lens of the eye becomes cloudy. The lens sits behind the iris (the colored part of the eye) and focuses incoming light onto the retina, where it is converted into signals that the brain interprets as an image. To see clearly, light must pass through a clear lens; a cloudy lens scatters the light and blurs vision.

1.1.4 Hypertensive retinopathy

The retina is the layer of tissue lining the back of the eye. It converts light into nerve impulses, which are then sent to the brain for processing. If blood pressure is too high, the walls of the retinal blood vessels may thicken. This narrows the vessels and restricts blood flow to the retina. In rare cases, the retina may become swollen. Over time, high blood pressure can damage the retinal blood vessels, limiting the retina's function and putting pressure on the optic nerve, which causes vision problems. This condition is called hypertensive retinopathy.

1.1.5 Glaucoma

Glaucoma is a group of eye diseases that damage the optic nerve, which is essential for vision. This damage is usually caused by abnormally high pressure inside the eye. Glaucoma is one of the leading causes of blindness in people over 60.

1.2 Brain diseases

Figure 2 exhibits an MRI scan used to predict the presence of brain diseases.

1.2.1 Alzheimer

In Alzheimer's disease, as neurons are damaged and die throughout the brain, connections between neural networks may break down, and many brain regions begin to shrink. This process, known as brain atrophy, is widespread in the final stages of Alzheimer's disease and causes a considerable loss of brain volume. Alzheimer's disease progresses through three stages: mild, moderate, and severe.

1.2.2 Brain tumor

A brain tumor is an abnormal cell growth or lump in the brain. Some brain tumors are benign (noncancerous), whereas others are cancerous (malignant). Brain tumors can develop in the brain (primary brain tumors), or cancer might start elsewhere in the body and spread to the brain (metastatic brain tumors).

1.3 Skin diseases

Images of skin lesions are used to classify skin diseases. Figure 3 shows an image of a lesion.

1.3.1 Basal cell carcinoma

Basal cell carcinoma (BCC) is the most common low-grade skin cancer, accounting for up to 80% of all epidermal carcinomas. Epidemiologically, BCC is more common in Caucasians and can form anywhere on the body surface, particularly on exposed areas of the head and neck, and it has a strong tendency to recur locally.

1.3.2 Malignant melanoma

Melanoma is most commonly diagnosed as a primary tumor of the skin or mucous membranes. Primary melanoma of the lung is highly unusual and poorly described in the literature.

1.3.3 Dermatofibromas

Dermatofibromas are small, usually harmless skin growths. Their color ranges from pink to light brown on fair skin and from dark brown to black on dark skin.

1.3.4 Melanocytic naevus

A melanocytic naevus (American spelling: nevus; commonly called a mole) is a common benign skin lesion caused by a localized proliferation of pigment cells (melanocytes). It is also known as a naevocytic naevus or simply a 'naevus' (note that other types of naevi exist). A pigmented naevus is a brown or black melanocytic naevus containing the pigment melanin.

1.3.5 Vascular lesions

Vascular lesions, which include many birthmarks, are very common abnormalities of the skin and underlying tissues. The three main types are hemangiomas, vascular malformations, and pyogenic granulomas. Although these lesions may look alike, they differ in origin and treatment.

1.4 Lung disease

Chest X-rays are used to diagnose lung diseases such as pneumonia and COVID-19. Figure 4 shows an example of a chest X-ray.

1.4.1 COVID-19

COVID-19 is a serious respiratory disease that triggered a global pandemic. It is caused by the SARS-CoV-2 virus, which was first identified in Wuhan, China, in December 2019. The virus is highly contagious and spread rapidly across the globe.

1.4.2 Lung opacity

Lung opacity refers to an area of the lung that appears hazier or whiter than normal on a chest X-ray and is often a sign of a pneumonia-causing infection. The immune response to such an infection fills the lungs with pus or other fluids, reducing the patient's ability to take in air and causing breathlessness, coughing, and fever, among other symptoms. Opacities may be localized or spread throughout the lungs.

1.4.3 Pneumonia

Pneumonia is a lung infection that can affect people of all ages and cause moderate to severe illness. It occurs when an infection fills the air sacs of the lungs with fluid or pus, making it difficult to get enough oxygen into the bloodstream.

1.5 Problem description

Many diseases have become more widespread in recent years, and early detection is crucial to reducing the risks they pose. An autonomous, dependable system that detects diseases from images of the eyes, lungs, brain, and skin could be a valuable diagnostic tool. Deep learning based on CNNs is one of the most advanced approaches to computer-aided or automated diagnosis and has been used to build segmentation, classification, and detection systems for various disorders. The method proposed in this study automatically crops the region of interest in an image and uses a CNN to distinguish infected from healthy images. Many existing deep learning techniques predict the diseases above individually, for example diagnosing diabetic retinopathy from retinal fundus images. In this study, by contrast, various diseases are classified with a single deep learning technique using an image dataset that includes chest X-rays, skin lesions, MRI scans, and retinal fundus images. Such techniques can help diagnose multiple diseases earlier and faster, allowing doctors to begin treating patients sooner.

2 Literature review

Several deep learning-based algorithms have been used to predict some of the eye disorders described above from retinal fundus images. Researchers have employed deep learning techniques such as VGG16 and ResNet50 to diagnose particular eye diseases from retinal fundus images and to improve on the state of the art. Some authors experimented with several convolutional neural networks (CNNs) by training multiple models to detect diabetic retinopathy. They graded the retinal fundus images from 0 to 4 (0: no DR, 1: mild non-proliferative DR, 2: moderate non-proliferative DR, 3: severe non-proliferative DR, and 4: proliferative DR). The EyePACS, Messidor-1, and Messidor-2 datasets were used for training and testing. The developed model achieved an AUC of 0.92 on the benchmark Messidor-2 test set, with a specificity of 81.02 percent and a sensitivity of 86.09 percent. On Messidor-1, the AUC, sensitivity, and specificity were 0.958, 88.84 percent, and 89.92 percent, respectively. According to this research, InceptionResNetV2 outperformed all prior CNN versions for identifying diabetic retinopathy.
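Sensitivity and specificity, as reported above, follow standard definitions: sensitivity is the fraction of diseased cases the model flags, and specificity is the fraction of healthy cases it correctly clears. The sketch below computes both for binary labels where 1 means disease present. Collapsing the 0 to 4 grade into "referable DR" (grade 2 or higher) and the example labels are illustrative assumptions, not data from the cited study.

```python
from typing import Sequence, Tuple


def sensitivity_specificity(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[float, float]:
    """Return (sensitivity, specificity) for binary labels where 1 = disease present."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    sensitivity = tp / (tp + fn)  # true positive rate: diseased eyes correctly flagged
    specificity = tn / (tn + fp)  # true negative rate: healthy eyes correctly cleared
    return sensitivity, specificity


# Hypothetical grades on the 0-4 scale, binarized as "referable DR" (grade >= 2).
grades_true = [0, 1, 2, 3, 4, 0, 2, 1]
grades_pred = [0, 2, 2, 1, 4, 0, 3, 1]
y_true = [int(g >= 2) for g in grades_true]
y_pred = [int(g >= 2) for g in grades_pred]
print(sensitivity_specificity(y_true, y_pred))  # (0.75, 0.75)
```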

Prabhjot Kaur et al. [1] used a modified InceptionResNet-V2 (MIR-V2) with transfer learning to predict diseases in tomato leaves. Sachin Jain et al. [2] proposed an ensemble deep learning classifier for brain tumor classification (EDL-BTC) that achieves high accuracy. Y. Pathak et al. [3] adopted a deep transfer learning-based COVID-19 classification model that provides efficient results. Other researchers employed a modified CNN based on the deep residual learning concept to identify DR: for every severity level, the model returns 0 if there is no DR and 1 if there is DR, and it achieved a precision of 0.94. The authors of [4, 5] noted that brain tumors are diagnosed via MRI. However, the vast quantity of data produced by an MRI scan makes manual classification of tumor versus non-tumor images in a reasonable time infeasible for humans, and automatic brain tumor classification is difficult because of the considerable spatial and structural variability of the tissue surrounding the tumor. That research proposed CNN-based classification for automated brain tumor detection, using small kernels to build a deeper architecture and setting the neuron weights to small values. Experimental results showed that the CNN achieved 97.5 percent accuracy with low complexity, outperforming other state-of-the-art approaches [6, 7]. The authors of [8, 9] proposed identifying and classifying pneumonia cases from images with a CNN-based deep learning method. They reported classification results and accuracies for three models and achieved an average accuracy of 68 percent and a specificity of 69 percent, compared with the then state-of-the-art accuracy of 51 percent obtained with VGG16 [10,11,12].
They suggest that incorporating more diverse lung segmentation approaches, limiting overfitting, and adding more learning layers could allow the model to predict more accurately than human specialists and help reduce the cost of diagnosis worldwide.

The authors of [13,14,15] created a deep learning system that extracts features from chest X-ray images to detect COVID-19. Three powerful networks, ResNet50, InceptionV3, and VGG16, were fine-tuned on an enlarged dataset created by merging COVID-19 and normal chest X-ray images from multiple open-source datasets, and data augmentation was used to generate additional chest X-ray images artificially. In their experiments, the models classified chest X-ray images as normal or COVID-19 with accuracies of 97.20 percent for ResNet50, 98.10 percent for InceptionV3, and 98.30 percent for VGG16 [16, 17]. These results indicate that transfer learning is effective and yields simple, high-performing COVID-19 detection methods. The authors of [18,19,20] used ResNet to detect glaucoma on the REFUGE and DRISHTI datasets, reporting an accuracy of 98.9 percent and an F1 score of 98.8 percent. The authors of [21, 22] detected and graded cataracts using a deep convolutional neural network (DCNN), classifying them as non-cataractous, mild, moderate, or severe. As these papers show, deep learning architectures are increasingly applied to identifying retinal diseases from retinal fundus images. However, several gaps remain in the use of deep learning systems. Transfer learning (TL) can help address them by reusing the knowledge gained while training on one task to improve performance on a related task: a pre-trained model serves as the starting point for a model on the new task [23, 24].

In the realm of medical imaging and disease diagnosis, the application of deep learning techniques has become increasingly prevalent [25,26,27]. However, the novelty of our approach lies not just in the employment of these algorithms but in the synergistic integration of advanced preprocessing techniques, data augmentation strategies, and the incorporation of a channel attention mechanism within the deep learning architecture [28,29,30]. This innovative amalgamation is designed to address specific challenges encountered in medical imaging, such as variability in image quality and the subtlety of pathological features. Our methodology extends beyond conventional practices by optimizing the neural network architectures to enhance their efficiency and accuracy in disease detection [31,32,33]. The channel attention mechanism, in particular, represents a significant methodological advancement, allowing our models to focus on the most informative features of an image, thereby improving diagnostic precision [34,35,36]. Such innovations are pivotal in advancing the field, offering a nuanced approach that increases the robustness and interpretability of deep learning models in medical applications.

3 Materials and methods

3.1 Dataset collection

Data collection plays an important part in this study. All datasets were collected from the open-source platform Kaggle: retinal fundus photographs, chest X-rays, MRI scans, skin lesion images, and CT scans of different diseases. The retinal fundus photographs were separated into training, validation, and test images. The datasets used in this study are the ocular disease dataset, the diabetic retinopathy dataset, the cataract dataset, the Alzheimer dataset, the skin disease dataset, and the pneumonia, COVID-19, lung opacity, and brain tumor datasets. The final dataset contains the following categories: AMD, moderate DR, severe DR, proliferative DR, cataract, HR, glaucoma, Alzheimer's disease, glioma brain tumor, meningioma brain tumor, pituitary brain tumor, basal cell carcinoma, malignant melanoma, dermatofibroma, melanocytic naevus, vascular lesions, COVID-19, lung opacity, pneumonia, and normal [37, 38].
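Where a dataset is not already divided into training, validation, and test sets, a stratified split keeps each disease category represented in the same proportions in every subset. The sketch below is a hypothetical helper (`stratified_split`) using only the Python standard library; the 10 percent validation and test fractions and the seed are illustrative assumptions, not the study's settings.

```python
import random
from collections import defaultdict


def stratified_split(labels, val_frac=0.1, test_frac=0.1, seed=42):
    """Split sample indices into (train, val, test) lists, preserving class proportions."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    train, val, test = [], [], []
    for idxs in by_class.values():
        rng.shuffle(idxs)
        n_test = round(len(idxs) * test_frac)
        n_val = round(len(idxs) * val_frac)
        test.extend(idxs[:n_test])
        val.extend(idxs[n_test:n_test + n_val])
        train.extend(idxs[n_test + n_val:])
    return train, val, test
```

Stratifying matters here because the categories are imbalanced; a purely random split could leave a rare class nearly absent from the test set.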

3.1.1 Data preprocessing

The quality of input images is paramount in medical imaging because it determines the accuracy achievable in disease diagnosis and subsequent treatment planning. High-quality images are characterized by several key attributes, including high resolution, optimal contrast, a low noise level, and the absence of artifacts. High resolution ensures that the finest details are visible, which can be crucial for identifying subtle pathological features indicative of early-stage disease [39, 40]. Optimal contrast is essential for distinguishing between different tissues and lesions, and a low noise level prevents critical details from being obscured. Furthermore, the absence of artifacts, which can arise from sources such as patient motion, imaging device malfunction, or transmission errors, is vital for preserving the diagnostic information in the image. In this work, we applied a strict preprocessing protocol to ensure that only images meeting these quality criteria were included in our dataset. The protocol involved a sequence of validation checks evaluating the resolution, contrast, noise level, and presence of artifacts in each image; images that failed these checks were either further preprocessed to enhance their quality or excluded from the dataset to preserve the credibility of the model's predictions. The chest X-rays, skin lesions, and MRI scans were of good quality, with clearly visible details. However, the blood vessels in the retinal fundus images were not clearly visible, which could lead to incorrect disease predictions. Image blending, a preprocessing technique, was therefore applied to each retinal fundus image.
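The resolution and contrast checks in this protocol can be expressed as simple threshold tests. Below is a minimal NumPy sketch, assuming images with 8-bit intensities. The function name `passes_quality_checks`, the 224-pixel minimum side, and the 0.05 RMS-contrast threshold are hypothetical placeholders; noise and artifact checks are omitted because the text does not specify how they were measured.

```python
import numpy as np


def passes_quality_checks(image, min_side=224, min_rms_contrast=0.05):
    """Crude resolution and contrast gate for an image with intensities in [0, 255].

    Thresholds are illustrative placeholders, not values from the study.
    """
    h, w = image.shape[:2]
    if min(h, w) < min_side:
        return False  # too small to preserve fine pathological detail
    normalized = image.astype(np.float64) / 255.0
    rms_contrast = normalized.std()  # RMS contrast: std of normalized intensities
    return bool(rms_contrast >= min_rms_contrast)
```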

3.1.2 Influence of poor-quality images on model predictions

The presence of poor-quality images in the training dataset can seriously impede the identification of discriminative features essential for accurate disease diagnosis. In our approach, we address this challenge with a range of strategies that make our model robust and adaptable to images of varying quality. These include robust data augmentation, such as random rotations, scaling, and brightness adjustments, which introduces variability into the training process that reflects the range of image qualities the model is likely to encounter in real-world settings. In addition, our pipeline includes custom preprocessing steps, such as noise reduction and contrast enhancement, designed to make lower-quality images more usable. These steps improve the visibility of critical diagnostic features so that such images can contribute positively to training.
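Contrast enhancement is often done with histogram equalization, which spreads the occupied intensity levels across the full 0 to 255 range. The sketch below is a plain NumPy version of global equalization for 8-bit grayscale images. The text does not say which enhancement method was used, so this is an illustrative choice; OpenCV offers `cv2.equalizeHist` and CLAHE (`cv2.createCLAHE`) for the same purpose.

```python
import numpy as np


def equalize_histogram(image):
    """Global histogram equalization of an 8-bit (uint8) grayscale image."""
    hist = np.bincount(image.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]  # cumulative count at the darkest intensity present
    total = image.size
    if total == cdf_min:
        return image.copy()  # single-intensity image: nothing to stretch
    # Map each intensity to its rescaled cumulative frequency.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[image]
```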

Furthermore, the architecture of our deep learning model is designed to be robust across a wide range of image qualities. Through a sequence of convolutional layers, the model learns hierarchical features from the input images and can focus on the features most relevant to disease diagnosis, limiting the influence of quality variations. This capability is strengthened by a channel attention mechanism, which lets the model dynamically emphasize the most informative channels of its feature maps and thus better identify subtle pathological signs, even in less-than-ideal images. By integrating these strategies, our approach compensates for quality variability within the training dataset and encourages the learned discriminative features to be robust to a wide range of image quality variations, making it a reliable diagnostic tool across diverse clinical settings.
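The paper does not specify the exact form of its channel attention mechanism. One common design is the squeeze-and-excitation (SE) block, sketched below in NumPy as an assumption: each channel is summarized by global average pooling, passed through a small bottleneck of two dense layers, and the resulting sigmoid weights rescale the channels. The weight shapes and reduction ratio are illustrative.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(features, w1, b1, w2, b2):
    """Squeeze-and-excitation style channel attention.

    features: array of shape (H, W, C)
    w1: (C, C // r), b1: (C // r,)   bottleneck ("squeeze") layer
    w2: (C // r, C), b2: (C,)        expansion ("excitation") layer
    """
    squeezed = features.mean(axis=(0, 1))          # global average pool -> (C,)
    hidden = np.maximum(0.0, squeezed @ w1 + b1)   # ReLU bottleneck
    weights = sigmoid(hidden @ w2 + b2)            # per-channel weights in (0, 1)
    return features * weights                      # broadcast over H and W
```

Because the sigmoid outputs lie between 0 and 1, uninformative channels are attenuated while informative ones stay close to full strength; in a trained network the dense weights are learned jointly with the convolutional layers.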

3.1.2.1 Gaussian blur

Gaussian blur is the result of convolving an image with a Gaussian function; it is also called Gaussian smoothing. It is often used to reduce image noise and detail. Gaussian blur attenuates sharp variations between neighboring pixel values, suppressing noise and fine detail. Figure 5 shows a retinal fundus image after Gaussian blurring.
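A Gaussian blur convolves the image with a kernel whose weights follow a 2-D Gaussian and sum to one. The NumPy sketch below builds such a kernel and applies it to a grayscale image; the kernel size, sigma, and edge-replication border handling are illustrative assumptions. In OpenCV the comparable call is `cv2.GaussianBlur(image, (5, 5), 1.0)`, which uses a different default border mode, so results can differ near the image edges.

```python
import numpy as np


def gaussian_kernel(size=5, sigma=1.0):
    """Normalized 2-D Gaussian kernel (size should be odd)."""
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    kernel = np.outer(g, g)
    return kernel / kernel.sum()  # weights sum to 1, so overall brightness is preserved


def gaussian_blur(image, size=5, sigma=1.0):
    """Blur a 2-D grayscale image; borders are handled by edge replication."""
    k = gaussian_kernel(size, sigma)
    pad = size // 2
    padded = np.pad(image.astype(np.float64), pad, mode="edge")
    h, w = image.shape
    out = np.zeros((h, w), dtype=np.float64)
    # Accumulate each kernel tap times the correspondingly shifted image.
    for dy in range(size):
        for dx in range(size):
            out += k[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out
```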

3.1.2.2 Image blending

Image blending combines two images of the same size and type into a new target image. Adding or blending two images in this way can aid data preparation. In Python, OpenCV provides this operation as cv2.addWeighted(). Figure 6 shows the result of blending a retinal fundus image with its Gaussian-blurred version.
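`cv2.addWeighted(src1, alpha, src2, beta, gamma)` computes `src1 * alpha + src2 * beta + gamma` per pixel and saturates the result to the output type's range. The NumPy sketch below reproduces that arithmetic for 8-bit images so the operation is explicit. The weights in the example (4, -4, 128) follow a recipe widely used for retinal fundus images since the Kaggle diabetic retinopathy competition, in which an image is blended with its own Gaussian-blurred copy; they are an assumption, as the text does not report the weights used.

```python
import numpy as np


def add_weighted(src1, alpha, src2, beta, gamma):
    """Per-pixel src1 * alpha + src2 * beta + gamma, rounded and saturated to uint8."""
    if src1.shape != src2.shape:
        raise ValueError("images must have the same shape")
    blended = src1.astype(np.float64) * alpha + src2.astype(np.float64) * beta + gamma
    return np.clip(np.rint(blended), 0, 255).astype(np.uint8)


# Hypothetical 1x3 "image" and its blurred copy.
image = np.array([[100, 200, 50]], dtype=np.uint8)
blurred = np.array([[90, 100, 50]], dtype=np.uint8)
enhanced = add_weighted(image, 4, blurred, -4, 128)  # [[168, 255, 128]]
```

Subtracting the blurred copy removes slowly varying illumination, and the offset of 128 re-centers the result in mid-gray, so local detail such as thin vessels stands out.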

3.1.3 Data augmentation

To enhance model robustness, especially for low-quality images, our study employs comprehensive data augmentation. Augmentation artificially enriches the dataset, compensating for quality variability in the training data and making the model more resilient to variations in image quality; for lower-quality images in particular, it improves diagnostic accuracy by exposing the model to a broader spectrum of image conditions. Data augmentation is widely used to improve the performance of deep learning systems, here the model that classifies retinal fundus images. It is applied to the training data, in this case images, to increase their size and diversity, since larger amounts of training data typically improve the performance of deep neural networks. Data augmentation generates new training data from existing training data by applying transformations that reflect variations the model is likely to encounter. We employed traditional augmentation methods such as rotation, scaling, translation, and horizontal/vertical flipping to create additional training samples, and further diversified the dataset with random rotation, zooming, and brightness adjustments. Data augmentation was chosen deliberately for its proven effectiveness in improving a model's ability to generalize and learn robust features: exposure to a broader spectrum of image variations makes the model more adaptable to diverse data and improves its overall diagnostic performance. Together with preprocessing, augmentation was used to obtain the highest-performing model, and it also helps reduce overfitting.
Figures 7 and 8 depict the number of photographs in each illness class following the augmentation procedure.
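The traditional augmentations listed above can be sketched with NumPy alone. The function below is a hypothetical example, not the study's pipeline: it applies random horizontal and vertical flips, a rotation by a random multiple of 90 degrees, and a brightness scaling whose 0.8 to 1.2 range is an illustrative assumption. Arbitrary-angle rotation, zoom, and translation need interpolation and are usually delegated to a library such as Keras or OpenCV.

```python
import numpy as np


def augment(image, rng):
    """Randomly flip, rotate by a multiple of 90 degrees, and rescale brightness.

    image: uint8 array of shape (H, W) or (H, W, C); square images keep their shape.
    rng: a numpy.random.Generator, e.g. np.random.default_rng(0).
    """
    out = image
    if rng.random() < 0.5:
        out = np.fliplr(out)                          # horizontal flip
    if rng.random() < 0.5:
        out = np.flipud(out)                          # vertical flip
    out = np.rot90(out, k=int(rng.integers(0, 4)))    # rotate by 0, 90, 180 or 270 degrees
    factor = rng.uniform(0.8, 1.2)                    # brightness scale (illustrative range)
    out = np.clip(out.astype(np.float64) * factor, 0, 255)
    return out.astype(np.uint8)
```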

3.1.4 Convolutional neural network

CNNs are the most widely used and well-established deep learning models. They have been applied to many image classification tasks and are gaining popularity in fields such as healthcare and music. A CNN comprises several types of layers, including convolutional, pooling, and fully connected (dense) layers, and learns hierarchical representations of the data automatically through backpropagation.

3.1.4.1 Convolutional layer

The convolutional layer is the first and core layer of a CNN. It applies a convolution to its input and passes the result to the next layer. Convolution slides a small filter over the input and, at each position, combines all values within the filter's window into a single output value; without padding, the output is therefore smaller than the input.

Figure 9 shows the convolution of an image which detects the edges of the animal present in the image.
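The edge-detecting convolution in Fig. 9 can be reproduced on a toy example. The NumPy sketch below computes a "valid" convolution (stride 1, no padding), which, as in CNN libraries, is implemented as cross-correlation; the Sobel kernel and the 6 × 6 test image are illustrative choices. The 6 × 6 input shrinks to a 4 × 4 output, and the response is nonzero only where the window straddles the dark-to-bright edge.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """'Valid' 2-D cross-correlation as used in CNN layers (stride 1, no padding)."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            # Combine every value in the window into a single output value.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


# A 6x6 image with a vertical edge: dark left half, bright right half.
image = np.zeros((6, 6))
image[:, 3:] = 1.0
sobel_x = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
response = conv2d_valid(image, sobel_x)  # every row is [0, 4, 4, 0]
```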

3.1.4.2 Pooling layer

Pooling layers reduce the spatial dimensions of the feature maps produced by the convolutional layers. This decreases the amount of computation required in the network and the number of parameters that subsequent layers must learn. Common types of pooling include average, max, and global pooling. This paper uses max pooling. Figure 10 shows how the max pooling layer reduces the dimensions of the image.
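Max pooling keeps only the largest value in each window. Below is a minimal NumPy sketch of 2 × 2 max pooling with stride 2, the configuration used in VGG-style networks; the 4 × 4 input is an illustrative example. Each output value summarizes one non-overlapping 2 × 2 block, so height and width are halved.

```python
import numpy as np


def max_pool2d(feature_map, size=2, stride=2):
    """Max pooling over a 2-D feature map (no padding)."""
    h, w = feature_map.shape
    oh, ow = (h - size) // stride + 1, (w - size) // stride + 1
    out = np.empty((oh, ow), dtype=feature_map.dtype)
    for i in range(oh):
        for j in range(ow):
            window = feature_map[i * stride:i * stride + size, j * stride:j * stride + size]
            out[i, j] = window.max()  # keep the strongest activation in the window
    return out


x = np.array([[1, 3, 2, 4],
              [5, 6, 1, 2],
              [7, 2, 9, 1],
              [3, 4, 0, 8]])
pooled = max_pool2d(x)  # [[6, 4], [7, 9]]
```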

3.1.4.3 Activation function

An activation function determines the output of a neuron from its weighted input, for example a yes/no decision or a value between 0 and 1. Activation functions are either linear or nonlinear. This study uses the rectified linear unit (ReLU), which passes positive values unchanged and sets negative values to zero, and SoftMax, which converts the final layer's outputs into class probabilities.
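Both activation functions are short to write down. The NumPy sketch below is illustrative; the SoftMax version subtracts the maximum logit before exponentiating, a standard trick that leaves the result unchanged but prevents overflow for large inputs.

```python
import numpy as np


def relu(x):
    """Rectified linear unit: negative inputs become 0, positive inputs pass through."""
    return np.maximum(0, x)


def softmax(logits):
    """Convert a 1-D array of logits into probabilities that sum to 1."""
    shifted = logits - np.max(logits)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()
```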

3.1.4.4 Learning rate annealing

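The learning rate for gradient descent is an important hyperparameter to specify when training a neural network, because it sets the size of the weight updates made to minimize the network's loss function. Learning rate annealing gradually reduces the learning rate during training, so that early epochs make large updates and later epochs fine-tune the weights.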

ReduceLROnPlateau:

- When a metric stops improving, reduce the learning rate by a factor of 2–10.
- This callback monitors a quantity and reduces the learning rate if no improvement is seen for a 'patience' number of epochs.
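The callback's behavior can be illustrated with a simplified scheduler. The class below is a hypothetical, framework-free sketch of the logic behind Keras' `ReduceLROnPlateau` for a metric to be minimized, such as validation loss; it omits the callback's `min_delta` and `cooldown` options. In Keras itself the callback would be created with, for example, `tf.keras.callbacks.ReduceLROnPlateau(monitor="val_loss", factor=0.5, patience=3, min_lr=1e-6)`; these particular values are assumptions, not the study's settings.

```python
class PlateauScheduler:
    """Simplified reduce-on-plateau logic for a metric that should decrease."""

    def __init__(self, lr, factor=0.5, patience=3, min_lr=1e-6):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.min_lr = min_lr
        self.best = float("inf")
        self.wait = 0  # epochs since the last improvement

    def step(self, val_loss):
        """Call once per epoch with the monitored metric; returns the learning rate to use next."""
        if val_loss < self.best:
            self.best = val_loss
            self.wait = 0
        else:
            self.wait += 1
            if self.wait >= self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.wait = 0
        return self.lr


scheduler = PlateauScheduler(lr=0.01, factor=0.5, patience=3)
val_losses = [0.90, 0.70, 0.71, 0.72, 0.73, 0.60]
lrs = [scheduler.step(loss) for loss in val_losses]
# lrs -> [0.01, 0.01, 0.01, 0.01, 0.005, 0.005]
```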

3.2 Proposed work

Retinal fundus images are used as input to the proposed system. As part of data preprocessing, chest X-ray images are equalized, enhanced, and augmented before features are extracted and weighted. This approach supports the detection of various eye diseases from retinal fundus images. The innovations considered here are efficient deep learning models combined with advanced preprocessing and augmentation techniques, which together offer potential for clinical decision support. One of the main innovations is the integration of a channel attention mechanism into the architecture. Figure 11 depicts the benefit of learning rate annealing over small and large constant learning rates.

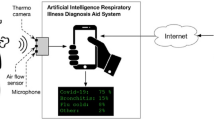

This paper analyzes CNN architectures such as VGG16, VGG19, MobileNetV2, and others. Figure 12 depicts the workflow of the eye disease detection system.

3.2.1 VGG16

VGG16 is a CNN architecture that placed first in localization and second in classification at the 2014 ImageNet Large Scale Visual Recognition Challenge (ILSVRC). It remains one of the most widely used vision architectures. Rather than relying on many hyperparameters, VGG16 uses only 3 × 3 convolution filters with stride 1 and same padding, and 2 × 2 max pooling with stride 2. This pattern of convolution and max pooling layers is repeated consistently throughout the architecture. It ends with three fully connected layers (two with 4096 units and a 1000-unit output layer) followed by a SoftMax. The 16 in VGG16 refers to its 16 weight layers (13 convolutional and 3 fully connected). The structure of the VGG16 model is shown in Fig. 13. With approximately 138 million parameters, this network is rather large.
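The 138 million figure can be checked by counting weights and biases layer by layer. The sketch below assumes the standard ImageNet configuration (224 × 224 × 3 input, 1000 output classes): each 3 × 3 convolution contributes 3·3·c_in·c_out weights plus c_out biases, and each fully connected layer contributes n_in·n_out weights plus n_out biases.

```python
# (in_channels, out_channels) for the 13 convolutional layers of VGG16 (3x3 kernels).
VGG16_CONVS = [(3, 64), (64, 64),
               (64, 128), (128, 128),
               (128, 256), (256, 256), (256, 256),
               (256, 512), (512, 512), (512, 512),
               (512, 512), (512, 512), (512, 512)]
# (in_features, out_features) for the 3 fully connected layers; 7 * 7 * 512 = 25088 inputs.
VGG16_FCS = [(7 * 7 * 512, 4096), (4096, 4096), (4096, 1000)]


def count_params():
    """Return (conv params, fully connected params, total), weights plus biases."""
    conv = sum(3 * 3 * cin * cout + cout for cin, cout in VGG16_CONVS)
    fc = sum(fin * fout + fout for fin, fout in VGG16_FCS)
    return conv, fc, conv + fc


conv, fc, total = count_params()
print(f"conv: {conv:,}  fc: {fc:,}  total: {total:,}")
# conv: 14,714,688  fc: 123,642,856  total: 138,357,544
```

About 89 percent of the parameters sit in the three fully connected layers, which is why transfer-learning setups commonly replace that head with a smaller classifier for the target classes.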

3.2.2 VGG19

VGG19 is a variant of the VGG model with 19 weight layers (16 convolutional and three fully connected), together with five max pooling layers and a SoftMax layer. A forward pass through VGG19 requires about 19.6 billion FLOPs.
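The 19.6 billion figure matches a count of multiply-accumulate operations (MACs) for a 224 × 224 input, which many sources report as FLOPs. The sketch below reproduces it from the layer layout; it counts only the multiplications in the convolutional and fully connected layers and ignores biases, activations, and pooling, which is the usual convention for this figure.

```python
# VGG19 layout: numbers are conv output channels (3x3, same padding), "M" is 2x2 max pooling.
VGG19_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
             512, 512, 512, 512, "M", 512, 512, 512, 512, "M"]


def vgg_macs(cfg, input_size=224, in_channels=3, fc=(4096, 4096, 1000)):
    """Count multiply-accumulate operations for one forward pass."""
    size, channels, macs = input_size, in_channels, 0
    for layer in cfg:
        if layer == "M":
            size //= 2  # pooling halves the spatial resolution
        else:
            macs += size * size * 3 * 3 * channels * layer  # one MAC per weight per output pixel
            channels = layer
    features = size * size * channels  # 7 * 7 * 512 = 25088 for a 224x224 input
    for units in fc:
        macs += features * units
        features = units
    return macs


print(f"{vgg_macs(VGG19_CFG) / 1e9:.2f} GMACs")  # 19.63 GMACs
```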

3.2.3 Resnet50

ResNet50 is a variant of the ResNet model comprising 48 convolutional layers, one max pooling layer, and one average pooling layer. It requires about 3.8 × 10^9 floating-point operations per forward pass and is one of the most frequently used ResNet variants. As a single 50-layer model (not an ensemble), ResNet50 achieved a top-1 error rate of 20.74% and a top-5 error rate of 5.25% on ImageNet. Its residual design can also be extended to tasks outside computer vision, bringing the benefits of depth at a lower computational cost. Figure 14 shows the architecture of ResNet50.
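What makes ResNet's depth trainable is the residual connection, which adds a block's input to the output of its layers. The NumPy sketch below shows an identity residual block on a feature vector; the dense layers and weight shapes are simplifying assumptions, since the actual ResNet50 bottleneck blocks use 1 × 1, 3 × 3, and 1 × 1 convolutions with batch normalization.

```python
import numpy as np


def relu(x):
    return np.maximum(0.0, x)


def residual_block(x, w1, w2):
    """Identity residual block: y = ReLU(F(x) + x), with F(x) = W2 @ ReLU(W1 @ x).

    Dense layers stand in for ResNet's convolutions to keep the sketch short.
    """
    f = w2 @ relu(w1 @ x)  # residual branch F(x)
    return relu(f + x)     # skip connection adds the input back before the activation
```

If the residual branch learns weights near zero, the block passes its (rectified) input through almost unchanged, so adding blocks does not have to degrade the signal; this is the intuition behind training very deep residual networks.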

3.2.4 InceptionV3

Inception v3 is an image recognition model that has been shown to achieve greater than 78.1 percent accuracy on the ImageNet dataset. The Inception v3 model comprises 42 layers, somewhat more than the V1 and V2 models, yet it remains highly computationally efficient.

3.2.5 EfficientNet B4

EfficientNet is a CNN architecture and scaling method that uses a compound coefficient to scale network depth, width, and resolution uniformly. Conventional scaling often enlarges only one of these dimensions, producing models that are too wide, too deep, or of very high resolution; such increases improve the model at first but quickly saturate, leaving a model with more parameters that is therefore inefficient. EfficientNet instead scales all three dimensions together in a balanced way. The EfficientNet models are scaled versions of a common baseline, each with a specific depth, width, and resolution, from B0 (the smallest) to B7 (the largest). Compared with other models in the EfficientNet family, EfficientNetB4 offers a favorable trade-off between accuracy, model size, and computational cost, and this balance between model complexity and computational resources makes it a suitable choice for our medical imaging application. EfficientNetB4 was selected from the range of EfficientNet models (B0 to B7 and B2V2) after careful consideration and experimentation, and comparing it with other variants of the family highlights its gains in accuracy and computational efficiency.
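The compound scaling rule from the original EfficientNet paper sets depth d = α^φ, width w = β^φ, and input resolution r = γ^φ for a user-chosen coefficient φ, with α = 1.2, β = 1.1, and γ = 1.15 found by grid search under the constraint α · β² · γ² ≈ 2, so that FLOPs grow by roughly 2^φ. The sketch below evaluates the rule. The released B1 to B7 models use rounded, per-model coefficients (for example, Keras' `EfficientNetB4` defaults to a 380 × 380 input), so these values are illustrative rather than the exact B4 configuration.

```python
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution bases from the EfficientNet paper


def compound_scale(phi, base_resolution=224):
    """Return (depth multiplier, width multiplier, input resolution, approximate FLOPs factor)."""
    depth = ALPHA ** phi
    width = BETA ** phi
    resolution = round(base_resolution * GAMMA ** phi)
    # FLOPs grow roughly linearly with depth and quadratically with width and resolution.
    flops_factor = (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi
    return depth, width, resolution, flops_factor


for phi in range(5):
    d, w, r, f = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, resolution {r}, FLOPs x{f:.2f}")
```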

4 Results and discussion

The results of our study underscore the efficacy of the proposed deep learning models in diagnosing a range of diseases from various medical imaging modalities. Each model (VGG16, VGG19, ResNet50, InceptionV3, and EfficientNetB4) was evaluated on the training, validation, and test datasets, and the performance metrics indicate high accuracy and low loss. The integration of the channel attention mechanism was found to enhance model performance, particularly in distinguishing subtle pathological features that conventional models often overlook. Discrepancies between the expected and observed performance metrics were analyzed and led to further optimization of the preprocessing and augmentation techniques, which improved the models' generalizability and robustness on unseen data. The discussion below is based on the quantitative results, which support the hypothesis that the proposed approach improves diagnostic accuracy and efficiency in medical imaging (Figs. 15 and 16).

This study uses the VGG16, VGG19, ResNet50, InceptionV3, and EfficientNetB4 models, and this section discusses the results of each model and its configuration. Table 1 shows the training and validation accuracy and loss of the VGG16 model, which was trained for 25 epochs with a learning rate of 0.01 and reached a training accuracy of 94.48 percent. As Table 1 shows, the training accuracy rises steadily while the validation loss grows beyond its value at the first epoch, which is undesirable because it indicates overfitting. On the test dataset, the model achieved an accuracy of 88.36 percent.
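The overfitting check described above (training accuracy rising while validation loss exceeds its first-epoch value) can be automated from the per-epoch training history. The function below is a hypothetical helper, and the history values are invented for illustration; they are not the Table 1 results. With Keras, assuming the model is compiled with the accuracy metric, the same lists would typically come from the `history` dictionary of the object returned by `model.fit`, under the keys `"accuracy"` and `"val_loss"`.

```python
def diverging_epochs(train_acc, val_loss):
    """Return the 1-based epochs in which training accuracy is above its first
    value while validation loss is also above its first value (overfitting sign)."""
    flagged = []
    for epoch, (acc, loss) in enumerate(zip(train_acc, val_loss), start=1):
        if acc > train_acc[0] and loss > val_loss[0]:
            flagged.append(epoch)
    return flagged


# Hypothetical per-epoch history (NOT the values reported in Table 1).
train_acc = [0.70, 0.80, 0.86, 0.90, 0.93]
val_loss = [0.60, 0.55, 0.58, 0.66, 0.71]
print(diverging_epochs(train_acc, val_loss))  # [4, 5]
```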

VGG19 performed slightly better than VGG16 on the test dataset, reaching a test accuracy of 88.82 percent, although its training accuracy was lower, at 82.66 percent. Like VGG16, it was trained for 25 epochs with a learning rate of 0.01. Table 2 shows the VGG19 results.

Table 3 shows the performance of the ResNet50 model. Like the models above, it was trained for 25 epochs with a learning rate of 0.01. It reached a training accuracy of 98.69 percent and a test accuracy of 88.95 percent.

Table 4 shows the performance of the InceptionV3 model, which reached a training accuracy of 98.52 percent and, on the test dataset, a test accuracy of 90.89 percent.

The EfficientNetB4 model outperforms the preceding models; its performance is presented in Table 5. On the test dataset it achieved a test accuracy of 91.95 percent, the highest among the models trained without learning rate annealing.

The InceptionV3 model trained with learning rate annealing outperforms the models above, including the standard InceptionV3 model. Its performance is presented in Table 6. Learning rate annealing monitors the validation loss and, when it stops improving, reduces the learning rate by a factor of 0.5. The weights of the best epoch are kept for the final model, which contributes to its performance. On the test dataset, the model achieved a test accuracy of 92.67 percent.
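The annealing schedule described above can be sketched as a reduce-on-plateau rule: if the validation loss has not improved for a fixed number of epochs, the learning rate is multiplied by 0.5, and the epoch with the lowest validation loss is recorded so that its weights can be restored. The class below is a framework-free illustration; the patience of 2 epochs, the minimum learning rate, and the loss values are assumptions, since the text does not report them. In Keras, a comparable setup would combine `tf.keras.callbacks.ReduceLROnPlateau(monitor="val_loss", factor=0.5)` with a `ModelCheckpoint` callback using `save_best_only=True`, but the paper does not state which implementation was used.

```python
class PlateauAnnealer:
    """Hypothetical helper: halve the learning rate when validation loss stops
    improving, and remember the epoch with the lowest validation loss."""

    def __init__(self, lr=0.01, factor=0.5, patience=2, min_lr=1e-6, min_delta=0.0):
        self.lr = lr
        self.factor = factor
        self.patience = patience      # assumed; not reported in the paper
        self.min_lr = min_lr          # assumed lower bound on the learning rate
        self.min_delta = min_delta    # minimum decrease that counts as improvement
        self.best_loss = float("inf")
        self.best_epoch = None
        self.wait = 0                 # epochs since the last improvement

    def step(self, epoch, val_loss):
        """Call once per epoch; returns the learning rate for the next epoch."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch   # a real trainer would snapshot weights here
            self.wait = 0
        else:
            self.wait += 1
            if self.wait >= self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.wait = 0
        return self.lr


annealer = PlateauAnnealer(lr=0.01, factor=0.5, patience=2)
val_losses = [0.90, 0.70, 0.72, 0.71, 0.65, 0.66, 0.67]  # hypothetical values
for epoch, loss in enumerate(val_losses, start=1):
    lr = annealer.step(epoch, loss)
    print(f"epoch {epoch}: val_loss={loss:.2f} -> next lr={lr:g}")
print("best epoch:", annealer.best_epoch)
```

With these values the learning rate drops to 0.005 after epoch 4 and to 0.0025 after epoch 7, and epoch 5 is kept as the best epoch.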

The EfficientNetB4 model trained with learning rate annealing outperforms all the models above. Its performance is presented in Table 7. As with InceptionV3, the annealing schedule monitors the validation loss and reduces the learning rate by a factor of 0.5.

Figure 17 shows the improvement obtained by applying learning rate annealing to the EfficientNetB4 model, and Fig. 18 shows the confusion matrix of this model. The diagonal of the matrix counts correct classifications, and the off-diagonal entries count misclassifications. According to the confusion matrix, most misclassifications occur between the mild and moderate DR classes.
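Reading a confusion matrix as described above can be sketched in a few lines: rows index the true class and columns the predicted class, the share of samples on the diagonal equals the overall accuracy, and the largest symmetric off-diagonal sum identifies the pair of classes most often confused with each other. The function names are illustrative, and the integer labels are hypothetical stand-ins for class names, not the study's data.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """Build a confusion matrix: rows = true class, columns = predicted class."""
    m = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        m[t][p] += 1
    return m


def diagonal_fraction(m):
    """Share of all samples on the diagonal, i.e. overall accuracy."""
    total = sum(map(sum, m))
    return sum(m[i][i] for i in range(len(m))) / total


def most_confused_pair(m):
    """Unordered class pair with the most misclassifications in either direction."""
    n = len(m)
    pairs = {(i, j): m[i][j] + m[j][i] for i in range(n) for j in range(i + 1, n)}
    return max(pairs, key=pairs.get)


# Hypothetical labels for three classes (0, 1, 2); not the study's data.
y_true = [0, 0, 1, 1, 1, 2, 2, 2, 2, 0]
y_pred = [0, 0, 1, 2, 1, 2, 1, 2, 2, 0]
cm = confusion_matrix(y_true, y_pred, 3)
print(cm)                      # [[3, 0, 0], [0, 2, 1], [0, 1, 3]]
print(diagonal_fraction(cm))   # 0.8
print(most_confused_pair(cm))  # (1, 2)
```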

The confusion-matrix counts are defined as follows:

- TP (true positive): the number of positive samples that the classifier correctly predicts as positive.
- TN (true negative): the number of negative samples that the classifier correctly predicts as negative.
- FP (false positive): the number of negative samples that the classifier incorrectly predicts as positive.
- FN (false negative): the number of positive samples that the classifier incorrectly predicts as negative.
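For a multi-class problem such as this one, the four counts are usually computed per class in a one-vs-rest fashion: the class of interest is treated as positive and all other classes as negative, so a sample of another class that is predicted as a third, non-positive class still counts as a true negative. A minimal sketch, with a hypothetical helper name and placeholder class names that are not the study's classes:

```python
def one_vs_rest_counts(y_true, y_pred, positive):
    """Return (TP, TN, FP, FN), treating `positive` as the positive class and
    every other label as negative (one-vs-rest)."""
    tp = tn = fp = fn = 0
    for t, p in zip(y_true, y_pred):
        if t == positive and p == positive:
            tp += 1
        elif t != positive and p != positive:
            tn += 1
        elif t != positive and p == positive:
            fp += 1
        else:  # t == positive and p != positive
            fn += 1
    return tp, tn, fp, fn


# Hypothetical labels; class names are placeholders.
y_true = ["tumor", "tumor", "normal", "normal", "tumor", "other"]
y_pred = ["tumor", "normal", "normal", "tumor", "tumor", "other"]
print(one_vs_rest_counts(y_true, y_pred, "tumor"))  # (2, 2, 1, 1)
```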

Accuracy, precision, recall, F1 score, and support are the metrics used to assess the model; support is the number of test samples in each class. Accuracy is the proportion of all samples that the classifier classifies correctly, as given by Eq. (1):

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

The recall of the classifier is the proportion of all positive samples that are correctly predicted as positive. It is also called the true positive rate, sensitivity, or probability of detection, and is calculated using Eq. (2):

$$\text{Recall} = \frac{TP}{TP + FN} \tag{2}$$

Precision is the proportion of positive predictions that are truly positive, as given by Eq. (3):

$$\text{Precision} = \frac{TP}{TP + FP} \tag{3}$$

The F1 score is the harmonic mean of precision and recall. It is calculated using Eq. (4):

$$F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}$$
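The accuracy, precision, recall, and F1 formulas can be evaluated directly from the four counts. The sketch below uses a hypothetical helper name, assumes that a ratio with a zero denominator is reported as 0.0 (a common convention, not one stated in the text), and uses made-up counts.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall and F1 from confusion-matrix counts.
    Ratios with a zero denominator are reported as 0.0."""
    def ratio(num, den):
        return num / den if den else 0.0

    accuracy = ratio(tp + tn, tp + tn + fp + fn)
    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    f1 = ratio(2 * precision * recall, precision + recall)  # harmonic mean
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up counts: accuracy 0.85, precision 0.8, recall ~0.889, F1 ~0.842
print(classification_metrics(tp=40, tn=45, fp=10, fn=5))
```

Because F1 is a harmonic mean, it equals 2TP / (2TP + FP + FN), so it is pulled toward whichever of precision and recall is lower.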

Table 8 shows the per-class performance on the test dataset of the EfficientNetB4 model trained with learning rate annealing. This model achieves the highest accuracy of all models, 94.04 percent.

5 Conclusion

Early detection is critical for successful treatment and for preventing deaths from some diseases, since treating any disease requires prompt and accurate intervention; early and precise therapy is likewise crucial for eye disorders. Building on recent advances in deep learning, this study presents a new tool to increase disease diagnostic accuracy. We aimed to demonstrate the model's efficiency and effectiveness in diagnosing diseases across a diverse range of organ systems, each of which presents unique challenges for medical image analysis. We used convolutional neural networks (CNNs), the most widely used deep learning approach for this task, namely VGG16, VGG19, InceptionV3, EfficientNetB4, and ResNet, to implement computer-aided diagnosis of numerous diseases. With an accuracy of 94.04 percent, the EfficientNetB4 model tuned with learning rate annealing outperforms the other models discussed, and our proposed strategy outperforms the baseline approaches evaluated in this study. These results indicate that the proposed EfficientNetB4 model classifies the diseases considered accurately, and that a single model can classify multiple diseases accurately and precisely. This is highly valuable in medicine, where accurate and timely diagnosis ensures that patients receive effective treatment early, which can be critical to saving lives. In future research, we plan to extend our study to a broader spectrum of diseases across various organ systems.

Data availability

The data used to support the findings of this study are available from the corresponding author upon request.

References

Kaur P, Harnal S, Gautam V et al (2023) A novel transfer deep learning method for detection and classification of plant leaf disease. J Ambient Intell Human Comput 14:12407–12424. https://doi.org/10.1007/s12652-022-04331-9

Jain S, Jain V (2023) Novel approach to classify brain tumor based on transfer learning and deep learning. Int J Inf Technol 15:2031–2038. https://doi.org/10.1007/s41870-023-01259

Pathak Y, Shukla PK, Tiwari A, Stalin S, Singh S (2022) Deep transfer learning based classification model for COVID-19 disease. Irbm 43(2):87–92

Saxena G, Verma DK, Paraye A, Rajan A, Rawat A (2020) Improved and robust deep learning agent for preliminary detection of diabetic retinopathy using public datasets. Intell-Based Med 3–4:100022. https://doi.org/10.1016/j.ibmed.2020.100022

Borwankar S, Sen R, Kakani B (2020) Improved Glaucoma Diagnosis Using Deep Learning. 2020 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT). https://doi.org/10.1109/conecct50063.2020.9198524

Sandoval-Cuellar HJ (n.d.) Image-based Glaucoma Classification Using Fundus Images and Deep Learning. https://doi.org/10.17488/rmib.42.3.2

International Diabetes Federation (IDF) (n.d.) International Year Book and Statesmen's Who's Who. https://doi.org/10.1163/1570-6664_iyb_sim_org_38965

Liang Y, He L, Fan C, Wang F, Li W (2008) Pre-processing study of retinal image based on component extraction. 2008 IEEE Int Symp IT Educ. https://doi.org/10.1109/itme.2008.4743950

Soares JVB, Leandro JJG, Cesar RM, Jelinek HF, Cree MJ (2006) Retinal vessel segmentation using the 2-D Gabor wavelet and supervised classification. IEEE Trans Med Imaging 25(9):1214–1222. https://doi.org/10.1109/tmi.2006.879967

Gadkari S, Maskati Q, Nayak B (2016) Prevalence of diabetic retinopathy in India: The all india ophthalmological society diabetic retinopathy eye screening study 2014. Indian J Ophthalmol 64(1):38. https://doi.org/10.4103/0301-4738.178144

Ghodasra DH, Brown GC (2009) Prevalence of diabetic retinopathy in india: sankaranethralaya diabetic retinopathy epidemiology and molecular genetics study report 2. Evidence-Based Ophthalmol 10(3):160–161. https://doi.org/10.1097/ieb.0b013e3181ab81bf

Walker R, Rodgers J (2002) Diabetic retinopathy. Nurs Stand 16(45):46–52. https://doi.org/10.7748/ns2002.07.16.45.46.c3238

Wilkinson CP, Ferris FL, Klein RE, Lee PP, Agardh CD, Davis M, Dills D, Kampik A, Pararajasegaram R, Verdaguer JT (2003) Proposed international clinical diabetic retinopathy and diabetic macular oedema disease severity scales. Ophthalmology 110(9):1677–1682. https://doi.org/10.1016/s0161-6420(03)00475-5

Mahesh TR, Sivakami R, Manimozhi I, Krishnamoorthy N, Swapna B (2023) Early predictive model for detection of plant leaf diseases using mobilenetv2 architecture. Int J Intell Syst Appl Eng 11(2):46–54

Krishnamoorthy N, Nirmaladevi K, Kumaravel T, Nithish KS, Sarathkumar S, Sarveshwaran M (2022) Diagnosis of Pneumonia Using Deep Learning Techniques. In: 2022 Second International Conference on Advances in Electrical, Computing, Communication and Sustainable Technologies (ICAECT), pp 1–5. IEEE

Scanlon P (2009) Stage R3: Proliferative diabetic retinopathy and advanced diabetic retinopathy. In: A Practical Manual of Diabetic Retinopathy Management, pp 109–132. https://doi.org/10.1002/9781444308174.ch9

Chua J, Baskaran M, Ong PG, Zheng Y, Wong TY, Aung T, Cheng C-Y (2015) Prevalence, risk factors, and visual features of undiagnosed glaucoma. JAMA Ophthalmology 133(8):938. https://doi.org/10.1001/jamaophthalmol.2015.1478

Maheshwari S, Pachori RB, Acharya UR (2017) Automated diagnosis of glaucoma using empirical wavelet transform and correntropy features extracted from fundus images. IEEE J Biomed Health Inform 21(3):803–813. https://doi.org/10.1109/jbhi.2016.2544961

Singh A, Dutta MK, ParthaSarathi M, Uher V, Burget R (2016) Image processing-based automatic diagnosis of glaucoma using wavelet features of the segmented optic disc from fundus image. Comput Methods Programs Biomed 124:108–120. https://doi.org/10.1016/j.cmpb.2015.10.010

(2016) Study of various detection techniques of tampered regions in digital image forensics. Int J Latest Trends Eng Technol 7(4). https://doi.org/10.21172/1.74.034

Krishnamoorthy N, Asokan R, Jones I (2016) Classification of malignant and benign micro calcifications from mammogram using optimized cascading classifier. Curr Signal Transduct Ther 11(2):98–104. https://doi.org/10.2174/1574362411666160614083720

Zhang L, Li J, Zhang I, Han H, Liu B, Yang J, Wang Q (2017) Automatic cataract detection and grading using Deep Convolutional Neural Network. 2017 IEEE 14th International Conference on Networking, Sensing and Control (ICNSC). https://doi.org/10.1109/icnsc.2017.8000068

Rebinth A, Kumar SM (2019) A Deep Learning Approach To Computer-Aided Glaucoma Diagnosis. 2019 Int Conf Recent Adv Energy-Effic Computing Commun (ICRAECC). https://doi.org/10.1109/icraecc43874.2019.8994988

Krishnamoorthy N, Prasad LN, Kumar CP, Subedi B, Abraha HB, Sathishkumar VE (2021) Rice leaf diseases prediction using deep neural networks with transfer learning. Environ Res 198:111275

Guo L, Yang J-J, Peng L, Li J, Liang Q (2015) A computer-aided healthcare system for cataract classification and grading based on fundus image analysis. Comput Ind 69:72–80. https://doi.org/10.1016/j.compind.2014.09.005

Chakravarty A, Sivaswamy J (2016) Glaucoma classification with a fusion of segmentation and image-based features. 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI). https://doi.org/10.1109/isbi.2016.7493360

Devi KN, Krishnamoorthy N, Jayanthi P, Karthi S, Karthik T, Kiranbharath K (2022) Machine Learning Based Adult Obesity Prediction. 2022 International Conference on Computer Communication and Informatics (ICCCI). https://doi.org/10.1109/iccci54379.2022.9740995

Krishnamoorthy D, Parameswari VL (2018) Rice leaf disease detection via deep neural networks with transfer learning for early identification. Turkish J Physiother Rehabilit 32:2

Raghavendran PS, Ragul S, Asokan R, Loganathan AK, Muthusamy S, Mishra OP, Sundararajan SCM (2023) A new method for chest X-ray images categorization using transfer learning and CovidNet_2020 employing convolution neural network. Soft Comput 27(19):14241–14251

Sinnaswamy RA, Palanisamy N, Subramaniam K, Muthusamy S, Lamba R, Sekaran S (2023) An extensive review on deep learning and machine learning ıntervention in prediction and classification of types of aneurysms. Wirel Pers Commun 131(3):2055–2080

Subramaniam K, Palanisamy N, Sinnaswamy RA, Muthusamy S, Mishra OP, Loganathan AK, Sundararajan SCM (2023) A comprehensive review of analyzing the chest X-ray images to detect COVID-19 infections using deep learning techniques. Soft Comput 27(19):14219–14240

Thangavel K, Palanisamy N, Muthusamy S, Mishra OP, Sundararajan SCM, Panchal H, Ramamoorthi P (2023) A novel method for image captioning using multimodal feature fusion employing mask RNN and LSTM models. Soft Comput 27(19):1–14

Gnanadesigan NS, Dhanasegar N, Ramasamy MD, Muthusamy S, Mishra OP, Pugalendhi GK, ..., Ravindaran A (2023) An integrated network topology and deep learning model for prediction of Alzheimer disease candidate genes. Soft Comput 1–15

Subramanian D, Subramaniam S, Natarajan K, Thangavel K (2023) Flamingo Jelly Fish search optimization-based routing with deep-learning enabled energy prediction in WSN data communication. Network: Comput Neural Syst 1–28

Jagadeesan V, Venkatachalam D, Vinod VM, Loganathan AK, Muthusamy S, Krishnamoorthy M, Geetha M (2023) Design and development of a new metamaterial sensor-based minkowski fractal antenna for medical imaging. Appl Phys A 129(5):391

Ezhilarasi K, Hussain DM, Sowmiya M, Krishnamoorthy N (2023) Crop Information Retrieval Framework Based on LDW-Ontology and SNM-BERT Techniques. Inf Technol Control 52(3):731–743

Batcha BBC, Singaravelu R, Ramachandran M, Muthusamy S, Panchal H, Thangaraj K, Ravindaran A (2023) A novel security algorithm RPBB31 for securing the social media analyzed data using machine learning algorithms. Wirel Pers Commun 1–28

Chinthamu N, Gooda SK, Shenbagavalli P, Krishnamoorthy N, Selvan ST. Detecting the Anti-Social Activity on Twitter using EGBDT with BCM.

Bennet MA, Mishra OP, Muthusamy S (2023) Modeling of upper limb and prediction of various yoga postures using artificial neural networks. In: 2023 Int Conf Sustainable Comput Data Commun Syst (ICSCDS), pp 503–508. IEEE

Jude MJA, Diniesh VC, Shivaranjani M, Muthusamy S, Panchal H, Sundararajan SCM, Sadasivuni KK (2023) On minimizing TCP traffic congestion in vehicular internet of things (VIoT). Wirel Pers Commun 128(3):1873–1893

Acknowledgements

This work was supported by the Qatar National Research Fund under grant no. MME03-1226-210042. The statements made herein are solely the responsibility of the authors.

Funding

Open Access funding provided by the Qatar National Library. No financial support was received from any organization for carrying out this work.

Ethics declarations

Conflict of interest

The authors declare no potential conflicts of interest concerning this article's research, authorship, and publication. To the best of my knowledge and belief, any actual, perceived, or potential conflicts between my duties as an employee and my private and business interests have been fully disclosed in this form following the journal's requirements.

Consent to participate

I have been informed of the risks and benefits involved, and all my questions have been answered satisfactorily. Furthermore, I have been assured that a research team member will also answer any future questions. I voluntarily agree to take part in this study.

Consent to publication

Individuals may consent to participate in a study but object to publishing their data in a journal article.

Ethical Approval

This material is the author's original work, which has not been previously published elsewhere. The paper is not currently being considered for publication elsewhere. The paper reflects the author's research and analysis truthfully and completely.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Natarajan, K., Muthusamy, S., Sha, M.S. et al. A novel method for the detection and classification of multiple diseases using transfer learning-based deep learning techniques with improved performance. Neural Comput & Applic 36, 18979–18997 (2024). https://doi.org/10.1007/s00521-024-09900-x

DOI: https://doi.org/10.1007/s00521-024-09900-x