Abstract

This paper presents a comprehensive review of the use of Artificial Intelligence (AI) in Systematic Literature Reviews (SLRs). A SLR is a rigorous and organised methodology that assesses and integrates prior research on a given topic. Numerous tools have been developed to assist and partially automate the SLR process. The increasing role of AI in this field shows great potential in providing more effective support for researchers, moving towards the semi-automatic creation of literature reviews. Our study focuses on how AI techniques are applied in the semi-automation of SLRs, specifically in the screening and extraction phases. We examine 21 leading SLR tools using a framework that combines 23 traditional features with 11 AI features. We also analyse 11 recent tools that leverage large language models for searching the literature and assisting academic writing. Finally, the paper discusses current trends in the field, outlines key research challenges, and suggests directions for future research. We highlight three primary research challenges: integrating advanced AI solutions, such as large language models and knowledge graphs, improving usability, and developing a standardised evaluation framework. We also propose best practices to ensure more robust evaluations in terms of performance, usability, and transparency. Overall, this review offers a detailed overview of AI-enhanced SLR tools for researchers and practitioners, providing a foundation for the development of next-generation AI solutions in this field.

1 Introduction

A Systematic Literature Review (SLR) is a rigorous and organised methodology that assesses and integrates previous research on a specific topic. Its main goal is to meticulously identify and appraise all the relevant literature related to a specific research question, adhering to strict protocols to minimise biases (Higgins 2011; Moher et al. 2009). This methodology originally emerged within the realm of Evidence-Based Medicine Sackett et al. (1996), and it was subsequently adapted and employed in diverse research disciplines including social sciences Petticrew and Roberts (2008), engineering and technology Keele et al. (2007), education Gough et al. (2017), environmental sciences Pullin and Stewart (2006), and business and management Tranfield et al. (2003).

SLRs are recognised for being time-consuming and resource-intensive. This is due to several factors, including the lengthy process that can extend beyond a year Borah et al. (2017), the necessity of assembling a team of domain experts Shojania et al. (2007), significant financial implications from database subscriptions, specialised software, and personnel remuneration Shemilt et al. (2016), the growing number of publications Bornmann and Mutz (2015), and the periodic need for updates to maintain relevance Moher et al. (2007).

Over the past decades, numerous tools have been developed to support and even partially automate SLRs, aiming to address these challenges. Many of these tools have adopted Artificial Intelligence (AI) solutions (van den Bulk et al. 2022; Kebede et al. 2023), particularly for the screening and data extraction phases. The incorporation of AI into SLR tools has been further propelled by the emergence of more sophisticated AI techniques in Natural Language Processing (NLP), such as Large Language Models (LLMs), which have the potential to revolutionise these systems Robinson et al. (2023). While a significant body of research has examined SLR tools (Carver et al. 2013; Feng et al. 2017; Napoleão et al. 2021; Cierco Jimenez et al. 2022; Jesso et al. 2022; Khalil et al. 2022; Wagner et al. 2022; Ng et al. 2023; Robledo et al. 2023), relatively few studies have explored the role of AI in this domain (Cowie et al. 2022; Burgard and Bittermann 2023; de la Torre-López et al. 2023; Robledo et al. 2023). Furthermore, these studies focused on a limited selection of AI features, as we will discuss in Sect. 4.

This survey aims to address the existing gap by rigorously examining the application of AI techniques in the semi-automation of SLRs, within the two main stages of application, namely screening and extraction. For this purpose, we first conducted an analysis of eight prior surveys and identified the most prominent features examined in the literature. Next, we defined a framework of analysis that integrates 23 general features and 11 features pertinent to AI-based functionalities. We then selected 21 prominent SLR tools and subjected them to rigorous analysis using the resulting framework. We extensively discuss current trends, key research challenges, and directions for future research. We specifically focus on three major research challenges: (1) integrating advanced AI solutions, such as large language models and knowledge graphs, (2) enhancing usability, and (3) developing a standardised evaluation framework. We also propose a set of best practices to ensure more robust evaluations regarding performance, usability, and transparency. Finally, we performed an additional analysis on 11 recent tools that utilise the capabilities of LLMs (predominantly ChatGPT via the OpenAI API) for searching the literature and aiding academic writing. Although these tools do not cater directly to SLRs, there is potential for their features to be integrated into future SLR tools. In conclusion, this survey seeks to offer scholars a thorough insight into the application of Artificial Intelligence in this field, while also highlighting potential avenues for future research.

The remainder of this paper is structured as follows. Section 2 describes the SLR stages and their relationship with AI. Section 3 outlines the methodology we employed to identify the SLR tools discussed in the survey. Section 4 provides a meta-review of previous surveys about SLR tools that analysed AI features. Section 5 provides an in-depth examination of the 21 tools. Section 6 discusses the key research challenges and proposes some best practices for the evaluation of AI-enhanced SLR tools. Section 7 analyses the latest generation of LLM-based systems designed to assist researchers. Finally, Sect. 8 concludes the paper by summarising the contributions and the main findings.

2 Background

In this section, we examine the various stages of a SLR and the extent of support they receive from AI in the current generation of tools. Here, the term ‘AI’ specifically denotes weak or narrow AI, which includes systems designed and trained for specific tasks like classification, clustering, or named-entity recognition Iansiti and Lakhani (2020). In the context of SLR, these methodologies are predominantly utilised to semi-automate tasks like screening and data extraction (Cowie et al. 2022; Burgard and Bittermann 2023).

The SLR methodology consists of six distinct stages (Keele et al. 2007; Higgins 2011): (i) Planning, (ii) Search, (iii) Screening, (iv) Data Extraction and Synthesis, (v) Quality Assessment, and (vi) Reporting. Each stage plays a pivotal role in ensuring the comprehensiveness and rigour of the review process.

The planning phase is foundational to the entire review process, as it involves formulating a set of precise and specific research questions that the SLR seeks to address O’Connor et al. (2008). A detailed protocol is also developed during this stage, outlining the appropriate methodologies that will be adopted to carry out the review Fontaine et al. (2022). This protocol ensures consistency, reduces bias, and enhances the transparency and reproducibility of the review.

The search phase aims to identify relevant papers using search strategies, snowballing, or a hybrid approach. Search strategies are typically implemented by creating a query based on a combination of terms using boolean operators (Team 2007; Glanville et al. 2019). This query is then executed on designated search engines. In snowballing, the researcher examines the references and citations of an initial group of papers (also known as seed papers) to identify additional articles. This process is iteratively repeated until no new relevant scholarly documents are found (Webster and Watson 2002; Wohlin 2014). The hybrid approach is the combination of search strategy and snowballing (Mourão et al. 2017; Wohlin et al. 2022). Traditionally, the search phase had not been significantly supported by artificial intelligence techniques Adam et al. (2022). Nevertheless, there are some emerging tools, which we will examine in Sect. 7, that have begun to incorporate LLMs in academic search engines, often within a Retrieval-Augmented Generation (RAG) framework Lewis et al. (2020). This innovative approach allows for the formulation of precise questions and complex queries in natural language, surpassing the capabilities of traditional keyword-based searches.

The screening phase uses a set of inclusion and exclusion criteria to further filter the papers obtained from the search stage. It typically consists of two stages: (i) title and abstract screening and (ii) full-text screening. In the first stage, the reviewers screen the papers according only to the title and abstract Moher et al. (2009). The second stage entails a detailed evaluation of the content of each paper, a task that demands significantly more effort but leads to a more thorough assessment. It is also customary to document the rationale for excluding any given paper during this process. The predominant application of AI in SLRs concerns this phase. It usually involves employing machine learning classifiers, which are trained on an initial set of user-selected papers and then used to identify additional relevant articles Miwa et al. (2014). This process is frequently iterative: the user refines the automatic classifications or selects new papers, and the classifier is then retrained to better identify further pertinent literature.
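The iterative train, rank, and relabel cycle described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation of any surveyed tool: it uses a small multinomial Naive Bayes classifier written from scratch, whitespace tokenisation, and hypothetical helper names (`train`, `inclusion_probability`, `screen`, `ask_user`). It also assumes that the seed set contains at least one relevant and one irrelevant paper.

```python
import math
from collections import Counter


def tokenize(text):
    # Simplistic whitespace tokenisation; real tools use richer preprocessing.
    return text.lower().split()


def train(labelled):
    """Fit a multinomial Naive Bayes model on (text, label) pairs; label 1 = relevant."""
    word_counts = {0: Counter(), 1: Counter()}
    doc_counts = Counter()
    for text, label in labelled:
        doc_counts[label] += 1
        word_counts[label].update(tokenize(text))
    vocab = set(word_counts[0]) | set(word_counts[1])
    return word_counts, doc_counts, vocab


def inclusion_probability(model, text):
    """Return P(relevant | text) using Laplace smoothing.

    Assumes both classes appear in the training data.
    """
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    log_scores = {}
    for label in (0, 1):
        total_words = sum(word_counts[label].values())
        score = math.log(doc_counts[label] / total_docs)
        for word in tokenize(text):
            if word in vocab:  # words never seen in training are ignored
                score += math.log(
                    (word_counts[label][word] + 1) / (total_words + len(vocab))
                )
        log_scores[label] = score
    # Normalise the two log scores into a probability.
    top = max(log_scores.values())
    weights = {label: math.exp(s - top) for label, s in log_scores.items()}
    return weights[1] / (weights[0] + weights[1])


def screen(labelled, unlabelled, ask_user, rounds):
    """Retrain after each user decision and show the top-ranked paper next."""
    labelled, unlabelled = list(labelled), list(unlabelled)
    for _ in range(rounds):
        if not unlabelled:
            break
        model = train(labelled)
        unlabelled.sort(key=lambda t: inclusion_probability(model, t), reverse=True)
        paper = unlabelled.pop(0)
        labelled.append((paper, ask_user(paper)))  # ask_user returns 1 or 0
    return labelled, unlabelled


seed = [
    ("machine learning for citation screening", 1),
    ("soil erosion in river basins", 0),
]
pool = ["active learning for screening abstracts", "river sediment transport"]
model = train(seed)
scores = [inclusion_probability(model, text) for text in pool]
```

In this toy run the first pooled abstract shares vocabulary with the relevant seed and so receives the higher score. In each round, `screen` presents the paper currently ranked highest, records the user's decision, and retrains on the enlarged labelled set.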

In the data extraction and synthesis phase, all the pertinent information is systematically extracted from the selected studies. The techniques for data extraction vary greatly depending on the research field and the objective of the researcher. For example, in the biomedical field, protocols like PECODR Dawes et al. (2007) (Patient-Population-Problem, Exposure-Intervention, Comparison, Outcome, Duration, and Results) and PIBOSO Kim et al. (2011) (Population, Intervention, Background, Outcome, Study Design, and Other) are used to identify key elements from clinical studies, while the STARD checklist Bossuyt et al. (2003) supports readers in assessing the risk of bias and evaluating the relevance of the results. Following the extraction, the data is aggregated and summarised (Munn et al. 2014; Garousi and Felderer 2017). Depending on the nature and heterogeneity of the data, the resulting synthesis might be qualitative or quantitative. This phase is also occasionally supported by AI solutions. Commonly, the relevant tools employ classifiers to identify articles possessing specific characteristics Marshall et al. (2018) or implement named-entity recognition for extracting specific entities or concepts Kiritchenko et al. (2010) (e.g., RCT entities Moher et al. (2001), entities pertaining to environmental health studies Walker et al. (2022)).

The quality assessment phase evaluates the rigour and validity of the selected studies (Project 1998; Wells et al. 2000; Von Elm et al. 2007; Higgins and Altman 2008). This analysis provides evidence of the overall strength and the level of trustworthiness presented in the review (Zhou et al. 2015; Chen et al. 2022).

Finally, the reporting phase involves presenting the findings in a structured and coherent manner within a research paper. This presentation typically follows an established format comprising sections like introduction, methods, results, and discussion, but this may differ depending on the journal in which the manuscript will be published (Stroup et al. 2000; Page et al. 2021). Historically, this stage did not benefit from the use of artificial intelligence techniques (Justitia and Wang 2022; Li and Ouyang 2022). However, as we will discuss in Sect. 7, recent advancements have led to the development of tools based on LLMs designed to support academic writing, which can be particularly useful in this phase. These tools typically enable users to draft an initial outline of the desired document and iteratively refine it.

3 Methodology

We adopt the standard PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) methodology Page et al. (2021) for conducting and reporting the systematic review and the meta-analysis. The PRISMA checklist is linked in the supplementary material at the end of the paper.

The primary objective of our analysis was to examine the application of Artificial Intelligence in the current generation of SLR tools to identify trends and emerging research directions. In order to identify the set of AI-enhanced SLR tools, we first formulated three inclusion criteria and two exclusion criteria. Specifically, the inclusion criteria are the following:

- IC 1: The SLR tool must incorporate AI techniques to semi-automate the screening or extraction process, while still maintaining the user’s capacity to make the final decision Tsafnat et al. (2014);

- IC 2: The tool must possess a user interface that facilitates paper screening or information extraction by the user;

- IC 3: The tool should not require advanced technical expertise for installation and execution.

The exclusion criteria are:

- EC 1: The tool is under maintenance;

- EC 2: The tool has not been updated in the last 10 years.

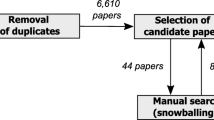

The PRISMA diagram, depicted in Fig. 1, illustrates the main phases of the process. We utilised three main strategies for identifying the tools.

First, we conducted a search of previous survey papers on SLR tools and extracted the tools they analysed. This was accomplished using Scopus,Footnote 1 a leading bibliographic database Pranckutė (2021). We selected Scopus over other alternatives because it is widely recognised as the preferred source for conducting systematic literature reviews due to its high-quality metadata, reliable citation tracking, and extensive coverage of scientific documents, including journals, conference proceedings, and books (Baas et al. 2020; Visser et al. 2021). Specifically, we used the search string: (“Literature Reviews” OR “Systematic Review”) AND (“Tools” OR “Automation” OR “Semi-Automation” OR “Semiautomation” OR “Software”).Footnote 2 Since this field lacks standardised vocabulary (Dieste et al. 2009; Grant and Booth 2009), we aimed to maximise recall by using broad terms, planning to refine the results at a later stage. Additionally, we filtered the results by selecting only ‘review’ as the ‘Document type’ in the Scopus interface.
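As an illustration, the search string above can be assembled programmatically from two groups of synonyms. The helper below, `build_query`, is a hypothetical name and not part of Scopus or any library. It joins the terms within each group with OR and the groups with AND, which makes it easy to add or remove synonyms when tuning for recall.

```python
def build_query(*term_groups):
    """Join the terms in each group with OR and the groups with AND."""
    clauses = []
    for group in term_groups:
        quoted = [f'"{term}"' for term in group]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


# The two term groups used in the search string reported above.
topic_terms = ["Literature Reviews", "Systematic Review"]
tool_terms = ["Tools", "Automation", "Semi-Automation", "Semiautomation", "Software"]

query = build_query(topic_terms, tool_terms)
print(query)
```

The sketch covers only the boolean expression. Field restrictions and the ‘Document type’ filter were applied through the Scopus interface and are not modelled here.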

The search yielded 356 review papers. From this set, we identified the surveys focusing on SLR tools. This selection process was conducted in two stages. Initially, the first author, who has eight years of experience in teaching evidence-based medicine and bibliometric analysis, identified a preliminary set of 14 papers based on their titles and abstracts. Subsequently, all four authors collaboratively examined the shortlisted papers by reviewing the full texts. Potentially ambiguous cases were discussed among the authors to achieve consensus. This process yielded five survey papers. We then applied a snowballing search Webster and Watson (2002) to identify additional surveys. This involved examining the references and citations of the five survey papers. As before, we implemented a two-stage selection process, screening titles and abstracts first, followed by a full-text analysis of potentially relevant papers. This resulted in the inclusion of three additional papers. Overall, this procedure yielded a total of 8 survey papers. An analysis of these surveys led to the identification of 23 tools. Among these, 17 were associated with academic papers, whereas 6 were not.

As a second source, we adopted the Systematic Literature Review Toolbox Marshall and Brereton (2015), a repository in which SLR tools are published and updated. This platform is highly regarded in the field and was adopted as a source in five of the eight previous surveys (Kohl et al. 2018; Van der Mierden et al. 2019; Harrison et al. 2020; Cowie et al. 2022; Robledo et al. 2023). Specifically, we utilised the advanced search functionality to retrieve all tools under the “software” category. The query returned 236 tools that were manually analysed. Similar to the analysis of the papers, this process was conducted in two phases. Initially, the first author selected a preliminary set of 45 tools based solely on their descriptions in the repository. Next, all authors evaluated these tools by examining their websites, tutorials, and the tools themselves. When necessary, the first author collected additional information through interviews with the developers. As before, any ambiguous cases were discussed among the authors until a consensus was reached. The analysis identified a total of 21 tools. Of these, 16 were associated with academic papers, while 5 were not.

As a third source, we adopted the Comprehensive R Archive Network (CRAN),Footnote 3 a well-regarded repository for R packages widely used by the statistical and data science communities. Specifically, we employed the packagefinder library Zuckarelli (2023) and used the query: (“systematic literature review” OR “systematic review” OR “literature review”). We chose this library due to its proven effectiveness in retrieving relevant applications in various domains, such as ecology and evolution Lortie et al. (2020). This search yielded 329 tools, which we then evaluated using the same two-stage selection process previously applied for the tools produced by searching the SLR Toolbox. However, none of these tools incorporated AI solutions while also providing a visual interface. Consequently, we discarded all of them.

After deduplicating the results from the SLR Toolbox and previous surveys, we identified 17 tools linked to research papers and 8 tools without associated papers. To validate and expand our findings, we conducted a snowballing search using the 17 papers linked to the tools as the initial seeds. Our aim was to identify additional papers associated with a relevant tool. This search was conducted on Semantic Scholar,Footnote 4 chosen for its extensive coverage in Computer Science, especially for snowballing searches Hannousse (2021). To facilitate this, we developed a custom script to interface with the Semantic Scholar API, enabling the efficient retrieval of references from seed papers and the papers citing them.Footnote 5 This process led to the identification of 584 references and 8,009 papers citing the seeds for a total of 8,593 papers. After removing duplicates, 7,304 papers remained. The authors analysed these papers using the same two-stage selection process that was employed for survey papers. This analysis yielded 15 of the papers included in the initial seeds as well as the 8 previously identified surveys, but it did not reveal any new tools or papers. The lack of new findings, despite the comprehensive snowballing process, suggests that the tool selection identified earlier is exhaustive.
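The snowballing procedure can be sketched as follows, in the iterative form described in Sect. 2. This is not the authors' script. To keep the example self-contained, the reference and citation lists are passed in as plain dictionaries rather than retrieved from the Semantic Scholar API, and `is_relevant` is a hypothetical stand-in for the manual two-stage screening.

```python
def snowball(seeds, references, citations, is_relevant):
    """Iteratively expand a seed set through references and citations.

    references / citations: dicts mapping a paper id to a list of paper ids.
    is_relevant: callable standing in for the manual screening decision.
    Returns the included papers and every paper that was screened.
    """
    included = set(seeds)
    frontier = set(seeds)
    screened = set(seeds)
    while frontier:
        candidates = set()
        for paper in frontier:
            candidates.update(references.get(paper, []))  # backward snowballing
            candidates.update(citations.get(paper, []))   # forward snowballing
        candidates -= screened  # de-duplicate against earlier rounds
        screened |= candidates
        # Only newly included papers are expanded in the next round.
        frontier = {p for p in candidates if is_relevant(p)}
        included |= frontier
    return included, screened


# Toy citation graph with hypothetical paper ids.
references = {"A": ["B", "C"], "B": ["D"]}
citations = {"A": ["E"], "D": ["F"]}
relevant = {"B", "D"}

included, screened = snowball(["A"], references, citations, lambda p: p in relevant)
```

The loop stops when a round yields no new relevant papers, which mirrors the stopping condition described for snowballing. Keeping the `screened` set separate from `included` ensures that each paper is assessed only once, even when it is reachable from several seeds.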

In conclusion, the process led to a collection of 17 tools with associated papers and 8 without (Covidence, DistillerSR, Nested Knowledge, Pitts.ai, Iris.ai, LaserAI, DRAGON/litstream, Giotto Compliance). We then excluded Giotto Compliance, DRAGON/litstream, and LaserAI from our study due to the lack of available information.Footnote 6 We also consolidated RobotReviewer and RobotSearch into a single entry, recognising their shared algorithmic basis. Consequently, the final set of tools considered in our study included 21 distinct tools.

Table 1 lists the 21 selected tools. The majority of them (17) were primarily designed for the purpose of screening. Two tools, namely Dextr and ExaCT, focused exclusively on extraction. The remaining two tools, Iris.ai and RobotReviewer/RobotSearch, had a dual focus on both screening and extraction. In summary, 19 tools can be used for the screening phase and 4 tools for the extraction phase. The majority of the tools (19) are web applications, while only 2, namely SWIFT-Review and ASReview, need to be installed locally. Furthermore, only 4 tools (Colandr, ASReview, FAST2, and RobotReviewer) release their code under an open license.

4 Meta-review of previous surveys

This section provides a brief meta-analysis of how the previous systematic literature reviews have described the tools in relation to Artificial Intelligence. We focus on four surveys that analysed AI features (Cowie et al. 2022; Burgard and Bittermann 2023; de la Torre-López et al. 2023; Robledo et al. 2023).

The previous survey papers characterised AI according to five main features:

1. Approach: identifies the method used for performing a specific task. This is the most examined feature, receiving attention from four studies (Cowie et al. 2022; Burgard and Bittermann 2023; de la Torre-López et al. 2023; Robledo et al. 2023).

2. Text representation: describes the processes employed to convert text into suitable input for the algorithm (e.g., BoW Zhang et al. (2010), LDA topics Blei et al. (2003), word embeddings Wang et al. (2020)). This feature was analysed by two previous surveys (de la Torre-López et al. 2023; Burgard and Bittermann 2023).

3. Human interaction: specifies how users engage with a tool, detailing the operations and options available to them, as well as the characteristics of the user interface. This is among the least explored features, with just one previous study de la Torre-López et al. (2023).

4. Input: specifies the type of content (full text or just title and abstract) the tool needs to train its model. Alongside Human interaction, this is the least explored feature, with just one previous study de la Torre-López et al. (2023).

5. Output: represents the outcome generated by the trained algorithm. It has been analysed in three studies (Burgard and Bittermann 2023; de la Torre-López et al. 2023; Robledo et al. 2023).

Table 2 summarises the analysis of the four systematic literature reviews and shows how 17 tools have been reviewed according to the five AI features. Only three tools (FASTRED, EPPI-Reviewer, and Abstractr) were actually assessed according to all five AI features. For ten tools (ASReview, Colandr, Covidence, DistillerSR, Rayyan, Research Screener, RobotAnalyst, RobotReviewer/RobotSearch, SWIFT-Active Screener, and SWIFT-Review), only three features (approach, text representation, and output) have been assessed. The remaining tools were assessed using only one feature (approach).

Upon examining the four survey papers, it is apparent that there is a limited exploration of AI features. De la Torre-López et al. (2023) provide the most comprehensive analysis, utilising all five specified features to examine seven tools. In contrast, Burgard and Bittermann (2023) employed only three features: text representation, approach, and output. Robledo et al. (2023) focused on just two features: approach and output. Cowie et al. (2022) conducted the most restricted analysis, considering only one feature (approach). Furthermore, the five reported features only offer a narrow perspective on how AI can support SLRs.

In summary, the previous systematic reviews offer a relatively limited analysis of the expanding ecosystem of AI-enhanced SLR tools and their characteristics. In the next section, we will address this gap by introducing a comprehensive set of 11 AI features and applying them to evaluate the 21 SLR tools identified in Sect. 3.

5 Survey of SLR tools

We analysed the 21 SLR tools according to 34 features (11 AI-specific and 23 general) by examining the relevant literature (see Table 1), their official websites, and the online tutorials. When necessary, we sought additional information by reaching out to the developers through email or online interviews.

Section 5.1 describes the full set of features, paying particular attention to the new AI features that we first introduced for this survey. Section 5.2 reports the results of the review. Section 5.3 discusses the most suitable systems for specific use cases. Finally, Sect. 5.4 outlines the threats to validity of our analysis.

5.1 Features overview

5.1.1 AI features

To analyse the extent of AI usage within SLR tools, we considered a total of eleven features. This evaluation included the five features previously described in Sect. 4 (approach, text representation, human interaction, input, and output) along with six new features unique to this study. These additional features were identified through a review of the relevant literature (Cowie et al. 2022; Burgard and Bittermann 2023; de la Torre-López et al. 2023; Robledo et al. 2023) and a preliminary analysis of the tools. The six novel features are as follows:

- SLR Task: categorises the tasks for which the AI approach is used (e.g., paper classification, paper clustering, named-entity recognition);

- Minimum requirement: refers to the minimum number of relevant and irrelevant papers required to effectively train a classifier tasked with selecting pertinent papers;

- Model execution: evaluates whether the models operate in real time (synchronously) or later, typically overnight (asynchronously);

- Research field: identifies the research domains in which the tools can be effectively employed;

- Pre-screening support: specifies the application of AI techniques to assist users in manually selecting relevant papers, typically by highlighting key terms or grouping similar papers (e.g., topic maps Howard et al. (2020) based on LDA Blei et al. (2003), clustering approaches Przybyła et al. (2018));

- Post-screening support: refers to the application of AI techniques to conduct a final review of the screened papers (e.g., summarisation Shah and Phadnis (2022)).

Five of the eleven features (minimum requirement, model execution, human interaction, pre-screening support, and post-screening support) are exclusive to the screening phase and will not be considered when analysing the extraction phase.

5.1.2 General features

We analysed the non-AI characteristics of the SLR tools based on 23 features. We derived these features from previous studies (Marshall et al. 2014; Kohl et al. 2018; Van der Mierden et al. 2019; Harrison et al. 2020; Cowie et al. 2022) after a process of synthesis and integration. Table 3 shows the description of each feature with its category. To facilitate the systematic analysis, we grouped them into six categories: Functionality (F), Retrieval (R), Discovery (Di), Documentation (Do), Living Review (L), and Economic (E).

The functionality category includes features for auditing and evaluating the technical aspects of the tools. The retrieval category covers features related to the acquisition and inclusion of scholarly documents. The discovery category consists of features that facilitate the inclusion, exclusion, and management of references during the screening phase. The documentation category includes features that support the reporting of the findings. The living review category captures the ability of tools to incorporate new relevant documents based on AI techniques. Lastly, the economic category reflects the financial considerations associated with the tools.

5.2 Results

In this section, we present the results of our analysis. Section 5.2.1 describes the tools for the screening phase through the AI features. Section 5.2.2 presents the tools for the extraction phase also through the AI features. Finally, Sect. 5.2.3 describes the full set of 21 SLR tools according to the general features.

5.2.1 The role of AI in the screening phase

As reported in Table 1, 19 tools use AI for the screening phase. In the following, we analyse them according to the 11 AI features. To avoid repetition, this discussion combines the features input and text representation into the category Input Data and Text Representation. Moreover, the output feature is discussed within the context of the SLR Task, as it is contingent upon the specific task requirements. Table 6 in the Appendix reports detailed information on how each of the 19 tools addresses the 11 AI features.

Research field: Twelve tools utilise general AI solutions that are applicable across various research fields. The other seven tools employ specific AI solutions designed to support biomedical studies, typically by identifying Randomised Controlled Trials (RCTs) through the use of dedicated classifiers Noel-Storr et al. (2021). Notably, EPPI-Reviewer, PICOPortal, and Covidence offer both a general mode and a specialised setting for biomedical studies. Conversely, Pitts.ai, RobotReviewer/RobotSearch, SWIFT-Review, and LitSuggest are exclusively dedicated to the biomedical domain.

SLR task: Fifteen tools utilise artificial intelligence for only one task, most often to classify papers as relevant/irrelevant. The other four tools (Covidence, PICOPortal, EPPI-Reviewer, and Colandr) undertake two AI-related tasks. They all classify papers as relevant/irrelevant, but also execute an additional task, such as identifying a specific type of paper (e.g., economic evaluations, randomised controlled trials) or categorising papers according to a set of entities defined by the user. For the sake of clarity, in our discussion of the subsequent features, we will systematically address the first group (one task) followed by the second group (two tasks). In the first group, twelve tools focus on selecting relevant papers given a set of seed papers. Typically, each paper is assigned an inclusion probability score, usually ranging from 0 to 1. Of the remaining three, two (Pitts.ai and RobotReviewer/RobotSearch) identify RCTs based on a pre-built classification model, while the third (Iris.ai) clusters similar papers to build topic maps that assist users in selecting the relevant papers. In the second group, all four systems classify pertinent papers using a set of seed papers as a reference. However, they vary in their secondary AI-driven tasks. Specifically, Covidence and PICOPortal identify RCTs using a predefined classification model. EPPI-Reviewer can identify various types of studies, including RCTs, systematic reviews, economic evaluations, and COVID-19 related studies. Finally, Colandr, in addition to the standard identification of relevant papers, enables users to define their own set of categories (e.g., “water management”) and subsequently performs a multi-label classification of articles based on them Cheng et al. (2018). It also maps individual sentences to the user-defined categories and provides a confidence score for each classification.

AI approach: In the group of tools performing one task, the twelve tools focused exclusively on categorising relevant papers employ various types of machine learning classifiers. The most adopted approach is the Support Vector Machine (SVM) Hearst et al. (1998), which aligns with the findings of prior studies Schmidt et al. (2021). Four of the tools (Abstractr, FAST2, Rayyan, RobotAnalyst) rely exclusively on SVM. DistillerSR supports both SVM and Naive Bayes. ASReview allows the user to select from a wide range of methods, including Logistic Regression, Random Forest, Naive Bayes, SVM, and a neural network classifier. LitSuggest uses logistic regression, while SWIFT-Review and SWIFT-Active Screener use a method based on log-linear regression. Pitts.ai and RobotReviewer/RobotSearch also use an SVM for identifying RCTs Marshall et al. (2018). Finally, Iris.ai identifies and groups similar papers based on the similarity of their ‘fingerprint’, a vector representation of the most meaningful words and their synonyms extracted from the abstract Wu et al. (2018).

With regard to the four tools that perform two AI tasks, Covidence, EPPI-Reviewer, and PICOPortal also identify relevant papers by using an SVM classifier. In contrast, Colandr identifies papers by searching for keywords that are related to a set of user-defined search terms Cheng et al. (2018). For instance, it can recognise terms commonly associated with ‘Artificial Intelligence’ and select papers containing these terms. Covidence also implements a machine learning classifier based on SVM with a fixed threshold for the identification of RCTs following the Cochrane guidelines Thomas et al. (2021). EPPI-Reviewer utilises a range of proprietary classifiers trained on various databases to identify papers with distinct characteristics.Footnote 7 It uses the Cochrane Randomized Controlled Trial classifier Thomas et al. (2021) to identify RCTs. It employs a classifier trained with the NHS Economic Evaluation Database (NHS EED) Craig and Rice (2007) for identifying economic evaluations and another trained on the Database of Abstracts of Reviews of Effects La Toile (2004) to identify systematic reviews in the biomedical field. Finally, it uses a classifier trained on the ‘Surveillance and disease data on COVID-19’Footnote 8 for identifying research related to COVID-19. PICOPortal instead employs an ensemble of machine learning classifiers, which combines both decision trees and neural networks Onan et al. (2016). Finally, for the identification of the category attributed to the paper by the user, Colandr uses a combination of Named Entity Recognition for extracting entities relevant to the categories and a classifier based on logistic regression Cheng and Augustin (2021).

Input data and text representation: The AI techniques employed by these tools take as input the title, abstract, or full text of papers. All the tools analysed need only titles and abstracts as input, with the exception of Colandr, which requires the full text of papers. The tools generate different representations of the papers to input into the AI models. Specifically, of the 15 tools dedicated to classifying relevant papers, the majority (8 out of 15) use a Bag of Words (BoW) approach Zhang et al. (2010), while the remainder employ various word embedding techniques Wang et al. (2020). Pitts.ai and RobotReviewer/RobotSearch use SciBERT embeddings Beltagy et al. (2019). Research Screener employs Doc2Vec embeddings Le and Mikolov (2014). ASReview offers multiple options, including Sentence-BERT Reimers and Gurevych (2019) and Doc2Vec Le and Mikolov (2014). Iris.ai utilises a unique representation called fingerprint Wu et al. (2018), which is a vector characterising the most meaningful words and their synonyms extracted from the abstract. In the second group, Covidence and EPPI-Reviewer adopt a BoW representation, while PICOPortal uses both BoW and the BioBERT embeddings Lee et al. (2020). Finally, Colandr uses both word2vec Mikolov et al. (2013) and GloVe Pennington et al. (2014) embeddings. Overall, much like other NLP applications, these tools are evolving from traditional text representations like BoW to a range of more modern word and sentence embeddings.
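To make the combination of a BoW representation and a seed-based classifier more concrete, the following is a minimal sketch, not the implementation of any reviewed tool. It assumes a toy multinomial Naive Bayes model with add-one smoothing; the class name `NaiveBayesScreener`, the helper `bag_of_words`, and the four seed texts are hypothetical and chosen only for illustration. The model returns an inclusion probability between 0 and 1 for each candidate paper.

```python
import math
from collections import Counter


def bag_of_words(text):
    """Lowercase, split on whitespace, and count tokens (a minimal BoW)."""
    return Counter(text.lower().split())


class NaiveBayesScreener:
    """Toy multinomial Naive Bayes over BoW counts (hypothetical helper)."""

    def fit(self, texts, labels):
        # labels: 1 = relevant, 0 = irrelevant; at least one seed per class is required.
        self.counts = {0: Counter(), 1: Counter()}
        self.docs = Counter(labels)
        for text, label in zip(texts, labels):
            self.counts[label].update(bag_of_words(text))
        self.vocab = set(self.counts[0]) | set(self.counts[1])
        return self

    def inclusion_probability(self, text):
        total_docs = sum(self.docs.values())
        log_scores = {}
        for label in (0, 1):
            total_tokens = sum(self.counts[label].values())
            score = math.log(self.docs[label] / total_docs)  # class prior
            for token, n in bag_of_words(text).items():
                if token not in self.vocab:
                    continue  # ignore words never seen in the seed papers
                # Add-one (Laplace) smoothing avoids zero probabilities.
                p = (self.counts[label][token] + 1) / (total_tokens + len(self.vocab))
                score += n * math.log(p)
            log_scores[label] = score
        # Normalise the two log scores into a probability for the relevant class.
        m = max(log_scores.values())
        exp = {k: math.exp(v - m) for k, v in log_scores.items()}
        return exp[1] / (exp[0] + exp[1])


# Illustrative seed papers (invented titles, not taken from any dataset).
seed_texts = [
    "machine learning for citation screening",
    "active learning reduces screening workload",
    "soil erosion in alpine meadows",
    "crop yield under drought stress",
]
seed_labels = [1, 1, 0, 0]
screener = NaiveBayesScreener().fit(seed_texts, seed_labels)
for candidate in ("screening with machine learning", "drought and soil erosion"):
    print(candidate, round(screener.inclusion_probability(candidate), 3))
```

A production tool would replace the whitespace tokeniser with proper preprocessing and, as discussed above, may substitute the BoW counts with word or sentence embeddings.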

Human interaction: We identified three main types of interfaces. The first and most typical one, implemented by 16 tools, concerns the classification of papers as relevant or irrelevant. These graphical interfaces typically feature similar templates that allow users to upload and examine papers. Some tools (Rayyan, SWIFT-Active Screener, SysRev, Covidence, and PICOPortal) also offer a menu with additional functionalities like ranking or filtering papers based on specific criteria. A few tools (Rayyan, SWIFT-Active Screener, Research Screener, Pitts.ai, SysRev, Covidence, PICOPortal) also enable multiple users to collaboratively perform this task, allowing them to add comments for discussion about problematic papers or to delegate challenging papers to a senior reviewer. For illustration, Fig. 2a and b depict the interfaces used by ASReview and RobotAnalyst, respectively, for selecting relevant papers. The ASReview interface enables users to classify papers as either relevant or irrelevant. In contrast, the RobotAnalyst interface provides options for users to categorise papers as included, excluded, or undecided.

The second interface type, offered by Colandr, enables users to define specific categories to assign to the papers. This approach offers greater flexibility compared to the traditional binary classification of relevant or not relevant papers. For instance, in Fig. 3 Colandr suggests that for the given paper, the shown sentences are classified with a confidence level of high, medium or low in the category “land/water management”, previously defined by the user. The user can accept, skip or reject the suggested classification.

Examples of the tagging process of Colandr. This figure is courtesy of Colandr (n.d.)

The third interface type, offered by Iris.ai, is based on a topic map, a visualisation technique that clusters papers based on thematic similarities. The user initiates the search process with a brief description of the user’s search intent (typically 300 to 500 words), a title, or the abstract of a paper. The system then clusters the papers according to their topics and generates a topic map, such as the ones depicted in Fig. 4c. These interactive visualisations enable users to effectively navigate and select papers relevant to their research. The user can iteratively repeat the clustering process until they are satisfied that all pertinent papers have been incorporated into the analysis. Iris.ai also enables users to further filter the papers according to a variety of facets.

Minimum requirements: Generally, the accuracy of a classifier improves with an increasing number of annotated papers, but this also increases the time and effort required from researchers. Most methods need between 1 and 15 relevant papers and typically the same number of irrelevant ones. This is a relatively low number that should allow researchers to quickly annotate the initial set of seed papers. However, the necessary quantity varies considerably across tools. For instance, ASReview, SWIFT-Active Screener, and SWIFT-Review require just one relevant and one irrelevant paper to begin classification. Covidence and Rayyan require two and five papers, respectively. Other tools require a larger number of papers. For example, Colandr needs 10 seed papers, while SysRev requires 30.

Model execution: Thirteen tools employ a real-time model execution strategy, wherein the training and classification of the model occur immediately after the user selects the relevant and irrelevant papers. Conversely, SysRev and SWIFT-Active Screener adopt a delayed-model-execution approach in which the training and classification steps are conducted at predetermined intervals. Specifically, SysRev executes these operations overnight, whereas SWIFT-Active Screener updates its model after every thirty papers, maintaining a minimum two-minute interval between the most recent and the currently used model.
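The difference between real-time and delayed execution can be illustrated with a small scheduling sketch. It is a simplified reading of the batch-and-interval behaviour described above, not the actual logic of SWIFT-Active Screener; the class `DelayedRetrainPolicy` and its parameters are hypothetical. Timestamps are passed in explicitly (in seconds) so that the behaviour is easy to follow.

```python
class DelayedRetrainPolicy:
    """Retrain only after `batch_size` new labels and at least
    `min_interval_s` seconds since the previous retraining (hypothetical)."""

    def __init__(self, batch_size=30, min_interval_s=120.0):
        self.batch_size = batch_size
        self.min_interval_s = min_interval_s
        self.new_labels = 0
        self.last_trained_at = None  # no model has been trained yet

    def record_label(self):
        """Call once each time the reviewer labels a paper."""
        self.new_labels += 1

    def should_retrain(self, now):
        if self.new_labels < self.batch_size:
            return False  # not enough new annotations yet
        if self.last_trained_at is not None:
            if now - self.last_trained_at < self.min_interval_s:
                return False  # previous model is still too recent
        return True

    def mark_trained(self, now):
        """Call after the model has been retrained."""
        self.new_labels = 0
        self.last_trained_at = now


# Delayed execution: thirty labels and a two-minute gap between models.
delayed = DelayedRetrainPolicy(batch_size=30, min_interval_s=120.0)
# Real-time execution is the special case of one label and no waiting time.
real_time = DelayedRetrainPolicy(batch_size=1, min_interval_s=0.0)
```

Under this sketch, the real-time strategy trades a higher computational cost for immediately updated rankings, while the delayed strategy amortises training over a batch of annotations.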

Pre-screening support: Among the 19 tools evaluated, eight implement standard techniques for pre-screening support, such as keyword search, boolean search, and tag search. ASReview, Covidence, DistillerSR, and SWIFT-Active Screener only enable the user to filter papers by keyword. Rayyan and EPPI-Reviewer enhance this functionality by highlighting keywords in their visual interface. Additionally, Colandr and Abstrackr colour-code keywords based on their relevance level. Rayyan incorporates a boolean search feature, allowing users to combine keywords with operators like AND, OR, and NOT. For example, a boolean search such as “literature review” AND “tools” will retrieve scholarly documents containing both keywords in their titles or abstracts. Rayyan also provides options to search by author or publication year. EPPI-Reviewer, on the other hand, offers a tag search function, where users can tag papers with specific keywords and then search based on these tags.
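The boolean search example above can be sketched with a few composable predicates. This is an illustrative approximation and does not reflect how Rayyan implements its search; the helper names (`contains`, `AND`, `OR`, `NOT`, `search`) and the sample records are hypothetical. Matching is a case-insensitive substring test over the concatenated title and abstract.

```python
def contains(phrase):
    """Predicate: the phrase occurs (case-insensitively) in the text."""
    needle = phrase.lower()
    return lambda text: needle in text.lower()


def AND(*preds):
    return lambda text: all(p(text) for p in preds)


def OR(*preds):
    return lambda text: any(p(text) for p in preds)


def NOT(pred):
    return lambda text: not pred(text)


def search(papers, query):
    """Return the papers whose title and abstract, taken together, satisfy the query."""
    return [p for p in papers if query(p["title"] + " " + p["abstract"])]


# Invented records used only to exercise the query.
papers = [
    {"title": "Tools for the systematic literature review",
     "abstract": "We compare screening tools."},
    {"title": "A literature review of soil erosion",
     "abstract": "No software is discussed."},
    {"title": "Benchmarking screening tools",
     "abstract": "An empirical comparison."},
]

# Equivalent of: "literature review" AND "tools"
query = AND(contains("literature review"), contains("tools"))
for paper in search(papers, query):
    print(paper["title"])
```

Because the test is a plain substring match, a keyword such as “tools” would also match longer words that contain it; real systems usually tokenise the text first.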

RobotAnalyst, SWIFT-Review, and Iris.ai also support topic modelling. The first two use LDA Blei et al. (2003), which probabilistically assigns a topic to a paper based on the most recurrent terms shared by other papers. RobotAnalyst presents the topics in a network, as shown in Fig. 4a, in which each node (circle) represents a topic, and its size is proportional to the frequency of the terms that belong to it. SWIFT-Review uses a simpler approach, displaying the topics and their terms in a bar chart, as shown in Fig. 4b. Iris.ai clusters the papers according to a two-level taxonomy of global topics and specific topics. For instance, in Fig. 4c we can observe a set of global topics in the background, which include ‘companion’, ‘labour’, ‘provider’, and ‘woman’. The cyan section instead shows the second-level specific topics, in this case those concerning the ‘labour’ global topic, such as ‘woman’, ‘companion’, ‘market’, ‘management’, and ‘care’.

RobotAnalyst offers a cluster-based search functionality. This feature employs a spectral clustering algorithm Ng et al. (2001) to group papers. It also incorporates a statistical selection process for identifying the key terms characterising each cluster Brockmeier et al. (2018). The resulting clusters are presented to the user, emphasising the most representative terms.

Finally, Nested Knowledge, PICOPortal, Rayyan, and RobotReviewer/RobotSearch provide PICO identification, which uses distinct colours to highlight the patient/population, intervention, comparison, and outcome. Rayyan also enhances search capabilities by extracting topics and enriching them with the Medical Subject Headings (MeSH) Lipscomb (2000). Furthermore, it enables users to select biomedical keywords and phrases for inclusion or exclusion.

Post-screening support: Only two tools offer support for post-screening: Iris.ai and Nested Knowledge. Specifically, Iris.ai generates summaries from either a single document, multiple abstracts, or multiple documents. It employs an abstractive summarisation technique Shah and Phadnis (2022), where the summary is formed by generating new sentences that encapsulate the core information of the original text. The system also provides users with the flexibility to adjust the length of the summary, ranging from a brief two-sentence overview to a more comprehensive one-page summary. Nested Knowledge allows users to create a hierarchy of user-defined tags that can be associated with the documents. For instance, in Fig. 5a, Mean Diastolic blood pressure was defined as a sub-tag of Patient Characteristics. The user can also visualise the resulting taxonomy as a radial tree chart, as shown in Fig. 5b.

5.2.2 The role of AI in the extraction phase

In this section, we describe the four tools that support the extraction phase (Dextr, ExaCT, Iris.ai, and RobotReviewer/RobotSearch) with a focus on the six AI features relevant to the extraction phase. We apply the same feature grouping of Sect. 5.2.1. The table in Appendix (Table 7) reports how each of the 4 tools addresses the relevant features.

Research field: RobotReviewer/RobotSearch and ExaCT focus on the medical field, whereas Dextr covers environmental health science. In contrast, Iris.ai can be employed across various research domains.

SLR task: ExaCT, Dextr, and Iris.ai perform Named Entity Recognition (NER) Nasar et al. (2021) to extract various types of information from the relevant articles. Specifically, ExaCT identifies RCT entities based on the CONSORT statement Moher et al. (2001). It returns the top five supporting sentences for each extracted RCT entity, ranked according to relevance. Dextr detects data entities used in environmental health experimental animal studies (e.g., species, strain) Walker et al. (2022). Iris.ai allows users to customise entity extraction by defining their own set of categories and associating them with a set of exemplary papers. This is done by filling in a form called Output Data Layout (ODL), which is essentially a spreadsheet detailing all the entities that need to be extracted. Finally, RobotReviewer/RobotSearch categorises biomedical articles according to their assessed risk of bias and provides sentences that support these evaluations.

AI approach: The tools perform the NER tasks with a variety of algorithms. ExaCT applies a two-step approach Kiritchenko et al. (2010). First, it identifies sentences that are predicted to be similar to those in the pre-trained model, using an SVM classifier. Next, it extracts from these sentences a set of entities via a rule-based approach, relying on the 21 CONSORT categories Moher et al. (2001). Dextr employs a Bidirectional Long Short-Term Memory - Conditional Random Field (BI-LSTM-CRF) neural network architecture (Nowak and Kunstman 2019; Walker et al. 2022). Iris.ai does not share specific information about the method used for NER. Finally, RobotReviewer/RobotSearch employs an ensemble classifier, combining multiple CNN models Krichen (2023) and soft-margin Support Vector Machines Boser et al. (1992) in order to categorise articles based on their risk of bias assessment (either low or high/unclear) and concurrently extract sentences that substantiate these judgements. The final score for each predicted sentence is the average of the scores obtained from each model.
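The score averaging used by an ensemble of this kind can be sketched in a few lines. The example below is illustrative only: the functions `ensemble_scores` and `top_sentences`, the sentences, and the per-model scores are hypothetical, and the underlying CNN and SVM models are replaced by fixed numbers.

```python
from statistics import mean


def ensemble_scores(per_model_scores):
    """Average the scores that each model assigns to each sentence.

    per_model_scores holds one list per model, aligned by sentence index.
    """
    return [mean(scores) for scores in zip(*per_model_scores)]


def top_sentences(sentences, per_model_scores, k=1):
    """Return the k sentences with the highest averaged score."""
    ranked = sorted(zip(ensemble_scores(per_model_scores), sentences), reverse=True)
    return [sentence for _, sentence in ranked[:k]]


# Invented sentences and scores standing in for the output of two models.
sentences = [
    "Allocation was concealed using sealed envelopes.",
    "Participants were recruited from three clinics.",
]
scores_by_model = [
    [0.9, 0.2],  # e.g. scores from a hypothetical CNN
    [0.7, 0.4],  # e.g. scores from a hypothetical linear model
]
print(top_sentences(sentences, scores_by_model, k=1))
```

Averaging treats all models as equally reliable; a weighted average would be a natural variation when some models are known to perform better than others.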

Input data and text representation: The majority of the models accept the full-text document as input, except for Dextr, which utilises only titles and abstracts. The format requirements vary across these tools. Dextr, Iris.ai, and RobotReviewer/RobotSearch process papers in PDF format. Dextr also supports input in RIS or EndNote formats. ExaCT encodes papers as HTML. The methods for text representation also differ across tools. Dextr encodes text using two pre-trained embeddings: GloVe Pennington et al. (2014) (Global Vectors for Word Representation) and ELMo Peters et al. (2018) (Embeddings from Language Models). Iris.ai utilises the same fingerprint representation Wu et al. (2018) discussed in Sect. 5.2.1. ExaCT uses a simple BoW representation. Finally, RobotReviewer/RobotSearch uses BoW for the linear model and an embedding layer for the CNN model.

5.2.3 General features

Table 4 provides an overview of the proportion of tools covering each of the 23 features. These features are categorised across the six categories outlined in Sect. 5.1.2. The Table in Appendix (Table 8) provides a more general analysis, detailing how the 21 tools address the 23 features.

The functionality category exhibits the highest degree of implementation, with 5 out of 7 features being implemented by all the tools. The remaining two features, namely authentication and project auditing, are implemented by 18 and 9 tools, respectively. The other categories present a more heterogeneous scenario. Within the retrieval category, only reference importing is implemented by all tools. Interestingly, no tools provide the ability to automatically retrieve the reference of a paper from bibliographic databases. The tools also offer limited support for the features within the discovery category. Notably, approximately 50% of the tools lack basic functionalities such as reference deduplication, options for manual annotation and exclusion of references, and features for labelling and commenting on the references. Regarding the documentation category, only 4 tools (DistillerSR, Nested Knowledge, Rayyan, and Covidence) provide the PRISMA diagram of the entire SLR process or the protocol templates. Significantly, LitSuggest stands out as the sole tool providing a living review, enabling users to easily update their earlier analyses by automatically adding recent papers that exhibit a high degree of similarity to the previously selected ones. In terms of economic aspects, the majority of the tools (13 out of 21) are accessible for free.

In summary, only eight of the evaluated tools implement at least 70% of the designated features. Specifically, DistillerSR, Nested Knowledge, Dextr, and ExaCT lead with the highest feature coverage at 82%. They are followed by PICOPortal and Rayyan, each with 78%, EPPI-Reviewer with 74%, and SWIFT-Active Screener with 70%. Among the remaining 13 tools, eight cover between 50 and 70% of the features, while the last five cover between 35 and 50%.

5.3 Outstanding SLR tools

Comparing our results with the previous studies in the literature (Marshall et al. 2014; Van der Mierden et al. 2019; Harrison et al. 2020; Cowie et al. 2022), we observed that many SLR tools have undergone significant development and advancements in the last few years. Particularly, the features in the functionality category have received more attention and are now considered standard functions. These include capabilities for tracking and auditing projects, multiple user support, and multiple user roles. Additionally, the management of references has seen considerable enhancement. As discussed in the previous section, the more complete tools in terms of feature coverage include DistillerSR, Nested Knowledge, Dextr, ExaCT, PICOPortal, and Rayyan. However, in practical scenarios, the selection of these tools should be guided by the user’s specific needs and use cases. In the following, we provide a brief analysis of some tools that our evaluation has identified as particularly suited to certain scenarios. However, it is important to recognise that there is no single best solution in this complex landscape. Therefore, we encourage researchers to experiment with these tools and determine which ones best meet their requirements.

In non-biomedical fields, ASReview stands out for its comprehensive range of methods for selecting relevant articles, including Logistic Regression, Random Forest, Naive Bayes, and Neural Networks classifiers. This makes it a potentially optimal choice for this phase of research. Iris.ai and Colandr are also strong contenders that may enable the greatest flexibility since they allow users, respectively, to cluster documents based on their semantic similarity and to create specific categories for paper classification. Moreover, both platforms feature user-friendly interfaces that facilitate the analysis of the resulting data. These features are especially beneficial for exploratory studies aiming to progressively deepen understanding of a domain.

In the biomedical field, Covidence, PICOPortal, EPPI-Reviewer, RobotReviewer/RobotSearch, and Rayyan are all reliable tools. Covidence, PICOPortal, and EPPI-Reviewer also have the capability to identify Randomised Controlled Trials (RCTs) using a predefined classification model. Among these, EPPI-Reviewer offers the most flexibility, since it can be customised to identify a broader range of studies, including systematic reviews, economic evaluations, and research related to COVID-19. RobotReviewer/RobotSearch stands out as the only tool that offers automated bias analysis. This feature makes it an ideal choice for researchers who require this specific functionality. Finally, Rayyan offers a suite of biomedical features, such as PICO highlighting and filtering, the capability to extract study locations, and topic extraction enriched with MeSH terms. It also allows users to define a set of biomedical keywords and phrases for inclusion and exclusion, which is beneficial for identifying specific RCTs.

5.4 Threats to validity

This section outlines various threats to the validity of this study. We examined four primary categories of validity threats: internal validity, external validity, construct validity, and conclusion validity Wohlin et al. (2012). We considered and mitigated them as follows.

Internal validity. Internal validity in systematic literature reviews concerns the rigour and correctness of the review’s methodology. To ensure the replicability of our review, we meticulously developed a methodologically sound protocol, which incorporated systematic and transparent procedures for the selection of studies and software tools. We also adopted the PRISMA guidelines, known for their robustness and reproducibility (the PRISMA checklist is available in the supplementary material). The protocol for this SLR was developed by the first author and reviewed by co-authors to establish a consensus before initiating the review process. We identified relevant tools using two prominent software repositories (the Systematic Literature Review Toolbox and the Comprehensive R Archive Network) supplemented by manually searching relevant surveys for additional tools. Additionally, we employed a snowballing search strategy to further extend and validate our results. The selection process involved multiple stages to ensure rigorous evaluation and minimise selection bias. Initially, the first author filtered the tools based on the description in the repositories. Next, all authors participated in a more thorough review of the shortlisted tools. In cases where information was unclear or missing, the first author contacted the tool developers directly through email or online interviews. All related publications were thoroughly reviewed to inform the development of the features. Despite the systematic process, biases could still emerge due to the subjective decisions made by researchers when applying inclusion and exclusion criteria. To mitigate this, we collaboratively reviewed the inclusion or exclusion of the shortlisted tools, thereby reducing the influence of individual biases. Another potential threat to the internal validity arises from the fact that the SLR Toolbox has been offline since March 2024. Although the developers have indicated that it will be operational again soon, there is a possibility that the tool may not be available for future surveys. Nevertheless, we believe that including its results remains valuable, given that this system was utilised in five (Kohl et al. 2018; Van der Mierden et al. 2019; Harrison et al. 2020; Cowie et al. 2022; Robledo et al. 2023) of the eight previous surveys identified in Sect. 3.

In conclusion, while the replication of this study by another research team might yield slight variations in the tools and studies included, the robust, systematic methodology employed and the collaborative nature of the review process lend a high degree of internal validity to our findings.

External validity. External validity refers to the degree to which the findings of this systematic literature review are generalisable across various environments and domains. To mitigate threats to external validity, we used multiple sources for selecting the SLR tools. Despite these efforts, the selection of search engines and the formulation of search strings might have impacted the completeness of the tool identification. It is possible that some tools were missed because they were not described using the selected keywords or were absent from the targeted repositories and previous surveys. To counteract this limitation, several strategies were implemented. First, search strings were iteratively refined to enhance coverage and ensure a more exhaustive identification of potential tools. Second, a thorough snowballing method was employed. Finally, interviews were conducted with developers of several tools to further ensure the inclusiveness of the tool selection.

Concerning the inclusion and exclusion criteria, we identified two main potential threats to external validity. The first threat stems from the exclusion of tools that do not feature user interfaces. This criterion was set to focus on tools that are readily adoptable by the average researcher. However, earlier studies involving prototypes without interfaces still align with many of our findings. For instance, these studies also conclude that most SLR tools employ relatively outdated AI techniques Schmidt et al. (2023, 2023), as we will discuss in more detail in Sect. 6.1. The second threat concerns the exclusion of tools that were either under maintenance and unavailable for evaluation or had not been updated in the past ten years. This exclusion criterion might have omitted tools that, despite being inaccessible at the time of the review, could otherwise fulfil the inclusion criteria. These exclusions could potentially restrict the generalisability of our findings.

Construct validity. Construct validity concerns the extent to which the operational measures used in a study accurately represent the concepts the researchers intend to investigate. In our systematic literature review, a primary concern is whether the 34 features identified to evaluate SLR tools cover all relevant characteristics, particularly concerning the integration of Artificial Intelligence. To address potential gaps identified from previous studies, we developed a set of 11 AI-specific features aimed at capturing aspects previously overlooked. Despite these efforts to create a thorough framework for analysis, AI remains a rapidly evolving field, and our feature set might not encapsulate all current and emerging dimensions. To mitigate this issue, the authors collaboratively developed the feature definitions, striving to create a comprehensive representation that incorporates both established dimensions identified in prior surveys and emerging trends noted in recent publications and software developments. Nevertheless, it is acknowledged that some relevant aspects may still be absent from our analysis.

Conclusion validity. Conclusion validity in systematic literature reviews refers to the extent to which the conclusions drawn from the review are supported by the data and are reproducible. In our review, we focused on mitigating threats to conclusion validity by employing a systematic process for identifying relevant software tools and extracting pertinent data for analysis. To ensure accuracy and consistency in data collection, we developed a data extraction form based on the general and AI-specific features identified during our meta-review and feature analysis. The first author applied this form to a small subset of tools to test its effectiveness. Subsequently, all authors independently used the same form to extract data for the same subset of tools. Comparative analysis of the extracted data revealed a high degree of consistency among authors, thereby validating the data extraction process. Following this validation, the first author continued with the data extraction for the remaining tools. Throughout the data analysis and synthesis phases, we engaged in multiple rounds of discussions to refine our categorisation and representation of the features. This collaborative approach aimed to reduce bias and enhance the reliability of our findings.

A persistent threat to conclusion validity in the context of software tool reviews is the dynamic nature of software development Ampatzoglou et al. (2019). Software tools frequently evolve, acquiring new functionalities that may not be documented in the published literature. To address this, we supplemented our literature review with comprehensive examinations of websites, tutorials, and relevant academic papers. Additionally, we reached out directly to developers to obtain updated or missing information. This proactive approach frequently provided crucial clarifications and additions, which we incorporated into our final review, thereby strengthening the reliability of our conclusions. However, the field of AI is evolving rapidly, particularly in areas such as Generative AI Brynjolfsson et al. (2023) and Large Language Models Min et al. (2023). As a result, it is expected that many tools will soon incorporate new AI features. Therefore, while our findings offer a snapshot of the current landscape, they may not fully represent the ongoing advancements.

6 Research challenges

The current generation of SLR tools can demonstrate significant effectiveness when utilised properly. Nonetheless, these tools still lack crucial abilities, which hampers their widespread adoption among researchers. This section will discuss some of the key research challenges identified from our analysis that the academic community will need to address in future work. It is not intended to provide a systematic review like the one in Sect. 5, but rather to explore some of the most compelling research directions and open challenges, aiming to inspire researchers in this area. Section 6.1 analyses the current challenges associated with integrating AI within SLR tools and discusses the potential social, ethical, and legal risks associated with the resulting systems. Section 6.2 addresses usability concerns, which represent a major barrier to the adoption of these tools. Finally, Sect. 6.3 discusses the challenges in establishing a robust evaluation framework and suggests some best practices.

6.1 AI for SLR

As previously discussed, several SLR tools now incorporate AI techniques, in particular to support the screening and extraction phases. However, current approaches still suffer from several limitations. Consistent with prior research (Kohl et al. 2018; Burgard and Bittermann 2023; Schmidt et al. 2021), our study reveals that the majority of SLR tools still depend on possibly outdated methodologies. This includes the use of basic classifiers, which are no longer considered state-of-the-art for text and document classification. Likewise, several tools continue to employ BoW methods for text representation, although some of the most recent ones (Walker et al. 2022; Van De Schoot et al. 2021; Marshall et al. 2018) have shifted towards adopting word and sentence embedding techniques, such as GloVe Pennington et al. (2014), ELMo Peters et al. (2018), SciBERT Beltagy et al. (2019), and Sentence-BERT Reimers and Gurevych (2019). Therefore, the first interesting research direction regards incorporating advanced NLP technologies, particularly the rapidly evolving Large Language Models (LLMs) Min et al. (2023). LLMs represent the state of the art for many NLP tasks and have demonstrated remarkable proficiency in classifying and extracting information from documents (Dunn et al. 2022; Xu et al. 2023). However, integrating these models presents several challenges Ji et al. (2023). Firstly, LLMs are trained on general data, resulting in less effective performance in specialised fields and languages with fewer resources. Secondly, LLMs may generate inaccurate or fabricated information, known as “hallucinations”. Finally, understanding the decision-making process of LLMs is complex, and their outputs can be inconsistent. A possible solution to these issues is the integration of LLMs with different types of knowledge bases that can provide verifiable factual information Meloni et al. (2023). This is typically achieved through the Retrieval-Augmented Generation (RAG) framework Lewis et al. (2020), which allows LLMs to retrieve information from a collection of documents or a knowledge base. For example, the recent CORE-GPT Pride et al. (2023) utilises a vast database of research articles to assist GPT-3 Brown et al. (2020) and GPT-4 OpenAI (2023) in generating accurate answers. In addition, the extraction phase in particular could be enhanced by also incorporating modern information extraction methods such as event extraction Li et al. (2022), open information extraction Liu et al. (2022), and relation prediction Tagawa et al. (2019).
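The retrieval step of a RAG pipeline can be illustrated with a deliberately simple sketch. It is not the method of CORE-GPT or of any specific system: the functions `tokenize`, `retrieve`, and `build_prompt` are hypothetical, the token-overlap ranking is a stand-in for a real retriever (for instance a dense or BM25 index), and the call to the language model itself is omitted.

```python
def tokenize(text):
    """Very rough tokeniser: lowercase and split on whitespace."""
    return set(text.lower().split())


def retrieve(question, documents, k=2):
    """Rank documents by token overlap with the question and keep the top k."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d["text"])), reverse=True)
    return ranked[:k]


def build_prompt(question, documents, k=2):
    """Assemble a prompt that asks the model to answer only from retrieved passages."""
    passages = retrieve(question, documents, k)
    context = "\n".join(f"[{d['id']}] {d['text']}" for d in passages)
    return (
        "Answer using only the passages below and cite their ids.\n"
        f"{context}\n"
        f"Question: {question}"
    )


# Invented passages standing in for a collection of research articles.
documents = [
    {"id": "D1", "text": "svm classifiers are widely used for citation screening"},
    {"id": "D2", "text": "coral reefs decline under ocean warming"},
    {"id": "D3", "text": "active learning reduces screening workload"},
]
question = "which classifiers are used for citation screening"
print(build_prompt(question, documents, k=2))
```

Grounding the prompt in retrieved passages and requiring citations of their identifiers is one way to make the generated answer checkable against its sources, which addresses part of the hallucination problem discussed above.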

A second interesting research direction regards interpretability. Indeed, current classification methods for the screening phase typically operate as ‘black boxes’, not giving much additional information on why a certain paper was deemed as relevant. One important research challenge here is to improve this step by including interpretability mechanisms such as fact-checking Vladika and Matthes (2023) or argument mining Lawrence and Reed (2020) to provide further insights. Such techniques would provide deeper insights into the screening process, enhancing the reliability and credibility of the tools. In the field of explainable AI Linardatos et al. (2020), significant research has been conducted to improve our understanding of the processes models use to generate specific outputs. Specifically, in the context of LLMs, various prompting techniques have been developed to enhance the models’ ability to explain their reasoning and justify their decisions. These techniques include Chain-of-Thought (CoT) Wei et al. (2023), Tree of Thoughts (ToT) (Long 2023; Yao et al. 2023) and Graph of Thoughts (GoT) Besta et al. (2023).

A third promising research direction involves the use of semantic technologies Patel and Jain (2021), particularly knowledge graphs, to enhance the characterisation and classification of research papers Salatino et al. (2022). Knowledge graphs consist of large networks of entities and relationships that provide machine-readable and understandable information about a specific domain following formal semantics Peng et al. (2023). They typically organise information according to a domain ontology, which provides a formalised description of entity types and their relationships Hitzler (2021). In recent years, we have seen the emergence of several knowledge graphs that offer machine-readable, semantically rich, interlinked descriptions of the content of research publications (Jaradeh et al. 2019; Salatino et al. 2019; Angioni et al. 2021; Wijkstra et al. 2021). For instance, the latest iteration of the Computer Science Knowledge Graph (CS-KG)Footnote 9 details an impressive array of 24 million methods, tasks, materials, and metrics automatically extracted from approximately 14.5 million scientific articles Dessí et al. (2022). Similarly, the Open Research Knowledge Graph (ORKG)Footnote 10 provides a structured framework for describing research articles, facilitating easier discovery and comparison Jaradeh et al. (2019). ORKG currently includes about 25,000 articles and 1,500 comparisons. This survey is also available in ORKG (https://orkg.org/review/R692116). In a similar vein, NanopublicationsFootnote 11 allow the representation of scientific facts as knowledge graphs Groth et al. (2010). This method has been recently applied to support “living literature reviews”, which can be dynamically updated with new findings Wijkstra et al. (2021). The integration of these knowledge bases offers significant possibilities. It allows for a more detailed and multifaceted analysis of document similarity, and aids in identifying documents related to specific concepts. For instance, it would enable the retrieval of articles that mention particular technologies or that utilise specific materials.
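As an illustration of this kind of concept-based retrieval, the sketch below stores a handful of statements as subject-predicate-object triples and queries them. The triples, predicate names, and paper identifiers are hypothetical and do not reflect the actual schema or content of CS-KG, ORKG, or Nanopublications. A real deployment would more plausibly query a triple store than an in-memory list.

```python
# Hypothetical triples (subject, predicate, object); not taken from CS-KG or ORKG.
triples = [
    ("paper:1", "usesMethod", "transformer"),
    ("paper:1", "addressesTask", "citation screening"),
    ("paper:2", "usesMethod", "support vector machine"),
    ("paper:2", "addressesTask", "citation screening"),
    ("paper:3", "usesMaterial", "graphene"),
]


def papers_matching(triples, predicate, obj):
    """Return the sorted subjects linked to obj through predicate."""
    return sorted({s for s, p, o in triples if p == predicate and o == obj})


def shared_concepts(triples, paper_a, paper_b):
    """Return the (predicate, object) pairs that two papers have in common."""
    def describe(paper):
        return {(p, o) for s, p, o in triples if s == paper}

    return describe(paper_a) & describe(paper_b)


# Retrieve papers by task or by material.
screening_papers = papers_matching(triples, "addressesTask", "citation screening")
graphene_papers = papers_matching(triples, "usesMaterial", "graphene")

# Compare two papers along the concepts they share rather than their raw text.
overlap = shared_concepts(triples, "paper:1", "paper:2")
```

Because each statement is typed by its predicate, two papers can be judged similar in the task they address while differing in the method they use, which is the multifaceted view of similarity mentioned above.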

Other SLR phases, such as appraisal and synthesis, have received relatively little attention. This gap offers a substantial research opportunity for the application of AI techniques in these areas. In the appraisal phase, incorporating AI-driven scientific fact-checking tools to evaluate the accuracy of research claims could provide significant benefits Vladika and Matthes (2023). For the synthesis phase, the use of summarisation techniques Altmami and Menai (2022) and text simplification methods Sikka and Mago (2020) has the potential to enhance both the efficiency of the analysis and the clarity of the final output.

Finally, we recommend that the research community participate in scientific events and initiatives in this field, such as ICASRFootnote 12 (Beller et al. 2018; O’Connor et al. 2018, 2019, 2020), ALTARFootnote 13 Di Nunzio et al. (2022), and the MSLR Shared TaskFootnote 14 Wang et al. (2022). These initiatives are focused on discovering the most effective ways in which AI can improve the SLR stages.

6.1.1 AI impact assessment

The importance of evaluating the impact of AI systems has grown significantly, particularly with the recent enactment of the European Union’s Artificial Intelligence Act, which establishes specific requirements and obligations for AI providers. In this context, it is crucial to assess the potential impact of AI-enhanced SLR tools, considering both the relevant literature and the new regulatory framework (Renda et al. 2021; Ayling and Chapman 2022).

Stahl et al. (2023) propose an impact assessment model consisting of two main steps: (1) determining whether the AI tool is expected to have a social impact, and (2) identifying the stakeholders who might be affected by the AI system. We can apply this model to the SLR tools discussed in this survey.

Regarding social impact, SLR tools aim to support the identification, analysis, and synthesis of findings that are pertinent to specific research questions. The information generated by these tools is typically incorporated into research papers and, in some cases, may influence policy development Birkland (2019). The primary concern here is the dissemination of inaccurate scientific information and how such information might be used by the community and policymakers.

Regarding potentially impacted stakeholders, we consider three main groups. The first group consists of authors who use these tools for literature reviews. These individuals face the risk of including incorrect studies and drawing inaccurate conclusions, potentially jeopardising the quality of their work and their careers. To mitigate these risks, it is crucial to use tools that demonstrate high performance and transparency, especially in terms of the datasets used and potential biases. Additionally, these tools should provide mechanisms that allow users to inspect, interpret, and override the tool’s choices. The second group includes the readers of these literature reviews. They are primarily at risk of being exposed to and subsequently disseminating incorrect or biased information. In addition to the strategies previously mentioned, the scientific community itself plays a crucial role in mitigating this risk by reproducing and correcting earlier results Munafò et al. (2017). The third group becomes relevant when policy development is involved. In these instances, targeted populations might be affected by policies based on incorrect or biased analyses Young (2005). To mitigate this risk, policymakers should conduct additional analyses to verify the accuracy of the information and use multiple sources.

In conclusion, while SLR tools carry some inherent risks, these can generally be managed through responsible use and adherence to validation and correction strategies Myllyaho et al. (2021). A major challenge remains in enhancing the trustworthiness of these tools through robust evaluation mechanisms O’Connor et al. (2019). As we will discuss in Sect. 6.3, the current landscape lacks high-quality evaluation frameworks.

In the context of the recent EU Artificial Intelligence Act,Footnote 15 it is important to note that if we classify SLR tools as “specifically developed and put into service for the sole purpose of scientific research and development”, they would be explicitly exempt from this legislation. Nevertheless, it is still worthwhile to examine how these tools might be categorised under the four risk categories outlined by the AI Act: Unacceptable Risk, High Risk, Limited Risk, and Minimal Risk. After a detailed analysis of the current draft of the legislation, it seems that a typical AI-enhanced SLR tool would most likely be classified as ‘Limited Risk’. This classification primarily concerns potential issues regarding transparency Larsson and Heintz (2020), which may become more pronounced as these tools begin to utilise generative AI Brynjolfsson et al. (2023). According to the AI Act, these systems should be “developed and used in a way that allows appropriate traceability and explainability while making humans aware that they communicate or interact with an AI system as well as duly informing users of the capabilities and limitations of that AI system and affected persons about their rights.”

6.2 Usability

The current generation of SLR tools remains underutilised Marshall et al. (2018). Most researchers continue to depend on manual methods, often supported by software like Microsoft Excel, or reference management tools Marshall et al. (2015) such as ZoteroFootnote 16 and Mendeley.Footnote 17 Recent studies (Van Altena et al. 2019) suggest that this limited usage primarily stems from usability issues, in addition to a few other relevant factors: (i) a steep learning curve, as researchers may be unfamiliar with the tools’ functionalities Scott et al. (2021), (ii) misalignment with user requirements, as many of these tools deviate from the guidelines set forth by SLR protocols and exhibit limited compatibility with other software systems (Thomas 2013; Arno et al. 2021), (iii) distrust, as there is uncertainty about the reliability and the mechanisms of these tools (O’Connor et al. 2019; Haddaway et al. 2020), and (iv) financial obstacles, predominantly arising from licensing expenses, along with feature restrictions in trial versions Dell et al. (2021). This suggests that usability and accessibility should be prioritised in the design process to encourage wider adoption of these tools (Hassler et al. 2014, 2016; Al-Zubidy et al. 2017).

The literature has given limited attention to the usability of SLR tools. To the best of our knowledge, only a few studies have focused on this aspect. For instance, Harrison et al. (2020) conducted an experiment where six researchers were tasked with using six different tools in trial projects. Findings indicated that two tools also presented in this study, Rayyan and Covidence, were perceived as the most user-friendly. Van Altena et al. (2019) conducted a survey involving 81 researchers about the usage of SLR tools and found that the primary reasons cited by participants for discontinuing the use of a tool included poor usability (43%), insufficient functionality (37%), and incompatibility with their workflow (37%). In the same study, a set of SLR tools was assessed using the System Usability Scale (SUS) questionnaire Lewis (2018). The tools demonstrated comparable usability, with scores ranging from 66 to 77. These scores correspond to a ‘C’ to ‘B’ grade, indicating satisfactory but not outstanding performance.
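For readers unfamiliar with how SUS scores such as those above are obtained, the following sketch applies the standard SUS scoring rule to one respondent's answers. It assumes the usual ten-item questionnaire on a 1 to 5 scale, with odd-numbered items positively worded and even-numbered items negatively worded. The function name and the example responses are hypothetical and are not data from the cited study.

```python
def sus_score(responses):
    """Compute the SUS score (0-100) from ten responses on a 1-5 scale."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS expects ten integer responses between 1 and 5")
    total = 0
    for index, response in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 (positively worded): contribution is response - 1.
            total += response - 1
        else:
            # Items 2, 4, 6, 8, 10 (negatively worded): contribution is 5 - response.
            total += 5 - response
    # The summed contributions (0-40) are rescaled to the 0-100 range.
    return total * 2.5


# Hypothetical respondent: agrees with positive items, disagrees with negative ones.
example = sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2])
```

A tool-level score is normally the mean of such per-respondent scores, so the range reported above summarises averages across participants rather than individual answers.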

Therefore, a critical challenge in this field lies in the need for more comprehensive research focused on usability. This involves conducting in-depth studies to understand the various aspects of usability, such as effectiveness, efficiency, engagement, error tolerance, and ease of learning Quesenbery (2014). The goal is to gather empirical data and user feedback that can provide insights into how users interact with tools, identify common usability issues, and understand the specific needs and preferences of different user groups. Based on these findings, it is essential to develop robust, evidence-based usability guidelines Schall et al. (2017). These guidelines should offer clear and actionable recommendations for designing user-friendly interfaces and functionalities in future tools.

6.3 Evaluation of SLR tools

A robust evaluation framework is essential for comparing SLR tools and supporting their continuous improvement National Academies of Sciences (2019). In the following subsections, we will first discuss the shortcomings of existing evaluation methods and then propose a set of best practices as an initial step towards developing a high-quality evaluation framework.

6.3.1 Lack of standard evaluation frameworks

The assessment of SLR tools presents a significant challenge due to the absence of standard evaluation frameworks and established benchmarks. Existing literature includes various evaluations of SLR tools that focus on individual phases of the SLR process (Liu et al. 2018; Yu et al. 2018; Burgard and Bittermann 2023). However, these evaluations are not directly comparable due to variations in datasets and evaluation methodologies. Moreover, most SLR tools are tested using small, custom datasets, which may not provide a realistic representation of their performance in typical usage scenarios Burgard and Bittermann (2023). Additionally, leading commercial providers of SLR tools typically do not make evaluation data available, which complicates comparisons with both existing competitors and new prototypes developed by the research community.

Another concern is related to the performance metrics. Indeed, canonical metrics like precision, recall, and F1-score may not suffice to assess these tools. For instance, for the screening phase, it is critical to minimise the costs of screening while preserving a high recall. For this reason, it has been suggested to adopt the F2 score Sumbul et al. (2021) instead of the F1 score. The F2 score is computed as the weighted harmonic mean of precision and recall. In contrast with the F1 score, which assigns equal importance to precision and recall, the F2 score places greater emphasis on recall than on precision. The Work Saved over Sampling (WSS) Van De Schoot et al. (2021) is another metric that has proved to be quite effective in assessing the screening phase Burgard and Bittermann (2023). However, Kusa et al. (2022) point out that this measure depends on the number of documents and the proportion of relevant documents in a dataset, making it difficult to compare the performance of different screening tasks performed over different systematic reviews. To address this, they introduced the Normalised Work Saved over Sampling (nWSS) metric Kusa et al. (2023), which facilitates the comparison of paper screening performance across various datasets.
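The two metrics can be illustrated with a short sketch. The F-beta formula is the standard weighted harmonic mean, with beta = 2 giving the F2 score. For WSS, the code assumes the commonly used formulation WSS@r = (TN + FN) / N - (1 - r), which is not spelled out in the text above and should be read as an assumption. The function names and the example counts are hypothetical.

```python
def f_beta(precision, recall, beta=2.0):
    """Weighted harmonic mean of precision and recall (beta=2 gives F2)."""
    if precision == 0 and recall == 0:
        return 0.0
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


def wss(true_negatives, false_negatives, total, recall_level=0.95):
    """Work Saved over Sampling at a given recall level.

    Assumed formulation: the fraction of documents the reviewer does not
    have to read, minus the fraction that random sampling would save at
    the same recall level.
    """
    return (true_negatives + false_negatives) / total - (1 - recall_level)


# Hypothetical screening result: recall is higher than precision.
f1 = f_beta(0.5, 0.9, beta=1.0)
f2 = f_beta(0.5, 0.9, beta=2.0)

# Hypothetical counts: 1000 candidate papers, 600 correctly excluded, 5 missed.
saved = wss(true_negatives=600, false_negatives=5, total=1000)
```

With these example values the F2 score is higher than the F1 score because recall exceeds precision, which matches the intent of rewarding tools that miss few relevant papers. The dependence of WSS on the total count and on the class balance is visible in its definition, which is the comparability issue that motivates nWSS.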