Abstract

Recent advancements in the field of Artificial Intelligence (AI) have sparked renewed interest in how organizations can leverage and gain value from these technologies. Despite the considerable hype around AI, recent reports indicate that only a very small number of organizations have managed to implement these technologies successfully in their operations. While many early studies and consultancy-based reports point to factors that enable adoption, there is a growing understanding that AI adoption is better understood as a process of maturation. Building on this more nuanced view of adoption, this study examines the diffusion of AI through a maturity lens. To explore how organizations diffuse AI in their operations, we conducted a two-phased qualitative case study. During the first phase, we interviewed AI experts to gain insight into the diffusion process and some of the key challenges organizations face. During the second phase, we collected data from three organizations at different stages of AI diffusion. Based on a synthesis of the results and a cross-case analysis, we developed a capability maturity model for AI diffusion (AICMM), which was then validated and tested. The results highlight some common challenges that arise along the path of AI diffusion, as well as ways to mitigate them. From a research perspective, our results show that there are core tasks associated with early AI diffusion that gradually evolve as project maturity grows. For professionals, we present tools for identifying the current state of maturity and provide practical guidelines on how to further implement AI technologies in their operations to generate business value.


1 Introduction

The deployment of emerging digital technologies within organizations has become a central area of focus and a key aspect of competitive differentiation. While there has been much interest in novel technologies over the past decades, the last few years have seen a growing emphasis on leveraging AI (Enholm et al., 2022). Despite some promising early results from industry forerunners, most companies are still struggling to deploy AI in key operations and generate value from such investments (Åström et al., 2022). This issue has been attributed to the fact that value generation from AI is a lengthy process of diffusion that entails different phases of maturity, each with its own challenges (Sadiq et al., 2021). The problem becomes more complex when considering the fast pace at which organizations need to assimilate new technologies such as AI into their operations, as well as the inherent complexity of implementing them (Boden, 2016). Within the AI community, there is also increasing pressure from major vendors and consultancy firms for organizations to adopt AI rapidly to maintain their competitive position (Daugherty & Bilts, 2021; Krishna et al., 2021; Ransbotham et al., 2020). Yet the adoption and implementation of AI often create friction for organizations, and diffusion is subject to many forces of resistance that need to be managed (Ransbotham et al., 2021). As such, AI is more than an auxiliary technology; its adoption amounts to an organizational transformation that necessitates coordinated action at different levels (VentureBeat, 2021). Nevertheless, there is still limited knowledge about the challenges organizations face while diffusing AI in their operations and, as a result, limited guidance on how to increase an organization’s AI maturity to realize value (Holmström, 2022).

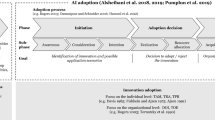

In this paper, we build on the definition of AI as a "system's ability to interpret external data correctly, to learn from such data, and to use those learnings to achieve specific goals and tasks through flexible adaptation" (Haenlein & Kaplan, 2019, p. 5). While the popular press has paid considerable attention to AI as a revolutionary technology, it is important to highlight that the term describes a set of varied technologies such as machine learning (ML), deep learning (DL), and others (Benbya et al., 2020). Since the first conceptualization of AI by McCarthy et al. (1955), the notion has evolved considerably, morphing from a mechanical and static concept into a dynamic one with implications for value. In the domain of organizational applications, AI has been suggested to drive value creation in areas such as customer engagement, decision-making, and process automation (Davenport & Ronanki, 2018). While some studies have identified positive relationships between the degree of AI investment and key organizational outcomes, there is still limited understanding of how organizations mature in their use of AI and through what process they can eventually realize such gains (Alsheibani et al., 2020; Enholm et al., 2022). Within the field of information systems, there is a recognition that, as with any technology, AI requires a process of diffusion and assimilation into organizational practices before it can result in business value (Fichman et al., 2014). Reports from practice also highlight this issue, with a recent white paper from Gartner (2024) underscoring the challenges organizations face when scaling and diffusing AI in their operations.

In this study, we argue that it is important to understand how organizations develop AI maturity, and we postulate that there will be different paths and choices concerning how organizations decide to leverage AI during the diffusion process. As such, the main assumption of this work is that there will be equifinality in terms of how organizations are able to generate value from AI investments. To date, a significant body of research has investigated maturity models for technology diffusion (Remane et al., 2017). Yet one of the key limitations of many of these studies is that they do not consider the intrinsic characteristics of the digital artifact and how these may influence diffusion approaches (Sadiq et al., 2021). We know from prior studies that each novel technology comes with a unique set of implementation and deployment challenges (Armstrong & Sambamurthy, 1999). Studies of the organizational use of AI have identified some issues that emerge during the diffusion phase (Trocin et al., 2023). Nevertheless, we still know very little about how the maturity of organizations evolves as they gradually incorporate AI into their operations. We approach this topic by first conducting an extensive review of the current body of literature to identify and categorize the different aspects that inhibit the diffusion of AI. We use this as a starting point to develop the following research questions:

- RQ1: What are the challenges that organizations face when diffusing AI in their operations?

- RQ2: What are the maturity phases of AI diffusion and how can organizations overcome the challenges that emerge at each level?

To answer RQ1, we conduct an extensive review of past empirical research on AI adoption and use within organizations. In addition, we synthesize existing knowledge about maturity models and construct an outline of the key dimensions and aspects that need to be considered during technology assimilation. Subsequently, to answer RQ2, we conduct a series of interviews with domain experts concerning their views on AI diffusion and the key challenges that emerge along the path to adoption and use. Based on these insights, we develop a first version of a conceptual capability maturity model of AI diffusion. We then build on three case studies of organizations at different stages of AI adoption to validate our capability maturity model. In addition, we apply a cross-case analysis approach to identify common themes and challenges that organizations encountered when deploying AI, as well as best practices to overcome them. We present these in the form of a capability maturity model that depicts the main stages of diffusion, along with commonly occurring obstacles. The article concludes with a discussion of ways in which organizations can mature their AI diffusion process and realize business value while avoiding common pitfalls.

2 Theoretical Background

To develop a comprehensive understanding of the key obstacles organizations face when diffusing AI in their operations, and to map how these obstacles relate to different levels of maturity, we synthesized the latest research in the domain. Subsequently, these findings were used to develop the foundations of a novel capability maturity model, following an iterative approach (Bryman, 2012; Saunders et al., 2009). As a first step, it was important to understand the organizational setting for AI in order to capture any nuances and contingencies of the technology as it is diffused into organizational processes. In the second step, we surveyed maturity models and capability frameworks to examine whether we had failed to include any important aspects. Based on this, we sketched a first draft of our AI capability maturity model (AICMM) for diffusion, which we later used in our empirical study.

2.1 AI in Organizations

The rapid development of AI techniques and technologies over the last few years has resulted in increased interest in organizational deployment (Collins et al., 2021). As a result, a myriad of techniques is now available for organizations to draw on when deciding how to enhance their operations. AI has already become an integral part of organizations' digital transformation projects, and many organizations, whether private or public, are now piloting AI projects (Brock & Von Wangenheim, 2019). Nevertheless, a key challenge for organizations adopting AI is managing the process of diffusing and assimilating it into new or revamped operations (Collins et al., 2021). Prior studies have shown that the problems organizations encounter when leveraging AI are often not technical in nature (Enholm et al., 2022). Adding to this complexity, obstacles to the deployment of AI can arise at different stages of diffusion and often concern decisions that need to be taken at various levels within the organization (Alsheibani et al., 2018). Therefore, it is important to develop a holistic understanding of the different factors pertinent to diffusion in order to examine how AI deployments are realized in practice (Sestino & De Mauro, 2021).

When it comes to the uses AI can have in organizational operations, research has drawn a distinction between two main types: automation and augmentation (Enholm et al., 2022; Raisch & Krakowski, 2021; Rouse & Spohrer, 2018). Examples of automation include robotic process automation applications on the assembly line, the use of chatbots in customer interaction, and machine learning applications for application and document handling (Enholm et al., 2022). Regarding AI for augmentation, several studies have outlined different ways in which humans and machines can complement each other and enable the emergence of core capabilities (Rouse & Spohrer, 2018). Examples of such synergies include the use of AI to process complex financial data to improve decision-making. Raisch and Krakowski (2021) conclude that these two categories of outcomes are equally important and that firms should identify which operations can yield a competitive edge through the use of AI. Adopting a slightly different perspective, Yablonsky (2021) suggests five levels of AI innovation, where the first level is human-led and the final level is machine-led and governed. This way of thinking about AI opens a broader application area for organizations than a dichotomy does. The logic of this approach is that "AI can extend humans' cognition when addressing complexity, whereas humans can still offer a more holistic, intuitive approach in dealing with uncertainty and equivocality in organizational decision making" (Jarrahi, 2018, p. 577).

The past few years have seen increased interest in and discussion of how AI can be leveraged to create a competitive advantage for organizations. Several literature reviews have highlighted that AI can add value by enhancing performance, productivity, and effectiveness, and by lowering operational costs (Enholm et al., 2022). In addition, AI can broaden the range of options available for decision-making, speed up processes, and help organizations understand customer requirements and cater to them in a personalized way (Benbya et al., 2020; Sadiq et al., 2021). In combination with other digital technologies, AI can drastically change how the workforce is structured, how jobs are designed, and how knowledge is managed (Benbya et al., 2020). Other studies have suggested that AI can also improve stakeholder relationships as well as customer and employee engagement (Borges et al., 2021). However, to generate business value, organizations need to develop unique resources to leverage their investments effectively (Mikalef & Gupta, 2021). Nevertheless, research to date offers considerably less insight into how organizations develop the AI maturity necessary to achieve such outcomes (Papagiannidis et al., 2021).

In this direction, early reports have highlighted that organizations encounter both technical and organizational barriers when implementing AI applications (Ransbotham et al., 2021). In fact, Sadiq et al. (2021) argue that human-related challenges are the most prominent. Such issues can relate to a lack of skilled staff (Brock & Von Wangenheim, 2019), scarcity of talent (Benbya et al., 2021), unknowledgeable leaders (Fountaine, Saleh, & McCarthy, 2019), or a strategy that does not make room for technology (Dwivedi et al., 2021; Sadiq et al., 2021). Beyond these, other studies have cited issues such as a lack of trust, fear of replacement, or inertia as important hindrances to value generation from AI (Dwivedi et al., 2021; Mikalef, Fjørtoft, & Torvatn, 2019; Sadiq et al., 2021; Mikalef et al., 2018). In terms of organizational structures, functional silos have been noted as one of the most significant barriers to realizing value from AI investments (Mikalef & Gupta, 2021). Taking a more process-oriented view of diffusion, studies have found that many AI systems remain experimental and are never put into production (Sadiq et al., 2021). Integration with existing technologies, financing, changes in business processes, and data engineering are further obstacles that emerge during different phases of diffusion (Benbya et al., 2020; Mikalef et al., 2019). In addition, scaling projects can be challenging because the value of experimental projects can be hard to prove, making it difficult to justify further investment (Mikalef & Gupta, 2021). Moreover, early initiatives may be discontinued due to a lack of data infrastructure, integration challenges, and the limited quantity and quality of available data (Dwivedi et al., 2021; Enholm et al., 2022). Taken together, these studies indicate that the challenges of deploying AI span multiple levels within an organization as well as different phases of diffusion.

2.2 Understanding Organizational Maturity of AI Capabilities

Over time, numerous strategic frameworks and theoretical perspectives have been built on the idea that organizations need to develop valuable and difficult-to-imitate capabilities (Barney & Arikan, 2005). For example, the resource-based view (RBV) posits that organizations can sustain a competitive advantage by acquiring valuable resources that can then be converted into difficult-to-imitate capabilities (Barney, 1991). Within this perspective, a distinction is drawn between resources and capabilities, where "resources present the input of the production process, while a capability is the potential to deploy these resources to improve productivity and generate rents" (Amit & Schoemaker, 1993). Applying these concepts to AI management, Mikalef and Gupta (2021) present tangible, human, and intangible resources as key components of an AI capability. Further studies have extended this logic and argue that organizations must develop their AI capabilities to drive value generation. In this paper, we build on prior definitions of AI capability as "a firm's ability to select, orchestrate, and leverage its AI-specific resources" (Mikalef & Gupta, 2021, p. 2). Based on this definition, we argue that such resources will either enable AI diffusion and value generation, if developed appropriately, or inhibit it (Enholm et al., 2022). In this context, an AI capability is an overarching concept whose constituent components are the resources that comprise it. An AI capability is therefore developed and matured through its underlying resources, and by ensuring that these resources operate in an aligned and complementary manner. Consequently, a holistic view of value generation from AI capabilities requires treating the concept as a whole rather than focusing only on specific resources (Mikalef & Gupta, 2021).

Although the notion of an AI capability has often been associated with decisions made internally in an organization, we know that the broader socio-technical environment shapes and influences how these capabilities are developed and leveraged. Thus, a multitude of elements in either the internal or the external environment can enable or inhibit the diffusion of AI and the development of AI capabilities. To develop a more complete understanding of these enablers and inhibitors, we build on the Technology-Organization-Environment (TOE) framework introduced by Tornatzky and Fleischer (1990), as it considers a firm's technological, organizational, and environmental contexts and how they shape technology diffusion (Alsheibani et al., 2020; Enholm et al., 2022; Mikalef et al., 2021; Schaefer et al., 2021). Since AI initiatives in organizations have been argued to depend as much on internal resources and structures as on external conditions and pressures, it is important to use a holistic lens to determine how these aspects influence AI capability maturity (Enholm et al., 2022). A complementary angle on how AI capabilities are shaped and developed is to view capabilities through a process of maturation. While internal and external pressures may influence the rate and type of technologies that are diffused, capabilities also have a temporal dimension, which suggests that they mature and develop over time. In this direction, maturity models (MM) have long been used by practitioners and researchers as a tool to gauge the level of sophistication of IT in operations (Becker et al., 2009). Maturity models have been used to measure different aspects of processes or organizational development and have since been developed and adapted to assess the level of AI sophistication (Yablonsky, 2021).
When combining the logic of maturity models with the capabilities perspective, it is possible to understand AI capabilities as having different levels of development and, thus, of value. AI capability maturity models (AICMM) are therefore a useful tool for benchmarking organizations' AI capabilities and understanding how they evolve (Sadiq et al., 2021). In the context of maturity models, capability can be defined as “the ability of a process (or company) to achieve a required goal or contribute to achieving a required goal” (Herbsleb & Goldenson, 1996; Lacerda & Von Wangenheim, 2018). AICMMs are typically built on key pillars generated through conceptual or empirical approaches (Sadiq et al., 2021).

Maturity models tend to vary considerably in scope, typology, and design. Within the context of AI capabilities, Sadiq et al. (2021) distinguish three purposes of maturity models in an AI setting: descriptive models provide an understanding of the status quo of AI in a domain, prescriptive models offer a roadmap for improvements to progress to the next level, and comparative models offer comparison and benchmarking. Based on this categorization, the authors argue that research has been limited to descriptive and comparative approaches, with much less focus on prescriptive approaches (Sadiq et al., 2021). An additional angle on maturity models concerns the levels to which they assign maturity, as well as the form of representation, whether staged or continuous (Lasrado et al., 2015; Sadiq et al., 2021). Lichtenthaler (2020) offers five stages: initial intent, independent initiative, interactive implementation, interdependent innovation, and integrated intelligence. In contrast, Brock and von Wangenheim (2019) use implementation stages on a numerical scale from 0 to 4. Among practitioner-based reports, Ransbotham et al. (2020) present four levels of maturity: discovering AI, building AI, scaling AI, and organizational learning with AI. Element AI (2020) uses five stages: exploring, experimenting, formalizing, optimizing, and transforming. These levels indicate that specific expectations and actions are associated with each level as organizations develop their AI capabilities.

3 Method

3.1 Research Design

The objective of this study is to understand the key aspects that enable AI diffusion through an AI capability maturity model (AICMM). To explore this topic, we applied a two-phased research design to synthesize current literature and gather expert input, and to develop and validate the AICMM through a series of case studies. The first phase followed an exploratory approach, aiming to synthesize the current body of knowledge on AI diffusion in the organizational setting. To complement the literature review, we drew on a pool of experts to enrich our understanding of AI diffusion (Yin, 2014). This multi-method design allowed us to build a picture of where theory and practice align. The second phase involved formulating and validating the AICMM through a series of case organizations. To do so, we used an embedded design approach, which allowed us to draw comparisons and obtain an overview of multiple elements related to AI capability maturation in the context of internal and external environmental pressures. By exploring multiple cases, we were able to analyze each case individually and across different situations, making the findings more robust and reliable (Gustafsson, 2017).

3.2 Research Setting

To achieve the objectives of this study, we worked in two empirical phases. During the first phase, the aim was to develop and refine the AICMM and, together with AI experts, to cross-verify the challenges and levels of maturity identified in previous research. To validate the constructed AICMM, we drew on a pool of experts in the organizational diffusion of AI. Specifically, we used experts from AI consulting, a relatively new branch of consulting that helps companies improve their business through AI diffusion (Dilmegani, 2022). Insights from respondents in large consultancy firms indicated that most already offer such services through their analytics branches. Consultants within this domain were therefore relevant respondents due to their prior experience. Apart from consultants, we also drew on a pool of AI researchers who had worked on practical cases with organizations. Our initial search for participants was conducted through convenience sampling. After contacting a first set of respondents, we used snowball sampling to reach other relevant practitioners and researchers with experience in the organizational diffusion of AI (Bryman, 2012).

During the second phase, we conducted a multiple-case comparison of organizations at different stages of AI capability development. Respondents from these organizations provided insights into their organization's AI initiatives and used the AICMM to map their current level of maturity and to inform its refinement. The selected organizations either had AI diffused in several operations or were at the start of an AI initiative. To ensure that the AICMM would be generally applicable, we emphasized selecting organizations that contrasted in both industry and AI maturity. To identify respondents in these organizations, we used an approach similar to that of the first phase. With input from the first phase, a list of relevant companies was drafted, and the organizations were contacted. In total, three organizations were selected. During the interviews with respondents in these organizations, we emphasized the need for a variety of participants to ensure that all aspects of the AICMM were captured accurately. Hence, we informed respondents that they could also seek input from other knowledgeable colleagues and suggest other key contact persons in their organizations who could help provide a holistic picture.

3.3 Data Collection

To collect data during both phases, we conducted semi-structured interviews, as they enabled richer input and gave respondents flexibility in the key themes they wanted to highlight. The objective was to make the interviews feel more like well-directed conversations than formal interviews and to discuss important themes related to AI diffusion. This format also allowed us to raise follow-up questions on specific answers. Data were collected in Q1 2022 in two phases with similar interview frames. Although most interviews were conducted in person, some participants were offered the opportunity to participate digitally due to limited time. In addition, some interviews were conducted online due to Covid-19 restrictions that were still in effect at the time. The hybrid format also made recruitment more straightforward, as it provided flexibility for respondents. From the interviewers' point of view, several aspects were considered prior to collecting data. First, we identified our personal biases to prevent them from affecting our responses and follow-up questions (Bryman, 2012). We also focused on being fully attentive when presenting questions, probing for additional answers, and transcribing notes in an unbiased and factual manner (Bryman, 2012). In semi-structured interviews, it is typical to prepare interview guides with various phases while retaining the ability to ask probing questions (Bryman, 2012). To limit bias and ensure appropriate follow-up questions, at least two interviewers were present in each session.

3.4 Data Analysis

The analysis of the extracted data followed a qualitative approach: the interviews were transcribed and then annotated independently by at least two of the co-authors. The qualitative data came mostly from the interview corpus, as well as from secondary sources provided by the interviewees, such as reports, presentations, press releases, and other non-confidential documents. We followed established procedures for preparing the data, in which transcribing, summarizing, coding, categorizing, and identifying relationships stand central (Jacobsen, 2015; Saunders et al., 2009). For each interview, one of the interviewers also took comprehensive notes, which were summarized immediately afterward. The categorization largely followed an outline model that was developed from prior literature and refined based on input from the first phase of interviews. During the first phase of interviews, we divided our interview guide into four parts: introductory knowledge, capabilities, opinions on the AICMM, and other relevant information. During the second phase, we divided the interview guide into five parts: introduction to the participant and company, technological aspects, organizational aspects, environmental aspects related to AI diffusion, and other potentially relevant information. Finally, we used a set of analytical procedures to map and categorize findings. In the first phase of data collection, categorical patterns were matched across participants to build a more comprehensive understanding (Saunders et al., 2009). During the second phase, a cross-case analysis was conducted to facilitate comparison of commonalities and differences among cases (Khan & Van Wynsberghe, 2008). Doing so allowed us to evaluate the organizations against each other and to extract common patterns or approaches to AI diffusion.

4 Findings

Reflecting the two-phased research design, we divide the findings into two parts. The first part summarizes the insights extracted from interviews with the AI experts (4.1). Here, we specifically explored the technological, organizational, and environmental aspects of AI capability maturity and how the respondents perceived them. In the second part, the goal was to apply the developed AICMM to three cases in order to validate it, extract important insights concerning its value in a practical setting, and refine it (4.2).

4.1 Phase 1: AI Experts' View

4.1.1 AI Capability Maturity Model

The objective of a maturity model is to identify critical dimensions to focus on and to facilitate the diffusion of AI in organizational operations, with the goal of enhancing value creation. The dimensions of the AICMM were derived from the resources that comprise an AI capability in the work of Mikalef and Gupta (2021), as well as from the input of the expert respondents. Specifically, the technological dimension covers data and infrastructure; the organizational dimension covers strategic vision, people, and organizational culture; and the environmental dimension covers ethics, morals and regulation, and competition. Reflections on and insights into the dimensions and their content came from five experts with extensive experience in AI diffusion in organizations. The respondents provided important insights into each dimension of the model that helped refine the levels it comprises, as well as the important milestones for each. For instance, one respondent noted the following about the data dimension: “We will check if a company has control over their data by asking them to send us some data. From that you can see a lot of what is going on. It clarifies whether the data is good, bad, structured, unstructured, centralized, or decentralized.” Similarly, responses on the other dimensions validated and helped shape the final dimensions of the model, as well as the different levels of AI capability maturity.

The respondents also commented on the AICMM overall and on the importance of such frameworks. Specifically, they noted that for an AI maturity model to work well, one must dig deep and dare to invest and test, as capturing the critical success factors requires time and resources. Further, someone is needed to translate the measurement process and communicate a common understanding. In other words, for mapping to succeed, requirements should be set for the questions asked, the translation, and the mapping of maturity. While mapping contributes to transparency, alignment, and competence-sharing, it is also beneficial when used iteratively. By serving an iterative role, the AICMM also lays the foundation for future cooperative work. Further, it needs to be generic enough to be customized for different cases. When asked about the limitations of maturity models, one participant answered: “There will always be limitations. It could be more thorough. You could spend a year mapping an organization, however, that is not the purpose of this tool”. The levels in a maturity model should not be perceived as a to-do list for success but rather as providing a sense of direction and clarifying expectations. In doing so, maturity models such as the AICMM can support planning of the investments and key decisions that need to be made at different levels within the organization to increase value capture from AI investments. A respondent also commented that the AICMM can be used continuously, presenting an overview of the status at each point in time and a sense of direction for further actions and changes. Based on the insights from the AI experts, a refined draft of the model was developed, which was then validated and tested in phase two.

3.6 Phase 2: AI Organizations

During the second phase of this research, we built on a selection of organizations to further explore and validate the AICMM we drafted. The goal of this approach was to map and explain the maturity of organizations in a structured way, and to ensure that the levels and dimensions we had included reflected the key dimensions pertinent to developing an AI capability. We start by introducing the three case organizations and the respondents in each. This is followed by a thorough analysis of each organization based on the AICMM.

3.7 Retail

The first case is an online retail company founded in the 2010s. Since then, it has grown significantly into an international company with a global presence, and it currently employs more than 40 individuals. In total, three respondents were interviewed: one from customer experience (Retail1), the chief technology officer (CTO) (Retail2), and the head of IT operations (Retail3). The company has recently completed an extensive system migration to reduce legacy systems and improve communication across platforms.

3.8 Technological Dimensions

All participants agree that many forms of data are available and that they can access them for building AI applications. One of the respondents highlighted that data requirements are intrinsic to the industry and that the company had an advantage in terms of data availability: “We operate within an industry with more data points than others” (Retail3). The data used in this company came primarily from customers, website actions, orders, and shipping, among others. Nevertheless, it was still challenging for the organization to use its data actively. Retail1 mentions that she must request reports or export them manually from various platforms. The company recently started working with an external partner to develop a canonical data model (CDM) that gathers, cleans, and stores data from various data points (Retail2). “It has been much work to implement it, but it will help prepare us to utilize the data we have” (Retail2). The respondent from the business domain mentioned that data processes are still fragmented and manual (Retail1). Retail3 agrees: “today, we are about average here” (Retail3). The company’s digital infrastructure undergoes frequent changes. “We try to build as little as possible ourselves and rather focus on finding vendors that offer out-of-the-box integrations with other systems” (Retail3). The result is not one massive platform but several smaller ones. The company struggles with the distance between systems, and current connections have a ‘homemade’ feeling (Retail1). The objective is that the newest implementation will improve this (Retail2). It consists of a more centralized ERP-like system that is particularly good at tying platforms together. Many of the platforms come with integrated AI solutions. However, it will probably be years before such solutions are utilized (Retail2), by which time new platforms or solutions may already have been implemented.

3.9 Organizational Dimensions

Strategically, the digital and technical aspects of the organization have been integral. One of the respondents highlights: “We have always been digital-first” (Retail3). There is also acknowledgement that the organization has a strategic orientation in which technology plays an important role: “This last integration is probably the single biggest investment the organization has ever done” (Retail2). All agree that the question is not whether technology is considered at a strategic level but rather which phase the company is in. “With our growth position, there are so many areas of the organization that constantly need improvements, not only from the tech side” (Retail3). Retail1 follows up: “although management seems willing to utilize such technology, it still feels quite ahead considering time”. According to all respondents, technology investments and AI appear to be treated on an equal footing with any other part of the organization. When the time comes, AI adoption will not be hindered at the strategic level but rather by what is prioritized. Additionally, all respondents agree that the IT department is still understaffed relative to future ambitions. One of the respondents clarifies: “Many in the organization see areas that technology can improve, but the tech team’s backlog is just too long” (Retail1).

This shows a clear understanding among the non-technical staff that future technologies, like AI, can create opportunities for the company in many different areas. The more technical staff agreed but pointed to limited capacity: “The capacity on our various teams is already severely pressed” (Retail3). A common critical inhibitor concerns technical competence: “In order to succeed in becoming more technology-driven, we need to strengthen the internal technical environment” (Retail1). Using external sources is suitable for projects, but the technical competence within the organization also needs to develop in the long run. So far, this has not been prioritized, but it is getting more attention now. One of the respondents highlights that new technology increases the demand for the right employee skills: “We have seen with the latest tech implementation that it is very demanding after the initial roll-out too” (Retail2). This is confirmed by the IT manager: “A focus we will have going forward is to hire the right people that we can learn from” (Retail3). Once the technical capacity is in place, a crucial point becomes the cooperation between tech and other departments: “We need to be able to show the people how the new solutions can help make their workday easier” (Retail3). According to the respondents, this does not seem to be a significant problem, as it is mainly a matter of having the time to cooperate. An evident positive organizational trait is the company’s openness to change, and its culture is built on solid team spirit and considerable flexibility (Retail1,2,3). A negative aspect of such a culture is that it can make it harder to prioritize what is essential and may consequently lead to extra hours. If every task is felt to be critical, something is wrong. Thus, prioritization should be emphasized by creating good routines around project management and tasks. Despite the company being digital-first, barriers to cross-organizational cooperation remain and silos still exist.

3.10 External Dimensions

When exploring the company’s approach to ethics and regulations, it became evident that it is still lagging behind others in the industry. One respondent (Retail2) notes that it is hard for a relatively small company to navigate what is right and wrong. With pressed capacity, it is hard to find the time to forecast how this field will look in the future. Retail1 points out that the company is perhaps a bit reactive regarding ethical questions around tech but always has good intentions: “An example is when someone pointed out that our website was not that good for visually impaired customers. When we got that message, we wanted to fix it” (Retail1). If these capabilities remain reactive, it may lead to negative consequences for AI use. Lastly, regarding industry forces and motivators, an ambidextrous relationship appears: “We want to be forward leaning” (Retail1). Ambitions are high, and the company is still in an economic and geographic growth phase. However, it is not yet stable enough to become an innovative actor within its industry. It is too early to consider AI technologies, and for AI to become relevant, proven solutions must be offered by external vendors. The motivation for exploring AI is tied to financial results. Retail3 mentions that AI will be prioritized where the organization can increase either sales or efficiency.

3.11 Bank

The second company in phase two is a large Norwegian bank with a substantial market share and a strong digital presence. It also has a complex digital infrastructure that offers a multitude of online services. In 2018, the bank intensified its data efforts. Two respondents from the bank were interviewed: one worked in data science (Bank1), and the other in cyber security (Bank2).

3.12 Technological Dimensions

The respondents noted that the bank had been making substantial efforts to get control of its data: "Structuring data was the main reason I got hired" (Bank1). In recent years, the company has taken clear steps toward creating a sound data system with data warehouses and data lakes (Bank1, Bank2). The bank has massive amounts of customer and behavioral data, much of which is sensitive and thus must be handled in accordance with strict regulations (Bank1, Bank2). The quantity of data was not a problem for the bank; rather, the compliance restrictions were challenging to work with. Such compliance tasks are only prioritized once use cases are clear (Bank1). The bank has a complex infrastructure with multiple systems, and legacy systems are bound to exist (Bank1, Bank2). Bank2 points out that fixing this is easier said than done: "because of all the regulatory supervision we as a bank face, it is not like we can just delete everything old and start from scratch as smaller organizations do". However, such legacy seems impossible to avoid, and it may not be a considerable hindrance. Bank1 explained that, rather than focusing on all the legacy, the bank develops central resources that explore how AI is used today and can be utilized tomorrow.

3.13 Organizational Dimensions

Strategically, the large hierarchy leads to a long decision line and a lengthy struggle for resources to innovate. One respondent (Bank1) explains two main ways to fast-track decisions: either managers see risks that need to be taken seriously, or enough employees want technological changes. Today, large distances between groups and clear silos have become a problem. The respondent also commented that some divisions are easy to cooperate with, while cooperation with others is slow, which makes the second option hard to achieve. There was also a clear ambition, as noted by a respondent: "We want to be a step ahead, but that depends on what people further up decide to focus on" (Bank2). Better alignment around AI was therefore seen as necessary. However, quantification of data and AI was not yet on the agenda. In summary, much of the responsibility and decision-making regarding AI adoption and use is allocated to top management.

When it comes to employee knowledge and specializations, the respondents note that competence appears strong: "Take us in cyber security, for example, I think we are among the largest professional environments in the country" (Bank2). This is a positive side of the bank’s large size, as it allows for broad investments that make each group and division solid. A general skepticism toward AI is noticeable due to the highly regulated industry in which the bank operates. Bank2 explains that she often works in teams with other technical divisions connected to her area. Teamwork functions well but is rarely extended to include other organizational groups. Even within the technical division, coordination is not always efficient: "I may not know whom to contact, but we have organizational maps and an overview of people, and then there is always someone that has worked in the bank longer than you" (Bank2). In other words, cross-organizational cooperation may be hard to accomplish. Lastly, the two participants point to some clear differentiators. First, the bank has a strong brand name and reputation: "I think that people believe that the bank is a good bank with solid values, open culture and that we care" (Bank2). Its stable market share attests to this. Similarly, a respondent commented on the bank's considerable effort to protect its customers and their data: "Our customers must feel certain that our digitized processes work every time. This may be something that smaller banks also offer but not with the same security as us" (Bank1). In other words, the bank offers a consistency that smaller actors cannot. Further, the second respondent mentions that this explicit focus makes it easy to become risk-averse: "If the bank makes a mistake, everyone will know about it" (Bank2).

3.14 External Dimensions

Ethics and regulations were mentioned multiple times throughout the interviews. The company works to avoid negative media coverage, especially on sensitive matters, which is why security and data handling have been heavily prioritized in recent years. The risk of missteps on regulatory issues is the most apparent reason the company does not venture further into AI technologies (Bank1). This is relevant for the whole industry. The bank also focuses on openness and transparency toward customers and fellow industry actors (Bank2). Technological growth is not necessarily its aim; rather, the focus is on governance and adherence to all regulations. The organization takes a relaxed approach toward trends and only pursues new methods when the ROI is evident. In one sense, this is a more reactive stance. Considering the industry, this makes sense, as most traditional banks will hold similar views. Smaller and more technology-oriented banks may pose a future threat. One respondent highlighted that: "The bank hasn't really pushed for using AI for growth" (Bank1). Given its size, it seems clear that the bank could become an AI leader if it made AI a priority. An open question is thus whether complete control of regulations and governance can open the way for more explorative AI techniques in the future.

3.15 RoboTech

The third company operates in the robotics and technology industry and was founded in the mid-1990s. Over the past few years, it has experienced significant growth and now has approximately 600 employees. In addition, it is the only one of the case firms that had previously used maturity mapping for its AI capabilities. Despite the company’s size, its internal data team is relatively small. Within this company, we interviewed the Director for Cloud and Data (RoboTech1) and the CTO (RoboTech2).

3.16 Technological Dimensions

The company’s data journey started very recently. “I saw many data sources moving across the organization in unorganized ways and decided to look more into how we could improve in this area. As an extension, I contacted an external consultancy firm that completed a data maturity assessment for us” (RoboTech2). This led to the hiring of RoboTech1. In less than a year, the data field has expanded, and a data strategy has been developed. RoboTech1 mentions three platforms that the company is preparing: data, API, and ML. The first two are underway, while the last is yet to come. The only questionable aspect is that data was not on the agenda earlier. Regarding internal infrastructure, the company has taken steps toward standardizing and centralizing as much as possible by using one of the prominent providers. “Connecting data from different system providers will only make our job much harder, so I work hard to get colleagues to see the benefits of using the same systems” (RoboTech1). “Because we are moving so fast, we just wanted to move towards standardizing how we do things as early as possible. Also, because there’s few financial borders it made sense to use one of the large providers seeing that they offer nearly everything that is needed” (RoboTech2). Despite these strong efforts toward standardization, the respondents are also aware of some critical challenges. RoboTech1 states that customizing such solutions will require more work, while RoboTech2 indicates that it may cause friction when divisions that use other platforms or software today are “forced” to change. “It is really important for us to understand why we want to do things in a specific way, and we try to accommodate for their meanings” (RoboTech2).

3.17 Organizational Dimensions

When it comes to organizational aspects, the respondents noted that value prioritization is important when deciding which AI applications to support: “Since we do not have harsh economic barriers, I am able to bet on areas that look promising, but I still have to prove that there’s value in it” (RoboTech2). Proving value is also something that RoboTech1 mentions: “It is definitely a barrier to show how these data investments will affect the bottom line” (RoboTech1). Strategies around data and AI have been developed, but it is still too early to predict how they will progress. Moreover, the organization offers a highly technology-driven product. This creates broader openness to exploring new areas, provided they can be justified.

From the company’s previous maturity assessment, RoboTech2 states that its organizational resources proved highly developed. What is evident in its approach is how it includes the broader organization in its work: “It is vital for me that less tech savvy people feel that they can ask stupid questions” (RoboTech1). The company has already started to share and develop BI-driven dashboards across the organization. When it comes to skills and knowledge, considerable effort has been placed on hiring: “I would rather hire a person hungry for learning that, and that I may need to use more time on training and shaping than someone very good in a specific area” (RoboTech1). The second respondent added: “We do not need the best in the biz, we rather look for people that are willing to communicate outside their expertise area. We hire for attitude and train for skills” (RoboTech2). In other words, the emphasis is not purely on hiring the best technologists but on people who are able to communicate across the organization. Regarding hiring, RoboTech1 also mentions the variety of roles within the data science field: “I think many organizations move a bit fast when hiring data engineers” (RoboTech1). The company’s focus is instead on hiring data scientists and analysts before engineers, as these roles build the foundation.

In terms of organizational culture, RoboTech was significantly different from the previous two companies. Much of this stems from its growth position, as noted by a respondent: “When I started four years ago, we were 120, now we are around 600, and next year we will probably be around 800. We need to have this kind of culture to continue this journey” (RoboTech2). The company is still in a significant scale-up phase and has enormous growth potential: “What is nice is that we are still not too big. We do not carry massive legacy data and systems and have enough security to expand in many directions” (RoboTech1). This requires a delicate balance between building reasonable solutions and deploying them relatively fast: “We need to keep up internally without losing control” (RoboTech1). The culture shows a willingness to change, while the company at the same time offers enough safety. One of the respondents noted that the company is relatively average in its risk-taking. As an example, the other respondent mentions the technology that they use: “If you look at our internal tech stack, we do things pretty basic” (RoboTech2). The clear differentiator thus appears to be a culture that enables a balance between ambition, fast pace, and quality.

3.18 External Dimensions

Within RoboTech, ethics was a topic that had so far received little consideration. Instead, data governance efforts were prioritized: “Because we have not touched on a major AI project yet, we are not that scared around the ethics of AI, but we are doing solid work around data security” (RoboTech2). A respondent also expressed the complexities that regulations introduce: “It is a challenge to navigate, especially because we need to consider different sets of laws” (RoboTech1). The company’s primary market is currently Europe, but significant growth in the US is expected. Another positive side of centralizing around one of the major technology providers is that they offer data governance solutions, which are currently being explored (RoboTech1). When it comes to AI motivators, the respondents noted that the company focuses on the value AI will bring. One respondent linked value specifically to customer requirements: “I want data to be available and accessible to people […] When people in the organization start to realize the value and the mindset around utilizing data, that is when our area speeds up. Then they will be the ones that find the use cases for new solutions” (RoboTech1). According to this respondent, the company’s logic is that it is open to creating digital products that can potentially be offered to customers. According to the other respondent, AI will have to provide value, for instance through process improvements: “I also have a large curiosity towards AI and believe that it will be a large differentiator between firms in the future” (RoboTech2). External motivators such as competitive pressures were not mentioned. Thus, it appears that internal forces drive AI diffusion in the organization.

3.19 Towards an AI Capability Maturity Model (AICMM)

By synthesizing the literature review on AI capability and maturity models and integrating the findings from the two phases of the empirical design, we developed the conceptual AI capability maturity model presented in the table below. The AI capability maturity model delineates five levels of maturity (plus an inactive level 0) across the three core dimensions that comprise an AI capability. Within each of the three core dimensions, we identify and refine the sub-dimensions that are central to the corresponding pillar. By combining the two axes of the model, maturity levels and sub-dimensions, we constructed a detailed model that enables organizations to identify their level of maturity in each individual sub-dimension. Such a model allows for identifying potential weaknesses and planning future developments. Below, we assess its use within the three case organizations in this study. These assessments show how the participants perceived the AICMM and how it sparked a reflective discussion within each organization.

| Maturity level | Technological: Data | Technological: Infrastructure | Organizational: Strategy | Organizational: People | Organizational: Culture | External: Ethics and regulations | External: Pressures and motivation |
|---|---|---|---|---|---|---|---|
| 5. Transformational: AI is a core part of the organization's business model | Highly automated and reliable. Multifaceted use of data | Push towards AI to manage tech infrastructure. Explore complex problem solving through AI | Chief AI officer present. AI is seamlessly embedded in strategy. Discover and act on innovation | High degree of AI literacy. Drive towards AI career paths. Development of interdisciplinary roles | Ingrained: most roles with some sort of AI. High degree of interdisciplinarity | Development beyond current solutions to revolutionize how to think about tasks. Helps shape industrial standards together with regulators | Explore AI to be innovative and create better solutions for the organization and the world. Internally motivated and highly proactive |
| 4. Embedded: Systematic orchestration of different AI projects and pervasive use | Up-to-date, usable data. Majority of data connected to data platform | Centralizing monitoring and auditing. Explore personalized or tailored AI solutions | CTO/CIO management of integration of various departments. Strategy aligned with business strategy and clear KPIs | New talents help other employees to adapt. New roles like ML engineers. Shared ownership across organization | Cross-organizational cooperation through strategy. Formalized center of excellence. Clear communication and processes at all stages | Ethics board present. Standard guidance for responsible AI practices. Formalized sustainability reporting and use of AI to be more sustainable | Digital-first approach and considered an industry leader. Find motivation through improving organizational processes |
| 3. Formalized: AI used in production to exploit diverse business opportunities | Data processing platforms present. Control of quality and measures and prioritized data collection | Modern and centralized infrastructure. Standardized AI deployment reusing some models in parts of the organization | C-suite and budget support. Clear accountability and documented AI strategy. Shared understanding with clear use cases | Data science helps business through knowledge spreading. Minimal resistance towards AI change | Center of excellence to provide skills and resources. Learning organization and culture of change | Centralized and formalized reporting. Strive for full transparency. Proactive towards regulations | Motivation towards creating opportunities internally. Becoming increasingly proactive |
| 2. Ad-hoc: Experimentation through several projects in data science context | Assemble usable and accessible data. Starting to break down data silos and creating collective data spaces | Manual ML training. Cloud solutions utilized but few AI or ML solutions | Some C-suite support, enough knowledge. Limited financial support for initiatives | Need for specialized expertise. Recruitment of data science employees. Organized learning around AI and traces of skepticism | Cross-functional activities around AI. Active identification of learning paths | Full understanding of current ethics and regulations. Clear responsibilities around responsible AI use. Follows large vendors and consultants for governance approaches | Considering AI purely for economic reasons, cost-cutting and improving efficiency. Outward looking for opportunities |
| 1. Explorative: Exploring technical feasibility and business viability | Started data collection and limited understanding of requirements and “right data”. Siloed data | Fragmented system infrastructure. Cloud journey initiated with high use of legacy systems | No C-suite support or understanding of AI. No real AI strategy and lack of business cases and enthusiastic leadership | Large contrast between business and tech side. Visible skepticism among employees about AI. Little focus on AI skills and lacking knowledge sharing among groups | Starting to develop AI literacy. Limited communication across the organization and siloed work practices | Thinking about responsible AI. Follows GDPR and other relevant regulations. Motivation for considering ethics is reactive | Follows competitors and hype. Externally motivated and highly reactive. Technologically lagging behind industry |
| 0. Inactive: AI journey not started yet | NA | NA | NA | NA | NA | NA | NA |
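The AICMM is meant to let an organization locate its current level in each sub-dimension and plan next steps. The model itself is qualitative and does not prescribe a scoring procedure, so the following is only a minimal sketch under stated assumptions: each of the seven sub-dimensions in the table is rated with a single integer level from 0 (Inactive) to 5 (Transformational), a core dimension is treated as only as mature as its weakest sub-dimension, and gaps are ranked by their distance to a chosen target level. The function names (`validate`, `dimension_floor`, `gaps_to_target`) and the example ratings are hypothetical and do not describe any of the three case organizations.

```python
from __future__ import annotations

from dataclasses import dataclass

# Maturity levels as named in the AICMM table.
LEVELS = {
    0: "Inactive",
    1: "Explorative",
    2: "Ad-hoc",
    3: "Formalized",
    4: "Embedded",
    5: "Transformational",
}

# Core dimensions and their sub-dimensions, in the column order of the table.
DIMENSIONS = {
    "Technological": ["Data", "Infrastructure"],
    "Organizational": ["Strategy", "People", "Culture"],
    "External": ["Ethics and regulations", "Pressures and motivation"],
}
SUB_DIMENSIONS = [sub for subs in DIMENSIONS.values() for sub in subs]


@dataclass
class Gap:
    """Distance between a sub-dimension's current level and a target level."""
    sub_dimension: str
    current: int
    target: int

    def describe(self) -> str:
        steps = self.target - self.current
        return (f"{self.sub_dimension}: {LEVELS[self.current]} -> "
                f"{LEVELS[self.target]} ({steps} level(s))")


def validate(profile: dict[str, int]) -> None:
    """Hypothetical check: every sub-dimension rated exactly once, levels 0-5."""
    missing = set(SUB_DIMENSIONS) - set(profile)
    unknown = set(profile) - set(SUB_DIMENSIONS)
    if missing or unknown:
        raise ValueError(f"missing={sorted(missing)}, unknown={sorted(unknown)}")
    for name, level in profile.items():
        if level not in LEVELS:
            raise ValueError(f"{name}: level {level} is outside 0-5")


def dimension_floor(profile: dict[str, int]) -> dict[str, int]:
    """Weakest-link assumption: a core dimension is as mature as its lowest sub-dimension."""
    validate(profile)
    return {dim: min(profile[s] for s in subs) for dim, subs in DIMENSIONS.items()}


def gaps_to_target(profile: dict[str, int], target: int) -> list[Gap]:
    """List sub-dimensions below the target level, largest gap first."""
    validate(profile)
    if target not in LEVELS:
        raise ValueError(f"target level {target} is outside 0-5")
    gaps = [Gap(s, profile[s], target) for s in SUB_DIMENSIONS if profile[s] < target]
    # sorted() is stable, so ties keep the table's column order.
    return sorted(gaps, key=lambda g: g.target - g.current, reverse=True)


if __name__ == "__main__":
    # Illustrative ratings only; not taken from any case organization.
    example = {
        "Data": 2, "Infrastructure": 2,
        "Strategy": 3, "People": 2, "Culture": 3,
        "Ethics and regulations": 1, "Pressures and motivation": 2,
    }
    print(dimension_floor(example))
    for gap in gaps_to_target(example, target=3):
        print(gap.describe())
```

Treating the weakest sub-dimension as the ceiling for its core dimension is a design choice made for this sketch, not a rule stated in the AICMM. In line with the experts' caution that the levels are not a to-do list, the ranked gaps are meant to give a sense of direction rather than a checklist.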

3.20 Technological Mappings

All case companies realize there is value in data and have started working on data platform solutions. Within this area, the bank was leading the way, as it has been using data for insight generation for some time. RoboTech and Retail, on the other hand, were in the early stages of implementing their first initiatives. What elevates RoboTech is that it appears ready to utilize a well-defined set of quality data. In this regard, Retail falls behind, and the bank is hesitant due to regulatory constraints, which reduces the potential for realizing value from data. In the financial sector, an important inhibitor was the compliance work needed to utilize data, and specifically personal data. These findings diverge somewhat from both the experts and the literature, which paint a picture of organizations that still struggle with the most basic data tasks. All organizations still had a lot of work ahead, particularly in using and automating AI solutions connected to the data they have gathered, as none had yet been able to create any measurable value. Furthermore, none uses big data; their current datasets are more structured and smaller in volume. Regarding infrastructure, all use cloud solutions from different vendors. A differentiator here is system legacy: RoboTech had taken steps toward centralizing systems, whereas the bank carries a massive legacy from years of digitalization projects. Retail has prioritized a more fragmented systems solution, which allows it to gradually retire large parts of its legacy infrastructure but may at the same time create distance between systems. In preparing for the AI wave, Retail’s priority was to proceed with the system integration plan. Despite being heavily dependent on legacy systems, the bank has been able to stay digitally relevant but struggles enormously with developing central solutions. Lastly, with its centralizing efforts, RoboTech's next step was focused on deploying new digital solutions. Such solutions are more standardized and easier to deploy due to their independent nature.

3.21 Organizational Mappings

Strategically, RoboTech was assessed as more mature than the other two case companies. The key reasons stem from its technological background, which meant that it faced fewer technological barriers. Additionally, its rapid growth allowed it to invest more in necessary organizational changes and in expansion. It is currently developing a data strategy and has fewer barriers than the others when it comes to merging technology and business. Retail also describes itself as a digital-first company but has noticeable problems finding the time or resources to prioritize data and AI projects. Although it appears to have some C-suite support, IT remains a fragmented part of the organization. A similar sign is visible at the bank, which appears to have no interest in prioritizing AI. Thus, the road to AI alignment is expected to be much smoother for RoboTech. Further, Retail and RoboTech are both experiencing financial and geographical growth but are tackling it in different ways. A good approach would be to intensify the strategic work of communicating the opportunities for using AI across the organization and of quantifying these opportunities. In the people dimension, the bank and Retail are at a similar level of maturity. The bank comes out ahead due to its technological advantage, which has also meant training and hiring relevant employees. It has for years focused on hiring unique expertise but is held back by significant gaps between departments, which create more resistance and skepticism around AI. Retail, on the other hand, still struggles to cover the technological know-how, even though the general workforce seems positive toward new technology solutions. In this regard, RoboTech differs again because its technical side already focuses on including non-technical staff in its work, and it also appears to experience less confusion around such technologies. This finding highlights the importance of outward communication by the technical department, which the literature also emphasizes (Enholm et al., 2022; Holmsen, 2021). Lastly, in the culture dimension, the bank and Retail score very similarly, but for different reasons. The bank has a significant market position and appears transparent toward customers but has a culture that is concerned about making any mistakes. Retail, on the other hand, shows a greater willingness to change. Such flexibility is a remarkable trait, but it affects Retail's prioritization: because of the high pressure to complete short-term tasks, it becomes harder to think ahead. RoboTech, by comparison, strikes a better balance between growth and quality.

3.22 Environmental Mappings

Due to its heavily regulated industry, the bank has naturally prioritized the ethical dimensions of AI diffusion more than the other two case companies. The other two companies comply with regulations, Retail through an external service and RoboTech through a data governance product delivered by its infrastructure vendor. Both, however, experience significant challenges in aligning with ethical expectations and norms. Retail has a culture and brand that assume ethics, in general, is essential. On digital ethics, however, it remains reactive and has little know-how about how to incorporate ethics into its digital solutions and how to communicate it. RoboTech experiences difficulties in navigating regulations in the various geographic locations where it operates. The expert findings also pointed to this problem, noting that US regulations are still softer than those in Europe. Finally, none of the organizations is very proactive with regard to emerging developments, and all are far from being able to use this to their advantage. We therefore argue that proactivity in this area can be a strong differentiator for companies that want to create a competitive edge. The last point in our mapping concerns motivation, which reveals that RoboTech has a more proactive motivation toward exploring AI. The company does not mention any external motivators but is rather prompted by the belief that AI will help employees and their overall workflow. By contrast, the other two case companies appear to be more reactive. The bank scores low in the maturity matrix because it has little push toward becoming AI-driven or using AI to improve its economic situation. We argue that AI can still offer positive and proactive resources internally, and by disregarding AI-driven growth, these opportunities are lost. Lastly, Retail is high on ambition but is still too externally and economically motivated. For any value to materialize, stability must come first.

4 Discussion

In this study, we have sought to develop a maturity scale for AI capabilities that organizations can use. The main motivation for doing so is that, although we have a good understanding of the key elements that form an AI capability, there is limited guidance on how to move up the maturity scale in the sub-dimensions that comprise an AI capability in order to realize business value. To this end, this study has integrated prior literature on maturity models, AI capability, and business value, and has built on a two-phase empirical approach to develop and validate a proposed framework. We then applied this framework to three case organizations to analyze their current state of AI maturity and to identify the key obstacles preventing them from realizing value from AI investments. The findings and the in-depth analysis allowed us to create a refined and detailed AI capability maturity model.

4.1 Research Implications

While there has been considerable research on AI capabilities over the past few years (Mikalef & Gupta, 2021), there is still a lack of understanding of how organizations develop and foster such capabilities. Many prior studies assume that AI capabilities develop uniformly and that all organizations undergo the same process of AI maturation. However, our study shows that even organizations at similar maturity levels in certain dimensions face unique challenges and obstacles that prevent them from realizing the full potential of AI. In this regard, the AICMM provides a more nuanced view of how AI capabilities develop, and specifically of how the maturity of different dimensions, or core resources, can vary. Some organizations are challenged by technical issues, whereas others are hindered by external forces such as regulations and tight oversight. Understanding how these forces influence AI capability development enables a more in-depth exploration of how to become more AI-ready. This finding also raises further research questions about the path dependencies and forces that shape how organizations decide to mature their AI capabilities and how they choose to navigate different conditions and constraints. Developing a more in-depth understanding of the pressures that prompt organizations to mature their AI capabilities, as well as the dependencies that exist, can enable researchers to identify optimal trajectories and courses of action. In this regard, frameworks such as resource dependence theory or institutional theory can allow for a more nuanced understanding of pressures, constraints, and subsequent organizational choices.

Furthermore, while maturity models have long been used to understand technology diffusion processes, considerably less work has addressed AI technologies. This gap is striking given that AI technologies require a complex nexus of resources and assets to be orchestrated within the internal and external organizational ecosystem to drive value. In fact, AI may be among the most complex technologies studied in the information systems discipline, with a broader set of dependencies than any other digital technology. Our empirical results also offer insights that contrast with what we know about the diffusion of digital technologies. For instance, we find that managing AI strategy and governing data assets are critical in the early phases. These elements must therefore receive greater emphasis, as they form the foundations upon which AI capabilities are built. Yet navigating these two elements also requires organizations to consider general and industry-specific regulations and guidelines for good practice. Thus, setting the foundations for AI becomes an organization-wide exercise from day zero.

In addition, the maturity mapping and the case organizations we use to exemplify it highlight how a single dimension of the AICMM can undermine overall value generation from AI capabilities. For instance, the ethics, privacy, and security of data used in AI applications can be inhibiting factors for organizations that handle sensitive customer data. Understanding how to navigate these complexities is an important aspect that is generally overlooked in studies adopting a more quantitative approach to measuring AI capabilities. Moreover, the approach we illustrate through the AICMM shows that AI maturity levels can be misleading if we do not understand the broader set of factors that shape what an organization can do with AI in its operations. On the other hand, the AICMM can reveal critical dependencies early in projects, enabling more accurate and proactive AI diffusion. As such, a key novelty of our work is that it simultaneously pinpoints constraints and dependencies among key aspects of AI implementation at the organizational level.

4.2 Practical Implications

Apart from the research implications our work creates for information systems and management scholars, it also offers key insights of value to practitioners. These implications are primarily connected to the developed AICMM and its use in the organizational context. First, such a mapping can deliver considerable value to a business, as it helps identify areas for improvement in a targeted way. The AICMM can help organizations better understand their current position and how to advance AI diffusion in their operations. Since it can function as a decision-making and planning tool, it can be used during strategic planning, for example in workshops with representatives from different units. IT managers can also use it as a translation tool to help bridge the gap between IT and commercial departments and to pinpoint concrete areas for improvement. Consequently, such knowledge may not necessarily increase IT spending, but it will make prioritization easier.

Second, the AICMM enables consultants to offer maturity mappings as a product for their customers and to create a roadmap for implementing AI over time. Our application of the AICMM with representatives from the three case organizations shows that it is a useful tool early in AI project planning for anticipating future developments and planning accordingly. Such insights can serve as action points for managers at different levels of the organization. Additionally, having a model to share with other stakeholders in the organization can support translation and serve as a communication device. In turn, a detailed overview of all key areas of AI diffusion can provide visual insights on which internal managers can act. Lastly, for practitioners who intend to use the AICMM, the process of mapping current developments offers key lessons that can improve the quality and efficiency of their mapping process and overall organizational coordination. In our discussion, we highlight that the AICMM, among other things, needs to be customizable and iterative, and that its primary function is to serve as an assessment tool and a means of creating an action plan that aligns the key stakeholders involved.

4.3 Limitations

As with all empirical research, our work has several limitations. First, because we conducted qualitative research, there is a risk of interviewer bias. Although we tried to mitigate this by involving several researchers in the process, we may still have overemphasized some aspects of the maturity model relative to others. Second, although we used a multi-phase approach to develop and validate the framework, we relied on respondents from a single country. Future studies should therefore extend and apply the framework in different contexts to test and further refine it. In addition, what is important in one industry or company may not be so in another, as the case of the bank showed. Lastly, we relied on a limited number of respondents in each case organization. It would have been beneficial to gain insight from more respondents, and specifically to conduct the mapping as a focus group with participants from different departments. Our ambition is that the AICMM will be used in further studies, both in more representative settings and in longitudinal designs that track the evolution of AI diffusion in organizations.

Data Availability

Because the study builds on potentially sensitive data, we cannot provide the raw data. There are no competing interests related to this research, and no funding sources were used.

References

Alsheibani, S., Cheung, Y., & Messom, C. H. (2018). Artificial Intelligence Adoption: AI-readiness at Firm-Level. PACIS, 4(2018), 231–245.

Alsheibani, S., Messom, C., Cheung, Y., & Alhosni, M. (2020). Artificial Intelligence Beyond the Hype: Exploring the Organisation Adoption Factors. ACIS 2020 Proceedings. 33. https://aisel.aisnet.org/acis2020/33

Amit, R., & Schoemaker, P. J. (1993). Strategic assets and organizational rent. Strategic Management Journal, 14(1), 33–46.

Armstrong, C. P., & Sambamurthy, V. (1999). Information technology assimilation in firms: The influence of senior leadership and IT infrastructures. Information Systems Research, 10(4), 304–327.

Åström, J., Reim, W., & Parida, V. (2022). Value creation and value capture for AI business model innovation: A three-phase process framework. Review of Managerial Science, 16(7), 2111–2133.

Barney, J. (1991). Firm Resources and Sustained Competitive Advantage. Journal of Management, 17(1), 99–120. https://doi.org/10.1177/014920639101700108

Barney, J. B., & Arikan, A. M. (2005). The resource‐based view: origins and implications. The Blackwell handbook of strategic management, 123–182.

Becker, J., Knackstedt, R., & Pöppelbuß, J. (2009). Developing Maturity Models for IT Management. Business & Information Systems Engineering, 1(3), 213–222. https://doi.org/10.1007/s12599-009-0044-5

Benbya, H., Davenport, T., & Pachidi, S. (2020). Artificial Intelligence in Organizations: Current State and Future Opportunities. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.3741983

Benbya, H., Pachidi, S., & Jarvenpaa, S. (2021). Special issue editorial: Artificial intelligence in organizations: Implications for information systems research. Journal of the Association for Information Systems, 22(2), 10.

Boden, M. (2016). AI: Its nature and future (1st ed.). Oxford University Press.

Borges, A., Laurindo, F., Spínola, M., Gonçalves, R., & Mattos, C. (2021). The strategic use of artificial intelligence in the digital era: Systematic literature review and future research directions. International Journal of Information Management, 57, 102225. https://doi.org/10.1016/j.ijinfomgt.2020.102225

Brock, J., & von Wangenheim, F. (2019). Demystifying AI: What Digital Transformation Leaders Can Teach You About Realistic Artificial Intelligence. California Management Review, 61(4), 110–134. https://doi.org/10.1177/1536504219865226

Bryman, A. (2012). Social research methods (4th ed.). Oxford University Press.

Collins, C., Dennehy, D., Conboy, K., & Mikalef, P. (2021). Artificial intelligence in information systems research: A systematic literature review and research agenda. International Journal of Information Management, 60, 102383.

Daugherty, P., & Bilts, M. (2021). Technology Trends 2021: Tech Vision. Accenture. Retrieved from https://www.accenture.com/us-en/insights/technology/technology-trends-2021.

Davenport, T. H., & Ronanki, R. (2018). Artificial intelligence for the real world. Harvard Business Review, 96(1), 108–116.

Dilmegani, C. (2022). An In-depth Guide to AI Consulting & Top Consultants in 2022. AIMultiple. Retrieved from https://research.aimultiple.com/ai-consulting/.

Dwivedi, Y., Hughes, L., Ismagilova, E., Aarts, G., Coombs, C., Crick, T., et al. (2021). Artificial Intelligence (AI): Multidisciplinary perspectives on emerging challenges, opportunities, and agenda for research, practice and policy. International Journal of Information Management, 57, 101994. https://doi.org/10.1016/j.ijinfomgt.2019.08.002

Element AI. (2020). The AI Maturity Framework: A strategic guide to operationalize and scale enterprise AI solutions. Element AI Inc. Retrieved from https://www.elementai.com/products/ai-maturity

Enholm, I. M., Papagiannidis, E., Mikalef, P., & Krogstie, J. (2022). Artificial intelligence and business value: A literature review. Information Systems Frontiers, 24(5), 1709–1734.