Abstract

Predictive Process Monitoring (PPM) is a field of Process Mining that aims at predicting how an ongoing execution of a business process will develop in the future using past process executions recorded in event logs. The recent stream of publications in this field shows the need for tools able to support researchers and users in comparing and selecting the techniques that are the most suitable for them. In this paper, we present Nirdizati , a dedicated tool for supporting users in building, comparing and explaining the PPM models that can then be used to perform predictions on the future of an ongoing case. Nirdizati has been constructed by carefully considering the necessary capabilities of a PPM tool and by implementing them in a client-server architecture able to support modularity and scalability. The features of Nirdizati support researchers and practitioners within the entire pipeline for constructing reliable PPM models. The assessment using reactive design patterns and load tests provides an evaluation of the interaction among the architectural elements, and of the scalability with multiple users accessing the prototype in a concurrent manner, respectively. By providing a rich set of different state-of-the-art approaches, Nirdizati offers to Process Mining researchers and practitioners a useful and flexible instrument for comparing and selecting PPM techniques.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Predictive Process Monitoring (PPM) (Di Francescomarino & Ghidini, 2022) is a branch of Process Mining that aims at providing predictions on the future of an ongoing process execution by leveraging historical execution traces. An increasing number of PPM approaches leverage Machine and Deep Learning techniques in order to learn from past execution traces the outcome of an ongoing process execution, the time remaining till the end of an ongoing execution, or the next activities that will be performed.

The importance of PPM has stimulated researchers and practitioners to create dozens of Machine/Deep Learning approaches to build PPM models (Di Francescomarino & Ghidini, 2022) ranging from different encodings to different actual Machine/Deep Learning techniques. With such a diverse set of parameters and techniques an issue is how to sensibly support the entire pipeline for building PPM models, by choosing the most appropriate techniques, especially considering that the construction and selection of the appropriate models can greatly impact the performance of the resulting predictions (Luo, 2015). Constructing an effective predictive model, able to maximize the performance of the predictive capabilities for a given dataset, is however non-trivial. For example, this construction may imply a choice among different classification (e.g., decision trees or random forests) and clustering (e.g., k-means, agglomerative clustering or dbscan) algorithms, as well as the hyperparameters that these techniques require have to be tuned according to the specific dataset and prediction problem. While these choices may be challenging even for experts, for non-experts they often result in arbitrary (or default-case) choices (Thornton et al., 2013).

The conventional way to face this problem is combining manual and exhaustive search (Wistuba et al., 2015). In a PPM scenario this consists of two specific steps: first, it requires running different configurations of predictive techniques on an appropriate dataset used for training and validating, and, second, it requires comparing the outcomes of the different configurations to select the one that outperforms the others for the given domain. Unfortunately, this strategy poses two challenges that may hamper the practical adoption of PPM: first, it comes with the computational burden of running different configurations of predictive techniques; second, it comes with the practical complexity of comparing different configurations and then select the best possible outcome for a business analyst/process owner.

Up to now, the tool support for PPM has mainly focused on the addition of specific plug-ins or ad-hoc techniques to general Process Mining suites. This has produced tools that either support specific types of predictions, or a limited amount of Machine/Deep Learning techniques, evaluation metrics, and other elements that come into play when a predictive model is built. More in general, existing tools are not designed as general PPM tools where users can construct predictive models by choosing among a number of configuration parameters and are supported in comparing different PPM models to choose the best ones. In addition, from an architectural point of view, existing tools are not designed as highly modular tools that facilitate the maintenance of the tool itself and, more important, the addition of new techniques when available.

This paper proposes an architecture for a PPM toolkit - Nirdizati - able to address the challenges mentioned above, i.e., it offers to researchers and practitioners a rich set of features and techniques to build, compare, and select PPM models in a computationally viable manner. Given the growing importance of eXplainable AI (XAI), Nirdizati is also equipped with a first set of features for explaining predictions. Nirdizati has been implemented as an open-source toolkit with a highly modular structure. While stemming from the research community, Nirdizati can be of interest to both practitioners and research scientists. Once compared and selected, the chosen predictive models can be used by practitioners to derive predictions on the future values of performance indicators related to a currently running process execution. Researchers instead can exploit the modular nature of the tool for extending it with new solutions, and leverage the standardized Nirdizati pipeline to comparatively evaluate them against existing techniques.

The structure of this paper is as follows. Section 2 provides a background on the data used in PPM, the techniques used to build and evaluate a predictive model, and the techniques used to explain the data and the predictive models to the user. Section 3 provides a description of the typical pipeline used for building PPM models, and the capabilities a generic tool for PPM should satisfy. Sections 4 and 5 describe the general Nirdizati architectures and components to provide the capabilities described in Section 3, respectively. Sections 6 and 7 provide an overview of the current prototype implementation and of some of its front-end features. The paper ends with an assessment of the tool (Section 8), related work (Section 10) and concluding remarks (Section 11).

2 Background

In this section, we provide an overview of the main concepts in PPM, and of the concepts used in the construction of the Nirdizati tool.

2.1 Event log

An event log is composed of executions (also known as traces) of business processes. A trace consists of a sequence of events, each referring to the execution of an activity (a.k.a. an event class). Events that belong to a trace are ordered and constitute a single “run” of the process. For example, in trace \(\sigma _i=\left\langle event_1, event_2, \ldots event_n \right\rangle \), the first activity to be executed is the activity associated with \(event_1\). Events are also characterized by attributes (event attributes), usually denoted by means of a relation # between the set of events E and the set of attribute-value mappings \({\mathcal {U}}_{map}\). Typical attributes are the timestamp, \(\#(event)_{time}\), indicating the time in which the event has occurred, and possible data payloads such as the resource(s) - written as \(\#(event)_{resource}\) - involved in the execution of an activity or other data recorded with the event. Some attributes, as for instance the personal birth date of a customer in a loan request process, do not change throughout the different events in a trace, and are called trace attributes.

The canonical representation format of the event logs is the XES (eXtensible Event Stream) standard (Verbeek et al., 2010), an XML-based format that maintains the general standard structure of an event log. For a comprehensive description of the format, the interested reader is referred to van der Aalst and Carmona (2022).

2.2 Predictive process monitoring

Predictive Process Monitoring (PPM) is a branch of Process Mining that aims at predicting at runtime and as early as possible the future development of ongoing executions of a process given their uncompleted traces. In the last few years, a wide literature about PPM techniques has become available. In the following, we provide a short overview of the main concepts of PPM. For a comprehensive introduction to the topic, the interested reader is referred to Di Francescomarino and Ghidini (2022).

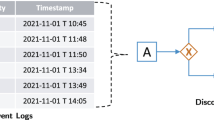

The pipeline Figure 1 shows a typical example of a PPM pipeline. PPM approaches usually require that trace prefixes are extracted from historical execution traces (prefix extraction phase). This is due to the fact that, at runtime, predictions are made on incomplete traces, so that correlations between incomplete traces and what we want to predict (target variables or labels) have to be learned in the training phase. After prefixes have been extracted, prefix traces and labels (i.e., the information that has to be predicted) are encoded in the form of feature vectors (encoding phase). Encoded traces are then passed to the (supervised learning) techniques in charge of learning from the encoded data one (or more) predictive model(s) (learning phase). At runtime, the incomplete execution traces, i.e., the traces whose future is unknown, should also be encoded as feature vectors and used to query the predictive model(s) to get the predictions (predicting phase).

Prediction types Concerning “what we want to predict”, or better the label we aim at predicting, we can follow the typical classification presented in Di Francescomarino and Ghidini (2022) and group predictions into three main categories:

-

outcome-based predictions, i.e., predictions related to predefined categorical or boolean outcome values;

-

numeric value predictions, i.e., predictions related to measures of interest taking numeric or continuous values; and

-

next event predictions, i.e., predictions related to sequences of future activities and related data payloads.

Encodings Encodings are needed to transform labeled prefix traces of the form \(\sigma _i=\left\langle event_1, event_2, \ldots event_i, label \right\rangle \) into a format that is understandable by Machine Learning techniques. This would allow the technique to train and hence learn, from encoded data a predictive model. Due to the complex nature of event logs, which are characterized by a temporal dimension, the presence of data payloads, and the possible inter-relation vs independence between execution traces, several encodings have been proposed (for a recent survey see Tavares et al. 2023). Examples of encodings available in the literature (Leontjeva et al., 2015) are the Boolean encoding and the Simple-index encoding, which only take into account the control flow (i.e., the sequence of events) of a trace, and the Complex index-based encoding, which instead also consider data payloads in the encoding. For an extended view on encodings in PPM and challenges related to the usage of encodings please refer to Tax et al. (2017); Verenich et al. (2019); Teinemaa et al. (2019); Di Francescomarino and Ghidini (2022).

Machine learning approaches When it comes to the learning phase, multiple approaches from the Machine Learning literature have been used in PPM. Examples range from traditional Machine Learning techniques such as Decision Tree, Random Forest, or Support Vector Machine, to Deep Learning approaches such as LSTM Neural Networks (Di Francescomarino & Ghidini, 2022; de Leoni et al., 2016; Di Francescomarino et al., 2019; Leontjeva et al., 2015; Tax et al., 2017). One of the reasons for such a variety of techniques is that the studies carried out have not identified a Machine Learning algorithm strictly better than all the others. Depending on the event log data, the encoding, and the prediction problem, different techniques are known to perform better than others (see, e.g., Teinemaa et al. 2019 for outcome oriented predictions and Verenich et al. 2019 for time-based predictions).

2.3 Hyperparameters optimization

Machine Learning techniques, to better fit the supplied data, typically have a variable number of hyperparameters. Tuning the hyperparameters highly influences the quality of the built predictive models. Although the available hyperparameter optimization techniques are expensive in terms of both computational power and time, the gain in terms of prediction quality often justify their use.

These techniques receive as input the hyperparameter space of correct inputs and a quality metrics to maximize. Their execution will then maximize the quality metrics by producing multiple different configurations of hyperparameters and then evaluating the quality metrics for each of them. The amount of hyperparameter configurations produced by the hyperparameter optimization technique depends on how the technique explores the hyperparameter optimization space. Various algorithms exist to tackle this challenge (Bergstra et al., 2011), including approaches specific for the PPM field (Di Francescomarino et al., 2018).

2.4 Explainable predictions

EXplainable AI (XAI) is a growing body of research focused on providing explanations on the outputs of, usually gray- or black-box, Machine Learning algorithms. In the literature, there are two main sets of techniques used to develop explainable systems, a.k.a. explainers: post-hoc and ante-hoc techniques. Post-hoc techniques, such as the Local Interpretable Model-Agnostic Explanations (LIME) (Ribeiro et al., 2016) or the SHapley Additive exPlanations (SHAP) (Lundberg & Lee, 2017), allow models to be trained as usual, with explainability only being incorporated at testing time. Ante-hoc techniques entail integrating explainability into a model from the beginning. Explainable techniques have recently started to be applied to the PPM field, mainly with the usage of model agnostic generic techniques (such LIME and SHAP mentioned above) with the aim of discovering the rationale behind a prediction. While the application of XAI techniques to PPM is still at its infancy, and specific XAI techniques devoted to event log data or PPM prediction problems are still lacking, the field is developing quickly. The interested reader is referred to Stierle et al. (2021); El-Khawaga et al. (2022) for recent surveys on the topic.

3 Building a PPM model

The task of building a PPM model can be seen as an instance of a data science pipeline, tailored to the specific type of event log data. While several data science pipelines exist, they are all usually composed of a set of tasks that start with the raw data, transform them into a solid dataset (in PPM an event log), and then move through the steps of Data Engineering, Model Construction and Evaluation, and Model Deployment (Alby, 2023). These steps are represented as green and blue boxes in Fig. 2.

Nirdizati aims at supporting users in the construction and the comparison of different predictive models. The comparison step can be performed for different reasons. Typical reasons include: (i) the selection of the most appropriate model(s) to be deployed (e.g., by an end user), and (ii) the experimental comparisons of different techniques in scientific studies (e.g., by research scientists). Therefore, it mainly focuses on providing capabilities for Model Construction and its (comparative) Evaluation, as highlighted in the blue boxes in Fig. 2, and neglects steps such as the extraction of raw data, the construction of an event log, and the deployment of the predictive models, which are often tackled with ad hoc approaches or different specific tools (see, e.g., the onprom tool for the construction of an event log, described in Calvanese et al. 2017). Also, the Data Engineering component is mainly tailored to support the splitting and labeling of data into training and test sets, thus ignoring the important - but often ad hoc - step of Data Cleaning and Improving. Given the growing importance of XAI, explanation is likely to become one of the standard tasks of future Machine Learning-based pipelines and is therefore also included in Nirdizati and discussed here.

In the following, we provide an overview of the capabilities (i.e., of the functionalities for the end user) that a PPM tool should have to support all the steps in the blue boxes in Fig. 2, ranging from the creation of training and test sets to the construction of PPM models, and their (comparative) evaluation.

3.1 Data engineering

In the context of PPM, data pre-processing is used to determine the most effective representation of the data for the predictive problem at hand. In particular, data pre-processing is used in PPM to understand the distribution of attributes, events, and timestamps in the data space, and whether the quality of data and their interesting aspects make the specific prediction problem feasible, unfeasible, or only partly feasible.

The understanding of the quality of data and the feasibility of some/all of the canonical prediction problems is usually tackled with data exploration, i.e., the exploration of the selected event log. This step concerns the determination of the data types, the visualization of such data, and the analysis of the data space densities. Data exploration can trigger a refinement of the data space, which is usually performed in a data cleaning phase that can concern the removal of unnecessary, duplicate, or inconsistent values, the addition of missing values, or the modification of the sparsity of data, just to make simple examples. Several open source or commercial tools exist to perform complex event log exploration, analysis, and engineering tasks, usually as a prerequisite of the task of process discovery (see, e.g., ProMFootnote 1, DiscoFootnote 2, CelonisFootnote 3, just to name a few). Thus, supporting advanced event log engineering functionalities is not deemed crucial for a PPM tool. Obviously an advanced tool for PPM may enable the user to perform some event log engineering as a way to support the user within a single comprehensive tool.

3.2 Building the predictive model(s)

Once the event logs are in a suitable shape, they must be split into training (the data used to build the predictive models), validation (the data used to automatically optimize hyperparameters, if used) and test (the data used to evaluate the predictive models) sets. From here on, we will use the term training set to denote the data used for building a model with optimized hyperparameters, i.e., for denoting both the training and the validation set. In addition to the choice of the size of training and test set, the temporal nature of PPM data implies that different strategies can be chosen in order to split them. For instance, one may opt for a random split of traces for training and testing, disregarding the time they were executed; one could take the older traces (i.e., the ones starting/finishing at the beginning of the period covered by the event log) for training and the newer ones (i.e., the ones starting/finishing towards the end of the period covered by the event log) for testing in order to implement a “not learn from the future” strategy; or one may decide further preferred “orderings”, or even sample traces as suggested in Sani et al. (2023). As a consequence, an advanced tool for PPM should enable the user to split an event log into training and test sets by choosing an appropriate split strategy.

Another important step in preparing the training and test set is the choice of “when to predict” in the ongoing trace, i.e., the length(s) of the sequences (prefixes) to be used to train the predictive model. Similarly to the previous step, several strategies exist in PPM to select prefixes for the construction of a predictive model. For example, one can choose to build a model for making predictions for prefixes of a specific length n (i.e., after n events in the trace), for making predictions for any prefix of length up to n, or to build n different predictive models, one for each prefix of length \(i \le n\). A different strategy would be to choose to predict after (the first occurrence of) a given activity “A” regardless of when it happens in the trace. Once the prefix is chosen, an advanced PPM tool must enable the user to decide how to deal with traces that are shorter than the selected prefix length. Again, different strategies are possible: for example, sequences shorter than n can be discarded, or they can be padded with “0”, a standard strategy when dealing with input sequences of variable lengths. As a consequence, an advanced tool for PPM should enable the user to select the prefixes used to build the predictive model and the appropriate prefix selection strategy.

A similarly important step in building training and test set is the support for the labeling of execution traces. While providing support for any kind of automatic labeling is almost impossible, some support can be provided for typical types of labels such as attributes used for outcome prediction, next activity(ies), categorical values referring to the duration of the trace (e.g., fast or slow w.r.t. a given value, or some average trace duration). Thus, an advanced tool for PPM should provide support for automatic labeling of training and test data.

Execution traces are not in a format that can be directly used by a Machine Learning algorithm. As shown in Section 2.2, different encodings have been proposed in the literature (Leontjeva et al., 2015) to exploit different characteristics of the event logs. Furthermore, PPM approaches can make predictions on a running case as dependent on (resp. independent of) the interplay of all cases that are being executed concurrently (Senderovich et al., 2017) (also known as prediction in an intercase vs intracase fashion). Thus, an advanced tool for PPM should enable the selection among different encodings and support predictions made in an intercase, or intracase fashion.

When it comes to the selection of the Machine Learning algorithm to be used for training a model, it is well known that “no silver bullet exists”. Specifically, while it is true that some findings exist (Teinemaa et al., 2019; Peeperkorn et al., 2023) on the matter, one of the challenges in the PPM field is to understand which algorithm is better suited for specific data, or predictive problems. Thus, an advanced tool for PPM should enable the user to select among a wide variety of techniques and their configurations.

Moreover, and most importantly, an advanced tool for PPM should enable the user to compare configurations emerging from all the different choices illustrated above. In particular, an advanced tool for PPM should enable the setting up of different configurations covering all the steps above (from the log splitting, to the selection of the Machine Learning algorithms and their parameters) and the construction of all their corresponding predictive models.

The ability to store a number of alternatives - from the encodings to the Machine Learning algorithms - and the need to run the (often expensive) construction of several predictive models also imposes requirements on the software architecture that will be discussed in Section 4. In addition, the building of several models triggers the need to support some kind of hyperparameter optimization. Generally speaking, the optimization of the hyperparameters of a Machine Learning algorithm is known to be a difficult and time expensive task, and end users may find unfeasible to autonomously optimize the hyperparameters of multiple Machine Learning algorithms. Thus an advanced tool for PPM should enable the identification of the optimal hyperparameter configuration for each of the supported Machine Learning algorithms.

3.3 Evaluating the predictive model(s)

The evaluation step is of utmost importance, when aiming at the construction of a predictive model that is appropriate for the dataset at hand. A tool must here support the user in two dimensions: the possibility of using different metrics for the evaluation, and the capability to comparatively evaluate different configurations defined to build the models.

Focusing on the metrics to evaluate a predictive model, the Machine Learning literature has produced different evaluation metrics to measure different characteristics of the models. Thus, an advanced tool for PPM should enable the choice among a wide set of evaluation metrics. In particular, it should enable the choice of appropriate accuracy metrics, as these metrics provide an estimate of how well the predictive model understood the data and was capable to solve a prediction problem. In addition, it should be able to accommodate further metrics useful in experimental evaluations. An example of such metrics are execution time metrics. These metrics measure the amount of time spent by the tool to perform a set of actions, such as, e.g., the time spent for pre-processing the data, or the time spent for building the predictive model.

Focusing on the comparative evaluation of different configurations used to construct a predictive model, the comparison of the different configurations needs to be supported in terms of (at least) two different instruments. The first instrument is composed of visual (e.g, tabular) representations enabling the comparison of the different metrics illustrated above. With this instrument, a user may investigate which configurations have, e.g., better F1Footnote 4, or AUCFootnote 5 scores. This is a basic evaluation capability that could help,for instance, an end user to directly select the best performing model. The second instrument should enable the user to drill down and understand how the goodness of the model (e.g., the AUC) is related to a certain configuration step, for example the prefix length, the encoding, or the chosen Machine Learning algorithm. This latter, more sophisticated method may be useful to: (i) tailor future configurations for the construction of further models, in case of not fully satisfactory ones, and (ii) carry out scientific investigations, where it is often required to understand why performances may vary. Thus, an advanced tool for PPM should enable the comparison of different predictive models both in terms of performance metrics and in terms of configuration characteristics used for the model construction.

3.4 Explaining the predictive model(s)

Given the growing importance of XAI, explanation is likely to become one of the standard tasks of future Machine Learning-based pipelines. Therefore, even if this task is not yet part of a standard PPM pipeline, a good PPM tool should include the possibility of easily incorporating XAI techniques. In addition to computing the explanation, a PPM tool should tailor and display explanations using different styles, depending on the user’s expertise. Therefore an advanced tool for PPM should enable the usage of different XAI algorithms and of different visualization methods.

Table 1 summarizes all the capabilities a PPM tool should support illustrated in this section. As discussed, capabilities (C2)–(C11) are considered core functionalities of an advanced tool for PPM, while capabilities (C1) and (C12) are considered useful extra features. In the next two sections, we describe the architecture and the code modules of Nirdizati that are tailored towards supporting these capabilities.

4 The Nirdizati architecture

Complex capabilities as those mentioned in Section 3 require both the ability to offer friendly user interfaces and the ability to ensure fast processing and a good usage of resources, e.g., to train the models, in order to ensure scalability and concurrent employment of different users. A modular structure is also required not only to ease its design and maintenance, but also to facilitate the update of the tool with new code modules implementing new or revised techniques (e.g., new encoding methods, Machine Learning algorithms, or evaluation features). Thus, at an architectural and implementation level, we augmented the end users capabilities of Section 3 with other two requirements, i.e., the support of scalability and modularity. In this section, we provide a high level description of the architecture of Nirdizati . Then, we illustrate how this design supports scalability and modularity, and we terminate with a general description of how the different architectural components support capabilities (C1)-(C12).

At the high level, Nirdizati is composed of (i) a Front-end application, which allows users to select the prediction methods and to assess the goodness-of-fit of the built models, by means of a full/partial implementation of capabilities (C1) – (C12), and (ii) of a Back-end application responsible for the actual training and test (see Fig. 3). Our aim to offer Nirdizati as a service to the research community has triggered the decision to build it as a web application. In the following, we focus on the architectural design that we adopted in order to support modularity and scalability of the tool (see Fig. 4).

Scalability To enable scalability, Nirdizati Footnote 6 leverages the multiprocessing capabilities of nowadays processors, using a Primary/Replica setting. In this setting, the Primary - the server in Fig. 4 - creates and orchestrates the jobs and performs other small tasks, thus providing functionalities such as the Job Validation and Enqueueing, the Job Result retrieval, the Event Log Storage and Analysis, and the Explanation Generation. The Replica - the workers in Fig. 4 - instead, takes care of performing (most of) the jobs (Job Dequeueing, Execution and Result storage). They do it by exploiting code modules that implement the required functionalities in a modular setting (see Section 5 for a description of the modules).

When using such a setting, most of the processing time is taken for the pre-processing phases. These phases are repeated over and over for each different configuration. To overcome this time and resource consumption, Nirdizati is equipped with a cache system that avoids pre-processing the data multiple times. However, when using caching, in the Nirdizati architecture, two or more slaves performing the same pre-processing step may concurrently generate duplicate cache files. A database system has hence been introduced. Its schema and main characteristics are contained in Appendix A. The database is able to track the status of each object of the tool, i.e., the set of elements used in a given task, in a granular way. For instance, the object representing the task of splitting a log into training and test set is composed of a foreign key to the object representing the log, the training and the test set sizes, the type of ordering used for the split, the name of the split, and a unique key. On the one hand, storing each object of the tool in a table of the database allows for an easy retrieval of the required information. On the other hand, the capability to keep in memory only the database keys (instead of the objects themselves) results in a lighter footprint in memory. From the database, it is possible to retrieve the current jobs and their status, the available cached information, the previously trained models and the corresponding accuracy metrics. By relying on a stateful architecture and capitalizing the work carried out, Nirdizati is able to exploit multiprocessing and to ensure a scalable implementation able to support the concurrent usage of several users as shown in Section 8.

The capabilities of Nirdizati are provided via the interaction of multiple architectural elements. The direction of the arrows represents the flow of information. The user accesses each capability by interacting with the Front-end element. In turn, the Front-end element sends requests to the Server element via the APIs. The Server, the Database and the Workers provide the functionalities: the simple requests are handled by the Server, or by the Server and the Database, whereas the Job requests are handled by the Worker

Nirdizati follows a client-server architecture. The server exposes APIs to communicate with the client. When the server receives a new job, it puts it in a Queue. When a new job is available in the Queue, a worker takes care of the job. Workers, whose number can vary according to the needs, share access to the Queue, to the Cache, and to the Database

Modularity To ensure modularity, capabilities (C1)-(C12) are supported in Nirdizati by means of nine specific code modules: Event Log, Split, Label, Encode, Model Construction, Cache, Hyperparameter Optimization, Model Evaluation, and Explanation (see Fig. 3). The description of the single modules and the way they support the required capabilities is discussed in Section 5. Here, we briefly describe how this modular structure enables the provision of a flexible tool, where new or still missing PPM techniques can be easily inserted. Assume, for example, that a scientist, or a practitioner wants to extend Nirdizati with one of the encodings described in Tavares et al. (2023), still missing in the current version of the tool. The author of the new code only has to familiarize with the project (including reading the documentation), implement a compatible encoding function alongside the existing ones, update the enumerators governing the encoder module by providing the new encoder name, and add the new encoder code, declaring the new class as a subclass of the abstract encoder class. Finally, it is essential to provide new unit tests to verify that the new encoder is functioning as expected. The Django web framework used to manage the database ensures the automatic update of the modular database schema (see Appendix A for further details), thus making the process sustainable even without a dedicated API, or plug-in structure to automatically manage extensions, which is left for future work.

The architecture and the capabilities Table 2 describes how the different architectural elements of Fig. 4 are involved in the provision of capabilities (C1)–(C12). For the sake of readability, we have grouped capabilities that involve the same architectural elements. The presence of an “x” in a cell means that the architectural element of that row is used to provide the group of capabilities of that column. For instance, the provision of C1, C7, and C11 involves the Front-end, the API, the Server, the Database, and some Code Modules.

Not surprisingly, the Front-end, the API, the Server, the Database, and the Code Modules are involved in the provision of all capabilities. The first two groups of capabilities do not require any intensive computationFootnote 7 and are therefore handled directly by the Server, with the only exception of (C3) and (C12) using the Cache for optimization purposes. The third group, instead, is the one involved in the setting up, computation, and comparison of the different predictive models. Being time and resource expensive, the provision of these capabilities takes advantage of the Nirdizati Master/Replica architecture based on the usage of Cache and Workers.

5 Code modules

As described in Table 1, the Nirdizati code is involved in the provision of all capabilities (C1)-(C12). In fact, each of the nine code modules provide simple functionalities that compose the internals of the elements discussed in Section 4 and allows for the provision of the complex capabilities discussed in Section 3. Nonetheless, not all the nine specific code modules contribute to all functionalities. In the following, we briefly describe the different modules by highlighting their main characteristics and providing an illustration of how they support the required capabilities. The mapping between each code module and each capability is summarized in Table 3. The aim of the modules, and of the modular structure, is to be as general as possible, to offer different ways to implement the different steps of a PPM pipeline, and to support the addition of new methods in an easy manner. Nonetheless, in the implementation of Nirdizati , a series of specific methods and techniques were chosen along the years, e.g., to split event logs, encode the data, and build the models. The list of the specific state-of-the-art methods currently supported by Nirdizati is listed in Appendix B. Note that the list does not include all the state-of-the-art techniques available in the literature, but documents the ability of the tool to incorporate different techniques in an effective manner thus stimulating the possible addition of other techniques.

The Event Log module receives as input the data uploaded by the user, in the form of an event log in the XES format, and stores it in the Back-end, ready to be used. In order to help the user to establish which predictive model is the most likely to achieve the best performance, a description of the event log in terms of resources involved in the process, number of concurrent executions, and sparsity of the data is provided.

The Split module receives as input an event log and builds the training and the test set. These sets are subsets of the initial event log, and their definition influences the quality of the trained predictive models. To produce training and test set, the Split module needs to sort, filter, and split the event log. The sorting of the event log is useful for implementing different split strategies. The filtering of the event log allows for eliciting and correcting underrepresented variants, wrongly represented traces, malformed traces, biased traces, and, in general, for removing information that is not useful from the log. The actual splitting of the event log allows for obtaining the training and the test set.

The Label module receives as input an event log and returns the same log annotated with a labeling specified by the user. Typical labelings in PPM refer to categorical outcomes, numeric values, and next activities. The Label module of Nirdizati enables the automatic labeling of a log with all three types of predictions. In particular, for categorical outcomes, the module allows for (i) multiclass labels starting from categorical attributes, or from possible next activities, as well as (ii) binary labels derived by comparing numeri attributes, or trace durations with a user-specified threshold value. For numeric outcomes, the module allows for numeric labels based on numeric attributes, or trace durations.

The Encode module receives as input a labeled log, and returns its encoded version as a DataframeFootnote 8. To produce the Dataframe from a log, three different steps need to be tackled: (i) information extraction, (ii) feature encoding, and (iii) data encoding. The information extraction step extracts from an event log useful information for the encoding. In this step, the attributes of the event log related to the control-flow (i.e., the attribute holding the activity name), to the data flow (i.e., trace and event attributes), as well as to the resource-flow (i.e., the attribute related to the resource) are extracted. This mapping is useful in order to understand what kind of information may be taken into account in the actual encoding. The feature encoding step starts from this information and produces the set of features that will be used to represent each trace in the event log. This step takes also into consideration intercase features. The data encoding step starts from the log and the feature set and produces the Dataframe and the encoder object. The Dataframe is built to be easy to understand for a machine and allows for a smoother training. The operations performed to build a machine-understandable object include steps like the one-hot encoding of categorical features, and de-noise and normalization for the categorization of numeric features.

The Model Construction module receives the predictive model to be built as input from the user and returns an instantiated predictive model together with the specification of the hyperparameter space supported by that specific instantiation. There are three classes of predictive model learning algorithms supported by Nirdizati , i.e., (i) classification, (ii) regression, and (iii) timeseries based on Recurrent Neural Networks (see Appendix B for the specific algorithms available). These three classes allow for tackling the three classic types of predictions: (i) categorical outcomes, (ii) numerical values, and (iii) next activities, respectively. In turn, each class of learning algorithms can be easily further extended, thanks to the modular nature of the tool. Section 7.1 provides a description of the Front-end features of this module.

The Hyperparameter Optimization module receives as input the training Dataframe, an instantiated predictive model, and the predictive model hyperparameter space, and returns an optimized predictive model. While the values of hyperparameters can influence the performance of the predictive models in a relevant manner, their optimal values highly depend on the specific dataset under examination, thus making their setting rather burdensome. To support and automatize this onerous but important task, several hyperparameter optimization techniques have been developed in the literature (Bergstra & Bengio, 2012) and are included in the Hyperparameter Optimization module.

The Model Evaluation module receives in input the test Dataframe and the (hyper)optimized predictive model and returns the evaluation of the model using the selected metrics. There are two classes of metrics that can be used in Nirdizati : (i) execution time and (ii) accuracy metrics. Execution time metrics are concerned with understanding how fast the predictive model can be trained (or updated) and can return predictions. Accuracy metrics are, instead, concerned with understanding how good the predictions are in comparison with the real values contained in the test set. Being one of the distinctive features of Nirdizati , the Model Evaluation module provides a wide range of information for the comparative evaluation of different predictive models. In addition to comparative tables, Nirdizati also supports the display of bubble chart visualizations. Section 7.2 provides a description of the Front-end features of this module.

The Explanation module receives as input the test Dataframe and the trained predictive model and returns one of the three explanation classes discussed in Rizzi et al. (2022a). The three classes of explanation refer to three different levels of abstraction an explanation can refer to: (i) event, (ii) trace, and (iii) event log. At the event level, the impact of each feature (and of the corresponding values) on a single prediction returned by the predictive model for a specific prefix length is computed by using the selected feature explanation techniqueFootnote 9 and is shown to the user. At the trace level, the tool shows how the impact on the predictions of each feature (and of the corresponding values) evolves for different prefix lengths. Finally, at log level, the average value of the impacts on the predictions of each feature (and of the corresponding values) over all the traces of the event log is provided to the user. Section 7.3 provides an example of one of the features supported by this module.

The Cache module receives as input an object, such as an event log, a Dataframe, or a trained predictive model and stores it, stores the object and retrieves it when needed. More specifically, the Cache module is concerned with the efficient administration of the loaded and created objects. The objects that are the most suitable to be cached are the ones that require a long time to be computed and occupy a small amount of space on the disk. Nirdizati hence caches the loaded logs, the labeled Dataframes, and the trained predictive models. A loaded log is an event log that is transformed into an in-memory representation starting from a XES log provided by the user (and is then used for encoding). A labeled Dataframe is a log that has already been transformed into the Dataframe format. A trained predictive model is a predictive model that is ready to be used to make predictions, meaning that it has been trained, optimized and evaluated on a specified test set.

6 Implementation

Nirdizati is implemented as a Web application that follows the architecture described in Section 6, in order to be a well-structured, open, flexible and documented platform for PPM supporting the capabilities described in Section 3. We implemented the modules described in Section 5 in the back-end of the toolkit. In addition, we provide a User Interface (UI) that leverages the APIs made available by the back-end to allow the user to interact with the toolkit.

The Front-end features can be accessed via a Web browser that exposes the capabilities described in Section 3 through a graphical interface. The Web page is supported by React.js Footnote 10. Through the stateful component design of React.js, it is possible to load the UI and the state of the UI separately, thus allowing the user to browse the static content while waiting for the load of the dynamic content. After that the dynamic content has been loaded, the UI renders the information requested by the user without any lag due to the state being stored locally, thus avoiding further requests to the back-end. To support the continuous improvement of Nirdizati , we integrated the Google analytics anonymized tracking of the user flow through the pages of the UI in order to identify the most common flows that can be used for future improvements of the UI itself.

The Back-end modules described in Section 5 are implemented in PythonFootnote 11. The Django frameworkFootnote 12 has been used to expose the APIs, synchronize the database with the single code modules, and create the elements of the architecture. The event logs are handled through the pm4py library (Berti et al., 2019). The logs are then encoded using the Pandas Dataframe objectsFootnote 13. The Sklearn Footnote 14 init/fit/partial_fit/predict common signatures are used to create, build, update, and evaluate the predictive models. In this way, adding new Machine/Deep Learning algorithms to the available ones, either included in Sklearn, or taken from external libraries such as PyTorch or TensorFlow through a wrapper, is a simple procedure. The queue handling is implemented through the Redis libraryFootnote 15. The database manager is PostgresFootnote 16.

7 Using Nirdizati

In this section, we provide an overview of the UI of Nirdizati . For the sake of presentation, we focus on the features that characterize Nirdizati more than others, i.e., the ones supporting model construction, comparative evaluation, and prediction explanation. The reader interested in exploring all the Nirdizati features can do it either by assessing the tool at http://research.nirdizati.org/ or by following the step-by-step tutorial at https://bit.ly/tutorial_nirdizati.

7.1 Model construction

The Nirdizati Training page, accessible by clicking on the spanner icon of the navigation menu (red box 1 in Fig. 5), allows the user to configure the construction of a predictive model. We illustrate the features provided in this page using the example of a predictive model for a binary configuration task shown in Fig. 5Footnote 17.

The Nirdizati interface allows the user to select: the general Prediction method (red box A); the specific Classification method(s) (red box B); whether to cluster the traces and, in this case, which Clustering method(s) to use (red box C); the Encoding method(s) (red box D); whether to use padding for the encoding (red box E); the Prefix length(s)(red box F and red box G). Finally, the interface allows the user to select the labeling to be used (red box H) in terms of Label type, Attribute name, and Threshold, the type of hyperparameter optimization (here condensed for lack of space (red box I)), and to Submit the request (red box L).

7.2 Model evaluation

Clicking on the histogram icon of the navigation menu (red box 2 in Fig. 5), the user can open the Evaluation page. In this page, the user can select the predictive models to be evaluated and compared. For space reasons, we skip the model selection part of the page and focus on the comparative evaluation instruments, by assuming that the user is interested in comparing the models obtained by using two learning algorithms (Random Forest and XGBoost), two encodings (simple and complex index-based), and two prefix lengths (5 and 10), thus obtaining in \(2^3\) predictive models. The names of the models in table Classification results, column Task Identity (see red box A), in Fig. 6, provide a hint of the configuration parameters selected for building the models.

The results of the evaluation of the different models are reported in three different sections, i.e., Result, Prefix length and Bubble Chart. The Result section (red box A) reports the values scored by the different predictive models on the test set, using a wide range of metrics, appropriate for the specific prediction task. This section allows the user to compare the metrics, to dynamically re-rank the models depending on the results in specific columns, and also to export them in a tabular format. The Prefix length section (red box B) displays the variation of performance depending on the prefix length. In particular, the left-hand side diagram displays the trends of a specific metric (elapsed time in Fig. 6) for each predictive model across the chosen prefix lengths, while the right-hand side diagram displays the performance of selected models (in Fig. 6, all models have been selected), for a specific prefix (10 in Fig. 6). Larger polygons correspond to better models. Finally, the Bubble Chart section (red box C) displays four different bubble chart plots allowing the user to compare the models (identified by their Model ID) using different perspectives. In Fig. 6, the models are compared by focusing on the learning algorithms and encodings used, respectively. The plots report on the two axes the AUC and F1 metrics, bubbles that are closer to the top right corner correspond to better models for both metrics.

7.3 Explanation

Clicking on the verify icon of the navigation menu (red box 3 in Fig. 5), users can open the Explanation page from where they can select the predictive model used and the prediction to be explained (step omitted for space limitations). As illustrated in Section 5, Nirdizati supports explanations at the level of event, trace, and event log. Figure 7 provides an example of a trace level explanation. The plot shows the correlation between 3 features (Age=20, Weight=50 and Rehabilitation Prescription=false) related to an incomplete trace of a patient who has carried out a treatment for a broken bone and the corresponding prediction (the patient will recover soon from the fracture) for different prefix lengths. As we can see from the plot, at the beginning of the trace (prefix length \(\le 2\)), the age of the patient positively correlates with the patient’s fast recovery, while the absence of a Rehabilitation Prescription has a negative impact on this outcome. We can also note that the correlation of these two features with the prediction outcome varies considerably over time, while the trend of Weight=50 remains rather stable – it does not seem to have a strong impact on the prediction along the whole trace. A detailed illustration of all the plots provided in Nirdizati can be found in the tutorial or in Rizzi et al. (2022a). All the plots support the interaction with the user with hovering (to focus on an element) and clicking (to filter out unnecessary elements) behavior.

8 Assessment

The implementation of Nirdizati has been assessed through the use of reactive design patterns (Bonér et al., 2014) and load tests (Shafique & Labiche, 2015; Schieferdecker et al., 2005). The reactive design patterns aim at assessing the interactions among the architectural elements, while the load tests are designed to emulate the work of multiple users accessing the prototype in a concurrent manner.

Assessing the interaction among architectural elements Web applications, like Nirdizati , are often complex objects to design and evaluate. The Reactive Application Design Methodology (Bonér et al., 2014) was developed to offer numerous advantages to web applications, including enhanced responsiveness, scalability, resilience, and adaptability, ultimately contributing to the creation of more robust, flexible, and user-friendly applications. This methodology emphasizes responsiveness, ensuring that an application remains consistently responsive to user interactions, even under varying workloads and unpredictable conditions. The mechanism used by the Reactive Application Design Methodology to ensure the quality of the developed applications is that of reactive design patterns. These patterns enable the investigation of the interactions among the architectural elements of, often non trivial, web architectures.

We investigate the non-trivial interactions among the architectural elements in Nirdizati (illustrated in Fig. 3) by employing suitable reactive design patterns that concern: (i) fault tolerance/recovery, (ii) control flow (iii) replication, (iv) resource management, and (v) state management. As the description below will make clear, these reactive design patterns are tightly connected with the ability of Nirdizati to ensure responsiveness, scalability, resilience, and adaptability.

Fault Tolerance is ensured by delegating the predictive models’ training and testing to the Workers, which are completely separated from the rest of the toolkit. They are resilient towards code exceptions in the training/testing of the predictive models and are instructed to save the experienced exceptions in the database. The logs can be monitored through a Grafana Footnote 18 extension and, in case of unhandled exceptions, the Fault Recovery is granted by the re-spawn feature of the Docker Footnote 19 daemon. The re-spawn of the Worker element and its connection to the rest of the toolkit is enabled by the Queue element, which can dynamically track the existence of any number of Workers.

Concerning the Control Flow, the Queue element in combination with the Worker, the Server, and the Database elements implement the Pull pattern to give the Worker the time to produce the predictive model. The Server element is not tied to wait for the Worker, which, once the training is finished, publishes its results in the Database element.

The capability to handle heavy workloads in terms of the amount of Jobs to carry out is ensured through the Master/Replica Replication pattern. The Master role is covered by the Server element. On the one side, it creates new jobs for the Workers and allows the workers to be aware of the existence of such jobs by saving the job identifiers (IDs) in the Queue element; on the other side, it acts as an interface to the Front-end element exposing RESTful Footnote 20 APIs. The Replica role is covered by the instances of the Worker element. The Worker element instances have two states, idle and running: when the Worker is in the idle state, it is connected to the Queue element and waits for a new job ID to be published; when a new job ID is available, one Worker pops it from the Queue element, changes the Worker state into running and processes the job. The details regarding the jobs are stored in the Database element and are accessible through the job ID inside the back-end or through the Server element APIs.

Concerning the Resource Management, the Cache element handles the concurrent access to event logs and cached objects. The Cache element is the sole owner of every resource in the toolkit, and implements the Resource Loan pattern allowing those who need the resource to access it but not to modify it. The Cache element also implements the Resource Pool pattern: every time an element in the toolkit needs a resource, it invokes the Cache element to access it (and there is no other way to access a resource).

Finally, the State Management is ensured by the Dissemination of Information pattern that stores multiple representations of the same object, thus allowing for accessing the object with a lower burden.

Assessing the workload Load tests were designed to assess the capability of Nirdizati to support multiple users accessing the prototype in a concurrent manner. In order to perform significant load tests, we first identified different types of workflows corresponding to different types of user interactions with the tool. We then selected the three most resource-intensive ones that are the upload workflow, the job computation request workflow, and the results browsing workflow. The upload workflow involves the Server element, the Database element, and the main memory of the host machine. The job computation request workflow involves the Server element and the Database element. The results browsing workflow involves the Server element, the Database element, and the Worker element.

We assessed these three workflows by achieving a stable CPU usage above \(80\%\) and an error per second \(\le 5\%\), as suggested in Shafique and Labiche (2015) for stress and load testing. The stress test was performed using VegetaFootnote 21, while Nirdizati was hosted on a machine equipped with 4 CPUs and 16GB of RAM. Table 4 reports the results in terms of requests per second (rps) sustained by each workflow both at peak load and at sustained load. The experiments were done using the dataset originally provided for the BPI Challenge 2011 (3TU Data Center, 2011) containing the treatment history of patients diagnosed with cancer in a Dutch academic hospital. The log contains 1,140 traces and 149,730 events referring to 623 different activities. Each trace in this log records the events related to a particular patient. We have chosen this log as it is adequately structured not only in terms of number of traces, but also of events and activities. The hyperparameter optimization was performed using the hyperopt library with the TPE algorithm and 1000 iterations.Footnote 22 The tests show that the most computationally demanding workflow is the result browsing workflow. By assuming an average of 10-15 rps per user, we can conclude that the current version of Nirdizati can support the concurrent usage of about 40 users on similar data and machines.

Nirdizati was also used during the Process Mining Summer SchoolFootnote 23 to support the practical session of a lecture on Predictive Process Monitoring. It was used simultaneously by 32 students, on typical PPM pipelines, such as the one illustrated in Fig. 2, without showing any failure, or lack of performance. This can be considered a further validation of the tool as its features were tested with real world usersFootnote 24, with a workload similar to the 40 concurrent users mentioned above.

9 Limitations

One of the limitations of Nirdizati is the non-exhaustiveness of encodings, predictive models, explainable techniques and evaluation strategies collected in the tool. In fact, although the tool allows for different types of predictions and includes different encodings, learning algorithms, explainability techniques and event log splitting strategies, PPM is a rapidly evolving field. New encodings (Tavares et al., 2023) have been investigated and used in Process Mining and PPM approaches. Similarly, a number of new Deep Learning predictive models (Rama-Maneiro et al., 2023) and explainability approaches (Hundogan et al., 2023; Maita et al., 2023; Buliga et al., 2023) have been explored in recent years. This non-exhaustiveness is particularly evident in the types of evaluation strategies Nirdizati supports. Several issues have been indeed identified when evaluating PPM approaches for next activity prediction (Abb et al., 2023; Pfeiffer et al., 2022), as well as for other prediction types (Weytjens & Weerdt, 2021). Beyond classical approaches, new frameworks and strategies have been recently devised to address these issues (Stevens et al., 2022). Although Nirdizati currently does not include any of them, its modular nature mitigates such a threat and makes it suitable for extension with the most recent advances in the PPM field.

A second limitation of Nirdizati is the lack of a personal working and storage area with user accounts. This threat is partially mitigated by the possibility offered by Nirdizati to save models and export results. We plan to face this challenge in the future developments of Nirdizati .

10 Related work

The most well-known tools that implement PPM techniques are ProM, Apromore, IBM Process Mining Suite, and Camunda.

ProM (van Dongen et al., 2005) is a framework collecting a variety of independent plug-ins. Many of them support specific PPM prediction problems, i.e., they provide (i) numeric predictions (Polato et al., 2014; Folino et al., 2012); (ii) outcome-based predictions (Federici et al., 2015; Castellanos et al., 2006); and (iii) next activity predictions (Polato et al., 2018; de Leoni et al., 2016). Despite the aforementioned wide amount of plug-ins tackling single PPM challenges, the only plug-in in ProM implementing multiple complex capabilities, such as the ones described in this work, is the one in (Federici et al., 2015). This plugin, however, only tackles the outcome and the numeric prediction problems, with no support for a scalable and modular architecture such as the one at the basis of Nirdizati and no support for explanations.

Apromore (La Rosa et al., 2011) is an advanced Process Mining tool that allows for the storage, analysis, and re-use of large sets of process models. The tool is web-based and therefore allows for the easy integration of new plug-ins in a service-oriented manner. This tool aims both at allowing practitioners to deal with the challenges of process stakeholders, and at enabling researchers to develop and benchmark their own techniques with a strong emphasis on the separation of concerns. The only plug-in performing PPM related challenges in Apromore is the one presented in Verenich et al. (2018). This plug-in performs outcome-based prediction, time-based prediction, next activity prediction, and the computes performance metrics w.r.t. an event log. However, the amount of performance metrics available in this tool is limited; no help/indication on the pre-processing of the event log is given to the users, and there is no support for any explanation. Differently from Nirdizati , Apromore also supports the model deployment phase.

The IBM Process Mining Suite (Galanti et al., 2021) is a commercial software suite allowing for the discovery, conformance checking, monitoring, and simulation of processes. The suite is multilevel and provides business analysts with a digital twin of an organization (DTO). While this tool supports explanation and hyperparameter tuning, differently from Nirdizati , it mainly focuses on a single predictive technique (CatBoost), thus lacking the capabilities related to model evaluation and comparison.

Finally, Camunda Footnote 25 is an open-source workflow and decision automation platform. The work in Bartmann et al. (2021) presents a plugin that allows for training, optimizing, and using PPM models. While the plug-in supports all three types of predictions in PPM and the automatic hyperparameters optimization of the predictive models, it supports a limited amount of learning algorithms and a limited amount of evaluation metrics; moreover, no help/indication on the pre-processing of the event log is provided to the users and no support is given to explain the predictive models.

11 Conclusion

This paper presented the latest advancements of Nirdizati . Specifically, its back-end has been redesigned to provide a reliable and efficient framework that allows for an easy comparison of different state-of-the-art PPM approaches and the inclusion of a wide set of PPM techniques, by leveraging its modular architecture.

The recent stream of publications in the PPM field (Di Francescomarino & Ghidini, 2022) shows the need for tools able to support researchers and users in analyzing, comparing and selecting the techniques that are the most suitable for them. This need is also reflected in the growth of PPM plug-ins in general purpose Process Mining tools such as ProM (van Dongen et al., 2005) and Apromore (Verenich et al., 2018). Nirdizati is, instead, a completely dedicated tool for running a very rich set of PPM techniques and its latest advancements make it even more robust, scalable and usable.

We assess the current Technology Readiness Level of Nirdizati to be 5. This release offers indeed a well-defined structure of the software and code documentationFootnote 26; moreover, it is equipped with a very large test suite, and a Continuous Integration deployment pipeline, which supports the integration of all code changes into the main branch of a shared source code repository early and often, and the testing of each committed change in order to kick off a buildFootnote 27. In addition to the workload tests presented in Section 8, the tool has been extensively used and its features exercised on both simulated and real dataFootnote 28 We believe that all these reasons make Nirdizati a mature and useful instrument for the BPM community.

One of the primary future directions we envision for Nirdizati involves adding new encodings, learning techniques and, particularly, evaluation strategies. We believe that its modular nature would facilitate the seamless extension of the toolkit with the latest advances in the PPM field. Moreover, we would like to introduce personal accounts in order to enable users to have personal working and storage areas. Furthermore, the continuous stream of new techniques, coming also from other areas (see e.g., Giannoulidis and Gounaris 2023), will continue to suggest improvements and extensions of the tool.

Data Availability

No datasets were generated or analysed during the current study.

Code Availability

The code is available at https://github.com/nirdizati-research/.

Notes

Source code available at https://github.com/nirdizati-research/

This may change for the provision of explanations (C12), which are currently handled by the Server only because they are novel features of Nirdizati .

In the current version of Nirdizati , SHAP or LIME.

We show here the main features of the page leaving the rest to the tutorial at https://bit.ly/tutorial_nirdizati

The configuration is similar to the one used for the experiments presented in Rizzi et al. (2022b).

The students were encouraged to explore the tool’s behavior while performing their exercises.

For more information on the Continuous Integration paradigm, see https://about.gitlab.com/topics/ci-cd/benefits-continuous-integration/

The usage of Nirdizati is monitored via Google Analytics and the two years time span between the first prototype and the writing of this work recorded 14,637 sessions spending more than a minute in the web application (i.e., filtering out bounce traffic) out of 23,871 sessions in total.

References

3TU Data Center (2011). BPI Challenge 2011 Event Log. https://doi.org/10.4121/uuid:d9769f3d-0ab0-4fb8-803b-0d1120ffcf54

Abb, L., Pfeiffer, P., & Fettke, P., et al. (2023). A discussion on generalization in next-activity prediction. In Business process management workshops - BPM 2023 international workshops, Utrecht, The Netherlands, September 11-15, 2023, Revised Selected Papers, Lecture Notes in Business Information Processing (Vol. 492, pp. 18–3). Springer. https://doi.org/10.1007/978-3-031-50974-2_2

Alby, T. (ed.) (2023). Data science in practice (1st ed.). Chapman and Hall/CRC, https://doi.org/10.1201/9781003426363

Bartmann, N., Hill, S., & Corea, C., et al (2021). Applied predictive process monitoring and hyper parameter optimization in camunda. In Intelligent information systems - CAiSE Forum 2021, Proceedings, LNBIP (Vol. 424, pp. 129–13). Heidelberg: Springer. https://doi.org/10.1007/978-3-030-79108-7_15

Bergstra J, Bardenet R, & Bengio Y, et al (2011). Algorithms for hyper-parameter optimization. In Advances in neural information processing systems 24: 25th annual conference on neural information processing systems 2011, Proceedings (pp. 2546–2554). http://papers.nips.cc/paper/4443-algorithms-for-hyper-parameter-optimization

Bergstra, J., & Bengio, Y. (2012). Random search for hyper-parameter optimization. Journal of Machine Learning Research, 13, 281–305. https://doi.org/10.5555/2503308.2188395

Berti, A., van Zelst, S.J., & van der Aalst, W.M.P. (2019). Process mining for python (pm4py): Bridging the gap between process- and data science. CoRR arXiv:1905.06169

Bonér, J., Farley, D., & Kuhn, R., et al. (2014). The reactive manifesto. http://www.reactivemanifesto.org/

Buliga, A., Di Francescomarino, C., & Ghidini C, et al (2023). Counterfactuals and ways to build them: Evaluating approaches in predictive process monitoring. In Advanced information systems engineering - 35th international conference, CAiSE 2023, Zaragoza, Spain, June 12-16, 2023, Proceedings, Lecture Notes in Computer Science (Vol. 13901, pp. 558–574). Springer. https://doi.org/10.1007/978-3-031-34560-9_33

Calvanese, D., Kalayci, T.E., & Montali, M., et al. (2017). Ontology-based data access for extracting event logs from legacy data: The onprom tool and methodology. In Business information systems - 20th international conference, BIS 2017, Proceedings, LNBIP (Vol. 288, pp. 220–236). Heidelberg: Springer. https://doi.org/10.1007/978-3-319-59336-4_16

Castellanos, M., Salazar, N., Casati, F., et al. (2006). Predictive business operations management. IJCSE, 2(5/6), 292–301. https://doi.org/10.1504/IJCSE.2006.014772

de Leoni, M., van der Aalst, W. M. P., & Dees, M. (2016). A general process mining framework for correlating, predicting and clustering dynamic behavior based on event logs. Information Systems, 56, 235–25. https://doi.org/10.1016/j.is.2015.07.003

Di Francescomarino, C., & Ghidini, C. (2022). Predictive process monitoring. In Process Mining Handbook, LNBIP (Vol. 448, p. 320–346). Heidelberg: Springer. https://doi.org/10.1007/978-3-031-08848-3_10

Di Francescomarino, C., Dumas, M., Federici, M., et al. (2018). Genetic algorithms for hyperparameter optimization in predictive business process monitoring. Inf Syst, 74(Part), 67–8. https://doi.org/10.1016/j.is.2018.01.003

Di Francescomarino, C., Dumas, M., Maggi, F. M., et al. (2019). Clustering-based predictive process monitoring. IEEE Transactions on Services Computing, 12(6), 896–909. https://doi.org/10.1109/TSC.2016.2645153

El-Khawaga, G., Abu-Elkheir, M., & Reichert, M. (2022). XAI in the context of predictive process monitoring: An empirical analysis framework. Algorithms, 15(6), 199. https://doi.org/10.3390/A15060199

Federici, M., Rizzi, W., & Di Francescomarino, C., et al. (2015). A ProM operational support provider for predictive monitoring of business processes. In Proceedings of the BPM Demo Session 2015, CEUR Workshop Proceedings (Vol. 1418, pp 1–5). CEUR-WS.org, RWTH Aachen. http://ceur-ws.org/Vol-1418/paper1.pdf

Folino, F., Guarascio, M., & Pontieri, L. (2012). Discovering context-aware models for predicting business process performances. In On the move to meaningful internet systems: OTM 2012, confederated international conferences: CoopIS, DOA-SVI, and ODBASE 2012, Proceedings, Part I (pp. 287–304)https://doi.org/10.1007/978-3-642-33606-5_18

Galanti, R., de Leoni, M., & Marazzi, A., et al. (2021). Integration of an explainable predictive process monitoring system into ibm process mining suite (extended abstract). In ICPM 2021 Doctoral Consortium and Demo Track 2021, Proceedings, CEUR Workshop Proceedings (Vol. 3098, pp 53–54). CEUR-WS.org. https://ceur-ws.org/Vol-3098/demo_216.pdf

Giannoulidis, A., & Gounaris, A. (2023). A context-aware unsupervised predictive maintenance solution for fleet management. Journal of Intelligent Information System, 60(2), 521–547. https://doi.org/10.1007/S10844-022-00744-2

Hundogan, O., Lu, X., & Du, Y., et al. (2023). CREATED: generating viable counterfactual sequences for predictive process analytics. In Advanced information systems engineering - 35th international conference, CAiSE 2023, Zaragoza, Spain, June 12-16, 2023, Proceedings, Lecture Notes in Computer Science (Vol. 13901, pp. 541–557). Springer, https://doi.org/10.1007/978-3-031-34560-9_32

La Rosa, M., Reijers, H. A., van der Aalst, W. M. P., et al. (2011). APROMORE: An advanced process model repository. Expert Systems with Applications, 38(6), 7029–7040. https://doi.org/10.1016/j.eswa.2010.12.012

Leontjeva, A., Conforti, R., & Di Francescomarino, C., et al. (2015). Complex symbolic sequence encodings for predictive monitoring of business processes. In Business process management - 13th international conference, BPM 2015, Proceedings, LNCS (Vol. 9253, pp. 297–313). Heidelberg: Springer.https://doi.org/10.1007/978-3-319-23063-4_21

Lundberg, S.M., & Lee, S. (2017). A unified approach to interpreting model predictions. In Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017 (pp. 4765–4774). https://proceedings.neurips.cc/paper/2017/hash/8a20a8621978632d76c43dfd28b67767-Abstract.html

Luo, G. (2015). Mlbcd: A machine learning tool for big clinical data. Health Information Science and Systems, 3(1), 1–19. https://doi.org/10.1186/s13755-015-0011-0

Maita, A.R.C., Fantinato, M., & Peres, S.M., et al. (2023). Towards a business-oriented approach to visualization-supported interpretability of prediction results in process mining. In Proceedings of the 25th International Conference on Enterprise Information Systems, ICEIS 2023 (Vol. 1, pp. 395–406). Prague, Czech Republic: SciTePress. https://doi.org/10.5220/0011976000003467

Peeperkorn, J., & vanden Broucke S, Weerdt JD,. (2023). Can recurrent neural networks learn process model structure? Journal of Intelligent Information System, 61(1), 27–51. https://doi.org/10.1007/S10844-022-00765-X

Pfeiffer, P., Lahann, J., & Fettke, P. (2022). The label ambiguity problem in process prediction. In Business process management workshops - BPM 2022 International Workshops, Münster, Germany, September 11-16, 2022, Revised Selected Papers, Lecture Notes in Business Information Processing (Vol. 460, pp. 37–44). Springer https://doi.org/10.1007/978-3-031-25383-6_4

Polato, M., Sperduti, A., & Burattin, A., et al. (2014). Data-aware remaining time prediction of business process instances. In 2014 International Joint Conference on Neural Networks, IJCNN 2014 (pp. 816–823).https://doi.org/10.1109/IJCNN.2014.6889360

Polato, M., Sperduti, A., Burattin, A., et al. (2018). Time and activity sequence prediction of business process instances. Computing, 100(9), 1005–1031. https://doi.org/10.1007/s00607-018-0593-x

Rama-Maneiro, E., Vidal, J. C., & Lama, M. (2023). Deep learning for predictive business process monitoring: Review and benchmark. IEEE Transactions on Services Computing, 16(1), 739–756. https://doi.org/10.1109/TSC.2021.3139807

Ribeiro, M.T., Singh, S., & Guestrin, C. (2016). “why should I trust you?”: Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining (pp. 1135–1144). New York City: ACM, https://doi.org/10.1145/2939672.2939778

Rizzi, W., Comuzzi, M., & Di Francescomarino, C., et al. (2022a). Explainable predictive process monitoring: A user evaluation. https://doi.org/10.48550/arXiv.2202.07760

Rizzi, W., Di Francescomarino, C., Ghidini, C., et al. (2022b). How do I update my model? on the resilience of predictive process monitoring models to change. Knowledge and Information Systems, 64(5), 1385–1416. https://doi.org/10.1007/S10115-022-01666-9

Sani, M. F., Vazifehdoostirani, M., Park, G., et al. (2023). Performance-preserving event log sampling for predictive monitoring. Journal of Intelligent Information System, 61(1), 53–82. https://doi.org/10.1007/S10844-022-00775-9

Schieferdecker, I., Din, G., & Apostolidis, D. (2005). Distributed functional and load tests for web services. Int J Softw Tools Technol Transf, 7(4), 351–360. https://doi.org/10.1007/s10009-004-0165-6

Senderovich, A., Di Francescomarino, C., & Ghidini, C., et al (2017). Intra and inter-case features in predictive process monitoring: A tale of two dimensions. In Business process management - 15th international conference, BPM 2017, Proceedings, LNCS (Vol. 10445, pp. 306–323). Heidelberg: Springer, https://doi.org/10.1007/978-3-319-65000-5_18

Shafique, M., & Labiche, Y. (2015). A systematic review of state-based test tools. Int J Softw Tools Technol Transf, 17(1), 59–7. https://doi.org/10.1007/s10009-013-0291-0

Stevens, A., Smedt, J.D., & Peeperkorn, J., et al (2022). Assessing the robustness in predictive process monitoring through adversarial attacks. In 4th International Conference on Process Mining, ICPM 2022 (pp. 56–63). Bolzano, Italy: IEEE, https://doi.org/10.1109/ICPM57379.2022.9980753

Stierle, M., Brunk, J., & Weinzierl, S., et al (2021). Bringing light into the darkness - A systematic literature review on explainable predictive business process monitoring techniques. In 28th European conference on information systems - liberty, equality, and fraternity in a digitizing world, ECIS 2020. https://aisel.aisnet.org/ecis2021_rip/8

Tavares, G. M., Oyamada, R. S., Barbon, S., et al. (2023). Trace encoding in process mining: A survey and benchmarking. Engineering Applications of Artificial Intelligence, 126(Part D), 10702. https://doi.org/10.1016/J.ENGAPPAI.2023.107028

Tax, N., Verenich, I., & Rosa, M.L., et al (2017). Predictive business process monitoring with LSTM neural networks. In Advanced information systems engineering - 29th international conference, CAiSE 2017, Proceedings, LNCS (Vol. 10253, pp 477–492). Heidelberg: Springer. https://doi.org/10.1007/978-3-319-59536-8_30

Teinemaa, I., Dumas, M., La Rosa, M., et al. (2019). Outcome-oriented predictive process monitoring: Review and benchmark. ACM Trans Knowl Discov Data,13(2). https://doi.org/10.1145/3301300

Thornton, C., Hutter, F., & Hoos, H.H., et al. (2013). Auto-WEKA: Combined selection and hyperparameter optimization of classification algorithms. In Proc. of KDD-2013 (pp. 847–855). https://doi.org/10.1145/2487575.2487629