Abstract

The process parameters used for building a part utilizing the powder-bed fusion (PBF) additive manufacturing (AM) system have a direct influence on the quality—and therefore performance—of the final object. These parameters are commonly chosen based on experience or, in many cases, iteratively through experimentation. Discovering the optimal set of parameters via trial and error can be time-consuming and costly, as it often requires examining numerous permutations and combinations of parameters which commonly have complex interactions. However, machine learning (ML) methods can recommend suitable processing windows using models trained on data. They achieve this by efficiently identifying the optimal parameters through analyzing and recognizing patterns in data described by a multi-dimensional parameter space. We reviewed ML-based forward and inverse models that have been proposed to unlock the process–structure–property–performance relationships in both directions and assessed them in relation to data (quality, quantity, and diversity), ML method (mismatches and neglect of history), and model evaluation. To address the common shortcomings inherent in the published works, we propose strategies that embrace best practices. We point out the need for consistency in the reporting of details relevant to ML models and advocate for the development of relevant international standards. Significantly, our recommendations can be adopted for ML applications outside of AM where an optimum combination of process parameters (or other inputs) must be found with only a limited amount of training data.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Additive manufacturing (AM), also known as 3D printing, is a technology that provides distinct advantages over traditional manufacturing approaches. In this process, three-dimensional (3D) products are built incrementally by gradually adding thin layers of material based on computer-aided design (CAD) models (DebRoy et al., 2018). This unique printing procedure supports the production of complex shapes, without the need for costly tooling typically used in conventional manufacturing methods. The design freedom allows personalization by easily customizing the desired geometry characteristics (Kumar et al., 2021; Szymczyk-Ziółkowska et al., 2022; Tamayo et al., 2021). AM also decreases the requirements for assembly or the integration of multiple components through welding or nuts and bolts (adding weight), as it builds the final object in a single fabrication process. AM printing processes are categorized into seven groups according to the ASTM F42 Standard (Gibson et al., 2021). Among these, a particularly popular technique is powder-bed fusion (PBF), which includes laser powder-bed fusion (L-PBF) and electron beam powder-bed fusion (E-PBF). These processes employ a laser or electron beam, respectively, to melt specific areas of a thin layer of powder. When repeated, a 3D part is built-up layer by layer under computer control (Wang et al., 2020). PBF is highly versatile and can be utilized to make complex products from a wide range of materials, making it the focus of much research and industry attention.

Despite its several advantages, AM has remained a niche process due to the challenges involved in building complex geometries without defects. Multiple factors such as material properties and process parameters influence the part quality (Gao et al., 2015). Given the multidimensional parameter space and interactions between parameters, it is onerous to humanly identify the hidden relationships underpinning the AM process. While high-fidelity physical-based models can help identify some of the cause-and-effect relations via ‘what-if’ simulations, their deterministic nature does not allow the uncertainties that occur during the AM process to be incorporated easily, and they typically require significant computational resources (Wang et al., 2020). Additionally, it is challenging to consolidate the models for different AM phenomena occurring at varied scales into one unified cohesive structure. Under these circumstances, Machine learning (ML) techniques provide a pathway forward.

ML has been used in many industries, such as healthcare, automobile (e.g., self-driving cars), manufacturing, and marketing, to uncover relationships—often unexpected—between inputs and outputs, to solve challenging real-world problems (Larrañaga et al., 2018). It is a subset of Artificial Intelligence (AI) that uses data and algorithms to imitate human comprehension and learning. ML’s learning strategy comprises three main components: a decision process concerning the estimation of outputs based on the problem at hand (i.e., supervised classification vs regression) and the discovered patterns in input data (unsupervised pattern recognition); an error function calculation step that evaluates the model’s performance by comparing known and unknown samples; and finally model optimization, which adjusts the model’s weights to minimize the variance between known samples and model predictions. This process of evaluation and hyper-parameter optimization is repeated until the model reaches a minimum error value (Miclet et al., 2008). At this point, it is considered to have “converged” to an acceptable architecture.

Numerous studies have demonstrated the benefits of using ML for various AM applications (Jin et al., 2020; Wang et al., 2020), and these benefits are summarized in Fig. 1. Firstly, ML can be utilized in the design phase of an AM process to improve topology and material composite design (Chandrasekhar & Suresh, 2021; Fayyazifar et al., 2024; Han & Wei, 2022; Karimzadeh & Hamedi, 2022; Patel et al., 2022). Secondly, it can be employed for process parameter development (PPD) with a view to obtaining an ideal processing window that would ensure the built part is within specifications (Aoyagi et al., 2019; Tapia et al., 2018). These PPD ML applications in the form of “forward” and “inverse” models can also investigate the influence of process parameters on process signature, microstructure, property, performance, and build quality, and vice versa (as explained in detail in section “ML frameworks for the PPD problem: forward vs inverse models”). Moreover, ML can be deployed in tandem with in-situ monitoring where various sensors (e.g., infrared, acoustic, or optical devices) are used to collect data during the printing process, which can then be analyzed in real-time by an ML model to predict the ensuing events or identify the likelihood of any potential defects forming (Ogoke & Farimani, 2021; Ramani et al., 2022; Rezaeifar & Elbestawi, 2022). Data collected by sensors can also be used offline in training ML models for PPD applications to analyze relationships between parameters and microstructure/part quality/performance (Dharmadhikari et al., 2023; Lapointe et al., 2022; Park et al., 2022; Sah et al., 2022; Wang et al., 2022; Yeung et al., 2019; Zhan & Li, 2021) (the use of ML for PPD applications is the focus of this work and will be discussed in some detail below, in section “Process parameter development (PPD) in PBF: the role and requirements of ML”). Furthermore, ML techniques can help assess the quality of the final part by detecting various anomalies/defects (Fu et al., 2022; Taherkhani et al., 2022; Wang & Cheung, 2022). Other applications of ML in the AM domain in areas such as manufacturing planning and data security can be found elsewhere, e.g., (Mahmoud et al., 2021; Qin et al., 2022; Wang et al., 2013).

This article is organized as follows: in section “Background and knowledge gap“, the inverse and forward models are introduced, and the knowledge gap is explained. In section “Research questions and search strategy”, research questions, and the search strategy are presented. In section “Process parameter development (PPD) in PBF: the role and requirements of ML”, we introduce the process parameters relevant to PBF and discuss the potential benefits of utilizing ML techniques for PPD applications. In sections “Data-related issues: quality, quantity, and diversity, Choices of ML methods: mismatch and neglect of history”, and “Inadequate analysis of the model’s performance”, we identify shortcomings of the existing ML forward and inverse models under three main categories. We also propose improvement strategies that could assist in developing reliable and well-generalized ML models for PBF. In section “Shortcomings in the reporting protocols”, other minor limitations related to reviewed publications are discussed in detail. Section “Further guidelines on selecting the appropriate ML method” provides further guidelines for choosing the most appropriate ML method. Lastly, in section “Conclusions”, we summarize the study and outline our conclusions.

Background and knowledge gap

Use of ML for PPD applications

In the current paper, we focus on PPD aimed at determining the optimum processing window for a given part on a nominated AM machine to achieve the desired part quality in terms of prescribed product specifications. The development of a rigorous ML framework for PPD can significantly shorten the process development cycle by reducing the cost and time needed to build new custom shapes that are entirely different from historical prints and thus requiring unique combinations of process parameters.

The most recent review papers (Hashemi et al., 2022; Liu et al., 2023) divide ML approaches used to discover various links in the process–structure–property–performance (PSPP) relationship into three different categories. These are: mapping relationships between process parameters and: (1) structure (20, 32), (2) property and structure (PSP) (Costa et al., 2022; Sah et al., 2022), and (3) property and performance (Tapia et al., 2018). These relationships may be explained using Fig. 2, which exemplifies the PSPP relationship. For a given alloy composition, the processing route chosen to produce a part has the most influence in determining the microstructure and geometrical integrity of the part. This is because critical variables such as heat addition rates and heat removal rates, which affect the microstructure and build geometry, are governed by the processing method. In AM the above heat transfer rates, and therefore the resulting microstructures and build quality, vary based on their position on the part. This is unlike in traditional manufacturing routes where bulk solidification occurs, the part is built incrementally in AM, and solidification is highly localized. Observing the temperature and size characteristics of the highly transient microscopic melt pool is a convenient way to gauge the influence of the time-varying heat transfer rates in AM. These melt pool characteristics are widely regarded as a reliable means for correlating process parameters with spatially varying part microstructure and build quality and are referred to as “process signatures” (Fig. 2). The properties of the part are determined by the microstructures. The properties, in turn, underpin the performance of the part under service conditions.

ML forward models versus ML inverse models in the process–structure–property–performance (PSPP) continuum. ML F1, F2, F3, F4, and F5 refer to models where process parameters are the inputs and the process signature, the part’s microstructure/micro-anomalies, properties, build quality, and performance are the outputs, respectively. In contrast, ML I1, I2, I3, I4, and I5 show the inverse models in which the process signature, microstructure/micro-anomalies, part’s properties, build quality and performance are the inputs, and process parameters are predicted as outputs

ML frameworks for the PPD problem: forward vs inverse models

The relationships in the PSPP continuum may be developed in the forward direction where process parameters are the starting point. Equally, the linkages may be mapped in the reverse order where the process parameters are the outputs. The associated ML models are appropriately termed ‘Forward’ and ‘Inverse,’ respectively (see, Fig. 2). In Forward Models, the ML algorithm receives different process parameters as input and predicts/estimates various aspects of PBF, i.e., process signature, microstructure, property, build quality, and performance as output. The forward models are ideal for discovering the variables that exert the most influence; for example, identifying that the laser (or electron beam) parameters are a dominant factor in dictating the likelihood of porosity on an AM build (La Fé-Perdomo et al., 2022). However, forward models cannot be used for obtaining time-saving direct solutions for resolving critical issues associated with the process as they require a series of ‘what-if’ forward simulations to pinpoint the causes. In such situations, Inverse Models must be used. As their name indicates, this class of models are the opposite of the forward models. They work backward to predict/output optimum process parameter values given the desired process signature, microstructure, property, build quality, or performance (which become the inputs).

Knowledge gap

There is a limited number of articles in the open domain that focus on optimizing process parameters for PBF using experimental data. While most employed the forward strategy, a small fraction presented inverse models. In this paper, we first point out the shortcomings of existing forward and inverse models in the PBF domain through a critical review of the literature. Then, we propose improvements to address the drawbacks for developing a reliable and well-generalized ML forward and/or inverse model. To the best of our knowledge, we are the first to conduct a critical, systematic review of ML models in AM relating to PPD from a forward/inverse modelling standpoint and to articulate improvements. We followed the methodology of previously published systematic reviews, e.g., (Chukhrova & Johannssen, 2019), to search and categorize the relevant articles. Our contributions are outlined as follows:

-

Cataloguing and reviewing the ML forward and inverse models in the AM domain developed for PPD applications.

-

Investigating the data-related shortcomings, analyzing the ML methods used, and scrutinizing the models’ evaluation techniques.

-

Suggesting improvement strategies to address the above-mentioned drawbacks and proposing a rigorous and well-generalized ML model for PPD needs.

-

Highlighting the need to consider part geometry and represent it in the form of geometry descriptor in developing ML forward and inverse models.

Research questions and search strategy

We conducted a systematic literature review of the ML algorithms developed for PPD in both L-PBF and E-PBF processes. Our three research questions (RQs) are listed in Table 1 where the motivations are also summarized.

Our review process commenced with identifying papers published between January 2012 and June 2023 that established ML approaches in PBF processes. We conducted a comprehensive search using Google Scholar with the exact string using Boolean operators combining with potential inputs and outputs in forward and inverse models: ((“additive manufacturing” OR “3D printing” OR “powder bed fusion”) AND (“machine learning” OR “artificial intelligence” OR “deep learning” OR “data-centric model”) AND (“process parameters” OR “process map” OR “property” OR “performance” OR “porosity” OR “defect” OR “melt-pool”)). This search yielded 17,800 references sorted by relevance, allowing the search for potential primary sources to be concentrated on the initial pages of references across 85 pages. To restrict the studies for conducting a critical review in a reasonable length of time, we used the following exclusion criteria:

-

(1)

Articles not published in English.

-

(2)

Works concentrated on technologies other than ML for developing a PPD framework.

-

(3)

Studies focused on ML technologies for AM processes other than PBF.

-

(4)

Works solely concentrated on defect detection without dealing with process parameters.

-

(5)

Studies utilized ML solely for optimizing design parameters.

-

(6)

Approaches utilizing only simulation data and ML models developed using data captured from other published papers.

We further applied the following inclusion criteria to the articles that were selected:

-

(1)

Frameworks developed ML models but containing at least one shortcoming.

-

(2)

Articles applied a few improvement strategies to their proposed ML technique.

Based on the above conditions, 20 papers were retained. To ensure comprehensiveness, we expanded our search to include four digital libraries: ACM Digital Library, IEEE Xplore Digital Library, ScienceDirect, and SpringerLink, using our primary search terms. This secondary search resulted in 594, 599, 1146, and 715 references, respectively. After applying our criteria, and removing duplicates from Google Scholar’s results, an additional two papers were included, bringing the total to 22 papers for this study. Table 2 lists a summary of all the critically reviewed papers, categorized under forward and inverse models (as shown in Fig. 2), which were used in finding answers to our RQs. An in-depth analysis of the articles is provided in the Appendix.

It is not uncommon in “systematic literature” reviews to have a small number of publications as the core set. This is because the inclusion/exclusion criteria are designed to find the most relevant high-quality publications that offer the greatest value to the reader. In our study, while we identified 22 core references, the comprehensive nature of our discussion led us to incorporate several other supporting references, bringing the total number of references to over 188. This approach ensures that our analysis is both thorough and well-supported, providing a robust foundation for our findings.

Process parameter development (PPD) in PBF: the role and requirements of ML

Unlike in traditional manufacturing where only a handful of process parameters need to be tuned, a large set of process parameters (see, e.g., Fig. 1) are required to be adjusted in PBF before the start of a build. While providing flexibility and an increased degree of control, such a multi-dimensional parameter space nonetheless makes it challenging for human machine operators to arrive at the optimum values efficiently—leading to expensive trial and error. Additionally, the sets of parameters that give the same result in terms of part properties, for example, can be non-unique. That is to say, several different permutations and combinations of process parameters could potentially give the same final result on the part (Gunasegaram & Steinbach, 2021). Furthermore, human analysis may not detect all the complex interactions that could occur between various parameters. While some commercial PBF machines have in-built functionality for suggesting first-guess nominal values for controllable process parameters based on the CAD data, such features are not available on many machines. Even those with the capability can mostly accommodate only simple part geometries, so where more complicated geometries comprising advanced features (e.g., overhangs and/or thin walls) are involved, the machine-prompted values are unlikely to result in a high-quality part. Under these circumstances, the solution is to apply AI techniques (e.g., ML). Table 3 summarizes different ways in which AI can assist in the area of PPD.

Two different ML approaches (i.e., forward and inverse models) are available, as shown in Fig. 2 (and discussed briefly in section “ML frameworks for the PPD problem: forward vs inverse models”). ML F1 is a forward model that outputs predicted process signature given a certain combination of processing parameters and powder composition as inputs. Similarly, other forward models ML F2, ML F3, ML F4, and ML F5 frameworks accept two input variables each, i.e., process parameters and material composition, and predict microstructure, property, build quality, and performance, respectively. The most common type of model available in the open domain was ML F3, often used to predict part density. Density is known to be one of the main indicators of part quality, and can be determined as a function of porosity and/or surface roughness resulting from the process (Vock et al., 2019). A higher level of porosity results in a lower density of a part which is, in turn, more likely to develop fatigue related cracks during cyclic loading (Feng et al., 2021). Surface roughness can also affect the fatigue performance of a part, since a rougher surface may trigger premature failure (Fox et al., 2016). The variables used mostly as inputs in ML models were laser power, laser scanning speed, laser beam size, powder layer thickness, and hatch distance due to their greater influence on porosity creation in metal parts (Dilip et al., 2017; Kamath et al., 2014; Kumar et al., 2019; Yeung et al., 2019). It is noteworthy that porosity is the most significant defect forms on products built using the PBF technique (Mostafaei et al., 2022; Wang et al., 2020).

The inverse models including ML I1, ML I2, ML I3, ML I4, and ML I5 identify the specific processing parameters for a given powder composition. In inverse models, the inputs are the desirable values of process signature, microstructure, property, build quality, or performance. Unlike in the case of forward models where examples were found for all five types (Gaikwad et al., 2020; Hassanin et al., 2021; Le-Hong et al., 2023; Sah et al., 2022; Zhan & Li, 2021), only a handful of instances were detected for the inverse types ML I1 and ML I2 (Lapointe et al., 2022; Silbernagel et al., 2020). To date, no study appears to have been conducted to predict optimal process parameters based on desired properties and build quality (i.e., ML I3 and ML I4).

To increase their range of applicability, the ML models must be generalized, for example, they should be capable of parsing unknown part shape, its dimensions, etc. Additionally, a well-generalized ML model should support unique geometrical features on AM parts such as thick bulk regions, thin walls, overhangs, sharp corners, and thin gaps. Furthermore, on parts with a combination of such features, the ML should be able to identify the specific combination of process parameters for each geometry feature. For instance, if the objective is to obtain a higher quality surface finish on a given section of a part, the model should deliberately focus on a few influential parameters, including laser/electron-beam power, scan speed, overhang angles, scan strategy, and layer height to achieve the desired result (Oliveira et al., 2020). Thus, generalizability should be one of the requirements that must be satisfied in developing best practices for ML models. Additionally, the ML models must be accurate enough to predict the values of desired targets with minimal errors between the predictions and the actual values. Moreover, such a model must be reliable in the face of noisy data (e.g., noisy in-situ monitoring data in AM). It has been recently shown that the combination of variance tolerance factors (VTF) (Li & Barnard, 2023) and a Rashomon set of models (Li et al., 2023) can return universal rankings of variable and the ability to focus a model on specific inputs without compromising quality.

Another example of the utility of ML in PPD is when the same part requires an entirely different material. The same set of optimum process parameters cannot be used interchangeably in these cases even if the geometry remains the same because the material properties (e.g., thermal diffusivity, laser absorption percentage) vary considerably. The trial and error required in each instance can be cut short using an ML strategy. Similar ML PPD approaches can also be applied to multi-material printing.

Finally, it has been suggested that ML models can be used for PPD on-the-fly during a build process. Interrogatable ML models may be deployed in feedback and feedforward control of the AM machines to monitor the build process and regulate the parameters in real-time or near-real-time (Gunasegaram et al., 2021a, 2021b; Gunasegaram et al., 2021a, 2021b). In these cases, AI algorithms that obtain process intelligence from ML models can adjust the process parameters during the printing process based on the current state of the process and its trajectory.

Various data types available for ML models in PPD

Different types of data can be collected from various sensors, which monitor the printing process to develop forward and inverse ML models. In forward models, numerical data is a type for primary input of models (i.e., process parameters). Different data types can be used for outputs to represent process signature, build quality, microstructure, and property. For instance, the process signature can be numerical (e.g., melt-pool width and height), videos/images (e.g., melt-pool videos), and/or signals (e.g., acoustic, photodiode). While numerical data is commonly used to train a model which predicts build quality, microstructure, and property, images/videos related to the defect types (e.g., porosity/lack-of-fusion) can also be employed to develop models predicting microstructure and/or property. The same data types are utilized to train and test inverse models but in the opposite direction.

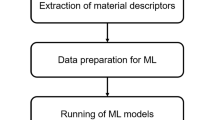

Requirements of an ideal ML model for use in real-world scenarios

Several steps are involved in deploying an ML model in a real-world setting (Hladka & Holub, 2015). These steps begin with understanding the problem and evaluating if it can be solved through ML approaches. Not all problems can. Assuming ML is suitable, structured, usable, and appropriate, data needs to be identified, collected in sufficient quantities, cleaned, and prepared (e.g., outlier removal, feature selection, feature engineering (Samadiani & Moameri, 2017)). In the next phase, the most appropriate ML technique must be selected based on various criteria (e.g., performance, interpretability) and the properties of the data set (e.g., linearity, size, dimensionality, imbalance, annotation). The data must be split and stratified to allow for training, testing, and evaluation. Once a model has been trained, its performance must be assessed to evaluate its performance with regard to accuracy, generalizability, efficiency and interpretability. Finally, the model predictions must be verified with domain experts before being implemented and deployed for practical use (Bhattacharya, 2021). Thus, from a data science point of view, ML models used for PPD applications in PBF must satisfy certain key preconditions to ensure they are robust enough to be relied upon in commercial environments.

Our review, however, found that several models in the public domain fell short of these requirements. Broadly, we identified the following limitations in datasets: poor quality, insufficient quantity, inadequate diversity, limited range, lack of balance, and highly non-reproducible. All these issues lead to model bias. Other shortcomings included the following: a lack of compatibility between data and the ML methods used, neglect of the time-dependency of information, and inadequacies in the evaluation metrics used for ML models. We also detected inadequate reporting of the details concerning experiments and/or ML models in many cases, which made it impossible for others to make independent judgments on the validity of the results presented in the studies. These weaknesses are considered further below under relevant headings in sections “Data-related issues: quality, quantity, and diversity–Shortcomings in the reporting protocols”. We conclude each discussion with suggestions for improvements provided from a data science perspective. Additionally, we provide further guidelines for selecting appropriate ML methods for PPD related applications in AM (section “Further guidelines on selecting the appropriate ML method”).

Data-related issues: quality, quantity, and diversity

Poor-quality and/or insufficient data

It is well known that the quality of an ML model is underpinned by the quality of data used for its training (Breck et al., 2019; Jain et al., 2020; Polyzotis et al., 2017). Higher quality data may be ensured through validation (Polyzotis et al., 2017), e.g., by checking its type, range, and format and/or by incorporating a separate autonomous validation module in intricate frameworks (Neudecker et al., 2020). Cleaning and engineering data is paramount and is particularly important when using ANNs which are more sensitive to data quality than tree-based models. This assists with the early detection of errors, model quality enhancement, and consequently streamlines the debugging process. Improving data quality can also allow a smaller quantity of data to be used for training (since outliers that can distort a model are avoided), facilitating the development of data-centric ML frameworks. This can be determined using learning curves that report convergence of the under-fitting and over-fitting via the training and cross-validation scores. These frameworks contrast with that of the model-centric approaches which rely on big data to obtain an accurate picture of the physical domain (Emmert-Streib & Dehmer, 2022).

In the AM literature, model-centric algorithms were used with insufficient amounts of data, resulting in non-generalized ML models that may not maintain a comparable performance on unseen data. The lack of big data in AM may be traced to the fact that the labor-intensive design phase and time-consuming trial-and-error stage make it challenging to generate vast amounts of information. This is particularly problematic when using ANNs which require more data than tree-based models. Furthermore, post-processing to obtain quality indicators, e.g., X-ray computed tomography (Carmignato et al., 2018; Tosi et al., 2022) and tensile testing (Uddin et al., 2018), are also labor-intensive. Additional manual effort is required in generating ground-truth labels, mandatory in the creation of supervised ML models, from these analyses. Moreover, the large variety in the types of PBF machines and their different technologies result in highly incompatible datasets which cannot be combined easily. Due to these difficulties, unsurprisingly, a comprehensive benchmark dataset is not available in the public domain. As a result, we found that many of the scholars relied on poor-quality ‘small data’ which is inappropriate for use in a model-centric framework.

Suggested improvements

While the acquisition of big data can improve the generalizability of ML models, this is a significant challenge in metal AM due to the expensive nature of the process and the multitude of variations present in the form of tunable process parameters and hardware. Therefore, the following suggestions are provided to address the issue.

Modularization: using multiple process parameters in a single build

One way of reducing the number of experiments is to divide a build geometry into several regions and use different sets of parameters for each of these sections. This will allow data to be generated for several different combinations of parameters on a single build. We found a study (Pandiyan et al., 2022) in the literature that applied this strategy on cubes (for developing a defect detection system). The authors initialized the print using the nominal process parameters to collect data from defect-free layers. Then, a second set of parameters was used to deliberately introduce some keyholes, starting from layer 31. Two further combinations of parameters were employed in layers 61–90 and 91–120 to force lack-of-fusion porosities and conduction mode, respectively. A transition dimension of 30 layers was selected as it was an ideal height at which the X-ray radiation could pass through the sample with no occlusion of build plate edges. It must be noted that the regions need not be divided solely based on layer numbers as above. For instance, Lapointe et al. (2022) chose their regions systematically depending on proximity to geometrical features such as edges, corners, and overhangs, on the premise that different heat transfer rates can be expected in these areas. This resulted in different sets of process parameters being used on the same layer depending on the position.

In summary, using different combinations of process parameters in a single build can reduce the costs of generating a big dataset. However, some trial and error and/or input from domain experts may be necessary in deciding how to divide a build geometry logically into regions. Also, the ML analyst must keep in mind that datasets generated in this way may not always be fully representative of the actual build conditions.

Data fusion: using simulations to reduce the number of experimental datasets

Another strategy for reducing the number of experimental datasets used is to utilize simulations to either generate additional datasets or constrain the ANNs using physics laws so that the ML algorithm no longer relies on a large volume of datasets to develop a robust understanding of the process (Raissi, 2018; Raissi et al., 2019). The need for big data is reduced because the simulated datasets could be considered inherently ‘clean,’ since validated physics models can be relied upon to not output out-of-range predictions. In the first case, when using simulations to add to the number of training datasets, the simulation derived datasets (white box data, since explainable) are combined with comparable experimental datasets (black box data) to create grey box data. Some are of the view that grey box data helps train a more robust model than purely experimentally derived big data because the boundaries for predictions are better defined in the former. In the second case, when using physics laws to constrain neural networks, the strategy followed generally involves minimizing not only the errors between data and predictions as in the traditional ML loss function, but also minimizing the residual relating to the solution of Partial Differential Equations (PDEs) that represent the governing physics (Xu et al., 2023). Therefore, the new effective loss function is a composite of ML-related loss and physics-related loss.

Data augmentation

The amount of data collected may be expanded using data augmentation techniques which manipulate the data systematically to modify them slightly (Van Dyk & Meng, 2001) as explained below with examples. These alternations preserve the original information but create extra data with additional perspectives for training, validation, and testing. The newly generated data points are appended to the original dataset for developing a well-generalized ML model. Incidentally, they are also beneficial for resolving the issues of imbalanced and non-diverse datasets (refer to sections “Imbalanced and non-reproducible datasets and Inadequate diversity and range in datasets” for more details). Various data augmentation methods are available for the different data types (e.g., image, signal). For instance, for an image, manipulation techniques such as geometric transformation, rotation, flipping, and contrast variation, can be applied to produce additional images (Shorten & Khoshgoftaar, 2019). Figure 3b–g illustrate six augmented examples of applying these techniques to two original near-infrared images (Fig. 3a) captured from two shapes, annular and star, of a print. The different perspectives provided by these manipulated images benefit the training in two ways: (1) these modified images, which may look vastly different from the original to a human, are less so to the ML algorithm–resulting in a multiplicative effect that simply increases the number of usable data points, and (2) expose the model to potential variations that might be found in the captured data (e.g., due to a camera lens no longer being clean, etc.)—making it more rigorous via the added awareness. Similarly, signal data can be augmented by adding noise, employing Fourier transforms, or using different sampling methods. In the AM literature, Zhang et al. (Zhang et al., 2023) utilized 26 image augmentation techniques to multiply 64 original images to 1664 data points for classifying part quality using an ML model in a Fused Deposition Modeling (FDM) process. In another study (Becker et al., 2020) that aimed to detect anomalies in an FDM process, three techniques including time stretching, pitch shifting, and amplifying with different parameters (see Fig. 4b–g) were used to generate augmented signals from the experimentally collected acoustic emission data.

a 3D solid models of two different objects and their original near-infrared images captured during the print using an Arcam Q10 machine. The following images illustrate data augmentation techniques employed: b changes in brightness and contrast of the image, c adding Gaussian blur to the image, d image rotation with arbitrary angle, e randomly image distortion, f random affine transform of the image, and g contrast adjustment of the image

a The audio signal recorded during the print in the study (Becker et al., 2020). The following signals depict data augmentation techniques employed: b time stretching (k = 1.5), c time stretching (k = 0.5), d Signal pitch shifting (k = −10), e signal pitch shifting (k = 10), f Signal amplifying (k = 0.1) and g signal amplifying (k = 0.5)

Data generation

Synthetic data. A dataset can be extended through adding synthetic data created by generative techniques (Nikolenko, 2021). Synthetic data refers to artificially generated data that mimics the characteristics and patterns of real-world data (Bolón-Canedo et al., 2013). Generative Adversarial Networks (GANs) (Goodfellow et al., 2020) are one of the most popular methods to produce various types of synthetic data in many domains. GANs include two main components, a generator, and a discriminator (see Fig. 5a). The former creates new synthetic/fake samples from the original input and the latter, a binary classification, distinguishes the generated fake data from the original. The generation process terminates when no more real data is available for the generative module and all data has been recognized by the discriminator model. In the AM literature, a GAN structure, namely conditional single-image GAN (ConSinGAN (Hinz et al., 2021)) was utilized to produce 35 synthetic images with five different defects—holes (due to local absence of powder), spattering, incandescence (occurred due to incorrectly cooling down of melt pool), horizontal flaws and vertical anomalies (caused by improper powder spreading) (Cannizzaro et al., 2021). Figure 5b shows a training image and the corresponding synthetic image in which three kinds of defects simultaneously appeared. This phenomenon (i.e., getting several types of anomalies on a single picture) rarely occurs in a real experiment. Thus, it is noted that synthetic data can be beneficial for improving the model’s generalizability as the information related to rare situations can also be provided. The researchers used the ML model trained using this synthetic data to successfully detect defects in near real-time between layers of a PBF build.

a A general scheme of a generative adversarial network (GAN), b the original image and the corresponding synthetic data created by the ConSinGAN model in (Cannizzaro et al., 2021). The holes, horizontal and vertical defects were produced artificially

Transfer learning

This technique addresses the dearth of data through transferring knowledge (e.g., patterns, relationships) from a comparable system to improve the performance of an ML model. In transfer learning, a model trained on a source problem, which typically includes many labeled data, is leveraged to learn a target scenario, which lacks adequate labeled data or contains a different data distribution (Weiss et al., 2016). Transfer learning accelerates the training process of the newly developed model by skipping the training from scratch (starts from a semi-optimized point). It can be particularly useful in proposing ML models in AM as it allows for exchanging knowledge between the models which were trained on the data from various 3D printers, different AM processes, and a wide range of materials. For instance, Pandiyan et al. (2022) transferred the knowledge obtained from a trained model, which predicted the quality of objects created by stainless steel, to their new ML algorithm that assessed the quality of bronze AM products.

Significantly, transfer learning may be applied to AM scenarios from non-AM domains. For instance, the initial layers in a DNN which may be tasked with identifying top-level features like the boundaries could receive pre-trained knowledge from other fields. Some AM researchers have achieved promising results when they used the knowledge gained from pre-trained models on unsimilar datasets. For instance, Fischer et al. (2022) used an Xception network (Chollet, 2017) trained on ImageNet dataset (Deng et al., 2009), which included 1000 classes of around 20,000 object categories (e.g., balloon, strawberry) (Deng et al., 2009). The network was tuned to detect eight different anomalies, such as balling and incomplete spreading. In another study (Scime & Beuth, 2018), the knowledge obtained from an AlexNet (Krizhevsky et al., 2017) trained on ImageNet dataset was used to initialize the weights of the Multi-scale Convolutional Neural Network (MsCNN) to recognize the spreading defects in an L-PBF system.

Data-centric ML models

As highlighted above, these ML algorithms (Bourhis et al., 2016; Whang et al., 2023) are capable of being trained on small amounts of data. Data-centric models focus on the characteristics of the data being used, rather than on the specific problem that the model is being applied to (Bourhis et al., 2016; Whang et al., 2023). In developing these models, the region of interest is restricted to a smaller area to achieve the foremost bias and variance trade-off (refer to section “Mismatches between data and ML methods” for more details) (Li et al., 2022; Yang et al., 2020). This solution helps create a model which characterizes the patterns between samples as comprehensively as possible with fewer data points. Some data-centric models which were developed for detecting anomalies (Zeiser et al., 2023) and optimizing the design (Chen et al., 2022) in PBF processes are available in the open domain. However, none of these related to PPD studies related to AM.

Imbalanced and non-reproducible datasets

As highlighted earlier, the dataset used for training an ML model should be balanced. However, real-world datasets, including in AM, are mostly imbalanced where the target class (e.g., outputs such as porosities and cracks) has an uneven distribution of samples. For example, in PBF processes, the occurrence of pores is likely to be higher than some rare defects such as cracks. This means, the model trained on the data may become more familiar with porosity than cracks, and thus might misclassify cracks as pores on occasions (as it doesn’t have enough examples of cracks to distinguish them from pores). Such results biased towards the majority target class become a more challenging issue particularly when our interest is in predicting one of the minority classes. Among the past works, we found that data in a vast majority was sufficiently balanced. However, there were some exceptions. For instance, Aoyagi et al. (2019) created a process map without paying attention to the imbalance in their data. Out of the eleven cylinders they built, the surface roughness of only three samples were classified as acceptable, which meant that an overwhelming majority were categorized as unacceptable. Therefore, the process map may not be fully reliable.

In addition to the issue of imbalance, the data obtained from AM processes is highly irreproducible because the process itself is notoriously non-repeatable. The stochastic nature of powder dynamics and melt-pool behavior cause these processes to perform differently from build to build even for the same geometry (Huang & Li, 2021). Thus, the quality of the final product may vary from one build to another. In other words, if the dataset contains data points from samples which were made only once, the ML predictions might not be reliable. Therefore, the datasets should be obtained from several instances of the same build process. Concerning the non-reproducible datasets, we found that most researchers (Aoyagi et al., 2019; Costa et al., 2022; Kwon et al., 2020; Lapointe et al., 2022; Nguyen et al., 2020; Park et al., 2022; Sah et al., 2022; Silbernagel et al., 2020; Singh et al., 2012; Tapia et al., 2016; Wang et al., 2022; Yeung et al., 2019) printed their geometries only once, limiting the reliability of their ML models. However, two recent studies addressed this issue somewhat. Firstly, Le-Hong et al. (2023) made a point of repeating six of their builds while Hassanin et al. (2021) did so for three of their builds. Such repetitions, although adding to the time and cost of experimentation, may be justified on the basis of the significant value they add to an ML model.

Suggested improvements

Sampling: Over-sampling and under-sampling to address imbalance

The over-sampling technique is used to balance the class distribution. It involves increasing the number of instances in the minority class by duplicating or creating new data points (Mohammed et al., 2020). One of the common over-sampling methods is Synthetic Minority Over-sampling Technique (SMOTE) (Chawla et al., 2002) which generates synthetic data for the minority class by interpolating between existing instances. Although GANs are also utilized for creating synthetic data (as explained in the fourth improvement of section “Poor-quality and/or insufficient data”), they are different from SMOTE. GANs consider overall class distribution to create new samples with the aim of including more diverse and realistic data, while SMOTE generates new data points based on local information to tackle the imbalance issue. Recently, hybrid GAN-based oversampling techniques have been developed to balance datasets (Shafqat & Byun, 2022; Zhu et al., 2022).

The under-sampling technique, on the other hand, refers to reducing the quantity of instances in the majority class to address imbalance. This assists with preventing the model from being biased towards the majority class. Tomek Link Removal (TLR) (Devi et al., 2017; Tomek, 1976), a common under-sampling method, reduces the probability of the model misclassification by recognizing and removing instances from the majority class that are close to instances from the minority class. It is worth mentioning that while TLR can be effective, it might not be suitable for all scenarios. However, in some cases, a combination of both SMOTE and Tomek link removal (de Rodrigues et al., 2023) can be useful to address imbalance issue.

Big data collection

Imbalance and repeatability issues are automatically resolved when sufficiently large datasets are collected. Please refer to the first improvement (expanded data collection) recommended in section “Inadequate diversity and range in datasets” immediately below. If, however, large datasets cannot be created, alternative solutions such as augmentation techniques, which were explained in the third solution of section “Inadequate diversity and range in datasets”, can be employed to tackle the problem of imbalanced datasets.

Inadequate diversity and range in datasets

ML models require a large diversity in datasets to learn a wide range of situations that may be encountered during the printing process. Lack of diversity introduces selection bias, so the creation of robust ML algorithms needs datasets that reflect the variety in the AM process. Accordingly, objects with relatively complex geometries representing wide-ranging shapes and dimensions must be printed in generating the training data. Additionally, different permutations and combinations of process parameters must be employed in building these objects. Hence, we focused our attention on how past works have addressed these needs.

In terms of geometries investigated, an overwhelming majority of models relied on primitive shapes such as cubes (Hassanin et al., 2021; Knaak et al., 2021; Kwon et al., 2020; Nguyen et al., 2020; Özel et al., 2020; Park et al., 2022; Sah et al., 2022; Tapia et al., 2016) and cylinders (Aoyagi et al., 2019; Kappes et al., 2018), or single-track experiments (Gaikwad et al., 2020; Le-Hong et al., 2023; Silbernagel et al., 2020; Tapia et al., 2018). The resulting datasets were used to train ML models that identified optimal process parameters or predicted part density, porosity, surface roughness, melt-pool properties (e.g., temperature, size), and melt-pool dynamics (i.e., minor axis, major axis, center, center-to-edge distance). Single tracks such as the ones shown in Fig. 6a were created in the first category of studies (Gaikwad et al., 2020; Lee et al., 2019; Le-Hong et al., 2023; Silbernagel et al., 2020; Tapia et al., 2018). Other approaches (Hassanin et al., 2021; Knaak et al., 2021; Kwon et al., 2020; Nguyen et al., 2020; Özel et al., 2020; Park et al., 2022; Sah et al., 2022; Tapia et al., 2016) constructed cubes with dimensions ranging from 4 mm per edge to 16 mm per edge (e.g., Fig. 6b). Costa et al. (Costa et al., 2022) and Kappes et al. (Liu et al., 2021) built cuboids (3D rectangles) with sizes 4 mm × 10 mm × 30 mm and cylinders of 2 mm diameter and 4 mm height, respectively. While the above works deployed L-PBF machines, Aoyagi et al. .(2019) used an E-PBF machine to build cylinders with a diameter of 6 mm and a height of 5 mm (Fig. 6c). Examining the sizes of the geometries mentioned above, it was clear that the maximum dimension of the objects printed did not exceed 30 mm. Since the commercial AM parts frequently exceed this size, it may be argued that the datasets used so far have not sufficiently addressed industry requirements.

PBF processes offer users a multitude of tunable process parameters. However, only a small quantity of parameter combinations was employed during the printing of experimental objects in the reported studies. For example, in many cases, only power and/or scan speed were varied (L-PBF: Kwon et al., 2020; Lapointe et al., 2022; Le-Hong et al., 2023; Tapia et al., 2018), E-PBF: (Aoyagi et al., 2019)), possibly because these generally had the strongest influence on process signatures like the melt pool size and/or temperature. A handful of studies included other parameters, e.g., Silbernagel et al. (2020) varied three parameters: scan speed, laser point distance and layer thickness, and Sah et al. (2022) and Singh et al. (2012) investigated laser power, scan velocity and hatch distance.

A common weakness in the AM experiments was that only a limited number of data points were generated in the published works for each parameter. For instance, Aoyagi et al. (2019) selected only eleven values each for two process parameters they studied. Similarly, Hassanin et al. (2021) used only five values each for four parameters to generate 26 combinations of process parameters. Therefore, limitations in the number of process parameters investigated and the modest range of values studied for each parameter restrict the usefulness of ML models trained using these datasets.

Some recent studies have begun to address the drawbacks found in previous works. For instance, Lapointe et al. (Lapointe et al., 2022) fabricated seven geometries (A, B, C, D, E, F, and G in Fig. 6d) containing canonical part features like thin walls and overhangs with multiple sizes (with a maximum dimension of 7.8 mm) and 190 different values of laser power and scan speed. Figure 6d illustrates the objects they constructed, including four cuboids with thin walls of four different heights, and three connected cuboids of different sizes which formed simple overhangs.

Other recent works have tackled the limitations in the number of process parameters used. For example, at least four process parameters with a wide range of values for each were employed by some researchers, e.g., (Hassanin et al., 2021; Nguyen et al., 2020; Park et al., 2022; Wang et al., 2022). Despite this, in all these works, only cubes were built, limiting the value of datasets. In another study, Costa et al. (2022) added a build-orientation parameter to the set that comprised five other processing parameters (i.e., laser power, scan speed, scan strategy, layer thickness, and hatch distance) to generate a more diverse dataset. Although simple cuboids were built, they were tilted to the vertical within the range of [0–90] degrees to simulate overhang regions of different angles (see Fig. 6f, where support structures were added for angles exceeding 45 degrees to the vertical).

In summary, ML models developed in most of the past works suffered from limitations in the datasets used for training. The sources of these drawbacks were the modest numbers of shapes and sizes of build geometries, the low quantity of process parameters varied, and the narrow ranges applied to each parameter that was changed. Various studies (98–102) have demonstrated that geometry has a significant influence on build temperature which, in turn, affects melt pool characteristics and even spatter formation. Similarly, data obtained from single-track experiments is unlikely to be fully representative of the multi-track builds typically required for commercial products. The limited diversity in the training datasets of ML models used for PPD causes two challenges for employing the techniques in industry settings: firstly, the ML model has not been trained on all possibilities. Secondly, the ML model has not been exposed to the complex interactions that commonly occur between various process parameters. Some guidance for AM community to tackle these issues are provided below.

Suggested improvements

Data collection from relatively complex designs and expanded range of process parameters

It is essential to design and build relatively complex geometries comprising various geometric features, such as bulk regions, thin walls, hollow features, overhangs, and sharp corners with different angles, and using a wide range of process parameters. This is because, the heat transfer rates which exert a strong influence on part quality, are affected by these geometrical features that are present in real-world parts. For instance, the melt pool stability is influenced by the local processing state (Chen et al., 2017; Clijsters et al., 2014), where unstable melt pools can lead to the development of internal pores or surface defects (Downing et al., 2021; Wang et al., 2013). Thus, the local geometry can affect thermal conduction for cooling the melt pool, for example, overhang regions are particularly vulnerable to overheating (Ashby et al., 2022). Similarly, the geometric features influence the occurrence of defects including their distribution and size (Ameta et al., 2015; Shrestha et al., 2019). Additional consideration must be paid to design parameters such as part size and orientation of the build. The influence can be local (i.e., surrounding a geometric feature) and/or global (i.e., on the whole 3D object). For example, Jones et al. (Jones et al., 2021) showed that the surface roughness and positional error are affected by the inclination angle, where areas with angles less than 30 degrees were susceptible to significant deformations, heightened surface roughness, and had a higher risk of component failure. It is important to highlight that geometric features can also cause large variabilities in process signature data recorded. This was demonstrated in a work (Eschner et al., 2020) that sought to relate process signatures (acoustic emissions) to part property (density). That study showed that adding new geometric features to their initial cubes (i.e., increasing the complexity level of geometries) changed the shape and length of acoustic data, and caused the ML model to fail the density classification of the builds. Three levels of complexities were constructed as shown in Fig. 7: (1) simple cube, (2) cube with small-angled region (i.e., arch), and (3) cube with a hollow circle and arch. An ANN was trained by acoustic data from building complexity 1 and tested on the data from fabrication of complexities 2 and 3. Although the proposed model was only tested on data from the cube region of each of these complexities, its performance worsened by approximately 19 and 27% on complexities 2 and 3, respectively. The authors concluded that the ANN should be trained on acoustic data from all complexities to maintain a steady performance.

Three different levels of geometry complexity were built by Eschner et al. (2020) to further assess their proposed ML model on density classification

Larger distribution of geometries can be collected and prepared with less effort by fabricating some combinations of simple designs, such as cubes and cylinders, and more complicated add-ons. These composite geometries can be formed by connecting the primitive shapes to other geometric features (e.g., overhang) which influence the melt-pool stability differently. The level of complexity is increased further when various permutations of every geometric feature are added to the previous shapes. Additionally, sample sizes should be varied to consist of roughly equal numbers of small, medium, and large shapes. A few extra builds of all geometries are necessary to repeat the print for confirming the process repeatability. It is recommended that complexity indices be used based on complexity metrics introduced by (Amor et al., 2019; Ben Amor et al., 2022; Joshi & Anand, 2017), to measure the geometry complexity score of an object. The methods developed in (Amor et al., 2019; Ben Amor et al., 2022) calculate the complexity for every layer of a geometry, based on five factors, such as the number of cavities, number of independent surfaces, and the shape complexity between two consecutive layers. By contrast, the technique proposed in (Joshi & Anand, 2017) computes the complexity score of a whole object as a function of thin gaps, sharp corners, support structure volume, height and volume of the part. The resulted score ranges from 0 to 1, where values closer to 1 indicating a greater level of difficulty in achieving successful printing. When the complexity index is used, one should fabricate geometries that are selected from different complexity scores.

It is essential to use a large range for each process parameter, preferably including different permutations and combinations. Employing uniformly distributed values between the lower and upper bounds of parameters is recommended. One must note that the allowed ranges for process parameters are usually machine dependent, so to prevent out-of-range predictions, the acceptable ranges for the combination of different adjustable parameters should be defined for the ML model. For instance, during their training process, Costa et al. (2022) removed the datasets relating to over-melted parts that were built with two pairs of laser power and scan speed, (60W, 2200 mm/s) and (400 W, 500 mm/s). The process parameters recommended by inverse ML models (e.g., ML I1 in Fig. 2) developed using the above recommendations are likely to be location-dependent based on the geometric features being built or the proximity to these features. Therefore, when programming process parameters for a build, this fact must be kept in mind. Similarly, when using the forward models (e.g., ML F1 in Fig. 2), the analyst may choose to provide location-dependent process parameters as inputs.

It must be noted that there are some pitfalls to tuning a large number of the process parameters. While the model’s understanding of the whole printing process will be much more accurate when a greater number of parameters is chosen, it can also make the model more complex and non-linear, as the ML algorithm will require a higher-dimensional search region to discover the various connections between the input and the desired outputs. Although a handful of approaches (Costa et al., 2022; Wang et al., 2022) successfully studied the relationships of five/six process parameters in their respective forward models, predicting large quantity of parameters in an inverse model is yet to be carried out in the PPD literature relating to AM.

In summary, we have recommended the use of diverse geometrical features and expanded ranges of process parameters to adequately capture the variations found in real-world AM builds into a well-generalized ML model and avoid evaluation bias. As this will result in a large dataset, we suggest that the following be taken into consideration: (1) defining the geometry descriptor as complementary information to provide the ML model with the knowledge related to each geometric feature (as described in the next paragraph), and (2) applying the improvements recommended previously in section “Poor-quality and/or insufficient data”.

Geometry descriptor as complementary feature

Including diverse data from various relatively complex geometries is very likely to create large variations within a class (e.g., classes based on various density levels of parts - for forward models). These variations force the ML model architecture to be more complex, resulting in potential delays in its convergence performance. Additionally, the probability of the model finding local minima rather than the global minimum increases, leading to unreliable predictions. This can be addressed by using complementary information which provides the model with extra knowledge of the various geometric features, allowing the model to learn more effectively by including these features in the relationships. It is therefore essential to define a unique geometry descriptor which informs the ML model about the shape and location of geometric features. (Note that this descriptor is entirely different from the complexity index discussed in the previous dot point). For instance, Lapointe et al. (2022) proposed a geometry descriptor including five shape-related properties to specify five geometric features, i.e., overhang, bulk region, edge, corner, and thin wall. Two features, viz. the distances to the nearest overhang region in both build direction z and x–y plane, determined the overhang area on the object. Similarly, distances to the nearest column/bulk area and to the nearest edge characterized the bulk region and edge, respectively. A fifth feature was also defined to help recognize corners and thin walls when it was combined with the distance to the nearest edge feature. In another study, five other features were extracted from the STL file of 3D objects to create a geometry descriptor (Baturynska, 2019). This included the number of triangles, surface and volume, part location (x, y, and z coordinates), and the object orientation. The geometry descriptors were employed to propose an ML model which successfully predicted mechanical properties in polymer AM products.

In summary, a geometry descriptor helps the ML model identify the regions which perform differently during the printing process (e.g., by distinguishing regions that vary in terms of heat transfer rates). Additionally, it helps to accelerate the model’s convergence due to a better recognition of the variations within a class using the extra knowledge provided. We recommend that the quantity and volume of support structures should also be included in the geometry descriptor as they heavily influence heat transfer rates, and therefore, part quality (Douard et al., 2018; Joshi & Anand, 2017).

Choices of ML methods: mismatch and neglect of history

Mismatches between data and ML methods

The type of ML algorithm chosen for training must be appropriate for the datasets that have been generated. For instance, if the data was limited in its diversity, a traditional method (e.g., support vector machine (SVM) or K-nearest neighbor (KNN)) may be considered in place of a more advanced artificial neural network (ANN) which is better suited to creating more complex, generalized models. The complexity of a model is subjective and can be characterized by different factors, such as the quantity of features incorporated in a predictive model, the nature of the model itself (e.g., linear or non-linear, parametric or non-parametric), and algorithmic learning complexity. One of the popular ways to measure model complexity is by determining the number of trainable parameters which are derived by the model’s structural hyperparameters. These structural hyperparameters, which determine the architecture of the ML algorithms, are defined before a model is trained and remain unchanged. By contrast, model’s trainable parameters (the weights associated with structural hyperparameters) are tuned during training based on the learnt relationships between inputs and outputs.

We investigated the sophistication levels in the complexity of the models found in the published AM literature. In doing so, we pursued two approaches to accomplish our analysis on complexity: “1:10 rule” (Baum & Haussler, 1988; Haykin, 2009) and “model’s performance consistency” (Alwosheel et al., 2018). The 1:10 rule specifies that the minimum size of training data should be around 10 times the trainable parameters (i.e., number of weights) in the ANNs (Abu-Mostafa et al., 2012). A recent study (Alwosheel et al., 2018) even suggested a minimum sample size of 50 times the number of weights in ANNs to ensure creating a well-generalized model. We found several studies in metal AM related research that failed to obey the 1:10 rule. For instance, roughly 11,400 trainable parameters existed in the ANN (3-330-28-29-2) developed by (Singh et al., 2012) while the training data points (99 samples) 0.8% of the trainable parameters. Similarly, the number of training data (26 samples) that was fed to a deep neural network (DNN) (3–50–50–50–50–2) was a mere 0.325% times the trainable parameters (approximately 8000) (Hassanin et al., 2021). In a fraction of the studies (Nguyen et al., 2020; Sah et al., 2022; Wang et al., 2022) which developed ANN/DNNs the structural hyperparameters were not reported, making it impossible for us to calculate the trainable parameters, and then evaluate them based on the 1:10 rule.

Secondly, we observed that some models (Costa et al., 2022; Sah et al., 2022) performed differently (e.g., in terms of accuracy) between training and validation/test datasets. This difference may be attributed to a mismatch between the method chosen and the data, resulting in over-fitting (i.e., fitting to the noise, not the signal). The training aim of every ML model is to learn the underlying data generating process (DGP), which, in our case, relates to the mechanisms of the AM process. A successful approximation of DGP leads to a generalized model which maintains a consistent performance on the training data and the future, yet unseen, data generated by the same DGP. By contrast, an unnecessarily complex model for the underlying DGP may fail to preserve such generality, and thus performance consistency. For instance, an ANN that was developed by Sah et al. (2022) to predict density by measuring porosity defects showed high accuracy during training but failed in achieving the similar result on a new sample; even with a previously known geometry and the same range of process parameters. This performance inconsistency showed that the trained model, which included two to five hidden layers with 50 neurons per layer, was unnecessarily complex compared with the underlying DGP of available data, resulting in extreme levels of over-fitting and lack of generalizability. All these works demonstrate that a good trade-off between data and the complexity of selected ML algorithms is necessary for deployment in complex industrial or research settings.

Pleasantly, recent works have begun to address the issues surrounding mismatch. For instance, Lapointe et al. (2022) followed the 1:10 rule and conducted many trial computations to arrive at the architecture of their DNN by adjusting structural hyperparameters for matching the trainable parameters with the quantity of data. They used a dataset containing around 500 K photodiode signals to train many DNNs with different numbers of hidden layers and neurons. Accordingly, the number of trainable parameters varied from 2.4 K to 6.3 M. They found that the test error quickly reduced when the number of parameters reached about 10 K (data was 50 times the parameters). Increasing the parameters to 1 M maintained the MSE error almost steady in a range of 0.040 and 0.062. The results obtained from testing the model on two unseen complex geometries confirmed a consistent performance between the training and the new data, which meant the developed model was well generalized.

To summarize, we used two criteria, the 1:10 rule and performance consistency, to show that the complexity of some existing ML algorithms was unnecessary high compared with the underlying data. We noticed that many AM related studies did apply the 1:10 rule; similarly, in some works, inconsistency in performance between training and validation/test datasets was observed.

Suggested improvements

To obtain a good match between data and method, the ML method may be chosen based on the trade-off between bias and variance (Glaze et al., 2018). High bias in a regressor/classifier can prevent the algorithm from learning all relevant relationships between input and output (under-fitting), while high variance can cause the model to overfit due to sensitivity to small variations that may exist in training data. Consequently, high bias/low variance methods such as Naive Bayes and logistic regression can achieve better results with fewer data instances. On the other hand, low bias/high variance techniques such as ANN/DNNs and RF may outperform these when larger datasets are available (Knox, 2018; Yu et al., 2006). It must be noted here that some high variance algorithms such as SVM and SVR are generally unsuitable for use with big data since their requirement of memory for their Kernel matrices will increase quadratically with data samples (Duan & Keerthi, 2005; Wang & Hu, 2005).

To maintain a comparable performance of ML models between training and validation data, using a good representation of underlying DGP in the training dataset assists the ML model learn the hidden patterns and relationships effectively. To this end, it is essential to accurately divide the data into three sets for training, validation, and testing. For instance, K-fold cross-validation (Arlot & Celisse, 2010) is one of the data division methods which divides the data into K subsets and the model is trained and tested K times, using a different subset as the test set each time. This is very useful in maximizing the use of the available data for both training and validating and reducing the bias/variance issues when the amount of data is limited. Several studies (Aoyagi et al., 2019; Costa et al., 2022; Gaikwad et al., 2020; Gu et al., 2023; Kappes et al., 2018; Park et al., 2022; Tapia et al., 2018) have employed this technique to train their forward and inverse models developed for PPD.

Additionally, a better representation of the underlying DGP can be accomplished by augmenting physical insights to the data using Physics-Informed Machine Learning (PIML). The use of physics-based ‘clean’ data, which is noise-free, can improve the robustness and accuracy of an ML model. For instance, the accuracy of an ML F3 type model (Fig. 2) developed by Wang et al. (2022) was improved by augmenting data with physics-based features. They added physical information of powder melting (i.e., peak temperature of molten pool and Marangoni flow) to two sets of input data: (1) four process parameters, and (2) 40-dimensional features of process parameters in which the value of every feature was computed using various mathematical functions (e.g., \({x}^{1/2}, {x}^{3},\mathit{log}(1+x))\). The results demonstrated that integrating physical information to the original data and the dimensionally augmented parameters could improve the accuracy of four different ML models (ANN, Bayesian network, SVM, and RF) in predicting surface roughness and density. In short, the underlying DGP should be well represented by the data and corresponding features to create a robust and accurate ML model which maintains constant performance in the real world.

In addition to the above suggestions, the shortcomings identified above may be addressed by paying closer attention to the choice of the ML algorithm. In section “Further guidelines on selecting the appropriate ML method”, we discuss six criteria that assist with selecting the most appropriate algorithm.

Limited attention paid to history

The layer-by-layer nature of 3D printing may lead to defects that can extend from one layer to the next, potentially resulting in the part being rejected. In addition, defects such as keyholes may move from one layer to the next when thermal conditions dictate. To address such flaws, the layer-wise information must be analyzed by ML methods and remembered in order to better manage the build. For example, if conditions that could initiate defects in subsequent layers are detected on the current layer, an ML model connected to a real-time (or near real-time) feedback control system should be able to recommend adjustments in process parameters so that such defects are avoided in future layers. Even when not used in a feedback control setup, the ability to correlate historical process events to future deleterious effects is of exceptional value as evasive action may be commenced by the operator (or the process terminated). We found that an overwhelming majority of approaches did not consider the information which described interconnections or time-varying dependencies between layers. For instance, studies (Aoyagi et al., 2019; Costa et al., 2022; Gockel et al., 2019; Le-Hong et al., 2023; Nguyen et al., 2020; Park et al., 2022; Sah et al., 2022; Silbernagel et al., 2020; Singh et al., 2012; Tapia et al., 2016, 2018; Wang et al., 2022) recorded the initial set of parameters, final part quality, and process signatures, but did not connect the latter with the layer information. As an example, while Kwon et al. (2020) recorded much information in the form of melt-pool videos (time-series data) with a 2500 fps from layer 50 to layer 99 for each sample, they only used a fraction of the frames taken (several layers apart) to train their DNN model. It was thus impossible for the ML model to learn the trends between subsequent layers. In summary, the AM community needs to move towards processing layer-wise data in their ML algorithms to provide complementary information, which can enable effective real-time adaptation when building complex geometries.

Suggested improvement—layer-by-layer optimization

Analyzing both spatial and temporal dependencies are crucial to understanding the overall build process. While temporal dependencies capture the influence of past layers on current and subsequent layers, spatial dependencies account for the relationship between different zones of the printed volume, including within a single layer and across multiple layers.

To analyze temporal dependencies, ML algorithms developed for time-series analysis which incorporate memory units have great potential for improving PPD approaches for PBF systems. Various regression methods (e.g., Seasonal Autoregressive Integrated Moving Average (SARIMA)) and DNN algorithms (e.g., Spiking Neural Networks, Recurrent Neural Networks-based models, and transformers) (Vaswani et al., 2017) can be employed to investigate the dependency between layers in forward and inverse models.

Seasonal autoregressive integrated moving average (SARIMA) (Ebhuoma et al., 2018; Zhang et al., 2011) algorithm is used to predict future values according to historical dependencies. SARIMA combines autoregressive (AR), differencing (I), and moving average (MA) components to capture both the autocorrelation and seasonality (i.e., patterns that repeat at fixed intervals) present in a time series. This technique can be useful to process layer-wise data in AM as it captures the temporal dependencies in the data (e.g., the influence of the prior layers on subsequent layers) and considers the impact of past prediction errors on the processing of current observation.

Spiking neural networks (SNNs) are a type of ANN, where neurons exchange information discontinuously via spikes (Ponulak & Kasinski, 2011). Unlike ANNs which transmit information at the end of each propagation cycle, SNNs only generate spikes when a particular event occurs (e.g., detection of a pore) to pass information to other synapses. In addition to neuronal and synaptic status, SNNs incorporate time into their algorithm by modeling the process as time series when they associate the value 1 to timeslots where the event occurred and 0 to the rest of the process. Since these events are sparse, this class of models noticeably reduces the required computational time for training an accurate model. As the AM is a layer-by-layer procedure with sparse faults in each layer, SNNs are ideal for efficient modelling of the relationships between process parameters and structure/property (e.g., ML F2 and ML F3 in Fig. 2).