Abstract

Twitter data has been widely used by researchers across various social and computer science disciplines. A common aim when working with Twitter data is the construction of a random sample of users from a given country. However, while several methods have been proposed in the literature, their comparative performance is mostly unexplored. In this paper, we implement four common methods to create a random sample of Twitter users in the US: 1% Stream, Bounding Box, Location Query, and Language Query. Then, we compare these methods according to their tweet- and user-level metrics as well as their accuracy in estimating the US population. Our results show that, compared to the other three methods, users collected by the 1% Stream method tend to have more tweets, tweets per day, followers, and friends, and fewer likes; their accounts are younger; and more of them are male. Moreover, the 1% Stream method achieves the minimum error in estimating the US population. However, it is time-consuming, cannot be used for past time frames, and is not suitable when user engagement is part of the study. In situations where these three drawbacks are important, our results support the Bounding Box method as the second-best method.

1 Introduction

Twitter data have been widely used by researchers across various social and computer sciences disciplines (King et al. 2017). One of the key challenges in working with Twitter data is to obtain a random sample of users from a country (Kim et al. 2018). The goal is usually to get a platform or population-representative sample of users (Wang et al. 2019). The sample is then used for public opinion research, for experimental research, or for training machine learning algorithms. For example, random samples of Twitter users have been used for estimating public opinion (Barberá et al. 2019; Alizadeh et al. 2019; Alizadeh and Cioffi-Revilla 2014), studying the diffusion of misinformation (Shao et al. 2018), studying conspiracy theories (Batzdorfer et al. 2022), evaluating the performance of large language models for text annotation tasks (Alizadeh et al. 2023), measuring the influence of information operations (Barrie and Siegel 2021), and developing supervised models for detecting inauthentic activities (Alizadeh et al. 2020). However, there are at least two significant challenges in obtaining a random sample of users from a country: (1) while several methods have been proposed in the literature, it is not clear which one is the best, and (2) the extent to which these random samples are actually representative of the population is questionable.

There are at least four popular methods to construct a random sample of Twitter users for a specific country. First, at the moment of writing, Twitter provides 1% of all tweets worldwide in real time through its free Streaming API. One can collect this stream for a specific period of time, optionally filtering for the language or country of interest, obtain a list of users who posted tweets, and then sample from them. Second, one can use Twitter’s Search API to query for tweets in a language of interest over a period of time; a given language matches different countries to different degrees. Third, one can directly query for a country of interest using the Search API. Fourth, the Twitter API allows queries based on the bounding box coordinates enclosing a specific country, and researchers have used it to get a random sample of a country’s Twitter users (e.g. Barrie and Siegel 2021).

The extent to which these four methods produce similar results, and which one produces a more representative sample of a population, is mostly unexplored. Although Twitter shut off free access to the API in February 2023, many researchers have archived a wealth of data and are still publishing novel research using Twitter data (e.g. Truong et al. 2024; Mosleh and Rand 2024). Moreover, in compliance with the EU’s Digital Services Act, Twitter is now accepting researcher API access applications. In this paper, we compare these methods with respect to fourteen metrics at the level of tweets (e.g. distribution of the number of tweets per day), users (e.g. distribution of age and gender), and population. For the population-level metrics, our goal is to investigate which of the four Twitter sampling methods provides the best data for creating a nationally representative sample of users. To this end, we follow the approach proposed in Wang et al. (2019): we create a representative sample from each of the four Twitter sampling methods and use each sample separately to estimate the population of the US from Twitter data.

In the following text, we review the theoretical background and related work to highlight the need and importance of comparing various existing Twitter sampling methods. Next, we discuss our methodology to collect Twitter users, exclude non-active, non-individual, and bot-like accounts, infer the age, gender, and location, create nationally-representative samples from them, and compare them according to various evaluation metrics, which we devised from carefully reviewing the literature.

The results show that the 1% Stream sampling method produces the best population-representative sample and exhibits different characteristics compared to the other three sampling methods. The results further underscore the Bounding Box sampling method as the best replacement in situations where the 1% Stream method is not feasible or suitable. Our results illuminate the positive and negative characteristics of each sampling method and help researchers choose the one that best suits their research goals and designs. By identifying the best sampling methods, our results also pave the way for conducting more accurate social listening studies and building more accurate machine learning models.

2 Related work

Generally speaking, there are two types of methodological approaches to collecting social media data, including Twitter: (1) keyword-based approach, and (2) sample-based approach. In the keyword-based approach, researchers create a list of hashtags or keywords and collect all matched tweets over a period of time. Although this approach is popular due to its ease of automation, it suffers from some shortcomings (see Kim et al. 2018 for a discussion). The most crucial drawback of the keyword-based data collection is that most researchers often pick keywords in ad hoc ways that are far from optimal and usually biased (see King et al. 2017; Munger et al. 2022 for potential solutions). Other researchers take the sample-based approach, which is the focus of this study, and try to sample desired tweets or users.

From the tweet sampling perspective, since Twitter has yet to be transparent about how its data sampling is performed, early research on obtaining data from Twitter focused on understanding its underlying mechanisms. Comparing the limited Streaming API with the unlimited but costly Firehose API, Morstatter et al. (2013) tried to answer whether data obtained through Twitter’s sampled Streaming API is a sufficient representation of activity on Twitter as a whole. They found that for larger numbers of matched tweets, the Streaming API’s coverage is reduced, but its ability to estimate the top hashtags is comparable to the Firehose. Interestingly, the results showed that the Streaming API returns almost the complete set of geotagged tweets despite sampling.

One of the critical questions about the Twitter Streaming API is whether it provides similar results to that of multiple simultaneous API requests from different connections. Joseph et al. (2014) compared samples of tweets collected using the Streaming API that tracked the same set of keywords at the same time. Their results showed that, on average, over 96% of the tweets in various samples are the same. In practice, this means that an infinite number of Streaming API samples are required to collect most of the tweets containing a particular popular keyword (Joseph et al. 2014). In another work, Tufekci (2014) proposed a framework to address potential biases in tweet collection and how to mitigate them.

More recently, Kim et al. (2018) compared simple daily random sampling with constructed weekly sampling. Their results underscored simple daily random sampling as the efficient way to obtain a representative sample of tweets for a specific period of time. In another important study, Pfeffer et al. (2018) showed that the Streaming API can be deliberately manipulated by adversarial actors due to the nature of Twitter’s sampling mechanism. Their results further showed that technical artifacts of the Streaming API can skew tweet samples and therefore those samples should not be regarded as random.

In a separate investigation, researchers reverse-engineered the sampling mechanism used by Twitter’s Sample API. The sample was based on the timestamp at which tweets arrived at Twitter’s servers: any tweet arriving between 657 and 666 ms of a second was included in the 1% Sample API (Pfeffer et al. 2018).
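The timestamp rule described above can be illustrated with a minimal sketch. It assumes that tweet IDs follow the Snowflake layout, in which the bits above the lowest 22 encode a millisecond timestamp relative to the epoch 1288834974657, and it treats the 657 to 666 ms window as inclusive at both ends. The function names are hypothetical and are not taken from the cited work.

```python
# Assumption: tweet IDs are Snowflake IDs whose upper bits hold a
# millisecond timestamp offset from this epoch.
TWITTER_EPOCH_MS = 1288834974657


def arrival_millisecond(tweet_id: int) -> int:
    """Return the millisecond-of-second (0-999) encoded in a Snowflake ID."""
    timestamp_ms = (tweet_id >> 22) + TWITTER_EPOCH_MS
    return timestamp_ms % 1000


def in_sample_window(tweet_id: int, lo: int = 657, hi: int = 666) -> bool:
    """Check whether the ID's millisecond falls in the sampled window.

    The inclusive bounds are an assumption; ten of every thousand
    milliseconds corresponds to a 1% share.
    """
    return lo <= arrival_millisecond(tweet_id) <= hi
```

Under these assumptions, an ID built from a timestamp ending in 660 ms passes the check, while one ending in 100 ms does not.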

In De Choudhury et al. (2010), researchers explored the impact of attribute and topology-based sampling strategies on the discovery of information diffusion in Twitter. The study analyzed several widely-adopted sampling methods that selected nodes based on attributes and topology, and developed metrics based on user activity, topology, and temporal characteristics to evaluate the sample’s quality. The results showed that incorporating both network topology and user-contextual attributes significantly improved the estimation of information diffusion by 15–20%.

Another study highlighted the lack of common standards for data collection and sampling in the emerging field of digital media and social interactions. The paper focused on Twitter and compared the networks of communication reconstructed using different sampling strategies. It concluded that a more careful account of data quality and bias, and the creation of standards that facilitate the comparability of findings, would benefit this emerging area of research (González-Bailón et al. 2014).

In another work, Wang et al. (2015b) compared the representativeness of two Twitter data samples obtained from the Twitter stream API, Spritzer and Gardenhose, with a more complete Twitter dataset. The study found that both sample datasets capture the daily and hourly activity patterns of Twitter users and provide representative samples of the public tweets, but tend to overestimate the proportion of low-frequency users.

A detailed analysis of the effects of Twitter data sampling on measurement and modeling studies across different timescales and subjects was presented in Wu et al. (2020). It validated the accuracy of Twitter rate limit messages in approximating the volume of missing tweets and identified significant temporal and structural variations in the sampling rates across different scales and entities. They also suggested the use of the Bernoulli process with a uniform rate for counting statistics and provided effective methods for estimating ground-truth statistics.

Hino and Fahey (2019) addressed the challenges researchers face in accessing representative and high-quality data from social media platforms like Twitter. The authors proposed a methodology for creating a cost-effective and accessible archive of Twitter data through population sampling, resulting in a highly representative database. The study demonstrated the high degree of representativeness achieved by comparing the sample data with the ground truth of Twitter’s full data feed, making it suitable for post-hoc analyses and enabling researchers to refine their keyword searches and collection strategies. Overall, this approach provided an alternative way for researchers with limited resources to access social media data.

In terms of comparing expert sampling and random sampling, Zafar et al. (2015) explored the advantages and disadvantages of the two methods. The study found that expert sampling offers a number of advantages over random sampling, including richer information content, trustworthiness, and timely capture of important news and events. However, random sampling preserves the statistical properties of the entire data set and automatically adapts to the growth and changes of the network, while expert sampling does not. The authors suggested that both random and expert sampling techniques would be needed in the future, and called for equal focus on expert sampling of social network data.

From the Twitter user sampling perspective, the most important issue that researchers have tried to tackle is the problem of sampling bias. Indeed, much of the extant literature on sampling users from Twitter points out sampling biases in election prediction studies and the necessity to control for them (see Jungherr et al. 2012 for a discussion and Gayo-Avello 2013 for an early review). More recently, Yang et al. (2022) focused on the issue of inauthentic accounts that could skew the behavior of voters. They proposed a method to identify potential voters on Twitter and compared their behavior with various samples of American Twitter users. The results showed that users sampled from the Streaming API are more active and conservative compared to the potential voters and randomly selected users. They further showed that the users in the Streaming API sample tend to exhibit more inauthentic behaviors, engage in more bot-like activities, and share more links to low-credibility sources.

Although the problem of sampling bias in Twitter has been recognized in many works, none of the papers we discussed above addressed the issue, due to the lack of a valid methodology to do so. In a seminal work, Wang et al. (2019) proposed a method that combines demographic inference with post-stratification to make social media samples more representative of a population. First, they created a multimodal deep neural network classifier for joint identification of age, gender, and non-individual accounts. Second, they proposed a multilevel logistic regression approach to correct for sampling biases. The proposed debiasing approach estimates inclusion probabilities of users from various demographic groups from inferred joint populations and ground-truth population histograms. They further showed that their fully debiased sample outperforms a baseline and marginally debiased samples in the prediction task of estimating European regions’ population from Twitter data.

Creating a random sample of Twitter users from a country or geographical region has been cited in some of the research discussed above (e.g. Pfeffer et al. 2018; Yang et al. 2022). However, no study pointed out the fact that there are several methods for creating such a sample from Twitter users. For example, one can use Streaming API to collect tweets published in a certain country or language and then randomly sample from the list of tweets’ authors, or just simply use the Search API and query for country or language or both. We identified four such Twitter sampling methods in the literature (plus a fifth one which is not feasible anymore). The extent to which these random (or near-random) sampling methods produce similar results or are more representative of a population is unexplored. In this paper, we attempt to provide answers to the following research questions:

- RQ1: Do different methods for creating a random sample of Twitter users from a country produce similar results in terms of the tweet- and user-level metrics?

- RQ2: Do different methods for creating a random sample of Twitter users from a country produce a representative sample of the population? If not, which method provides a more representative sample of the population?

To answer these research questions, we collect US Twitter data using four widely-used Twitter user sampling methods for one month and compare the results according to multiple tweet- (e.g. distribution of tweets), user- (e.g. distribution of age and gender), and population-level evaluation metrics. For our population-level metrics, following Wang et al. (2019), we use five different prediction errors for estimating the population of the US from Twitter data. The goal of the population-level metrics is to explore which sampling and debiasing methods provide the minimum prediction error and are thus more representative of the population.

3 Methodology

3.1 Sampling methods

We use four different Twitter sampling methods (Table 1) that are widely used in the literature to create a random sample of Twitter users in a country. They include (1) 1% Stream, in which we use the Twitter Streaming API to get the 1% stream of tweets and filter for the languages or country of interest; (2) Country Query (also referred to as Location Query), in which we query the Twitter Search API for the country and get all matching tweets; (3) Language Query, in which we query the Twitter Search API, in the same way as in Country Query, for languages that are related to the country of interest; and (4) Bounding Box, in which we divide a country into multiple small bounding boxes and get all tweets within them through the Twitter Search API. There is also a fifth sampling method, in which one generates random Twitter user IDs, checks whether they exist on Twitter, and, if they do, filters for the country or language of interest (Barberá et al. 2019). However, this method is no longer feasible due to technical changes on Twitter (see Footnote 1).

3.2 Data

We used Twitter’s V2 API to collect tweets over a month (i.e. from 2022-09-07 to 2022-10-08). As a first method, we used the sampled Streaming API. This is the simplest method and returns 1% of all tweets during the listening period, but it also generates the most noise. For the second method, we used the Twitter Search API and collected tweets posted in the United States by setting the filter argument to place_country:US. For the third method, we used the same endpoint as the second one but filtered tweets based on English language and US country by setting the filter argument to lang:en and place_country:US. As for the fourth method, we used the bounding boxes available in the Twitter Search API, which are defined by the coordinates of a specific area. The bounding boxes are limited in size (25 miles in height and width) and form (rectangular). To mitigate these limitations, we implemented a grid of small boxes plotted over the US, with points spaced 0.3\(^{\circ }\) apart in longitude and latitude. We also included points along the borders of the country by shifting the points diagonally up and down by one unit. This resulted in a comprehensive approximation of the United States, represented by 9,541 bounding boxes. In summary, our research scope focuses on Twitter users in the United States, and we applied query parameters in all four methods only to narrow our user selection to this context. It is important to note that we did not use any specific keywords or hashtags that could potentially introduce bias towards any particular topic.
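The grid construction described above can be sketched roughly as follows. This is a simplified illustration, not the procedure actually used: it tiles a rectangular extent with 0.3-degree cells and omits the diagonal shifting of border points. The extent in the usage note and the function names are hypothetical, and the query string assumes the `bounding_box:[west south east north]` operator of the v2 search endpoints. A 0.3-degree step in latitude is roughly 21 miles, which stays within the 25-mile limit mentioned in the text.

```python
import math


def grid_boxes(west, south, east, north, step=0.3):
    """Tile a lon/lat rectangle with boxes of side `step` degrees.

    Returns (west, south, east, north) tuples. Integer indices are used
    instead of repeated float addition to avoid accumulating drift.
    """
    n_cols = math.ceil(round((east - west) / step, 9))
    n_rows = math.ceil(round((north - south) / step, 9))
    boxes = []
    for i in range(n_rows):
        for j in range(n_cols):
            w = west + j * step
            s = south + i * step
            boxes.append((
                round(w, 4),
                round(s, 4),
                round(min(w + step, east), 4),   # clip the last column
                round(min(s + step, north), 4),  # clip the last row
            ))
    return boxes


def to_query(box):
    """Format one box as a search operator (assumed operator syntax)."""
    w, s, e, n = box
    return f"bounding_box:[{w} {s} {e} {n}]"
```

For example, `grid_boxes(-100.0, 30.0, -99.4, 30.6)` yields four boxes covering a 0.6 by 0.6 degree area; covering a whole country additionally requires discarding cells that fall outside its borders.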

3.3 User pre-processing

Following the data collection, the next step involves the pre-processing of accounts. Typically, when we generate a random sample of Twitter users from a specific country, our goal is to obtain a sample consisting of authentic individuals rather than organizations or malicious accounts like bots. This is important as the inclusion of such accounts could introduce bias into our sample (Yang et al. 2022). To achieve this, we selected a random sample of 30K users from each dataset associated with each of the four Twitter sampling methods. This equalizes the dataset sizes and facilitates a more accurate comparison of changes in data volume after applying each pre-processing filter. From the initial sample of 30K randomly selected users, we applied several filters to exclude specific categories, including bots [identified using botometer (Yang et al. 2022)], verified accounts, protected accounts, low-activity accounts (those with fewer than 100 tweets), recently created accounts (less than 9 months old), and suspended accounts (as detailed in Table 2). Additionally, we eliminated accounts whose bios contain keywords such as "journalist," "magazine," "member," "organization," "mayor," "actress," etc., as outlined in Table 2. This step ensures that our sample comprises regular individuals rather than celebrities or organizational accounts. Lastly, we excluded users whose tweet language is not English and those whose tweet coordinates did not correspond to locations in the United States.
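As a rough sketch, the account filters listed above could be expressed as a single predicate. The `Account` fields, the bot-score threshold of 0.5, and the approximation of nine months as 270 days are assumptions made for illustration; the keyword tuple contains only the examples quoted in the text, not the full list from Table 2.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Only the example keywords quoted in the text (the full list is in Table 2).
BIO_KEYWORDS = ("journalist", "magazine", "member", "organization", "mayor", "actress")


@dataclass
class Account:
    tweet_count: int
    created_at: datetime
    verified: bool
    protected: bool
    suspended: bool
    bot_score: float  # hypothetical score in [0, 1] from a bot detector
    bio: str


def keep_account(acc: Account, now: datetime, bot_threshold: float = 0.5) -> bool:
    """Return True if the account passes every pre-processing filter."""
    if acc.verified or acc.protected or acc.suspended:
        return False
    if acc.tweet_count < 100:  # low-activity accounts
        return False
    if now - acc.created_at < timedelta(days=270):  # assumed ~9 months
        return False
    if acc.bot_score >= bot_threshold:  # assumed cut-off
        return False
    bio = acc.bio.lower()
    # Plain substring match: "member" would also match "remember".
    return not any(keyword in bio for keyword in BIO_KEYWORDS)
```

Applying such a predicate one filter at a time, rather than all at once, is what allows per-filter removal counts to be tabulated.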

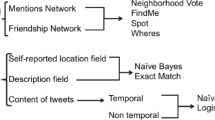

3.4 Inferring users’ demographics

We utilized the M3 model (Wang et al. 2019), which is a multimodal deep learning model, to predict the gender and age of Twitter users. This model functions across 32 languages and relies solely on users’ profile details, including their screen_name, user_name, bio, and profile picture. The M3 model offers two modes, and we employed the ’full’ model, which is more accurate and incorporates the profile image. In addition to gender and age, the model also discerns whether a Twitter account belongs to an organization. Consequently, we excluded organizational accounts from our dataset. Regarding location, we followed established research practices by utilizing self-reported user location or information from their close connections to estimate the users’ locations at the state level (Barberá et al. 2019).

3.5 Creating representative population estimates

At this point, we have inferred all the necessary features, enabling us to create our sample. We selected the valid users that passed the previous filters, resulting in a final sample of 10K users for each method. From this point forward, we use these 10K-user samples for our analysis. Previous research has demonstrated that when demographic information is available, and proper statistical adjustments like re-weighting and post-stratification are applied, non-representative polls can still yield accurate population estimates (Wang et al. 2015a). The primary demographic characteristics that survey analysts focus on to address non-representativeness are age, gender, and location (Wang et al. 2019). We follow the approach introduced in Wang et al. (2019) to learn inclusion probabilities based on users’ demographics. This method uses multilevel regression techniques to estimate the likelihood of an individual with specific demographics being present on a particular platform. It does this by considering inferred joint population counts and ground-truth population data. To implement this approach, we require gender, age, and geographic location information for each Twitter user, along with ground-truth data about the population. In the case of the United States, we rely on census data as our ground truth (see Footnote 2).
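To make the idea of inclusion probabilities concrete, the following sketch uses a deliberately simplified stand-in: the raw ratio of Twitter users to census residents in each (state, age, gender) cell, with post-stratification weights as the inverse. This is not the multilevel regression described above, which pools information across cells; the function names and the cell encoding are hypothetical.

```python
from collections import Counter


def inclusion_probabilities(twitter_cells, census_counts):
    """Naive per-cell inclusion probability: Twitter users / census residents.

    twitter_cells: iterable of (state, age_group, gender), one per user.
    census_counts: dict mapping the same cell tuples to population counts.
    """
    observed = Counter(twitter_cells)
    return {
        cell: observed[cell] / population
        for cell, population in census_counts.items()
        if population > 0
    }


def poststratification_weights(probabilities):
    """Inverse-probability weights; cells with no Twitter users get no weight."""
    return {cell: 1.0 / p for cell, p in probabilities.items() if p > 0}
```

Cells without any observed users have no usable weight under this naive estimator, which is one reason a regression-based approach is attractive when the data are sparse.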

3.6 Evaluation metrics

We compare the results of each method for creating a random sample of a country’s Twitter users according to three categories of metrics: (1) tweet-level (Table 3), (2) user-level (Table 3), and (3) population-level metrics (Table 4). Tweet-level metrics include the total number of tweets generated by each sampling method, the average number of tweets collected from each account, and the share of English tweets. User-level metrics comprise the total number of unique users; the distribution of tweet counts per account, considering the correlation between tweet count and account age; the distribution of the average daily tweet count, calculated by dividing the tweet count by the account’s age in days; the distribution of the number of likes received by the last tweet of each user; the distribution of account creation dates; the distribution of the number of followers and friends of each user; and the distribution of age and gender among users.

For the population-level metric, our objective is to determine which of the four Twitter sampling methods is most effective for generating a nationally representative sample of users. To achieve this, we adopt a test outlined in Wang et al. (2019) and employ the representative samples detailed in Sect. 3.4 (Inferring users’ demographics) to estimate the overall population of the United States based on Twitter data. In essence, we conduct a regression analysis that relates the actual population sizes of various areas within the United States (such as states, divisions, or regions) to the number of American Twitter users from different age and gender groups in those specific locations. This analysis helps us assess the representativeness of the Twitter data for estimating the US population.

In more detail, we compare five distinct models that rely on different data sources and operate under different assumptions. The first model (N \(\sim \) M) serves as the baseline and uses solely the total population count obtained from the census data along with Twitter user data, without applying any debiasing coefficients. The subsequent three models are grounded on the assumption of homogeneous inclusion probabilities: The second model (N \(\sim \sum _g\) M(g)) uses only gender-specific marginal counts. The third model (N \(\sim \sum _a\) M(a)) uses only age-specific marginal counts. The fourth model (N \(\sim \sum _{a,g}\) M(a, g)) employs the joint distribution inferred from Twitter data alongside the total population counts from the census data. Finally, the fifth model (log N(a, g) \(\sim \) log M(a, g) + a + g) leverages the joint distribution inferred from both Twitter data and census data. For each of these five prediction tasks, we assess performance using the mean absolute percentage error (MAPE), calculated as specified in Eq. 1. In this equation, \({\hat{N}}_i\) represents the predicted population size, \(N_i\) is the actual population size, and the summation is performed over all geographical units of interest, such as states, regions, or divisions in the United States.
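Eq. 1 is not reproduced in this text. Under the standard definition of MAPE, and using the symbols defined above together with \(n\) (a symbol introduced here) for the number of geographical units, it would read:

\[
\text{MAPE} = \frac{100\%}{n} \sum _{i=1}^{n} \left| \frac{N_i - {\hat{N}}_i}{N_i} \right| \qquad (1)
\]

Whether the original equation includes the factor of 100% is an assumption; without it, the same quantity is expressed as a fraction rather than a percentage.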

4 Results

The following subsections provide a detailed presentation of our results by comparing the four Twitter sampling methods. First, we present essential statistics regarding the outcomes of each sampling method. Second, in accordance with the methodology outlined in Sect. 3.3 (User pre-processing), we randomly sample 30K accounts from the output of each sampling method. Subsequently, we apply pre-processing filters and select a random sample of 10K users from the remaining pool. We then report the metrics at both tweet- and user-levels for this subset. Lastly, we generate debiased samples from each 10K random sample by computing inclusion probabilities. We compare their mean absolute percentage errors (MAPE) for the task of estimating the United States population using Twitter data.

4.1 Tweet-level and user-level metrics

In Table 5, we provide a comparison of various tweet-level metrics across the four Twitter sampling methods employed to create random user samples from a country. These metrics include the number of tweets, the count of unique users, the average number of tweets per account, and the percentage of tweets in English. The results show that the bounding box (BB) and location query (Loc) sampling methods produce a significantly higher number of tweets compared to the language query (Lang) and 1% stream methods. Among the four Twitter sampling methods, the BB and Loc methods each produce more than 18 million tweets, whereas the Lang and 1% stream methods generate 4.5 million and 174,000 tweets, respectively, within the same timeframe. The same pattern is observed for the number of unique users and the average tweets per account, except that the difference between the BB and Loc methods and the Lang method is notably smaller than their difference in the number of tweets. This suggests that the BB and Loc methods have a higher rate of account duplication compared to the other two sampling methods. Lastly, among the three methods that do not explicitly filter for language, the BB method has a higher proportion of English tweets.

Due to the computational expense of bot detection and age, gender, and location inferences, our aim is to compare a random sample of 10K users from each Twitter sampling method. To ensure that we have at least 10K users from each method after applying pre-processing steps, we initially select a random sample of 30K users from each Twitter sampling method. We then execute all pre-processing steps on this larger sample and subsequently select a random sample of 10K users from the remaining pool. Table 6 reports the number of accounts removed after each filter was applied to the 30K sample.

The comparison results of tweet- and user-level metrics are reported in Fig. 1 and Tables 7 and 8, and the corresponding t-test results are illustrated in Fig. 2. More specifically, the distributions of the total number of tweets are presented in Fig. 1a. Notably, users from the 1% stream method have generated more tweets (M = 19,873.9, p = 0.00) than users from the other methods (see Table 7 and Fig. 2). Additionally, since the number of generated tweets depends on account age, we also depict the distributions of the number of tweets per day in Fig. 1b. This metric is calculated by dividing a user’s total number of tweets by the number of days since their account creation. Once again, we observe that users from the 1% stream method tend to tweet more frequently (M = 5.81, p = 0.00) than those from other methods (see Table 7). We also compared the number of likes across the four Twitter sampling methods and depict the corresponding distributions in Fig. 1c. The BB, Loc, and Lang methods exhibit nearly identical distributions. However, users sampled from the 1% stream method tend to have significantly fewer likes (M = 0.00, p = 0.00). This discrepancy is primarily attributed to the fact that the 1% stream method collects data in real time, often when engagement with posts has just begun. In contrast, the other methods may include tweets posted up to seven days earlier, allowing more time for engagement to accumulate. Additionally, we present the distributions of account creation times in Fig. 1d. Across all methods, we notice a peak around the year 2009, likely reflecting the rapid growth of Twitter during that period (Yang et al. 2022). There is also an increase in the number of created accounts in 2011, coinciding with a period of significant user growth on Twitter (see Footnote 3). In comparison to other methods, the 1% stream method appears to yield younger accounts (p = 0.00), especially those created in 2022. However, for the remaining time period, the distributions appear similar across all four methods.
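The tweets-per-day metric described in this paragraph can be sketched as follows. The function name, the reference date argument, and the choice to floor the account age at one day (to avoid division by zero for accounts created on the reference date) are assumptions for illustration.

```python
from datetime import datetime


def tweets_per_day(tweet_count: int, created_at: datetime, as_of: datetime) -> float:
    """Total tweets divided by account age in whole days.

    The age is floored at one day (an assumption) so that accounts
    created on the reference date do not cause a division by zero.
    """
    age_days = max((as_of - created_at).days, 1)
    return tweet_count / age_days
```

For instance, an account created on 2022-01-01 with 50 tweets, evaluated on 2022-01-11, has an age of 10 days and therefore 5.0 tweets per day.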

Figures 1e and f display the distributions of the numbers of followers and friends, respectively. These figures illustrate that users in the 1% stream method tend to have slightly more followers (M = 911.3, p = 0.00) and friends (M = 1059.6, p = 0.00) compared to users in the other sampling methods (see Table 8 and Fig. 2). Interestingly, Fig. 1f shows that nearly half of the users in the 1% stream sample have over 1000 friends. Moreover, the 1% stream method exhibits a higher number of accounts with approximately 5000 friends or more. The peak around 5000 friends in Fig. 1f is attributed to a Twitter anti-abuse limitation, which stipulates that an account cannot follow more than 5000 friends unless it has more than 5000 followers (see Footnote 4). This policy leads to the observed distribution pattern.

As outlined in Sect. Inferring users’ demographics, age, gender, and location are critical demographics for constructing a nationally representative sample of individuals. Consequently, it is essential to compare the user samples generated by each sampling method in terms of these three metrics. Figures 1g and 1h display the distributions of gender and age across the four Twitter sampling methods. When examining gender distributions (Fig. 1g), two notable observations emerge: (1) all four methods yield unbalanced gender data, with a majority of users (over 64%) being male, and (2) the 1% stream method produces slightly fewer women than the other three methods, which generate a nearly equal fraction of women.

Regarding age (Fig. 1h), the percentage of users who are under 18 or over 40 is nearly identical across all methods. However, the 1% stream method differs significantly in the 19–29 and 30–39 age cohorts. While the other three methods each place approximately 27% of users in the 19–29 group and approximately 27% in the 30–39 group, the 1% stream method has only 18% of users in the 19–29 cohort and 35% in the 30–39 cohort.

Finally, in Fig. 3, we present the distribution of the number of users located in each state within the United States. As discussed in Sect. Inferring users’ demographics, we adopted the methodology proposed in Barberá et al. (2019) to estimate the location of Twitter users at the US state level. In general, the distribution pattern is highly similar across all four Twitter sampling methods, with no significant variations between them. As expected, states with larger populations such as California, New York, Texas, and Florida have a higher number of Twitter users. Additionally, within our 10K-user sample, all four sampling methods managed to provide at least one user from each state, ensuring a diverse geographical representation.

4.2 Population-level metrics

The objective of this section is to assess the accuracy of the four debiased samples created from the four Twitter sampling methods in predicting the population of the United States using Twitter data. This prediction task is conducted at the state level, which necessitates having a sufficient number of users for all age and gender groups in all 50 US states. However, despite all four sampling methods providing at least one user in each state (as shown in Fig. 3), none of them yielded enough data to cover all combinations of age and gender across all US states. Table 9 provides information on the number of US states where there are insufficient users to represent all age and gender groups, across different sample sizes (i.e., 5K, 8K, or 10K users) and Twitter sampling methods. As indicated in Table 9, even with a sample size of 10K users, there are still between 7 and 11 states lacking at least one demographic group (e.g., women aged 30–39 in the State of New York).

In our regression model for estimating the US population from Twitter data, each row corresponds to a specific demographic group within a particular state (e.g., men above 40 in New Jersey). If a demographic group is absent from the Twitter data for a specific state, all values in the corresponding row of the regression model are zeros. Note, however, that no state has all demographic groups missing. Consequently, we removed rows with all-zero values from our regression model. This led to the removal of 16 rows (3.92%) from the Bounding Box sample, 12 rows (2.94%) from the Location Query sample, 16 rows (3.92%) from the Language Query sample, and 21 rows (5.15%) from the 1% Stream sample. All of these samples contain 10K users each.
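The row-removal step and the reported percentages can be checked with a short sketch. The helper names and the toy rows below are hypothetical. The total of 408 rows is not stated in the text; it is an inference from the reported figures (for example, 16 removed rows corresponding to 3.92%), so treat it as an assumption.

```python
def drop_all_zero_rows(rows):
    """Keep only rows that contain at least one non-zero value."""
    return [row for row in rows if any(value != 0 for value in row)]


def percent_removed(n_removed, n_total):
    """Share of removed rows, in percent, rounded to two decimals."""
    return round(100 * n_removed / n_total, 2)


# Toy design matrix (hypothetical values): two rows are entirely zero.
rows = [[0, 0, 0], [3, 1200, 0], [0, 0, 0], [5, 900, 1]]
kept = drop_all_zero_rows(rows)

# Assumption: 408 rows in total, inferred from the reported percentages.
TOTAL_ROWS = 408
removed = {"BB": 16, "Loc": 12, "Lang": 16, "1% Stream": 21}
shares = {method: percent_removed(n, TOTAL_ROWS) for method, n in removed.items()}
print(shares)
```

Under that assumed total, the computed shares reproduce the percentages reported above for all four samples.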

Following the approach outlined in Wang et al. (2019), we assess the accuracy of the four representative samples using a leave-one-state-out cross-validation framework. In this evaluation, we calculate the mean absolute percentage error (MAPE) for the population estimates of the state that is left out of the analysis. The MAPE is computed using the formula specified in Eq. 1. As mentioned in Table 1, we calculate MAPE under five different scenarios. These scenarios encompass a baseline model that relies solely on the total population without the use of debiasing coefficients. Additionally, there are three models based on homogeneity, which consider whether to include both age and gender or only one of these variables. Lastly, there is a full model that takes into account heterogeneity by including both age and gender as factors in the analysis.
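To make the evaluation procedure concrete, the following is a minimal sketch of leave-one-state-out cross-validation with MAPE for the baseline setting only. It is not the authors' implementation: it assumes the standard definition of MAPE (mean of |actual − predicted| / actual, in percent), fits a single least-squares coefficient through the origin as a stand-in for the baseline model, and uses made-up state names and counts.

```python
def fit_ratio(twitter_counts, census_counts):
    """Least-squares slope through the origin, so that N ~= beta * M."""
    numerator = sum(m * n for m, n in zip(twitter_counts, census_counts))
    denominator = sum(m * m for m in twitter_counts)
    return numerator / denominator


def leave_one_state_out_mape(twitter, census):
    """Hold out each state in turn, fit on the rest, and average the
    absolute percentage error of the held-out predictions."""
    errors = []
    for held_out in twitter:
        train = [s for s in twitter if s != held_out]
        beta = fit_ratio([twitter[s] for s in train], [census[s] for s in train])
        predicted = beta * twitter[held_out]
        actual = census[held_out]
        errors.append(abs(actual - predicted) / actual)
    return 100 * sum(errors) / len(errors)


# Hypothetical data: Twitter user counts (M) and census populations (N).
twitter = {"A": 10, "B": 20, "C": 40}
census_proportional = {"A": 1000, "B": 2000, "C": 4000}
census_skewed = {"A": 1000, "B": 2000, "C": 5000}

mape_proportional = leave_one_state_out_mape(twitter, census_proportional)
mape_skewed = leave_one_state_out_mape(twitter, census_skewed)
print(mape_proportional, mape_skewed)
```

When the population is exactly proportional to the Twitter counts, the held-out error is zero; any state-specific deviation from that ratio shows up as a positive MAPE, which is what the debiasing models with age and gender terms aim to reduce.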

In Fig. 4, we observe that the results of the leave-one-state-out evaluation favor the 1% Stream Twitter sampling method. Across all five debiasing models, the 1% Stream method achieves the minimum prediction error and the Language Query method the maximum. For the \(N \sim M\) model, which is a baseline that does not use any debiasing, the 1% Stream method achieves a MAPE of 27%, the lowest compared to BB, Loc, and Lang, which achieved MAPEs of 33%, 39%, and 45%, respectively. Including the inferred age in the debiasing model \(N \sim \sum M(a)\) decreases the MAPE of the 1% Stream method to 21%, which is again lower than that of the BB (26%), Loc (25%), and Lang (31%) methods. Including the inferred gender in the debiasing model \(N \sim \sum M(g)\) also decreases the MAPE of the 1% Stream method, though by less than the inclusion of age. Nonetheless, the 1% Stream sample shows the minimum prediction error, with a MAPE of 25% (the BB, Loc, and Lang methods obtained MAPEs of 27%, 30%, and 41%, respectively). The same pattern holds for the \(N \sim \sum M(a, g)\) and \(\log N(a,g) \sim \log M(a,g) + a + g\) models. Moreover, the results show that even the baseline model of the 1% Stream sample (27%) outperforms or matches most debiased models of the other three sampling methods, although the age-only models for BB (26%) and Loc (25%) reach slightly lower errors.

4.3 Robustness tests

To ensure that the observed results are not an artifact of our regression settings, in which we removed the rows with all-zero values, or of our pre-processing decisions, we replicate the results of Fig. 4 for the following scenarios: (1) adding the District of Columbia to the 50 states and removing rows with all-zero values in the regression model (see Fig. S1 in Appendix); (2) removing from the Twitter and census data all states whose Twitter data are missing at least one demographic group, and measuring MAPE for the remaining states (see Fig. S2 in Appendix); (3) aggregating data at the level of the nine US divisions (the U.S. Census Bureau groups the 50 states and the District of Columbia into four geographic regions and nine divisions based on geographic proximity; footnote 5), which results in no missing demographic group in any of the nine divisions (see Fig. S3 in Appendix); (4) removing users with fewer than 200 tweets in the pre-processing step instead of the original 100-tweet threshold (see Figs. S4 and S5 in Appendix; note that, due to the recent restrictions imposed on the Twitter API, we are not able to test a lower threshold because it would produce new users for whom we cannot obtain bot score, age, gender, and location); and (5) removing users whose accounts are less than one year old instead of applying the 9-month threshold (see Figs. S6 and S7 in Appendix).

Across all five robustness tests, the overall qualitative patterns of the comparison between the four Twitter sampling methods remain the same, with the 1% Stream sampling method obtaining the minimum prediction error on the US population inference task. Interestingly, for the leave-one-division-out cross-validation setting, the error rates drop by nearly half across the four sampling methods and five population inference tasks (Fig. S3). This error reduction suggests that knowledge of division-specific platform biases is more important than knowledge of state-specific ones for accurate estimates of the country population. In other words, to achieve good performance, a model needs to be exposed to at least some divisions within the US during training.

5 Discussion

Twitter has become the most studied social media platform where people express their everyday opinions, especially about politics. This fact encouraged a wealth of social and computer science scholars to use Twitter data for measuring national-level statistics for political outcomes, health metrics, or public opinion research. However, Twitter is not a representative sample of the population due to demographic imbalance in usage and penetration rates. To address this fact, researchers often try to create a random sample of Twitter users from a country. However, at least four widely-used sampling methods exist in the literature, and the extent to which their outputs are similar or different has not been explored systematically so far.

In this paper, we tackled this issue by comparing the performance of four different Twitter sampling methods on a set of carefully devised evaluation metrics. More specifically, the four methods are (1) 1% stream, in which one uses the Twitter Stream API to get 1% of tweets in real-time and then samples from the authors of the tweets, (2) location query, in which one uses the Twitter Search API and queries for a country of interest, (3) language query, in which one uses the Twitter Search API, queries for the language(s) representing the country of interest, and filters for the country, and (4) bounding-box, in which one uses the ‘bounding-box’ field in the Search API and queries for the coordinates enclosing the country of interest. After carefully reviewing the literature, we devised three tweet-level, eight user-level, and five population-level evaluation metrics to compare these four Twitter sampling methods.

Our results highlight the 1% Stream Twitter sampling method, which exhibits different characteristics compared to the other three sampling methods and is the top candidate in most use cases. In particular, Twitter users collected by the 1% Stream method tend to have more tweets, tweets per day, followers, and friends, and fewer likes. In addition, the 1% Stream sampling method appears to provide slightly younger accounts (i.e., accounts created around 2022), slightly more male users, significantly fewer users in the 19–29 age stratum, and significantly more users in the 30–39 age stratum, compared to the other three sampling methods.

The 1% Stream method achieves the minimum error, compared to the other three methods, in the prediction task of estimating the population of the US from Twitter users. This holds across five different debiasing models, each attempting to make the sample representative of the US population. A baseline model using the 1% Stream, in which we do not implement any debiasing technique, outperforms or matches all debiased forms of the other sampling methods in terms of the prediction error (except for the Location Query method when using marginal age counts).

However, the 1% Stream Twitter sampling method has some practical and theoretical disadvantages. Practically, it is time-consuming because the Twitter Stream API provides tweets in real-time. This means that, for example, if one needs to collect a month of stream data, she cannot get it immediately and has to wait for a whole month. This is not true for the other sampling methods, in which one can get the same period of data in a few hours or days, depending on the size of the country. In addition, researchers cannot access historical stream data using the 1% Stream method unless they began collecting it beforehand.

Theoretically, although the location and language filters used in all Twitter sampling methods are consistent, the 1% Stream method may produce a sample biased towards more active users. This is because the more frequently a user tweets, the higher the likelihood they will be included in the 1% sample. Conversely, the other three methods offer equal chances for all users to be sampled. Additionally, the 1% Stream method is not ideal for studies focused on user engagement metrics (such as the number of likes, retweets, comments, and views). This is because it gathers tweets in real-time, often capturing very recent tweets that have not yet been widely viewed or engaged with, as demonstrated in Fig. 1.
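The activity bias described above can be illustrated with a simple probability calculation. The sketch below assumes, purely for illustration, that every tweet is included in the stream independently with probability 1%; the actual sampling mechanism of the Stream API may differ, and the function name is hypothetical. Under that assumption, a user who posts n tweets during the collection window appears in the sample with probability 1 − (1 − 0.01)^n.

```python
def inclusion_probability(n_tweets: int, rate: float = 0.01) -> float:
    """Probability that at least one of a user's n tweets is sampled,
    assuming each tweet is sampled independently with probability `rate`."""
    return 1.0 - (1.0 - rate) ** n_tweets


# Compare users with different activity levels during the collection window.
for n in (1, 10, 100, 1000):
    print(n, round(inclusion_probability(n), 4))
```

The probability rises steeply with the number of tweets, which is consistent with the higher tweet counts and tweets-per-day values observed for the 1% stream sample.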

These drawbacks of the 1% Stream Twitter sampling method underscore the importance of the second-best sampling method in our study, which is the bounding box method. While its results are nearly identical to those of the Location Query and Language Query methods in terms of tweet- and user-level metrics (see Fig. 1), it clearly outperforms them with respect to the prediction error in the US population estimation task.

While Twitter has been one of the most studied social media platforms, the advantages and disadvantages of different sampling strategies remain unclear. Our results illuminate the positive and negative characteristics of the four main sampling methods used in the literature and help researchers choose the one that best suits their research goals and designs. By identifying the best sampling methods, our results also pave the way for conducting more accurate social listening studies and building more accurate machine learning models. Moreover, our approach and results may be adapted to conduct similar studies for other social media platforms.

The 1% Stream and bounding box Twitter sampling methods perform better than the other two sampling methods when used to estimate the population of the US. Future research should explore the role of large language models in improving the performance of, or automating the use of, the different models involved in computing the inclusion probabilities (Cerina and Duch 2023), determine significant parameters in the calculation of inclusion probabilities (Alizadeh et al. 2011), and test the temporal and regional validity of the results. Finally, due to the shutdown of the Twitter API, future work should compare the results of this study to those of data donation (Boeschoten et al. 2022) and crawling methods.

Data Availability Statement

All code and tweet IDs are available at an OSF repository: https://osf.io/vmcn9/.

Notes

For a comprehensive explanation and description of our improved method, please refer to Appendix Random User ID Generation Sampling Method.

census.gov.

References

Alizadeh M, Cioffi-Revilla C (2014) Distributions of opinion and extremist radicalization: insights from agent-based modeling. In: Social Informatics: 6th international conference, SocInfo 2014, Barcelona, Spain, November 11–13, 2014. Proceedings 6. Springer, pp 348–358

Alizadeh M, Weber I, Cioffi-Revilla C, Fortunato S, Macy M (2019) Psychology and morality of political extremists: evidence from Twitter language analysis of alt-right and Antifa. EPJ Data Sci 8(1):1–35

Alizadeh M, Lewis M, Zarandi MHF, Jolai F (2011) Determining significant parameters in the design of ANFIS. In: 2011 Annual meeting of the North American fuzzy information processing society. IEEE, pp 1–6

Alizadeh M, Shapiro JN, Buntain C, Tucker JA (2020) Content-based features predict social media influence operations. Sci Adv 6(30):eabb5824

Alizadeh M, Kubli M, Samei Z, Dehghani S, Bermeo JD, Korobeynikova M, Gilardi F (2023) Open-source large language models outperform crowd workers and approach ChatGPT in text-annotation tasks. arXiv:2307.02179

Barberá P, Casas A, Nagler J, Egan PJ, Bonneau R, Jost JT, Tucker JA (2019) Who leads? Who follows? Measuring issue attention and agenda setting by legislators and the mass public using social media data. Am Polit Sci Rev 113(4):883–901

Barrie C, Siegel AA (2021) Kingdom of trolls? Influence operations in the Saudi Twittersphere. J Quantitat Descr 1:1–41

Batzdorfer V, Steinmetz H, Biella M, Alizadeh M (2022) Conspiracy theories on Twitter: emerging motifs and temporal dynamics during the COVID-19 pandemic. Int J Data Sci Anal 13(4):315–333

Boeschoten L, Ausloos J, Möller JE, Araujo T, Oberski DL (2022) A framework for privacy preserving digital trace data collection through data donation. Comput Commun Res 4(2):388–423

Cerina R, Duch R (2023) Artificially intelligent opinion polling. arXiv:2309.06029

De Choudhury M, Lin Y-R, Sundaram H, Candan KS, Xie L, Kelliher A (2010) How does the data sampling strategy impact the discovery of information diffusion in social media? Proc Int AAAI Conf Web Soc Media 4:34–41

Gayo-Avello D (2013) A meta-analysis of state-of-the-art electoral prediction from Twitter data. Soc Sci Comput Rev 31(6):649–679

González-Bailón S, Wang N, Rivero A, Borge-Holthoefer J, Moreno Y (2014) Assessing the bias in samples of large online networks. Soc Netw 38:16–27

Hino A, Fahey RA (2019) Representing the Twittersphere: archiving a representative sample of Twitter data under resource constraints. Int J Inf Manag 48:175–184

Joseph K, Landwehr PM, Carley KM (2014) Two 1%s don’t make a whole: comparing simultaneous samples from Twitter’s streaming API. In: International conference on social computing, behavioral-cultural modeling, and prediction. Springer, pp 75–83

Jungherr A, Jürgens P, Schoen H (2012) Why the Pirate Party won the German election of 2009 or the trouble with predictions: a response to Tumasjan A, Sprenger TO, Sander PG, Welpe IM “Predicting elections with Twitter: what 140 characters reveal about political sentiment”. Soc Sci Comput Rev 30(2):229–234

Kim H, Jang SM, Kim S-H, Wan A (2018) Evaluating sampling methods for content analysis of Twitter data. Soc Media Soc 4(2):2056305118772836

King G, Lam P, Roberts ME (2017) Computer-assisted keyword and document set discovery from unstructured text. Am J Polit Sci 61(4):971–988

Morstatter F, Pfeffer J, Liu H, Carley K (2013) Is the sample good enough? Comparing data from Twitter’s streaming API with Twitter’s firehose. Proc Int AAAI Conf Web Soc Media 7:400–408

Mosleh M, Rand DG (2024) Who is on Twitter (“X”)? Identifying demographic of Twitter users

Munger K, Egan PJ, Nagler J, Ronen J, Tucker J (2022) Political knowledge and misinformation in the era of social media: evidence from the 2015 UK election. Br J Polit Sci 52(1):107–127

Pfeffer J, Mayer K, Morstatter F (2018) Tampering with Twitter’s sample API. EPJ Data Sci 7(1):50

Pointer D (2023) System design interview: scalable unique ID generator (twitter snowflake or a similar service). Accessed: 2023-02-08

Shao C, Hui P-M, Wang L, Jiang X, Flammini A, Menczer F, Ciampaglia GL (2018) Anatomy of an online misinformation network. PLoS ONE 13(4):e0196087

Truong BT, Allen OM, Menczer F (2024) Account credibility inference based on news-sharing networks. EPJ Data Sci 13(1):10

Tufekci Z (2014) Big questions for social media big data: representativeness, validity and other methodological pitfalls. In: Eighth international AAAI conference on weblogs and social media

Wang W, Rothschild D, Goel S, Gelman A (2015) Forecasting elections with non-representative polls. Int J Forecast 31(3):980–991

Wang Y, Callan J, Zheng B (2015) Should we use the sample? Analyzing datasets sampled from Twitter’s stream API. ACM Trans Web (TWEB) 9(3):1–23

Wang Z, Hale S, Adelani DI, Grabowicz P, Hartman T, Flöck F, Jurgens D (2019) Demographic inference and representative population estimates from multilingual social media data. In: The world wide web conference, pp 2056–2067

Wu S, Rizoiu M-A, Xie L (2020) Variation across scales: measurement fidelity under Twitter data sampling. Proc Int AAAI Conf Web Soc Media 14:715–725

Yang K-C, Ferrara E, Menczer F (2022) Botometer 101: social bot practicum for computational social scientists. J Computat Soc Sci 1–18

Yang K-C, Hui P-M, Menczer F (2022) How Twitter data sampling biases US voter behavior characterizations. PeerJ Comput Sci 8:e1025

Zafar MB, Bhattacharya P, Ganguly N, Gummadi KP, Ghosh S (2015) Sampling content from online social networks: comparing random vs expert sampling of the Twitter stream. ACM Trans Web (TWEB) 9(3):1–33

Acknowledgements

This project received funding from the European Research Council under the EU’s Horizon 2020 research and innovation program (Grant Agreement No. 883121). We thank conference participants at the IC2S2 2023 and APSA 2023 conferences for their helpful feedback. We thank Sara Yari Mehmandoust, Mahdis Abbasi, Sima Mojtahedi, Mohammad Hormati, and Zahra Baghshahi for outstanding research assistance.

Funding

Open access funding provided by University of Zurich.

Ethics declarations

Conflicts of interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Alizadeh, M., Zare, D., Samei, Z. et al. Comparing methods for creating a national random sample of twitter users. Soc. Netw. Anal. Min. 14, 160 (2024). https://doi.org/10.1007/s13278-024-01327-5
