Abstract

In this article, a new operational matrix method based on shifted Legendre polynomials is presented and analyzed for obtaining numerical spectral solutions of linear and nonlinear second-order boundary value problems. The method is novel and is essentially based on reducing the differential equations, together with their boundary conditions, to systems of linear or nonlinear algebraic equations in the expansion coefficients of the sought-for spectral solutions. Linear differential equations are treated by applying the Petrov–Galerkin method, while nonlinear equations are treated by applying the collocation method. A convergence analysis and some illustrative examples, including singular, singularly perturbed and Bratu-type equations, are considered to ascertain the validity, wide applicability and efficiency of the proposed method. The obtained numerical results compare favorably with the analytical solutions and are more accurate than those obtained by some other existing techniques in the literature.

Introduction

Spectral methods (see, for instance, [1–6]) are among the principal discretization methods for the numerical solution of differential equations. Their main advantage lies in their accuracy for a given number of unknowns. For smooth problems in simple geometries, they offer exponential rates of convergence (spectral accuracy). In contrast, finite-difference and finite-element methods yield only algebraic convergence rates. The three spectral methods, namely the Galerkin, collocation, and tau methods, are used extensively in the literature. Collocation methods [7, 8] have become increasingly popular for solving differential equations, since they are very useful in providing highly accurate solutions to nonlinear differential equations. The Petrov–Galerkin method is widely used for solving ordinary and partial differential equations; see, for example, [9–13]. Petrov–Galerkin methods [14] have generally come to be known as “stabilized” formulations, because they prevent spatial oscillations and sometimes yield nodally exact solutions where the classical Galerkin method would fail badly. The difference between the Galerkin and Petrov–Galerkin methods is that the test and trial functions are the same in the Galerkin method, whereas in the Petrov–Galerkin method they are not.

The subject of nonlinear differential equations is a well-established part of mathematics, and its systematic development goes back to the early days of calculus. Many recent advances in mathematics, paralleled by a renewed and flourishing interaction between mathematics, the sciences, and engineering, have again shown that many phenomena in the applied sciences can be modeled by differential equations, whose study yields a mathematical explanation of these phenomena (at least in some approximate sense).

Even-order differential equations have been extensively discussed by a large number of authors due to their great importance in various applications in many fields. For example, in the sequence of papers [12, 15–17], the authors dealt with such equations by the Galerkin method. They constructed suitable basis functions which satisfy the boundary conditions of the given differential equation. For this purpose, they used compact combinations of various orthogonal polynomials. The algorithms suggested in these articles are suitable for handling one- and two-dimensional linear high even-order boundary value problems. In this paper, we aim to give some algorithms for handling both linear and nonlinear second-order boundary value problems based on introducing a new operational matrix of derivatives, and then applying the Petrov–Galerkin method to linear equations and the collocation method to nonlinear equations.

Among the important classes of such equations are the singular and singularly perturbed problems (SPPs), which arise in several branches of applied mathematics, such as quantum mechanics, fluid dynamics, elasticity, chemical reactor theory, and gas porous electrodes theory. The presence of a small parameter in these problems makes it difficult to obtain satisfactory numerical solutions. It is well known that the solutions of SPPs have a multi-scale character: there are thin layers where the solution varies very rapidly, while away from the layers the solution behaves regularly and varies slowly.

Also, among the second-order boundary value problems is the one-dimensional Bratu problem which has a long history. Bratu’s own article appeared in 1914 [19]; generalizations are sometimes called the Liouville–Gelfand or Liouville–Gelfand–Bratu problem in honor of Gelfand [20] and the nineteenth century work of the great French mathematician Liouville. In recent years, it has been a popular testbed for numerical and perturbation methods [21–27].

Simplification of the solid fuel ignition model in thermal combustion theory yields an elliptic nonlinear partial differential equation, namely the Bratu problem. Because of its use in a large variety of applications, many authors have contributed to the study of this problem. Applications of the Bratu problem include the model of the thermal reaction process, the Chandrasekhar model of the expansion of the Universe, chemical reaction theory, nanotechnology and radiative heat transfer (see [28–32]).

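The Bratu problem is a nonlinear BVP that is used extensively as a benchmark for testing the accuracy of numerical methods. It is given by:

\[ y''(x)+\lambda \, e^{y(x)}=0,\quad 0\leqslant x\leqslant 1,\qquad y(0)=y(1)=0, \]

where \(\lambda >0\). The Bratu problem has the following analytical solution:

\[ y(x)=-2\ln \left[ \frac{\cosh \left( \left( x-\frac{1}{2}\right) \frac{\theta }{2}\right) }{\cosh \left( \frac{\theta }{4}\right) }\right] , \]

where \(\theta\) is the solution of the nonlinear equation \(\theta =\sqrt{2\lambda }\cosh \left( \frac{\theta }{4}\right)\).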
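As a minimal sketch of how this closed form can be evaluated, the following Python snippet assumes the standard form \(y(x)=-2\ln [\cosh ((x-\tfrac{1}{2})\tfrac{\theta }{2})/\cosh (\tfrac{\theta }{4})]\) with \(\theta =\sqrt{2\lambda }\cosh (\theta /4)\), which reproduces the values of \(\theta\) quoted later for \(\lambda =1,\,2\) and 3.51. The function names `bratu_theta` and `bratu_exact` are illustrative only, and bisection is used here merely as a simple root finder for the lower solution branch.

```python
import math

def bratu_theta(lam, tol=1e-14):
    """Lower-branch root of theta = sqrt(2*lam) * cosh(theta / 4) by bisection."""
    s = math.sqrt(2.0 * lam)
    f = lambda t: t - s * math.cosh(t / 4.0)
    lo = 0.0                            # f(0) = -sqrt(2*lam) < 0
    hi = 4.0 * math.asinh(4.0 / s)      # f'(hi) = 0, so f is increasing on [lo, hi]
    if f(hi) <= 0.0:
        raise ValueError("lam is at or above the critical value")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def bratu_exact(x, lam):
    """Assumed closed-form solution of y'' + lam * exp(y) = 0, y(0) = y(1) = 0."""
    theta = bratu_theta(lam)
    return -2.0 * math.log(math.cosh((x - 0.5) * theta / 2.0) / math.cosh(theta / 4.0))

if __name__ == "__main__":
    for lam in (1.0, 2.0, 3.51):
        print(lam, bratu_theta(lam), bratu_exact(0.5, lam))
```

The bracket `[0, 4*asinh(4/sqrt(2*lam))]` isolates the smaller of the two roots that exist below the critical value of \(\lambda\); the larger root corresponds to the upper solution branch and is not computed here.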

Our main objectives in the present paper are:

- Introducing a new operational matrix of derivatives based on using shifted Legendre polynomials and harmonic numbers.

- Using Petrov–Galerkin matrix method (PGMM) to solve linear second-order BVPs.

- Using collocation matrix method (CMM) to solve a class of nonlinear second-order BVPs, including singular, singularly perturbed and Bratu-type equations.

The outline of the paper is as follows. In "Some properties and relations of shifted Legendre polynomials and harmonic numbers", some relevant properties of shifted Legendre polynomials and of harmonic numbers are given. In "A shifted Legendre matrix of derivatives", with the aid of shifted Legendre polynomials, a new operational matrix of derivatives is given in terms of harmonic numbers. In "Solution of second-order linear two-point BVPs", we use the introduced operational matrix to reduce linear and nonlinear second-order boundary value problems to systems of algebraic equations through the Petrov–Galerkin and collocation methods, and we also state and prove a convergence theorem. Some numerical examples are presented in "Numerical results and discussions" to show the efficiency and applicability of the suggested algorithms. Some concluding remarks are given in "Concluding remarks".

Some properties and relations of shifted Legendre polynomials and harmonic numbers

Shifted Legendre polynomials

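The shifted Legendre polynomials \(L^{*}_k(x)\) are defined on [a, b] as:

\[ L^{*}_k(x)=L_{k}\left( \frac{2x-a-b}{b-a}\right) ,\quad k=0,1,2,\dots , \]

where \(L_{k}(x)\) are the classical Legendre polynomials. They may be generated by using the recurrence relation

\[ (k+1)\,L^{*}_{k+1}(x)=(2k+1)\,\frac{2x-a-b}{b-a}\,L^{*}_{k}(x)-k\,L^{*}_{k-1}(x),\quad k=1,2,\dots , \tag{3} \]

with \(L^{*}_0(x)=1,\,L^{*}_1(x)=\displaystyle \frac{2x-b-a}{b-a}.\) These polynomials are orthogonal on [a, b], i.e.,

\[ \int _a^b L^{*}_m(x)\,L^{*}_n(x)\,dx={\left\{ \begin{array}{ll} \dfrac{b-a}{2n+1}, &{} m=n,\\ 0, &{} m\ne n. \end{array}\right. } \]

The polynomials \(L^{*}_{k}(x)\) are eigenfunctions of the following singular Sturm–Liouville equation:

\[ D\left[ (x-a)(b-x)\,D\,L^{*}_k(x)\right] +k(k+1)\,L^{*}_k(x)=0, \]

where \(D\equiv \frac{d}{dx}\).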
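The recurrence and the orthogonality property can be checked in exact rational arithmetic. The sketch below assumes the standard three-term recurrence \((k+1)L^{*}_{k+1}(x)=(2k+1)\frac{2x-a-b}{b-a}L^{*}_{k}(x)-kL^{*}_{k-1}(x)\) and the normalization \(\int _a^b (L^{*}_n(x))^2\,dx=\frac{b-a}{2n+1}\); the helper names (`poly_mul`, `poly_axpy`, `shifted_legendre`, `integrate`) are illustrative. Polynomials are stored as coefficient lists, lowest degree first.

```python
from fractions import Fraction

def poly_mul(p, q):
    """Product of two polynomials given as coefficient lists."""
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, pi in enumerate(p):
        for j, qj in enumerate(q):
            out[i + j] += pi * qj
    return out

def poly_axpy(alpha, p, beta, q):
    """Return alpha*p + beta*q for coefficient lists."""
    n = max(len(p), len(q))
    p = p + [Fraction(0)] * (n - len(p))
    q = q + [Fraction(0)] * (n - len(q))
    return [alpha * pi + beta * qi for pi, qi in zip(p, q)]

def shifted_legendre(n_max, a, b):
    """Coefficient lists of L*_0, ..., L*_{n_max} on [a, b] via the recurrence."""
    a, b = Fraction(a), Fraction(b)
    t = [-(a + b) / (b - a), Fraction(2) / (b - a)]   # (2x - a - b)/(b - a)
    polys = [[Fraction(1)], t]
    for k in range(1, n_max):
        polys.append(poly_axpy(Fraction(2 * k + 1, k + 1), poly_mul(t, polys[k]),
                               Fraction(-k, k + 1), polys[k - 1]))
    return polys[: n_max + 1]

def integrate(p, a, b):
    """Exact integral of a polynomial over [a, b]."""
    a, b = Fraction(a), Fraction(b)
    return sum(c * (b ** (i + 1) - a ** (i + 1)) / (i + 1) for i, c in enumerate(p))

if __name__ == "__main__":
    L = shifted_legendre(3, 0, 1)
    print(L[2])                                  # coefficients of 6x^2 - 6x + 1
    print(integrate(poly_mul(L[2], L[2]), 0, 1)) # (b - a)/(2n + 1) with n = 2
    print(integrate(poly_mul(L[1], L[3]), 0, 1)) # orthogonality
```

Exact fractions are used instead of floating point so that orthogonality shows up as an exact zero rather than as a small rounding residue.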

Harmonic numbers

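The nth harmonic number is the sum of the reciprocals of the first n natural numbers, i.e.,

\[ H_{n}=\sum _{i=1}^{n}\frac{1}{i}. \]

The numbers \(H_{n}\) satisfy the recurrence relation

\[ H_{n}-H_{n-1}=\frac{1}{n},\quad n=1,2,3,\dots ,\qquad H_0=0, \]

and have the integral representation

\[ H_{n}=\int _0^1\frac{1-x^{n}}{1-x}\,dx. \]

The following lemma is of fundamental importance in the sequel.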

Lemma 1

The harmonic numbers satisfy the following three-term recurrence relation:

Proof

The recurrence relation (6) can be easily proved with the aid of the relation (5). \(\square\)
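A short exact-arithmetic check illustrates the kind of argument involved. From the two-term recurrence \(H_n=H_{n-1}+\frac{1}{n}\), subtracting \((n-1)H_{n-1}=(n-1)H_{n-2}+1\) from \(nH_n=nH_{n-1}+1\) gives the three-term relation \(nH_n-(2n-1)H_{n-1}+(n-1)H_{n-2}=0\). Whether this is exactly the form stated in Lemma 1 is an assumption; the names `harmonic` and `three_term_residual` below are illustrative.

```python
from fractions import Fraction

def harmonic(n):
    """H_n = 1 + 1/2 + ... + 1/n as an exact fraction (H_0 = 0)."""
    return sum((Fraction(1, i) for i in range(1, n + 1)), Fraction(0))

def three_term_residual(n):
    """n*H_n - (2n - 1)*H_{n-1} + (n - 1)*H_{n-2}; zero if the relation holds."""
    return n * harmonic(n) - (2 * n - 1) * harmonic(n - 1) + (n - 1) * harmonic(n - 2)

if __name__ == "__main__":
    for n in range(2, 8):
        print(n, harmonic(n), three_term_residual(n))
```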

A shifted Legendre matrix of derivatives

Consider the space (see, [33])

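and choose the following set of basis functions:

\[ \phi _k(x)=(x-a)(b-x)\,L^{*}_k(x),\quad k=0,1,2,\dots . \tag{7} \]

It is not difficult to show that the polynomials \(\{\phi _k(x):\, k=0,1,2,\dots \}\) are linearly independent and orthogonal in the complete Hilbert space \(L_0^2[a, b],\) with respect to the weight function \(w(x)=\displaystyle \frac{1}{(x-a)^2\, (b-x)^2}\), i.e.,

\[ \int _a^b \phi _k(x)\,\phi _j(x)\,w(x)\,dx={\left\{ \begin{array}{ll} \dfrac{b-a}{2k+1}, &{} k=j,\\ 0, &{} k\ne j. \end{array}\right. } \]

Any function \(y(x)\in L_0^2[a,b]\) can be expanded as

\[ y(x)=\sum _{k=0}^{\infty }c_k\,\phi _k(x), \tag{8} \]

where

\[ c_k=\frac{2k+1}{b-a}\int _a^b y(x)\,\phi _k(x)\,w(x)\,dx. \]

If the series in Eq. (8) is approximated by the first \((N+1)\) terms, then

\[ y(x)\simeq y_N(x)=\sum _{k=0}^{N}c_k\,\phi _k(x)={\varvec{C}}^{T}\,{\varvec{\Phi }}(x), \tag{9} \]

where

\[ {\varvec{C}}^{T}=[c_0,c_1,\dots ,c_N],\qquad {\varvec{\Phi }}(x)=[\phi _0(x),\phi _1(x),\dots ,\phi _N(x)]^{T}. \tag{10} \]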

Now, we state and prove the basic theorem, from which a new operational matrix can be introduced.

Theorem 1

Let \(\phi _{i}(x)\) be as chosen in (7). Then for all \(i\ge 1\), one has

where \(\delta _{i}(x)\) is given by

Proof

We proceed by induction on i. For \(i=1,\) it is clear that the left hand side of (11) is equal to its right-hand side, which is equal to: \(\displaystyle a-b+\frac{6\, (x-a) (b-x)}{b-a}\). Assuming that relation (11) is valid for \((i-2)\) and \((i-1)\), we want to prove its validity for i. If we multiply both sides of (3) by \((x-a)(x-b)\) and make use of relation (7), we get

which immediately gives

Now, application of the induction step on \(D\phi _{i-1}(x)\) and \(D\phi _{i-2}(x)\) in (14), yields

where

Substitution of the recurrence relation (13) in the form

into relation (15), after some rather lengthy algebraic manipulations, gives

where

Now, the elements \(m_{ij}\) in (18) can be written in the alternative form

which can be simplified with the aid of Lemma 1, to take the form

Repeated use of Lemma 1 in (17), and after performing some manipulation, leads to

and by noting that

then

and this completes the proof of Theorem 1. \(\square\)
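The statement of Theorem 1 can be checked symbolically for small indices. The sketch below assumes that relation (11) has the form \(D\phi _i(x)=\frac{2}{b-a}\sum _{j<i,\ (i+j)\ \text{odd}}(2j+1)(1+2H_i-2H_j)\,\phi _j(x)+\delta _i(x)\), with \(\delta _i(x)=a+b-2x\) for even i and \(\delta _i(x)=a-b\) for odd i. This form is an assumption: it agrees with the case \(i=1\) worked out in the proof, and the code verifies it exactly for the indices tested. All function names are illustrative.

```python
from fractions import Fraction

def harmonic(n):
    return sum((Fraction(1, k) for k in range(1, n + 1)), Fraction(0))

def mul(p, q):
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, pi in enumerate(p):
        for j, qj in enumerate(q):
            out[i + j] += pi * qj
    return out

def add(p, q, scale=1):
    """Return p + scale*q for coefficient lists (lowest degree first)."""
    n = max(len(p), len(q))
    p = p + [Fraction(0)] * (n - len(p))
    q = q + [Fraction(0)] * (n - len(q))
    return [pi + scale * qi for pi, qi in zip(p, q)]

def deriv(p):
    return [i * c for i, c in enumerate(p)][1:] or [Fraction(0)]

def basis(n_max, a, b):
    """phi_k(x) = (x - a)(b - x) L*_k(x), k = 0..n_max, as coefficient lists."""
    t = [-(a + b) / (b - a), 2 / (b - a)]
    leg = [[Fraction(1)], t]
    for k in range(1, n_max):
        leg.append(add(mul([Fraction(2 * k + 1, k + 1)], mul(t, leg[k])),
                       leg[k - 1], Fraction(-k, k + 1)))
    q = [-a * b, a + b, Fraction(-1)]
    return [mul(q, L) for L in leg[: n_max + 1]]

def operational_matrix(n, a, b):
    """Assumed entries: m_ij = 2(2j+1)(1 + 2H_i - 2H_j)/(b - a) for j < i, i + j odd."""
    M = [[Fraction(0)] * (n + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        for j in range(i):
            if (i + j) % 2 == 1:
                M[i][j] = 2 * (2 * j + 1) * (1 + 2 * harmonic(i) - 2 * harmonic(j)) / (b - a)
    return M

def max_defect(n, a, b):
    """Largest coefficient of D phi_i - sum_j m_ij phi_j - delta_i over i = 0..n."""
    a, b = Fraction(a), Fraction(b)
    phi, M = basis(n, a, b), operational_matrix(n, a, b)
    worst = Fraction(0)
    for i in range(n + 1):
        r = deriv(phi[i])
        for j in range(i):
            r = add(r, phi[j], -M[i][j])
        delta = [a + b, Fraction(-2)] if i % 2 == 0 else [a - b]
        r = add(r, delta, -1)
        worst = max(worst, max(abs(c) for c in r))
    return worst

if __name__ == "__main__":
    print(max_defect(8, -1, 1), max_defect(6, 0, 1))
```

Under the same assumption, `operational_matrix(5, Fraction(-1), Fraction(1))` returns the 6 by 6 lower-triangular matrix of coefficients on \([-1,1]\).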

Corollary 1

Let \(x\in [-1,1]=[a,b],\, \psi _i(x)=(1-x^2)L_i(x).\) Then for all \(i\geqslant 1,\) one has

where

Based on Theorem 1, it can be easily shown that the first derivative of the vector \({\varvec{\Phi }}(x)\) defined in (10) can be expressed in the matrix form:

where

and \(M=\big (m_{ij}\big )_{0\leqslant i,j\leqslant N}\), is an \((N+1)\times (N+1)\) matrix whose nonzero elements can be given explicitly from relation (11) as:

For example, for \(N=5\), we have

Remark 1

The second derivative of the vector \(\varvec{\Phi }(x)\) is given by

where

Solution of second-order linear two-point BVPs

In this section, both linear and nonlinear second-order two-point BVPs are handled. For linear equations, a Petrov–Galerkin method is applied, while for nonlinear equations, the typical collocation method is applied.

Linear second-order BVPs subject to homogeneous boundary conditions

Consider the linear second-order boundary value problem

subject to the homogeneous boundary conditions

If we approximate y(x) as in (9), making use of relations (23) and (24), we have the following approximations for y(x), \(y'(x)\) and \(y''(x)\):

If we substitute the relations (27), (28) and (29) into Eq. (25), then the residual R(x) of this equation is given by:

The application of the Petrov–Galerkin method (see, [1]) yields the following \((N+1)\) linear equations in the unknown expansion coefficients \(c_i\), namely,

Thus, Eq. (31) generates a set of \((N+1)\) linear equations which can be solved for the unknown components of the vector \({\varvec{C}}\), and hence the approximate spectral solution \(y_{N}(x)\) given in (9) can be obtained.
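The overall procedure can be sketched numerically as follows. This is not the authors' implementation: it assumes a linear problem written as \(y''+p(x)\,y'+r(x)\,y=g(x)\) with \(y(a)=y(b)=0\) (the names `p`, `r`, `g` are hypothetical), it takes the shifted Legendre polynomials \(L^{*}_i(x)\), \(i=0,\dots ,N\), as test functions (an assumption; the test space or weight used in Eq. (31) may differ), and it evaluates derivatives of \(\phi _k(x)=(x-a)(b-x)L^{*}_k(x)\) directly by the product rule with Gauss–Legendre quadrature rather than through the operational matrix.

```python
import numpy as np
from numpy.polynomial.legendre import Legendre, leggauss

def basis_values(N, a, b, x):
    """Values of L*_k, phi_k, phi_k', phi_k'' at x; each array has shape (N+1, len(x))."""
    q, dq = (x - a) * (b - x), a + b - 2.0 * x
    L, phi, dphi, d2phi = [], [], [], []
    for k in range(N + 1):
        Lk = Legendre.basis(k, domain=[a, b])          # shifted Legendre L*_k
        v, d1, d2 = Lk(x), Lk.deriv(1)(x), Lk.deriv(2)(x)
        L.append(v)
        phi.append(q * v)
        dphi.append(dq * v + q * d1)                   # product rule
        d2phi.append(-2.0 * v + 2.0 * dq * d1 + q * d2)
    return tuple(np.array(t) for t in (L, phi, dphi, d2phi))

def petrov_galerkin(p, r, g, a, b, N, n_quad=60):
    """Solve y'' + p y' + r y = g, y(a) = y(b) = 0, testing the residual against L*_i."""
    t, w = leggauss(n_quad)
    x = 0.5 * (b - a) * t + 0.5 * (a + b)              # quadrature nodes on [a, b]
    w = 0.5 * (b - a) * w
    L, phi, dphi, d2phi = basis_values(N, a, b, x)
    op = d2phi + p(x) * dphi + r(x) * phi              # operator applied to each phi_j
    A = (L * w) @ op.T                                 # A[i, j] = int L*_i (op phi_j) dx
    rhs = (L * w) @ g(x)
    c = np.linalg.solve(A, rhs)
    return lambda s: c @ basis_values(N, a, b, np.asarray(s, dtype=float))[1]

def demo_error(N=10):
    """Max error for y'' - y = -(pi^2 + 1) sin(pi x) on [0, 1], exact y = sin(pi x)."""
    y = petrov_galerkin(lambda x: np.zeros_like(x), lambda x: -np.ones_like(x),
                        lambda x: -(np.pi ** 2 + 1.0) * np.sin(np.pi * x), 0.0, 1.0, N)
    xs = np.linspace(0.0, 1.0, 201)
    return np.max(np.abs(y(xs) - np.sin(np.pi * xs)))

if __name__ == "__main__":
    print(demo_error(10))
```

The test problem in `demo_error` is a made-up illustration and is not one of the examples treated in this paper.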

Linear second-order BVPs subject to nonhomogeneous boundary conditions

Consider the following one-dimensional second-order equation:

subject to the nonhomogeneous boundary conditions:

It is clear that the transformation

turns the nonhomogeneous boundary conditions (33) into the homogeneous boundary conditions:

Hence it suffices to solve the following modified one-dimensional second-order equation:

subject to the homogeneous boundary conditions (34), where

Solution of second-order nonlinear two-point BVPs

Consider the nonlinear differential equation

subject to the homogeneous conditions

If we follow the same procedure as in "Linear second-order BVPs subject to homogeneous boundary conditions" and approximate y(x) as in (27), then, after making use of the two relations (23) and (24), we get the following nonlinear equation in the unknown vector \(\mathbf C\):

To find the numerical solution \(y_{N}(x)\), we enforce (37) to be satisfied exactly at the \((N+1)\) roots of the polynomial \(L^{*}_{N+1}(x)\). Thus a set of \((N+1)\) nonlinear equations is generated in the expansion coefficients, \(c_{i}\). With the aid of the well-known Newton’s iterative method, this nonlinear system can be solved, and hence the approximate solution \(y_{N}(x)\) can be obtained.
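The collocation step followed by Newton's iteration can be sketched for the Bratu equation \(y''+\lambda e^{y}=0\), \(y(0)=y(1)=0\). This is an illustrative sketch under stated assumptions, not the authors' code: derivatives of \(\phi _k(x)=x(1-x)L^{*}_k(x)\) are evaluated by the product rule instead of the operational matrix, the initial guess is \(y\equiv 0\), and the reference solution assumes the closed form with \(\theta =\sqrt{2\lambda }\cosh (\theta /4)\). All function names are hypothetical.

```python
import math
import numpy as np
from numpy.polynomial.legendre import Legendre, leggauss

def basis_values(N, a, b, x):
    """phi_k = (x-a)(b-x) L*_k and phi_k'' at x; each array has shape (N+1, len(x))."""
    q, dq = (x - a) * (b - x), a + b - 2.0 * x
    phi, d2phi = [], []
    for k in range(N + 1):
        Lk = Legendre.basis(k, domain=[a, b])
        v, d1, d2 = Lk(x), Lk.deriv(1)(x), Lk.deriv(2)(x)
        phi.append(q * v)
        d2phi.append(-2.0 * v + 2.0 * dq * d1 + q * d2)
    return np.array(phi), np.array(d2phi)

def bratu_collocation(lam, N, tol=1e-12, max_iter=50):
    """Coefficients c_0..c_N from collocation at the roots of L*_{N+1} on [0, 1]."""
    a, b = 0.0, 1.0
    t, _ = leggauss(N + 1)                        # roots of L_{N+1} on [-1, 1]
    x = 0.5 * (b - a) * t + 0.5 * (a + b)         # mapped to [a, b]
    phi, d2phi = basis_values(N, a, b, x)
    c = np.zeros(N + 1)                           # initial guess y = 0
    for _ in range(max_iter):
        y = c @ phi
        F = c @ d2phi + lam * np.exp(y)           # residual at the collocation points
        J = d2phi.T + lam * np.exp(y)[:, None] * phi.T
        step = np.linalg.solve(J, -F)             # Newton update
        c += step
        if np.max(np.abs(step)) < tol:
            break
    return c

def bratu_exact(x, lam):
    """Assumed closed form; theta found by bisection on the lower branch."""
    s = math.sqrt(2.0 * lam)
    lo, hi = 0.0, 4.0 * math.asinh(4.0 / s)
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if mid < s * math.cosh(mid / 4.0) else (lo, mid)
    theta = 0.5 * (lo + hi)
    return -2.0 * np.log(np.cosh((x - 0.5) * theta / 2.0) / np.cosh(theta / 4.0))

def max_error(lam, N):
    c = bratu_collocation(lam, N)
    xs = np.linspace(0.0, 1.0, 201)
    phi, _ = basis_values(N, 0.0, 1.0, xs)
    return np.max(np.abs(c @ phi - bratu_exact(xs, lam)))

if __name__ == "__main__":
    for N in (4, 8, 12):
        print(N, max_error(1.0, N))
```

Starting Newton's method from \(y\equiv 0\) targets the lower solution branch; reaching the upper branch would need a different initial guess.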

Remark 2

Following a similar procedure to that given in "Linear second-order BVPs subject to nonhomogeneous boundary conditions", the nonlinear second-order Eq. (36) subject to the nonhomogeneous boundary conditions given as in (33) can be tackled.

Convergence analysis

In this section, we state and prove a theorem for convergence of the proposed method.

Theorem 2

The series solutions of Eqs. (25) and (36) converge to the exact ones.

Proof

Let

be the exact and approximate solutions (partial sums) to Eqs. (25) and (36) with \(N\geqslant M\). Then we have

We show that \(y_{N}(x)\) is a Cauchy sequence in the complete Hilbert space \(L_0^2[a,b]\) and hence converges.

Now,

From Bessel’s inequality, we have \(\displaystyle \sum _{i=0}^{\infty }|c_{i}|^2\) is convergent, which yields \(\big \Vert y_{N}(x)-y_{M}(x)\big \Vert _{w(x)}^2\rightarrow 0\) as \(M, N\rightarrow \infty\) and hence \(y_{N}(x)\) converges to say b(x). We prove that \(b(x)=y(x),\)

This proves \(\displaystyle \sum _{i=0}^{\infty }c_{i}\phi _i(x)\) converges to y(x). \(\square\)

Since convergence has been proved, consistency and stability can be easily deduced.

Numerical results and discussions

In this section, the algorithms presented in "Solution of second-order linear two-point BVPs" are applied to solve regular, singular and singularly perturbed problems. As expected, the accuracy increases as the number of terms of the basis expansion increases.

Example 1

Consider the second-order nonlinear equation (see, [34]).

The exact solution of (38) is

In Table 1, the maximum absolute error E is listed for various values of N, while Table 2 compares the numerical solution of problem (38) obtained by CMM with the two numerical solutions obtained by the sinc-collocation and sinc-Galerkin methods in [34].

Example 2

Consider the second-order nonlinear singular equation (see, [34]).

with the exact solution

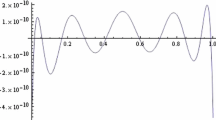

In Table 3, the maximum absolute error E is listed for various values of \(\sigma\) and N, while Table 4 compares the solution of Example 2 obtained by our method (CMM) with the two numerical solutions obtained in [34] for the case \(\sigma =\frac{1}{4}\). In addition, Fig. 1 illustrates the absolute error resulting from the application of CMM for the two cases \(N=10,\, \sigma =\frac{1}{4}\) and \(N=15,\, \sigma =\frac{1}{4}\).

Fig. 1 The absolute error of Example 2 for \(\sigma =\frac{1}{4}\)

Example 3

Consider the following singularly perturbed linear second-order BVP (see, [35])

where \(\bar{\epsilon }=\sqrt{4\epsilon +1},\) with the exact solution

In Table 5, the maximum absolute error E is listed for various values of \(\epsilon\) and N, while in Table 6 we compare the solution of Example 3 obtained by our method (PGMM) with the solution obtained by the shooting method given in [35].

Example 4

Consider the following nonlinear second-order boundary value problem:

with the exact solution \(y(x)=x^2-x^4.\) Making use of (9) with \(N=2\) yields

Moreover, in this case the two matrices M and \(M^2\) take the forms

Now, with the aid of Eq. (37), we have

We enforce (40) to be satisfied exactly at the roots of \(L_3(x)\), namely, \(-\sqrt{\frac{3}{5}},\,0,\,\sqrt{\frac{3}{5}}.\) This immediately yields three nonlinear algebraic equations in the three unknowns, \(c_0, c_1\) and \(c_2\). Solving these equations, we get

and hence

which is the exact solution.

Example 5

Consider the following Bratu equation (see, [28–31]):

\[ y''(x)+\lambda \, e^{y(x)}=0,\quad y(0)=y(1)=0,\quad 0\leqslant x\leqslant 1, \tag{41} \]

with the analytical solution

\[ y(x)=-2\ln \left[ \frac{\cosh \left( \left( x-\frac{1}{2}\right) \frac{\theta }{2}\right) }{\cosh \left( \frac{\theta }{4}\right) }\right] , \]

where \(\theta\) is the solution of the nonlinear equation \(\theta =\sqrt{2\lambda }\cosh \left( \frac{\theta }{4}\right)\). The algorithm presented in "Solution of second-order nonlinear two-point BVPs" is applied to numerically solve Eq. (41) for the three cases \(\lambda =1,\,2\) and 3.51, which yield \(\theta =1.51716,\,2.35755\) and 4.66781, respectively. In Table 7, the maximum absolute error E is listed for various values of N, and in Table 8 we compare the best errors obtained by various methods used to solve Example 5. This table shows that our method is more accurate than the methods developed in [28–31]. In addition, Fig. 2 compares the solutions obtained by our algorithm (CMM) for \(\lambda =1\) and \(N=1,2,3\).

Fig. 2 Different solutions of Example 5

Concluding remarks

In this paper, a novel matrix algorithm for obtaining numerical spectral solutions of second-order boundary value problems is presented and analyzed. The derivation of this algorithm is essentially based on choosing a set of basis functions, expressed in terms of shifted Legendre polynomials, that satisfy the boundary conditions of the given boundary value problem. The two spectral methods, namely the Petrov–Galerkin and collocation methods, are used for handling linear and nonlinear second-order boundary value problems, respectively. One of the main advantages of the presented algorithms is their applicability to both linear and nonlinear second-order boundary value problems, including some important singularly perturbed equations and a Bratu-type equation. Another advantage of the developed algorithms is that highly accurate approximate solutions are achieved using a small number of terms of the suggested expansion. The obtained numerical results compare favorably with the analytical ones. We believe that the algorithms proposed in this article can be extended to treat other types of problems, including some two-dimensional problems.

References

1. Canuto, C., Hussaini, M.Y., Quarteroni, A., Zang, T.A.: Spectral methods in fluid dynamics. Springer, New York (1988)

2. Fornberg, B.: A practical guide to pseudospectral methods. Cambridge University Press, Cambridge (1998)

3. Peyret, R.: Spectral methods for incompressible viscous flow. Springer, New York (2002)

4. Trefethen, L.N.: Spectral methods in MATLAB. SIAM, Philadelphia (2000)

5. Gottlieb, D., Hesthaven, J.S.: Spectral methods for hyperbolic problems. J. Comput. Appl. Math. 128, 83–131 (2001)

6. Bhrawy, A.H.: An efficient Jacobi pseudospectral approximation for nonlinear complex generalized Zakharov system. Appl. Math. Comp. 247, 30–46 (2014)

7. Guo, B.-Y., Yan, J.-P.: Legendre–Gauss collocation method for initial value problems of second order ordinary differential equations. Appl. Numer. Math. 59, 1386–1408 (2009)

8. Bhrawy, A.H., Zaky, M.A.: A method based on the Jacobi tau approximation for solving multi-term time–space fractional partial differential equations. J. Comp. Phys. 281, 876–895 (2015)

9. Doha, E.H., Abd-Elhameed, W.M.: Efficient spectral ultraspherical-dual-Petrov–Galerkin algorithms for the direct solution of (2n+1)th-order linear differential equations. Math. Comput. Simulat. 79, 3221–3242 (2009)

10. Doha, E.H., Abd-Elhameed, W.M., Youssri, Y.H.: Efficient spectral-Petrov–Galerkin methods for the integrated forms of third- and fifth-order elliptic differential equations using general parameters generalized Jacobi polynomials. Appl. Math. Comput. 218, 7727–7740 (2012)

11. Doha, E.H., Abd-Elhameed, W.M., Youssri, Y.H.: Efficient spectral-Petrov–Galerkin methods for third- and fifth-order differential equations using general parameters generalized Jacobi polynomials. Quaest. Math. 36, 15–38 (2013)

12. Doha, E.H., Abd-Elhameed, W.M., Bhrawy, A.H.: New spectral-Galerkin algorithms for direct solution of high even-order differential equations using symmetric generalized Jacobi polynomials. Collect. Math. 64(3), 373–394 (2013)

13. Abd-Elhameed, W.M.: Some algorithms for solving third-order boundary value problems using novel operational matrices of generalized Jacobi polynomials. Abstr. Appl. Anal. 2015, Article ID 672703, 10 pp. (2015)

14. Yu, C.C., Heinrich, J.C.: Petrov–Galerkin methods for the time dependent convective transport equation. Int. J. Numer. Meth. Eng. 23, 883–901 (1986)

15. Doha, E.H., Abd-Elhameed, W.M.: Efficient spectral-Galerkin algorithms for direct solution of second-order equations using ultraspherical polynomials. SIAM J. Sci. Comput. 24, 548–571 (2006)

16. Doha, E.H., Abd-Elhameed, W.M., Bassuony, M.A.: New algorithms for solving high even-order differential equations using third and fourth Chebyshev–Galerkin methods. J. Comput. Phys. 236, 563–579 (2013)

17. Abd-Elhameed, W.M.: On solving linear and nonlinear sixth-order two point boundary value problems via an elegant harmonic numbers operational matrix of derivatives. CMES Comp. Model. Eng. Sci. 101(3), 159–185 (2014)

18. Doha, E.H., Abd-Elhameed, W.M., Bhrawy, A.H.: Efficient spectral ultraspherical-Galerkin algorithms for the direct solution of 2nth-order linear differential equations. Appl. Math. Model. 33, 1982–1996 (2009)

19. Bratu, G.: Sur les équations intégrales non linéaires. Bull. Soc. Math. France 43, 113–142 (1914)

20. Gelfand, I.M.: Some problems in the theory of quasi-linear equations. Trans. Amer. Math. Soc. Ser. 2, 295–381 (1963)

21. Aregbesola, Y.A.S.: Numerical solution of Bratu problem using the method of weighted residual. Electron. J. South. Afr. Math. Sci. 3, 1–7 (2003)

22. Hassan, I., Erturk, V.: Applying differential transformation method to the one-dimensional planar Bratu problem. Int. J. Contemp. Math. Sci. 2, 1493–1504 (2007)

23. He, J.H.: Some asymptotic methods for strongly nonlinear equations. Int. J. Mod. Phys. B 20, 1141–1199 (2006)

24. Li, S., Liao, S.: An analytic approach to solve multiple solutions of a strongly nonlinear problem. Appl. Math. Comput. 169, 854–865 (2005)

25. Mounim, A., de Dormale, B.: From the fitting techniques to accurate schemes for the Liouville–Bratu–Gelfand problem. Numer. Meth. Part. Diff. Eq. 22, 761–775 (2006)

26. Syam, M., Hamdan, A.: An efficient method for solving Bratu equations. Appl. Math. Comput. 176, 704–713 (2006)

27. Wazwaz, A.M.: Adomian decomposition method for a reliable treatment of the Bratu-type equations. Appl. Math. Comput. 166, 652–663 (2005)

28. Abbasbandy, S., Hashemi, M.S., Liu, C.-S.: The Lie-group shooting method for solving the Bratu equation. Appl. Math. Comput. 16, 4238–4249 (2011)

29. Caglar, H., Caglar, N., Özer, M., Valaristos, A., Anagnostopoulos, A.N.: B-spline method for solving Bratu’s problem. Int. J. Comput. Math. 87(8), 1885–1891 (2010)

30. Khuri, S.A.: A new approach to Bratu’s problem. Appl. Math. Comput. 147, 131–136 (2004)

31. Deeba, E., Khuri, S.A., Xie, S.: An algorithm for solving boundary value problems. J. Comput. Phys. 159, 125–138 (2000)

32. Boyd, J.P.: One-point pseudospectral collocation for the one-dimensional Bratu equation. Appl. Math. Comput. 217, 5553–5565 (2011)

33. Adams, R.A., Fournier, J.F.: Sobolev spaces, pure and applied mathematics series. Academic Press, New York (2003)

34. Mohsen, A., El-Gamel, M.: On the Galerkin and collocation methods for two-point boundary value problems using sinc bases. Comput. Math. Appl. 56, 930–941 (2008)

35. Natesan, S., Ramanujam, N.: Shooting method for the solution of singularly perturbed two-point boundary-value problems having less severe boundary layer. Appl. Math. Comput. 133, 623–641 (2002)

Acknowledgments

The authors would like to thank the referee for carefully reading the paper and for the useful comments, which have improved the paper.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Abd-Elhameed, W.M., Youssri, Y.H. & Doha, E.H. A novel operational matrix method based on shifted Legendre polynomials for solving second-order boundary value problems involving singular, singularly perturbed and Bratu-type equations. Math Sci 9, 93–102 (2015). https://doi.org/10.1007/s40096-015-0155-8

DOI: https://doi.org/10.1007/s40096-015-0155-8

Keywords

- Shifted Legendre polynomials

- Second-order equations

- Singular and singularly perturbed

- Bratu equation

- Petrov–Galerkin method

- Collocation method