Abstract

Motivated by the hunting strategies of cheetahs, this paper proposes a nature-inspired algorithm called the cheetah optimizer (CO). Cheetahs generally utilize three main strategies for hunting prey, i.e., searching, sitting-and-waiting, and attacking. These strategies are adopted in this work. Additionally, the leave the pray and go back home strategy is also incorporated in the hunting process to improve the proposed framework's population diversification, convergence performance, and robustness. We perform intensive testing over 14 shifted-rotated CEC-2005 benchmark functions to evaluate the performance of the proposed CO in comparison to state-of-the-art algorithms. Moreover, to test the power of the proposed CO algorithm over large-scale optimization problems, the CEC2010 and the CEC2013 benchmarks are considered. The proposed algorithm is also tested in solving one of the well-known and complex engineering problems, i.e., the economic load dispatch problem. For all considered problems, the results are shown to outperform those obtained using other conventional and improved algorithms. The simulation results demonstrate that the CO algorithm can successfully solve large-scale and challenging optimization problems and offers a significant advantage over different standards and improved and hybrid existing algorithms. Note that the source code of the CO algorithm is publicly available at https://www.optim-app.com/projects/co.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Introduction

Background

Recently, solving optimization problems have become a challenging and exciting topic in most research area. Decision-making problems that are overgrowing can be defined as optimization problems. An optimization problem includes one or more objective functions, decision variables, and constraints to be minimized or maximized1. Many deterministic approaches, such as linear programming, Newton methods, quadratic programming, dynamic programming, simplex method, gradient method, etc., are the well-known classical methods to solve optimization problems2,3. These algorithms robustly result in the same solution for a given optimization problem with an identical initial starting point. Although such techniques can find optimal solutions in a reasonable time, they need the objective function and constraints to be convex and derivable4. These cause the deterministic algorithms to fall into locally optimal solutions, which is the main shortcoming of such methods in solving real-world problems. This defect becomes more prominent as the dimension of the problem increases. Therefore, stochastic methods for dealing with it have been developed5,6,7. These algorithms intrinsically neglect the characteristics of the objective functions and constraints, so they treat the problem as a black box. Another advantage of the most metaheuristic algorithms is their simplicity.

How metaheuristics solve a problem is similar. Evolve a set of random solutions in an iterative procedure and keep a good balance between exploration and exploitation phases8. To this end, the search process is divided into two stages exploration and exploitation9. The former assists an algorithm to search globally, and the latter helps it locally search around the probable region obtained by the former phase10. The common feature in metaheuristic algorithms is their randomness which can help them to avoid local solutions. However, these features cause metaheuristic algorithms not to guarantee the global solution. Furthermore, they may result in different solutions in each run11.

According to the No Free Lunch (NFL) theorem12, there is no unique algorithm to solve all optimization problems effectively. This theorem motivates researchers to introduce new algorithms to be applied in various fields of study13. Many recently developed nature-inspired metaheuristic algorithms evidenced this theorem and encouraged us to introduce a novel nature-inspired algorithm based on cheetahs' hunting strategies.

Literature review

Metaheuristic algorithms can be categorized into two main classes, single-solution-based and multiple-solution-based (or population-based). The most popular single-solution-based metaheuristic algorithm is simulated annealing (SA)14. This algorithm's process starts with a random candidate solution (a population) and then moves and improves it in the promising search space in an iterative manner to find the superior solution. However, multiple-solution-based algorithms implement more than one random candidate solution to enhance the speed and the chance to avoid local optima entrapment.

Population-based metaheuristic algorithms can be classified into evolutionary, physics, chemistry, and swarm-based algorithms15. Most popular evolutionary-based metaheuristic algorithms are genetic algorithm (GA)16, genetic programming (GP)17, evolutionary programming (EP)18, evolutionary strategy (ES)19, biogeography-based optimizer (BBO)20 and differential evolution (DE)21. The basis of population improvement and movement in these algorithms is derived from the concept of evolution in nature.

Physics-based metaheuristic algorithms are another type of population-based optimization algorithm. The improvement and movement of the population through search space in these algorithms is made by directly deploying the known laws of physics. These rules include the laws of mechanics, relativity, gravity, electrodynamics, electromagnetism, optics, etc.15. The most famous of these algorithms only cover those which have at least 100 citations, as measured by Google Scholar (collected in March 2021) are, respectively: gravitational search algorithm (GSA)22, big bang-big crunch (BB-BC)23, charged system search (CSS)24, the electromagnetism-like mechanism (EM)25, water cycle algorithm (WCA)26, extremal optimization (EO)27, ray optimization (RO)28, central force optimization (CFO)29, intelligent water drops (IWD)30, chaos optimization algorithm (COA)31, galaxy-based search algorithm (GBSA)32 and river formation dynamics algorithm (RFDA)33.

Another class of population-based metaheuristic algorithms is chemistry-based algorithms. These algorithms are created based on molecules' chemical reactions and features. The most popular chemistry-based algorithms are artificial chemical reaction optimization algorithm (ACROA)34, gases Brownian motion optimization (GBMO)35 and artificial chemical process (ACP)36.

The most popular class of population-based metaheuristic algorithms for researchers is swarm-based algorithms. This type of algorithm is a model of the behavior and social intelligence of a group of living things, such as birds, ants, swarms, schools and so on15. Some of the most popular algorithms in this category are particle swarm optimization (PSO) in 199537, ant colony algorithms (ACO) in 199138, artificial bee colony (ABC) in 200739, Cuckoo Search (CS) in 200940, grey wolf optimizer (GWO) in 201441, firefly algorithm (FA) in 200942, bacterial foraging algorithm (BFA) in 200243, whale optimization algorithm (WOA) in 201644, bat algorithm (BA) in 201045, Shuffled frog leaping algorithm (SFLA) in 200346, bees algorithm (BA) in 200647, moth-flame optimization (MFO) in 201548, krill herd (KH) in 201249, ant lion optimizer (ALO) in 201550, fruit fly optimization algorithm (FOA) in 201251, and glowworm swarm optimization (GSO) in 200952.

Motivation

Some swarm intelligence algorithms are based on animals' hunting and foraging behaviors in nature. Some hunters can hunt the prey individually or in a herd with some numbers, and other members may not participate in the hunting process. Furthermore, in some cases, a small number of hunters can cover a large hunting area. These special features of the cheetah for hunting motivated us to study its behavior more carefully and base it on the development of an optimization algorithm. The hunting processes are modeled in two simple sit and wait beside the attacking mode strategies. Indeed, despite other methods which use some complicated equations in the evolution process, the cheetah optimizer (CO) employs some simple techniques, while the hunting strategies help increase the algorithm's effectiveness. Sitting and waiting to make the prey available, back to home in case of unsuccessful hunting process, return to last successful hunting if the prey not found for sometimes. These are the main strategies in CO. The algorithm performance confirms that the hunting process's characteristics have been modeled in the proposed CO.

Contributions

The main contributions of this paper are listed as follows:

-

1.

A new population-based metaheuristic called CO algorithm is investigated, formulated, and tested on different benchmark functions.

-

2.

Since the hunting processes of cheetahs, i.e., search, sit-and-wait, attack, and leave the prey and go back home, are modeled wholly and simply; thus, the number of initial populations are decreased dramatically in small to large scale optimization problems.

-

3.

The proposed CO method needs a small number of equations while the hunting strategies try to model the hunting process. These strategies create a suitable trade-off between the exploration and exploitation searches and prevent premature convergence in the different optimization problems. Therefore, the concepts of proposed strategies can be used to enhance the performance of other metaheuristic algorithms effectively.

It should be noted that there are existing algorithms in the literature inspired by cheetah53,54,55. In53, it has been tried to use the cheetahs' feature in the chasing mode as an optimization algorithm, but the model is not well shown conceptually and mathematically. In54, an algorithm based on the hunting behavior of cheetahs was introduced. The model has been established based on GWO and modified to adapt to the group hunting behavior of cheetahs, such as leadership hierarchy and communication between teammates during the hunting. However, these algorithms have not been able to make good use of all the features and strategies of cheetahs during hunting and model them mathematically. This article tries to cover these shortcomings well to present a more realistic behavior of cheetahs in creating a robust meta-heuristic algorithm.

The rest of this paper is organized as follows. The inspiration for the suggested method is briefly addressed in “Inspiration” section. “Mathematical model and algorithm” section presents the proposed CO algorithm's mathematical model. “Simulation results” section presents experimental findings and discussions for various benchmark test functions and economic load dispatch (ELD) problems. Finally, concluding remarks and future work are summarized in “Conclusion” section.

Inspiration

Cheetah (Acinonyx jubatus) is the primary cat breed and fastest land animal living in the central areas of Iran and Africa56. The cheetah's speed can reach over 120 km per hour. The cheetahs' agility and speed are their physical characteristics like a long tail, long and thin legs, lightweight and flexible spine. Cheetahs are quick animals capable of stealthy movement, fast returning during predation, and specific spotted coats; however, these visual predators cannot maintain their high-speed action for a long time. Therefore, the chasing must be less than half of a minute57.

Moreover, their speed significantly decreases from 93 km/h or 58 mph to 23 km/h 14 mph only in three strides after catching the prey. Due to the mentioned limitation of cheetahs in maintaining their speed, they precisely observe the environment after staying on small branches or hills to identify their prey. Furthermore, these big cats can effortlessly blend into the high and dry grass due to their specific coats58.

These predators usually hunt gazelles, specifically Thomson's gazelles, impalas, antelopes, hares, birds, rodents, and calves of more fabulous herd animals. First, they move slowly toward the prey with a crouched posture to be hidden and reach the minimum distance, stopping hidden and waiting for the prey to approach the predator. This is because they stop hunting if the prey observes the predator. The mentioned minimum distance is almost 60–70 m or 200–230 ft; however, it is determined to be 200 m or 660 ft if they cannot stay hidden appropriately. Specifically, the pursuit duration is 60 s with a mean distance of 173 m or 568 ft to 559 m or 1834 ft. Then, the prey's balance is lost after their rump is beaten with the cheetah's forepaw, and finally, the predator brings down the prey using too much force and turns it, which makes the prey try to escape59. Cheetahs' muscular tails' back and forth movement also helps them achieve sharp turns60. Generally, hunting the animals that move far from their herds or have less caution is much easier61,62. It should be noted that there are various determinants associated with predation, including maturity, gender, the number of predators, and the carelessness of prey. Also, coalitions or mothers with cubs tend to hunt more giant animals successfully.

According to the biological investigations, it has been found that cheetahs have remarkable spinal flexibility and long tails that lead to their physical balance. Moreover, they have collarbone-separated shoulder blades that facilitate the movement of the shoulders. All the characteristics mentioned earlier make these big cats considered remarkable predators; however, not all the predations are successful.

Mathematical model and algorithm

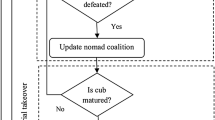

When a cheetah is patrolling or scanning its surroundings, it is possible to detect prey. Seeing the prey, the cheetah may sit in its place and wait until the prey gets closer to it and then starts the attack. The attack mode includes rushing and capturing phases. The cheetah may give up the hunting for several reasons, such as its energy limits, fast prey fleeing, etc. Then, they may go back home to rest and start new hunting. By assessing the prey, his/her condition, area and distance to the prey, the cheetah may choose one of these strategies, as depicted in Fig. 163. Overall, the CO algorithm is based on intelligently utilizing these hunting strategies during hunting periods (iterations).

-

Searching: Cheetahs need to search, including scanning or active search, in their territories (search space) or the surrounding area to find their prey.

-

Sitting-and-waiting: After the prey is detected, but the situation is not proper, cheetahs may sit and wait for the prey to come nearer or for the position to be better;

-

Attacking: This strategy has two essential steps:

-

Rushing: When the cheetah decides to attack, they rush toward the prey with maximum speed.

-

Capturing: The cheetah used speed and flexibility to capture the prey by approaching the prey.

-

-

Leave the prey and go back home: Two situations are considered for this strategy. (1) If the cheetah is unsuccessful in hunting the prey, it should change its position or return to its territory. (2) In cases with no successful hunting action in some time interval, it may change its position to the last prey detected and searched around it.

The mathematical models of the hunting mentioned above strategies are provided in detail in the following sections. Then, the CO is outlined.

Search strategy

Cheetahs seek prey in two ways; either scan the environment while sitting or standing or actively patrols the surrounding area. Scanning mode is more suitable when the prey is dense and grazing while walking on the plains. On the other hand, choosing an active mode that needs more energy than the scan mode is better if the prey is scattered and active. Therefore, during the hunting period, depending on the prey's condition, the coverage of the area, and the condition of the cheetahs themselves, a chain of these two search modes may be selected by the cheetah. To mathematically model this searching strategy of cheetahs, let \(X_{i,j}^{t}\) denote the current position of cheetah i (i = 1, 2, …, n) in arrangement j (j = 1, 2, …, D), where n is the number of cheetahs population and D is the dimension of the optimization problem. Indeed, each cheetah experiences different situations dealing with various prey. Each prey is a location of a decision variable corresponding to the best solution while the cheetah's states (other arrangements) construct a population.

Then, the following random search equation is proposed for updating the new position of cheetah i in each arrangement based on their current position, and an arbitrary step size as follows:

where \(X_{i,j}^{t + 1}\) and \(X_{i,j}^{t}\) are the next and the current positions of cheetah i in arrangement j, respectively. Index t denotes the current hunting time, and \(T\) is the maximum length of hunting time. \(\hat{r}_{i,j}^{ - 1}\) and \(\alpha_{i,j}^{t}\) are the randomization parameter and step length for cheetah i in arrangement j, respectively. The second term is the randomization term, where the randomization parameter \(\hat{r}_{i,j}^{{}}\) is normally distributed random numbers from a standard normal distribution. The step length \(\alpha_{i,j}^{t} > 0\) in most cases can be set at \(0.001 \times t/T\) as cheetahs are slow-walking searchers. In encountering other hunters (enemies), the cheetahs may escape rapidly and change their directions. To reflect such behavior as well as near/far destination search mode, the random number \(\hat{r}_{i,j}^{ - 1}\) is used here for each cheetah in different hunting periods. In some cases, \(\alpha_{i,j}^{t}\) can be adjusted by the distance between the cheetah i and his/her neighborhood or leader. The position of a cheetah (named leader) in each arrangement of cheetahs is updated by assuming \(\alpha_{i,j}^{t}\) equal to \(0.001 \times t/T\) multiplied by the maximum step size (here, we consider it based on the variable limits, i.e., the upper limit minus the lower limit). For other members, \(\alpha_{i,j}^{t}\) in each cheetah's arrangement is calculated by multiplying the distance between the position of cheetah i and a randomly selected cheetah. Figure 2a illustrates the search strategy.

There is a distance between the leader and the prey (i.e., the best solution in this paper). Thus, the leader position is selected based on the prey position by changing some variables in the best solution. It is to be expected that the leader and the prey would be closer by the time unless the hunting time is terminated, leading to an updated leadership position. It should be noted that the step size of the cheetah is entirely random, and the CO would consider this. Therefore, the CO can effectively solve the optimization problems correctly by utilizing any randomization parameter and random step size, i.e.,\({ }\hat{r}_{i,j}^{ - 1}\) and \(\alpha_{i,j}^{t}\).

Sit-and-wait strategy

During the searching mode, the prey may expose to a cheetah's field of vision. In this situation, every movement of the cheetah may make the prey aware of his/her presence and lead to the escape of the prey. To avoid this concern, the cheetah may decide to ambush (by lying on the ground or hiding among the bushes) to get close enough to the prey. Therefore, in this mode, the cheetah remains at his/her position and waits for the prey to come nearer (see Fig. 2b). This behavior can be modeled as follows:

where \(X_{i,j}^{t + 1}\) and \(X_{i,j}^{t}\) are the updated and current positions of cheetah i in arrangement j, respectively. This strategy requires the CO algorithm not to change all cheetahs simultaneously in each group to increase the success of hunting (finding a better solution) and hence can assist it in avoiding premature convergence.

Attack strategy

Cheetahs use two crucial factors to attack their prey: speed and flexibility. When a cheetah decides to attack, he/she rushes to the prey at full speed. After a while, the prey notices the cheetah's attack and begins to flee. The cheetah rapidly pursues the prey in the interception path with its keen eyes, as shown in Fig. 2c. In other words, the cheetah follows the position of the prey and adjusts its direction of movement in such a way as to block the prey's way at one point. Because the cheetah has reached a short distance from the prey at maximum speed, the prey must escape and change its position suddenly to survive, as shown in Fig. 2d, i.e., the next position of the cheetah is near the last position of prey. Also, as shown in Fig. 2d, probably, the one cheetah doesn't participate in an attacking strategy that completely matches the natural hunting of cheetahs. The cheetah uses speed and flexibility to capture the prey in this phase. In a group hunting method, each cheetah may adjust his/her position based on the fleeing prey and the position of the leader or neighborhood cheetah. Simply, these all attacking tactics of cheetahs are mathematically defined as follows:

where \(X_{B,j}^{t}\) is the current position of the prey in arrangement j. In other words, it is the current best position of the population. \(\check{r}_{i,j}\) and \(\beta_{i,j}^{t}\) are respectively the turning factor and interaction factor associated to the cheetah i in arrangement j. \(X_{B,j}^{t}\) is used in (3) because, in attacking mode, the rushing strategy of cheetahs by utilizing maximum speed helps them get as close as possible to the prey's position in a short time. Hence, this paper calculates the new position of the i-th cheetah in attacking mode based on the prey's current position. In the second term, the turning factor \(\beta_{i,j}^{t}\) reflects the interaction between the cheetahs or between a cheetah and leader in the capturing mode. Mathematically, this factor can be defined as the difference between the neighborhood cheetah's position, \(X_{k,j}^{t}\) (\(k \ne i\)), and the i-th cheetah's position, \(X_{i,j}^{t}\). The turning factor \(\check{r}_{i,j}\) is a random number that is equal to \(|r_{i,j} |^{{{\text{exp}}\left( {r_{i,j} /2} \right)}} {\text{sin}}\left( {2\pi r_{i,j} } \right)\) in this paper. \(r_{i,j}\) is normally distributed random numbers from a standard normal distribution. This factor reflects the sharp turns of the cheetahs in the capturing mode.

Hypotheses

Based on the behaviors of cheetahs in haunting, the following assumptions are considered in the proposed CO algorithm:

-

1.

Each row of the cheetahs’ population is modeled as a cheetah in different states. Each column represents a specific arrangement of cheetahs concerning the prey (best solution of each decision variable). In other words, cheetahs follow their prey (best point of a variable). To find the best optimal solution, the cheetahs require success in capturing the prey in each arrangement. The performance of each cheetah is evaluated by the value of fitness function for that cheetah in all arrangements. The higher performance of a cheetah indicates a higher probability of hunting success.

-

2.

In a real group hunting process, the reactions of all cheetahs are different from others. Indeed, in every arrangement, a cheetah may be in attacking mode while the other cheetahs may be in one of searching, sitting-and-waiting, and attacking modes. Also, the cheetahs' energy is independent of the prey. Modeling each decision variable as an arrangement of cheetahs, besides using the random parameters \(\hat{r}_{i,j}^{ - 1}\) and \(\check{r}_{i,j}\) results in preventing premature convergence even in an extremely large evolution process. These random variables can be considered an energy source for cheetahs during the hunting process. These two critical ideas have been neglected in previous hunting evolutionary methods that significantly affect optimization performance. In the attacking strategy, the cheetah's direction depends on the prey, but the cheetah movements have completely random behavior in the searching strategy.

-

3.

The behaviors of cheetahs during the searching or the attacking strategies are assumed to be completely random, as depicted in Fig. 2 by the red dash-line, but during the rushing and the capturing mode, the prey scape in a sharp changing direction, as depicted in the last movement shown in Fig. 2d. The randomization parameter \(\hat{r}_{i,j}^{{}}\) and the turning factor \(\check{r}_{i,j}\) model these random movements. Changing the step length \(\alpha_{i,j}^{t}\) and interaction factor \(\beta_{i,j}^{t}\) with completely random variables also lead to a suitable optimization process. These confirm that the hunting process is modeled precisely.

-

4.

In the hunting process, the searching or the attacking strategy is deployed randomly, but the searching strategy becomes more likely over time due to decreasing the cheetah's energy level. In some cases, the first steps are devoted to the search strategy, while the attack strategy is selected for large values of t to achieve better solutions. Assuming \(r_{2}\) and \(r_{3}\) as uniformly random numbers from [0, 1]. If \(r_{2} \ge r_{3}\) the sit and wait strategy is selected; otherwise, one of the searching or the attacking strategies is selected based on a random value \(H = {\text{e}}^{{2\left( {1 - t/T} \right)}} \left( {2r_{1} - 1} \right)\) where \(r_{1}\) is a uniformly random number from [0, 1]. By tuning \(r_{3}\), the switching rate between the sit-and-wait strategy and two other strategies can be controlled. For instance, based on our experiences, if the objective function is too sensitive to the changes in some decision variables (this can reflect the sensitivity of prey to the movement of the cheetah), the value of \(r_{3}\) can be selected as small random numbers. This situation increases the sit-and-wait mode to be chosen by a cheetah, decreasing the rate of changing decision variables. Hence, the success probability of hunting (finding better solutions) is increased. Increasing \(t\) in the \(H\) function decreases the chance of choosing the attacking strategy by a cheetah due to the energy limitation. Still, this probability is not zero, which get entirely inspired by the cheetah's behavior. To do this, if \(H \ge r_{4}\), attack mode is selected else the search mode is implemented. \(r_{4}\) is a random number between 0 and 3. Here, higher values of the \(r_{4}\) highlights the exploitation phase while decreasing it increases the exploration process.

-

5.

The scanning and sitting-and-waiting strategies have the same meaning in the CO algorithm, indicating that the cheetah (search agent) has no movement during the hunting period.

-

6.

If the leader fails in hunting in some consecutive hunting processing, the position of a randomly chosen cheetah is changed into the last place of success hunting (i.e., the prey position). Maintaining the prey position among a small population in this algorithm strengthens the exploration phase.

-

7.

Each group of cheetahs has a limitation on the hunting time due to their energy limitations. Hence, if a group couldn't be succussed in a hunting period, the current prey is left, and the group comes back to its home range (initial position in this paper) to rest and then start another hunting. Indeed, a group of cheetahs will return home if their energies (which is modeled by hunting time) decrease and the leader's position is constant. In this condition, the position of leader is also updated. As a result of this strategy, it is possible to avoid getting stuck in local optimum solutions.

-

8.

In each iteration, part of members participated in the evolution process.

The essential phases of the CO algorithm may be represented as the pseudo-code summarized in Algorithm 1 based on cheetah hunting techniques and assumptions. The source codes of this algorithm can be found in Supplementary Information.

Algorithm complexity

When evaluating the performance of an algorithm, its complexity is an important metric. In order to initialize each population in the CO as well as other algorithms such as PSO, GWO, GA, and DE, it requires \({\mathcal{O}}\left( {o \times n} \right)\) time, where \(o\) represents the number of objectives and \(n\) represents the number of populations. Every algorithm has an \({\mathcal{O}}\left( {MaxIt \times ef} \right)\) complexity, where \(MaxIt\) is the maximum number of iterations and \(ef\) defines evaluation function complexity for a given problem. If the entire process is simulated, \({\mathcal{O}}\left( N \right)\) would require. Accordingly, algorithms such as PSO, and GWO have a computational complexity of \({\mathcal{O}}\left( {N \times MaxIt \times o \times n \times ef} \right)\). For CO, GA and DE algorithms, the computational complexity is \({\mathcal{O}}\left( {N \times MaxIt \times o \times n \times ef \times \left( {mu + cr} \right)} \right)\), where \(mu\) denotes mutation operations, and \(cr\) denotes crossover. Table 1 provides information on the average running time of CO and other algorithms for shifted sphere function with 10 variables for 1000 function evaluations and 25 independent runs. The population size for all algorithms is set at 10 and the other parameters of the competitor algorithms are given in Table 2. GWO is more time efficient compared to other approaches in terms of seconds. By contrast, GA exhibit relatively higher computational time than other competitors. The results of Table 1 indicate that, the CO can find better optimal solution in terms of mean and standard deviation (SD) than other algorithms in a reasonable time. It should be noted that the computational time also depends on the coding method of each algorithm. We use typical source codes in this comparison.

Simulation results

Results of CO on 500-D shifted CEC2005 test functions

In this benchmark, f1 to f7 are classical unimodal functions, and f8 to f13 are multimodal functions. This case study is designed to analyze the CO behavior compared to well-known algorithms such as DE, GWO, GA, PSO, and TLBO in large-scale 500-D shifted benchmark functions. In Table 2, the parameters for CO and other competing algorithms are shown. Since the population number differs from one case to another, the maximum number of function evaluations is set to 12 × 105 for all algorithm to have a firm comparison. The statistical results of this case are presented in Table 3. These results confirm the superiority of CO algorithm over the competing algorithms for nine test functions f1, f3, f4, f6, f7, f9, f10, f12, and f13 in terms of mean and standard deviation. For test functions f2, and f11, DE algorithm is better than the other algorithms. GA provides better solution for test function f8, and TLBO shows better solution for test function f5. Also, according to Freidman’s rank test, the proposed CO algorithm shows the best performance among different competitor algorithms.

One of the major features of CO algorithm is the high capability in the exploitation phase, where the proposed strategies keep the population diversity and search the whole feasible search space, even in large scale optimization problem with small size of initial population, i.e., n = 6. Indeed, in all objective functions, the population moves towards better solutions in consecutive iterations without trapping in local optima.

Results of CO on shifted-rotated CEC2005 test functions

The set of benchmarks with 14 shifted-rotated CEC2005 functions with 30 variables are tested in this section. The results of CO are compared with several powerful, well-known evolutionary algorithms such as whale optimization algorithm (WOA)44, emperor penguin optimizer (EPO)64, slime mould algorithm (SMA)65, Jaya66, heat transfer search (HTS)67, modified particle swarm optimizer (MPSO)68, self-adaptive DE (jDE)69, DE70, and global and local real-coded genetic algorithms based on parent-centric crossover operators (GL-25)71. The parameters of each algorithm are reported in Table 4 and for a fair comparison the number of fitness evaluations are set to 3 × 105 for all algorithms.

The performance of CO is analyzed through the searching history, convergence, and trajectory curves. Also, some aspects of CO are cleared using the curves of minimum, maximum and mean value of the objective function in each iteration. Table 5 compares the statistical results of CO with nine evolutionary algorithms. As can be observed from Table 5, the proposed CO algorithm in all functions provides better solution than the WOA, EPO, SMA, Jaya, HTS, DE, and jDE algorithms. Also, in all test functions except f11, CO algorithm overcomes the MPSO algorithm. Compared to GL-25, CO algorithm has been able to get better solutions for nine functions, i.e., functions f2, f3, f7, f8, f9, f11, f12, f13, and f14. By contrast, for f1, f4, f5, f6, f10 GL-25 is better than other algorithms. Mean rank values from Freidman test reveal that CO algorithm has the best performance among the competitor algorithms for solving 30-D shifted-rotated CEC2005 test functions.

The plotted search history in Fig. 3 shows that the CO gains a better solution through exploring the feasible search space. As shown in Fig. 3, each variable changes its location from the lower limit to its upper limits several times, which guarantees to find suitable solutions. This regular searching history is a unique feature in the CO. The first graph from the right shows the minimum, maximum and average values of the objective function corresponding to the population in each iteration. Also, Fig. 3 shows that the leave the prey and go back home strategy keeps the population diversity during the evolution process. The cheetah's strategies are adopted to proceed with the optimal solution despite population diversity in each step of evolution.

Results of CO on large scale optimization problems

In this section, to investigate how the CO method deals with the large-scale optimization problem, it is tested on CEC-2010 and CEC-2013 large-scale functions to validate its performance. The results of CO in the original condition are compared to other well-known modified algorithms which are tuned to solve the large-scale optimization problems, including72: (1) SLPSO, (2) CSO, (3) DMS-L-PSO, (4) CCPSO2, (5) DECC-G, (6) MLCC, and (7) DECC-DG methods as presented in Table 6. The results show that the CO can effectively enhance the results of eight benchmark functions while revealing completely comparable results in other objective functions. In the case of large-scale optimization problems, the CO uses 6-population while 2 of them are called in each iteration. The maximum number of function evaluations for all algorithms are set to 3 × 106 for these problems.

A comparison study between CO algorithm with a new modified version of PSO named dynamic group learning distributed particle swarm optimization (DGLDPSO)73 is done in Table 7. As can be seen, the CO algorithm can defeat DGLDPSO in 10 functions while almost other objectives reach comparable values. It is worth noting that the CO can also be modified to achieve better solutions.

The CEC2013 large-scale benchmark functions74 are selected as another case study. Implementing the CO algorithm on these benchmarks is compared with seven well-known modified optimization algorithms adopted to solve the large-scale optimization problems in Table 8. The CO algorithm enhances the results of ten benchmark functions which confirm the effectiveness of CO algorithm in dealing with large-scale optimization problems. The initial populations are set to 6, while 2-population is used in each iteration. Indeed, by changing the turning factor and interaction factor (\(\check{r}_{i,j}\) and \(\beta_{i,j}^{t}\)) and randomization parameter and step length (\(\hat{r}_{i,j}^{ - 1}\) and \(\alpha_{i,j}^{t}\)) the results of other benchmark functions can be enhanced.

Results of CO on the real-life engineering application

The ELD problem is one of the most significant, well-known, and complicated optimization problems in power systems. This problem tries to determine the optimal output power generation of thermal units to meet the power system's required load demand while minimizing the units' fuel expenditures. ELD may also evaluate transmission system power losses and multi-fuel and valve point effects. The primary limitations of this problem include the restriction on load balance, restriction on power generation, restriction on the ramp rate, and restriction on prohibited operation zone.

The detailed ELD formulation is explained in75. a 15-unit test system is considered to evaluate the performance of CO algorithm to solve this nonconvex optimization issue. This system's total demand is 2630 MW. Other information about this system can be found in75. The results of statistical comparisons between CO and different meta-heuristic and improved algorithms in recent studies are summarized in Table 9. The competitive modified and hybrid algorithms in these two cases are: bacterial foraging optimization (BFO)76, a modified ion motion optimization (MIMO) conglomerated with crisscross search (CSO) named C-MIMO-CSO77, TLBO78, an improved orthogonal design particle swarm optimization (IODPSO) algorithm79, synergic predator–prey optimization (SPPO) algorithm80, multi-strategy ensemble biogeography-based optimization (MsEBBO)81, a new variant for the firefly algorithm, considering a non-homogeneous population named NhFA-Rnp82, clustering cuckoo search optimization (CCSO)83, a novel variant of competitive swarm optimizer (CSO) referred to as OLCSO84, and adaptive charged system search (ACSS)85. The results demonstrate that the algorithm outperforms other state-of-the-art and improved algorithms in terms of worst, mean, best, and standard deviation values.

Conclusion

We proposed in this paper an optimization algorithm named cheetah optimizer (CO) based on the hunting process of cheetahs in nature. The proposed algorithm relies on several hunting strategies used by cheetahs instead of using mathematically complex approaches. In this regard, each decision variable is considered a possible arrangement of a group of cheetahs. Hence, each population can be regarded as a probable arrangement of cheetahs. The search, sit-and-wait and attack were mathematically modeled as the primary strategies of the proposed CO algorithm. Leave the prey and back to the home strategy was also implemented to enhance the algorithm's abilities in avoiding premature convergence and local optimal entrapment. These concepts were modeled in the CO framework so that it became an easy, fast, and powerful evolutionary method. The experimental results confirmed the monotonic behavior of the CO algorithm in dealing with low- and large-scale optimization problems. Finally, we validated the performance of the CO algorithm over the practical nonconvex ELD problem. The results showed that the suggested algorithm outperformed existing state-of-the-art algorithms in solving complex and challenging optimization problems. The main direction for future works is the development of the multi-objective CO, the application of CO to some complex engineering problems, and the hybridization of the proposed hunting strategies with other evolutionary methods.

Data availability

Data sharing is not applicable—no new data is generated.

References

Sergeyev, Y. D., Kvasov, D. E. & Mukhametzhanov, M. S. On the efficiency of nature-inspired metaheuristics in expensive global optimization with limited budget. Sci. Rep. 8, 1–9 (2018).

Luo, X., Liu, H., Gou, G., Xia, Y. & Zhu, Q. A parallel matrix factorization based recommender by alternating stochastic gradient decent. Eng. Appl. Artif. Intell. 25, 1403–1412 (2012).

Lu, T. & Liu, S.-T. Fuzzy nonlinear programming approach to the evaluation of manufacturing processes. Eng. Appl. Artif. Intell. 72, 183–189 (2018).

Koc, I., Atay, Y. & Babaoglu, I. Discrete tree seed algorithm for urban land readjustment. Eng. Appl. Artif. Intell. 112, 104783 (2022).

Spall, J. C. Introduction to Stochastic Search and Optimization: Estimation, Simulation, and Control Vol. 65 (Wiley, 2005).

Cruz, Y. J. et al. Ensemble of convolutional neural networks based on an evolutionary algorithm applied to an industrial welding process. Comput. Ind. 133, 103530 (2021).

Haber, R. E., Beruvides, G., Quiza, R. & Hernandez, A. A simple multi-objective optimization based on the cross-entropy method. IEEE Access 5, 22272–22281 (2017).

Alba, E. & Dorronsoro, B. The exploration/exploitation tradeoff in dynamic cellular genetic algorithms. IEEE Trans. Evol. Comput. 9, 126–142 (2005).

Wang, J.-S. & Li, S.-X. An improved grey wolf optimizer based on differential evolution and elimination mechanism. Sci. Rep. 9, 1–21 (2019).

Lozano, M. & García-Martínez, C. Hybrid metaheuristics with evolutionary algorithms specializing in intensification and diversification: overview and progress report. Comput. Oper. Res. 37, 481–497 (2010).

Kirkpatrick, S. Optimization by simulated annealing: quantitative studies. J. Stat. Phys. 34, 975–986 (1984).

Wolpert, D. H. & Macready, W. G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1, 67–82 (1997).

Singh, P., Dhiman, G. & Kaur, A. A quantum approach for time series data based on graph and Schrödinger equations methods. Mod. Phys. Lett. A 33, 1850208 (2018).

Kirkpatrick, S., Gelatt, C. D. & Vecchi, M. P. Optimization by simulated annealing. Science 220, 671–680 (1983).

Xing, B. & Gao, W.-J. Innovative Computational Intelligence: A Rough Guide to 134 Clever Algorithms (Springer, 2014).

Holland, J. H. Genetic algorithms. Sci. Am. 267, 66–73 (1992).

Koza, J. R. Genetic programming as a means for programming computers by natural selection. Stat. Comput. 4, 87–112. https://doi.org/10.1007/BF00175355 (1994).

Yao, X., Liu, Y. & Lin, G. Evolutionary programming made faster. IEEE Trans. Evol. Comput. 3, 82–102 (1999).

Rechenberg, I. Evolutionsstrategie’94 (Frommann-holzboog, 1994).

Simon, D. Biogeography-based optimization. IEEE Trans. Evol. Comput. 12, 702–713 (2008).

Storn, R. & Price, K. Differential evolution: a simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 11, 341–359 (1997).

Rashedi, E., Nezamabadi-Pour, H. & Saryazdi, S. GSA: a gravitational search algorithm. Inf. Sci. 179, 2232–2248 (2009).

Erol, O. K. & Eksin, I. A new optimization method: big bang–big crunch. Adv. Eng. Softw. 37, 106–111 (2006).

Kaveh, A. & Talatahari, S. A novel heuristic optimization method: charged system search. Acta Mech. 213, 267–289 (2010).

Birbil, Şİ & Fang, S.-C. An electromagnetism-like mechanism for global optimization. J. Glob. Optim. 25, 263–282 (2003).

Eskandar, H., Sadollah, A., Bahreininejad, A. & Hamdi, M. Water cycle algorithm: a novel metaheuristic optimization method for solving constrained engineering optimization problems. Comput. Struct. 110, 151–166 (2012).

Boettcher, S. & Percus, A. G. Optimization with extremal dynamics. Complexity 8, 57–62 (2002).

Kaveh, A. & Khayatazad, M. A new meta-heuristic method: ray optimization. Comput. Struct. 112, 283–294 (2012).

Formato, R. A. Central force optimization. Prog. Electromagn. Res. 77, 425–491 (2007).

Hosseini, H. S. IEEE Congress on Evolutionary Computation 3226–3231 (IEEE, 2007).

Bing, L. & Weisun, J. Chaos optimization method and its application. Control Theory Appl. 14, 613–615 (1997).

Shah-Hosseini, H. Principal components analysis by the galaxy-based search algorithm: a novel metaheuristic for continuous optimisation. Int. J. Comput. Sci. Eng. 6, 132–140 (2011).

Rabanal, P., Rodríguez, I. & Rubio, F. in International Conference on Unconventional Computation. 163–177 (Springer).

Alatas, B. ACROA: artificial chemical reaction optimization algorithm for global optimization. Expert Syst. Appl. 38, 13170–13180 (2011).

Abdechiri, M., Meybodi, M. R. & Bahrami, H. Gases Brownian motion optimization: an algorithm for optimization (GBMO). Appl. Soft Comput. 13, 2932–2946 (2013).

Irizarry, R. LARES: an artificial chemical process approach for optimization. Evol. Comput. 12, 435–459 (2004).

Kennedy, J. & Eberhart, R. in Proceedings of ICNN'95-International Conference on Neural Networks. 1942–1948 (IEEE).

Colorni, A., Dorigo, M. & Maniezzo, V. in Proceedings of The First European Conference on Artificial Life. 134–142.

Karaboga, D. & Basturk, B. A powerful and efficient algorithm for numerical function optimization: artificial bee colony (ABC) algorithm J. Glob. Optim. 39, 459–471 (2007).

Yang, X.-S. & Deb, S. in World Congress on Nature & Biologically Inspired Computing (NaBIC). 210–214 (IEEE, 2009).

Mirjalili, S., Mirjalili, S. M. & Lewis, A. Grey Wolf optimizer. Adv. Eng. Softw. 69, 46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007 (2014).

Yang, X.-S. in International Symposium on Stochastic Algorithms. 169–178 (Springer).

Passino, K. M. Biomimicry of bacterial foraging for distributed optimization and control. IEEE Control Syst. Mag. 22, 52–67 (2002).

Mirjalili, S. & Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 95, 51–67 (2016).

Yang, X.-S. in Nature Inspired Cooperative Strategies for Optimization (NICSO 2010). 65–74 (Springer, 2010).

Eusuff, M. M. & Lansey, K. E. Optimization of water distribution network design using the shuffled frog leaping algorithm. J. Water Resour. Plan. Manag. 129, 210–225 (2003).

Pham, D. T. et al. Intelligent Production Machines and Systems 454–459 (Elsevier, 2006).

Mirjalili, S. Moth-flame optimization algorithm: a novel nature-inspired heuristic paradigm. Knowl. Based Syst. 89, 228–249 (2015).

Gandomi, A. H. & Alavi, A. H. Krill herd: a new bio-inspired optimization algorithm. Commun. Nonlinear Sci. Numer. Simul. 17, 4831–4845 (2012).

Mirjalili, S. The ant lion optimizer. Adv. Eng. Softw. 83, 80–98 (2015).

Pan, W.-T. A new fruit fly optimization algorithm: taking the financial distress model as an example. Knowl. Based Syst. 26, 69–74 (2012).

Krishnanand, K. & Ghose, D. Glowworm swarm optimization for simultaneous capture of multiple local optima of multimodal functions. Swarm Intell. 3, 87–124 (2009).

Goudhaman, M. Cheetah chase algorithm (CCA): a nature-inspired metaheuristic algorithm. Int. J. Eng. Technol. 7, 1804–1811 (2018).

Saravanan, D., Paul, P. V., Janakiraman, S., Dumka, A. & Jayakumar, L. A new bio-inspired algorithm based on the hunting behavior of cheetah. Int. J. Inf. Technol. Project Manag. (IJITPM) 11, 13–30 (2020).

Klein, C. E., Mariani, V. C. & dos Santos Coelho, L. Cheetah Based Optimization Algorithm: A Novel Swarm Intelligence Paradigm. In ESANN 685–690 (2018).

O’Brien, S. J., Johnson, W. E., Driscoll, C. A., Dobrynin, P. & Marker, L. Conservation genetics of the cheetah: lessons learned and new opportunities. J. Hered. 108, 671–677 (2017).

Marker, L., Boast, L. K. & Schmidt-Küntzel, A. Cheetahs: Biology and Conservation (Academic Press, 2018).

Krausman, P. R. & Morales, S. M. Acinonyx jubatus. Mamm. Species 2005, 1–6 (2005).

Estes, R. D. The Behavior Guide to African Mammals: Including Hoofed Mammals, Carnivores (Primates. University of California Press, 2012).

Patel, A. & Braae, M. in IEEE/RSJ International Conference on Intelligent Robots and Systems. 5506–5511 (IEEE, 2013).

Aarde, R. J. & Dyk2, A. Inheritance of the king coat colour pattern in cheetahs Acinonyx jubatus. J. Zool. 209, 573–578 (1986).

Phillips, J. A. Bone consumption by cheetahs at undisturbed kills: evidence for a lack of focal-palatine erosion. J. Mammal. 74, 487–492 (1993).

Dhiman, G. & Kumar, V. Emperor penguin optimizer: a bio-inspired algorithm for engineering problems. Knowl. Based Syst. 159, 20–50 (2018).

Li, S., Chen, H., Wang, M., Heidari, A. A. & Mirjalili, S. Slime mould algorithm: a new method for stochastic optimization. Future Gener. Comput. Syst. 111, 300–323 (2020).

Rao, R. Jaya: a simple and new optimization algorithm for solving constrained and unconstrained optimization problems. Int. J. Ind. Eng. Comput. 7, 19–34 (2016).

Patel, V. K. & Savsani, V. J. Heat transfer search (HTS): a novel optimization algorithm. Inf. Sci. 324, 217–246 (2015).

Shi, Y. & Eberhart, R. in IEEE International Conference on Evolutionary Computation Proceedings. IEEE World Congress on Computational Intelligence (Cat. No. 98TH8360). 69–73 (IEEE, 1998).

Qiang, J., Mitchell, C. & Qiang, A. in IEEE Congress on Evolutionary Computation (CEC). 4061–4068 (IEEE, 2016).

Fu, L., Zhu, H., Zhang, C., Ouyang, H. & Li, S. Hybrid harmony search differential evolution algorithm. IEEE Access 9, 21532–21555 (2021).

Wang, Y., Cai, Z. & Zhang, Q. Differential evolution with composite trial vector generation strategies and control parameters. IEEE Trans. Evol. Comput. 15, 55–66 (2011).

Jian, J.-R., Chen, Z.-G., Zhan, Z.-H. & Zhang, J. Region encoding helps evolutionary computation evolve faster: a new solution encoding scheme in particle swarm for large-scale optimization. IEEE Trans. Evol. Comput. 25, 779–793 (2021).

Wang, Z.-J. et al. Dynamic group learning distributed particle swarm optimization for large-scale optimization and its application in cloud workflow scheduling. IEEE Trans. Cybern. 50, 2715–2729 (2019).

Li, X. et al. Benchmark functions for the CEC 2013 special session and competition on large-scale global optimization. Gene 7, 8 (2013).

Sun, J., Fang, W., Wang, D. & Xu, W. Solving the economic dispatch problem with a modified quantum-behaved particle swarm optimization method. Energy Convers. Manag. 50, 2967–2975 (2009).

Panigrahi, B. & Pandi, V. R. Bacterial foraging optimisation: Nelder-Mead hybrid algorithm for economic load dispatch. IET Gener. Transm. Distrib. 2, 556–565 (2008).

Kumar, M. & Dhillon, J. S. A conglomerated ion-motion and crisscross search optimizer for electric power load dispatch. Appl. Soft Comput. 83, 105641 (2019).

Nee Dey, S. H. Teaching learning based optimization for different economic dispatch problems. Sci. Iran. 21, 870–884 (2014).

Qin, Q., Cheng, S., Chu, X., Lei, X. & Shi, Y. Solving non-convex/non-smooth economic load dispatch problems via an enhanced particle swarm optimization. Appl. Soft Comput. 59, 229–242 (2017).

Singh, N. J., Dhillon, J. & Kothari, D. Synergic predator-prey optimization for economic thermal power dispatch problem. Appl. Soft Comput. 43, 298–311 (2016).

Xiong, G., Shi, D. & Duan, X. Multi-strategy ensemble biogeography-based optimization for economic dispatch problems. Appl. Energy 111, 801–811 (2013).

Kapelinski, K., Neto, J. P. J. & dos Santos, E. M. Firefly algorithm with non-homogeneous population: a case study in economic load dispatch problem J. . Oper. Res. Soc. 72, 519–534 (2021).

Yu, J., Kim, C.-H. & Rhee, S.-B. Clustering cuckoo search optimization for economic load dispatch problem. Neural Comput. Appl. 32, 16951–16969 (2020).

Xiong, G. & Shi, D. Orthogonal learning competitive swarm optimizer for economic dispatch problems. Appl. Soft Comput. 66, 134–148 (2018).

Zakian, P. & Kaveh, A. Economic dispatch of power systems using an adaptive charged system search algorithm. Appl. Soft Comput. 73, 607–622 (2018).

Author information

Authors and Affiliations

Contributions

Conceptualization: M.A.A. and M.Z.; methodology: M.A.A. and M.Z.; software: M.A.A. and M.Z.; validation: R.A., S.M. and M.D.; formal analysis: R.A., S.M. and M.D.; investigation: M.A.A. and M.Z.; resources: S.M. and M.D.; data curation: M.D.; writing—original draft preparation, M.A.A. and M.Z.; writing—review and editing: R.A., S.M. and M.D.; visualization: X.X.; supervision: S.M. and M.D.; project administration: M.D.; funding acquisition: M.D. All authors have read and agreed to the published version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Akbari, M.A., Zare, M., Azizipanah-abarghooee, R. et al. The cheetah optimizer: a nature-inspired metaheuristic algorithm for large-scale optimization problems. Sci Rep 12, 10953 (2022). https://doi.org/10.1038/s41598-022-14338-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-022-14338-z

- Springer Nature Limited

This article is cited by

-

Hippopotamus optimization algorithm: a novel nature-inspired optimization algorithm

Scientific Reports (2024)

-

Optimizing dynamic economic dispatch through an enhanced Cheetah-inspired algorithm for integrated renewable energy and demand-side management

Scientific Reports (2024)

-

Hybrid cheetah particle swarm optimization based optimal hierarchical control of multiple microgrids

Scientific Reports (2024)

-

Lotus effect optimization algorithm (LEA): a lotus nature-inspired algorithm for engineering design optimization

The Journal of Supercomputing (2024)

-

Metaheuristics for Solving Global and Engineering Optimization Problems: Review, Applications, Open Issues and Challenges

Archives of Computational Methods in Engineering (2024)