Abstract

High-energy impacts, such as vehicle crashes or falls, can cause pelvic ring injuries. Rapid diagnosis and treatment are crucial because of the risk of severe bleeding and organ damage. Pelvic radiography quickly shows the extent and location of fractures but cannot reliably identify bleeding. The AO/OTA classification system grades pelvic instability, but its complexity limits its use in emergency settings. This study developed and evaluated a deep learning algorithm that classifies pelvic fractures on radiographs according to the AO/OTA system. Pelvic radiographs of 773 patients with pelvic fractures and 167 patients without pelvic fractures from a single center were retrospectively analyzed. Pelvic fractures were classified into types A, B, and C according to the AO/OTA classification system, based on medical records in which an orthopedic surgeon had categorized them. Accuracy, Dice similarity coefficient (DSC), and F1 score were measured to evaluate the diagnostic performance of the deep learning algorithms. The segmentation model performed well, with an accuracy of 0.98 and a DSC of 0.96–0.97. The AO/OTA classification model achieved an F1 score of 0.47–0.80 and an accuracy of 0.69–0.88 across folds, with a macro-average area under the receiver operating characteristic curve (ROC–AUC) of 0.77–0.94. These relatively favorable results suggest that the models can aid in the early classification of pelvic fractures.

Introduction

Pelvic ring injury can occur after high-energy blunt trauma, such as a motor vehicle crash, motorcycle crash, pedestrian-vehicle collision, or fall1,2,3. Rapid diagnosis and treatment of pelvic fractures are crucial owing to the potential for severe bleeding and damage to internal organs or blood vessels4,5,6.

Pelvic radiography is an important diagnostic procedure in the early stage of injury because it quickly provides information on the extent and location of fractures. However, detecting fractures can be challenging owing to the complex anatomy of the pelvic ring and variable image quality. Overlapping bony structures and variable patient positioning complicate accurate assessment of pelvic fractures, often requiring multiple projections and occasionally resulting in ambiguous interpretations. Although hemorrhage is a major reversible cause of death after trauma, bleeding from a pelvic fracture is difficult to detect without a computed tomography (CT) scan, even when the fracture is diagnosed on a pelvic radiograph7,8. This difficulty matters because timely and accurate detection of hemorrhage is critical for directing appropriate surgical or interventional management, which can be life-saving.

Recently, in hemodynamically unstable patients with pelvic fractures, treatments such as pelvic binder application, preperitoneal pelvic packing, or endovascular balloon occlusion of the aorta have sometimes been performed at the accident scene or in the emergency department (ED) for rapid hemorrhage control, without imaging studies and before definitive management such as surgery or angiographic embolization4,6. However, most of these procedures are performed after a pelvic fracture has been diagnosed on a pelvic radiograph6.

The Association for Osteosynthesis (AO) Foundation and Orthopedic Trauma Association (OTA) (AO/OTA) classification system, which adopts the imaging-based Tile classification, grades pelvic instability based on the anatomic location of pelvic ring injuries, associated displacement, and instability9,10,11. Initially developed for radiographs, the AO/OTA classification system is now applied to CT images for more accurate classification12,13. However, its complex criteria make it difficult to use in emergency settings14, where rapid decision-making is required, and this complexity can delay treatment initiation and adversely affect patient outcomes.

Deep learning is increasingly used in complex domains such as image recognition, speech recognition, and natural language processing15. Convolutional neural networks, a class of artificial neural networks, perform well in image recognition by applying multiple layers of learned filters that detect patterns in input images and extract their features. In medical imaging, the diagnostic and classification performance of these networks has been analyzed for various diseases15,16,17,18. However, to our knowledge, no studies have applied deep learning algorithms to classify fracture patterns on pelvic radiographs according to the AO/OTA classification scheme.

In this research, we combined image segmentation with a custom-designed deep learning pipeline to classify pelvic fractures. Our method addresses the complex anatomy of the pelvic region by using convolutional neural network architectures intended to improve both detection accuracy and efficiency. As a technical novelty, we used Attention U-Net to segment the pelvic ring area19 and then classified fractures into types A, B, and C using Inception-ResNet-V220, applied only to the segmented area. This two-stage approach restricts analysis to the pelvic ring and assigns fractures directly to AO/OTA categories, which is intended to improve on traditional methods.

Therefore, this study aimed to develop a deep learning algorithm that classifies pelvic fractures diagnosed on pelvic radiographs according to the AO/OTA classification system and to evaluate its performance. Our findings suggest that neural networks designed for specific clinical challenges can advance on existing methods.

Materials and methods

Proposed deep learning models

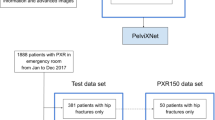

In this study, we developed and evaluated two deep learning models for pelvic fractures: the first segments the pelvic ring in the collected images, and the second classifies fracture patterns according to the AO/OTA classification system (Supplementary Fig. S1). Fivefold cross-validation was used to assess the generalization performance of both the segmentation and classification models, and the results were compared and analyzed.

To segment the pelvic ring, we used the Attention U-Net architecture, which focuses on the regions most relevant for delineating complex anatomical structures. For classification, we chose the Inception-ResNet-V2 architecture, which captures multi-scale features efficiently and eases training through residual connections. We selected this combination to handle intricate pelvic structures better than conventional models. The overall methodology and procedures are illustrated in Fig. 1.

Fig. 1. Overall procedures of the proposed method. (a) Data collection. (b) ROI labeling for the fracture sites (left) and the boundary of the pelvic ring (right). (c) Data preprocessing using histogram equalization. (d) Training of the segmentation and classification models. (e) Performance evaluation of the trained models. ROI, region of interest.

Research environment

The experiments in this study were performed on a system with an NVIDIA RTX A5000 graphics processing unit (NVIDIA, Santa Clara, CA, USA), an Intel® Xeon® Silver 4216 CPU (Intel, Santa Clara, CA, USA), and 32 GB of RAM, running the Ubuntu 20.04.6 operating system. NVIDIA driver 525.147.05, Compute Unified Device Architecture (CUDA) 11.2, and TensorFlow 2.6.0 were used.

Dataset acquisition

This study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of Gachon University Gil Medical Center (GAIRB2022-153). Owing to the retrospective nature of the study, the Institutional Review Board of Gachon University Gil Medical Center waived the requirement for informed consent.

Antero-posterior (AP) pelvic radiographs were collected from 773 adults ≥ 18 years diagnosed with pelvic fractures and 167 adults without pelvic fractures who visited Gachon University Gil Hospital between January 2015 and December 2020.

The location of pelvic fractures was determined from (1) the radiologist’s readings of the AP pelvic radiographs, (2) the results of the pelvic CT scan, and (3) the orthopedic surgeon’s opinion documented in the medical records. A trauma surgeon with > 10 years’ experience identified the fracture sites on the AP pelvic radiographs. Each identified fracture area was delineated with a square region of interest (ROI) using ImageJ version 1.53t (National Institutes of Health, Bethesda, MD, USA), and an ROI was also marked along the boundary of the pelvic ring (Supplementary Fig. S2).

The AO/OTA classification system is divided into the major categories A, B, and C and their subcategories A1–3, B1–3, and C1–311. The AP pelvic radiographs of the 773 patients with pelvic fractures were classified as type A, B, or C according to the AO/OTA classification assigned by the orthopedic surgeon. The trauma surgeon made the final classification after reviewing the AP pelvic radiograph and pelvic CT findings.

Data preprocessing

Some of the pelvic AP radiographs were too bright or too dark for the fracture location to be detected accurately, so a preprocessing step called histogram equalization was applied to these images.

Histogram equalization is an image processing method that improves contrast by redistributing the brightness values of an image so that they are spread more evenly across the available intensity range. By remapping the brightness values in this way, contrast that was compressed into a narrow intensity band is recovered, producing a clearer image21,22. Supplementary Fig. S3 shows low-contrast pelvic AP radiographs converted to high contrast using histogram equalization.
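The paper does not state which histogram-equalization variant was used (for example, global equalization versus an adaptive method such as CLAHE). As a minimal sketch, assuming plain global equalization of 8-bit grayscale images, the remapping through the cumulative histogram can be written with NumPy as follows; the function name `equalize_histogram` is hypothetical.

```python
import numpy as np


def equalize_histogram(image: np.ndarray) -> np.ndarray:
    """Global histogram equalization of an 8-bit grayscale image."""
    if image.dtype != np.uint8:
        raise ValueError("expected an 8-bit (uint8) grayscale image")
    # Count how many pixels fall on each of the 256 intensity levels.
    hist = np.bincount(image.ravel(), minlength=256)
    # The cumulative distribution function (CDF) drives the remapping.
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]  # CDF at the darkest intensity actually present
    n_pixels = image.size
    if n_pixels == cdf_min:  # single-intensity image: nothing to stretch
        return image.copy()
    # Stretch the CDF so that occupied levels spread over the full 0-255 range.
    lut = np.round((cdf - cdf_min) / (n_pixels - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[image]  # apply the look-up table pixel-wise


# Synthetic low-contrast "radiograph" whose intensities span only 100-120.
low_contrast = np.array([[100, 105], [110, 120]], dtype=np.uint8)
enhanced = equalize_histogram(low_contrast)
print(enhanced.tolist())  # [[0, 85], [170, 255]]
```

The narrow 100–120 band is stretched to the full 0–255 range while the ordering of intensities is preserved, which is the contrast recovery described above.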

Segmentation in pelvic AP radiographs using Attention U-Net

The pelvic ring is formed by two innominate bones that articulate posteriorly with the sacrum and anteriorly with each other at the pubic symphysis. Each innominate bone comprises three fused bones: the ilium, ischium, and pubis. The sacrum articulates superiorly with the fifth lumbar vertebra, and the acetabulum on each side of the pelvis articulates with the femoral head. Because the pelvic AP radiograph also shows the lower lumbar spine and proximal femurs, we performed segmentation to restrict fracture classification to the pelvic ring. For this purpose, we constructed an artificial intelligence (AI) model based on the Attention U-Net architecture that generates a segmentation mask of the pelvic ring region in pelvic AP radiographs (Fig. 2)19.
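How the predicted mask is applied before classification is not detailed in the text. One plausible sketch, assuming the mask probabilities are binarized at a hypothetical threshold of 0.5, pixels outside the ring are zeroed, and the image is cropped to the mask’s bounding box, is shown below. Resizing to the 512 × 512 classifier input is omitted, and `extract_pelvic_ring` is an illustrative name, not the authors’ code.

```python
import numpy as np


def extract_pelvic_ring(radiograph, mask_prob, threshold=0.5):
    """Zero out pixels outside the predicted pelvic-ring mask and crop to its bounding box."""
    mask = mask_prob >= threshold            # binarize the segmentation output (assumed threshold)
    if not mask.any():
        raise ValueError("segmentation mask is empty")
    masked = np.where(mask, radiograph, 0)   # keep only pelvic-ring pixels
    rows = np.flatnonzero(mask.any(axis=1))  # rows that contain mask pixels
    cols = np.flatnonzero(mask.any(axis=0))  # columns that contain mask pixels
    return masked[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]


# Toy 6 x 6 example: the "pelvic ring" occupies rows 2-4 and columns 1-3,
# with one low-probability pixel inside (a hole, like the obturator foramen).
radiograph = np.arange(36).reshape(6, 6)
mask_prob = np.zeros((6, 6))
mask_prob[2:5, 1:4] = 0.9
mask_prob[3, 2] = 0.2
crop = extract_pelvic_ring(radiograph, mask_prob)
print(crop.shape)  # (3, 3)
```

Zeroing rather than only cropping keeps lumbar and femoral structures that fall inside the bounding box from reaching the classifier, which matches the stated goal of confining classification to the pelvic ring.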

The U-Net architecture repeatedly applies convolution and downsampling to shrink the input spatially while extracting increasingly global image features. Upsampling then progressively reconstructs these features into an output image of the same size as the original. Feature maps produced during downsampling are concatenated with the corresponding upsampling layers through skip connections to prevent loss of spatial detail, which improves segmentation accuracy. The Attention U-Net adds an attention mechanism at this concatenation stage: each skip-connection feature map is compared with a gating signal from the preceding, coarser decoder stage, and the resulting weights emphasize the more relevant regions while suppressing irrelevant ones. This addition further improves segmentation accuracy.
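The attention mechanism described above corresponds to the additive attention gate of Oktay et al. The NumPy sketch below shows the per-pixel computation under simplifying assumptions: the 1 × 1 convolutions are written as matrix products over the channel axis, the gating signal is assumed to be already resampled to the skip connection’s grid, and the weights are random rather than learned. All names are hypothetical.

```python
import numpy as np


def attention_gate(x, g, w_x, w_g, b_g, psi, b_psi):
    """Additive attention gate applied independently at every pixel.

    x   : skip-connection features, shape (H, W, Cx)
    g   : gating features from the coarser decoder stage, assumed to be
          already resampled to the same grid, shape (H, W, Cg)
    w_x : (Cx, Ci) and w_g : (Cg, Ci) act as 1x1 convolutions; b_g : (Ci,)
    psi : (Ci,) and b_psi (scalar) project to one attention logit per pixel
    """
    inter = np.maximum(x @ w_x + g @ w_g + b_g, 0.0)  # ReLU of the summed projections
    logits = inter @ psi + b_psi                      # shape (H, W)
    alpha = 1.0 / (1.0 + np.exp(-logits))             # sigmoid: weights in [0, 1]
    return x * alpha[..., None], alpha                # re-weight the skip features


rng = np.random.default_rng(0)
H, W, Cx, Cg, Ci = 4, 4, 8, 16, 4
x = rng.normal(size=(H, W, Cx))
g = rng.normal(size=(H, W, Cg))
gated, alpha = attention_gate(
    x, g,
    w_x=rng.normal(size=(Cx, Ci)), w_g=rng.normal(size=(Cg, Ci)),
    b_g=np.zeros(Ci), psi=rng.normal(size=Ci), b_psi=0.0,
)
print(gated.shape, alpha.shape)  # (4, 4, 8) (4, 4)
```

Because a single weight per pixel scales all channels of the skip features, regions the gate judges irrelevant are attenuated before concatenation in the decoder.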

For model training, the Dice coefficient loss was used as the loss function and was minimized with an Adam optimizer at a learning rate of 0.001. The batch size was four, and the model was trained for up to 100 epochs. ReduceLROnPlateau was used to adjust the learning rate dynamically, and EarlyStopping was used to prevent overfitting. Pelvic AP radiographs served as the training inputs, and ROI masks of the pelvic ring region served as the label images. After training, the model automatically generated pelvic ring masks from pelvic AP radiographs.
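The Dice coefficient loss is commonly defined as one minus a soft Dice coefficient computed on predicted probabilities. The study does not report its exact formulation, so the sketch below assumes a smoothing constant of 1.0 to avoid division by zero; in a Keras pipeline the same arithmetic would be written with tensor operations and passed to the model as its loss.

```python
import numpy as np


def dice_coefficient(y_true, y_pred, smooth=1.0):
    """Soft Dice coefficient between a binary mask and predicted probabilities."""
    y_true = np.asarray(y_true, dtype=float).ravel()
    y_pred = np.asarray(y_pred, dtype=float).ravel()
    intersection = np.sum(y_true * y_pred)
    # `smooth` (an assumed value) keeps the ratio defined when both masks are empty.
    return (2.0 * intersection + smooth) / (y_true.sum() + y_pred.sum() + smooth)


def dice_loss(y_true, y_pred, smooth=1.0):
    """Loss to minimize: 1 - Dice, so perfect overlap gives 0."""
    return 1.0 - dice_coefficient(y_true, y_pred, smooth)


mask = [[0, 1], [1, 1]]                    # ground-truth pelvic-ring pixels
print(dice_loss(mask, mask))               # 0.0 (perfect overlap)
print(dice_loss(mask, [[0, 0], [0, 0]]))   # 0.75 (nothing predicted)
```

Unlike pixel-wise cross-entropy, this loss depends on the overlap ratio, so it is less dominated by the large background area outside the pelvic ring.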

AO/OTA classification using Inception-ResNet-V2

In this study, we developed an AO/OTA classification model that operates on the pelvic ring region extracted by the segmentation model. The model is based on the Inception-ResNet-V2 architecture (Fig. 3)20 and takes segmented images of the pelvic ring area from pelvic AP radiographs as input (Supplementary Fig. S1). Inception-ResNet-V2 combines the Inception network with ResNet: 1 × 1 convolution layers within the Inception modules reduce computational cost, and ResNet’s shortcut connections simplify the learning process20.
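To illustrate why 1 × 1 convolutions reduce computational cost, and what a shortcut connection does, the arithmetic below compares a direct 3 × 3 convolution with a 1 × 1 bottleneck followed by a 3 × 3 convolution. The channel widths are chosen only for illustration and are not the actual Inception-ResNet-V2 widths. The residual helper mirrors the scaled residual additions described by Szegedy et al.; the scale of 0.1 is an assumed example value.

```python
def conv_weights(kernel, c_in, c_out):
    """Number of weights in a kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * c_in * c_out


def residual_add(x, branch_output, scale=0.1):
    """Shortcut connection: the input passes through unchanged plus a scaled branch correction."""
    return [xi + scale * bi for xi, bi in zip(x, branch_output)]


# Illustrative channel widths (hypothetical, not taken from the network).
c_in, c_reduced, c_out = 256, 64, 256
direct = conv_weights(3, c_in, c_out)               # 3x3 conv on all 256 channels
bottleneck = (conv_weights(1, c_in, c_reduced)      # 1x1 conv shrinks channels first
              + conv_weights(3, c_reduced, c_out))  # then the 3x3 conv
print(direct, bottleneck)  # 589824 163840

print(residual_add([1.0, 2.0], [10.0, -10.0]))  # [2.0, 1.0]
```

In this example the bottleneck needs roughly 3.6 times fewer weights, and the shortcut means the branch only has to learn a correction to its input rather than a full transformation.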

The model was trained with a categorical cross-entropy loss, minimized using an Adam optimizer with a learning rate of 0.00004. The batch size was 4, and the input size was 512 × 512 pixels. ReduceLROnPlateau was used to adjust the learning rate dynamically, and multiprocessing was used. Training was scheduled for 300 epochs but was stopped early to prevent overfitting: it was halted if the loss did not decrease for more than 20 epochs.
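The early-stopping rule can be sketched in plain Python. The sketch assumes a patience of 20 epochs, a 300-epoch cap, and that any strict decrease counts as an improvement, which is intended to mirror Keras’ `EarlyStopping(patience=20)` with its default `min_delta=0`. The paper does not say whether training or validation loss was monitored, and its wording (“> 20 epochs”) leaves the exact cut-off ambiguous by one epoch; `stopping_epoch` is a hypothetical helper.

```python
def stopping_epoch(losses, patience=20, max_epochs=300):
    """Epoch at which patience-based early stopping would end training.

    Training stops once `patience` consecutive epochs pass without the
    monitored loss improving on its best value so far, or after `max_epochs`.
    """
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(losses[:max_epochs], start=1):
        if loss < best:
            best, wait = loss, 0   # improvement: reset the counter
        else:
            wait += 1              # no improvement this epoch
            if wait >= patience:
                return epoch
    return min(len(losses), max_epochs)


# Loss improves for 3 epochs and then plateaus.
plateau = [1.0, 0.8, 0.7] + [0.75] * 40
print(stopping_epoch(plateau))  # 23
```

Epochs 4 to 23 are the 20 epochs without improvement, so training ends at epoch 23 instead of running the full schedule.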

Performance evaluation and statistical analysis

To assess the performance of the pelvic ring segmentation model, we divided the dataset into five folds and performed fivefold cross-validation. Sensitivity, specificity, accuracy, and the Dice similarity coefficient (DSC) were measured23. The AO/OTA classification model, which uses the segmented images, was likewise evaluated with fivefold cross-validation, using precision, sensitivity, accuracy, and F1 score as performance metrics. The area under the receiver operating characteristic curve (ROC–AUC) was analyzed to evaluate performance comprehensively across the classes (normal and types A, B, and C fractures)24,25. The DSC and F1 score are computed with the same equation; DSC is widely used in image segmentation, whereas the F1 score is commonly used in classification tasks. Because the classification task involves multiple classes, we used the macro average, which computes each performance metric for each class separately and then takes the arithmetic mean across classes.
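A fivefold split of the 940 radiographs can be sketched as follows. The paper does not report how the folds were formed, so this sketch assumes a simple seeded random split by image; a stratified split (for example, scikit-learn’s `StratifiedKFold`) would additionally keep the proportions of normal, type A, B, and C images similar in every fold. The function name is hypothetical.

```python
import random


def kfold_indices(n_samples, k=5, seed=0):
    """Yield (train, test) index lists for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)        # reproducible shuffle
    folds = [indices[i::k] for i in range(k)]   # k near-equal, disjoint test folds
    for i, test in enumerate(folds):
        # Every sample outside the current test fold is used for training.
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


# 940 radiographs in total (773 with fractures, 167 without).
for fold, (train, test) in enumerate(kfold_indices(940), start=1):
    print(fold, len(train), len(test))  # each fold: 752 training, 188 test
```

Each image is tested exactly once across the five folds, so the per-fold ranges reported in the Results describe performance on disjoint test sets.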

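Precision, the proportion of instances predicted as positive that are truly positive, was calculated using Eq. (1), where TP, FP, TN, and FN denote the numbers of true positives, false positives, true negatives, and false negatives, respectively:

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{1}$$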

Specificity, the proportion of negative instances correctly identified as negative by the model, was calculated as follows (Eq. 2):

$$\mathrm{Specificity} = \frac{TN}{TN + FP} \tag{2}$$

Sensitivity (recall), the proportion of positive instances correctly identified as positive by the model, was calculated as follows (Eq. 3):

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN} \tag{3}$$

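The DSC, which measures the overlap between the predicted region $P$ and the ground-truth region $G$, was calculated as follows (Eq. 4):

$$\mathrm{DSC} = \frac{2\,|P \cap G|}{|P| + |G|} = \frac{2\,TP}{2\,TP + FP + FN} \tag{4}$$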

Accuracy, the proportion of all predictions that are correct, was calculated as follows (Eq. 5):

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{5}$$

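The F1 score, the harmonic mean of precision and sensitivity (recall), was calculated as follows (Eq. 6):

$$F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Sensitivity}}{\mathrm{Precision} + \mathrm{Sensitivity}} = \frac{2\,TP}{2\,TP + FP + FN} \tag{6}$$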

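The macro average, the arithmetic mean of a performance metric over the classes of a multi-class classification, is defined as follows (Eq. 7), where $N$ is the number of classes and $M_i$ is the metric computed for class $i$:

$$\mathrm{Macro\ average} = \frac{1}{N} \sum_{i=1}^{N} M_i \tag{7}$$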
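The sketch below computes Eqs. (1)–(7) from a multi-class confusion matrix in a one-vs-rest fashion, one common way to obtain per-class values before macro averaging. The confusion matrix is invented for illustration and is not study data; the F1 line also shows why the F1 score and DSC share one formula.

```python
def one_vs_rest_metrics(cm):
    """Per-class metrics from a square confusion matrix (rows = true, columns = predicted)."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    per_class = []
    for c in range(n):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, actually another class
        fn = sum(cm[c]) - tp                       # actually c, predicted another class
        tn = total - tp - fp - fn
        per_class.append({
            "precision": tp / (tp + fp) if tp + fp else 0.0,             # Eq. (1)
            "specificity": tn / (tn + fp) if tn + fp else 0.0,           # Eq. (2)
            "sensitivity": tp / (tp + fn) if tp + fn else 0.0,           # Eq. (3)
            "accuracy": (tp + tn) / total,                               # Eq. (5)
            "f1": 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0,  # Eqs. (4)/(6)
        })
    return per_class


def macro_average(per_class, key):
    """Eq. (7): unweighted mean of one metric over all classes."""
    return sum(m[key] for m in per_class) / len(per_class)


# Hypothetical confusion matrix for classes (normal, A, B, C); NOT study data.
cm = [[8, 2, 0, 0],
      [1, 7, 2, 0],
      [0, 1, 8, 1],
      [0, 0, 1, 9]]
metrics = one_vs_rest_metrics(cm)
print(round(macro_average(metrics, "sensitivity"), 3))  # 0.8
```

Macro averaging weights each class equally, so the smaller type B and C groups influence the summary as much as the larger type A group.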

Statistical analyses were performed using MedCalc version 19.6.1 (MedCalc Software Ltd, Ostend, Belgium), SPSS version 23.0 (IBM Corp., Armonk, NY, USA), and the scikit-learn (1.0.2) and Keras (2.6) machine learning frameworks in Python. Continuous variables are expressed as mean ± standard deviation, and categorical variables as numbers (%). Statistical significance was set at P < 0.05.

Results

Patient and data characteristics

A total of 940 pelvic AP radiographs were included, of which 773 showed pelvic fractures and 167 showed no pelvic fractures. The mean age of all participants was 52.2 ± 19.5 years, with 554 (58.9%) males and 386 (41.1%) females. According to the AO/OTA classification system, 447, 196, and 130 of the fracture images were classified as type A, B, and C pelvic fractures, respectively. Table 1 presents the demographic characteristics of the dataset.

Performance of the pelvic ring segmentation model

Figure 4 shows pelvic ring segmentation images obtained with the segmentation model, including both the ROI mask and the predicted mask of the pelvic ring. The shape and position of the pelvic ring are accurately reproduced. However, Fig. 4d shows inaccuracies in the finer details of the sacrum and the obturator foramen, where the predicted mask fills regions that are empty in the ROI mask. The model achieved an accuracy of 0.98 and a DSC of 0.96–0.97, as detailed in Table 2.

Performance of the AO/OTA classification model

Figure 5 shows representative class activation maps for types A, B, and C pelvic fractures from the AO/OTA classification model. Regions on which the model primarily focuses during classification are highlighted in red, and a green bounding box marks the fracture area. In these examples, the highlighted regions coincide with the fracture sites, suggesting that the model relies on fracture-related features when distinguishing fracture types.

Table 3 shows the performance of the AO/OTA classification model under fivefold cross-validation. Performance varied between folds, plausibly being higher when a fold’s test set contained simpler cases and lower when it contained more complex ones. Across folds, the F1 score ranged from 0.47 to 0.80, accuracy from 0.69 to 0.88, precision from 0.65 to 0.82, and sensitivity from 0.47 to 0.80; the maximum value of each metric was 0.80 or higher. In addition, the macro-average ROC–AUC ranged from 0.77 to 0.94 across folds (Fig. 6a–e). The macro-average AUC exceeded 0.7 in every fold, indicating that the model learned to discriminate the classes reasonably well. AUCs also differed by class and were relatively high for normal images (no pelvic fractures) and type C pelvic fractures: normal images contain no fracture, and type C fractures are usually severe enough to alter the pelvic shape markedly, making both classes easier to recognize.

Figure 7 presents the confusion matrix for each fold. Although performance differed somewhat between folds, overall classification performance was relatively good.
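The macro-average ROC–AUC can be sketched as the unweighted mean of one-vs-rest AUCs, with each AUC computed as the probability that a randomly chosen positive image scores higher than a randomly chosen negative one. This is an illustrative implementation with hypothetical scores, not the authors’ code; scikit-learn’s `roc_auc_score(..., multi_class="ovr", average="macro")` should give the same value for the same inputs. The pairwise loop is O(n²) and meant only for clarity.

```python
def binary_auc(labels, scores):
    """ROC-AUC as P(random positive scores higher than random negative); ties count 1/2."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def macro_auc(y_true, probs, n_classes):
    """One-vs-rest AUC for every class, then their unweighted (macro) mean."""
    per_class = []
    for c in range(n_classes):
        labels = [1 if y == c else 0 for y in y_true]
        scores = [p[c] for p in probs]
        per_class.append(binary_auc(labels, scores))
    return sum(per_class) / n_classes, per_class


# Toy example with classes 0 = normal, 1 = A, 2 = B, 3 = C (hypothetical scores).
y_true = [0, 1, 2, 3, 0, 1, 2, 3]
probs = [[0.7 if c == y else 0.1 for c in range(4)] for y in y_true]
print(macro_auc(y_true, probs, 4)[0])                   # 1.0 (perfectly separated)
print(binary_auc([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1]))   # 0.75
```

Because each class gets its own one-vs-rest AUC, the per-class differences noted above (higher for normal and type C) are visible before they are averaged away in the macro value.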

Fig. 5. Activation maps of pelvic fractures based on the AO/OTA classification. (a) Attention is primarily focused on the bilateral pubic rami. (b) Attention is primarily focused on the Rt. SI joint, pubic ramus, and acetabulum. (c) Attention is primarily focused on the Rt. SI joint and pubic rami. AO/OTA, Association for Osteosynthesis Foundation and Orthopedic Trauma Association; Rt, Right; SI, Sacroiliac.

Fig. 6. ROCs and AUCs of the deep learning model classifying pelvic fractures diagnosed on pelvic radiographs according to the AO/OTA classification system. K-fold cross-validation (k = 5) was used to estimate and compare the performance of the deep learning model. The ROC curves of each class (normal and types A, B, and C fractures), the micro-average ROC curves, and the macro-average ROC curves are presented, with the corresponding areas under the ROC curves, in (a–e). AO/OTA, Association for Osteosynthesis Foundation and Orthopedic Trauma Association; ROCs, Receiver operating characteristic curves; AUC, Area under the curve.

Discussion

Pelvic fractures can be diagnosed using pelvic radiography or CT, and CT is necessary to detect bleeding sites associated with fractures5,26. However, in patients with hemodynamic instability, time-consuming imaging studies such as CT can compromise patient safety4. Whole-body CT has recently been used increasingly to shorten examination time in the early stages of injury, but this practice is not yet universal4,5,8,27. Pelvic radiography is quick and easy to perform, but it cannot identify the exact site of bleeding4. Moreover, its ability to diagnose fractures is limited by the complex anatomy of the pelvic bones and the overlying shadows of internal organs7,28.

The AO/OTA classification system has been used in studies predicting the need for massive blood transfusion or angiographic embolization in pelvic fractures2,3,4,29,30, with a primary focus on its application in the early stages of injury3,29. If applied in practice during the early stages of injury, this approach could improve patient prognosis. However, the AO/OTA classification system is not widely used in the ED because of its complexity2.

The usefulness of AI in medicine for the rapid diagnosis required in screening and for grading disease severity according to complex criteria has been studied15,18,31. Convolutional neural networks have achieved 80–90% accuracy in diagnosing fractures in various parts of the body on radiographs32,33,34.

To date, studies applying AI to the pelvic region have mostly focused on diagnosing or classifying proximal femur fractures on pelvic radiographs31,35,36,37, whereas others have focused on pelvic fractures confined to the pelvic ring38,39,40,41,42. Wang et al. used deep learning to detect pelvic fractures on pelvic radiographs40 and reported relatively high detection performance, with 91% accuracy and 84% sensitivity. However, they did not segment the pelvic ring, and their detection targets included both pelvic and proximal femur fractures.

In contrast, other studies have detected pelvic fractures confined to the pelvic ring. Kassem et al. used Grad-CAM to build an explainable AI framework for detecting pelvic fractures on pelvic radiographs. Specifically, they used class activation maps to show which image regions contributed most to the predictions, achieving 98.5% for each of accuracy, precision, and specificity42. Similarly, our study used class activation maps to mitigate the “black-box” nature of deep learning models. Other studies used pelvic CT38,39,41. Ukai et al. evaluated fracture detection on 3D-CT pelvic images without separately segmenting the pelvic ring and reported high performance for detecting pelvic fractures, with an AUC of 0.824, recall of 0.805, and precision of 0.907 (ref. 39). Wu et al., like this study, segmented bone structures before detecting pelvic fractures: they segmented the lumbar spine, pelvis, and proximal femur on axial 2D-CT images and achieved relatively good detection results, with 91.98% accuracy, 93.33% sensitivity, and 89.26% specificity41. Closest to this study, another study used AI to perform AO/OTA classification of pelvic fractures on whole-body CT38. It applied a multi-view concatenated deep network that leveraged 3D information from orthogonal thick multiplanar reformatted images, without a segmentation step for the pelvic ring. Focusing on pelvic instability, the study evaluated whether the deep learning model could distinguish rotational and translational instability of the pelvis, reporting accuracies of 74% and 85%, respectively38.
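The paper does not specify which class activation map variant was used for its classification model. As an illustration, the Grad-CAM computation named in the Kassem et al. study weights each feature map of a convolutional layer by its average gradient with respect to the target class score and keeps the positive part of the weighted sum. The sketch assumes the activations and gradients have already been extracted (in TensorFlow this is typically done with `tf.GradientTape`) and uses tiny hand-made arrays; `grad_cam` is a hypothetical helper.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Grad-CAM heat map for one convolutional layer.

    activations: feature maps A of shape (H, W, K) for the input image
    gradients:   gradient of the target class score w.r.t. A, same shape
    """
    weights = gradients.mean(axis=(0, 1))         # one importance weight per channel
    cam = np.maximum(activations @ weights, 0.0)  # ReLU(sum_k w_k * A_k), shape (H, W)
    peak = cam.max()
    return cam / peak if peak > 0 else cam        # scale to [0, 1] for overlay


acts = np.zeros((2, 2, 2))
acts[0, 0, 0] = 1.0  # channel 0 fires in the top-left cell
acts[1, 1, 1] = 1.0  # channel 1 fires in the bottom-right cell
grads = np.stack([np.ones((2, 2)), -np.ones((2, 2))], axis=-1)  # only channel 0 supports the class
cam = grad_cam(acts, grads)
print(cam.tolist())  # [[1.0, 0.0], [0.0, 0.0]]
```

Only the region driven by the channel that increases the class score is highlighted; in practice the low-resolution map is upsampled and overlaid on the radiograph, as in the red regions described for Fig. 5.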

Dreizin et al. analyzed axial, coronal, and sagittal images derived from 3D whole-body CT scans38, whereas this study performed AO/OTA classification on a single AP pelvic radiograph. In classifying normal pelvises and each fracture type, our model achieved an accuracy of 0.69–0.88 and a macro-average ROC–AUC of 0.77–0.94. In addition, a notable contribution of this study is a pelvic ring segmentation model for pelvic radiographs, which, to our knowledge, has not been reported in previous studies and which achieved high segmentation performance.

Our research offers several notable advantages. First, we used advanced neural networks, Attention U-Net and Inception-ResNet-V2, for the segmentation and classification of pelvic fractures, respectively. Attention U-Net focuses on critical regions, improving segmentation accuracy by emphasizing salient features within the complex pelvic anatomy, whereas Inception-ResNet-V2 supports accurate classification of fracture patterns through its multi-scale feature extraction and residual connections that simplify learning. Second, we used fivefold cross-validation to assess the reliability and reproducibility of our models across different data subsets; such rigorous validation is particularly important in studies like ours, where model performance may affect clinical outcomes. Third, we used a comprehensive set of performance metrics, including sensitivity, specificity, accuracy, DSC, F1 score, and ROC–AUC, to evaluate the models’ effectiveness in practical scenarios in detail.

Furthermore, to address variability in image quality, which can strongly influence the performance of image analysis algorithms, we applied histogram equalization. This preprocessing step makes image contrast more consistent, supporting more reliable fracture detection across varying imaging conditions.

This study has some limitations, and the accuracy and other performance metrics of the developed model require further improvement. First, only internal validation was performed, which limits the generalizability of the results; external validation through a multicenter study is therefore required. Second, intravascular catheters, urinary catheters, prosthetic implants, and pelvic binders that are visible on pelvic radiographs could affect the training and validation results of each deep learning model. Nevertheless, training and testing on images acquired in such varied situations is essential for clinical utility. Third, a more precise method for marking ROIs on fractures and joint spaces on pelvic radiographs is needed. Rather than drawing a simple square ROI around the fracture area, future work should distinguish fracture lines from joint separation by tracing the fracture shape or by delineating the sacral and iliac sides of the sacroiliac joints separately. Fourth, the sacroiliac joints and sacral region of the posterior pelvic ring may be obscured by overlying visceral shadows and are often unclear on AP pelvic radiographs. Therefore, it may be important to compare AO/OTA classification performance using a single AP pelvic radiograph with that using a combination of AP, inlet, outlet, and oblique views, as in other studies. Finally, although our AI algorithm may not be immediately applicable during the acute management of hemodynamically unstable patients, it becomes valuable once the patient’s condition has stabilized. At that stage, rapid and accurate classification of pelvic fractures can support efficient planning and prioritization of imaging and surgical resources, which are critical in the subsequent phases of patient management.
Therefore, although initial stabilization remains paramount, our AI tool could improve the efficiency and accuracy of fracture assessment in the follow-up stages. Further research is needed to expand its scope so that such AI diagnostic tools can be used immediately in diagnosis and treatment in the future.

In conclusion, we developed a deep learning model that segments the pelvic ring on AP pelvic radiographs and a model that classifies the instability of pelvic fractures according to the AO/OTA classification system. The pelvic ring segmentation model achieved a high accuracy of 0.98, and the AO/OTA classification model achieved an accuracy of 0.69–0.88. This study is a step toward deep learning-based automatic classification of pelvic fractures from AP pelvic radiographs in clinical practice. Such AI could support faster diagnosis in the early stages of injury and classify fracture patterns quickly despite the complexity of the AO/OTA criteria. Furthermore, these results may serve as a useful reference for the development of AI-based medical imaging technology.

Data availability

The datasets generated and/or analyzed during the current study are not publicly available due to restrictions of the Institutional Review Board of Gachon University Gil Medical Center to protect patients’ privacy, but are available from the corresponding author on reasonable request.

Code availability

The source codes for our deep learning model have been made publicly accessible at https://github.com/user-dynamite/Deep-learning-pelvic.

References

van Vugt, A. B. & van Kampen, A. An unstable pelvic ring. The killing fracture. J. Bone Jt. Surg. Br. 88, 427–433 (2006).

Agri, F. et al. Association of pelvic fracture patterns, pelvic binder use and arterial angio-embolization with transfusion requirements and mortality rates; A 7-year retrospective cohort study. BMC Surg. 17, 104 (2017).

Kim, M. J., Lee, J. G. & Lee, S. H. Factors predicting the need for hemorrhage control intervention in patients with blunt pelvic trauma: A retrospective study. BMC Surg. 18, 101 (2018).

Coccolini, F. et al. Pelvic trauma: WSES classification and guidelines. World J. Emerg. Surg. 12, 5 (2017).

Slater, S. J. & Barron, D. A. Pelvic fractures-A guide to classification and management. Eur. J. Radiol. 74, 16–23 (2010).

Costantini, T. W. et al. Current management of hemorrhage from severe pelvic fractures: Results of an American Association for the Surgery of Trauma multi-institutional trial. J. Trauma Acute Care Surg. 80, 717–723 (2016).

Benjamin, E. R. et al. The trauma pelvic X-ray: Not all pelvic fractures are created equally. Am. J. Surg. 224, 489–493 (2022).

Sierink, J. C. et al. Immediate total-body CT scanning versus conventional imaging and selective CT scanning in patients with severe trauma (REACT-2): A randomized controlled trial. Lancet 388, 673–683 (2016).

Alton, T. B. & Gee, A. O. Classifications in brief: Young and Burgess classification of pelvic ring injuries. Clin. Orthop. Relat. Res. 472, 2338–2342 (2014).

Berger-Groch, J. et al. The intra- and interobserver reliability of the Tile AO, the Young and Burgess, and FFP classifications in pelvic trauma. Arch. Orthop. Trauma Surg. 139, 645–650 (2019).

Meinberg, E. G., Agel, J., Roberts, C. S., Karam, M. D. & Kellam, J. F. Fracture and dislocation classification compendium-2018. J. Orthop. Trauma 32(Suppl 1), S1–S170 (2018).

Gabbe, B. J. et al. The imaging and classification of severe pelvic ring fractures: Experiences from two level 1 trauma centres. Bone Jt. J. 95-B, 1396–1401 (2013).

Koo, H. et al. Interobserver reliability of the Young-Burgess and Tile classification systems for fractures of the pelvic ring. J. Orthop. Trauma 22, 379–384 (2008).

Au, J. et al. AO pelvic fracture classification: Can an educational package improve orthopaedic registrar performance? ANZ J. Surg. 86, 1019–1023 (2016).

Qu, B. et al. Current development and prospects of deep learning in spine image analysis: A literature review. Quant. Imaging Med. Surg. 12, 3454–3479 (2022).

Thian, Y. L. et al. Convolutional neural networks for automated fracture detection and localization on wrist radiographs. Radiol. Artif. Intell. 1, e180001 (2019).

Kitamura, G., Chung, C. Y. & Moore, B. E. 2nd. Ankle fracture detection utilizing a convolutional neural network ensemble implemented with a small sample, de novo training, and multi-view incorporation. J. Digit. Imaging 32, 672–677 (2019).

Shazia, A. et al. A comparative study of multiple neural network for detection of COVID-19 on chest X-ray. EURASIP J. Adv. Signal Process. 2021, 1–16 (2021).

Oktay, O. et al. Attention U-Net: Learning where to look for the pancreas. arXiv preprint arXiv:1804.03999 (2018).

Szegedy, C., Ioffe, S., Vanhoucke, V. & Alemi, A. Inception-v4, Inception-ResNet and the impact of residual connections on learning. In: Proceedings of the AAAI Conference on Artificial Intelligence (2017).

Lu, L., Zhou, Y., Panetta, K. & Agaian, S. Comparative study of histogram equalization algorithms for image enhancement. Mobile Multimed./Image Process. Secur. Appl. 7708, 337–347 (2010).

Hashemi, S., Kiani, S., Noroozi, N. & Moghaddam, M. E. An image contrast enhancement method based on genetic algorithm. Pattern Recognit. Lett. 31, 1816–1824 (2010).

Li, X. et al. Dice loss for data-imbalanced NLP tasks. arXiv preprint arXiv:1911.02855 (2019).

Lin, T. Y. et al. Microsoft COCO: Common objects in context. In: Computer Vision–ECCV 2014 740–755 (2014).

Everingham, M., Van Gool, L., Williams, C. K., Winn, J. & Zisserman, A. The PASCAL visual object classes (VOC) challenge. Int. J. Comput. Vis. 88, 303–338 (2010).

Raniga, S. B., Mittal, A. K., Bernstein, M., Skalski, M. R. & Al-Hadidi, A. M. Multidetector CT in vascular injuries resulting from pelvic fractures: A primer for diagnostic radiologists. Radiographics 39, 2111–2129 (2019).

Dreizin, D. & Munera, F. Blunt polytrauma: Evaluation with 64-section whole-body CT angiography. Radiographics 32, 609–631 (2012).

Hurson, C. et al. Rapid prototyping in the assessment, classification and preoperative planning of acetabular fractures. Injury 38, 1158–1162 (2007).

Kim, M. J., Lee, J. G., Kim, E. H. & Lee, S. H. A nomogram to predict arterial bleeding in patients with pelvic fractures after blunt trauma: A retrospective cohort study. J. Orthop. Surg. Res. 16, 122 (2021).

Osterhoff, G. et al. Comparing the predictive value of the pelvic ring injury classification systems by Tile and by Young and Burgess. Injury 45, 742–747 (2014).

Krogue, J. D. et al. Automatic hip fracture identification and functional subclassification with deep learning. Radiol. Artif. Intell. 2, e190023 (2020).

Olczak, J. et al. Artificial intelligence for analyzing orthopedic trauma radiographs. Acta Orthop. 88, 581–586 (2017).

Gan, K. et al. Artificial intelligence detection of distal radius fractures: A comparison between the convolutional neural network and professional assessments. Acta Orthop. 90, 394–400 (2019).

Chung, S. W. et al. Automated detection and classification of the proximal humerus fracture by using deep learning algorithm. Acta Orthop. 89, 468–473 (2018).

Cheng, C. T. et al. Application of a deep learning algorithm for detection and visualization of hip fractures on plain pelvic radiographs. Eur. Radiol. 29, 5469–5477 (2019).

Lee, C. et al. Classification of femur fracture in pelvic X-ray images using meta-learned deep neural network. Sci. Rep. 10, 13694 (2020).

Urakawa, T. et al. Detecting intertrochanteric hip fractures with orthopedist-level accuracy using a deep convolutional neural network. Skeletal Radiol. 48, 239–244 (2019).

Dreizin, D. et al. An automated deep learning method for Tile AO/OTA pelvic fracture severity grading from trauma whole-body CT. J. Digit. Imaging 34, 53–65 (2021).

Ukai, K. et al. Detecting pelvic fracture on 3D-CT using deep convolutional neural networks with multi-orientated slab images. Sci. Rep. 11, 11716 (2021).

Wang, Y. et al. Weakly supervised universal fracture detection in pelvic X-rays. In: Medical Image Computing and Computer Assisted Intervention–MICCAI 2019: 22nd International Conference, Shenzhen, China, October 13–17, 2019, Proceedings, Part VI 459–467 (Springer, 2019).

Wu, J. et al. Fracture detection in traumatic pelvic CT images. Int. J. Biomed. Imaging 2012, 327198 (2012).

Kassem, M. A. et al. Explainable transfer learning-based deep learning model for pelvis fracture detection. Int. J. Intell. Syst. 2023, 3281998 (2023).

Acknowledgements

We thank Yang Bin Jeon and Kang Kook Choi from the Department of Traumatology at Gachon University College of Medicine, Kun Woo Kim from the Department of Thoracic and Cardiovascular Surgery at Gachon University College of Medicine, and Kyu Hyouck Kyoung from the Department of Surgery at Ulsan University Hospital for their assistance with the manuscript revision.

Author information

Contributions

Conceptualization, S.H.L. and K.G.K.; methodology, S.H.L. and K.G.K.; formal analysis, J.J.; investigation, J.J., J.Y.P., Y.J.K., G.J.L., K.G.K., and S.H.L.; resources, K.G.K.; data curation, S.H.L.; writing-original draft preparation, S.H.L. and J.J.; writing-review and editing, J.J., J.Y.P., Y.J.K., G.J.L., K.G.K., and S.H.L.; supervision and project administration, Y.J.K., G.J.L., K.G.K., and S.H.L. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Lee, S.H., Jeon, J., Lee, G.J. et al. Automated Association for Osteosynthesis Foundation and Orthopedic Trauma Association classification of pelvic fractures on pelvic radiographs using deep learning. Sci Rep 14, 20548 (2024). https://doi.org/10.1038/s41598-024-71654-2

DOI: https://doi.org/10.1038/s41598-024-71654-2

© Springer Nature Limited