Abstract

Background

Neurological disorders have risen substantially over the last three decades, imposing considerable burdens on patients and on healthcare costs. High-quality research is therefore crucial for identifying effective treatment options. However, current neurology research has limitations in transparency, reproducibility, and reporting bias. Adoption of reporting guidelines (RGs) and trial registration policies has been shown to address these issues and improve research quality in other medical disciplines, but the extent to which neurology journals endorse these policies is unclear. Therefore, our study aims to evaluate the publishing policies of top neurology journals regarding RGs and trial registration.

Methods

For this cross-sectional study, neurology journals were identified using the 2021 Scopus CiteScore tool. The top 100 journals were listed and screened for eligibility. In a masked, duplicate fashion, investigators extracted data on journal characteristics, policies on RGs, and policies on trial registration from each journal's Instructions for Authors webpage. Investigators also contacted journal editors to ensure that the information was current and accurate. No human participants were involved in this study. Data collection and analyses were performed from December 14, 2022, to January 9, 2023.

Results

Of the 356 neurology journals identified, the top 100 were included in our sample. The five-year impact factors of these journals ranged from 2.226 to 50.844 (mean [SD], 7.82 [7.01]). Twenty-five (25.0%) journals did not require or recommend a single RG on their Instructions for Authors webpage, and a third (33.3%) did not require or recommend clinical trial registration. The most frequently mentioned RGs were CONSORT (64.6%), PRISMA (52.5%), and ARRIVE (53.1%). The least mentioned RG was QUOROM (1.0%), followed by MOOSE (9.0%) and SQUIRE (17.9%).

Conclusions

While many top neurology journals endorse the use of RGs and trial registries, their adoption can still be improved. Addressing these shortcomings could further advance the field of neurology, resulting in higher-quality research and better outcomes for patients.

Introduction

Over the last three decades, the prevalence of neurological disorders across the United States has risen significantly [1]. With an estimated 100 million Americans affected by more than a thousand different neurological and neurodegenerative diseases, these patients rely on high-quality clinical neurology research to improve current treatment interventions [2, 3]. These individuals not only face challenges to their quality of life but also bear a significant financial burden, with medical costs to Americans of approximately $800 billion [2]. Given the severity and rapidly growing costs of neurological disorders, evidence-based research has become increasingly important for alleviating these burdens [2]. Despite substantial government funding [4], neurological research may not achieve its full potential because of poor reporting practices in the field, including limited reproducibility, limited transparency, and selective outcome reporting bias [5, 6]. These limitations can lead to misleading conclusions and to outcome reporting that is difficult to interpret [7,8,9,10]. By addressing these shortcomings in clinical neurology research, scientific journals can help ensure that only high-quality studies reach their audiences, ultimately leading to improved patient outcomes, elimination of harmful interventions, reduced research waste, and relief from rising healthcare costs [5, 11, 12].

One approach for improving research quality is the use of reporting guidelines (RGs) by authors when preparing their work for publication. Reporting guidelines serve as structured checklists that promote standardized reporting of data in the literature [13]. Previous studies have demonstrated that using RGs correctly can improve the quality of research and reduce the risk of bias [14,15,16,17]. For instance, Moher et al. found that use of the Consolidated Standards of Reporting Trials (CONSORT) statement led to improved reporting of randomized controlled trials (RCTs) in the majority of the journals analyzed [14]. Likewise, Nawijn et al. found that academic journals that endorsed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) checklist exhibited higher-quality reporting than journals that did not endorse it [16]. To improve the accessibility and discoverability of RGs, the Enhancing the Quality and Transparency of Health Research (EQUATOR) Network developed a database that compiles RGs across various study designs [18]. In addition to its library of over 500 RGs, the EQUATOR Network provides education and training to promote awareness and effective use of RGs in clinical research [18, 19]. Despite these efforts, RG adoption across various fields of medicine appears to remain insufficient. For instance, Innocenti et al. found that only a small percentage of high-impact rehabilitation journals acknowledged the use of RGs, and an even smaller percentage properly adhered to the stated RG [20]. Additionally, Tan et al. identified inconsistencies in RG endorsement among high-impact general surgery and vascular surgery journals, demonstrating the need for improvement [21]. Because poor reporting practices can compromise research quality, identifying gaps in RG adherence is a critical step toward producing high-quality research.

Another method for improving research reporting is the registration of RCTs in public registries. Registering a trial before the study begins helps prevent selective outcome reporting, which improves the transparency of the study's results and leads to more reliable research [22,23,24,25]. For these reasons, the World Health Organization (WHO) promotes trial registration, and the International Committee of Medical Journal Editors (ICMJE) requires registration of all RCTs prior to patient enrollment [26, 27]. However, promoting the adoption of rigorous research practices requires proper enforcement of trial registration. Journals may encourage registration by requiring or recommending it on their Instructions for Authors webpage; however, previous studies have identified significant gaps in trial registration and its enforcement across various fields of medicine [28,29,30].

Currently, the extent to which clinical neurology journals advocate for the use of RGs and clinical trial registration is unclear. The purpose of this study is to evaluate the publishing policies of the leading clinical neurology journals regarding RGs and trial registration. Our aim is to understand the degree to which journals endorse these policies and to identify areas for improvement.

Methods

Study design

We conducted a cross-sectional analysis of the top neurology journals and reported it according to the Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) checklist [31]. Data were obtained directly from each journal's Instructions for Authors webpage.

Standard protocol approvals, registrations, and patient consents

Because no human participants were included in our investigation, Institutional Review Board oversight was not required.

Search strategy

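On November 18, 2022, one investigator (CAS) identified eligible journals in consultation with a medical research librarian. The 2021 Scopus CiteScore tool supplied the journal listings using the website's "Neurology" subject area [32]. The CiteScore for a given year is calculated by dividing the number of citations received during the four-year window ending in that year by the number of documents published in the same four-year window:

$$\mathrm{CiteScore}_{Y} = \frac{\text{citations received in years } Y-3 \text{ to } Y \text{ by documents published in years } Y-3 \text{ to } Y}{\text{number of documents published in years } Y-3 \text{ to } Y}$$
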
This metric provides a broad measure of a journal's citation impact, reflecting its influence and reach within the academic community. We cross-checked the identified journal listings against the "Neurology" category of Google Scholar Metrics (h5-index), which confirmed the top twenty journals identified by Scopus [33].
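As a worked illustration of the CiteScore definition, the following Python sketch computes the ratio for a hypothetical journal. It assumes the four-year window described above, which ends in the CiteScore year (for CiteScore 2021, documents published from 2018 through 2021 and the citations they received over those same years); the function names and the counts are illustrative and are not values from our sample.

```python
from typing import List


def citescore(citations_in_window: int, documents_in_window: int) -> float:
    """CiteScore-style ratio: citations received during a four-year window by
    documents published in that window, divided by the number of those documents."""
    if documents_in_window <= 0:
        raise ValueError("documents_in_window must be positive")
    return citations_in_window / documents_in_window


def citescore_window(year: int) -> List[int]:
    """Years counted for CiteScore year Y: Y-3 through Y inclusive."""
    return list(range(year - 3, year + 1))


# Hypothetical counts for an unnamed journal (not from our sample).
print(citescore_window(2021))               # [2018, 2019, 2020, 2021]
print(round(citescore(12_000, 1_500), 1))   # 8.0
```

Because the numerator and denominator cover the same window, a journal that publishes many rarely cited documents scores lower than one that publishes fewer, more heavily cited documents.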

Eligibility

We evaluated the top 100 peer-reviewed academic journals in the "Neurology" subject area according to the 2021 Scopus CiteScore tool. For journals with non-English websites, we used Google Translate, which has been shown to be a reliable tool for extracting data from non-English-language articles [34, 35].

Exclusion criteria

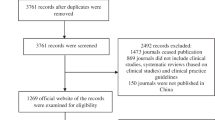

We excluded journals from our study if they met any of the following criteria: (i) had been discontinued; (ii) did not provide contact information for the editorial office on their website, because we sought to limit bias by giving editors the opportunity to elaborate on their publication policies; (iii) were academic books, because these merely summarize current research; or (iv) did not accept any of the study designs assessed in this study. Whenever a journal was excluded during the initial screening process, the next journal ranked by the Scopus CiteScore tool was added to maintain a sample size of 100. Exclusions with rationale are provided in Fig. 1.

Investigator training

The two investigators (AVT, JKS) received instructions from CAS on the data collection process before the study began. After discussing the scope, rationale, and methods, both investigators extracted data from five journals that were not included in the study sample. To ensure consistency in methodology and accuracy of recorded data, this training extraction was performed in a masked, duplicate fashion. If warranted, an additional set of five journals would have been provided for further practice. Once consensus was reached during the training session, the two investigators began extracting data from the study sample.

Data collection process

Two investigators extracted data from the Instructions for Authors webpage of each included journal in a masked, duplicate fashion. Data were collected using a standardized Google Form designed a priori by investigators CAS, DN, and MV. After data extraction was completed, the two investigators reconciled their data in an unmasked manner. Any discrepancies they could not resolve were resolved by a third investigator (ZE).
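The reconciliation step can be sketched as a comparison of the two extractors' independently coded forms. This minimal Python illustration assumes each extractor's Google Form responses have been exported into a dictionary keyed by (journal, data item); the record layout, the `find_discrepancies` name, and the example values are hypothetical. Items on which both extractors agree need no further action, while each flagged row would be discussed by the two investigators and, if still unresolved, referred to the third investigator.

```python
from typing import Dict, List, Tuple

# Hypothetical record layout: (journal, data item) -> coded value.
Record = Dict[Tuple[str, str], str]
MISSING = "<missing>"


def find_discrepancies(extractor_a: Record, extractor_b: Record) -> List[Tuple[str, str, str, str]]:
    """List (journal, item, value_a, value_b) for every item the two masked
    extractors coded differently, including items only one of them coded."""
    discrepancies = []
    for journal, item in sorted(set(extractor_a) | set(extractor_b)):
        value_a = extractor_a.get((journal, item), MISSING)
        value_b = extractor_b.get((journal, item), MISSING)
        if value_a != value_b:
            discrepancies.append((journal, item, value_a, value_b))
    return discrepancies


# Illustrative codes for a hypothetical "Journal X".
extraction_a = {("Journal X", "CONSORT"): "required", ("Journal X", "PRISMA"): "recommended"}
extraction_b = {("Journal X", "CONSORT"): "required", ("Journal X", "PRISMA"): "not mentioned"}

for row in find_discrepancies(extraction_a, extraction_b):
    print(row)  # ('Journal X', 'PRISMA', 'recommended', 'not mentioned')
```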

Data items

Data extracted from each journal included: whether the journal editor responded to our email, journal title, five-year impact factor, mention of the EQUATOR Network in the Instructions for Authors, mention of the ICMJE in the Instructions for Authors, and geographical region of publication (e.g., North America, South America, Europe, Asia). Statements pertaining to study registration in databases, such as ClinicalTrials.gov, the WHO International Clinical Trials Registry Platform, and PROSPERO (the International Prospective Register of Systematic Reviews), were also extracted for each journal. Finally, we extracted statements regarding the use of popular RGs from each journal's Instructions for Authors webpage. A description of these RGs and their respective study designs can be found in Table 1.

For each journal, data points for each guideline or trial registry were recorded as "not mentioned," "required," or "recommended." When the Instructions for Authors used words or phrases such as "required," "must," "need," "mandatory," or "studies will not be considered for publication unless…," we recorded the item as "required" by the journal. "Recommended" was recorded when words or phrases such as "recommended," "should," "preferred," or "encouraged" were used. Investigators resolved unclear wording during data reconciliation. If a journal cited the EQUATOR Network as the source for proper guideline usage instead of listing specific guidelines on its Instructions for Authors webpage, we assumed that the journal endorsed the relevant RG for each study design.
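The coding rules above amount to a keyword lookup, with human review for anything ambiguous. The following Python sketch is illustrative only. The keyword lists come from this section, but several choices are assumptions and not part of the study protocol: applying the lists automatically, matching whole words, giving "required" precedence when both kinds of wording appear, and adding an "unclear" label for wording that investigators would resolve during reconciliation. `code_policy` is a hypothetical name.

```python
import re
from typing import Optional

# Keywords listed in the coding rules; whole-word regexes are an assumption.
REQUIRED_PATTERNS = [
    r"\brequired\b", r"\bmust\b", r"\bneed(?:s|ed)?\b", r"\bmandatory\b",
    r"will not be considered for publication unless",
]
RECOMMENDED_PATTERNS = [
    r"\brecommended\b", r"\bshould\b", r"\bpreferred\b", r"\bencouraged\b",
]


def code_policy(statement: Optional[str]) -> str:
    """Code one journal's statement about one guideline or registry."""
    if not statement:
        return "not mentioned"
    text = statement.lower()
    # "Required" wording takes precedence if both kinds of wording appear.
    if any(re.search(pattern, text) for pattern in REQUIRED_PATTERNS):
        return "required"
    if any(re.search(pattern, text) for pattern in RECOMMENDED_PATTERNS):
        return "recommended"
    # Mentioned without recognized wording: flag for human reconciliation.
    return "unclear"


print(code_policy("Authors must follow the CONSORT statement."))  # required
print(code_policy("Authors are encouraged to consult PRISMA."))   # recommended
print(code_policy(None))                                          # not mentioned
print(code_policy("See the STARD checklist."))                    # unclear
```

A keyword rule like this cannot interpret negations such as "not required," which is one reason the study relied on investigator judgment rather than automated coding.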

To ensure that journals were not assessed on policies for study designs they do not publish, a standardized email was sent to the editorial staff of each journal in our sample. This email asked whether the journal accepted each of the study designs listed in Table 1. We repeated this email once per week for three consecutive weeks to increase response rates [36]. If no response was received during that time, we assumed that all relevant study designs were accepted, and investigators examined the journal's Instructions for Authors for all of the previously mentioned data points.
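The decision rule in this paragraph determines which journals count toward each guideline's denominator. A minimal Python sketch, assuming each editor reply is recorded as the set of accepted designs, or `None` when no reply arrived after the three weekly emails. The design labels, journal names, and function names are hypothetical; Table 1 lists the designs actually assessed.

```python
from typing import Dict, List, Optional, Set

# Illustrative design labels only; Table 1 lists the designs actually assessed.
ALL_DESIGNS: Set[str] = {"randomized trial", "systematic review", "animal study", "case report"}


def accepted_designs(reply: Optional[Set[str]]) -> Set[str]:
    """Designs a journal is treated as accepting. A reply of None means no
    response after three weekly emails, so every design is assumed accepted."""
    if reply is None:
        return set(ALL_DESIGNS)
    return set(reply) & ALL_DESIGNS


def eligible_journals(replies: Dict[str, Optional[Set[str]]], design: str) -> List[str]:
    """Journals kept in the denominator for a guideline covering `design`."""
    return sorted(journal for journal, reply in replies.items()
                  if design in accepted_designs(reply))


# Hypothetical editor replies.
replies = {
    "Journal A": None,  # no response
    "Journal B": {"randomized trial", "systematic review"},
    "Journal C": {"randomized trial"},
}
print(eligible_journals(replies, "animal study"))      # ['Journal A']
print(eligible_journals(replies, "randomized trial"))  # ['Journal A', 'Journal B', 'Journal C']
```

Treating non-responders as accepting every design is conservative: it keeps those journals in every denominator, so a missing policy counts against them rather than being excused.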

Outcomes

The primary outcome of this study was the proportion of journals that required or recommended the use of popular RGs for each evaluated study design. The secondary outcome was the proportion of journals that required or recommended the registration of RCTs.

Statistical methods

We used R (version 4.2.1) and RStudio to descriptively summarize the data collected from our sample. Descriptive statistics included (i) frequencies and percentages of journals requiring or recommending each guideline and (ii) frequencies and percentages of journals requiring or recommending clinical trial registration. Because this study directly analyzed journal webpages, analyses of bias were not warranted.
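The study's analysis scripts are written in R and are available on OSF [37]. The following Python sketch is not a translation of those scripts. It only illustrates the kind of descriptive summary described here, assuming each eligible journal has already been coded as "required," "recommended," or "not mentioned." `summarize` is a hypothetical name, and the example codes are invented.

```python
from collections import Counter
from typing import Dict, Iterable

CATEGORIES = ("required", "recommended", "not mentioned")


def summarize(codes: Iterable[str]) -> Dict[str, str]:
    """Frequency and percentage (one decimal place) of each policy code among
    the journals eligible for one guideline, formatted as 'n/N (x%)'."""
    codes = list(codes)
    total = len(codes)
    if total == 0:
        raise ValueError("no eligible journals")
    counts = Counter(codes)
    return {c: f"{counts[c]}/{total} ({100 * counts[c] / total:.1f}%)" for c in CATEGORIES}


# Hypothetical codes for one guideline across four eligible journals.
print(summarize(["required", "recommended", "recommended", "not mentioned"]))
# {'required': '1/4 (25.0%)', 'recommended': '2/4 (50.0%)', 'not mentioned': '1/4 (25.0%)'}
```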

Reproducibility

This study was conducted based upon a protocol designed a priori. To ensure transparency and reproducibility of our study, we uploaded the protocol, raw data, extraction forms, STROBE checklist, analysis scripts, and standardized email prompts to Open Science Framework (OSF) [37].

Results

During our initial screening, we identified 356 clinical neurology journals using the 2021 Scopus CiteScore tool and selected the top 100 journals by CiteScore rank. We excluded four journals: two had been discontinued and two did not accept the study designs investigated. Following our protocol, we replaced them with the next four journals identified by the Scopus CiteScore tool (Fig. 1).

Our analysis included 100 clinical neurology journals, with five-year impact factors ranging from 2.226 to 50.844 (mean [SD], 7.82 [7.01]). After reviewing the Instructions for Authors and the editorial staff's email responses (response rate, 60/100; 60.0%), we excluded journals that did not accept the corresponding study type from the denominator for the following RGs: QUOROM (1/100; 1.0%), PRISMA (1/100; 1.0%), STARD (2/100; 2.0%), ARRIVE (4/100; 4.0%), CARE (8/100; 8.0%), CHEERS (3/100; 3.0%), SRQR (6/100; 6.0%), SQUIRE (5/100; 5.0%), SPIRIT (8/100; 8.0%), COREQ (6/100; 6.0%), TRIPOD (2/100; 2.0%), and PRISMA-P (9/100; 9.0%).

Reporting guidelines

In our sample, the EQUATOR Network was mentioned by 52 of 100 journals (52.0%), and 85 of 100 (85.0%) referenced the ICMJE Uniform Requirements for Manuscripts. Twenty-five journals (25/100; 25.0%) did not mention any RGs in their Instructions for Authors. The most frequently mentioned RG was CONSORT (64/99; 64.6%), followed by PRISMA (52/99; 52.5%) and ARRIVE (51/96; 53.1%). Of the journals that mentioned CONSORT, 22 (22/64; 34.4%) required adherence and 42 (42/64; 65.6%) recommended adherence. For PRISMA, 12 (12/52; 23.1%) journals required adherence and 40 (40/52; 76.9%) recommended adherence. For ARRIVE, 6 (6/51; 11.8%) required adherence and 45 (45/51; 88.2%) recommended adherence. The least mentioned RG was QUOROM (1/99; 1.0%), followed by MOOSE (9/100; 9.0%) and SQUIRE (17/95; 17.9%). The only journal that mentioned QUOROM recommended, rather than required, adherence. Of the journals that mentioned MOOSE, 2 (2/9; 22.2%) required adherence and 7 (7/9; 77.8%) recommended adherence. For SQUIRE, 1 (1/17; 5.9%) journal required adherence and 16 (16/17; 94.1%) recommended adherence. Journal-level data for all journals in our sample can be found in Supplementary Table 1.
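The mention rates in this paragraph use a different denominator for each guideline: the 100 sampled journals minus those excluded because they did not accept the corresponding study design. The Python sketch below recomputes several reported values from counts stated in this section and the exclusion list above. It is limited to guidelines whose exclusions are itemized there, and the names are illustrative.

```python
from typing import Dict, Tuple

SAMPLE_SIZE = 100

# guideline: (journals mentioning it, journals excluded because they did not
# accept the corresponding study design), as reported in the Results.
REPORTED: Dict[str, Tuple[int, int]] = {
    "PRISMA": (52, 1),
    "ARRIVE": (51, 4),
    "QUOROM": (1, 1),
    "MOOSE": (9, 0),
    "SQUIRE": (17, 5),
}


def mention_rate(mentioned: int, excluded: int, sample: int = SAMPLE_SIZE) -> str:
    """Share of eligible journals mentioning a guideline, as 'n/N (x%)'."""
    denominator = sample - excluded
    return f"{mentioned}/{denominator} ({100 * mentioned / denominator:.1f}%)"


for guideline, (mentioned, excluded) in REPORTED.items():
    print(guideline, mention_rate(mentioned, excluded))
# PRISMA 52/99 (52.5%)
# ARRIVE 51/96 (53.1%)
# QUOROM 1/99 (1.0%)
# MOOSE 9/100 (9.0%)
# SQUIRE 17/95 (17.9%)
```

The varying denominators explain why ARRIVE (51 journals) has a slightly higher percentage than PRISMA (52 journals).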

Clinical trial registration

Of the 99 neurology journals in our sample that accepted clinical trials, 66 (66/99; 66.7%) mentioned clinical trial registration. Of those journals, 54 (54/66; 81.8%) required registration and 11 (11/66; 16.7%) recommended it. Of the 52 journals (52/100; 52.0%) that mentioned the EQUATOR Network, 43 (43/52; 82.7%) required trial registration, 5 (5/52; 9.6%) recommended it, 3 (3/52; 5.8%) did not mention it, and only 1 (1/52; 1.9%) did not accept clinical trials.

Discussion

Our study found that among the top 100 clinical neurology journals, a quarter (25.0%) did not mention a single RG on their Instructions for Authors webpage, and a third (33.3%) did not mention any clinical trial registration policy. Our findings are consistent with previous research in other medical disciplines [20, 21, 38] and further highlight the inadequate endorsement of RGs and clinical trial registration policies. This gap in RG endorsement within clinical neurology may impede research quality and promote misinformation, limiting potential advancements and contributing to poorer patient outcomes and increased financial burdens for patients. Our findings also emphasize the need for journals to implement proactive measures that encourage authors to adhere to RGs and registration requirements, which may ultimately improve the quality of clinical neurology research and reduce the possibility of biased reporting [39].

Insufficient endorsement of RGs and trial registration has been studied extensively across multiple medical specialties, and our findings within clinical neurology are consistent with the existing literature on inadequate reporting in clinical research [40]. For instance, a prior study of orthopedic surgery journals found a lack of RG and trial registration requirements in that field, indicating inadequate reporting practices [41]. Sims et al. conducted a similar study and found that almost half of critical care journals did not advocate for the use of any RGs, further supporting the idea that inadequate reporting requirements are a prevalent problem across medical specialties [28]. In contrast, Wayant et al. found that only 4.8% of oncology journals did not endorse any RGs or trial registration requirements, indicating a higher level of support for RGs and trial registration in that field [42]. Although some specialties have incorporated RGs and trial registration more widely, our findings suggest that significant improvements are still warranted within clinical neurology. Any degree of suboptimal endorsement of RGs and trial registration policies can hinder the quality of research and promote harmful research practices.

Failure to prospectively register a clinical trial can lead to reporting bias, which compromises the integrity of evidence-based research and could harm trial participants. Journal editors have acknowledged that prospective trial registration is the most effective tool for promoting unbiased reporting [43]. In the United States, Section 801 of the Food and Drug Administration Amendments Act of 2007 (FDAAA 801) addresses this issue by requiring applicable clinical trials to be registered on ClinicalTrials.gov no later than 21 days after enrollment of the first participant, which helps minimize the risks associated with reporting bias [44]. Despite these measures, non-compliance with registration policies and selective outcome reporting remain significant problems. Mathieu et al. found that fewer than half of the trials they assessed were adequately registered before completion, and over 30% of the adequately registered trials showed discrepancies between registered and published outcomes [38]. These findings highlight a concerning reality in clinical research: a lack of accountability diminishes research quality. In our study, 55% of neurology journals required trial registration, suggesting reluctance to fully endorse adequate registration requirements. This reluctance is concerning because journals have both a professional duty to guide authors toward conducting comprehensive research and an ethical obligation to protect the well-being of patients and trial participants. Having compared our findings with the existing literature, we believe that journals bear a greater share of this responsibility than authors do.

Based on our findings, we suggest that journals encourage authors to submit a completed checklist verifying RG adherence before their work is accepted for publication. Journal editors should also consider providing constructive feedback to authors whose submissions do not adequately meet the journal's expectations. We also recommend that journals update their Instructions for Authors pages regularly and make it easy for authors to locate the journal's expectations regarding RGs, the EQUATOR Network, and clinical trial registration. Finally, journals that do not currently endorse RGs or registration policies should reference the EQUATOR Network in their Instructions for Authors to help authors and reviewers report and evaluate scientific research.

Our study had several strengths. First, we conducted all screening and data extraction in a masked, duplicate fashion, an approach recommended by Cochrane to mitigate the potential for bias and errors [45]. Second, we followed a protocol developed a priori and adhered to guidance for clear reporting of observational studies [31, 42, 46]. Third, to promote transparency and reproducibility, we uploaded our protocol, raw data, extraction forms, STROBE checklist, analysis scripts, and standardized email prompts to OSF [37]. However, our study also has limitations. Some journal editors did not respond to our inquiries, which made it difficult to confirm whether certain study designs were accepted for publication. Additionally, some webpages were outdated, creating uncertainty about whether those journals still accepted certain study designs. Prospective authors may face similar difficulties when trying to understand journal expectations before submitting their articles. Although higher-impact journals might be expected to publish higher-quality articles, our study did not directly assess authors' uptake of RGs or trial registration in published articles, nor did it statistically correlate journal impact factor with the quality of published articles. This limitation restricts our ability to determine whether higher-impact journals achieve better rates of RG reporting and mandatory trial registration. Future research should quantitatively assess this correlation to provide more concrete evidence on the influence of impact factor on research quality.
Lastly, because this study was cross-sectional and limited to neurology journals, our findings reflect journal policies at a single point in time, may not be generalizable to other fields of medicine, and should be interpreted within this context.

Conclusion

In conclusion, our study found that a majority of the top 100 clinical neurology journals required or recommended adherence to RGs and trial registration policies. However, our analysis also identified significant shortcomings in journals' endorsement of these standards. To address this issue, we recommend that journals take a proactive approach to publishing by developing policies that encourage adherence to RGs and trial registration. Ultimately, promoting these policies may improve research quality in clinical neurology and result in better outcomes for patients.

Data availability

To ensure transparency and reproducibility of our study, we uploaded the protocol, raw data, extraction forms, STROBE checklist, analysis scripts, and standardized email prompts to Open Science Framework (OSF): https://osf.io/wrtke/.

References

GBD 2017 US Neurological Disorders Collaborators, Feigin VL, Vos T, et al. Burden of neurological disorders across the US from 1990–2017: A Global Burden of Disease Study. JAMA Neurol. 2021;78(2):165–76.

Gooch CL, Pracht E, Borenstein AR. The burden of neurological disease in the United States: a summary report and call to action. Ann Neurol. 2017;81(4):479–84.

Kaji R. Global burden of neurological diseases highlights stroke. Nat Rev Neurol. 2019;15(7):371–2.

R43341.pdf. https://sgp.fas.org/crs/misc/R43341.pdf

Rauh S, Torgerson T, Johnson AL, Pollard J, Tritz D, Vassar M. Reproducible and transparent research practices in published neurology research. Res Integr Peer Rev. 2020;5:5.

Howard B, Scott JT, Blubaugh M, Roepke B, Scheckel C, Vassar M. Systematic review: Outcome reporting bias is a problem in high impact factor neurology journals. PLoS ONE. 2017;12(7):e0180986.

Ioannidis JPA. Why most published research findings are false. PLoS Med. 2005;2(8):e124.

Munafò MR, Nosek BA, Bishop DVM, et al. A manifesto for reproducible science. Nat Hum Behav. 2017;1:0021.

Landis SC, Amara SG, Asadullah K, et al. A call for transparent reporting to optimize the predictive value of preclinical research. Nature. 2012;490(7419):187–91.

Gerrits RG, van den Berg MJ, Kunst AE, Klazinga NS, Kringos DS. Reporting health services research to a broader public: an exploration of inconsistencies and reporting inadequacies in societal publications. PLoS ONE. 2021;16(4):e0248753.

Prasad V, Ioannidis JP. Evidence-based de-implementation for contradicted, unproven, and aspiring healthcare practices. Implement Sci. 2014;9:1.

Samanta D, Landes SJ. Implementation science to Improve Quality of Neurological Care. Pediatr Neurol. 2021;121:67–74.

What is a reporting guideline? Accessed January 5, 2023. https://www.equator-network.org/about-us/what-is-a-reporting-guideline/

Moher D, Jones A, Lepage L, CONSORT Group (Consolidated Standards for Reporting of Trials). Use of the CONSORT statement and quality of reports of randomized trials: a comparative before-and-after evaluation. JAMA. 2001;285(15):1992–5.

Turner L, Shamseer L, Altman DG, Schulz KF, Moher D. Does use of the CONSORT Statement impact the completeness of reporting of randomised controlled trials published in medical journals? A Cochrane review. Syst Rev. 2012;1:60.

Nawijn F, Ham WHW, Houwert RM, Groenwold RHH, Hietbrink F, Smeeing DPJ. Quality of reporting of systematic reviews and meta-analyses in emergency medicine based on the PRISMA statement. BMC Emerg Med. 2019;19(1):19.

Cobo E, Cortés J, Ribera JM, et al. Effect of using reporting guidelines during peer review on quality of final manuscripts submitted to a biomedical journal: masked randomised trial. BMJ. 2011;343:d6783.

Grant S, Montgomery P, Hopewell S, Macdonald G, Moher D, Mayo-Wilson E. Developing a Reporting Guideline for Social and psychological intervention trials. Res Soc Work Pract. 2013;23(6):595–602.

Simera I, Moher D, Hoey J, Schulz KF, Altman DG. The EQUATOR Network and reporting guidelines: helping to achieve high standards in reporting health research studies. Maturitas. 2009;63(1):4–6.

Innocenti T, Salvioli S, Giagio S, Feller D, Cartabellotta N, Chiarotto A. Declaration of use and appropriate use of reporting guidelines in high-impact rehabilitation journals is limited: a meta-research study. J Clin Epidemiol. 2021;131:43–50.

Tan WK, Wigley J, Shantikumar S. The reporting quality of systematic reviews and meta-analyses in vascular surgery needs improvement: a systematic review. Int J Surg. 2014;12(12):1262–5.

Why should I register and submit results? Accessed August 2, 2023. https://clinicaltrials.gov/ct2/manage-recs/background

McFadden E, Bashir S, Canham S, et al. The impact of registration of clinical trials units: the UK experience. Clin Trials. 2015;12(2):166–73.

Lindsley K, Fusco N, Li T, Scholten R, Hooft L. Clinical trial registration was associated with lower risk of bias compared with non-registered trials among trials included in systematic reviews. J Clin Epidemiol. 2022;145:164–73.

Viergever RF, Ghersi D. The quality of registration of clinical trials. PLoS ONE. 2011;6(2):e14701.

International Clinical Trials Registry Platform (ICTRP). Accessed August 2, 2023. https://www.who.int/clinical-trials-registry-platform

ICMJE. Accessed August 2, 2023. https://www.icmje.org/recommendations/browse/publishing-and-editorial-issues/clinical-trial-registration.html

Sims MT, Checketts JX, Wayant C, Vassar M. Requirements for trial registration and adherence to reporting guidelines in critical care journals: a meta-epidemiological study of journals’ instructions for authors. JBI Evid Implement. 2018;16(1):55.

Hooft L, Korevaar DA, Molenaar N, Bossuyt PMM, Scholten RJPM. Endorsement of ICMJE’s clinical Trial Registration Policy: a survey among journal editors. Neth J Med. 2014;72(7):349–55.

Kunath F, Grobe HR, Keck B, et al. Do urology journals enforce trial registration? A cross-sectional study of published trials. BMJ Open. 2011;1(2):e000430.

STROBE. Checklists. Accessed July 31, 2023. https://www.strobe-statement.org/checklists/

Baas J, Schotten M, Plume A, Côté G, Karimi R. Scopus as a curated, high-quality bibliometric data source for academic research in quantitative science studies. Quant Sci Stud. 2020;1(1):377–86.

Vine R. Google Scholar. J Med Libr Assoc. 2006;94(1):97.

Jackson JL, Kuriyama A, Anton A, et al. The Accuracy of Google Translate for Abstracting Data from Non-english-language trials for systematic reviews. Ann Intern Med. 2019;171(9):677–9.

Google Translate. Accessed January 8, 2023. https://translate.google.com/

Harrison S, Henderson J, Alderdice F, Quigley MA. Methods to increase response rates to a population-based maternity survey: a comparison of two pilot studies. BMC Med Res Methodol. 2019;19(1):65.

Nees D, Young A, Hughes G, et al. Endorsement of reporting guidelines and clinical trial registration by clinical journals. Published online May 12, 2023. Accessed August 22, 2023. https://osf.io/wrtke/

Mathieu S, Boutron I, Moher D, Altman DG, Ravaud P. Comparison of registered and published primary outcomes in randomized controlled trials. JAMA. 2009;302(9):977–84.

Simera I, Moher D, Hirst A, Hoey J, Schulz KF, Altman DG. Transparent and accurate reporting increases reliability, utility, and impact of your research: reporting guidelines and the EQUATOR Network. BMC Med. 2010;8:24.

Altman DG, Simera I. Using reporting guidelines effectively to ensure good reporting of health research. Guidelines for reporting Health Research: a user’s Manual. John Wiley & Sons, Ltd; 2014. pp. 32–40.

Checketts JX, Sims MT, Detweiler B, Middlemist K, Jones J, Vassar M. An evaluation of reporting guidelines and clinical Trial Registry requirements among orthopaedic surgery journals. J Bone Joint Surg Am. 2018;100(3):e15.

Wayant C, Moore G, Hoelscher M, Cook C, Vassar M. Adherence to reporting guidelines and clinical trial registration policies in oncology journals: a cross-sectional review. BMJ Evid Based Med. 2018;23(3):104–10.

Weber WEJ, Merino JG, Loder E. Trial registration 10 years on. BMJ. 2015;351:h3572.

FDAAA 801 and the Final Rule. Accessed August 21, 2023. https://classic.clinicaltrials.gov/ct2/manage-recs/fdaaa

Higgins JPT, Thomas J, Chandler J, et al. Cochrane Handbook for Systematic Reviews of Interventions. Wiley; 2019.

Cuschieri S. The STROBE guidelines. Saudi J Anaesth. 2019;13(Suppl 1):S31–4.

Acknowledgements

We are grateful to Jon Goodell, M.A., AHIP and the OSU medical library for their procurement of relevant journal information.

Funding

This study was not funded.

Author information

Contributions

A.V.T. contributed to Investigation, Manuscript Writing, Review & Editing, and Visualization (submission of images for figure preparation). J.K.S. and Z.E. were involved in Investigation and Manuscript Writing. C.A.S. conceptualized the study, developed methodology, provided software, conducted formal analysis, and curated data. D.N. contributed to conceptualization and methodology. G.K.H. provided software, conducted formal analysis, and curated data. M.V. supervised the project and managed administration. All authors reviewed and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

No financial or other sources of support were provided during the development of this manuscript. Dr. Vassar reports receipt of funding from the National Institute on Drug Abuse, the National Institute on Alcohol Abuse and Alcoholism, the US Office of Research Integrity, Oklahoma Center for Advancement of Science and Technology, and internal grants from Oklahoma State University Center for Health Sciences — all outside of the present work. All other authors have nothing to report.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Tran, A.V., Stadler, J.K., Ernst, Z. et al. Evaluating guideline and registration policies among neurology journals: a cross-sectional analysis. BMC Neurol 24, 321 (2024). https://doi.org/10.1186/s12883-024-03839-1

DOI: https://doi.org/10.1186/s12883-024-03839-1