Abstract

Background

The successful integration of artificial intelligence (AI) in healthcare depends on the global perspectives of all stakeholders. This study aims to answer the research question: What are the attitudes of medical, dental, and veterinary students towards AI in education and practice, and what are the regional differences in these perceptions?

Methods

An anonymous online survey was developed based on a literature review and expert panel discussions. Using Likert scales, it assessed students' technological literacy, AI knowledge, the current state of AI education, preferences for AI teaching, and attitudes towards AI in healthcare. The final survey consisted of 15 multiple-choice items, eight demographic queries, and one free-field comment section. Medical, dental, and veterinary students from various countries were invited to participate via faculty newsletters and courses. Data were analyzed using descriptive statistics, the Mann–Whitney U-test, the Kruskal–Wallis test, and the Dunn-Bonferroni post hoc test.

Results

The survey included 4313 medical, 205 dentistry, and 78 veterinary students from 192 faculties and 48 countries. Most participants were from Europe (51.1%), followed by North/South America (23.3%) and Asia (20.5%). Students reported positive attitudes towards AI in healthcare (median: 4, IQR: 3–4) and a desire for more AI teaching (median: 4, IQR: 4–5). However, they reported limited AI knowledge (median: 2, IQR: 2–2), a lack of curricular AI events (76.3%), and feeling unprepared to use AI in their careers (median: 2, IQR: 1–3). Subgroup analyses revealed significant differences between the Global North and South (r = 0.025 to 0.185, all P < .001) and across continents (r = 0.301 to 0.531 for the pairwise comparisons with medium to large effects, all P < .001), although most effect sizes were small.

Conclusions

This large-scale international survey highlights medical, dental, and veterinary students' positive perceptions of AI in healthcare, their strong desire for AI education, and the current lack of AI teaching in medical curricula worldwide. The study identifies a need for integrating AI education into medical curricula, considering regional differences in perceptions and educational needs.

Trial registration

Not applicable (no clinical trial).

Background

The popularity of artificial intelligence (AI) in healthcare has exponentially risen in recent years, attracting the attention of professionals and students alike [1, 2]. The emergence of large language models like ChatGPT has further expanded AI’s potential in medicine, offering new possibilities for clinical applications and medical training [3, 4]. AI has demonstrated expert-level performance in various medical domains, including breast cancer screening, chest radiograph interpretation, and prediction of treatment outcomes [5,6,7,8].

The increasing prevalence of AI in healthcare necessitates its incorporation into medical education. AI offers numerous potential benefits for medical training, such as enhancing understanding of complex concepts, providing personalized learning experiences, and simulating clinical scenarios [9,10,11,12]. Moreover, familiarizing medical students with AI tools and technologies prepares them for the realities of their future professional lives [13, 14]. However, the integration of AI also raises significant ethical challenges, including concerns about patient autonomy, beneficence, non-maleficence, and justice [9, 15, 16].

Existing literature has primarily focused on the technical aspects of AI in medicine or its potential applications in specific medical specialties [17]. Other studies have explored healthcare professionals' perceptions of AI, but these have been limited by small sample sizes and lack of geographic diversity [17]. This gap in the literature precludes a comprehensive understanding of how future healthcare professionals across different regions perceive and prepare for AI integration in their fields.

This multicenter study addresses this gap by investigating the perspectives of medical, dental, and veterinary students on AI in their education and future practice across multiple countries. Specifically, we examine: 1) students' technological literacy and AI knowledge, 2) the current state of AI in their curricula, 3) their preferences for AI education, and 4) their attitudes towards AI's role in their fields. By exploring regional differences on a large, international scale, this study offers a unique comparative overview of students' perceptions worldwide.

Methods

This multicenter cross-sectional study was conducted in accordance with the Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement and received ethical approval from the Institutional Review Board at Charité – University Medicine Berlin (EA4/213/22), serving as the principal institution, in compliance with the Declaration of Helsinki and its later amendments [18, 19]. To ensure participant anonymity, the requirement for informed consent was waived.

Instrument development and design

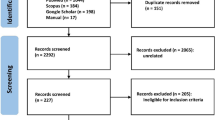

Following the Association for Medical Education in Europe (AMEE) guide, we aimed to develop an anonymous online survey assessing 1) the technological literacy and knowledge of informatics and AI, 2) the current state of AI in their respective curricula and preferences for AI education, and 3) the perspectives towards AI in the medical profession among international medicine, dentistry, and veterinary medicine students [20]. To inform instrument development, four reviewers (FB, LH, KKB, LCA) independently performed a literature review of existing publications on medical students' attitudes towards AI in medicine, searching the MEDLINE, Scopus, and Google Scholar databases in December 2022. Studies were selected for review based on the following criteria: 1) the publications were original research articles, 2) the scope aligned with our research objectives and targeted medical students, 3) the survey was conducted in English, 4) the items were publicly accessible, and 5) the measurement of perspectives towards AI was not restricted to a particular medical subfield. Following these criteria, five articles comprising a total of 96 items were identified as relevant to the research scope [21,22,23,24,25]. After a consensus-based discussion, items that did not match our research objectives or overlapped in content were excluded, resulting in 23 remaining items. These items were subsequently tailored to fit the context of medical education and the medical profession.

A review cycle was undertaken with a focus group of medical AI researchers and students, as well as an expert panel including physicians, medical faculty members and educators, AI researchers and developers, and biomedical statisticians (FB, LH, DT, MRM, KKB, LCA, AB, RC, GDV, AH, LJ, AL, PS, LX). The resulting draft survey consisted of 16 multiple-choice items, eight demographic queries, and one free-field comment section. These items were further refined based on content-based domain samples, and responses were standardized using a four- or five-point Likert scale where applicable.

The preliminary assessment was conducted through cognitive interviews with ten medical students at Charité – University Medicine Berlin to evaluate the comprehensibility and overall length of the survey. The feedback resulted in two rewordings and one item removal, yielding a final survey of 15 multiple-choice items, eight demographic queries, and one free-field comment section. The final questionnaire items and response options can be viewed in Table 1.

Using REDCap (Research Electronic Data Capture) hosted at Charité – University Medicine Berlin, the English survey was subsequently disseminated through the medical student newsletter at Charité and deactivated after receiving responses from 50 medical students, who served as the pilot study group and were not included in the final participant pool [26, 27]. After psychometric validation, participating sites distributed the REDCap online survey among medical, dental, and veterinary students at their faculty. Due to the large number of Spanish-speaking sites, a separate Spanish online version of the survey was created using paired forward and backward translation with reconciliation by two bilingual medical professionals (LG, JSPO). Depending on their faculty location, participating sites distributed either the English or Spanish online survey via their faculty newsletters and courses using a QR code or the direct website link (non-probability convenience sampling). The survey was available for participation from April to October 2023.

Our data collection methodology was designed to mitigate several risks related to privacy, confidentiality, consent, transparency of recruitment, and minimization of harm, as highlighted previously [28]. By using faculty newsletters and course distributions, we reduced the exposure of personal information on social media platforms, thereby maintaining a higher level of privacy. This method ensured that our participants' identities and responses were not publicly available or exposed to wider online networks. To further secure the data, the survey platform was selected for its robust security features, including data encryption and secure storage. We explicitly informed participants about how their data would be used and protected, ensuring transparency and building trust.

Distributing the survey through official academic channels, such as faculty newsletters, implied a degree of formality and oversight, increasing the likelihood that participants were adequately informed of the study's intentions. The first page of the survey detailed the purpose of the study, the use of data, and participants' rights, and participants had to indicate their understanding and agreement by ticking an 'I agree' box before proceeding.

Using institutional channels for distribution provided a transparent and credible recruitment process that was likely to reach a relevant and engaged audience. We ensured that participants were aware that their participation was completely voluntary and that they could withdraw from the study at any time without penalty. We also provided contact details for participants to ask questions about the study, promoting openness and trust.

By avoiding the use of social media for recruitment, we eliminated the risk of participants' responses being exposed to their social networks, thereby protecting their privacy and reducing potential social risks. The content of the survey was carefully reviewed to ensure that no questions could cause distress or harm to participants. Participants were informed that they could skip any questions they felt uncomfortable answering, ensuring their well-being and autonomy throughout the survey process.

Inclusion and exclusion criteria

Inclusion criteria consisted of students at least 18 years of age, actively enrolled in a (human) medicine, dentistry, or veterinary medicine degree program, who responded to the survey during its open period and were proficient in either English or Spanish, depending on their faculty location. Participants had to confirm their enrollment in a relevant program and enter their age to verify that they were at least 18 years old. Only those meeting these criteria could proceed with the survey. Respondents who started the survey but did not answer any multiple-choice items were excluded from the analysis. Respondents with missing answers to a given item were excluded only from the subanalyses involving that item.

Statistical analysis

Statistical analyses were performed with IBM SPSS Statistics (version 28.0.1.0) and R (version 4.2.1), using the "tidyverse", "rnaturalearth", and "sf" packages [29,30,31,32]. The Kolmogorov–Smirnov test was used to test for normal distribution. Categorical and ordinal data were reported as frequencies with percentages. Medians and interquartile ranges (IQR) were reported for non-normally distributed continuous data, and variances were reported for items in Likert scale format. Because official data on the number of enrolled medical, dentistry, or veterinary students were unavailable, the response rate was derived from the overall student enrollment numbers at each faculty according to the faculty websites or the Times Higher Education World University Rankings 2024. In the pilot study group, item reliability was measured using Cronbach's α, with values above 0.7 interpreted as acceptable internal consistency. Exploratory factor analysis was used to examine the structure and subscales of the instrument, using an eigenvalue cutoff of 1 for factor extraction. Items with factor loadings of 0.4 or higher were retained. Data suitability for structural evaluation was assessed using the Kaiser–Meyer–Olkin measure and Bartlett's test of sphericity. For geographical subgroup analysis, respondents were categorized based on their faculty location (Global North versus Global South) according to the United Nations' Finance Center for South-South Cooperation [33]. Additionally, participants were grouped into continents based on the United Nations geoscheme [34]. Due to the substantial number of European participants, students in North/West and South/East Europe were analyzed separately. Further subgroup analyses based on gender, age, academic year, technological literacy, self-reported AI knowledge, and previous curricular AI events can be found in the appendix (see Supplementary Tables 1–7). The Mann–Whitney U-test was employed for subgroup analyses of two independent non-parametric samples. For continental comparison, the Kruskal–Wallis one-way analysis of variance and Dunn-Bonferroni post hoc test were performed. To estimate effect size, we calculated r, with 0.5 indicating a large effect, 0.3 a medium effect, and 0.1 a small effect [35]. An asymptotic two-sided P-value below 0.05 was considered statistically significant.
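The two-group comparisons described above were run in SPSS; the following is only a minimal Python sketch, under stated assumptions, of a Mann–Whitney U-test with the asymptotic normal approximation, a tie correction (Likert data contain many ties), and the effect size r = |Z|/√N. It assumes no continuity correction, and the function names are hypothetical.

```python
import math
from collections import Counter


def average_ranks(values):
    """Return 1-based ranks; tied values receive the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of tied values starting at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_r(group_a, group_b):
    """Mann-Whitney U with a tie-corrected normal approximation (hypothetical helper).

    Returns (U for group_a, z, two-sided asymptotic p, effect size r).
    """
    pooled = list(group_a) + list(group_b)
    n1, n2 = len(group_a), len(group_b)
    n = n1 + n2
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    # Tie correction term: sum of (t^3 - t) over each set of tied values.
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    z = (u1 - n1 * n2 / 2) / math.sqrt(var_u)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    r = abs(z) / math.sqrt(n)  # effect size r = |Z| / sqrt(N)
    return u1, z, p, r
```

A call such as `mann_whitney_r(north_scores, south_scores)` (hypothetical list names) returns the statistics for one item. The sketch assumes at least two distinct response values in the pooled data; otherwise the variance is zero and the test is undefined.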

Results

Pilot study

The median age of the pilot study group was 24 years (IQR: 21–26 years). 58% of participants identified as female (n = 29), 38% as male (n = 19), and 4% (n = 2) did not report their gender. The median current academic year was 2 (IQR: 2–4 years) out of 6 total academic years. Internal consistency for our scale's dimensions ranged from acceptable to good, as indicated by Cronbach's α. The section on "Technological literacy and knowledge of informatics and AI" registered an α of 0.718, while the section "Current state of AI in the curriculum and preferences for AI education" scored an α of 0.726, both displaying acceptable internal consistency. A Cronbach's α of 0.825 for the "Perspectives towards AI in the medical profession" section denoted good internal consistency. The Kaiser–Meyer–Olkin measure of sampling adequacy was 0.801, indicating that the data were suitable for factor analysis. Bartlett's test of sphericity returned a P-value of less than 0.001, indicating sufficient correlation between items to support factor analysis. Factor analysis yielded a structure comprising 15 items across three dimensions, collectively explaining 54% of the total variance. Factor loadings for individual items ranged from 0.495 for "Which of these technical devices do you use at least once a week?" to 0.888 for "What is your general attitude toward the application of artificial intelligence (AI) in medicine?".
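The reliability values above follow the standard Cronbach's α formula. A minimal sketch, assuming complete responses (no missing items) arranged as one row per respondent and one column per item; the function name and toy data are hypothetical and do not reproduce the pilot data.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a respondents x items table (list of equal-length rows).

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    items = list(zip(*rows))  # transpose: one tuple of scores per item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical 4 respondents x 3 items on a five-point Likert scale.
toy = [[1, 2, 3], [2, 3, 4], [3, 4, 5], [4, 5, 5]]
alpha = cronbach_alpha(toy)
print(f"alpha = {alpha:.3f}", "acceptable" if alpha > 0.7 else "below 0.7")
```

Sample variances (n − 1 denominator) are used for both the items and the total score; because the same denominator appears in numerator and denominator of the ratio, population variances would give the same α.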

Study cohort

Between the first of April and the first of October 2023, 4900 responses were recorded, of which 4345 (88.7%) were collected via the English survey and 555 (11.3%) via the Spanish survey version. Of these, 283 (5.8%) respondents reported degrees other than medicine, dentistry, or veterinary medicine or indicated that they had completed their studies, while 21 (0.4%) did not respond to any multiple-choice item or did not indicate their degree. The final study cohort comprised 4596 participants from 192 faculties and 48 countries, of whom 4313 (93.8%) were medical, 205 (4.5%) dentistry, and 78 (1.7%) veterinary medicine students. Of 5,575,307 enrolled students from all degrees at the 183 (95.3%) participating faculties for which the total enrollment number was publicly available, the survey achieved an average response rate of 0.2% (standard deviation: 0.4%). Most respondents studied in Southern/Eastern European countries (n = 1240, 27%), followed by Northern/Western Europe (n = 1110, 24.2%), Asia (n = 944, 20.5%), South America (n = 555, 12.1%), North America (n = 515, 11.2%), Africa (n = 125, 2.7%), and Australia (n = 104, 2.3%). Please refer to Fig. 1 to view the distribution of participating institutions in relation to the number of participants on a world map. A detailed list of survey participants divided by country, faculty, city, degree, number of enrolled students, and response rate is provided in the appendix (see Supplementary Table 8). The median age of the study population was 22 years (IQR: 20–24 years). 56.6% of the participants were female (n = 2600) and 42.4% male (n = 1946), with a median academic year of 3 (IQR: 2–5 years). Full descriptive data, including items on technological literacy and preferences for AI teaching in the medical curriculum, are displayed in Table 2. All free-field comments of the survey participants are listed in the appendix (see Supplementary Table 9), with selected comments highlighted in Fig. 2.
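The average response rate reported above is a mean across faculties. A minimal sketch of that calculation, assuming one (respondents, total enrollment) pair per faculty and skipping faculties whose enrollment is unknown (as for the 9 of 192 faculties without public data); the function name and data are hypothetical. It also returns the pooled rate, which weights large faculties more heavily and generally differs from the per-faculty mean.

```python
from statistics import mean, stdev


def response_rates(faculties):
    """Summarize response rates from (respondents, enrolled) pairs.

    Faculties with unknown enrollment (None) are skipped. Returns the
    per-faculty mean rate, its sample standard deviation, the pooled rate
    (all respondents / all enrolled), and the number of faculties used.
    """
    known = [(resp, enrolled) for resp, enrolled in faculties if enrolled]
    rates = [resp / enrolled for resp, enrolled in known]
    pooled = sum(r for r, _ in known) / sum(e for _, e in known)
    return mean(rates), stdev(rates), pooled, len(rates)


# Hypothetical faculties: (survey respondents, total enrolled students).
sample = [(50, 10_000), (10, 5_000), (5, None)]
avg, sd, pooled, used = response_rates(sample)
print(f"mean {avg:.2%} (SD {sd:.2%}), pooled {pooled:.2%}, faculties used: {used}")
```

`stdev` needs at least two faculties with known enrollment. Because the denominator is total enrollment across all degrees, both rates understate participation among the targeted students.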

Fig. 2. Diverse perspectives from medical students on the integration of artificial intelligence (AI) in healthcare education and practice. The selected quotes reflect a range of sentiments, from concerns about dehumanization and potential challenges in low-resource settings to viewing AI as a beneficial tool that complements rather than replaces the human touch in medicine.

Collective perceptions towards artificial intelligence

Table 3 displays the survey results for Likert scale items. Most students reported a rather or extremely positive attitude towards the application of AI in medicine (3091, 67.6%). The most positive responses regarding AI in the medical profession were recorded for the item "How do you estimate the effect of artificial intelligence (AI) on the efficiency of healthcare processes in the next 10 years?", with 4042 respondents (88.4%) expecting a moderate or great improvement. At the same time, 3171 students (69.4%) rather or completely agreed with the item "The use of artificial intelligence (AI) in medicine will increasingly lead to legal and ethical conflicts." Regarding AI education and knowledge, 3451 students (75.3%) reported no or little knowledge of AI, and 3474 (76.1%) rather or completely agreed that they would like more teaching on AI in medicine as part of their curricula. However, 3497 (76.3%) students responded that they did not have any curricular events on AI as part of their degree, as illustrated at the country level in Fig. 3. Response variance ranged from 0.279 for the item "How would you rate your general knowledge of artificial intelligence (AI)?" (measured on a four-point Likert scale) to 1.372 for "With my current knowledge, I feel sufficiently prepared to work with artificial intelligence (AI) in my future profession as a physician." Notably, the items capturing trade-offs in medical AI diagnostics revealed that most students preferred AI explainability (n = 3659, 80.2%) over higher accuracy (n = 902, 19.8%), and higher sensitivity (n = 2906, 63.9%) over higher specificity (n = 1118, 24.6%) or equal sensitivity/specificity (n = 524, 11.5%), as visualized in Fig. 4.
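A single Likert item can be summarized with the statistics reported here (median, IQR, variance, and the share of respondents in the top two categories, such as "rather or completely agree"). The sketch below is illustrative only: the quartile method is an assumption (Python's default "exclusive" method interpolates at (n + 1)p, and other software conventions can yield slightly different IQR bounds), skipped answers are marked as None and dropped, and the function name and data are hypothetical.

```python
from statistics import median, quantiles, variance


def summarize_likert(responses, top_box=(4, 5)):
    """Summarize one Likert item; None marks a skipped question."""
    answered = [r for r in responses if r is not None]
    q1, _, q3 = quantiles(answered, n=4)  # default method="exclusive"
    top_share = sum(r in top_box for r in answered) / len(answered)
    return {
        "n": len(answered),
        "median": median(answered),
        "iqr": (q1, q3),
        "variance": variance(answered),  # sample variance (n - 1 denominator)
        "top_two_share": top_share,
    }


# Hypothetical five-point responses (1 = completely disagree ... 5 = completely agree).
item = [1, 2, 2, 3, 4, 4, 5, 5, None]
print(summarize_likert(item))
```

For a four-point item, such as self-rated AI knowledge, `top_box` would be set to the two highest categories of that scale, for example `(3, 4)`.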

Fig. 3. Pie charts illustrating student responses at the country level for the item "As part of my studies, there are curricular events on artificial intelligence (AI) in medicine.". A more filled, darker red chart indicates a higher proportion of students reporting no AI events, while a less filled, greener chart indicates fewer students reporting the absence of AI events. The missing portion of each chart displays the proportion of students who reported AI events, regardless of their duration. An all-white pie chart indicates that all students reported AI events in the medical curriculum. The absolute number of responses per country is shown above each chart. Among the 28 countries with at least 50 respondents, only four (Indonesia, Switzerland, Vietnam, and China) had more than 50% of students reporting AI events within their medical curriculum. Data from the USA displayed an equal proportion of students reporting the presence or absence of AI events in their curriculum (50% each). The remaining 23 countries, encompassing Germany, Portugal, Mexico, Brazil, Poland, UAE, Austria, Italy, India, Argentina, Macedonia, Canada, Slovenia, Ecuador, Australia, Azerbaijan, Japan, Spain, Chile, Moldova, South Africa, Nepal, and Nigeria, had a lower proportion of students reporting the integration of AI in the medical curriculum. Abbreviations: UAE, United Arab Emirates; USA, United States of America

Regional comparisons

Please refer to Table 4 to view the results of the comparison of responses from the Global North and South for Likert scale format items. Perceptions between the Global North and South differed significantly for nine Likert scale format items. The largest effect size was observed for the item on AI increasing ethical and legal conflicts, with respondents from the Global North indicating higher agreement (median: 4, IQR: 3–5) than those from the Global South (median: 4, IQR: 3–4; r = 0.185; P < 0.001). Notably, Global South students felt more prepared to use AI in their future practice (median: 3, IQR: 2–4) than their Global North counterparts (median: 2, IQR: 1–3; r = 0.162; P < 0.001) and reported longer AI-related curricular events (median: 1, IQR: 1–2; Global North: median: 1, IQR: 1–1; r = 0.090; P < 0.001). Conversely, Global North students rated their AI knowledge higher (median: 2, IQR: 2–3; Global South: median: 2, IQR: 2–2; r = 0.025; P < 0.001).

For continental comparison, the Kruskal–Wallis one-way analysis of variance revealed significant differences in Likert scale responses across continents for all survey items (see Table 5). Subsequent Dunn-Bonferroni post hoc analysis showed various significant differences in pairwise regional comparisons, while medians and IQRs remained largely consistent. Considering only medium to large effect sizes, the item "The use of artificial intelligence (AI) in medicine will increasingly lead to legal and ethical conflicts." yielded an r of 0.301 when comparing Northern/Western European (median: 4, IQR: 4–5) and South American participants (median: 4, IQR: 3–4; P < 0.001), and an r of 0.311 between South American and Australian participants (median: 4, IQR: 4–5; P < 0.001). Similarly, the statement "With my current knowledge, I feel sufficiently prepared to work with artificial intelligence (AI) in my future profession as a physician." displayed medium to large effect sizes in comparisons between North/West Europe (median: 2, IQR: 1–2) and Asia (median: 3, IQR: 2–4; r = 0.531; P < 0.001), South/East Europe (median: 2, IQR: 2–3) and Asia (r = 0.342; P < 0.001), and South America (median: 2, IQR: 2–3) and Asia (r = 0.398; P < 0.001).
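The continental comparison combines an omnibus test with pairwise post hoc tests. The following is a minimal sketch, not the SPSS implementation, of the Kruskal–Wallis H statistic with tie correction followed by Dunn's pairwise z-tests with Bonferroni-adjusted P-values. The per-pair effect size r = |z|/√(n_a + n_b) is an assumption, since the text does not state which sample size enters the denominator. The P-value of H itself would additionally require the chi-square distribution with k − 1 degrees of freedom (for example, from SciPy), which is omitted to keep the sketch dependency-free. Function names and data are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations


def average_ranks(values):
    """1-based ranks with ties assigned the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def kruskal_dunn(groups):
    """groups: dict mapping group name -> list of ordinal responses.

    Returns (tie-corrected H, {(a, b): (z, Bonferroni-adjusted p, r)}).
    """
    names = list(groups)
    pooled = [x for name in names for x in groups[name]]
    n = len(pooled)
    ranks = average_ranks(pooled)
    mean_rank, start = {}, 0
    for name in names:
        size = len(groups[name])
        mean_rank[name] = sum(ranks[start:start + size]) / size
        start += size
    tie_sum = sum(t ** 3 - t for t in Counter(pooled).values())
    h_raw = 12 / (n * (n + 1)) * sum(
        len(groups[g]) * mean_rank[g] ** 2 for g in names
    ) - 3 * (n + 1)
    h = h_raw / (1 - tie_sum / (n ** 3 - n))  # correction for tied ranks
    n_tests = len(names) * (len(names) - 1) // 2  # Bonferroni multiplier
    pairs = {}
    for a, b in combinations(names, 2):
        na, nb = len(groups[a]), len(groups[b])
        # Dunn's standard error for a difference in mean ranks, tie-adjusted.
        se = math.sqrt((n * (n + 1) / 12 - tie_sum / (12 * (n - 1))) * (1 / na + 1 / nb))
        z = (mean_rank[a] - mean_rank[b]) / se
        p_adj = min(1.0, math.erfc(abs(z) / math.sqrt(2)) * n_tests)
        pairs[(a, b)] = (z, p_adj, abs(z) / math.sqrt(na + nb))
    return h, pairs
```

With real data, each region (for example, the seven continental groups used here) would be one key in `groups`, and only pairs whose adjusted P-value is below 0.05 would be interpreted.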

Discussion

Our multicenter study of 4596 medical, dental, and veterinary students from 192 faculties in 48 countries provides crucial insights into the global landscape of AI perception and education in healthcare curricula. The findings reveal a nuanced picture: while students generally express optimism about AI’s role in future healthcare practice, this is tempered by significant concerns and a striking lack of preparedness.

Our study addresses a critical gap in AI education within medical curricula and explores how this deficiency varies across regions, particularly between continents and between the Global North and South. As AI rapidly advances and promises to reshape healthcare, the need for future physicians to be adequately prepared through comprehensive AI education becomes increasingly urgent. Beyond asserting the necessity of AI education, our study elucidates regional differences in healthcare students' perceptions of and experiences with AI.

Our findings extend previous research highlighting inadequacies in AI education in medical schools globally. Kolachalama and Garg [36] noted that AI is not widely taught in medical schools, with most curricula lacking substantial AI training modules. Chan and Zary [37] reinforced this, emphasizing the gap between recognizing AI’s potential benefits and actually integrating AI education into medical programs. Our study confirms these deficiencies on a larger, international scale, revealing that over three-quarters of students reported no AI-related events in their curriculum, despite strong interest in such education. Importantly, our research uncovers regional disparities in AI education and perception.

Students from the Global South reported longer AI-related curricular events and felt better prepared to work with AI than their counterparts in the Global North, whereas Global North students rated their AI knowledge higher. These discrepancies underscore the need for tailored educational strategies that consider regional differences to ensure equitable preparation for an AI-enhanced medical landscape. The observed differences in perceived preparedness for working with AI, particularly among Asian students, may reflect varying national AI policies, educational strategies, and macroeconomic factors [38, 39].

Depending on the study and item design, self-reported AI knowledge in the literature ranges from 2.8% of 2981 medical students in Turkey in 2022 who reported feeling informed about the use of AI in medicine to 51.8% of 900 medical students in Jordan in 2021 who indicated having read articles about AI or machine learning in the past two years [21, 40,41,42,43,44]. Likewise, the reported prevalence of AI training in the medical curriculum ranges, for instance, from 9.2% in a 2020 survey of 484 medical students in the United Kingdom to 24.4% in a 2022 study among 2981 medical students in Turkey, although variations in item designs and demographic contexts hinder a comprehensive longitudinal analysis [22, 40, 42, 43, 45]. In our study, only 5 of the 28 countries (17.9%) with at least 50 respondents had an equal or higher proportion of students reporting AI teaching of any duration compared with those reporting none, pointing to a persistent deficit in medical AI education across various demographic landscapes. Overall, the incorporation of AI into medical education on a broader national or international scale is limited, and the adoption of frameworks, certification programs, interdisciplinary collaborations, modules, and formal lectures still appears to be at an early stage [14, 46,47,48, 49].
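The country-level count in the preceding paragraph can be sketched as follows. This is an illustration only, assuming per-country tallies of students who did and did not report curricular AI events (respondents who skipped the item are excluded) and the 50-respondent threshold used for Fig. 3; the function names, country names, and counts are hypothetical.

```python
def classify_countries(tallies, min_respondents=50):
    """tallies: country -> (n reporting AI events, n reporting none).

    Returns eligible countries split by whether students reporting AI
    events are a majority, exactly half, or a minority of respondents.
    """
    groups = {"majority": [], "equal": [], "minority": []}
    for country, (with_ai, without_ai) in tallies.items():
        if with_ai + without_ai < min_respondents:
            continue  # too few respondents for a country-level summary
        if with_ai > without_ai:
            groups["majority"].append(country)
        elif with_ai == without_ai:
            groups["equal"].append(country)
        else:
            groups["minority"].append(country)
    return groups


def share_equal_or_higher(groups):
    """Fraction of eligible countries where at least half report AI events."""
    eligible = sum(len(v) for v in groups.values())
    return (len(groups["majority"]) + len(groups["equal"])) / eligible


# Hypothetical tallies; "Country D" falls below the threshold and is skipped.
demo = {"Country A": (40, 20), "Country B": (30, 30), "Country C": (10, 60), "Country D": (5, 5)}
groups = classify_countries(demo)
print(groups, f"{share_equal_or_higher(groups):.1%}")
```

With the counts described for Fig. 3 (four majority countries plus the USA at 50%, out of 28 eligible countries), the same ratio is 5/28, or about 17.9%.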

While our study design and varying sample sizes across regions complicate causal analysis, the fact that three of four countries with over 50% of students reporting AI training were in Asia suggests a potential link between educational exposure and perceived readiness.

Despite the overall positive outlook, our study reveals a pronounced concern among students about the ethical and legal challenges posed by AI integration in healthcare. This echoes findings from Mehta et al. and Civaner et al. [40, 50], highlighting the critical need for AI education to address not only technical skills but also ethical, legal, and societal implications.

In terms of educational preferences, most of the participants in our study indicated their interest in learning practical skills, followed by future perspectives and legal and ethical aspects of medical AI. This underscores the great potential of AI education to not only improve medical students' oversight, knowledge, and practical skills in using AI but also to educate about ethical, legal, and societal implications — topics that are also addressed in other AI education frameworks, such as the United Nations Educational, Scientific and Cultural Organization K-12 AI curricula report [51].

In our subgroup analysis of respondents across continents, two items displayed moderate to large effect sizes. First, participants from South America were less likely to agree that the use of medical AI will increase ethical and legal conflicts than participants from Northern/Western Europe and Australia. Yet, the median response was identical in these regions; thus, the effect size primarily reflects differences in the distribution of responses around the same median (IQR: 3–4 versus 4–5) rather than a difference in the typical response. Second, Asian students reported being better prepared to work with AI in their future careers. Although these differences in perceived preparedness could be driven by different national AI policies and educational strategies as well as macroeconomic factors, our study design and varying sample sizes across regions complicate a causal analysis [38, 39].

Finally, the strong preference for explainable AI systems over highly accurate but opaque ones reflects the growing emphasis on 'Explainable AI' in medicine and underlines the importance of transparency in fostering trust and acceptance among future healthcare professionals [52,53,54, 55].

This study has limitations. First, the uneven regional distribution of participants may have biased results in favor of overrepresented regions. In addition, the online design, the availability of the survey only in English or Spanish, and the non-probability convenience sampling method may have introduced selection bias by excluding students without internet access, students who were not proficient in either language, or students who did not wish to participate. Another potential source of selection bias is that respondents with a specific interest in or experience with AI may have been more likely to participate in the survey. Furthermore, the calculated response rate appears low because data on the number of students enrolled in each medical discipline were unavailable for most participating institutions. Consequently, we derived the response rate from total student enrollment numbers, which likely substantially underestimates the true participation rate among medical students, as it assumes that all students within each faculty received an invitation to participate. Moreover, the 20 institutions with fewer than 50 student respondents skewed the response rate further downward.

Conclusions

In conclusion, our study, currently the largest survey of medical students' perceptions towards AI in healthcare education and practice, reveals a broadly optimistic view of AI's role in healthcare. Drawing on insights from students with diverse geographical, sociodemographic, and cultural backgrounds, it underlines the critical need for AI education in medical curricula around the world and identifies a universal challenge and opportunity: to adeptly prepare healthcare students for a future that integrates AI into healthcare practice.

Availability of data and materials

All data collected and analyzed as part of this study are available on figshare at: https://doi.org/10.6084/m9.figshare.24422422.

Abbreviations

- AI: Artificial intelligence
- AMEE: Association for Medical Education in Europe
- ChatGPT: Chat Generative Pre-trained Transformer
- IQR: Interquartile range
- LLM: Large language model
- REDCap: Research Electronic Data Capture
- STROBE: Strengthening the Reporting of Observational Studies in Epidemiology
- USMLE: United States Medical Licensing Examination

Acknowledgements

Members of the COMFORT consortium (alphabetically listed by surname):

| First name, middle initials (if applicable), last name | Affiliation | ORCID |
|---|---|---|
| Nitamar, Abdala | Department of Radiology, Federal University of São Paulo, São Paulo, Brazil | 0000-0002-0421-0959 |
| Álvaro, Aceña Navarro | Department of Cardiology, Hospital Universitario Fundación Jiménez Díaz, Madrid, Spain; Department of Medicine, Universidad Autónoma de Madrid, Madrid, Spain | 0000-0002-5975-5761 |
| Hugo, J.W.L., Aerts | Artificial Intelligence in Medicine (AIM) Program, Mass General Brigham, Harvard Medical School, Boston, MA, USA; Department of Radiation Oncology and Radiology, Dana-Farber Cancer Institute and Brigham and Women's Hospital, Boston, MA, USA; Radiology and Nuclear Medicine, CARIM & GROW, Maastricht University, Maastricht, The Netherlands | 0000-0002-2122-2003 |
| Catarina, Águas | Radiology Department, University of Algarve, Faro, Portugal | 0000-0002-1575-6367 |
| Martina, Aineseder | Radiology Department, Hospital Italiano de Buenos Aires, Ciudad Autónoma de Buenos Aires, Argentina | 0000-0002-8733-856 |
| Muaed, Alomar | Department of Clinical Sciences, College of Pharmacy and Health Sciences, Ajman University, Ajman, UAE | 0000-0001-6526-2253 |
| Salita, Angkurawaranon | Department of Radiology, Faculty of Medicine, Chiang Mai University, Chiang Mai, Thailand | 0000-0001-6211-5717 |
| Zachary, G., Angus | Centre for Eye Research Australia, Royal Victorian Eye and Ear Hospital, Melbourne, Australia; Faculty of Medicine, Nursing and Health Sciences, Monash University, Melbourne, Australia | 0000-0003-3117-6116 |
| Eirini, Asouchidou | Anatomy Laboratory, Aristotle University of Thessaloniki, Greece | - |
| Sameer, Bakhshi | Department of Medical Oncology, Dr. B.R.A. Institute Rotary Cancer Hospital, All India Institute of Medical Sciences, New Delhi, India | 0000-0001-9367-4407 |
| Panagiotis, D., Bamidis | Lab of Medical Physics & Digital Innovation, School of Medicine, Aristotle University of Thessaloniki, Thessaloniki, Greece | 0000-0002-9936-5805 |
| Paula, N.V.P., Barbosa | Department of Imaging, A.C.Camargo Cancer Center, São Paulo, Brazil | 0000-0002-3231-5328 |
| Nuru, Y., Bayramov | Department of I Surgical Diseases, Azerbaijan Medical University, Baku, Azerbaijan | 0000-0001-6958-5412 |
| Antonios, Billis | Lab of Medical Physics & Digital Innovation, School of Medicine, Aristotle University of Thessaloniki, Thessaloniki, Greece | 0000-0002-1854-7560 |
| Almir, G.V., Bitencourt | Department of Imaging, A.C.Camargo Cancer Center, São Paulo, Brazil | 0000-0003-0192-9885 |
| Antonio, J., Bollas Becerra | Department of Cardiology, Hospital Universitario Fundación Jiménez Díaz, Madrid, Spain | 0000-0003-4612-3949 |
| Fabrice, Busomoke | Ministry of Health – Byumba Hospital, Byumba, Rwanda | 0009-0002-2520-2488 |
| Andreia, Capela | Medical Oncology Department, Centro Hospitalar Vila Nova de Gaia-Espinho, Vila Nova de Gaia, Portugal; Associação de Investigação de Cuidados de Suporte em Oncologia (AICSO), Vila Nova de Gaia, Portugal | 0000-0002-7576-6938 |
| Riccardo, Cau | Department of Radiology, Azienda Ospedaliero Universitaria (A.O.U.), di Cagliari – Polo di Monserrato s.s. 554 Monserrato, Cagliari, Italy | 0000-0002-7910-1087 |
| Warren, Clements | Department of Radiology, Alfred Health, Melbourne, Australia; Department of Surgery, Monash University Central Clinical School, Melbourne, Australia; National Trauma Research Institute, Melbourne, Australia | 0000-0003-1859-5850 |
| Alexandru, Corlateanu | Department of Respiratory Medicine and Allergology, Nicolae Testemițanu State University of Medicine and Pharmacy, Chișinău, Republic of Moldova | 0000-0002-3278-436X |
| Renato, Cuocolo | Department of Medicine, Surgery and Dentistry, University of Salerno, Baronissi, Italy | 0000-0002-1452-1574 |
| Nguyễn, N., Cương | Radiology Center Hanoi, Medical University Hospital Hanoi, Hanoi, Vietnam | 0000-0001-8809-9583 |
| Zenewton, Gama | Department of Collective Health, Federal University of Rio Grande do Norte, Natal, Brazil | 0000-0003-0818-9680 |
| Paulo, J., de Medeiros | Department of Integrated Medicine, Federal University of Rio Grande do Norte, Natal-RN, Brazil | 0000-0002-2409-9944 |
| Guillermo, de Velasco | Department of Biochemistry and Molecular Biology, School of Biology, Complutense University, Madrid, Spain; Instituto de Investigaciones Sanitarias San Carlos (IdISSC), Madrid, Spain | 0000-0002-1994-2386 |
| Vijay, B., Desai | Department of Clinical Sciences, College of Dentistry, Ajman University, Ajman, UAE | 0000-0003-3256-4778 |
| Ajaya, K., Dhakal | Department of Pediatrics, KIST Medical College and Teaching Hospital, Kathmandu, Nepal | 0000-0002-2881-655X |
| Virginia, Dignum | Department of Computing Science, Umeå University, Umeå, Sweden | 0000-0001-7409-5813 |
| Izabela, Domitrz | Department of Neurology, Faculty of Medicine and Dentistry, Medical University of Warsaw, Warsaw, Poland; Bielanski Hospital, Warsaw, Poland | 0000-0003-3130-1036 |
| Carlos, Ferrarotti | Department of Diagnostic Imaging, Centro de Educación Médica e Investigaciones Clínicas "Norberto Quirno" (CEMIC), Autonomous City of Buenos Aires, Argentina | - |
| Katarzyna, Fułek | Department of Otolaryngology, Head and Neck Surgery, Wroclaw Medical University, Wroclaw, Poland | 0000-0002-1147-774X |
| Shuvadeep, Ganguly | Department of Medical Oncology, Dr. B.R.A. Institute Rotary Cancer Hospital, All India Institute of Medical Sciences, New Delhi, India | 0000-0002-7296-6088 |
| Ignacio, García-Juárez | Department of Gastroenterology and Unit of Liver Transplantation, Instituto Nacional de Ciencias Médicas y Nutrición Salvador Zubirán, Mexico City, Mexico | 0000-0003-2400-1887 |
| Cvetanka, Gjerakaroska Savevska | University Clinic for Physical Medicine and Rehabilitation, Ss Cyril and Methodius University, Skopje, Republic of North Macedonia | 0000-0002-2328-4873 |
| Marija, Gjerakaroska Radovikj | University Clinic for State Cardiac Surgery, Ss Cyril and Methodius University, Skopje, Republic of North Macedonia | 0000-0003-4916-6178 |
| Natalia, Gorelik | Department of Radiology, McGill University Health Center, Montreal, Canada | 0000-0001-9672-6807 |
| Valérie, Gorelik | Dawson College, Montreal, Canada | 0009-0004-0184-2231 |
| Luis, Gorospe | Department of Radiology, Ramón y Cajal University Hospital, Madrid, Spain | 0000-0002-2305-7064 |
| Ian, Griffin | Department of Radiology, University of Florida, Florida, USA | 0009-0006-6565-4971 |
| Andrzej, Grzybowski | Institute for Research in Ophthalmology, Foundation for Ophthalmology Development, Poznań, Poland | 0000-0002-3724-2391 |
| Alessa, Hering | Department of Radiology and Nuclear Medicine, Radboud University Medical Center, Nijmegen, The Netherlands; Fraunhofer MEVIS, Institute for Digital Medicine, Bremen, Germany | 0000-0002-7602-803X |
| Michihiro, Hide | Department of Dermatology, Hiroshima City Hiroshima Citizens Hospital, Hiroshima, Japan | 0000-0002-1569-6034 |
| Bruno, Hochhegger | Department of Radiology, University of Florida, Florida, USA | 0000-0003-1984-4636 |
| Jochen, G., Hofstaetter | Michael Ogon Laboratory for Orthopaedic Research, Orthopaedic Hospital Vienna-Speising, Vienna, Austria; 2nd Department, Orthopaedic Hospital Vienna-Speising, Vienna, Austria | 0000-0001-7741-7187 |
| Mehriban, R., Huseynova | Department of I Surgical Diseases, Azerbaijan Medical University, Baku, Azerbaijan | 0000-0002-4040-5868 |
| Oana-Simina, Iaconi | Research Cooperation Unit within the Research Department, National Institute of Research in Medicine and Health, Nicolae Testemițanu State University of Medicine and Pharmacy, Chișinău, Republic of Moldova | 0009-0003-3139-7004 |
| Pedro, Iturralde Torres | Subdirección de Diagnóstico y Tratamiento, Instituto Nacional de Cardiología – Ignacio Chávez, Mexico City, Mexico | - |
| Nevena, G., Ivanova | Department of Urology and General Medicine, Medical University of Plovdiv, Plovdiv, Bulgaria; Department of Cardiology, Karidad Medical Health Center, Plovdiv, Bulgaria | 0000-0002-4213-8142 |
| Juan, S., Izquierdo-Condoy | One Health Research Group, Universidad de Las Américas, Quito, Ecuador | 0000-0002-1178-0546 |
| Aidan, B., Jackson | Centre for Eye Research Australia, Royal Victorian Eye and Ear Hospital, Melbourne, Australia; St Vincent's Hospital Melbourne, Fitzroy 3065, Fitzroy, Australia | 0000-0002-3809-8301 |
| Ashish, K., Jha | Department of Nuclear Medicine, Tata Memorial Hospital, Mumbai, India; Homi Bhabha National Institute – Deemed University, Anushaktinagar, Mumbai, India | 0000-0001-5998-3206 |
| Nisha, Jha | Clinical Pharmacology and Therapeutics, KIST Medical College and Teaching Hospital, Kathmandu, Nepal | 0000-0003-1089-6042 |
| Lili, Jiang | Department of Computing Science, Umeå University, Umeå, Sweden | 0000-0002-7788-3986 |
| Rawen, Kader | Division of Surgery and Interventional Sciences, University College London, London, United Kingdom | 0000-0001-9133-0838 |
| Padma, Kaul | Department of Medicine, University of Alberta, Edmonton, Alberta, Canada; Canadian VIGOUR Centre, University of Alberta, Edmonton, Alberta, Canada | 0000-0003-2239-3944 |
| Gürsan, Kaya | Department of Nuclear Medicine, Hacettepe University, Faculty of Medicine, Ankara, Turkey | 0000-0003-3157-5782 |
| Katarzyna, Kępczyńska | Department of Neurology, Faculty of Medicine and Dentistry, Medical University of Warsaw, Warsaw, Poland | - |
| Israel, K., Kolawole | Department of Anaesthesia, University of Ilorin/Teaching Hospital, Ilorin, Nigeria | 0000-0001-5823-8685 |
| George, Kolostoumpis | European Cancer Patient Coalition (ECPC), Brussels, Belgium | 0000-0001-9768-9526 |
| Abraham, Koshy | Department of Gastroenterology, Lakeshore Hospital, Kochi, India | 0000-0002-9997-6569 |
| Nicholas, A., Kruger | Orthopaedic Department, University of Cape Town, Cape Town, South Africa | 0000-0002-8543-5745 |
| Alexander, Loeser | Berlin University of Applied Sciences and Technology (BHT), Berlin, Germany | 0000-0002-4440-3261 |
| Marko, Lucijanic | Department of Hematology, Clinical Hospital Dubrava, Zagreb, Croatia; Department of Internal Medicine, School of Medicine University of Zagreb, Zagreb, Croatia | 0000-0002-1372-2040 |
| Stefani, Maihoub | Department of Otorhinolaryngology, Head and Neck Surgery, Semmelweis University, Budapest, Hungary | 0000-0002-3024-6197 |
| Sonyia, McFadden | School of Health Sciences, Londonderry, Northern Ireland | 0000-0002-4001-7769 |
| Maria, C., Mendez Avila | Department of Imaging, University of Costa Rica, San Jose, Costa Rica | 0009-0000-7124-2662 |
| Matúš, Mihalčin | Department of Infectious Diseases, Faculty of Medicine, Masaryk University, Brno, Czech Republic; Department of Infectious Diseases, University Hospital Brno, Brno, Czech Republic | 0000-0002-2946-658X |
| Masahiro, Miyake | Department of Ophthalmology and Visual Sciences, Kyoto University Graduate School of Medicine, Kyoto, Japan | 0000-0001-7410-3764 |
| Roberto, Mogami | Departamento de Medicina Interna, Faculdade de Ciências Médicas da Universidade do Estado do Rio de Janeiro, Rio de Janeiro, Brazil | 0000-0002-7610-2404 |
| András, Molnár | Department of Otorhinolaryngology, Head and Neck Surgery, Semmelweis University, Budapest, Hungary | 0000-0002-4417-5166 |
| Wipawee, Morakote | Department of Radiology, Faculty of Medicine, Chiang Mai University, Chiang Mai, Thailand | 0000-0002-8670-7386 |
| Issa, Ngabonziza | Ministry of Health – Byumba Hospital, Byumba, Rwanda | 0000-0001-6092-166X |
| Trung, Q., Ngo | Department of Urology and Renal Transplantation, People's Hospital 115, Ho Chi Minh City, Vietnam | 0000-0001-8044-6376 |
| Thanh, T., Nguyen | Department of Radiology, University of Medicine and Pharmacy, Hue University, Hue, Vietnam | 0000-0001-9379-6359 |
| Marc, Nortje | Orthopaedic Department, University of Cape Town, Cape Town, South Africa | 0000-0002-7737-409X |
| Subish, Palaian | Department of Clinical Sciences, College of Pharmacy and Health Sciences, Ajman University, Ajman, UAE | 0000-0002-9323-3940 |
| Rui, P., Pereira de Almeida | Radiology Department, University of Algarve, Faro, Portugal; Comprehensive Health Research Center, University of Évora, Évora, Portugal | 0000-0001-7524-9669 |
| Barbara, Perić | Department of Surgical Oncology, Institute of Oncology Ljubljana, Ljubljana, Slovenia; Faculty of Medicine, University of Ljubljana, Ljubljana, Slovenia | 0000-0001-7228-8267 |
| Gašper, Pilko | Department of Surgical Oncology, Institute of Oncology Ljubljana, Ljubljana, Slovenia; Faculty of Medicine, University of Ljubljana, Ljubljana, Slovenia | 0009-0003-0470-2034 |
| Monserrat, L., Puntunet Bates | Unidad de Calidad, Instituto Nacional de Cardiología – Ignacio Chávez, Mexico City, Mexico | - |
| Mitayani, Purwoko | Medical Biology, Faculty of Medicine, Universitas Muhammadiyah Palembang, Palembang, Indonesia | 0000-0002-3936-3883 |
| Clare, Rainey | School of Health Sciences, Londonderry, Northern Ireland | 0000-0003-0449-8646 |
| João, C., Ribeiro | Coimbra University and Medical School, Coimbra, Portugal | 0000-0002-1039-6358 |
| Gaston, A., Rodriguez-Granillo | Centro de Educación Médica e Investigaciones Clínicas "Norberto Quirno" (CEMIC), Autonomous City of Buenos Aires, Argentina | 0000-0003-0820-2611 |
| Nicolás, Rozo Agudelo | Instituto Global de Excelencia Clínica, Bogotá D.C., Colombia | 0000-0003-0409-2515 |
| Luca, Saba | Department of Radiology, Azienda Ospedaliero Universitaria (A.O.U.), di Cagliari – Polo di Monserrato s.s. 554 Monserrato, Cagliari, Italy | 0000-0003-2870-3771 |
| Shine, Sadasivan | Department of Gastroenterology, Amrita Institute of Medical Sciences & Research Centre, Kochi, India | 0000-0001-5676-5000 |
| Keina, Sado | Department of Ophthalmology and Visual Sciences, Kyoto University Graduate School of Medicine, Kyoto, Japan | 0009-0002-7596-4325 |
| Julia, M., Saidman | Radiology Department, Hospital Italiano de Buenos Aires, Ciudad Autónoma de Buenos Aires, Argentina | 0000-0002-7626-7356 |
| Pedro, J., Saturno-Hernandez | AXA Chair in Healthcare Quality, CIEE, National Institute of Public Health, Cuernavaca, Mexico | 0000-0002-4991-5805 |
| Gilbert, M., Schwarz | Department of Orthopedics and Trauma Surgery, Medical University of Vienna, Vienna, Austria | 0000-0001-6434-0520 |
| Sergio, M., Solis-Barquero | Department of Imaging, University of Costa Rica, San Jose, Costa Rica | 0000-0002-2513-0747 |
| Javier, Soto Pérez-Olivares | Department of Radiology, Ramón y Cajal University Hospital, Madrid, Spain | 0000-0002-0858-1394 |
| Petros, Sountoulides | Urology Department, Aristotle University of Thessaloniki, Thessaloniki, Greece | 0000-0003-2671-571X |
| Arnaldo, Stanzione | Department of Advanced Biomedical Sciences, University of Naples "Federico II", Naples, Italy | 0000-0002-7905-5789 |
| Nikoleta, G., Tabakova | Medical University of Varna, Varna, Bulgaria | 0000-0003-4177-3897 |
| Konagi, Takeda | Department of Radiology, Hiroshima City Hiroshima Citizens Hospital, Hiroshima, Japan | 0009-0004-3763-5701 |
| Satoru, Tanioka | Department of Neurosurgery, Mie University Graduate School of Medicine, Tsu, Japan; Charité Lab for Artificial Intelligence in Medicine, Corporate Member of Freie Universität Berlin, Charité – University Medicine Berlin, Berlin, Germany | 0000-0002-4678-6163 |
| Hans, O., Thulesius | Research and Development Department Region Kronoberg, Växjö, Sweden; Department of Medicine and Optometry, Linnaeus University, Kalmar, Sweden | 0000-0002-3785-5630 |
| Liz, N., Toapanta-Yanchapaxi | Department of Neurology, Instituto Nacional de Ciencias Médicas y Nutrición Salvador Zubirán, Mexico City, Mexico | 0000-0002-1218-1721 |
| Minh, H., Truong | Department of Urology and Renal Transplantation, People's Hospital 115, Ho Chi Minh City, Vietnam; Department of Nephro-Urology and Andrology, Pham Ngoc Thach University of Medicine, Ho Chi Minh City, Vietnam | - |
| Murat, Tuncel | Department of Nuclear Medicine, Hacettepe University, Faculty of Medicine, Ankara, Turkey | 0000-0003-2352-3587 |
| Elon, H.C., van Dijk | Department of Ophthalmology, Leiden University Medical Center, Leiden, The Netherlands; Department of Ophthalmology, Alrijne Hospital, Leiderdorp, The Netherlands | 0000-0002-6351-7942 |
| Peter, van Wijngaarden | Centre for Eye Research Australia, Royal Victorian Eye and Ear Hospital, Melbourne, Australia; Ophthalmology, Department of Surgery, University of Melbourne, Melbourne, Australia | 0000-0002-8800-7834 |
| Lina, Xu | Department of Radiology, Charité – Universitätsmedizin Berlin, Corporate Member of Freie Universität Berlin and Humboldt Universität zu Berlin, Berlin, Germany | 0009-0007-4119-1033 |
| Tomasz, Zatoński | Department of Otolaryngology, Head and Neck Surgery, Wroclaw Medical University, Wroclaw, Poland | 0000-0003-3043-4806 |
| Longjiang, Zhang | Department of Radiology, Jinling Hospital, Medical School of Nanjing University, Nanjing, China | 0000-0002-6664-7224 |

The authors thank Jaime Moujir-López, Javier Blázquez-Sánchez (Department of Radiology, Ramón y Cajal University Hospital, Madrid, Spain), Rubens Chojniak (Department of Imaging, A.C.Camargo Cancer Center, São Paulo, Brazil), and Dania Saad Rammal, Aya Mutasem Baradie, and Farrah Emad Elsubeihi (College of Pharmacy and Health Sciences, Ajman University, Ajman, United Arab Emirates) for supporting data collection at their institutions. The authors acknowledge financial support from the Open Access Publication Fund of Charité – Universitätsmedizin Berlin.

Funding

Open Access funding enabled and organized by Projekt DEAL. This research is funded by the European Union (COMFORT (Computational Models FOR patienT stratification in urologic cancers – Creating robust and trustworthy multimodal AI for health care), project number: 101079894, authors involved: FB, MRM, LCA, PDB, AB, RC, GdV, VD, AH, LJ, GK, AL, PS, principal investigator: KKB, sponsor's website: https://www.comfort-ai.eu). Views and opinions expressed are, however, those of the authors only and do not necessarily reflect those of the European Union. The European Union cannot be held responsible for them. The funder had no role in the study design, data collection and analysis, manuscript preparation, or decision to publish.

Author information

Contributions

Conceptualization: FB, LH, DT, MRM, KKB, LCA, AB, RC, GdV, LG, AH, LJ, AL, JSPO, PS, LX; Project administration: FB, LH, KKB, LCA; Resources: FB, KKB, LCA, COMFORT consortium; Software: FB, LH, KKB, LCA; Data curation: FB, LH, KKB, LCA; Formal analysis: FB, LH, KKB, LCA, COMFORT consortium; Funding acquisition: FB, MRM, KKB, LCA, PDB, AB, RC, GdV, VD, AH, LJ, GK, AL, PS; Investigation: FB, LH, KKB, LCA; Methodology: FB, LH, DT, KKB, LCA; Supervision: FB, KKB; Validation: FB, LH, DT, EOP, MRM, KKB, LCA, COMFORT consortium; Visualization: FB, EOP, KKB; Writing – original draft preparation: FB, KKB, LCA; Writing – review & editing: FB, LH, DT, EOP, MRM, KKB, LCA, COMFORT consortium. All COMFORT consortium members contributed equally to data collection at their institutions, critically revised the final version of the manuscript for intellectual content, gave final approval of the version to be published, and agreed to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Ethics declarations

Ethics approval and consent to participate

Ethical approval was obtained from the Institutional Review Board at Charité – University Medicine Berlin (EA4/213/22) in compliance with the Declaration of Helsinki and its later amendments. The requirement for informed consent was waived to preserve participant anonymity.

Consent for publication

Not applicable.

Competing interests

KKB reports grants from the Wilhelm Sander Foundation and receives speaker fees from Canon Medical Systems Corporation. KKB is a member of the advisory board of the EU Horizon 2020 LifeChamps project (875329) and the EU IHI project IMAGIO (101112053). MA reports consultant fees from Segmed, Inc. The competing interests had no role in the study design, data collection and analysis, manuscript preparation, or decision to publish. All other authors declare no financial or non-financial competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Busch, F., Hoffmann, L., Truhn, D. et al. Global cross-sectional student survey on AI in medical, dental, and veterinary education and practice at 192 faculties. BMC Med Educ 24, 1066 (2024). https://doi.org/10.1186/s12909-024-06035-4

DOI: https://doi.org/10.1186/s12909-024-06035-4