Abstract

In this paper, we propose a new iterative algorithm for solving the multiple-sets split feasibility problem (MSSFP for short) and the split equality fixed point problem (SEFPP for short) with firmly quasi-nonexpansive operators or nonexpansive operators in real Hilbert spaces. Under mild conditions, we prove strong convergence theorems for the algorithm by using the projection method and the properties of projection operators. The result improves and extends the corresponding ones announced by some others in the earlier and recent literature.

Similar content being viewed by others

1 Introduction and preliminaries

Let \(H_{1}\), \(H_{2}\), and \(H_{3}\) be three real Hilbert spaces with inner product \(\langle \cdot,\cdot \rangle \) and induce norm \(\|\cdot \|\). We use \(\mathrm{Fix}(T)\) to denote the set of fixed points of a mapping T.

The split feasibility problem (SFP) in finite-dimensional Hilbert spaces was first introduced by Censor and Elfving [6] for modeling inverse problems which arise from phase retrievals and in medical image reconstruction [3]. The SFP can be formulated as finding a point \(x^{*}\) in \(\mathbb{R}^{n}\) with the property

where C and Q are nonempty closed convex subsets of \(\mathbb{R}^{n}\) and \(\mathbb{R}^{m}\), respectively, and A is an \(m \times n\) matrix. SFP (1.1) has recently been studied in a more general space. For example, Xu [21] studied it in an infinite dimensional Hilbert space.

The SFP has been widely studied in recent years. Recently, it has been found that it can also be used to model the intensity-modulated radiation therapy; see, e.g., [7–11]. One of the well-known methods for solving the SFP is Byrne’s CQ algorithm [3, 4], which generated a sequence \(\{x_{n}\}\) by the following iterative algorithm:

where C and Q are nonempty closed convex subsets of \(H_{1}\) and \(H_{2}\) and the step size \(\tau _{n}\) is located in the interval \((0,2/\|A\|^{2})\), \(A^{*}\) is the adjoint of A, \(P_{C}\) and \(P_{Q}\) are the metric projections onto C and Q.

The multiple-set split feasibility problem (MSSFP), which has functions in the inverse problem of intensity-modulated radiation therapy (see [18]), has recently been presented in [5] and is formulated as follows:

where \(r_{1},r_{2}\in N, C_{1},\ldots,C_{r_{1}}\) are closed convex subsets of \(H_{1}\), \(Q_{1},\ldots,Q_{r_{2}}\) are closed convex subsets of \(H_{2}\), and \(A:H_{1}\rightarrow H_{2}\) is a bounded linear operator.

Assuming consistency of the MSSFP, Censor et al. [5] introduced the following projection algorithm:

where \(0<\gamma <\frac{2}{L}\) with \(L=\sum_{i=1}^{r_{1}}\alpha _{i}+\rho (A^{*}A)\sum_{j=1}^{r_{2}} \beta _{j}\) and \(\rho (A^{*}A)\) is the spectral radius of \(A^{*}A\). They proved convergence of algorithm (1.4) in the case where both \(H_{1} and H_{2}\) are finite dimensional.

Moudafi [17] came up with the split equality problem (SEP) as follows:

where \(A:H_{1}\rightarrow H_{3}\), \(B:H_{2}\rightarrow H_{3}\) are two bounded linear operators, \(C\subset H_{1}\), \(Q\subset H_{2}\) are two nonempty closed convex sets. Let \(B=I\), it is easy to see that the SFP is the special case of the SEP. The SEP has already been applied in game theory (see [1]) and intensity-modulated radiation therapy [5, 12]. Furthermore, the author considered the following scheme for solving the SEP:

He obtained a weak convergence of (1.6) under certain appropriate assumptions on the parameters.

Shi [19] proposed a modification of Moudafi’s ACQA algorithms to solve the SEP and proved its strong convergence:

i.e.,

Recently, Moudafi [16] introduced the following split equality fixed point problem (SEFPP):

where \(U:H_{1}\rightarrow H_{1}\) and \(T:H_{2}\rightarrow H_{2}\) are two firmly quasi-nonexpansive operators. The SEFPP has been proved very useful in decomposition methods for PDEs as well as in game theory and intensity-modulated radiation therapy. For solving SEFPP (1.9), he proposed the following iterative algorithm:

Further, he proved a weak convergence theorem for SEFPP (1.9) under some mild restrictions on the parameters.

In this paper, we introduce a multiple-sets split feasibility problem (MSSFP) and a split equality fixed point problem (SEFPP), the MSSFP is to find a pair \((x,y)\) such that

The SEFPP is to find a pair \((x,y)\) such that

where \(T_{1}, T_{2}\) are two firmly quasi-nonexpansive operators or nonexpansive operators, and \(A_{1}:H_{1}\rightarrow H_{3}, A_{2}:H_{1}\rightarrow H_{3}, B_{1}:H_{2} \rightarrow H_{3}, B_{2}:H_{2}\rightarrow H_{3}\) are four bounded linear operators. \(C_{i}\in H_{1},i=1,2,\ldots,t_{1}; Q_{j}\in H_{2},j=1,2,\ldots,r_{1}; D_{i}\in H_{3},i=1,2,\ldots,t_{2}; \Theta _{j}\in H_{3},j=1,2 ,\ldots,r_{2}\), are nonempty closed convex subsets.

Guan [15] proposed a new iterative scheme to solve the above problems:

and

Further, he proved a weak convergence theorem under some mild restrictions on the parameters.

Inspired by the results, we propose the following questions.

Question 1.1

Can we modify iterative scheme (1.8) to a more general iterative scheme for solving a multiple-sets split feasibility problem and a split equality fixed point problem instead of solving the split equality problem?

Question 1.2

Can we obtain a strong convergence by the iterative scheme for MSSFP and SEFPP?

The purpose of this paper is to construct a new algorithm for MSSFP and SEFPP so that strong convergence is guaranteed. The paper is organized as follows. In Sect. 2, we denote the concept of minimal norm solution of MSSFP and SEFPP. Using Tychonov regularization, we obtain a net of solutions for some minimization problem approximating such minimal norm solutions (see Theorem 2.5). In Sect. 3, we introduce an algorithm and prove the strong convergence of the algorithm, more importantly, its limit is the minimum-norm solution of MSSFP and SEFPP (see Theorem 3.2).

Throughout the rest of this paper, let I denote the identity operator on a Hilbert space H, and let ▽f denote the gradient of the function \(f:H\rightarrow R\).

Definition 1.3

([21])

An operator T on a Hilbert space H is nonexpansive if, for each x and y in H,

T is said to be strictly nonexpansive if, for each x and y in H,

An operator T on a Hilbert space H is firmly nonexpansive if, for each x and y in H,

T is firmly nonexpansive if \(2T-I\) is nonexpansive, Equivalently, \(T=(I+S)/2\), where \(S: H\rightarrow H\) is nonexpansive.

T is said to be averaged if there exist \(0<\alpha <1\) and a nonexpansive operator N such that

T is said to be quasi-nonexpansive if \(F(T)\neq \emptyset \) for each x in H, q in \(F(T)\),

T is said to be strictly quasi-nonexpansive if \(F(T)\neq \emptyset \) for each x in H, q in \(F(T)\),

T is said to be firmly quasi-nonexpansive if \(F(T)\neq \emptyset \) for each x in H, q in \(F(T)\),

Let \(P_{S}\) denote the projection from H onto a nonempty closed convex subset S of H; that is,

It is well known that \(P_{S}(w)\) is characterized by the inequality

\(P_{S} and (I-P_{S})\) are nonexpansive, averaged, and firmly nonexpansive.

Next we should collect some elementary facts which will be used in the proofs of our main results.

Lemma 1.4

Let X be a Banach space, C be a closed convex subset of X, and \(T: C\rightarrow C\) be a nonexpansive mapping with \(\mathrm{Fix}(T)\neq \emptyset \). If \(\{x_{n}\}\) is a sequence in C weakly converging to x and if \(\{(I-T)x_{n}\}\) converges strongly to y, then \((I-T)x=y\).

Lemma 1.5

([2])

Let \(\{s_{n}\}\) be a sequence of nonnegative real numbers, \(\{\alpha _{n}\}\) be a sequence of real numbers in [0,1] with \(\sum_{n=1}^{\infty }\alpha _{n}=\infty \), \(\{u_{n}\}\) be a sequence of nonnegative real numbers with \(\sum_{n=1}^{\infty }u_{n}<\infty \), and \(\{t_{n}\}\) be a sequence of real numbers with \(\limsup_{n}t_{n}\leq 0\). Suppose that

Then \(\lim_{n\rightarrow \infty }s_{n}=0\).

Lemma 1.6

([20])

Let \(\{w_{n}\}, \{z_{n}\}\) be bounded sequences in a Banach space, and let \(\{\beta _{n}\}\) be a sequence in [0,1] which satisfies the following condition:

Suppose that \(w_{n+1}=(1-\beta _{n})w_{n}+\beta _{n}z_{n}\) and \(\limsup_{n\rightarrow \infty }\|z_{n+1}-z_{n}\|-\|w_{n+1}-w_{n}\|\leq 0\), then \(\lim_{n\rightarrow \infty }\|z_{n}-w_{n}\|=0\).

Lemma 1.7

([4])

Let f be a convex and differentiable function, and let C be a closed convex subset of H. Then \(x\in C\) is a solution of the problem

if and only if \(x\in C\) satisfies the following optimality condition:

Moreover, if f is, in addition, strictly convex and coercive, then the minimization problem has a unique solution.

Lemma 1.8

([4])

Let \(A,B\) be averaged operators and suppose that \(\mathrm{Fix}(A)\cap \mathrm{Fix}(B)\) is nonempty. Then \(\mathrm{Fix}(A)\cap \mathrm{Fix}(B)=\mathrm{Fix}(AB)=\mathrm{Fix}(BA)\).

2 Minimum-norm solution of SEFPP and MSSFP

In this section, we define the concept of the minimal norm solution of MSSFP (1.11) and SEFPP (1.12). Using Tychonov regularization, we obtain a net of solutions for some minimization problems approximating such minimal norm solutions.

We use Γ to denote the solution set of SEFPP and MSSFP, i.e.,

and assume the consistency of SEFPP and MSSFP, so that Γ is closed, convex, and nonempty.

We aim to propose a new iterative algorithm for solving MSSFP (1.11) and SEFPP (1.12). Let the sets \(C_{i}, Q_{i}, D_{i}, \Theta _{i}\) be defined as

and

where \(c_{i}:H_{1}\rightarrow \mathbb{R},i=1,2,\ldots,t_{1};q_{i}:H_{2} \rightarrow \mathbb{R},j=1,2,\ldots,r_{1};d_{i}:H_{3}\rightarrow \mathbb{R},i=1,2,\ldots,t_{2}\); and \(\phi _{i}:H_{3}\rightarrow \mathbb{R},j=1,2,\ldots,r_{2}\), are convex functions.

In order to solve MSSFP (1.11) and SEFPP (1.12), we consider the following minimization problem:

where

here \(\sum_{i=1}^{t_{1}}\alpha _{i}=\sum_{i=1}^{t_{2}}\beta _{i}= \sum_{j=1}^{r_{1}}\gamma _{j}=\sum_{j=1}^{r_{2}}\delta _{j}=1\). The minimization problem is in general ill-posed. A classical way to deal with such a possibly ill-posed problem is the well-known Tychonov regularization, which approximates a solution of problem (2.3) by the unique minimizer of the regularized problem

where \(\alpha >0\) is the regularization parameter. Denote by \(w_{\alpha }=(x_{\alpha },y_{\alpha })\) the unique solution of (2.4).

Lemma 2.1

For the sake of convenience, let \(H=H_{1}\times H_{2}\), define:

and

where \(G:H\rightarrow H_{3}\) and \(G^{*}G:H\rightarrow H\), then \(M, \lambda _{1}N\) and \(\lambda _{2}G^{*}G\) are firmly nonexpansive operators, where \(0<\lambda _{1}<1/(\max \{\rho (A_{1}^{*}A_{1}),\rho (B_{1}^{*}B_{1})\})\) and \(0<\lambda _{2}<1/\rho (G^{*}G)\).

Proof

By \((I-P_{S})\) and \(P_{S}\) are firmly nonexpansive operators, \(x=(x_{1},x_{2})\in H_{1}\times H_{2}, y=(y_{1},y_{2})\in H_{1} \times H_{2}. \|Mx-My\|^{2}=\|\sum_{i=1}^{t_{1}}\alpha _{i}(I-P_{C_{i}})x_{1}- \sum_{i=1}^{t_{1}}\alpha _{i}(I-P_{C_{i}})y_{1}\|^{2}+\|\sum_{j=1}^{r_{1}} \gamma _{j}(I-P_{Q_{j}})x_{2}-\sum_{j=1}^{r_{1}}\gamma _{j}(I-P_{Q_{j}})y_{2} \|^{2}\leq \langle x_{1}-y_{1}, \sum_{i=1}^{t_{1}}\alpha _{i}(I-P_{C_{i}})x_{1}- \sum_{i=1}^{t_{1}}\alpha _{i}(I-P_{C_{i}})y_{1}\rangle +\langle x_{2}-y_{2}, \sum_{j=1}^{r_{1}}\gamma _{j}(I-P_{Q_{j}})x_{2}-\sum_{j=1}^{r_{1}} \gamma _{j}(I-P_{Q_{j}})y_{2}\rangle =\langle x-y,Mx-My\rangle \), so M is a firmly nonexpansive operator. Similarly, we can prove that \(\lambda _{1}N\) and \(\lambda _{2}G^{*}G\) are firmly nonexpansive operators. □

Proposition 2.2

Let \(T=T_{1}\times T_{2}\), which is mentioned in (1.12), \(w=(x,y)\). For any \(\alpha >0\), the solution \(w_{\alpha }=(x_{\alpha },y_{\alpha })\) of (2.4) is uniquely defined. Then \(w_{\alpha }=(x_{\alpha },y_{\alpha })\) is characterized by the inequality

i.e.,

and

Proof

It is well known that \(h(x,y)=\frac{1}{2}\sum_{i=1}^{t_{1}}\alpha _{i}\|(I-P_{C_{i}})x\|^{2}+ \frac{1}{2}\sum_{i=1}^{t_{2}}\beta _{i}\|(I-P_{D_{i}})A_{1}x\|^{2}+ \frac{1}{2}\sum_{j=1}^{r_{1}}\gamma _{j}\|(I-P_{Q_{j}})y\|^{2}+ \frac{1}{2}\sum_{j=1}^{r_{2}}\delta _{j}\|(I-P_{\Theta _{j}})B_{1}y \|^{2}+\frac{1}{2}\|A_{2}x-B_{2}y\|^{2}\) is convex and differentiable with gradient \(\bigtriangledown h(w)=Mw+Nw+G^{*}Gw, h_{\alpha }(w)=h(w)+\frac{1}{2} \alpha \|w\|^{2}\). We can get that \(h_{\alpha }\) is strictly convex, coercive, and differentiable with gradient

It follows from Lemma 1.7 that \(w_{\alpha }\) is characterized by the inequality

We can get that

and

□

Definition 2.3

An element \(\bar{w}=(\bar{x},\bar{y})\in \Gamma \) is said to be the \(minimal norm solution\) of MSSFP (1.11) and SEFPP (1.12) if \(\|\bar{w}\|=\inf_{w\in \Gamma } \|w\|\).

The next result collects some useful properties of \(\{w_{\alpha }\}\), the unique solution of (2.4).

Proposition 2.4

Let \(w_{\alpha }\) be given as the unique solution of (2.4) for any sequence \(\{\alpha _{n}\}\) such that \(\lim_{n}\alpha _{n}=0\), let \(w_{\alpha _{n}}\) be abbreviated as \(w_{n}\). Then the following assertions hold:

-

(i)

\(\|w_{\alpha }\|\) is decreasing for \(\alpha \in (0,\infty )\);

-

(ii)

\(\alpha \mapsto w_{\alpha }\) defines a continuous curve from \((0,\infty )\) to H.

Proof

Let \(\alpha >\beta >0\); since \(w_{\alpha }\) and \(w_{\beta }\) are the unique minimizers of \(h_{\alpha }\) and \(h_{\beta }\), \(w_{\alpha }=(x_{\alpha },y_{\alpha }), w_{\beta }=(x_{\beta },y_{\beta })\), respectively, we can get that

and

Hence we can obtain that \(\|w_{\alpha }\|\leq \|w_{\beta }\|\). That is to say, \(\|w_{\alpha }\|\) is decreasing for \(\alpha \in (0,\infty )\).

By Proposition 2.2, we have

and

It follows that

Then

and

Then

Hence

It turns out that

Thus \(\alpha \mapsto w_{\alpha }\) defines a continuous curve from \((0,\infty )\) to H. □

Theorem 2.5

Let \(w_{\alpha }\) be given as the unique solution of (2.4). Then \(w_{\alpha }\) converges strongly as \(\alpha \rightarrow 0\) to the minimum-norm solution  of MSSFP (1.11) and SEFPP (1.12).

of MSSFP (1.11) and SEFPP (1.12).

Proof

For any \(0<\alpha <\infty, w_{\alpha }\) is given as (2.4), it follows that

Since \(\bar{w}\in \Gamma \) is a solution for MSSFP and SEFPP, we get

Hence, \(\|w_{\alpha }\|\leq \|\bar{w}\|\) for all \(\alpha >0\). That is to say, \(\{w_{\alpha }\}\) is a bounded net in \(H=H_{1}\times H_{2}\).

For any sequence \(\{\alpha _{n}\}\) such that \(\lim_{n}\alpha _{n}=0\), let \(w_{\alpha _{n}}\) be abbreviated as \(w_{n}\). All we need to prove is that \(\{w_{n}\}\) contains a subsequence converging strongly to w̄.

Indeed \(\{w_{n}\}\) is bounded and \(\mathrm{Fix}(T)\) is bounded convex. By passing to a subsequence if necessary, we may assume that \(\{w_{n}\}\) converges weakly to a point \(\hat{w} \in \mathrm{Fix}(T)\). By Proposition 2.2, we get that

and

It follows that

i.e.,

and

i.e.,

By \(\bar{w}\in \Gamma \),

we have

Furthermore, note that \(\{w_{n}\}\) converges weakly to a point \(\hat{w}\in \mathrm{Fix}(T)\), then \(\{h(w_{n})\}\) converges weakly to \(h(\hat{w})\). It follows that \(h(\hat{w})=0\), i.e., \(\hat{w}\in \Gamma \).

By (2.11),

i.e.,

Then

we have

Consequently, that \(\{w_{n}\}\) converges weakly to ŵ actually implies that \(\{w_{n}\}\) converges strongly to ŵ. At last, we prove that \(\hat{w}=\bar{w}\), and this finishes the proof.

Since \(\{w_{n}\}\) converges weakly to ŵ and \(\|w_{n}\|\leq \|\bar{w}\|\), we can get that

This shows that ŵ is also a point in Γ which assumes a minimum norm. Due to the uniqueness of a minimum-norm element, we obtain \(\hat{w}=\bar{w}\). □

Finally, we introduce another method to get the minimum-norm solution of MSSFP and SEFPP.

Lemma 2.6

Let \(S=I-\sigma _{1}M-\sigma _{2}\lambda _{1}N-\sigma _{3}\lambda _{2}G^{*}G\), where \(0<\lambda _{1}<1/(\max \{\rho (A_{1}^{*}A_{1}), \rho (B_{1}^{*}B_{1})\}), 0<\lambda _{2}<1/\rho (G^{*}G), \sigma _{i}>0, i=1, 2, 3\). \(\sigma _{1}+\sigma _{2}+\sigma _{3}\leq 1\) with \(\rho (A_{1}^{*}A_{1}), \rho ( B_{1}^{*}B_{1}), \rho (G^{*}G)\) being the spectral radius of the self-adjoint operator \(A_{1}^{*}A_{1}, B_{1}^{*}B_{1}, G^{*}G\) on H, then we have the following:

(1) \(\|S\|\leq 1\) (i.e., S is nonexpansive) and averaged;

(2) \(\mathrm{Fix}(S)=\{(x,y)\in H_{1}\times H_{2}, x \in \bigcap_{i=1}^{t_{1}}C_{i}, y \in \bigcap_{j=1}^{r_{1}}Q_{j}, A_{1}x \in \bigcap_{i=1}^{t_{2}}D_{i} , B_{1} y \in \bigcap_{j=1}^{r_{2}}\Theta _{j},A_{2}x=B_{2}y\}, \mathrm{Fix}(P_{\mathrm{Fix}(T)}S)=\mathrm{Fix}(P_{\mathrm{Fix}(T)}) \cap \mathrm{Fix}(S)=\Gamma \);

(3) \(w\in \mathrm{Fix}(P_{\mathrm{Fix}(T)}S)\) if and only if w is a solution of the variational inequality \(\langle \bigtriangledown h(x,y), v-w\rangle, \forall v\in \mathrm{Fix}(T)\).

Proof

(1)

\(\lambda _{2}\|G^{*}G(x-y)\|\leq \|x-y\|\).

Let \(S_{1}=\sigma _{1}M+\sigma _{2}\lambda _{1}N+\sigma _{3}\lambda _{2}G^{*}G\), we have

We can get that \(S_{1}\) is a nonexpansive operator.

so \(\|S\|\leq 1\), i.e., S is nonexpansive.

Indeed, let \(\eta \in (0,1)\) such that \((\sigma _{1}+\sigma _{2}+\sigma _{3})/(1-\eta )\in (0,1]\), then \(S=I-\sigma _{1}M-\sigma _{2}\lambda _{1}N-\sigma _{3}\lambda _{2}G^{*}G= \eta I+(1-\eta )V\), where \(V=I-\frac{1}{1-\eta }(\sigma _{1}M+\sigma _{2}\lambda _{1}N+\sigma _{3} \lambda _{2}G^{*}G)\) is a nonexpansive mapping. That is to say, S is averaged.

(2) If \(w\in \{(x,y)\in H_{1}\times H_{2},x \in \bigcap_{i=1}^{t_{1}}C_{i},y \in \bigcap_{j=1}^{r_{1}}Q_{j},A_{1}x \in \bigcap_{i=1}^{t_{2}}D_{i} ,B_{1} y \in \bigcap_{j=1}^{r_{2}}\Theta _{j},A_{2}x=B_{2}y\}\), it is obvious that \(w\in \mathrm{Fix}(S)\). Conversely, assuming that \(w\in \mathrm{Fix}(S)\), we have \(w=w-\sigma _{1}Mw-\sigma _{2}\lambda _{1}Nw-\sigma _{3}\lambda _{2}G^{*}Gw\), hence \(\sigma _{1}Mw+\sigma _{2}\lambda _{1}Nw+\sigma _{3}\lambda _{2}G^{*}Gw=0, \forall \breve{w}\in \Gamma \),

This leads to \(w\in \{(x,y)\in H_{1} \times H_{2},x \in \bigcap_{i=1}^{t_{1}}C_{i},y \in \bigcap_{j=1}^{r_{1}}Q_{j},A_{1}x \in \bigcap_{i=1}^{t_{2}}D_{i} ,B_{1} y \in \bigcap_{j=1}^{r_{2}}\Theta _{j}, A_{2}x=B_{2}y\}\), it is obvious that \(w\in \mathrm{Fix}(S)\).

(3)

□

Remark 2.7

Take constants \(\lambda _{1} and \lambda _{2} \), where \(0<\lambda _{1}<1/(\max \{\rho (A_{1}^{*}A_{1}), \rho (B_{1}^{*}B_{1}) \} ), 0<\lambda _{2}<1/\rho (G^{*}G)\), with \(\rho (A_{1}^{*}A_{1}), \rho (B_{1}^{*}B_{1}), \rho (G^{*}G)\) being the spectral radius of the self-adjoint operator \(A_{1}^{*}A_{1}, B_{1}^{*}B_{1}, G^{*}G\). For \(\tau _{1}\in (0,(1-\lambda _{1}(\max \{\|A_{1}^{*}A_{1}\|, \|B_{1}^{*}B_{1} \|\}))/\sigma _{2} \lambda _{1}), \tau _{2}\in (0,(1-\lambda _{2}\|G^{*}G \|)/{\sigma _{3}\lambda _{2}}), \tau =\min \{\tau _{1},\tau _{2}\}, (\sigma _{1}+\sigma _{2}+\sigma _{3})/(1-\sigma _{2}\lambda _{1}\tau - \sigma _{3}\lambda _{2}\tau )\in (0,1)\), we define a mapping

It is easy to check that \(W_{\alpha }\) is contractive. So, \(W_{\alpha }\) has a unique fixed point denoted by \(w_{\alpha }\), that is,

Theorem 2.8

Let \(w_{\alpha }\) be given as (2.12). Then \(w_{\alpha }\) converges strongly as \(\alpha \rightarrow 0\) to the minimum-norm solution w̄ of MSSFP and SEFPP.

Proof

Let w̆ be a point in Γ. \(I-\sigma _{1}/(1-\sigma _{2}\lambda _{1}\tau -\sigma _{3}\lambda _{2} \tau )M-\sigma _{2}\lambda _{1}/(1-\sigma _{2}\lambda _{1}\tau - \sigma _{3}\lambda _{2}\tau )N-\sigma _{3}\lambda _{2}/(1-\sigma _{2} \lambda _{1}\tau -\sigma _{3}\lambda _{2}\tau )G^{*}G\) is nonexpansive. It follows that

Hence,

Then \(\{w_{\alpha }\}\) is bounded.

From (2.12), we have

Next we show that \(w_{\alpha }\) is relatively norm compact as \(\alpha \rightarrow 0^{+}\). In fact, assuming that \(\{\tau _{n}\} \subseteq (0,\min \{(1-\lambda _{1}(\max \{\|A_{1}^{*}A_{1} \|, \|B_{1}^{*}B_{1}\|\}))/\sigma _{2}\lambda _{1}, (1-\lambda _{2} \|G^{*}G\|)/{\sigma _{3}\lambda _{2}}\})\) is such that \(\tau _{n}\rightarrow 0^{+}\) as \(n\rightarrow \infty \). Put \(w_{n}:=w_{\alpha _{n}}\), we have the following:

We deduce that

Therefore,

In particular,

Since \(\{w_{n}\}\) is bounded, there exists a subsequence of \(\{w_{n}\}\) which converges weakly to a point w̄. Without loss of generality, we may assume that \(\{w_{n}\}\) converges weakly to w̄. Notice that

and by Lemma 1.4, we can get \(\bar{w}\in \mathrm{Fix}(TS)=\Gamma \).

By

we have

Consequently, that \(\{w_{n}\}\) converges weakly to w̄ actually implies that \(\{w_{n}\}\) converges strongly to w̄. That is to say, \(\{w_{\alpha }\}\) is relatively norm compact as \(\alpha \rightarrow 0^{+}\).

On the other hand, by

let \(n\rightarrow \infty \), we have

This implies that

which is equivalent to

It follows that \(\bar{w}\in P_{\mathrm{Fix}(T)}(0)\). Therefore, each cluster point of \(w_{\alpha }\) equals w̄. So \(w_{\alpha }\rightarrow \bar{w}(\alpha \rightarrow 0)\) is the minimum-norm solution of SFP and SEFPP. □

3 Main results

In this section, we introduce the following algorithm to solve MSSFP and SEFFP. The purpose for such a modification lies in the hope of strong convergence.

Algorithm 3.1

For an arbitrary point \(w_{0}=(x_{0},y_{0})\in H=H_{1}\times H_{2}\), the sequence \(\{w_{n}\}=\{(x_{n},y_{n})\}\) is generated by the iterative algorithm

i.e.,

and

where \(\tau _{n}>0\) is a sequence in (0,1) such that

-

(i)

\(\lim_{n}\tau _{n}=0\);

-

(ii)

\(\sum_{n=0}^{\infty }\tau _{n}=\infty \);

-

(iii)

\(\sum_{n=0}^{\infty }|\tau _{n+1}-\tau _{n}|<\infty \) or \(\lim_{n}|\tau _{n+1}-\tau _{n}|/\tau _{n}=0\).

Now, we prove the strong convergence of the iterative algorithm.

Theorem 3.2

The sequence \(\{w_{n}\}\) generated by Algorithm 3.1converges strongly to the minimum-norm solution w̄ of MSSFP and SEFPP.

Proof

Let \(R_{n}\) and R be defined by

where \(S=I-\sigma _{1}M-\sigma _{2}\lambda _{1}N-\sigma _{3}\lambda _{2}G^{*}G\). By Lemma 2.6 it is easy to see that \(R_{n}\) is a contraction with contractive constant \((1-\tau _{n})\); and Algorithm 3.1 can be written as \(w_{n+1}=R_{n}w_{n}\).

For any \(\breve{w}\in \Gamma \), we have

Hence

It follows that \(\|w_{n}-\breve{w}\|\leq \max \{\|w_{0}-\breve{w}\|,|\breve{w}\|\}\). So \(\{w_{n}\}\) and \(\{Sw_{n}\}\) are bounded.

Next we prove that \(\lim_{n}\|w_{n+1}-w_{n}\|=0\).

Indeed,

Notice that

Hence,

By virtue of assumptions (i)–(iii) and Lemma 1.5, we have

Therefore,

The demiclosedness principle ensures that each weak limit point of \(\{w_{n}\}\) is a fixed point of the nonexpansive mapping \(R=TS\), that is, a point of the solution set Γ of MSSFP and SEFPP.

At last, we will prove that \(\lim_{n}\|w_{n+1}-\bar{w}\|=0\).

Choose \(0<\delta <1\) such that \((\sigma _{1}+\sigma _{2}+\sigma _{3})/(1-\delta )\in (0,1)\), then \(S=I-\sigma _{1}M-\sigma _{2}\lambda _{1}N-\sigma _{3}\lambda _{2}G^{*}G= \delta I+(1-\delta )V\), where \(V=I-\sigma _{1}/(1-\delta )M-\sigma _{2}\lambda _{1}/(1-\delta )N- \sigma _{3}\lambda _{2}/(1-\delta )G^{*}G\) is a nonexpansive mapping. Taking \(z\in \Gamma \), we deduce that

Then

Note that \(S=I-\sigma _{1}M-\sigma _{2}\lambda _{1}N-\sigma _{3}\lambda _{2}G^{*}G= \delta I+(1-\delta )V\), it follows that \(\lim_{n}\|Sw_{n}-w_{n}\|=0\).

Take a subsequence \(\{w_{n_{k}}\}\) of \(\{w_{n}\}\) such that \(\limsup_{n}\langle w_{n}-\bar{w},-\bar{w}\rangle =\lim_{k}\langle w_{n_{k}}- \bar{w},-\bar{w}\rangle \).

By virtue of the boundedness of \(\{w_{n}\}\), we may further assume, with no loss of generality, that \(w_{n_{k}}\) converges weakly to a point w̆. Since \(\|Rw_{n}-w_{n}\|\rightarrow 0\), using the demiclosedness principle, we know that \(\breve{w}\in \mathrm{Fix}(R)=\mathrm{Fix}(P_{\mathrm{Fix}(T)}S)=\Gamma \). Noticing that w̄ is the projection of the origin onto Γ, we get that

Finally, we compute

Since \(\limsup_{n}\langle w_{n}-\bar{w},-\bar{w}\rangle \leq 0, \|Sw_{n}-w_{n} \|\rightarrow 0\), we know that \(\limsup_{n}(\tau _{n}\|\bar{w}\|^{2}+2(1-\tau _{n})\langle Sw_{n}- \bar{w},-\bar{w}\rangle )\leq 0\). By Lemma 1.5, we conclude that \(\lim_{n}\|w_{n+1}-\bar{w}\|=0\). This completes the proof. □

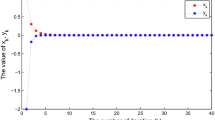

4 Numerical experiments

We provide a numerical example to illustrate the effectiveness of our algorithm. The program was written in Mathematica. All results are carried out on a personal DELL computer with Intel(R) Core(TM)i5-5200 CPU @ 2.20 GHz and RAM 4.00 GB.

In this algorithm, we take \(\mathrm{error} = 10^{-5}, 10^{-7}, 10^{-10}, 10^{-12}, 10^{-15}\), respectively. We consider the split feasibility problem (1.1) with \(H_{1}=\mathbb{R}, H_{2}=\mathbb{R}\), \(C=(-\infty,0]\), \(Q=(-\infty,0]\), \(D=(-\infty,0]\), \(\Theta =(-\infty,0]\). \(T_{1}x=x, T_{2}y=y\), \(A_{1}=B_{1}=A_{2}=1, B_{2}=-1\), \(\sigma _{1}=\sigma _{2}=\sigma _{3}=\frac{1}{3}\), \(\lambda _{1}=\frac{1}{\|A_{1}\|^{2}}, \lambda _{2}=1\). Take \(\tau _{n}=\frac{2}{3}\), an initial point \(x_{1} = -20, y_{1} = -10\). Obviously, \(x^{*}= 0, y^{*} = 0\) is a solution of this problem. In consideration of Algorithm 3.1, we have

As for iterative method (1.13) and (1.14), we take \(H_{1}=\mathbb{R}, H_{2}=\mathbb{R}\), \(C=(-\infty,0]\), \(Q=(-\infty,0]\), \(D=(-\infty,0]\), \(\Theta =(-\infty,0]\). \(T_{1}x=x, T_{2}y=y\), \(A_{1}=B_{1}=A_{2}=1, B_{2}=-1\), \(\lambda =\xi =\sigma =\zeta =\frac{1}{3}\). Take \(\tau =\frac{1}{8}\), an initial point \(x_{1} = -20, y_{1} = -10\).

In consideration of algorithms (1.13) and (1.14), we have

From Table 1, it is easy to see that our iterative method converges faster in less time.

5 Conclusions

The paper proposed a new iterative method to solve the split equality fixed point problem of firmly quasi-nonexpansive or nonexpansive operators and multiple-sets split feasibility problem and obtained a strong convergence result without any semi-compact assumption imposed on operators. The results improved and unified many recent results.

Availability of data and materials

All data generated or analysed during this study are included in this published article.

References

Attouch, H., Bolte, J., Redont, P., Soubeyran, A.: Alternating proximal algorithms for weakly coupled minimization problems. Applications to dynamical games and PDE’s. J. Convex Anal. 15, 485–506 (2008)

Aoyama, K., Kimura, Y., Takahashi, W., Toyoda, M.: Approximation of common fixed points of a countable family of nonexpansive mappings in a Banach space. Nonlinear Anal., Theory Methods Appl. 67(8), 2350–2360 (2007)

Byrne, C.: Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl. 18, 441–453 (2002)

Byrne, C.: A unified treatment of some iterative algorithms in signal processing and image reconstruction. Inverse Probl. 20, 103–120 (2004)

Censor, Y., Elfving, T., Kopf, N., Bortfeld, T.: The multiple-sets split feasibility problem and its applications for inverse problems. Inverse Probl. 21, 2071–2084 (2005)

Censor, Y., Elfving, T.: A multiprojection algorithm using Bregman projections in a product space. Numer. Algorithms. 8, 221–239 (1994)

Ceng, L.C., Yao, J.C.: Relaxed and hybrid viscosity methods for general system of variational inequalities with split feasibility problem constraint. Fixed Point Theory Appl. 2013, Article ID 43 (2013)

Ceng, L.C., Ansari, Q.H., Yao, J.C.: Mann type iterative methods for finding a common solution of split feasibility and fixed point problems. Positivity. 16, 471–495 (2012)

Ceng, L.C., Liou, Y.C., Wen, C.F.: Extragradient method for convex minimization problem. J. Inequal. Appl. 2014, 444 (2014)

Ceng, L.C., Pang, C.T., Wen, C.F.: Multi-step extragradient method with regularization for triple hierarchical variational inequalities with variational inclusion and split feasibility constraints. J. Inequal. Appl. 2014, 492 (2014)

Ceng, L.C., Xu, H.K., Wen, C.F.: Relaxed viscosity approximation methods with regularization for constrained minimizaBtion problems. J. Appl. Math. 2013, 131–137 (2013)

Censor, Y., Bortfel, D., Martin, B., Trofimov, A.: A unified approach for inversion problems in intensity-modulated radiation therapy. Phys. Med. Biol. 51, 2353–2365 (2006)

Geobel, K., Kirk, W.A.: Topics in Metric Fixed Point Theory. Cambridge Studies in Advanced Mathematics, vol. 28. Cambridge University Press, Cambridge (1990)

Geobel, K., Reich, S.: Uniform Convexity, Nonexpansive Mappings, and Hyperbolic Geometry. Dekker, New York (1984)

Guan, J.L.: A new iterative algorithm for the multiple-sets split feasibility problem and the split equality fixed point problem. Mediterr. J. Math. 18, 19 (2021)

Moudafi, A.: Alternating CQ-algorithm for convex feasibility and split fixed-point problems. J. Nonlinear Convex Anal. 15, 809–818 (2014)

Moudafi, A.: A relaxed alternating CQ-algorithm for convex feasibility problems. Nonlinear Anal. 79, 117–121 (2013)

Palta, R.J., Mackie, T.R.: Intensity-Modulated Radiation Therapy: The State of Art (Medical Physics Monograph 29). Medical Physics Publishing, Madison (2003)

Shi, L.Y., Chen, R.D., Wu, Y.J.: Strong convergence of iterative algorithms for split equality problem. J. Inequal. Appl. 2014, Article ID 478 (2014)

Suzuki, T.: Strong convergence theorems for infinite families of nonexpansive mappings in general Banach space. Fixed Point Theory Appl. 1, 103–123 (2005)

Xu, H.K.: Iterative methods for the split feasibility problem in infinite-dimensional Hilbert spaces. Inverse Probl. 26, 105018 (2010)

Acknowledgements

The authors would like to express their sincere thanks to the editors and reviewers for their noteworthy comments, suggestions, and ideas, which helped to improve this paper.

Funding

This research was supported by NSFC Grants No: 11301379; No: 11226125; No: 11671167.

Author information

Authors and Affiliations

Contributions

The main idea of this paper was proposed by TX, and LS prepared the manuscript initially and performed all the steps of the proofs in this research. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xu, T., Shi, L. Multiple-sets split feasibility problem and split equality fixed point problem for firmly quasi-nonexpansive or nonexpansive mappings. J Inequal Appl 2021, 120 (2021). https://doi.org/10.1186/s13660-021-02656-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s13660-021-02656-1