Abstract

Background

Although there are many proven effective physical activity (PA) interventions for older adults, implementation in a real world setting is often limited. This study describes the systematic development of a multifaceted implementation intervention targeting the implementation of an evidence-based computer-tailored PA intervention and evaluates its use and feasibility.

Methods

The implementation intervention was developed following the Intervention Mapping (IM) protocol, supplemented with insights from implementation science literature. The implementation intervention targets municipal healthcare policy advisors, who are important implementation stakeholders in the Dutch healthcare system. The feasibility of the implementation intervention was studied among these stakeholders using a pretest–posttest design within 8 municipal healthcare settings. Quantitative questionnaires were used to assess task performance (i.e. achievement of performance objectives), and utilization of implementation strategies (as part of the intervention). Furthermore, changes in implementation determinants were studied by gathering quantitative data before, during and after applying the implementation intervention within a one-year period. Additionally, semi-structured interviews with stakeholders assessed their considerations regarding the feasibility of the implementation intervention.

Results

A multi-faceted implementation intervention was developed in which implementation strategies (e.g. funding, educational materials, meetings, building a coalition) were selected to target the most relevant identified implementation determinants. Most implementation strategies were used as intended. Execution of performance objectives for adoption and implementation was relatively high (75–100%). Maintenance objectives were executed to a lesser degree (13–63%). No positive changes in implementation determinants were found. None of the stakeholders decided to continue implementation of the PA intervention further, mainly due to the unforeseen amount of labour and the disappointing reach of end-users.

Conclusion

The current study highlights the importance of a thorough feasibility study in addition to the use of IM. Although feasibility results may have demonstrated that stakeholders broadly accepted the implementation intervention, implementation determinants did not change favorably, and stakeholders had no plans to continue the PA intervention. Yet, choices made during the development of the implementation intervention (i.e. the operationalization of Implementation Mapping) might not have been optimal. The current study describes important lessons learned when developing an implementation intervention, and provides recommendations for developers of future implementation interventions.

Introduction

As societies are rapidly ageing worldwide, healthcare services face vast challenges that will increase in the years to come [1]. A large body of evidence has demonstrated the effectiveness of physical activity (PA) interventions to stimulate the health of older adults, and thereby to lower the impact of the ageing population on healthcare utilisation [2,3,4,5,6,7]. This evidence mostly comes from controlled trial settings. As the ultimate impact of these interventions not only depends on their effectiveness but also on their actual reach and use in practice, implementation studies are important. Several reports have noted a substantial gap between scientific knowledge and public health practice with regard to implementing PA interventions [8,9,10,11,12]. Furthermore, implementation studies so far mainly focused on the level of the individual end-user (i.e. target population of the intervention), whereas studies on implementation requiring an organizational- or system-level adoption are sparse [13]. However, when implementing PA interventions, important stakeholders (i.e. intermediary organizations or implementation actors) are often needed. These stakeholders are the vital link between the intervention developer and the actual end-user, and influence the exposure of the intervention to the target population. Those stakeholders therefore have a crucial role in the implementation process [14], and stimulating the organizational adoption of PA interventions by engaging those stakeholders is thus highly needed.

In the Netherlands, municipalities are responsible for promoting the health and wellbeing of their inhabitants, and they receive yearly grants from the government for this purpose. Municipalities were therefore identified as important stakeholders and key intermediaries for implementing preventive health interventions [15]. In each municipality, policy advisors are responsible for putting preventive health policies into action. These healthcare policy advisors are therefore considered to be the most relevant stakeholders when implementing PA interventions to promote the health of older adults in the Netherlands, and are considered the ‘agents of implementation’ in this project.

To increase the public impact of PA interventions, we systematically developed and evaluated an implementation intervention (targeting the municipal healthcare policy advisors) to implement the evidence-based Active Plus PA intervention. Active Plus is a computer-tailored, theory-driven and evidence-based eHealth intervention, designed to stimulate or maintain PA levels among adults aged over fifty by targeting psycho-social determinants like awareness, motivation, self-efficacy and coping planning [16,17,18]. The intervention can be provided in a Web- or print-based format, and optionally includes information about existing local PA opportunities [17,18,19]. Participants receive automated computer-tailored advice at three time points within a four month period [16, 17]. The intervention showed significant effects on PA, decreased incidence numbers for PA-related diseases, and has been included in national databases for proven effective interventions [20,21,22,23].

To adequately implement evidence-based interventions, a systematic process is needed to develop an effective implementation intervention that considers determinants, mechanisms, and strategies for effecting change [24]. Using a systematic approach following the Intervention Mapping protocol [25] combined with literature and theory on implementation (i.e. Rogers’ Theory of Innovations [26], the framework of determinants of innovation processes described by Paulussen et al. [27], and the Consolidated Framework for Implementation Research (CFIR [28])), we built upon previously identified potentially relevant stakeholders (i.e. the municipal healthcare policy advisor) and implementation determinants for Active Plus [15] to develop an implementation intervention. The current study aims to describe the systematic development and feasibility study of a multifaceted implementation intervention to guide healthcare policy advisors in the implementation of the Active Plus intervention within the municipality. Reflecting on the choices made during the development of the implementation intervention, the current study describes important lessons learned and provides recommendations for future implementation intervention developers.

Methods

Study design

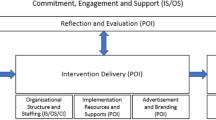

A multifaceted implementation intervention was developed according to the principles of the Intervention Mapping protocol [25], supplemented with relevant insights from the implementation science literature available at the time [26,27,28,29]. Subsequently, a pretest–posttest feasibility study was performed within 8 municipal healthcare settings. As recommended by Fernandez et al. (2019), the products of the five tasks of Implementation Mapping are presented in a logic model (see the Results section) that illustrates how the strategies are expected to affect implementation outcomes. The design of the current feasibility study is in line with this model and evaluates all levels of the logic model: (1) the use of the implementation strategies, (2) changes in implementation determinants, (3) achievement of performance objectives, and (4) the implementation output.

Implementation process questionnaires were used to assess both the use of the implementation strategies (i.e. level 1 of the logic model) as well as the achievement of performance objectives (i.e. level 3 of the logic model). Those assessments took place at 2 months after the start of the implementation intervention (after the first educational meeting and implementation activities), at 4 months (after the second educational meeting and implementation activities), and at 8–11 months (after the final educational meeting and implementation activities) (see Timeline in Fig. 1). In addition to those questionnaires, semi-structured telephone interviews were performed with stakeholders sharing their views and experiences regarding the feasibility of the implementation intervention.

Quantitative data on implementation determinants (i.e. level 2 of the logic model) was gathered before (T0), during (T1, after 2 months) and after applying the implementation intervention (T2, after 8 to 11 months) (see timeline in Fig. 1). Questionnaires were filled in by the local municipal healthcare policy advisor who was identified as the implementation actor. At baseline, data on implementation determinants was also gathered among healthcare policy advisors not receiving the implementation intervention nor implementing the Active Plus intervention.

Furthermore, implementation output (level 4 of the logic model) was assessed as the adoption rate (i.e. number of municipalities willing to adopt the intervention divided by the number invited to adopt the intervention), the reach among end-users (i.e. number of people using the intervention divided by the number invited to use the intervention), intervention continuation (attrition) among end-users (i.e. number of people dropping out divided by the number that adopted the intervention) and implementation continuation among the healthcare policy advisors (i.e. number deciding to continue implementation divided by the number that started the implementation). Data was collected from August 2017 to October 2018. Effects of implementation on healthcare use were not a subject of the current study, as intervention effects on PA and health have been investigated in previous studies [20, 23, 30].
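The four output measures defined above are simple proportions. As a minimal sketch, assuming hypothetical field names and illustrative counts (the 33 invited municipalities and 8 participating settings are taken from the text, under the assumption that all 8 settings were among the 33 invited; the end-user counts are placeholders and not study results), they could be computed as follows:

```python
from dataclasses import dataclass


def proportion(numerator: int, denominator: int) -> float:
    """Return numerator / denominator; a non-positive denominator is an error."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return numerator / denominator


@dataclass
class OutputCounts:
    # All field names are hypothetical labels for the counts named in the text.
    municipalities_invited: int
    municipalities_adopting: int
    inhabitants_invited: int
    inhabitants_participating: int
    participants_dropped_out: int
    advisors_started: int
    advisors_continuing: int

    def adoption_rate(self) -> float:
        return proportion(self.municipalities_adopting, self.municipalities_invited)

    def reach(self) -> float:
        return proportion(self.inhabitants_participating, self.inhabitants_invited)

    def attrition(self) -> float:
        # Dropouts divided by the number that started using the intervention.
        return proportion(self.participants_dropped_out, self.inhabitants_participating)

    def implementation_continuation(self) -> float:
        return proportion(self.advisors_continuing, self.advisors_started)


if __name__ == "__main__":
    counts = OutputCounts(
        municipalities_invited=33,      # from the text
        municipalities_adopting=8,      # assumption: the 8 participating settings
        inhabitants_invited=1000,       # placeholder for one municipality
        inhabitants_participating=120,  # placeholder
        participants_dropped_out=30,    # placeholder
        advisors_started=8,             # assumption: one advisor per setting
        advisors_continuing=0,          # placeholder
    )
    for name in ("adoption_rate", "reach", "attrition", "implementation_continuation"):
        print(f"{name}: {getattr(counts, name)():.1%}")
```

Keeping numerators and denominators as explicit counts, rather than storing precomputed percentages, makes it easy to check which group each measure refers to.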

Study Population and procedure

For the current study, the healthcare policy advisor of each municipality (N = 33) in Limburg (i.e. a Dutch region with a large increase in the proportion of older adults) received an email inviting them to implement Active Plus. This invitation was accompanied by the first questionnaire and an information leaflet about Active Plus. All invitations were directed to the healthcare policy advisors. Since the organization of policy advisors can differ between municipalities, some healthcare policy advisors appointed a colleague working on an overlapping policy topic, such as sports or older adults, to participate in this project. Therefore, the implementation intervention is not aimed exclusively at the healthcare policy advisor, but at the policy advisor who best meets the performance objectives as specified in the next sections.

Within each participating municipality, the policy advisor was allowed to invite a maximum of 1,000 inhabitants aged over 65 to participate in the Active Plus intervention, whereby the policy advisor could apply more specific selection criteria for participants (e.g. the policy advisor was allowed to include only participants aged over 75 years if this was more compatible with the policy of the municipality). This age group was chosen because the number of people who are sufficiently physically active decreases significantly from this age onwards in the Netherlands [31]. This study was approved by the Research Ethics Committee of the Open University of the Netherlands (reference number U2016/0237373/HVM). The Active Plus intervention itself was registered at the Dutch Trial Register (NTR2297). All participants gave their informed consent before participation.

Development of the implementation intervention

The implementation intervention was developed according to the principles of the Intervention Mapping protocol, supplemented with relevant insights from the implementation science literature available at the time. This proved to be largely compatible with the later introduced Implementation Mapping, whose terminology we will use below [24].

The first task of Implementation Mapping (IM), i.e. conducting a needs assessment and identifying intervention adopters and implementers, was already performed in a previous study [15], in which municipalities were identified as one of the optimal organizations to implement Active Plus. Regional Health Counselors referred to the healthcare policy advisor within the municipality as the most important implementation agent.

Regarding IM-task 2, implementation determinants were also identified in a previous study [15], in which the potential implementers filled in questionnaires about implementation determinants, based on Rogers’ Theory of Innovations [26] and the framework of determinants of innovation processes described by Paulussen et al. [27]. For the development of the current implementation intervention, the identified determinants were classified into three main domains of the Consolidated Framework for Implementation Research (CFIR [28]). In the CFIR domain Intervention characteristics, the determinants relative advantage of the intervention, outcome expectancy, and complexity were identified [15]. In the CFIR domain Inner setting, the determinants perceived task responsibility, compatibility, available resources, self-efficacy and relative priority were identified. Within the CFIR domain Outer setting, the determinants subjective norm and social support were identified. These determinants form the basis for the selection of implementation strategies.

Furthermore, within IM-task 2, performance objectives for implementers (i.e. the municipal policy advisors) were specified by the intervention owners (i.e. the research team of the Open University who developed the implementation intervention). Performance objectives are essentially the tasks required to adopt, implement, or maintain a program. Three researchers (JB, BB and DP) discussed which sub-behaviours had to be performed in order to adequately implement the Active Plus intervention in practice. These performance objectives were based on previous experience with evaluations of intervention implementation [15, 32, 33] and, where needed, on practical limitations (e.g. there was budget to finance participation of 1,000 inhabitants per municipality).

Within IM-tasks 3 and 4, the research team selected implementation strategies to target the identified determinants. Although guidance on how to select implementation strategies is now available (for example, in the CFIR-ERIC matching tool [34]), literature on effective implementation strategies was limited at the time the strategies for the current project were selected. However, the research team could draw on its broad experience with behavior change by selecting behavior change techniques (BCTs, as already applied in the development of the Active Plus intervention itself [16,17,18]), as described, for example, within the Intervention Mapping protocol [25]. BCTs were selected from the tables within the Intervention Mapping protocol matching the previously described implementation determinants, and these BCTs were combined and translated into one of the discrete implementation strategies from the compilation of Powell et al. (2015). For example, ‘Consciousness raising’ was selected as a method to increase awareness regarding the perceived advantages of the intervention, and was integrated in the development of the educational materials and the educational meetings. ‘Arguments’ (also integrated in the development of the educational materials and the educational meetings) and ‘direct experience’ were selected as methods to increase positive outcome expectations. The ‘direct experience’ was incorporated in the implementation strategy ‘Audit and provide feedback’. ‘Mobilizing social support’ was selected as a method to stimulate social support while implementing the intervention, and was integrated in the implementation strategies ‘Build a coalition’ and ‘Conduct educational meetings’. Implementation strategies are specified in the Results section of the manuscript, following the guidelines for reporting by Proctor et al. [35].
The intervention owner and the municipal policy advisors were specified as the actors of the implementation strategies. No specific criteria regarding expertise were specified for the included actors, other than working as a policy advisor in the municipality in a field related to health, older adults, physical activity and/or prevention. IM-task 4 requires planners to create design documents, draft content, pretest and refine content, and produce final materials. Materials developed in the current project are considered draft content that can be refined based on the results of the current feasibility study.

IM-task 5 concerns the evaluation of the implementation using a combination of questionnaires, semi-structured telephone interviews, research notes and registration data. To evaluate the use of the implementation intervention and its effect on implementation determinants and implementation output, a feasibility study was performed as described below.

Measurement instrument

Municipal policy advisors received two types of questionnaires: (a) three questionnaires aiming to test the changes in implementation determinants after using the implementation intervention, and (b) three questionnaires assessing the feasibility of the implementation intervention (i.e. achievement of the performance objectives and utilization of implementation strategies).

Implementation determinants questionnaire

This questionnaire (see Appendix 1, in Dutch) was developed based on Rogers’ Theory of Innovations [26], the CFIR [28], the implementation questionnaire used by Bessems et al. [36], and in-depth interviews in a previous study [15]. All determinants from IM-task 2 relevant for a specific time point were assessed with several items per construct (Table 1). Questionnaires were filled out at baseline (T0; the adoption phase), after 2 months (T1; within two weeks after the first implementation strategies had been performed) and after 8–11 months (T2; when Active Plus was completed and data on reach of the intervention and effects on PA were available). Policy advisors of municipal healthcare settings not implementing the intervention were only requested to fill in the baseline questionnaire assessing the adoption determinants.

Feasibility questionnaire

This questionnaire (see Appendix 2, in Dutch) evaluated the execution of the performance objectives and the use of implementation strategies as part of the implementation intervention. Policy advisors were requested to state whether they had performed the prescribed performance objectives by answering dichotomous statements (‘Yes’ vs. ‘No’). Additionally, open-ended questions were asked to state (concisely) the main reason for not performing a certain task or for making a certain decision. These questionnaires were sent after 2 months (the T0 measurement for this process; i.e. within two weeks after the first implementation strategies should have been performed), after 4 months (T1; i.e. within two weeks after the second stage of implementation strategies should have been performed) and after 8–11 months (T2; i.e. when Active Plus was finished).

Semi-structured telephone interview and research notes

Semi-structured telephone interviews were performed with policy advisors to evaluate their considerations on how they performed the performance objectives and implementation strategies. When a task was not performed, they were asked to elaborate on the reason(s) and whether the task was scheduled for a later moment (see Appendix 3, in Dutch). If the policy advisors had not yet filled in the implementation process questionnaire before the deadline, the questions from this questionnaire were asked within the interview as well. These qualitative data were supplemented with data acquired during meetings within the implementation process. As the qualitative data were collected to enhance interpretation of the quantitative data but not for the purpose of an exhaustive qualitative analysis, they were not recorded or analyzed as such.

Analyses

Questionnaire data were analyzed using SPSS version 24. For the feasibility study, the dichotomously scored performance objectives were described for each municipal healthcare setting over time and as a percentage of all planned performance objectives. Execution of each performance objective over all municipal healthcare settings was expressed as a percentage. Qualitative data (i.e. notes taken during telephone interviews) were matched with the relevant performance objectives, implementation strategies and determinants by a researcher who was not the interviewer.
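The two percentages described above (per setting and per performance objective) can be illustrated with a short sketch. The analyses in this study were run in SPSS; the Python below only illustrates the arithmetic, and the setting labels, number of objectives and Yes/No scores are hypothetical placeholders rather than study data.

```python
from typing import Dict, List, Sequence


def percent_executed(flags: Sequence[bool]) -> float:
    """Percentage of dichotomous (Yes/No) scores that are 'Yes'."""
    return 100.0 * sum(flags) / len(flags)


def per_setting(scores: Dict[str, List[bool]]) -> Dict[str, float]:
    """Execution score per municipal healthcare setting (rows of the table)."""
    return {setting: percent_executed(flags) for setting, flags in scores.items()}


def per_objective(scores: Dict[str, List[bool]]) -> List[float]:
    """Execution score per performance objective (columns of the table)."""
    return [percent_executed(column) for column in zip(*scores.values())]


if __name__ == "__main__":
    # Hypothetical example: 3 settings scored on 4 performance objectives.
    scores = {
        "setting_A": [True, True, True, False],
        "setting_B": [True, True, False, False],
        "setting_C": [True, True, True, True],
    }
    print(per_setting(scores))
    print([round(p, 1) for p in per_objective(scores)])
```

Reading the same Yes/No table by row gives the execution score per setting, and reading it by column gives the execution score per objective.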

Univariate one-way analyses of variance (ANOVA) were used to examine differences in baseline scores on implementation determinants between municipal healthcare policy advisors who implemented the intervention and those who did not. Friedman non-parametric tests assessed changes in implementation determinant scores over time. Furthermore, the adoption rate of municipalities was assessed by dividing the number of implementers by the number of municipalities invited to implement the intervention. The reach of the end-user was calculated by dividing the number of Active Plus participants per municipality by the number of inhabitants that were invited to participate per municipality. Attrition was calculated by dividing the number of participants that completed the second Active Plus questionnaire by the number of participants that completed the baseline questionnaire.
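For readers unfamiliar with the Friedman test, the following sketch shows how its statistic is obtained from repeated scores at three time points. It is an illustration only and not the SPSS procedure used in this study: it omits the correction for ties that statistical packages commonly apply, it uses the chi-square approximation (which is rough for small samples), and the determinant scores shown are hypothetical.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values ascending (1 = smallest); tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1
        for j in range(pos, end + 1):
            ranks[order[j]] = mean_rank
        pos = end + 1
    return ranks


def friedman_statistic(rows: Sequence[Sequence[float]]) -> float:
    """Friedman chi-square statistic (no tie correction).

    Each row holds one respondent's scores at the k time points.
    """
    n, k = len(rows), len(rows[0])
    rank_sums = [0.0] * k
    for row in rows:
        for j, rank in enumerate(average_ranks(row)):
            rank_sums[j] += rank
    return 12.0 / (n * k * (k + 1)) * sum(s * s for s in rank_sums) - 3.0 * n * (k + 1)


def p_value_three_time_points(statistic: float) -> float:
    """Chi-square upper tail for 2 degrees of freedom (k = 3): exp(-x / 2)."""
    return math.exp(-statistic / 2.0)


if __name__ == "__main__":
    # Hypothetical scores (T0, T1, T2) for four policy advisors.
    scores = [
        [3.4, 3.9, 3.1],
        [3.6, 4.0, 3.0],
        [3.2, 3.8, 3.3],
        [3.5, 3.7, 2.9],
    ]
    q = friedman_statistic(scores)
    print(f"Q = {q:.2f}, approximate p = {p_value_three_time_points(q):.3f}")
```

Because the test works on within-respondent ranks rather than raw scores, it does not assume normally distributed determinant scores, which suits a small sample of policy advisors.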

Results

Within this section, firstly the results of applying the Intervention Mapping protocol are presented, resulting in a multifaceted implementation intervention, followed by the results of the feasibility study.

A multifaceted implementation intervention

Building upon the results of a previous study (i.e. identification of relevant adopters and implementers and identification of implementation determinants), IM-task 2 resulted in the formulation of relevant performance objectives to be achieved during intervention implementation. An overview of these performance objectives is presented in Table 2.

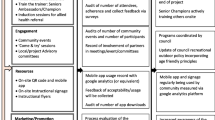

As a result of IM-tasks 3 and 4, Table 3 provides an overview of the implementation strategies selected, following the guidelines for reporting by Proctor et al. [35].

The results of following all tasks of IM are summarized in a comprehensive logic model (see Fig. 2), illustrating how the selected implementation strategies (visualized at the left part of the logic model) can influence the determinants of implementation behaviors, and consequently the performance objectives for adoption, implementation, and maintenance, which in turn influence implementation outcomes.

Results of the feasibility study

In line with the different levels of the logic model, the feasibility study provided insight in the use of the implementation strategies, achievement of performance objectives, changes in implementation determinants and implementation output.

Use of implementation strategies

The use of the implementation strategies is discussed below, in order of appearance in Table 3.

Access new funding: All policy advisors employed the funding that was made available to invite 1000 participants. Within two municipal healthcare settings both the Web- and print-based format was implemented: as the additional labour, material and postage costs were not covered by the funding, they arranged additional funding within their own municipal healthcare settings.

Develop and distribute educational materials: The intervention owner developed and distributed the materials as stated in Table 3. The interviews showed that within municipal healthcare settings Active Plus was actively promoted by announcements in local newspapers or on social media. One policy advisor organized a kick-off meeting for the target population; other policy advisors found this impossible to organize because of time constraints, and one policy advisor decided against such a meeting out of fear that older adults who were not invited for the intervention would feel left out. Stakeholders were provided with leaflets and recruitment letters that they could provide to the target population. About one third of the policy advisors did not distribute the recruitment letters among the target population at T0 because of time constraints. At T1, all policy advisors but two expressed that they did not perform any additional recruitment activities after sending the recruitment letter; time constraints were mentioned as the main cause.

Develop and distribute an implementation manual: The manual was developed and distributed among the policy advisors by the intervention developer. Additionally, a step-by-step checklist was provided, and during the educational meeting the essential implementation strategies were discussed. At T0, five policy advisors expressed that they used either the manual, the checklist or both. At T1, all policy advisors expressed that they no longer used the manual but found enough guidance in either the meetings or the checklist.

Build a coalition: At T0, all policy advisors reported that they approached or intended to approach their regular contacts among stakeholders; no policy advisor tried or intended to establish new collaborations. At T1, the majority of policy advisors declared that they performed this task mostly as planned. If not, it was due to time constraints. All policy advisors expressed that they were content with the coalition that was formed. In the implementation manual, the Regional Health Service (RHS) and the Senior Citizen Organisations (SCO) were explicitly mentioned as potential coalition partners. Policy advisors had divergent ideas on the usefulness of the RHS: half found the RHS of no additional value and therefore did not contact them, while the other half approached them actively for advice on what target population to choose. The majority of policy advisors expressed that SCOs have other goals than being included in an intervention implementation effort and therefore did not contact them.

Conduct educational meetings: These were organized by the intervention owner at T0, T1 and T2, at which respectively seven, six and six policy advisors were present. The meetings lasted about three hours, and representatives of the RHS and SCO were present at all meetings.

Capturing and sharing local knowledge: All policy advisors actively participated in the interactive educational meetings. During the interviews the policy advisors declared that they did not organise any collaboration sessions with the partners in their coalition: stakeholders were contacted individually. Again, time constraints were mentioned as a reason.

Centralize technical assistance: The intervention owner arranged technical assistance for each municipal healthcare setting which was available both by telephone and email. Policy advisors also arranged this for the participants and three also arranged personal assistance to fill in the questionnaires.

Promote adaptability: All policy advisors added their logo to their homepage, along with information on local PA opportunities such as hiking clubs or opening hours of swimming pools. Furthermore, six policy advisors adapted the recruitment letter to optimize it to the needs of older adults in their municipal healthcare setting.

Audit and provide feedback: During the course of the intervention, the policy advisor could access real-time data via their personal website on reach, demographic features of the participants and their PA behaviour. These data were also presented by the intervention owner during the final educational meeting.

Achievement of performance objectives

Overall, execution of the performance objectives for adoption and implementation was relatively high, ranging from 75 to 100% execution score per municipal healthcare setting (see Table 4). The two performance objectives for maintenance were executed to a lesser degree (12.5% and 62.5%). Regarding the decision to adopt Active Plus, within the first two months five policy advisors did not know yet whether they would choose to implement the online or the printed version. The decision on which 1,000 inhabitants to invite was made within the first two months by all policy advisors. In the interviews, all policy advisors stated that they balanced between inviting a target population that could benefit most from the intervention (e.g. low socioeconomic status neighbourhoods, or inhabitants with sufficient digital skills for an online intervention) and a target population that was pragmatic to invite (e.g. the oldest 1,000 inhabitants).

Six policy advisors indicated that they expected higher participation rates and effectiveness by offering Active Plus both web- and print-based; four decided to offer web-based only due to either difficulties in complying with the then newly implemented privacy protection regulations (i.e. the General Data Protection Regulation (GDPR)) (N = 2), time investment and printing costs (N = 3) or because the selected inhabitants were deemed to be sufficiently e-literate for a web-based version (N = 1). During the telephone interviews, a large majority of policy advisors expressed that if there were no constraints regarding the number of invitations, different choices would have been made: most policy advisors would then prefer either to invite a larger group or to additionally offer a printed delivery mode.

Five policy advisors requested information from the RHS about integration with other initiatives, but only after inviting the end-users. The other implementation performance objectives were executed by all policy advisors.

Overall, five policy advisors contacted the RHS about integration with local policies, and one municipality made a plan about continuation. Four policy advisors reported that they indeed considered continuation of Active Plus a task for municipal healthcare settings, because it fits their responsibility to care, be close to inhabitants and bring stakeholders together. The two policy advisors that did not feel the responsibility to continue Active Plus stated that implementation involved too much practical work that should be outsourced or would come with additional financial burden.

Changes in determinants of implementation

At baseline, mean scores on implementation determinants (scaled from 1–5) varied from 2.82 to 4.14 among non-implementing municipal healthcare settings and from 3.19 to 4.50 among implementing municipal healthcare settings (see Table 5). Highest scores were seen in relative priority, among both non-implementers and implementers. The score on perceived relative advantage was lower among non-implementers compared to implementers (2.82 versus 3.41; p = 0.083). The perceived compatibility was significantly higher among implementers compared to non-implementers (3.79 versus 3.29; p = 0.040).

Over time, several changes in implementation determinant scores were identified among the policy advisors who implemented the intervention. These changes were supported by the findings from interviews with the policy advisors, which are reported below per cluster of implementation determinants.

Perceived intervention characteristics

A decrease was observed in the perceived relative advantage of the intervention, starting with a score of 3.41 at baseline and ending with a score of 2.72 (p = 0.022). Around half of the policy advisors expressed that the online delivery mode of the intervention, and specifically the few administrative tasks associated with it, was considered a relative advantage compared to other interventions. However, policy advisors found the other implementation strategies, such as getting stakeholders involved, more time-consuming than expected, which is in line with the observed decrease in perceived relative advantage. Outcome expectancies significantly varied over time with an average score of 3.58 at baseline, 4.01 at interim and 3.32 at the end of the period (p = 0.050). In the interviews, five policy advisors expressed from baseline onward that the intervention would only be sustained after the research period if outcomes were satisfactory. Regarding outcome expectancy, policy advisors mainly referred to the number of participants and the attrition over time, and much less so to the effectiveness of the intervention. Six of the policy advisors were dissatisfied with both participation and attrition, which is in line with the decrease in outcome expectancy.

Inner setting

Self-efficacy significantly varied over time with an average score of 3.42 at baseline, 3.86 at interim and 3.09 at the end of the period (p = 0.006). Half of the policy advisors stated that they used the implementation intervention only at the start of the intervention and expressed that it gave them the guidance needed at that point in time. Later on, six policy advisors stated that they did not use the implementation intervention anymore. In combination with the extra labour that was needed to reach sufficient end-users and collaborating stakeholders, implementation was found more challenging, possibly accounting for the decrease in self-efficacy.

Outer setting

No significant changes were observed in outer setting determinants.

Intention to implement within the next year

The intention varied significantly over time with an average score of 3.40 at baseline, 3.57 at interim and 2.30 at the end of the period (p = 0.023). In the interviews, all policy advisors stated that the participation rate of older adults in the intervention was the main reason for their lack of intention to continue the implementation. Budgetary reasons were also frequently mentioned. Three policy advisors stated that the decision to implement would also depend on policies being developed in the upcoming years.
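The pattern across the three determinants reported with baseline, interim and end scores (outcome expectancies, self-efficacy and intention to implement) can be made explicit with simple differences. The sketch below only re-uses the mean scores stated in the text; the variable and function names are illustrative, and it does not reproduce the significance tests reported above.

```python
# Mean scores (scale 1-5) at baseline, interim and end, as reported in the text.
reported_means = {
    "outcome expectancies": (3.58, 4.01, 3.32),
    "self-efficacy": (3.42, 3.86, 3.09),
    "intention to implement": (3.40, 3.57, 2.30),
}


def score_changes(baseline, interim, end):
    """Return the differences baseline->interim, interim->end and baseline->end."""
    return (
        round(interim - baseline, 2),
        round(end - interim, 2),
        round(end - baseline, 2),
    )


changes = {name: score_changes(*means) for name, means in reported_means.items()}

for name, (early, late, net) in changes.items():
    print(f"{name}: {early:+.2f} to interim, {late:+.2f} to end, {net:+.2f} overall")
```

For each determinant the first difference is positive and the other two are negative, which matches the described initial increase followed by a decrease to below the baseline score.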

Implementation output

The implementation output was reflected in an adoption rate of 24%; 8 of the 33 invited municipal healthcare settings were willing to implement Active Plus. Among the end-users, a reach of 8% (range 4 to 11%) was achieved, i.e. 624 older adults participated in Active Plus. The second questionnaire of the intervention was completed by 124 end-users, implying an attrition of 80.1% in three months.
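The adoption and attrition percentages follow directly from the counts given above. A minimal sketch of that arithmetic, with illustrative variable names (the number of invited older adults is not stated in the text, so the reach is not recomputed here):

```python
def percentage(part, whole):
    """Express part as a percentage of whole."""
    return 100.0 * part / whole


invited_settings = 33        # municipal healthcare settings invited
adopting_settings = 8        # settings willing to implement Active Plus
participants = 624           # older adults who participated
second_questionnaire = 124   # end-users who completed the second questionnaire

adoption_rate = percentage(adopting_settings, invited_settings)
attrition = percentage(participants - second_questionnaire, participants)

print(f"adoption rate: {adoption_rate:.0f}%")
print(f"attrition: {attrition:.1f}%")
```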

None of the municipal policy advisors decided to continue implementation of the PA intervention. In the telephone interviews at T0, all but one policy advisor stated that they intended to continue implementation if results were satisfactory and if sufficient budget was available. Regarding the budget, policy advisors also mentioned that obtaining sufficient budget could be problematic as financial means were already allocated for the next year. At T2, 75% of the policy advisors mentioned the disappointing participation rate of older adults as the main reason to discontinue implementation; only 25% mentioned financial limitations as a reason.

Discussion

The current study describes a systematically developed multifaceted implementation intervention to support implementation of the evidence-based Active Plus intervention and the results of its feasibility test.

Following the principles of the Intervention Mapping protocol, supplemented with relevant insights from the implementation science literature available at the time, a multi-faceted implementation intervention was developed in which implementation strategies (e.g. funding, educational materials, meetings, building a coalition) were selected to target the most relevant identified implementation determinants. Results of the feasibility study showed that most implementation strategies were performed adequately and that execution of the performance objectives for adoption and implementation was relatively high. This may demonstrate that implementers broadly accepted the presented strategies and performance objectives and recognized them as being useful. Despite this, no positive changes in implementation determinants were observed over time: remarkably, a decrease was observed in the perceived relative advantage of the intervention and, after an initial increase, scores on outcome expectancies, self-efficacy and intention to implement the intervention decreased. Eventually, none of the implementers decided to continue intervention implementation. As the most important reason not to continue the implementation, implementers declared that the unforeseen amount of labour required to promote the intervention among stakeholders and end-users (due to the disappointing reach and attrition) made the implementation more time-consuming than expected.

In addition to the above, the fact that no positive changes in implementation determinants were found may have several explanations. First of all, it might be explained by the depth in which we assessed determinants of implementation in our previous study, which forms the basis for the developed implementation intervention. Secondly, the selection of implementation strategies used in the current study might not have been optimal. These two explanations will be elaborated on below.

With regard to implementation determinants, the study identifying these determinants [15] targeted only one person within each municipal healthcare setting, which might have resulted in a lack of insight into potential barriers and facilitators of implementation perceived by other relevant implementation actors. As stated by Fernandez et al. [24] in their Implementation Mapping protocol, which was published after the design of our implementation intervention, implementation outcomes and performance objectives should be stated specifically for each adopter and implementer. If adoption and implementation involve multiple actors such as administrators, policy advisors and policy makers, each may have their own performance objectives. In line with this recommendation of Fernandez et al., it can be recommended to gain insight into the determinants perceived by all these different adopters and implementers as well, and not only by one stakeholder per municipal healthcare setting. In addition, more in-depth insight into some implementation determinants might be useful. For example, whereas outcome expectancy and visibility of the intervention effects were previously [15] identified as important implementation determinants, the current study showed that the reach and attrition of participants seemed a more important outcome measure than the effectiveness of the intervention on PA for the decision to discontinue the implementation of Active Plus. It would therefore be advisable to explicitly ask the implementing organisations at which outcomes they would consider the intervention to be successful.

Insight into determinants related to the CFIR domain ‘implementation process’ was lacking in our previous study [15], as that study focused on municipalities that had not yet implemented the intervention. The current study showed that within the implementation process, hindering aspects mainly related to the CFIR construct ‘engaging’ (e.g. the difficulties perceived in getting other external stakeholders involved in the implementation, and in recruiting intervention participants) and the construct ‘planning’ (e.g. the mentioned time constraints which affected timelines of task completion). Identifying determinants associated with the implementation process can be challenging among potential implementation actors who have no prior experience with the intervention. This underscores the significance of conducting a feasibility study during the development of an implementation intervention, as it has the potential to yield fresh insights into the determinants linked to the implementation process itself.

Determinants related to the ‘outer setting’ domain (i.e. contextual influences like policy and local agenda setting) can change over time. The identification of determinants and the actual implementation of the intervention were three years apart, so determinants related to the outer setting might have changed, as well as the prioritization of the determinants, which might both have consequences for the adequacy of the selected implementation strategies. Changes in context or policy might influence the role that municipalities play in intervention implementation. This was supported by our qualitative data: three municipalities expressed that changes in policy influence if and which interventions to stimulate PA would be implemented. Anticipating changes in context and policy (and thus regularly assessing the related determinants) is highly recommended.

The second explanation for why no positive changes in implementation determinants were found might lie in not selecting the optimal implementation strategies. As there is still no consensus in the literature on how to best select implementation strategies, the current implementation intervention was based on a pragmatic selection of the most suitable strategies targeting the identified implementation determinants. A useful tool to make a first selection of implementation strategies is the ‘CFIR-ERIC strategy matching tool’. However, this tool was not yet available during the development of the implementation intervention described in the current study. The CFIR-ERIC matching tool can help to select strategies to address barriers that were identified using the CFIR. This tool is based on the work of Powell et al. [29] and the work of Waltz et al. [34]. The selection of strategies could further have been optimized by including stakeholders in the selection and development of these implementation strategies as well (i.e. user-centered development). Previous studies have shown that a user-centered design also results in better program sustainability [10, 37, 38]. This user-centered development should involve not only the implementing organizations, but also the target population (i.e. the end-users). The policy advisors stated that they were not content with the participation and attrition rates. Adding implementation strategies like ‘increase demand’ (attempting to influence the market for Active Plus) and ‘intervene with consumers to enhance uptake and adherence’ [29] might positively affect the participation and attrition of the target population. This could demonstrate the need for active involvement of the target population in the development of implementation strategies.

Furthermore, although formulating a plan for sustainability of implementation was one of the intended outcomes, implementation strategies to facilitate continuation were not sufficiently included in the current implementation intervention. Since we provided funding for the intervention cost as part of the evaluation, implementers may have regarded the implementation as a test without any obligation or may not have sufficiently considered financial support for continuing the intervention. Furthermore, the current implementation intervention mainly aimed to target determinants related to the intervention characteristics and the inner setting, and to a much lesser degree the implementation determinants related to the implementation process and the outer setting. Based on the current insights, recommendations can be provided for (additional) more suitable implementation strategies affecting determinants relating to the implementation process itself and the outer setting. For example, implementation strategies like conducting local consensus discussions, informing local opinion leaders, promoting network weaving, and obtaining formal commitments might positively influence engagement [29]. Furthermore, as the use of local opinion leaders has been identified as one of the most effective implementation strategies [39], implementation of Active Plus could benefit from including this strategy within its implementation intervention as well.
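The idea of matching candidate strategies to identified barriers can be illustrated with a small lookup. This is a hypothetical sketch only: the groupings below are taken from the strategies named in this Discussion, the barrier labels and function name are assumptions, and it does not reproduce the content or the ranking logic of the CFIR-ERIC matching tool.

```python
# Hypothetical barrier-to-strategy lookup, filled only with strategies named in the text.
# It is NOT the data of the CFIR-ERIC matching tool.
candidate_strategies = {
    "engaging": [
        "conduct local consensus discussions",
        "inform local opinion leaders",
        "promote network weaving",
        "obtain formal commitments",
    ],
    "participation and attrition": [
        "increase demand",
        "intervene with consumers to enhance uptake and adherence",
    ],
}


def strategies_for(barriers):
    """Collect candidate strategies for the given barriers, in order, without duplicates."""
    selected = []
    for barrier in barriers:
        for strategy in candidate_strategies.get(barrier, []):
            if strategy not in selected:
                selected.append(strategy)
    return selected


for strategy in strategies_for(["engaging", "participation and attrition"]):
    print(strategy)
```

A barrier without an entry simply yields no suggestions, which mirrors the situation in which determinants (e.g. those of the implementation process) have not yet been assessed.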

To our knowledge, this is the first study providing insight over a longer implementation period into the use and effects of a systematically developed implementation intervention aiming to target the implementation of an evidence-based eHealth PA intervention for older adults. One of the strengths of the current study is that it provides insight into the use of the implementation strategies, as well as into the changes in determinant scores regarding implementation over time and consequently the results of the implementation intervention (i.e. the implementation output). Furthermore, the mixed-methods design, i.e. the use of both quantitative and qualitative methods of evaluating the implementation, can be considered a strength of our study. While the quantitative data have provided us with statistical information on how the implementation of an intervention varies, the qualitative data have provided insights on why it varies, and integrating these data generates a better insight into factors that determine intervention implementation [40].

Although this study provides relevant insight, some limitations should be noted. First, no verbatim transcripts were made of the qualitative data. Hence, the qualitative data should be interpreted with care. However, it has been argued that the benefits of combining quantitative data with qualitative data outweigh the strict use of guidelines: instead, a pragmatic approach to interpreting qualitative data has been advocated, especially in a study like ours which leans on existing theoretical frameworks [41]. The interviews provide useful insights into the complexity of the choices made by the implementers. As the interviews were analysed by a researcher other than the one who conducted them, a sufficient level of objectivity was safeguarded. Furthermore, the current study aimed to evaluate the feasibility within municipalities in a region of the Netherlands counting 33 municipalities in total. A sample of 8 municipalities (i.e. an adoption rate of 24%) was therefore considered reasonable given this limited pool. However, guidelines for designing and evaluating feasibility studies recommend sample sizes of about 30 to establish feasibility [42, 43]. The quantitative results of the current study should therefore be interpreted with caution.

Another limitation of the current study is that causal pathways cannot be confirmed due to the lack of a control group implementing Active Plus without receiving the current implementation intervention. Nonetheless, the lessons learned and the systematic description of the implementation of a PA intervention by intermediaries are relevant to all organizations developing and implementing public health interventions in real life.

Furthermore, a practical limitation of the current project is that the intervention owners (i.e., the authors) were heavily engaged in driving the implementation process. To increase the sense of shared ownership, a more bottom-up approach may be recommended in which the needs and actions of policy advisors and other stakeholders drive the implementation process.

Conclusion

Several explanations can be found for the lack of effect of our implementation intervention, all of which can be traced back to choices made during its systematic development. Although both the Intervention Mapping protocol and the Implementation Mapping protocol are very useful, the guidance on ‘how to’ gain the insights needed to inform the different steps of these protocols is limited. Our feasibility study therefore resulted in several important lessons that could help other implementation intervention developers. In line with the Implementation Mapping protocol, we would like to highlight the importance of including the perceptions of different implementation actors when identifying implementation determinants, stating implementation outcomes and performance objectives specifically for each adopter and implementer, and selecting implementation strategies that match the perceptions of the different implementation actors (there is no one-size-fits-all implementation plan).

Most important lessons learned from our feasibility study:

- Use a mixed-method approach when identifying the implementation determinants and when evaluating the feasibility of your implementation plan, as in-depth insights are very meaningful.
- Include a pre-test post-test design within your feasibility study to monitor whether the implementation intervention positively affects the implementation determinants.
- Ensure a broad perspective on implementation determinants (e.g. by using a framework like CFIR) as all domains seem to have relevant aspects when implementing an intervention.
- Use an iterative approach as relevant implementation actors, determinants and consequently the needed implementation strategies change over time, even within a year.

Availability of data and materials

The quantitative data described in the current study can be requested from the corresponding author. Qualitative data cannot be made available, as persons are identifiable from the combination of answers in these data, and sharing them would therefore not be in line with the GDPR. The questionnaire used to assess determinants of implementation and the guideline for the semi-structured interview assessing the implementation process are made available in the appendices of the current manuscript.

References

World Health Organization. Ageing and Health. 2022. https://www.who.int/news-room/fact-sheets/detail/ageing-and-health.

Das P, Horton R. Rethinking our approach to physical activity. Lancet. 2012;380(9838):189–90.

Department of Health. At least five a week: Evidence on the impact of physical activity and its relationship to health. London: Department of Health; 2004.

Nelson ME, Rejeski WJ, Blair SN, Duncan PW, Judge JO, King AC, et al. Physical activity and public health in older adults: recommendation from the American College of Sports Medicine and the American Heart Association. Med Sci Sports Exerc. 2007;39(8):1435–45.

Haskell WL, Lee IM, Pate RR, Powell KE, Blair SN, Franklin BA, et al. Physical Activity and Public Health: Updated Recommendation for Adults From the American College of Sports Medicine and the American Heart Association. Circulation. 2007;116(9):1081–93.

Thomas E, Battaglia G, Patti A, Brusa J, Leonardi V, Palma A, et al. Physical activity programs for balance and fall prevention in elderly: A systematic review. Medicine (Baltimore). 2019;98(27):e16218.

de Labra C, Guimaraes-Pinheiro C, Maseda A, Lorenzo T, Millán-Calenti JC. Effects of physical exercise interventions in frail older adults: a systematic review of randomized controlled trials. BMC Geriatr. 2015;15:154.

Wilson P, Petticrew M, Calnan M, Nazareth I. Disseminating research findings: what should researchers do? A systematic scoping review of conceptual frameworks. Implement Sci. 2010;5(1):91.

Wandersman A, Duffy J, Flaspohler P, Noonan R, Lubell K, Stillman L, et al. Bridging the gap between prevention research and practice: the interactive system framework for dissemination and implementation. Am J Community Psychol. 2008;41:171–81.

Rabin BA, Brownson RC, Kerner JF, Glasgow RE. Methodological challenges in disseminating evidence-based interventions to promote physical activity. Am J Prev Med. 2006;31(4s):24–34.

Granja C, Janssen W, Johansen MA. Factors determining the success and failure of eHealth interventions: systematic review of literature. JMIR. 2018;20(5):e10235.

Reis R, Salvo D, Ogilvie D, Lambert E, Goenka S, Brownson R. Scaling up physical activity interventions worldwide: stepping up to larger and smarter approaches to get people moving. Lancet. 2016;388(10051):1337–48.

Greenhalgh T, Wherton J, Papoutsi C, Lynch J, Hughes G, A’Court C, et al. Beyond adoption: a new framework for theorizing and evaluating nonadoption, abandonment, and challenges to the scale-up, spread, and sustainability of health and care technologies. JMIR. 2017;11:e367.

Bessems K, Van Nassau F, Kremers SPJ. Implementatie van interventies. In: Brug J, Van Assema P, Kremers SPJ, Lechner L, editors. Gezondheidsvoorlichting en gedragsverandering: een planmatige aanpak. Assen: Koninklijke van gorcum; 2022.

Peels DA, Mudde AN, Bolman C, Golsteijn RHJ, De Vries H, Lechner L. Correlates of the intention to implement a tailored physical activity intervention: perceptions of intermediaries. Int J Environ Res Public Health. 2014;11:1185–903.

Van Stralen MM, Kok G, De Vries H, Mudde AN, Bolman C, Lechner L. The Active Plus protocol: systematic development of two theory and evidence-based tailored physical activity interventions for the over-fifties. BMC Public Health. 2008;8:399.

Peels DA, Van Stralen MM, Bolman C, Golsteijn RHJ, De Vries H, Mudde AN, et al. The Development of a Web-Based Computer Tailored Advice to Promote Physical Activity Among People Older Than 50 Years. J Med Internet Res. 2012;14(2):e39.

Boekhout JM, Peels DA, Berendsen BAJ, Bolman C, Lechner L. An eHealth Intervention to Promote Physical Activity and Social Network of Single, Chronically Impaired Older Adults: Adaptation of an Existing Intervention Using Intervention Mapping. J Med Internet Res. 2017;6(11):e230.

Van Stralen MM, De Vries H, Mudde AN, Bolman C, Lechner L. The working mechanisms for an environmentally tailored physical activity intervention for older adults: a randomized controlled trial. Int J Behav Nutr Phys Act. 2009;6:83.

Peels DA, Bolman C, Golsteijn RHJ, De Vries H, Mudde AN, Van Stralen MM, et al. Long-term efficacy of a tailored physical activity intervention among older adults. Int J Behav Nutr Phys Act. 2013;10:104.

Peels DA, Van Stralen MM, Bolman C, Golsteijn RHJ, De Vries H, Mudde AN, et al. The Differentiated Effectiveness of a Printed versus a Web-based Tailored Intervention to Promote Physical Activity among the Over-fifties. Health Educ Res. 2014;29(5):870–82.

Boekhout JM, Berendsen BAJ, Peels DA, Bolman C, Lechner L. Evaluation of a Computer-Tailored Healthy Ageing Intervention to Promote Physical Activity among Single Older Adults with a Chronic Disease. Int J Environ Res Public Health. 2018;15(2):e346.

Peels DA, Hoogenveen RR, Feenstra TL, Golsteijn RHJ, Bolman C, Mudde AN, et al. Long-term health outcomes and cost-effectiveness of a computer-tailored physical activity intervention among people aged over fifty: modeling the results of a randomized controlled trial. BMC Public Health. 2014;14:1099.

Fernandez ME, Ten Hoorn GA, Van Lieshout S, Rodriguez SA, Beidas RS, Parcel GS, et al. Implementation Mapping: Using Intervention Mapping to Develop Implementation Strategies. Front Public Health. 2019;7:158.

Bartholomew LK, Markham CM, Ruiter RAC, Fernandez ME, Kok G, Parcel GS. Planning health promotion programs: an intervention mapping approach. 4th ed. San Francisco: Jossey-Bass; 2016.

Rogers EM. Diffusion of innovations. New York: The Free Press; 2003.

Paulussen T, Bessems K. Disseminatie en implementatie van interventies. In: Brug J, Van Assema P, Lechner L, editors. Gezondheidsvoorlichting en gedragsverandering: een planmatige aanpak. Assen: Van Gorcum; 2017.

Damschroder LJ, Aron DC, Keith RE, et al. Fostering implementation of health services research findings into practice a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50.

Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10:21.

Peels DA, Bolman C, Golsteijn RHJ, van Stralen MM, De Vries H, Mudde AN, et al., editors. Differences in cost-effectiveness in tailored interventions to promote physical activity among the over fifties. In Bolman, C (Chair), Integrating economic evaluation in health promotion and public health research: challenges and future directions. European Health Psychology Society; 2012; Charles University in Prague.

CBS. Leefstijlmonitor: Rijksinstituut voor Volksgezondheid; 2014. Available from: volksgezondheidenzorg.info.

Berendsen BAJ, Peels DA, Bolman C, Lechner L. Beweeggedrag van ouderen stimuleren in uw gemeente: handvaten en implementatiestrategieen. Heerlen: Open University; 2017.

Berendsen BAJ, Kremers SPJ, Savelberg HHCM, Schaper NC, Hendriks MRC. The implementation and sustainability of a combined lifestyle intervention in primary care: mixed method process evaluation. BMC Fam Pract. 2015;16:37.

Waltz TJ, Powell BJ, Fernández ME, Abadie B, Damschroder LJ. Choosing implementation strategies to address contextual barriers: diversity in recommendations and future directions. Implementation Sci. 2019;14:42.

Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8:139.

Bessems KMHH. The dissemination of the healthy diet programme Krachtvoer for Dutch prevocational schools. Maastricht: Datawyse / Universitaire Pers Maastricht; 2011. https://www.academischewerkplaatslimburg.nl/wp-content/uploads/Proefschrift_Krachtvoer_Kathelijne_Bessems.pdf

Paulussen T, Wiefferink K, Mesters I. Invoering van effectief gebleken interventies. In: Brug J, Van Assema P, Lechner L, editors. Gezondheidsvoorlichting en gedragsverandering. Assen: Van Gorcum; 2008.

Durlak JA, DuPre EP. Implementation matters: a review of research on the influence of implementation on program outcomes and the factors affecting implementation. Am J Community Psychol. 2008;41:327–50.

Powell BJ, Fernandez ME, Williams NJ, Aarons GA, Beidas RS, Lewis CC, et al. Enhancing the Impact of Implementation Strategies in Healthcare: A Research Agenda. Front Public Health. 2019;7:3.

Bryman A. Integrating quantitative and qualitative research: how is it done? Qual Res. 2006;6(1):97–113.

Ramanadhan S, Revette AC, Lee RM, Aveling EL. Pragmatic approaches to analyzing qualitative data for implementation science: an introduction. Implementation Science Communications. 2021;2(1):70.

Teresi JA, Yu X, Stewart AL, Hays RD. Guidelines for Designing and Evaluating Feasibility Pilot Studies. Med Care. 2022;60(1):95–103.

Hooper R. Justify sample size for a feasibility study [cited 14–03–2024]. Available from: https://www.rds-london.nihr.ac.uk/resources/justify-sample-size-for-a-feasibility-study/.

Acknowledgements

We thank Audrey Beaulen for her assistance in gathering the data for the current study, and thank all municipalities for their involvement in the current study.

Funding

The current project was funded by Fonds Nutsohra (reference number 102.509). The writing process was funded by ZonMw, grant number 05460402110001. The APC was funded by the Open Access Fund of the Open University of The Netherlands.

Author information

Contributions

Authors DP, JB, LL, CB and BB were involved in the development and evaluation of the Active Plus intervention. DP and BB were involved in data collection and analyses of the quantitative implementation data. JB was responsible for analyzing the qualitative data and attributing these insights to the quantitative results. FvN was actively involved in writing the current manuscript after the data was already gathered. All authors were involved in the design of the current study, have approved the submitted version, and agreed to be personally accountable for their own contribution and ensure that questions related to the accuracy or integrity of any part of the work can be appropriately investigated.

Ethics declarations

Ethics approval and consent to participate

This study was approved by the Research Ethics Committee of the Open University of the Netherlands (U202200520). All participants gave their informed consent before participation.

Consent for publication

Not applicable.

Competing interests

Although the Open University is the owner of the Active Plus intervention, and several authors were involved in the development and evaluation of the Active Plus Intervention, the Open University is a non-profit organisation and there are thus no financial competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Peels, D.A., Boekhout, J.M., van Nassau, F. et al. Promoting the implementation of a computer-tailored physical activity intervention: development and feasibility testing of an implementation intervention. Implement Sci Commun 5, 90 (2024). https://doi.org/10.1186/s43058-024-00622-8

DOI: https://doi.org/10.1186/s43058-024-00622-8