Abstract

Privacy preservation is a key issue that has to be addressed in biometric recognition systems. Template protection schemes are a suitable way to tackle this task. Various template protection approaches originally proposed for other biometric modalities have been adopted to the domain of vascular pattern recognition. Cancellable biometrics are one class of these schemes. In this chapter, several cancellable biometrics methods like block re-mapping and block warping are applied in the feature domain. The results are compared to previous results obtained by the use of the same methods in the image domain regarding recognition performance, unlinkability and the level of privacy protection. The experiments are conducted using several well-established finger vein recognition systems on two publicly available datasets. Furthermore, an analysis regarding subject- versus system-dependent keys in terms of security and recognition performance is done.

You have full access to this open access chapter, Download chapter PDF

Similar content being viewed by others

Keywords

- Finger vein recognition

- Template protection

- Cancellable biometrics

- Biometric performance evaluation

- Block re-mapping

- Warping

1 Introduction

Various methods exist to protect the subject-specific information contained in biometric samples and/or templates. According to several studies, e.g. Maltoni et al. [16], and ISO/IEC Standard 24745 [7] each method should exhibit four properties: Security, Diversity, Revocability and Performance. These shall ensure that the capture subject’s privacy is protected and at the same time a stable and sufficient recognition performance during the authentication process is achieved. The first aspect deals with the computational hardness to derive the original biometric template from the protected one (security-irreversability). Diversity is related to the privacy enhancement aspect and should ensure that the secured templates cannot be matched across different databases (unlinkability). The third aspect, revocability, should ensure that a compromised template can be revoked without exposing the biometric information, i.e. the original biometric trait/template remains unaltered and is not compromised. After removing the compromised data, a new template representing the same biometric instance can be generated. Finally, applying a certain protection scheme should not lead to a significant recognition performance degradation of the whole recognition system (performance).

One possibility to secure biometric information, cancellable biometrics, are introduced and evaluated on face and fingerprint data by Ratha et al. in [22]. The applied template protection schemes, block re-mapping and warping, have also been applied in the image domain and evaluated on iris [5, 14] and finger vein [20] datasets, respectively. Opposed to the latter study we want to investigate these schemes not in the image domain, but in the feature domain as several advantages and disadvantages exist in both spaces. These positive and negative aspects will be described in Sect. 16.2.

A detailed discussion on finger vein related template protection schemes, that can be found in literature, is given in Chap. 1 [26]. Thus, the interested reader is referred to this part of the handbook.

The rest of this chapter is organised as follows: The considered experimental questions are discussed in Sects. 16.2, 16.3 and 16.4 respectively. The employed non-invertible transform techniques are described in Sect. 16.5. Section 16.6 introduces the datasets utilised during the experimental evaluation, the finger vein recognition tool-chain as well as the evaluation protocol. The performance and unlinkability evaluation results are given and discussed in Sect. 16.7. Section 16.8 concludes this chapter and gives an outlook on future work.

2 Application in the Feature or Image Domain

If a template protection scheme is applied in the image/signal domain immediately after the image acquisition, the main advantage is that the biometric features extracted from the transformed sample do not correspond to those features computed from the original image/signal. So, the “real” template is never computed and does occur at no stage in the system and further, the sample is never processed in the system except at the sensor device. This provides the highest level of privacy protection for the capture subject. The main disadvantage of the application in the image/signal domain is that the feature extraction based on the protected image/signal might lead to incorrect features and thus, to inferior recognition performance. Especially in finger vein recognition, most of the well-established feature extraction schemes rely on tracking the vein lines, e.g. based on curvature information. By applying template protection methods like block re-mapping in the image domain right after the sample is captured, connected vein structures will become disconnected. These veins are then no longer detected as continuous vein segments which potentially causes problems during the feature extraction and might lead to an incomplete or faulty feature representation of the captured image. Consequently, the recognition performance of the whole biometric system can be negatively influenced by the application of the template protection scheme.

On the contrary, if template protection is conducted in the feature domain, the feature extraction is finished prior to the application of the template protection approach. Thus, the extracted feature vector or template is not influenced by the template protection scheme at this stage and represents the biometric information of the capture subject in an optimal way.

3 Key Selection: Subject- Versus System-Specific

There are two different types of key selection, subject- and system-specific keys. In the subject-specific key approach, the template of each subject is generated by a key which is specific for each subject while for a system-specific key, the templates of all subjects are generated by the same key.

Subject dependent keys have advantages in terms of preserving the capture subjects’ privacy compared to system-dependent keys. Assigning an individual key to each capture subject ensures that if an adversary gets to know the key of one of the capture subjects, he can not compromise the entire database as each key is individual. A capture subject-specific key also ensures that insider attacks performed by legitimate registered subjects can not be performed straight forward. Such an attack involves a registered capture subject, who is been granted access to the system and has access to the template database as well. This adversary capture subject wants to be legitimated as one of the other capture subjects of the same biometric system. So he/she could just try to copy one of his/her templates over the template belonging to another capture subject and claim that this is his/her identity, thus trying to get authenticated as this other, genuine capture subject. If capture subject-specific keys are used, this is not easily possible as each of the templates stored in the database has been generated using an individual key. However, it remains questionable if such an insider attack is a likely one. In fact, it would probably be easier for an advisory who has access to the entire template database to simply create and store a new genuine capture subject that exhibits his/her biometric information together with a key he sets in order to get the legitimation he wants to acquire. Another advantage of capture subject-specific keys is that the system’s recognition performance in enhanced by introducing more inter-subject variabilities and thus impacting the performed impostor comparisons. The additional variability introduced by the subject-specific key in combination with the differences between different biometric capture subjects leads to a better separation of genuine and impostor pairs which enhances the overall system’s performance.

One drawback of using capture subject-specific keys is that the system design gets more complex, depending on how the capture subject-specific keys are generated and stored. In contrast to a system-specific key, which is valid for all capture subjects and throughout all components of the biometric recognition system, the individual capture subject-specific keys have to be generated and/or stored somehow. One possibility is to generate the key based on the capture subject’s biometric trait every time the capture subject wants to get authenticated. This methodology refers to the basic idea of Biometric Cryptosystems (BCS), which have originally been developed for either securing a cryptographic key applying biometric features or generating a cryptographic key based on the biometric features [9]. Thus, the objective to employ a BCS is different but the underlying concept is similar to the one described earlier. The second option can be used to generate the capture subject specific key once and then store this key which is later retrieved during the authentication process. This key can either be stored in a separate key database or with the capture subject itself. Storing the keys in a key database of course poses the risk of the key database getting attacked and eventually the keys getting disclosed to an adversary. Storing the keys with the capture subject is the better option in terms of key security, however it lowers the convenience of the whole system from the capture subjects’ perspective as they have to be aware of their key, either by remembering the key or by using smart cards or similar key storage devices.

4 Unlinkability

The ISO/IEC Standard 24745 [7] defines that irreversibility is not sufficient for protected templates, as they also need to be unlinkable. Unlinkability guarantees that stored and protected biometric information can not be linked across various different applications or databases. The standard defines templates to be fully linkable if a method exists which is able to decide if two templates protected using a different key were extracted from the same biometric sample with a certainty of 100%. The degree of linkability depends on the certainty of the method which decides if two protected templates originate from the same capture subject. However, the standard only defines what unlinkability means but gives no generic way of quantifying it. Gomez-Barrero et al. [4] present a universal framework to evaluate the unlinkability of a biometric template protection system based on the comparison scores. They proposed the so-called \(D_{sys}\) measurement as a global measure to evaluate a given biometric recognition and template protection system. Further details are given in Sect. 16.6.3 where the experimental protocol is introduced.

The application of the proposed framework [4] allows a comparison to previous work done on the aspect of key-sensitivity using the same protection schemes by Piciucco et al. [20]. Protected templates generated from the same biometric data by using different keys should not be comparable. Thus, the authors of [20] used the so-called Renewable Template Matching Rate (RTMR) to prove a low matching rate between templates generated using different keys on both protection schemes. This can also be interpreted as a high amount of unlinkability as the RTMR can be interpreted as a restricted version of the \(D_{sys}\) measure.

5 Applied Cancellable Biometrics Schemes

The two investigated non-invertible transforms, block re-mapping and warping, are both based on a regular grid. Some variants of them have been investigated and discussed in [21, 22]. The input (regardless if a binary matrix or image) is subdivided into non-overlapping blocks using a predefined block size. The constructed blocks are processed individually, generating an entire protected template or image. As we aim to utilise the same comparison module for the unprotected and protected templates, there is one preliminary condition that must be fulfilled for the selected schemes: The protected template must exhibit a structure similar to the original input template. In particular, we interpret the feature vector (template) as binary image, representing vein patterns as 1s and background information as 0s. Based on this representation, each x-/y-coordinate position (each pixel) in the input image can be either described as background pixel or as vein pattern pixel. Thus, our approach can be used in the signal domain as well as in the feature domain and the template protection performance results obtained in image domain can be directly compared to results obtained in the feature domain. Note that in the signal domain the input as well as the protected output images are no binary but greyscale ones, which does not change the way the underlying cancellable biometrics schemes are applied (as they only change positions of pixels and do not relate single pixel values to each other). In the following, the basic block re-mapping scheme as well as the warping approach are described.

5.1 Block Re-mapping

In block re-mapping [22], the number of predefined blocks is separated into two classes, where the total number of blocks remains unaltered. The blocks belonging to the first class are randomly placed at different positions to the ones they have been located in the original input. This random allocation is done by assigning random numbers generated by a number generator according to a predefined key. This key must be stored, such that a new image acquired during authentication can be protected using the same number generator specification. The blocks belonging to the second class are dismissed and do not appear in the output. This aspect ensures the irreversibility property of the block re-mapping scheme. The percentage of blocks belonging to each of the two classes is set by a predefined value. The more blocks in the second class, the less biometric information is present in the output. Usually, the percentage of blocks in the first class is between 1/4 and 3/4 of the total blocks.

Figure 16.1 shows the block re-mapping scheme which has been implemented in a slightly adopted version compared to the original one done by Piciucco et al. [20]. The main difference is the randomised block selection: We introduce an additional parameter, which controls the number of blocks that remain in the transformed template. To enable comparable results, we fixed the number of blocks that remain in the transformed templates to be at 75% of the original blocks. The required key information consists of the two set-up keys for the random generator and the block-size information for the grid construction. By comparing Fig. 16.1 (a) and (b) the following can be observed: While the blocks 4, 6 and 8 are present in (a) they do not occur in the protected, re-mapped image. All the other blocks are used to construct the re-mapped version (b) that has the same size as the original unprotected image or feature representation (a). It also becomes obvious that the blocks 3 and 5 are inserted multiple times into (b) in order to compensate for the absence of the non-considered blocks 6 and 8.

Due to the random selection, it is possible that some blocks are used more than once and others are never used. Otherwise, the re-mapping would resemble a permutation of all blocks, which could be reverted by applying a brute-force-attack testing all possible permutations or some more advanced attacks based on square jigsaw puzzle solver algorithms, e.g. [2, 19, 23].

The bigger the block size, the more biometric information is contained per block and thus, the higher the recognition performance is assumed to be after the application of block re-mapping. Of course, this argument also might depend on the feature extraction and comparison method as well as if it is done in signal or feature domain. Block re-mapping creates discontinuities at the block boundaries which influences the recognition performance if applied in the image domain as several of the feature extraction schemes try to follow continuous vein lines, which are not there any longer. This gets worse with decreasing block sizes. If block re-mapping is applied in the feature domain, this is not an issue as the feature extraction was done prior to applying the block re-mapping. However, due to the shifting process involved during comparison, the re-mapping of blocks can cause problems as a normalised region-of-interest is considered, especially for blocks that are placed at the boundaries of the protected templates. This might eventually lead to a degradation in the biometric systems performance because the information contained in those blocks is then “shifted out” of the image and the vein lines present in the blocks do not contribute to the comparison score anymore. In addition, blocks that share a common vein structure in the original template might be separated after performing the block re-mapping, posing a more severe problem due to the shifting applied during the comparison step. The vein structures close to the block borders are then shifted to completely different positions and cannot be compared any longer, leading to a decrease in the genuine comparison scores. Furthermore, it can also happen that the block re-mapping introduces new vein structures by combining two blocks that originally do not belong to each other. Both of the aforementioned possibilities have a potentially negative influence on the recognition performance. These problems due to the shifting applied during the comparison step are visualised in Fig. 16.2. It clearly can be seen that most of the vein structures visible in the original—left template, are not present in the protected—right template, but other structures have been newly introduced.

On the other hand, the larger the block size, the more of the original biometric information is contained per single block, lowering the level of privacy protection. Hence, we assume that a suitable trade-off between loss of recognition accuracy and level of privacy protection has to be found. Furthermore, the block size also corresponds to the irreversibility property of the transformation. The bigger the block size, the more information is contained per single block and the lower is the total number of blocks. The lower the number of blocks and the higher the information per block, the more effective are potential attacks on this protection scheme as discussed in the literature, e.g. [2, 19, 23].

5.2 Block Warping

Another non-invertible transformation in the context of cancellable biometrics is the so called “warping” (originally named “mesh warping” [27]). Warping can be applied in the image as well as in the template domain. Using this transformation, a function is applied to each pixel in the image which maps the pixel of the input at a given position to a certain position in the output (can also be the same position as in the input again). Thus, this mapping defines a new image or template containing the same information as the original input but in a distorted representation. The warping approach utilised in this chapter is a combination of using a regular grid, as in the block re-mapping scheme, and a distortion function based on spline interpolation. The regular grid is deformed per each block and adjusted to the warped output grid. The number of blocks in the output is the same as in the input, but the content of each individual block is distorted in the warped output.

This distortion is introduced by randomly altering the edge positions of the regular grid, leading to a non-predictable deformation of the regular grid. Spline based interpolation of the input information/pixels is applied to adopt the area of each block with respect to the smaller or larger block area obtained after the deformation application (warping might either stretch or shrink the area of the block as the edge positions are changed). This distortion is key dependent and the key defines the seed value for the random generator responsible for the replacement of the grid edges. This key needs to be protected by some cryptographic encryption methods and stored in a safe place. However, if the key gets disclosed, it is not possible to reconstruct all of the original biometric data in polynomial time due to the applied spline based interpolation. Figure 16.3 shows the basic warping scheme, while in Fig. 16.4 an example of a original—left template and its protected—right template is given.

The application of interpolation does increase the template protection degree as the relation between original vein structures is distorted. However, these transformations might destroy dependencies between the vein lines which are necessary in the feature extraction step in order to enable the same recognition performance as on the original, unprotected data. On the one hand, the application of warping transformations increases the capture subject’s privacy but on the other hand the recognition performance is likely to decrease. For more information about other warping methods, the interested reader is referred to [3], where a review of several different possible solutions including the use of parametric and non-parametric functions can be found.

6 Experimental Set-Up

In the following, the experimental set-up, including the datasets, the finger vein recognition tool-chain as well as the experimental protocol are explained.

6.1 Finger Vein Datasets

The experiments are conducted on two datasets: The first one is the University of Twente Finger Vascular Pattern Database (UTFVP) [25]. It consists of 1440 images, which were acquired from 60 subjects in a single session. Six fingers were captured, including the index, ring and middle finger of both hands with 4 images per finger. The finger vein images have a resolution of \(672\,\times \,380\) pixels and a density of 126 pixels/cm, resulting in a width of 4–20 pixels for the visible blood vessels.

The second dataset we utilise here is the PLUSVein-FV3 Dorsal–Palmar finger vein dataset and which has been introduced in [10] and is partly discussed in Chap. 3 [12]. To enable a meaningful comparison with the UTFVP results, we only use the palmar subset. Region-Of-Interest (ROI) images containing only the centre part of the finger where most of the vein pattern information is located have been extracted from the captured images as well. Some example images of the PLUSVein-FV3 subsets are given in Fig. 16.5.

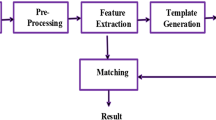

6.2 Finger Vein Recognition Tool-Chain

In this subsection an overview of the most important parts of a typical finger vein recognition tool-chain is given. There are several studies about finger vein recognition systems, e.g. [8], that present and discuss different designs, but they all include a few common parts or modules. These main modules consist of: the finger vein scanner (image acquisition), the preprocessing module (preprocessing), the feature extraction module (feature extractor), the template comparison module (matcher) and the decision module (final decision). The system may contain an optional template protection module, either after the preprocessing module (image domain) or after the feature extraction module (feature domain). As the main focus of this chapter is on template protection applied in the feature domain, the system used during the experiments contains the template protection as part of the feature extractor. For feature extraction we selected six different methods: Gabor Filter (GF) [13], Isotropic Undecimated Wavelet Transform (IUWT) [24], Maximum Curvature (MC) [18], Principal Curvature (PC) [1], Repeated Line Tracking (RLT) [17] and Wide Line Detector (WLD) [6].

To calculate the final comparison scores an image correlation based comparison scheme as introduced by Miura et al. in [17] is applied to the baseline (unprotected) templates (features) as well as to the templates protected by block re-mapping and block warping. As the comparison scheme is correlation based, including a necessary pixel wise shifting, we selected a shift range of 80 pixels in x- and 30 pixels in y-direction, respectively. Further details on the deployed recognition tool-chain can be found in Chap. 4 [11] of this handbook.

6.3 Experimental Protocol and Types of Experiments

The necessary comparison scores are calculated using the correlation based comparison scheme described before and the comparison to be performed are based on the Fingerprint Verification Contests’ (FVC) protocol [15]. To obtain the genuine scores, all possible comparisons are performed, i.e. the number of genuine scores is \(60 * 6 * \frac{4*3}{2} = 2160\) (UTFVP) and \(60 * 6 * \frac{5*4}{2} = 3600\) (PLUSVein-FV3), respectively. For the impostor scores, only a subset of all possible comparisons is performed. The first image of each finger is compared against the first image of all other fingers. This results in \(\frac{60*6*(60*6-1)}{2} = 64{,}620\) impostor comparisons for each dataset (as both of them contain 60 subjects and 6 fingers per capture subject). As the employed comparison scheme is a symmetric measure, no symmetric comparisons (e.g. 1–2 and 2–1) are performed. The FVC protocol reduces the number of impostor comparisons in order to keep the computation time low for the whole performance evaluation while ensuring that every finger is compared against each other finger at least once. To quantify the recognition performance, several well-known measures are utilised: The equal error rate (\(\mathrm {EER}\), point where the FMR and the FNMR are equal), \(FMR_{100}\) (the lowest false Non-Match Rate (FNMR) for false match rate (FMR) \(\le 1\%\)), \(FMR_{1000}\) (the lowest FNMR for FMR \(\le 0.1\%\)) as well as the ZeroFMR (the FNMR for FMR \(= 0\%\)).

We conduct four sets of experiments:

-

1.

In the first set of experiments the unprotected templates are considered. The first experiments provide a baseline to compare the recognition performance of the protected templates to

-

2.

The second set of experiments deals with the protected templates, generated by applying one of the aforementioned cancellable biometrics schemes to the same templates that have been used during the first set of experiments. For score calculation, these protected templates are compared against each other. For both employed cancellable schemes, 10 runs using different system keys are performed to assess the recognition performance variability and key dependency of the recognition performance.

-

3.

The third set of experiments compares capture subject specific and system-specific keys. Therefore, a different key (note: the key is controlling the random selection of blocks or the repositioning of the grid) is assigned to each finger, thus resulting in 360 virtual subjects (not only the 60 physical ones). Again, 10 runs with different keys per run are performed and averaged afterwards. These capture subject-specific key results are then compared to the system-specific key ones as obtained in the second set of experiments.

-

4.

The last set of experiments is committed to the unlinkability analysis. The approach by Gomez et al. [4], introduced in Sect. 16.4 describes the extent of linkability in the given data, with a range of \(D_{sys}\) from [0, 1]. The higher the value, the more linkable are the involved templates. Thus, the resulting measure represents a percentage of linkability that is present. Of course, full unlinkability is given if the score is 0. \(D_{sys}\) is based on the local D(s) value, which is calculated based on the comparison scores of several mated (genuine) as well as non-mated (impostor) comparison between templates protected by the same template protection system but using different keys, thus originating from different applications or systems. We utilise this measure to assess the unlinkability of the presented cancellable biometric schemes for finger vein recognition (block re-mapping and warping).

To comply with the principles of reproducible research we provide all experimental details, results as well as the used vein recognition SDK, settings files and scripts to run the experiments for download on our website: http://www.wavelab.at/sources/Kirchgasser19b/. The used datasets are publicly available as well, hence it is possible to reproduce our results for anyone who is interested to do so.

6.4 Selecting the Processing Level to Insert Template Protection

If template protection is done in the signal domain cancellable biometrics schemes are applied directly after the image acquisition and before the feature extraction. Otherwise, template protection is applied to the extracted binary vein features in order to protect the contained private biometric information right after the feature extraction is finished (feature domain).

The main purpose of this chapter and the experiments performed here is to provide a recognition performance comparison to the previous results obtained by Piciucco et al. [20]. The authors used the same cancellable methods on the UTFVP finger vein images, but as opposed to this chapter, not in the feature domain, but in the image domain. To ensure that our results are comparable with the previous ones by Piciucco et al. [20], we use the same block sizes during our experiments and select the same maximum offset for the block warping approach. Thus, we select block sizes of \(16\,\times \,16\), \(32\,\times \,32\), \(48\,\times \,48\), and \(64\,\times \,64\) for block re-mapping and block warping. For block warping, maximum offset values of 6, 12, 18, and 24 pixel are considered. In the following result tables block re-mapping is abbreviated using remp_16 (block size: \(16\,\times \,16\)) till remp_64 (block size: \(64\,\times \,64\)), while all warping experiments correspond to warp_16_6 (block size: \(16\,\times \,16\), offset: 6) till warp_64_24 (block size: \(64\,\times \,64\), offset: 24).

In contrast to the work of Piciucco et al. [20], we do not perform an analysis of the renewability and the key-sensitivity of the employed cancellable biometrics schemes. The key-sensitivity and renewability are expected to be similar for the schemes applied in the feature domain and in the image domain. Instead, we consider different issues like the comparison of capture subject vs. system-depended keys, and a thorough unlinkability analysis.

7 Experimental Results

This section presents and discusses all relevant results concerning the various template protection methods’ impact on the recognition performance and unlinkability in the four sets of experiments that have been considered. As we aim to compare the experimental results to the corresponding results reported in [20], we first summarise their main results:

-

(a)

The best performance results regarding EER were found for the block re-mapping scheme using a block size of \(64\,\times \,64\).

-

(b)

The best achieved EER was 1.67% for the protected data and 1.16% for the unprotected templates of the UTFVP dataset (using GF features).

-

(c)

Block re-mapping outperformed block warping.

7.1 Baseline Experiments

Table 16.1 lists the performance results of the baseline experiments in percentage terms for the UTFVP and the PLUSVein-FV3 dataset. Overall, the performance on the UTFVP dataset is slightly superior compared to the PLUSVein-FV3 dataset for most of the evaluated recognition schemes.

On the UTFVP, the best recognition performance result with an EER of 0.09% is achieved by MC, followed by PC with an EER of 0.14%, then IUWT, WLD and RLT while GF has the worst performance with an EER of 0.64%. On both subsets of the PLUSVein-FV3 the best results are achieved by using MC as well, with an EER of 0.28% and 0.33% on the LED and laser subset, respectively. RLT performed worst compared to the other schemes on both subsets. Nevertheless, each of the evaluated recognition schemes achieves a competitive performance on all of the tested datasets. The other performance figures, i.e. \(FMR_{100}\), \(FMR_{1000}\) and ZeroFMR are in line with the EER values and support the general trend that most of the applied feature extraction methods perform reasonably well on the given data sets using the baseline, unprotected templates.

7.2 Set 2—Protected Template Experiments (System Key)

As mentioned before, there are several parameters that have an essential influence on the recognition performance results obtained by applying the different cancellable biometrics schemes.

Table 16.2—feature domain and 16.3—signal domain, respectively, present the EER by using the mean (\(\bar{x}\)) and the standard deviation (\(\sigma \)) for both datasets. These results are calculated by randomly choosing 10 different keys and running the experiments first before the presented results are obtained by calculating \(\bar{x}\) and \(\sigma \) of the performed computations.

At first we will discuss the results given by Table 16.2. Not surprisingly, the worst performance is observed for block re-mapping (remp_16, remp_32, remp_48 and remp_64) using \(16\,\times \,16\) as smallest block size while GF was applied (UTFVP). This trend is in line with the findings of Piciucco et al. [20], which have been observed in the signal domain. It has to be mentioned that the observed results are strongly depending on the particular feature extraction method. As in [20] only the GF method was used for feature extraction, a direct comparison can only be done based on the GF results using the UTFVP dataset. This direct comparison shows that our best results on GF are worse compared to the results presented in [20] as we used a different implementation of the scheme. However, the best results using UTFVP are obtained by MC using a block size of \(64\,\times \,64\) (EER 3.27). In general remp_48 and remp_64 always resulted in the best performance for all datasets and not only on UTFVP (best EER of 5.52/4.42 for Laser/LED was achieved by applying WLD and remp_48). The only exception to this trend is given by RLT on the Laser/LED dataset. In this particular case, remp_64 was performing worst, but this is a feature extraction type based observation.

In contrast to the block re-mapping based methods, the recognition performance of the warping based experiments (warp_16_6 till warp_64_24) is better as observed for block re-mapping. This is in line with results reported for warping based experiments done in other biometric applications, e.g. [22] but opposed to the result of [20]. The best result on UTFVP is obtained for using PC and warp_16_6 (EER 0.71). Nevertheless, there is not a big difference to the EER given by warp_32_12, warp_48_18 and warp_64_24. It seems that the parameter choice has not a very high influence on the reported performance. For the other two datasets using WLD is resulting in the best EER values (Laser: 2.02, LED: 1.00).

As we want to compare the recognition performance of the feature domain template protected data to the same experiments which have been considering the transformations in the signal domain we will discuss the corresponding results now. The EER values applying template protection in the signal domain using system based keys are presented in Table 16.3.

The most important aspect using block re-mapping in the signal domain instead of applying the template protection schemes in the feature domain is a quite high-performance degradation in most of the conducted experiments. As mentioned in Sect. 16.2 it is likely that the feature extraction of the vein patterns after the template protection done in the signal domain might cause problems. This overall trend is confirmed by the observed EER results presented in Table 16.3. On UTFVP data, IUWT and PC resulted in the same trend that bigger block sizes are favourable in terms of performance (best average EER, 12.84, is given by IUWT using remp_64). For all other extraction schemes the EER values for remp_16 or remp_32 are better compared to remp_64. However, the performance difference is quite small.

Using warping, the influence on extracting the finger vein based features in the signal domain as compared to conducting the extraction in the feature domain is not so high as reported for block re-mapping. Hence, the overall performance trend using warping regardless of which dataset is considered, is similar to the results given in Table 16.2 (feature domain). IUWT again performs best in terms of EER. For warp_16_6 the best performance can be reported. Surprisingly, the best average EER, 1.08, and the other performance values which are achieved applying IUWT on the template protected images are very similar for UTFVP and the LED dataset among each other.

7.3 Set 3—Subject Dependent Versus System-Dependent Key

In this subsection, the capture subject-specific key experiments and their results are described and compared to the performance values obtained by using a system-dependent key. For the capture subject specific key experiments, a different and unique key for each finger is selected, compared to only one system-specific key, which is the same for all fingers. This should lead to a better differentiation of single capture subjects as the inter-subject variability is increased. Considering the subject dependent template protection experiments the results are summarised in Tables 16.4—feature domain, and 16.5—signal domain, respectively. As expected, it becomes apparent that the overall performance of all experiments using subject dependent keys is much better compared to the system-specific key results. This can be explained as the usage of subject dependent keys provides a better separation of genuine and impostor score distributions after applying the transformation.

The best feature domain based performance (see Table 16.4) is obtained on UTFVP using WLD during remp_64 (EER 1.90) and MC during warp_16_6 (EER 0.68), on the Laser dataset using IUWT (EER 1.84 for remp_64, EER 1.81 for warp_16_6) and finally on the LED dataset using IUWT/WLD (EER 1.93/0.85) applying remp_64/warp_16_6. According to the EER values highlighted in Table 16.5 (signal domain) the overall best recognition performance is achieved by applying the template protection schemes in the signal domain using subject-specific keys. This observation is interesting because it seems that in most cases subject-specific keys have a more positive effect on the protected features’ performance if the corresponding transformation was applied in the signal domain. However, there are also some cases where the subject-specific keys’ signal domain performance is lower compared to the best results obtained in the feature domain, e.g. Laser dataset using WLD and warp_16_6. Compared to [20] the recognition performance presented in Table 16.5 using GF is outperforming the findings stated by Piciucco et al. no matter if block re-mapping or warping is considered. All other results obtained for UTFVP are better as well.

7.4 Set 4—Unlinkability Analysis

The unlinkability analysis is performed to ensure that the applied template protection schemes meet the principles established by the ISO/IEC 24745 standard [7], in particular the unlinkability requirements. If there is a high amount of linkability for a certain template protection scheme, it is easy to match two protected templates from the same finger among different applications using different keys. In that case, it is easy to track the capture subjects across different applications, which poses a threat to the capture subjects’ privacy. The unlinkability is likely to be low (linkability high) if there is too little variation between protected templates based on two different keys (i.e. the key-sensitivity is low) or the unprotected and the protected template in general. Tables 16.6, 16.7, 16.8 and 16.9 lists the global unlinkability scores, \(D_{sys}\), for all datasets using block re-mapping and warping, similar to the tables that have been used to describe the recognition performance. The \(D_{sys}\) ranges normally from 0 to 1, where 0 represents the best achievable unlinkability score. We shifted the range from [0, 1] to values in [0, 100] to improve the readability of the results.

The \(D_{sys}\) ranges reveal that there are several block re-mapping configurations leading to a low linkability score, indicating that the protected templates cannot be linked across different applications (high unlinkability). This can be observed not only for applying block re-mapping in the feature domain using system-specific keys but also for the application in all other feature spaces and key selection strategies. The lowest \(D_{sys}\) scores can be detected for the usage of remp_16. For most block sizes \(48\,\times \,48\) or \(64\,\times \,64\) the unlinkability values are higher compared to the schemes using lower block sizes. Thus, the linkability is increased.

For warping the situation is different. First, the obtained \(D_{sys}\) is mostly quite high which indicates a high linkability regardless the choice of key selection strategy or the domain. Second, warp_32_12 or warp_48_18 exhibit the lowest unlinkability scores, clearly the highest amount of linkability detected for warp_16_6. The reason for this is given by the applied warping scheme. If small block sizes are used the offset, which is responsible for the amount of introduced degradation during the transformation, is small as well. Thus, for an offset of 6 only a little amount of variation in the original image (signal domain) or extracted template (feature domain) is caused. Of course, this results in a high linkability score as the transformed biometric information is minimally protected.

In Fig. 16.6 4 examples exhibiting score distributions and corresponding \(D_{sys}\) values are shown for block re-mapping: First row—remp_16 (a) and remp_54 (b), and warping: Second row—warp_16_6 (c) and warp_64_24 (d). The blue line represents the process of \(D_{sys}\) for all threshold selections done during the computation (see [4]). The green distribution corresponds to the so called mated samples scores. These comparison scores are computed from templates extracted from samples of a single instance of the same subject using different keys [4]. The red coloured distribution describes the non-mated samples scores, which are yielded by templates generated from samples of different instances using different keys. According to [4] a fully unlinkable scenario can be observed if both coloured distributions are identical, while full linkability is given if mated and non-mated distributions can be fully separated from each other. For block re-mapping, (a) and (b) almost full unlinkability is achieved in both cases, while for the warping examples, (c) and (d) the distributions can be partly separated from each other. The worst result regarding the ISO/IEC Standard 24745 [7] property of unlinkability is exhibited by example (c) as both distributions are separated quite well, which leads to a high amount of linkability. Thus, in warp_16_6 it is possible to decide with high probability to which dataset a protected template belongs. Furthermore, from a security point of view warping is not really a suitable template protection scheme using the given parameters. As the amount of linkability decreases using bigger block sizes and more importantly larger offsets it seems to be possible to select a parameter set-up that is providing both a good recognition performance and a quit low linkability at the same time.

According to these results, it is possible to summarise the findings taking the recognition performance and unlinkability evaluation into account:

-

(a)

Only a very low amount of capture subject’s privacy protection for configurations exhibiting a low EER is obtained for the application of warping schemes.

-

(b)

A high EER is observed for the configurations that exhibit a high unlinkability (e.g. detected during the application of block re-mapping schemes in most cases).

Additionally, it must be mentioned that the template protection application in feature or signal domain shows differences regarding the unlinkability aspect. For both, block re-mapping and warping, it is better to apply template protection in the signal domain as the \(D_{sys}\) values are lower for almost all cases. If the recognition performance is taken into account as well the best obtained experimental setting is the template protection application in the signal domain using subject-specific keys.

However, the provided level of privacy protection, especially if it comes to unlinkability is clearly not enough for a practical application of warping based cancellable schemes in the feature domain and several signal domain settings using the selected parameters. Furthermore, the worse recognition performance restricts the use of block re-mapping schemes for real-world biometric systems in the most cases as well.

8 Conclusion

In this chapter, template protection schemes in finger vein recognition with a focus on cancellable schemes and their application in the feature domain were presented and evaluated. The focus was hereby on cancellable schemes that can be applied in both the signal and the feature domain in the context of finger vein recognition. Two well-known representatives of those schemes, namely, block re-mapping and block warping were evaluated in signal and feature domain on two different publicly available finger vein data sets: the UTFVP and the palmar subsets of the PLUSVein-FV3. These schemes are the same ones that have been applied in the image domain in the previous work of Piciucco et al. [20].

Compared to the previous results obtained in [20], none of the block re-mapping methods performed well in the feature and signal domain using system-specific keys. The experiments considering a capture subject-specific key instead of a system specific one lead to an improvement regarding the recognition performance, especially in the signal domain. Warping performed much better in both domains but further results on the unlinkability revealed that the privacy protection amount is very limited. Thus, an application in real-world biometric systems is restricted for the most experimental settings according to the fact that it is possible to track a subject across several instances generated with various keys.

Nevertheless, it was possible to observe the following trend that leads to an optimistic conclusion. Of course, both template protection schemes have their weaknesses, block re-mapping exhibits recognition performance problems, while warping lacks in terms of unlinkability, but according to the results it seems that the selection of a larger offset could reduce the unlinkability issue for warping in the signal domain. In particular, the larger the offset was selected the better the unlinkability performed, while the recognition performance was hardly influenced. According to this observation, we claim that warping is a suitable cancellable template protection scheme for finger vein biometrics if it is applied in the signal domain using subject-specific keys and a large offset to achieve sufficient unlinkability.

References

Choi JH, Song W, Kim T, Lee S-R, Kim HC (2009) Finger vein extraction using gradient normalization and principal curvature. Proc SPIE 7251:9

Gallagher AC (2012) Jigsaw puzzles with pieces of unknown orientation. In: 2012 IEEE Conference on computer vision and pattern recognition (CVPR). IEEE, pp 382–389

Glasbey CA, Mardia KV (1998) A review of image-warping methods. J Appl Stat 25(2):155–171

Gomez-Barrero M, Galbally J, Rathgeb C, Busch C (2018) General framework to evaluate unlinkability in biometric template protection systems. IEEE Trans Inf Forensics Secur 13(6):1406–1420

Hämmerle-Uhl J, Pschernig E, Uhl A (2009) Cancelable iris biometrics using block re-mapping and image warping. In: Samarati P, Yung M, Martinelli F, Ardagna CA (eds)Proceedings of the 12th international information security conference (ISC’09), volume 5735 of LNCS. Springer, pp 135–142

Huang B, Dai Y, Li R, Tang D, Li W (2010) Finger-vein authentication based on wide line detector and pattern normalization. In: 2010 20th International conference on pattern recognition (ICPR). IEEE, pp 1269–1272

ISO/IEC 24745—Information technology—Security techniques—biometric information protection. Standard, International Organization for Standardization, June 2011

Jadhav M, Nerkar PM (2015) Survey on finger vein biometric authentication system. Int J Comput Appl (3):14–17

Jain AK, Nandakumar K, Nagar A (2008) Biometric template security. EURASIP J Adv Signal Process 2008:113

Kauba C, Prommegger B, Uhl A (2018) The two sides of the finger—an evaluation on the recognition performance of dorsal vs. palmar finger-veins. In: Proceedings of the international conference of the biometrics special interest group (BIOSIG’18), Darmstadt, Germany (accepted)

Kauba C, Prommegger B, Uhl A (2019) An available open source vein recognition framework. In: Uhl A, Busch C, Marcel S, Veldhuis R (eds) Handbook of vascular biometrics. Springer Science+Business Media, Boston, MA, USA, pp 77–112

Kauba C, Prommegger B, Uhl A (2019) Openvein—an open-source modular multi-purpose finger-vein scanner design. In: Uhl A, Busch C, Marcel S, Veldhuis R (eds) Handbook of vascular biometrics. Springer Science+Business Media, Boston, MA, USA, pp 77–112

Kumar A, Zhou Y (2012) Human identification using finger images. IEEE Trans Image Process 21(4):2228–2244

Lai Y-L, Jin Z, Teoh ABJ, Goi B-M, Yap W-S, Chai T-Y, Rathgeb C (2017) Cancellable iris template generation based on indexing-first-one hashing. Pattern Recognit 64:105–117

Maio D, Maltoni D, Cappelli R, Wayman JL, Jain AK (2002) FVC2002: second fingerprint verification competition. In: 16th international conference on pattern recognition, 2002. Proceedings, vol 3. IEEE, pp 811–814

Maltoni D, Maio D, Jain AK, Prabhakar S (2009) Handbook of fingerprint recognition, 2nd edn. Springer

Miura N, Nagasaka A, Miyatake T (2004) Feature extraction of finger-vein patterns based on repeated line tracking and its application to personal identification. Mach Vis Appl 15(4):194–203

Miura N, Nagasaka A, Miyatake T (2007) Extraction of finger-vein patterns using maximum curvature points in image profiles. IEICE Trans Inf Syst 90(8):1185–1194

Paikin G, Tal A (2015) Solving multiple square jigsaw puzzles with missing pieces. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4832–4839

Piciucco E, Maiorana E, Kauba C, Uhl A, Campisi P (2016) Cancelable biometrics for finger vein recognition. In: 2016 First international workshop on sensing, processing and learning for intelligent machines (SPLINE). IEEE, pp 1–5

Ratha NK, Chikkerur S, Connell JH, Bolle RM (2007) Generating cancelable fingerprint templates. IEEE Trans Pattern Anal Mach Intell 29(4):561–572

Ratha NK, Connell J, Bolle R (2001) Enhancing security and privacy in biometrics-based authentication systems. IBM Syst J 40(3):614–634

Sholomon D, David O, Netanyahu NS (2013) A genetic algorithm-based solver for very large jigsaw puzzles. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1767–1774

Starck J-L, Fadili J, Murtagh F (2007) The undecimated wavelet decomposition and its reconstruction. IEEE Trans Image Process 16(2):297–309

Ton BT, Veldhuis RNJ (2013) A high quality finger vascular pattern dataset collected using a custom designed capturing device. In: 2013 International conference on biometrics (ICB). IEEE, pp 1–5

Uhl A (2019) State-of-the-art in vascular biometrics. In: Uhl A, Busch C, Marcel S, Veldhuis R (eds) Handbook of vascular biometrics. Springer Science+Business Media, Boston, MA, USA, pp 3–62

Wolberg G (1998) Image morphing: a survey. Vis Comput 14(8):360–372

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2020 The Author(s)

About this chapter

Cite this chapter

Kirchgasser, S., Kauba, C., Uhl, A. (2020). Cancellable Biometrics for Finger Vein Recognition—Application in the Feature Domain. In: Uhl, A., Busch, C., Marcel, S., Veldhuis, R. (eds) Handbook of Vascular Biometrics. Advances in Computer Vision and Pattern Recognition. Springer, Cham. https://doi.org/10.1007/978-3-030-27731-4_16

Download citation

DOI: https://doi.org/10.1007/978-3-030-27731-4_16

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-27730-7

Online ISBN: 978-3-030-27731-4

eBook Packages: Computer ScienceComputer Science (R0)