Abstract

Optical quality control of MEMS and microsystems is challenging because these structures are micro-scale and three-dimensional. Here we lay out different optical systems that can be used for 3D optical quality control in general and for such structures in particular. We further investigate one of these technologies, plenoptic cameras, and characterize them for the described task, showing advantages and disadvantages. Key advantages are, first, a large increase in depth of field compared to conventional microscope camera systems, which allows fast acquisition of non-flat systems, and second, the resulting total focus images and depth maps. Finally, we conclude that these advantages render plenoptic cameras a valuable technology for quality control applications.

1 Introduction

1.1 MEMS and Typical Defects

Advancements in manufacturing techniques for micro-electro-mechanical systems (MEMS) and microsystems, such as deep reactive ion etching, have further increased the range of possible applications of these systems. Bridging the world of electronics and mechanics, MEMS are used as micro actuators, generating sound, vibration and liquid flow in microfluidic pumps, while micro sensors turn acceleration, gyroscopic forces, inertia, pressure, sound waves et cetera into electric signals. These actuators and sensors are used in many industries such as mobile computing, automotive, VR/AR, electronic entertainment, pharma and medical. To achieve high production yield and quality of the final products, quality control is key. Functional testing of MEMS is often only possible in a further integrated assembly. Yet it is desirable to assess product quality as early as possible to avoid assembling defective components into integrated systems, which renders a whole assembly worthless. Optical quality control is one possibility to assess quality at an early stage, before further assembly. However, MEMS have a key property that makes optical quality control especially challenging: functional structures of MEMS are intrinsically extended in depth (see Fig. 1, Fig. 2 and Fig. 3).

An optical quality control system for MEMS must therefore fulfill three requirements: (1) Micrometer-scale features must be resolved in all three spatial dimensions. Production defects such as a displaced transducer bridge or wire bond may only be detectable by utilizing height data captured by the optical system. (2) The relevant depth of the product must be acquired at sufficient resolution. (3) The system’s throughput must be high enough to allow for real-time inspection of produced units.

1.2 State of the Art (3D) Inspection Technology

Different optical acquisition systems can be used for quality control in general, but only a few are suitable for MEMS inspection. The straightforward approach is conventional wide-field microscopy. With a typical microscope objective offering sub-micrometer resolution, a Field of View (FoV) of about 0.3 mm² can be captured in one image. Capturing the area of an eight-inch wafer (about 30’000 mm²) would therefore already require 100’000 images. The typical Depth of Field (DoF) of the same lens covers at most 2 µm in height [1]. For MEMS with 3D structures whose thickness ranges from sub-micrometer up to several 100 µm, a technique called “focus stacking” may be used to expand the DoF and acquire depth data [2]. For very common structures 100 µm in height, 50 focal planes per position are needed, so the eight-inch wafer would require 5 million images. Even with a fast camera running at 100 fps (frames per second) and a manipulation system matching this speed for repositioning the wafer, it would take 14 h to capture the 3D data, not yet considering any kind of image analysis. While 2D inspection is a very common technique, 3D inspection by focus stacking is not employed in industry for MEMS inspection.
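The image-count estimate above can be reproduced with a few lines of arithmetic. The following is a minimal sketch using the figures quoted in the text (30’000 mm² wafer area, 0.3 mm² FoV, 2 µm DoF, 100 µm structure height, 100 fps); the function name and its parameters are illustrative and not part of any inspection software.

```python
import math

def focus_stack_image_count(wafer_area_mm2, fov_area_mm2, structure_height_um, dof_um):
    """Return (tiles, slices, total images) for a full focus-stacked wafer scan.

    Assumes non-overlapping tiles and one image per DoF-thick slice.
    """
    # The small tolerance guards against floating-point round-off before ceil().
    tiles = math.ceil(wafer_area_mm2 / fov_area_mm2 - 1e-9)
    slices = math.ceil(structure_height_um / dof_um - 1e-9)
    return tiles, slices, tiles * slices

# Figures quoted in the text above.
tiles, slices, total = focus_stack_image_count(30_000, 0.3, 100, 2)
frame_rate_fps = 100
hours = total / frame_rate_fps / 3600

print(f"{tiles} tiles x {slices} slices = {total} images, about {hours:.1f} h at {frame_rate_fps} fps")
```

The estimate ignores tile overlap for stitching and any stage settling time, so it is a lower bound on the real acquisition time.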

Another possibility to obtain 3D depth information is to use stereo camera images and analyze the parallax shift of image features. However, parts of an image that are occluded from one view lack parallax information. This renders inference of the depth impossible at these places. Systems with more cameras mitigate this issue, as the chance of a part of the image being occluded for all but one camera decreases with the number of cameras [3]. However, the complexity and bulkiness of the system also increase with the number of cameras. The need for two lenses and cameras imaging the same FoV limits the application to macroscopic lenses. Thus the limited achievable resolution, laterally as well as in height, so far prohibits the application of stereo camera systems for MEMS inspection (Fig. 4).

Stereo camera system offered by Ensenso GmbH [4].

Laser Line Triangulation (LLT) illuminates the sample by sweeping a projected laser line across it at an angle to the viewing direction [5]. Depth displacement in the imaged sample shifts the apparent laser line position. On uniform samples such systems deliver precise depth maps quickly [6], but samples with strong differences in reflectivity and absorption can cause artefacts. The lateral resolution of such systems is mostly limited by diffraction properties of the projected laser beam (several µm), while the depth resolution is a function of incidence angle, sensor pixel size and lens magnification. Resolutions of common systems range from micrometers to several tens of micrometers. LLT systems do not generate photo-like images and therefore need to be combined with microscope cameras in order to deliver both image and depth map.
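The dependence of depth resolution on incidence angle, pixel size and magnification can be illustrated with a simple geometric model. The sketch below assumes a camera looking along the surface normal and a laser line inclined by an angle θ to that viewing direction, so that a height change Δz shifts the line laterally by Δz·tan θ on the sample. Sub-pixel line fitting, which real systems typically use, is ignored, and the numeric values are hypothetical.

```python
import math

def llt_depth_per_pixel_um(pixel_size_um, magnification, laser_angle_deg):
    """Height change that shifts the imaged laser line by exactly one sensor pixel.

    Assumed geometry: camera views along the surface normal, laser line is
    inclined by laser_angle_deg relative to the viewing direction.
    """
    theta = math.radians(laser_angle_deg)
    # One pixel on the sensor corresponds to pixel_size / magnification on the sample.
    lateral_shift_um = pixel_size_um / magnification
    return lateral_shift_um / math.tan(theta)

# Hypothetical example values, not taken from a specific product.
dz = llt_depth_per_pixel_um(pixel_size_um=5.0, magnification=1.0, laser_angle_deg=45.0)
print(f"depth step per pixel: {dz:.2f} um")
```

In this model a steeper laser angle or a higher magnification improves depth resolution, at the price of more shadowing and a smaller FoV respectively.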

A very similar technology, probably in direct competition with plenoptic cameras, is structured illumination microscopy. It is probably also the most versatile technology, ranging from moiré fringe exploitation [7] to various kinds of pattern projection and 3D information extraction [8]. In the first case, the superposition of a well-defined illumination pattern with the structure of the object generates a beat pattern otherwise known as moiré fringes. The scene has to be captured several times with a slightly re-oriented or phase-shifted pattern. The simpler pattern projection relies on known geometric relations between camera and projector and, depending on the actual pattern, has the advantage of needing only one image per scene. In both cases subsequent processing of the image(s) enables the generation of 3D data. With proper computational hardware and a fast camera, several 3D images might be obtained per second. In terms of DoF, FoV and resolution, the same constraints as for any other optical system apply (Fig. 5).
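To illustrate why several captures with a phase-shifted pattern are needed, the following sketch uses the widely known four-step phase-shifting formula. This particular algorithm is an assumption chosen for illustration; the text does not state which reconstruction method the cited systems use. Each pixel is modeled as I_k = A + B·cos(φ + k·π/2), and the unknown phase φ, which encodes height, is recovered independently of the background A and the modulation B.

```python
import math

def four_step_phase(i0, i1, i2, i3):
    """Wrapped phase from four intensities taken at pattern shifts of 0, 90, 180, 270 degrees."""
    # i3 - i1 = 2*B*sin(phi) and i0 - i2 = 2*B*cos(phi), so A and B cancel.
    return math.atan2(i3 - i1, i0 - i2)

def simulate_pixel(phase, offset=100.0, amplitude=50.0):
    """Hypothetical intensities of one pixel for the four pattern shifts."""
    return [offset + amplitude * math.cos(phase + k * math.pi / 2) for k in range(4)]

true_phase = 0.7  # radians, arbitrary test value
recovered = four_step_phase(*simulate_pixel(true_phase))
print(f"true phase {true_phase:.3f} rad, recovered {recovered:.3f} rad")
```

The recovered phase is wrapped to (−π, π]; converting it to height additionally requires phase unwrapping and a calibration of the projector-camera geometry.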

Imaging based on holography employing laser interference (DHM, Digital Holographic Microscopy) offers outstanding depth resolution of around 20 nm and simultaneously a considerable DoF of up to a few 100 µm [9,10,11]. The latter, however, only holds for rather continuous structures. As soon as steps and sharp edges are involved, those numbers drop to between sub-µm and a few µm. The lateral resolution as well as the FoV are limited by the laws of physics, just as for the other optical imaging technologies. Even though this technology has some drawbacks, it offers very high performance in terms of 3D imaging. Unfortunately, this comes at a cost at least an order of magnitude higher than that of any of the other systems (Fig. 6).

Typical outline of a Digital Holographic Microscopy system [11].

OCT (Optical Coherence Tomography) shall be mentioned as a 3D imaging technology as well. Although it is an interferometric principle like holography, it relies on the short coherence length of broadband light sources such as superluminescent LEDs [12]. The typical depth resolution achieved goes down to the sub-micrometer range. The DoF of an OCT system depends on either a mechanical movement of a mirror in the reference arm (Time Domain OCT) or the wavelength sweeping of the source (Frequency Domain OCT) and is, for TD-OCT at least in theory, only limited by size constraints. Lateral resolution again is limited by physics, depending on the lens used. Besides the need for depth scanning, which increases the acquisition time, the small number of pixels of available cameras with smart pixels (e.g. 280 × 292 [13]) limits the FoV and thereby further increases the acquisition time. It shall be mentioned that standard cameras may be used as well but demand a far more complex setup [14].
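The link between source bandwidth and OCT depth resolution can be made concrete with the standard textbook expression for a source with a Gaussian spectrum, Δz = (2 ln 2 / π) · λ₀² / Δλ (in air). The sketch below is an illustration under that Gaussian-spectrum assumption; the source parameters are hypothetical and not taken from the cited systems.

```python
import math

def oct_axial_resolution_um(center_wavelength_nm, bandwidth_fwhm_nm):
    """Axial (depth) resolution in air for a Gaussian-spectrum source, in micrometers."""
    resolution_nm = (2 * math.log(2) / math.pi) * center_wavelength_nm ** 2 / bandwidth_fwhm_nm
    return resolution_nm / 1000.0

# Hypothetical superluminescent diode: 840 nm center wavelength, 50 nm bandwidth.
narrow = oct_axial_resolution_um(840.0, 50.0)
# Hypothetical much broader source with the same center wavelength.
broad = oct_axial_resolution_um(840.0, 300.0)
print(f"50 nm bandwidth: {narrow:.2f} um, 300 nm bandwidth: {broad:.2f} um")
```

The broader the spectrum, the shorter the coherence length and the finer the depth resolution, which is why broadband sources are used.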

2 3D Real-Time Imaging with Plenoptic Camera

In the following the hard- and software technology of plenoptic cameras and their applications in our context of inspection systems are described.

2.1 Principle of Plenoptic Camera Technology

The general principle of the plenoptic camera, also known as the light field camera, dates back to Leonardo da Vinci, who even described the principle of a pinhole camera to capture the light field [15]. The first multi-lens concept and actual camera were described and developed by the physicist Gabriel Lippmann in 1908 [16], although the term “light field” was not used at that time. In 1992 Edward Adelson and John Wang published an article describing the plenoptic camera concept which, in its basic principle, is still used today [17].

Conventional 2D digital cameras use an objective which images an object onto a 2-dimensional array of photosensitive elements. In a plenoptic camera, as Adelson and Wang described, a microlens array is placed between the main lens and the photosensitive camera chip. The main lens generates an intermediate image which is subsequently imaged by the microlenses onto subsegments of the photosensitive part of the chip [18] (see Fig. 7).

As depicted by the small red and yellow arrows on the right side of Fig. 7, an object point is imaged onto a different camera pixel relative to the optical axis of each corresponding microlens. From this, not only the position of the object point in space can be determined but also the direction from which the light rays originated. Adjacent microlenses produce sub-images with partially overlapping FoVs. Knowing the configuration of main lens, microlens array and camera chip, it is possible to computationally reconstruct a 2D image and a depth map [19] (Fig. 8), which together are used to form the 3D image as depicted in Fig. 9.
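The depth cue described above can be sketched with a one-dimensional pinhole model. Two neighboring microlenses see the same point of the intermediate image at different offsets from their optical axes, and by similar triangles the ratio of lens spacing to that offset difference gives the distance of the point in units of the microlens-to-sensor distance. This is a simplified illustration under an ideal pinhole assumption, not the reconstruction algorithm of any specific camera; all names and numbers are hypothetical.

```python
def project(point_x, point_dist, lens_center, sensor_dist):
    """Sensor position where a pinhole microlens images a point.

    point_dist: distance of the point in front of the microlens plane.
    sensor_dist: distance of the sensor behind the microlens plane.
    """
    return lens_center + (lens_center - point_x) * sensor_dist / point_dist

def relative_depth(lens_center_1, lens_center_2, image_x_1, image_x_2):
    """Point distance divided by sensor distance, from two microlens sub-images."""
    baseline = lens_center_2 - lens_center_1
    # Disparity: difference of the image offsets measured from each lens axis.
    disparity = (image_x_2 - lens_center_2) - (image_x_1 - lens_center_1)
    if disparity == 0:
        raise ValueError("no parallax between the two sub-images")
    return baseline / disparity

# Hypothetical geometry in arbitrary length units.
c1, c2 = 0.0, 1.0            # microlens centers
point_x, point_dist = 0.3, 4.0
sensor_dist = 1.0
x1 = project(point_x, point_dist, c1, sensor_dist)
x2 = project(point_x, point_dist, c2, sensor_dist)
depth = relative_depth(c1, c2, x1, x2)
print(f"recovered relative depth: {depth:.3f}")
```

Converting such a relative depth into metric object height additionally requires the calibrated main-lens geometry mentioned in the text.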

2.2 Application of Plenoptic Cameras for MEMS and Microsystems Inspection

As summarized above, many 3D imaging technologies exist, each with its strengths and weaknesses. The same is true for plenoptic cameras. Due to redundant imaging of object points in several microlens sub-images, the lateral resolution of the reconstructed 2D image is reduced by a factor of 2 in each direction. This may be countered by using camera chips with a large pixel count. However, this increases the amount of data to be handled by the camera interface, the computational hardware and finally the storage. These are in fact the main reasons why plenoptic cameras became available for real-world applications only during the last decade. Modern GPU hardware has made real-time reconstruction of high-pixel-count light field camera images manageable.

Plenoptic cameras from Raytrix offer unique advantages for industrial applications. As only one camera with a standard main lens is needed, the entire setup becomes rather lean and consumes little space. Assuming a fast interface, e.g. Camera Link, acquisition of full 3D images at 80 frames per second is feasible. As a side effect of the special Raytrix technology, which uses microlenses with different focal lengths on each of the arrays [19], the DoF is increased by a factor of 6 compared to a standard camera. Probably the biggest advantage compared to many of the other 3D imaging technologies is the almost total lack of occlusion. As can be seen in Fig. 10, this enables inspection of devices with high aspect ratios and steep edges.

Upper left: raw image from a plenoptic camera, Lower left: detail crop from the area marked by the red rectangle. Round sub-images created by the microlenses are visible. Note the duplication of features in neighboring sub-images. Lower right: algorithmically reconstructed sharp image. Right: 3D rendering of the image. (Color figure online)

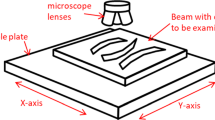

Within the European project “CITCOM” (see Note 1) we are investigating in detail the 3D inspection of MEMS using light field cameras. We used and assessed two different combinations of plenoptic camera and main lens during development of an automatic optical quality-control system. Specs of those are provided in Table 1. Shown in Fig. 11 is the test setup (no cabling) with a Raytrix R26 camera and 3× telecentric lens on the left side and an R12 in microscope configuration employing a 20× main lens.

The R26 setup enables very fast capturing of an entire eight-inch wafer. Considering an overlap of 10% to enable stitching of the images, only about 600 images are needed, which might be acquired in less than 10 s. In practice, however, it is not the speed of the camera that limits the acquisition time but the movement of the stages. While the 3D reconstruction of a single image can be obtained at the maximal frame rate on PCs employing 4 GPUs (Graphics Processing Units), image stitching and automated recognition of defects and anomalies have to be considered as well.

While most of the typical defects and anomalies might be found with this setup, some demand higher resolution in both the lateral and the vertical direction. For those cases a second camera, an R12 in combination with a microscope objective, is used on specific areas of interest. Scanning the entire wafer with this camera is neither useful, considering that the necessary 130’000 images at 30 fps (frames per second) would take more than one hour at best, nor is it needed. With a FoV covering less than 0.3 mm², most of the wafer or substrate area appears as a plain, featureless surface, while in specific areas a closer look might be highly appreciated.
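The acquisition times quoted for the two cameras follow directly from image count and frame rate. The short sketch below reproduces them using only the numbers given in the text (about 600 images at 80 fps for the R26, 130’000 images at 30 fps for the R12). It deliberately ignores stage movement, which the text identifies as the real bottleneck; the function name is illustrative.

```python
def camera_limited_scan_time_s(n_images, frames_per_second):
    """Lower bound on scan time if only the camera frame rate mattered."""
    return n_images / frames_per_second

# Figures quoted in the text above.
r26_time_s = camera_limited_scan_time_s(600, 80)
r12_time_s = camera_limited_scan_time_s(130_000, 30)

print(f"R26 overview scan: {r26_time_s:.1f} s")
print(f"R12 full-wafer scan: {r12_time_s / 60:.0f} min")
```

The roughly three orders of magnitude between the two results is the reason for the two-stage approach: a fast overview scan, followed by high-resolution imaging of selected areas only.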

Images taken with the setup described above are presented in Fig. 12; they allow for metrology in all spatial dimensions on a sub-micrometer scale.

A real disadvantage, which applies to other techniques as well, is the inability to computationally reconstruct the depth map at locations with missing contrast. However, this can be mitigated by illuminating with patterned light as depicted in Fig. 13. Those images were generated within a Bachelor thesis [20] initiated and supervised by CSEM. This structured illumination reveals the surface topology and allows a 3D reconstruction even where contrast is lacking.

Imaging of a component with partially low contrast, resulting in a lack of depth information (left). Patterned illumination projection mitigates the effect of low-contrast surfaces (right). Colored images depict the color-coded depth maps of the 3D objects. Images by [20].

As the reconstructed total focus images and depth maps can be exported as 2D image data, existing software for quality control, fault detection and anomaly detection can directly process and analyze these images. In order to achieve a proper performance of the system, parameters for reconstruction of total focus and depth maps need to be adapted for different inspection scenarios.
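Because a depth map is just a 2D array of height values, even very simple downstream checks become possible. The following sketch flags pixels whose height deviates from a reference depth map by more than a tolerance, as might be done to spot a displaced wire bond. It is a hypothetical minimal example with made-up values, not the defect-detection software used in the project.

```python
def height_defects(depth_map_um, reference_um, tolerance_um):
    """Return (row, col) positions whose height deviates from the reference beyond the tolerance."""
    defects = []
    for r, (row, ref_row) in enumerate(zip(depth_map_um, reference_um)):
        for c, (z, z_ref) in enumerate(zip(row, ref_row)):
            if abs(z - z_ref) > tolerance_um:
                defects.append((r, c))
    return defects

# Hypothetical 3 x 4 depth maps in micrometers.
reference = [
    [0.0, 0.0, 50.0, 50.0],
    [0.0, 0.0, 50.0, 50.0],
    [0.0, 0.0, 50.0, 50.0],
]
measured = [
    [0.2, -0.1, 50.3, 49.8],
    [0.1, 0.0, 38.0, 50.1],   # one pixel sits 12 um too low
    [0.0, 0.3, 50.2, 49.9],
]

found = height_defects(measured, reference, tolerance_um=2.0)
print(found)
```

In practice the tolerance would have to be chosen per inspection scenario, in line with the reconstruction parameters mentioned above.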

3 Conclusion

Real-time 3D inspection is not needed for every application. Where it is needed, however, multiple technologies might be considered, ranging from simple stacking of 2D images through stereo camera configurations all the way to interferometric systems. As usual, complexity and cost increase with more demanding needs. Plenoptic cameras might not be perfect and certainly will not replace every other inspection technology. However, as is the case for MEMS and other microsystems in general, plenoptic cameras have a huge potential whenever the following properties are advantageous:

- 6-times larger Depth of Field compared to a standard camera
- occlusion-free 3D imaging
- real-time 3D image acquisition at up to 80 frames per second
- simple implementation due to single camera/lens configuration
- cost efficient compared to competing technologies with similar performance
- simple combination with other means of inspection, e.g. electrical probing, possible

Plenoptic cameras are a valuable instrument for the inspection of MEMS because of their unique advantages at a competitive price.

Notes

1. www.citcom.eu; This project has received funding from the European Union’s HORIZON 2020 research and innovation program under Grant Agreement no. 768883.

References

[1] Edmund Optics Ltd: 20× Mitutoyo Plan Apo Infinity Corrected Long WD Objective. Edmund Optics Ltd. (2019). https://www.edmundoptics.de/p/20x-mitutoyo-plan-apo-infinity-corrected-long-wd-objective/6625/. Accessed 16 Sept 2019

[2] Ens, J., Lawrence, P.: An investigation of methods for determining depth from focus. IEEE Trans. Pattern Anal. Mach. Intell. 15(2), 97–108 (1993)

[3] Wilburn, B., et al.: High performance imaging using large camera arrays. ACM Trans. Graph. 24(3), 765–776 (2005)

[4] Ensenso GmbH: www.ensenso.com. Ensenso GmbH (2018). https://www.ensenso.com/. Accessed 12 Sept 2019

[5] Callen, D.: Vision-Spectra.com: Coherent Inc., May 2017. https://www.photonics.com/Article.aspx?AID=62061. Accessed 11 Sept 2019

[6] Micro-Epsilon: Micro Epsilon Laser Triangulation system. MICRO-EPSILON UK & Ireland Ltd. (2019). https://www.micro-epsilon.co.uk/service/glossar/Laser-Triangulation.html

[7] Gustafsson, M.G.L.: Surpassing the lateral resolution limit by a factor of two using structured illumination microscopy. J. Microsc. 198(2), 82–87 (2000)

[8] Geng, J.: Structured-light 3D surface imaging: a tutorial. Adv. Opt. Photonics 3(2), 128–160 (2011)

[9] Lyncée Tec: www.lynceetec.com. https://www.lynceetec.com/. Accessed 11 Sept 2019

[10] Pagliarulo, V., Russo, T., Miccio, L., Ferraro, P.: Numerical tools for the characterization of microelectromechanical systems by digital holographic microscopy. J. Micro/Nanolithogr. MEMS MOEMS 14(4), (2015)

[11] Wikipedia Contributors: Digital holographic microscopy. Wikipedia, The Free Encyclopedia, 28 August 2019. https://en.wikipedia.org/w/index.php?title=Digital_holographic_microscopy&oldid=912841452. Accessed 12 Sept 2019

[12] Wikipedia Contributors: Optical coherence tomography. Wikipedia, The Free Encyclopedia, 11 July 2019. https://en.wikipedia.org/w/index.php?title=Optical_coherence_tomography&oldid=905735980. Accessed 12 Sept 2019

[13] Heliotis AG: www.heliotis.ch. https://www.heliotis.ch/index.html. Accessed 12 Sept 2019

[14] Dunsby, C., Gu, Y., French, P.M.W.: Single-shot phase-stepped wide-field coherence-gated imaging. Opt. Express 11(2), 105–115 (2003)

[15] LightField Forum: History of light field photography (timeline). http://lightfield-forum.com/what-is-the-lightfield/history-of-light-field-photography-timeline/. Accessed 13 Sept 2019

[16] Lippmann, G.: Épreuves réversibles donnant la sensation du relief. J. Phys. Theor. Appl. 7(1), 821–825 (1908)

[17] Adelson, E.H., Wang, J.Y.A.: Single lens stereo with a plenoptic camera. IEEE Trans. Pattern Anal. Mach. Intell. 14(2), 99–106 (1992)

[18] Lumsdaine, A., Georgiev, T.: Full resolution lightfield rendering. Indiana University and Adobe Systems (2008)

[19] Perwass, C., Wietzke, L.: Single lens 3D-camera with extended depth-of-field. Proc. SPIE 8291, 4- (2012)

[20] Ammann, S., Gilson, O.: Pattern Illumination for Lightfield Camera. Interstaatliche Hochschule für Technik Buchs, Buchs (2019)

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2021 The Author(s)

About this paper

Cite this paper

Kirschmann, M.A., Pierer, J., Steinecker, A., Schmid, P., Erdmann, A. (2021). Plenoptic Inspection System for Automatic Quality Control of MEMS and Microsystems. In: Ratchev, S. (eds) Smart Technologies for Precision Assembly. IPAS 2020. IFIP Advances in Information and Communication Technology, vol 620. Springer, Cham. https://doi.org/10.1007/978-3-030-72632-4_16

DOI: https://doi.org/10.1007/978-3-030-72632-4_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-72631-7

Online ISBN: 978-3-030-72632-4

eBook Packages: Computer Science, Computer Science (R0)