Abstract

Human attention processes play a major role in the optimization of human-machine interaction (HMI) systems. This work describes a suite of innovative components within a novel framework for assessing the human factors state of the human operator in real time, primarily by means of gaze. The objective is to derive parameters that characterize the situation awareness of the human collaborator, a central concept in the evaluation of interaction strategies in collaboration. The human control of attention provides measures of executive functions that make it possible to characterize key features in the domain of human-machine collaboration. This work presents a suite of human factors analysis components (the Human Factors Toolbox) and its application in the assembly processes of a future production line. Comprehensive HMI experiments are described, conducted with typical tasks including collaborative pick-and-place in a lab-based prototypical manufacturing environment.


1 Introduction

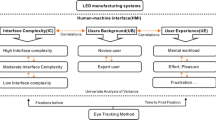

Human-machine interaction in manufacturing has recently seen the emergence of innovative technologies for intelligent assistance [1]. Human factors are crucial in Industry 4.0, where human and machine work side by side as collaborators. Human-related variables are essential for the evaluation of human-interaction metrics [2]. To work seamlessly and efficiently with their human counterparts, complex manufacturing work cells, such as those for assembly, must likewise rely on measurements that predict the human worker's behavior, cognitive and affective state, task-specific actions and intent in order to plan their own actions. A typical, currently available application is anticipatory control with a human-in-the-loop architecture [3], which enables robots to anticipate the actions of their human partners from recently observed gaze patterns and to perform task actions according to these predictions.

To characterize the human state by means of quantitative measurement technologies, we expect these measurements to refer to performance measures that represent psychologically relevant parameters. Executive functions [18] have been investigated scientifically as activities of cognitive control, such as (i) inhibition in selective attention, (ii) task switching capabilities, and (iii) task planning. These functions depend on dynamic attention and are relevant to many capability requirements in assembly: (i) appropriate focus on manual interaction at the right time, (ii) switching attention between multiple concurrent tasks, and (iii) action planning within short time periods for optimized task performance. Consequently, measuring and modeling the state of cognition-relevant human factors, as well as the current human situation awareness, is mandatory for understanding immediate and delayed action planning. However, the state of the art does not yet address these central human cognitive functions to a sufficient degree. This work intends to help fill this gap by presenting a novel framework for estimating important parameters of executive functions in assembly processes by means of attention analysis. Building on mobile eye tracking that has been made available for real-time interpretation of gaze, we developed software for fast estimation of executive function parameters solely from eye tracking data. In summary, this work reviews novel methodologies developed at the Human Factors Lab of JOANNEUM RESEARCH to measure the human factors state of the operator in real time, with the purpose of deriving fundamental parameters that determine situation awareness, concentration, task switching and cognitive arousal, which are central to the interaction strategies of collaborative teams. The suite of gaze-based analysis software is referred to as the Human Factors Toolbox (Fig. 1).

Fig. 1. Human-robot collaboration and intuitive interface (HoloLens, eye tracking, markers for OptiTrack localization) for the assessment of the human factors state to characterize key features in the collaboration domain. HRC within a tangram puzzle assembly task. (a) The operator collaborates with the robot in the assembly (only the robot may handle 'dangerous' pieces). (b) Egocentric operator view with augmented-reality-based navigation (arrow), piece localization, gaze (blue sphere), and the current state of mental load (L) and concentration (C) in the HoloLens display. (c) Recommended piece (arrow) and gaze on the currently grabbed puzzle piece.

Human situation awareness is determined on the basis of concrete measures of eye movements towards production-relevant processes that need to be observed and evaluated by the human. Motivated by the theoretical work of [4] on situation awareness, the presented work specifically aims (i) at dynamically estimating the distribution of attentional resources with respect to task-relevant 'areas of interaction' over time, determined from features of 3D gaze analysis and a precise optical tracking system, and (ii) at deriving from this, in real time, human factors such as (a) human concentration on a given task, (b) human mental workload, (c) situation awareness and (d) measures related to executive functions, i.e., the task switching rate. Gaze in the context of collaboration is analyzed in terms of primarily visual affordances for collaboration. In this work we demonstrate the relevance of eye movement features for a thorough characterization of the state of human factors in manufacturing assembly cells, with the purpose of optimizing overall human-machine collaboration performance.

In the following, Sect. 2 provides an overview of emerging attention analysis technologies in the context of human-machine interaction in manufacturing. Section 3 provides insight into the application of a learning scenario in an assembly work cell. Section 4 provides conclusions and proposals for future work.

2 Human Factors Measurement Technologies for Human-Machine Interaction

2.1 Intuitive Multimodal Assistive Interface and Gaze

In the conceptual work on intuitive multimodal assistive interfaces, the interaction design is fully aligned with the user requirements for an intuitive understanding of technology [5]. For intuitive interaction, a human-centered approach is chosen that starts from inter-human interactions and the collaborative process itself. The intuitive interaction system is based on the following principles:

- Natural interaction: By mimicking human interaction mechanisms, fast and intuitive interaction processes have to be guaranteed.
- Multimodal interaction: The implementation of gaze, speech, gestural, and Mixed-Reality interaction offers the user as much interaction freedom as possible.
- Tied modalities: The different interaction modalities are linked to emphasize the intuitive interaction mechanisms.
- Context-aware feedback: Feedback channels deliver information about the task and the environment to the user; care must be taken as to what is delivered, when, and where.

A central component called the 'Interaction Model' (IM) acts as interaction control and handles communication with the peripheral systems. The IM also links the four interaction modalities and ensures information exchange between the components. It triggers every form of interaction process, both direct and indirect, and controls the context sensitivity of the feedback. It is further responsible for dialog management and information dispatching.
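To make the role of the IM more concrete, the following Python sketch shows one way a central component could link modalities and dispatch commands. Everything in it is hypothetical: the `ModalityEvent` and `InteractionModel` names, the `dispatch` callback standing in for the periphery system, and the 1500 ms fusion window are illustrative assumptions, not the IM described in [5]. Only one tied-modality rule is shown: resolving a spoken "take this" with the most recent gaze target.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class ModalityEvent:
    """Hypothetical event emitted by one of the four modalities."""
    modality: str   # "gaze", "speech", "gesture" or "mr" (mixed reality)
    payload: str    # e.g. the fixated object id or the recognized utterance
    t_ms: int       # timestamp in milliseconds


@dataclass
class InteractionModel:
    """Sketch of a central component that links modalities and dispatches
    commands to the periphery (e.g. a robot controller or AR feedback)."""
    dispatch: Callable[[str, str], None]   # (channel, message) -> None
    fusion_window_ms: int = 1500           # assumed window for tying gaze to speech
    last_gaze: Optional[ModalityEvent] = None
    log: List[str] = field(default_factory=list)

    def handle(self, event: ModalityEvent) -> None:
        if event.modality == "gaze":
            # Remember the latest fixated object so other modalities can refer to it.
            self.last_gaze = event
        elif event.modality == "speech" and event.payload.startswith("take this"):
            # Tied modalities: resolve the deictic "this" with the recent gaze target.
            target = self._recent_gaze_target(event.t_ms)
            if target is not None:
                self.dispatch("robot", f"pick {target}")
            else:
                # Context-aware feedback when the reference cannot be resolved.
                self.dispatch("feedback", "please look at the piece you mean")
        else:
            # Gesture and mixed-reality handling are omitted in this sketch.
            self.log.append(f"unhandled {event.modality}: {event.payload}")

    def _recent_gaze_target(self, t_ms: int) -> Optional[str]:
        if self.last_gaze and t_ms - self.last_gaze.t_ms <= self.fusion_window_ms:
            return self.last_gaze.payload
        return None
```

For example, a gaze event on `piece_7` at 1000 ms followed by the utterance "take this" at 1800 ms yields the dispatch `("robot", "pick piece_7")`; if the utterance arrives too long after the last fixation, the sketch falls back to context-sensitive feedback instead.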

In human factors and ergonomics research, the analysis of eye movements enables the development of methods for investigating human operators' cognitive strategies and for reasoning about individual cognitive states [6]. Situation awareness (SA) is a measure of an individual's knowledge and understanding of the current and expected future states of a situation. Eye tracking provides an unobtrusive method to measure SA in environments where multiple tasks need to be controlled. [7] provided first evidence that fixation duration on relevant objects and a balanced allocation of attention increase SA. However, the assessment of executive functions requires extending situation analysis towards concrete measures of the distribution of attention, as described in the following.

2.2 Recovery of 3D Gaze in Human-Robot Interaction

Localization of human gaze is essential for localizing situation awareness with reference to relevant processes in the work cell. Munn and Pelz [8] first proposed the recovery of 3D information on human gaze with monocular eye tracking by triangulating the 2D gaze positions of subsequent key frames within the scene video of the eye tracking system. Santner et al. [9] proposed gaze estimation in 3D space and achieved accuracies of ≈1 cm with RGB-D-based position tracking within a predefined 3D model of the environment. To achieve the highest level of gaze estimation accuracy in a research study, it is crucial to track the user's frustum/gaze behavior with respect to the worker's relevant environment. Solutions that realize this include vision-based motion capture systems: OptiTrack achieves high localization accuracy (≈0.06 mm), which in turn supports accurate gaze estimation.
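The triangulation idea can be illustrated with a small geometric sketch. It assumes that each 2D gaze position has already been converted into a 3D ray (camera centre plus viewing direction) in a common world frame, e.g. using known camera poses of two key frames; this conversion is not shown. The hypothetical function `triangulate_gaze` then returns the midpoint of the shortest segment between the two rays, a standard closest-point construction that is not necessarily the exact procedure of [8].

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _add_scaled(p: Vec3, d: Vec3, s: float) -> Vec3:
    return (p[0] + s * d[0], p[1] + s * d[1], p[2] + s * d[2])


def triangulate_gaze(p1: Vec3, d1: Vec3, p2: Vec3, d2: Vec3) -> Vec3:
    """Estimate a 3D point of regard from two gaze rays (origin p, direction d)
    taken from two key frames, as the midpoint of their closest approach."""
    w0 = (p1[0] - p2[0], p1[1] - p2[1], p1[2] - p2[2])
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        # Parallel rays: the baseline between key frames is too small to triangulate.
        raise ValueError("gaze rays are (nearly) parallel")
    s = (b * e - c * d) / denom   # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom   # parameter of the closest point on ray 2
    q1 = _add_scaled(p1, d1, s)
    q2 = _add_scaled(p2, d2, t)
    return ((q1[0] + q2[0]) / 2, (q1[1] + q2[1]) / 2, (q1[2] + q2[2]) / 2)
```

With noise-free rays that truly intersect, the midpoint equals the intersection; with real, slightly skew rays it gives a least-distance compromise between the two views.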

2.3 Situation Awareness

Given the cognitive ability, flexibility and knowledge of human beings on the one hand and the power, efficiency and persistence of industrial robots on the other, collaboration between the two is essential for flexible and dynamic systems such as manufacturing [10]. Efficient human-robot collaboration requires both sides to have a comprehensive perception of the essential parts of the working environment. Human decision making is a substantial component of collaborative robotics under dynamic environmental conditions, such as within a work cell. Situation awareness and human factors are crucial, in particular, for identifying decisive parts of task execution.

In human factors research, situation awareness is principally evaluated through questionnaires, such as the Situational Awareness Rating Technique (SART [11]). Psychological studies on situation awareness have been conducted in several application areas, such as air traffic control, driver attention analysis, and military operations. Because of the disadvantages of questionnaire techniques such as SART and the Situation Awareness Global Assessment Technique (SAGAT), more reliable and less invasive technologies were required; eye tracking, as a psychophysiologically based, quantifiable and objective measurement technology, has proven effective [7, 12]. In several studies on situation awareness, eye movement features such as dwell and fixation time were found to correlate with various measures of performance. [13, 14] developed methods for the measurement and prediction of situation awareness in human-robot interaction based on a framework of probabilistic attention and on real-time eye tracking parameters.

2.4 Cognitive Arousal and Concentration Estimation

For quantifying stress, we used cognitive arousal estimation based on biosensor data. In the context of eye movement analysis, arousal is defined by a specific parametrization of fixation and saccade events within a time window of five seconds, chosen such that the output of the stress level estimator correlates well (r = .493) with the mean level of electrodermal activity (EDA) [15] (Fig. 2).
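As an illustration of how an agreement such as r = .493 could be quantified, the sketch below computes a Pearson correlation between two series. It assumes that the EDA signal and the gaze-based estimator output have already been aggregated into the same 5 s windows; the function name `pearson_r` and any inputs used with it are hypothetical, not study data.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equally long series, e.g. the per-window
    mean EDA level and the gaze-based stress level of the same 5 s windows."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two series of equal length >= 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("a constant series has no defined correlation")
    return sxy / math.sqrt(sxx * syy)
```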

Fig. 2. Measure of attentional concentration on tasks. (a) Concentration level during a session without assistance (red line is the mean; red is standard deviation), and (b) concentration level during the session with assistance. On average, concentration increased when the intuitive assistance was used. (Color figure online)

For the estimation of concentration, or sustained attention, we refer to the areas of interaction (AOI) in the environment, which represent the spatial reference for the task under investigation. Maintaining attention on task-related AOIs is interpreted as concentration on a specific task [16], or on session-related tasks in general. Different densities of fixations within a specific period of time, i.e., within a time window of five seconds, define a classification into levels of current concentration.
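A minimal sketch of such a window-based classification follows. The 5 s window comes from the text; measuring density as the rate of fixations on task-related AOIs per second, the `Fixation` record, and the cut-offs for levels 0 to 3 are hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Fixation:
    t_ms: int   # fixation onset in milliseconds
    aoi: str    # AOI hit by the 3D gaze ray, or "" if none


def concentration_level(fixations: List[Fixation], task_aois: Set[str],
                        t_end_ms: int, window_ms: int = 5000) -> int:
    """Map the rate of task-related fixations in the last window to a level 0-3.
    The thresholds below are illustrative assumptions, not values from the paper."""
    recent = [f for f in fixations if t_end_ms - window_ms < f.t_ms <= t_end_ms]
    on_task = sum(1 for f in recent if f.aoi in task_aois)
    rate_per_s = on_task / (window_ms / 1000.0)
    if rate_per_s >= 2.0:
        return 3   # high concentration
    if rate_per_s >= 1.0:
        return 2
    if rate_per_s > 0.0:
        return 1
    return 0       # no fixation on task-related AOIs
```

In the chat interruption of the learning scenario, for instance, fixations would move to the colleague and away from the task AOIs, so a rule of this kind would lower the level.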

2.5 Estimation of Task Switching Rate

Task switching, or set-shifting, is an executive function that involves the ability to unconsciously shift attention between one task and another. In contrast, cognitive shifting is a very similar executive function, but it involves conscious (not unconscious) change in attention. Together, these two functions are subcategories of the broader cognitive flexibility concept. Task switching allows a person to rapidly and efficiently adapt to different situations [17].

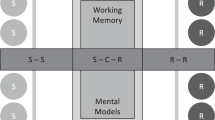

In a multi-tasking environment, cognitive resources must be shared or shifted between the multiple tasks. The task switching rate is defined as the frequency with which the operator switches between different tasks. Tasks differ in the mental model that is necessary to represent an object or a process in the mind of the human operator. Mental models are subjective functional models; a task switch requires changing the current mental model in consciousness and therefore consumes specific cognitive resources and imposes a load.

In the presented work, the processing of a task is determined by the concentration of the operator on a task-related area of interaction (AOI). Areas of interaction are regions of the operating environment where the operator manipulates the location of puzzle objects, i.e., grabs puzzle pieces from a heap of pieces or puts pieces into their final position to form a tangram shape. Whenever the operator's gaze intersects an AOI that belongs to a specific task, that task is considered ongoing. The task switch rate is then the number of switches between tasks per period of time, typically the duration of a whole session (see Fig. 3 for a visualization). Task switching has been proposed as a candidate executive function along with inhibition, the maintenance and updating of information in working memory, and the ability to perform two tasks at the same time. There is some evidence not only that the efficiency of executive functions improves with practice and guidance, but also that this improvement can transfer to novel contexts; practice-related improvements in switching performance have been demonstrated [18,19,20].
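The task switch rate described above can be sketched as follows. The sketch assumes a time-ordered stream of (timestamp, AOI) gaze intersections and a hypothetical mapping from AOIs to tasks (e.g. S1 and S2 as in Fig. 3). Gaze on AOIs without a task is assumed to leave the current task unchanged, and the rate is expressed per minute of session time by choice; the function name is illustrative.

```python
from typing import Dict, List, Optional, Tuple


def task_switch_rate(gaze_aois: List[Tuple[int, str]],
                     aoi_to_task: Dict[str, str]) -> float:
    """Number of switches between tasks per minute of session time.

    gaze_aois:   time-ordered (timestamp_ms, aoi) gaze/AOI intersections.
    aoi_to_task: assignment of task-related AOIs to tasks, e.g. "S1" or "S2".
    """
    if len(gaze_aois) < 2:
        return 0.0
    current: Optional[str] = None
    switches = 0
    for _, aoi in gaze_aois:
        task = aoi_to_task.get(aoi)
        if task is None:
            continue            # gaze outside task AOIs: current task stays ongoing
        if current is not None and task != current:
            switches += 1       # gaze moved to an AOI of a different task
        current = task
    duration_min = (gaze_aois[-1][0] - gaze_aois[0][0]) / 60000.0
    return switches / duration_min if duration_min > 0 else 0.0
```

For example, a one-minute session whose gaze goes S1, S1, S2, (no task), S1, S1 contains two switches and therefore yields a rate of 2.0 switches per minute.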

Fig. 3. Task switching between the collaborative and the single task. (a) The operator places a puzzle piece in the goal area of the collaborative task. (b) The operator places a puzzle piece in the goal area of the single task. (c) Switches between the collaborative task (S1, above, green) and the single task (S2, below, red). Task duration is determined by the human gaze being focused within an AOI related to the specific task. (Color figure online)

2.6 Cognitive Arousal and Attention in Multitasking

Stress is defined in terms of the psychological and physical reactions of living beings in response to external stimuli, i.e., stressors. These reactions enable the organism to cope with specific challenges, while at the same time they entail physical and mental workload. [21, 22] defined stress as a physical state of load characterized by tension and resistance against external stimuli, i.e., stressors, which refers to the general adaptation syndrome. Studies confirm that selective attention performs better under stress [35], which is known as the 'narrowing attention' effect. According to this principle, attention processes would in general perform better with increasing stress.

Executive functions are related to the dynamic control of attention, a fundamental component of human-robot collaboration [36]. Executive functions are mechanisms of cognitive control that enable goal-oriented, flexible and efficient behavior and cognition [37, 38], which underlines their relevance for everyday human functioning. Following the definition of [23], executive functions above all include attention and inhibition, task management (control of the distribution of attention), planning (sequencing of attention), monitoring (continuous allocation of attention), and codification (functions of attention). Attention and inhibition involve directing attention to relevant information while ignoring irrelevant information and actions. Executive functions are known to be impaired by stress once the demands on the regulatory capacity of the organism are exceeded [24, 40]. An early stress reaction is known to trigger a salience network that mobilizes exceptional resources for the immediate recognition of, and reaction to, present and in particular surprising threats. At the same time, the executive control network, which enables the use of higher-level cognitive processes, is down-weighted [41]. This executive control network is particularly important for long-term strategies, and it is the first to be affected by stress [25].

In summary, stress can help to focus selective attention under single-task conditions; under multiple-task conditions, however, stress is known to impair the systematic distribution of attention onto several processes and thereby to degrade the performance of executive functions. The evaluation and prevention of long-term stress in a production work cell is a specific application objective. Stress-free work environments are more productive and have more motivated co-workers, and error rates are known to be lower than for stressed workers. Minimizing interruptions caused by insufficient coordination of subtasks is an important objective function, for which executive function analysis and stress impact are important input variables.

Multi-tasking, Executive Functions and Attention.

Multi-tasking activities are indirectly related to executive functions. Following Baddeley's model of a central executive in working memory [38], inhibitory control and cognitive flexibility directly affect (i) multi-tasking, (ii) switching between task and memory functions, and (iii) selective attention and inhibition functions. Performance in an activity is interrupted once there is a shift from one task to another (“task switch”). The performance difference between a task switch, which requires more cognitive resources, and a task repetition is referred to as ‘switch cost’ [26]. It is particularly relevant when task switches are frequent, and also with respect to the frequency of interruptions after which the operator has to resume the activity from the memorized point where the switch started. Task switches in any case involve numerous executive function processes, including shifting the focus of attention, goal setting and tracking, resources for task set reconfiguration, and inhibition of previous task groups. Multi-tasking and the related switch costs define and reflect the requirements for executive functions, and thus for the cognitive control of attention processes, which provides highly relevant human factors parameters for the evaluation of human-robot collaboration systems.
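The switch cost mentioned above is commonly computed in task switching experiments as the mean response time on switch trials minus the mean response time on repeat trials. The following sketch implements that definition; the function name and trial format are illustrative, and how trials and response times would be extracted from an assembly work cell (for example, from task completion times) is left open as an assumption.

```python
from statistics import mean
from typing import List, Tuple


def switch_cost(trials: List[Tuple[str, float]]) -> float:
    """Switch cost = mean RT on task-switch trials - mean RT on task-repeat trials.

    trials: (task_label, response_time_ms) in presentation order. The first trial
    is neither a switch nor a repeat and is therefore excluded.
    """
    switch_rts: List[float] = []
    repeat_rts: List[float] = []
    for (prev_task, _), (task, rt) in zip(trials, trials[1:]):
        (switch_rts if task != prev_task else repeat_rts).append(rt)
    if not switch_rts or not repeat_rts:
        raise ValueError("need at least one switch and one repeat trial")
    return mean(switch_rts) - mean(repeat_rts)
```

For the sequence A (500 ms), A (520 ms), B (700 ms), B (540 ms), A (680 ms), the switch trials average 690 ms and the repeat trials 530 ms, giving a switch cost of 160 ms.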

The impact of executive functions on decision processes was investigated in detail by [27]. Specifically, they investigated the application of decision rules based on inhibition and the consistency of risk perception in the context of ‘task shifting’. The results show that the capacity to focus, and therefore to inhibit irrelevant stimuli, is a fundamental prerequisite for successful decision processes. At the same time, the ability to change between different contexts of evaluation is essential for consistent estimation of risk (Fig. 4).

Fig. 4. Bio-sensor and eye tracking glasses setup in the Human Factors Lab at JOANNEUM RESEARCH. (a) Bio-sensor setup with EDA, HRV and breathing rate sensors. (b, c) Eye-hand coordination task interrupted by a robot arm movement. (d) Target areas with holes, and gaze intersections clustered around the center as the landing point of the eye-hand coordination task.

Measurements of Emotional and Cognitive Stress.

Stress or activity leads to psychophysiological changes, as described by [28]. The body provides more energy to handle the situation; the hypothalamic-pituitary-adrenal (HPA) axis and the sympathetic nervous system are involved in this process. Sympathetic activity can be measured from recordings of, for example, electrodermal activity (EDA) and the electrocardiogram (ECG). Acute stress leads to a higher skin conductance level (SCL) and to more non-specific (spontaneous) skin conductance reactions (NS.SCR). [29] showed in his summary of EDA research that these two parameters are sensitive to stress reactions. In a further step, [30] examined stress reactions by measuring EDA with a wearable device during cognitive load and during cognitive and emotional stress, and classified with a Support Vector Machine (SVM) whether the person experienced stress. The authors conclude that the peak height and the instantaneous peak rate are sensitive predictors of a person's stress level; the SVM classified this level correctly with an accuracy above 80%. The electrocardiogram shows a higher heart rate (HR) under stress conditions, while heart rate variability (HRV) is lower [31], and the heart rate was found to be significantly higher during cognitive stress than at rest. [32] found in their survey that HRV and EDA are the most predictive parameters for classifying stress. [33] used a cognitive task in combination with a mathematical task to induce stress. Eye activity can also be a good indicator of stressful situations. Some studies report more fixations and saccades accompanied by shorter dwell times under stress, while other studies report fewer fixations and saccades but longer dwell times. Many studies additionally use pupil diameter for the classification of stress; in the survey by [32], pupil diameter achieved good results for detecting stress, with a larger pupil diameter indicating more stress. In the study of [34], blink duration, fixation frequency and pupil diameter were the best predictors for workload classification (Fig. 5).
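As one concrete example of the HRV measures mentioned above, the following sketch computes RMSSD (root mean square of successive RR-interval differences), a common time-domain HRV index, together with mean heart rate. It assumes artifact-free RR intervals in milliseconds extracted from the ECG; the function names are illustrative, and whether [31] or [32] used RMSSD specifically is not stated here.

```python
import math
from typing import Sequence


def rmssd(rr_ms: Sequence[float]) -> float:
    """Root mean square of successive differences between RR intervals (ms);
    lower values indicate reduced heart rate variability."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def mean_heart_rate(rr_ms: Sequence[float]) -> float:
    """Mean heart rate in beats per minute from RR intervals in ms."""
    return 60000.0 / (sum(rr_ms) / len(rr_ms))
```

Under the stress pattern described above, one would expect `mean_heart_rate` to rise and `rmssd` to fall relative to a resting baseline.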

Fig. 5. Measurement of stress using eye movement features in relation to EDA measures. (a) The relative increase and decrease of EDA and the output of the ‘stress level’ method are correlated with Pearson r = .493; the gaze-based method thus provides an indication of arousal as measured by EDA. (b) The stress level measured under higher and lower cognitive stress (NC and SC conditions) reflects the required cardinal ranking well.

Stress Level Estimation.

The eye-movement-based classification method, i.e., the ‘stress level’ estimation method, tracks the EDA arousal level (r = .493) and reproduces the cardinal ranking for different levels of stress impact. The stress level is computed by thresholds on the number of saccades and the mean dwell time within an observation window of 5000 ms. For level 2, the number of saccades should be between 15 and 25 and the mean dwell time below 500 ms; for level 3, more than 25 saccades and a mean dwell time below 250 ms are required.
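The threshold rule stated above can be written down directly. In the sketch below, the level 2 and level 3 conditions follow the text; treating every other window as level 1, reading "between 15 and 25" as inclusive, and approximating dwell time by fixation duration are assumptions, as are the function names and the `(kind, onset_ms, duration_ms)` event format.

```python
from typing import List, Tuple


def stress_level(n_saccades: int, mean_dwell_ms: float) -> int:
    """Gaze-based stress level for one 5000 ms window using the stated thresholds.
    Returning level 1 for all remaining cases is an assumption."""
    if n_saccades > 25 and mean_dwell_ms < 250:
        return 3
    if 15 <= n_saccades <= 25 and mean_dwell_ms < 500:
        return 2
    return 1


def window_features(events: List[Tuple[str, int, int]], t_end_ms: int,
                    window_ms: int = 5000) -> Tuple[int, float]:
    """events: (kind, onset_ms, duration_ms) with kind "saccade" or "fixation".
    Returns (saccade count, mean fixation duration) for the window ending at
    t_end_ms; fixation duration stands in for dwell time (simplification)."""
    in_win = [e for e in events if t_end_ms - window_ms < e[1] <= t_end_ms]
    n_sacc = sum(1 for kind, _, _ in in_win if kind == "saccade")
    fix = [dur for kind, _, dur in in_win if kind == "fixation"]
    mean_dwell = sum(fix) / len(fix) if fix else float("inf")
    return n_sacc, mean_dwell
```

Note that under this literal reading, a window with more than 25 saccades but a mean dwell time between 250 and 500 ms falls back to level 1; the text does not specify this case.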

Sample Study on Stress and Attention in Multitasking Human-Robot Interaction.

The presented work aimed to investigate how cognitive stress affects attentional processes, in particular the performance of the executive functions involved in coordinating multi-tasking processes. The study setup for the proof of concept involved a cognitive task as well as a visuomotor task, namely an eye-hand coordination task, combined with an obstacle avoidance task that is characteristic of human-robot collaboration. The results show that increased stress is reflected in a significantly correlated increase in the error distribution of the eye-hand coordination, i.e., in reduced precision. The decrease in performance indicates that attentional processes depend on executive function processes. The results confirm the dependency of executive functions and decision processes on stress conditions and will enable quantitative measurement of attention effects in multi-tasking configurations.

3 Use Case for Real-Time Human Factors Measurements in Assembly Work Cells

The use case for an assembly work cell is depicted in Fig. 6. The worker learns to assemble a transformer from its individual components, interacting with various areas of the environment, e.g., picking up a component from one area of a table and placing it on another.

Fig. 6. Learning scenario demonstrating the feasibility of the concentration measurement technology. (1a, 3a) View of the worker focused on work and (2a) interrupted by a chat with a colleague. Accordingly, the measured level of concentration drops during the chat (2c) but is high during focused work (1c, 3c). (1b, 2b, 3b) Egocentric view of the worker through the HoloLens headset with gaze cursor (blue) and concentration (orange) and stress (yellow) measurement bars. (Color figure online)


The development of the demonstrator for the learning scenario is in progress. It is based entirely on the human factors measurement technologies described above. Upon completion, a supervisor will be able to select specific tasks from a novel assembly work and investigate how well a rookie worker performs in comparison to expert data, which are matched to the novel data from the rookie. A distance function will then identify the parts of the interaction sequence with substantial distance to the expert profile, so that training can focus on the parts of the sequence that need it most. Furthermore, the pattern of interaction, i.e., gaze on objects and subsequent interactions, will be investigated for additional optimizations where applicable.
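Since the distance function is not specified, the sketch below shows one hypothetical option: per assembly step, compare the rookie's human factors features (e.g. concentration level and task switch rate) with the expert profile using a Euclidean distance, and rank the steps with the largest deviation as training candidates. The function name, step names, feature names and the choice of metric are illustrative assumptions.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical per-step profile: step name -> {feature name -> value}.
Profile = Dict[str, Dict[str, float]]


def rank_steps_for_training(novice: Profile, expert: Profile,
                            top_k: int = 3) -> List[Tuple[str, float]]:
    """Return the assembly steps whose novice features deviate most from the
    expert profile (Euclidean distance over the features both profiles share)."""
    scores: List[Tuple[str, float]] = []
    for step, exp_feats in expert.items():
        nov_feats = novice.get(step)
        if nov_feats is None:
            continue                 # step not yet performed by the novice
        shared = exp_feats.keys() & nov_feats.keys()
        dist = math.sqrt(sum((nov_feats[f] - exp_feats[f]) ** 2 for f in shared))
        scores.append((step, dist))
    scores.sort(key=lambda s: s[1], reverse=True)   # largest deviation first
    return scores[:top_k]
```

In practice the features would likely need normalization first, since a plain Euclidean distance lets features with larger numeric ranges dominate.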

4 Conclusions and Future Work

This work presented innovative methodologies for the assessment of executive functions that provide psychologically motivated quantitative estimates of human operator performance in assembly processes. We estimated dynamic human attention from mobile gaze analysis, with the potential to measure attention selection and inhibition, as well as task switching ability, in real time. We presented a suite of components for gaze-based human factors analysis that are relevant for the measurement of cognitive and psychophysiological parameters. In this manner, this work intends to provide a novel view on human operator state estimation as a means for the global optimization of human-machine collaboration.

Within a typical learning scenario of a human operator in an assembly work cell, and a study setup applying state-of-the-art intuitive assistance technology to collaborative tasks, we illustrated the potential of interpreting gaze-based human factors data to evaluate a collaborative system.

In future work, we will study the potential of more complex eye movement features, such as those related to planning processes, to enable a more detailed analysis of the dynamic distribution of attentional resources during the tasks.

References

Saucedo-Martínez, J.A., et al.: Industry 4.0 framework for management and operations: a review. J. Ambient Intell. Humaniz. Comput. 9, 1–13 (2017)

Steinfeld, A., et al.: Common metrics for human-robot interaction. In: Proceedings of the ACM SIGCHI/SIGART Human-Robot Interaction (2006)

Huang, C.-M., Mutlu, B.: Anticipatory robot control for efficient human-robot collaboration. In: Proceedings of the ACM/IEEE HRI 2016 (2016)

Endsley, M.R.: Toward a theory of situation awareness in dynamic systems. Hum. Factors 37(1), 32–64 (1995)

Paletta, L., et al.: Gaze based human factors measurements for the evaluation of intuitive human-robot collaboration in real-time. In: Proceedings of the 24th IEEE Conference on Emerging Technologies and Factory Automation, ETFA 2019, Zaragoza, Spain, 10–13 September 2019 (2019)

Holmqvist, K., Nyström, M., Andersson, R., Dewhusrt, R., Jarodzka, H., van de Weijler, J.: Eye Tracking – A Comprehensive Guide to Methods and Measures, p. 187. Oxford University Press, Oxford (2011)

Moore, K., Gugerty, L.: Development of a novel measure of situation awareness: the case for eye movement analysis. Hum. Factors Ergon. Soc. Ann. Meet. 54(19), 1650–1654 (2010)

Munn, S.M., Pelz, J.B.: 3D point-of-regard, position and head orientation from a portable monocular video-based eye tracker. In: Proceedings of the ETRA 2008, pp. 181–188 (2008)

Santner, K., Fritz, G., Paletta, L., Mayer, H.: Visual recovery of saliency maps from human attention in 3D environments. In: Proceedings of the ICRA 2013, pp. 4297–4303 (2013)

Heyer, C.: Human-robot interaction and future industrial robotics applications. In: Proceedings of the IEEE/RSJ IROS, pp. 4749–4754 (2010)

Taylor, R.M.: Situational awareness rating technique (SART): the development of a tool for aircrew systems design. In: Situational Awareness in Aerospace Operations, pp. 3/1–3/17 (1990)

Stanton, N.A., Salmon, P.M., Walker, G.H., Jenkins, D.P.: Genotype and phenotype schemata and their role in distributed situation awareness in collaborative systems. Theoret. Issues Ergon. Sci. 10, 43–68 (2009)

Dini, A., Murko, C., Paletta, L., Yahyanejad, S., Augsdörfer, U., Hofbaur, M.: Measurement and prediction of situation awareness in human-robot interaction based on a framework of probabilistic attention. In: Proceedings of the IEEE/RSJ IROS 2017 (2017)

Paletta, L., Pittino, N., Schwarz, M., Wagner, V., Kallus, W.: Human factors analysis using wearable sensors in the context of cognitive and emotional arousal. In: Proceedings of the 4th International Conference on Applied Digital Human Modeling, AHFE 2015, July 2015 (2015)

Paletta, L., Pszeida, M., Nauschnegg, B., Haspl, T., Marton, R.: Stress measurement in multi-tasking decision processes using executive functions analysis. In: Ayaz, H. (ed.) AHFE 2019. AISC, vol. 953, pp. 344–356. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-20473-0_33

Bailey, B.P., Konstan, J.A.: On the need for attention-aware systems: Measuring effects of interruption on task performance, error rate, and affective state. Comput. Hum. Behav. 22(4), 685–708 (2006)

Monsell, S.: Task switching. Trends Cogn. Sci. 7(3), 134–140 (2003)

Miyake, A., Friedman, N.P., Emerson, M.J., Witzki, A., Howerter, A., Wager, T.D.: The unity and diversity of executive functions and their contributions to complex ‘frontal lobe’ tasks: a latent variable analysis. Cogn. Psychol. 41, 49–100 (2000)

Jersild, A.T.: Mental set and shift. Arch. Psychol. 89 (1927)

Kramer, A.F., Hahn, S., Gopher, D.: Task coordination and aging: explorations of executive control processes in the task switching paradigm. Acta Psychol. 101, 339–378 (1999)

Selye, H.: A syndrome produced by diverse nocuous agents. Nature 138, 32 (1936)

Folkman, S.: Stress: Appraisal and Coping. Springer, New York (1984). https://doi.org/10.1007/978-1-4419-1005-9_215

Smith, E., Jonides, J.: Storage and executive processes in the frontal lobes. Science 283, 1657–1661 (1999)

Dickerson, S., Kemeny, M.: Acute stressors and cortisol responses: a theoretical integration and synthesis of laboratory research. Psychol. Bull. 130, 355–391 (2004). https://doi.org/10.1037/0033-2909.130.3.355

Diamond, A.: Executive functions. Annu. Rev. Psychol. 64, 135–168 (2013). https://doi.org/10.1146/annurev-psych-113011-143750

Meyer, D.E., et al.: The role of dorsolateral prefrontal cortex for executive cognitive processes in task switching. J. Cogn. Neurosci. 10 (1998)

Del Missier, F., Mäntylä, T., Bruine de Bruin, W.: Executive functions in decision making: an individual differences approach. Think. Reason. 16(2), 69–97 (2010)

Cannon, W.B.: The Wisdom of the Body. Norton, New York (1932)

Boucsein, W.: Electrodermal Activity. Plenum, New York (1992)

Setz, C., Arnrich, B., Schumm, J., La Marca, R., Tröster, G., Ehlert, U.: Discriminating stress from cognitive load using a wearable EDA device. IEEE Trans. Inf. Technol. Biomed. 14(2), 410–417 (2010)

Taelman, J., Vandeput, S., Spaepen, A., Van Huffel, S.: Influence of mental stress on heart rate and heart rate variability. In: Vander Sloten, J., Verdonck, P., Nyssen, M., Haueisen, J. (eds.) 4th European Conference of the International Federation for Medical and Biological Engineering, vol. 22, pp. 1366–1369. Springer, Heidelberg (2009). https://doi.org/10.1007/978-3-540-89208-3_324

Sharma, N., Gedeon, T.: Objective measures, sensors and computational techniques for stress recognition and classification: a survey. Comput. Methods Programs Biomed. 108, 1287–1301 (2012)

Sun, F.-T., Kuo, C., Cheng, H.-T., Buthpitiya, S., Collins, P., Griss, M.: Activity-aware mental stress detection using physiological sensors. In: Gris, M., Yang, G. (eds.) MobiCASE 2010. LNICST, vol. 76, pp. 211–230. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-29336-8_12

Van Orden, K.F., Limbert, W., Makeig, S.: Eye activity correlates of workload during a visuospatial memory task. Hum. Factors: J. Hum. Factors Ergon. Soc. 43, 111–121 (2001)

Chajut, E., Algom, D.: Selective attention improves under stress: implications for theories of social cognition. J. Pers. Soc. Psychol. 85, 231–248 (2003)

Bütepage, J., Kragic, D.: Human-robot collaboration: from psychology to social robotics (2017). arXiv:1705.10146

Baddeley, A.D., Hitch, G.: Working memory. In: Bower, G.H. (ed.) The Psychology of Learning and Motivation: Advances in Research and Theory, vol. 8, pp. 47–89 (1974)

Lezak, M.D.: Neuropsychological Assessment. Oxford University Press, New York (1995)

Koolhaas, J.M., Bartolomucci, A., Buwalda, B., De Boer, S.F., Flügge, G., Korte, S.M., et al.: Stress revisited: a critical evaluation of the stress concept. Neurosci. Biobehav. Rev. 35, 1291–1301 (2011)

Hermans, E.J., Henckens, M.J.A.G., Joëls, M., Fernández, G.: Dynamic adaptation of large-scale brain networks in response to acute stressors. Trends Neurosci. 37, 304–314 (2014)

Acknowledgments

This work has been supported by the Austrian Federal Ministry for Climate Action, Environment, Energy, Mobility, Innovation and Technology (BMK) within projects MMASSIST (no. 858623) and FLEXIFF (no. 861264).


Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2021 The Author(s)

About this paper

Cite this paper

Paletta, L. (2021). Attention Analysis for Assistance in Assembly Processes. In: Ratchev, S. (eds) Smart Technologies for Precision Assembly. IPAS 2020. IFIP Advances in Information and Communication Technology, vol 620. Springer, Cham. https://doi.org/10.1007/978-3-030-72632-4_23

DOI: https://doi.org/10.1007/978-3-030-72632-4_23

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-72631-7

Online ISBN: 978-3-030-72632-4

eBook Packages: Computer Science, Computer Science (R0)