Abstract

The impact of land tenure interventions on sustainable development outcomes is affected by political, social, economic, and environmental factors, and as a result, multiple types of evidence are needed to advance our understanding. This chapter discusses the use of counterfactual impact evaluation to identify causal relationships between tenure security and sustainable development outcomes. Rigorous evidence that tenure security leads to better outcomes for nature and people is thin and mixed. Using a theory of change as a conceptual model can help inform hypothesis testing and promote rigorous study design. Careful attention to data collection and use of experimental and quasi-experimental impact evaluation methods can advance understanding of causal connections between tenure security interventions and development outcomes.

Identifying the Causal Impacts of Tenure Security Interventions

Conventional wisdom holds that land tenure security can help achieve a variety of sustainable development goals. Strengthening tenure security is commonly assumed to promote poverty alleviation, agricultural investment and productivity, food security, better health, gender empowerment, and natural resource conservation and restoration (Arnot et al., 2011; Holden & Ghebru, 2016; Lawry et al., 2016). Yet rigorous empirical evidence to support these causal links is thin and mixed (Higgins et al., 2018). In fact, even the direction of influence, if any, between tenure security and development objectives has been found to vary. An example is the relationship between tenure security and deforestation (Robinson et al., 2014). On the one hand, more secure tenure could spur deforestation by improving land managers’ access to credit and commodity markets, which, in turn, could lead to agricultural extensification (Liscow, 2013; Buntaine et al., 2015). On the other hand, more secure tenure could stem deforestation by enabling land managers to deter encroachment by outsiders and by dampening their incentives to ‘clear land to claim it’ (Holland et al., 2017).

Impact evaluation methods aim to assess the causal effects of a project or intervention by comparing what happens when a project, policy, or program is undertaken to what would have happened without that project, policy, or program. Thus, impact evaluation differs from other common forms of monitoring, such as performance measurement, by including a group of observations that do not receive the intervention to better assess what would have happened without it. A well-designed impact evaluation can not only shed light on the average effects of tenure interventions but can also indicate how these impacts are moderated by contextual factors. This can help decision makers understand the conditions under which various types of tenure interventions are likely to be effective. The use of impact evaluation has grown rapidly across development sectors, including agriculture, health, water and sanitation, education, finance, and natural resource management (Cameron et al., 2015). Although the use of impact evaluation methods to study land tenure security interventions has also increased, it lags behind these other sectors. The majority of rigorous evaluations of tenure security have focused on a handful of development outcomes like agricultural investment, access to credit, and income, with fewer measuring outcomes like conflicts, female empowerment, food security, and environmental degradation (Holden & Ghebru, 2016; Higgins et al., 2018).

The gaps in rigorous evidence on the effects of tenure security interventions may be partly due to the fact that tenure evaluations can be more challenging than those for other development interventions, like access to education or health care, for a number of reasons. First, programs and policies aimed at strengthening land tenure often are part of a package of interventions, including, for example, land titling, capacity building, and awareness raising (see Lisher, 2019). In such cases, it can be quite complex and sometimes impossible to disentangle the effects of specific components of this package of interventions. Second, the intended outcomes from strengthening tenure may occur many years after the intervention. Third, as discussed in other chapters, land security is inherently difficult to measure. And finally, outcomes expected from strengthening land tenure are moderated by a multitude of political, economic, and environmental conditions—in general, impacts are heavily dependent on local contextual factors. Given these challenges, evaluating the effect of tenure security interventions requires particular care: clear articulation of a conceptual framework, careful data collection, and attention to the design of a strategy for analyzing those data, so as to identify causal effects.

Articulating a Theory of Change

A theory of change (TOC) is a detailed and well-articulated set of hypotheses about exactly how an intervention should affect an intended outcome; that is, it maps out a hypothesized causal chain. Developing a TOC should be the first step in impact evaluation (Qiu et al., 2018). A TOC identifies the logical and ordered causal links moving from the intervention to intended impacts and typically includes a visual illustration (Funnell & Rogers, 2011). These links, visual or otherwise, should be viewed as the hypotheses to be tested in the impact evaluation. A TOC can be based on expert knowledge and/or literature review but should also draw on local-level or project-level input in order to accurately reflect a program’s TOC and appropriately account for contextual considerations in tenure interventions. For example, a TOC developed for a land tenure security intervention in Ghana visually details the expected causal links between providing farm-level land tenure documentation to farmers and deforestation-free cocoa production (Persha & Protik, 2019). The TOC consists of a series of ‘if-then’ statements, laying out the project’s central hypothesis that tenure documents will improve land and tree security and improve farmers’ access to financial services. The TOC also highlights that the land tenure intervention is only likely to work when contemporaneous development activities occur, in this case, financial and technical services that support shade-grown cocoa and education on land-use planning.

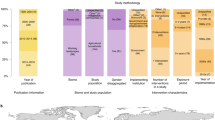

While any TOC can serve as the basis for designing an evaluation, directed acyclic graphs (DAGs) are perhaps particularly well-suited for that purpose because they explicitly recognize multiple types of associations among factors at play (Sills & Jones, 2018; Ferraro & Hanauer, 2015). The generic DAG in Fig. 14.1a shows the causal pathway from the intervention or treatment (T) to final outcome or impact (Y). These impacts could be direct relationships between T and Y, but more often will be propagated through a series of mediators, which correspond to the short-, medium-, or long-term outcomes of the treatment. A mediator (also referred to as a mechanism in the evaluation literature) is a variable that helps explain the relationship between T and Y. In the case of interventions aimed at strengthening tenure security, treatment T might be formalization of land tenure through titling, and the mediator might be changes in tenure security, with the intended impact (Y) of reducing deforestation (Fig. 14.1b). Moderators are distinct from mediators in that they influence the strength of the relationship between T and Y. The researcher typically wants to be aware of these to isolate the causal effect and may be interested in understanding the variation in impact that occurs due to a specific moderator. For example, there may be heterogeneity in the impact of strengthening tenure security by gender, poverty level, or land quality (Fig. 14.1b). By measuring these, the researcher can test for differential effects of an intervention. Confounding factors (sometimes referred to as contextual factors) include any observable or unobservable variables (both of which are ideally included in a DAG or TOC) that mask the true effect of T on Y and need to be controlled for by the researcher in order to measure the ‘true’ effect of the intervention. In evaluating tenure security, confounding factors may include contemporaneous programs (Fig. 14.1b), or broader socioeconomic or biophysical conditions.
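To make these roles concrete, the titling example can be written down as a small graph and queried. The sketch below is only illustrative: the node names and edges are our own hypothetical encoding of the relationships described in the text, not a transcription of Fig. 14.1b, and moderators are left out because they modify the strength of an effect rather than sit on a path.

```python
# Hypothetical DAG for the titling example: each key points to its direct effects.
dag = {
    "titling": ["tenure_security"],                          # treatment T
    "tenure_security": ["deforestation"],                    # mediator
    "contemporaneous_programs": ["titling", "deforestation"],  # confounder
    "deforestation": [],                                     # outcome Y
}

def descendants(graph, node, blocked=frozenset()):
    """All nodes reachable from `node` without passing through `blocked` nodes."""
    seen = set()
    stack = list(graph.get(node, []))
    while stack:
        current = stack.pop()
        if current in seen or current in blocked:
            continue
        seen.add(current)
        stack.extend(graph.get(current, []))
    return seen

def mediators(graph, treatment, outcome):
    """Nodes lying on a directed path from treatment to outcome."""
    return {
        node for node in descendants(graph, treatment)
        if node != outcome and outcome in descendants(graph, node)
    }

def confounders(graph, treatment, outcome):
    """Nodes that affect the treatment and also affect the outcome by a path
    that does not run through the treatment."""
    return {
        node for node in graph
        if node not in (treatment, outcome)
        and treatment in descendants(graph, node)
        and outcome in descendants(graph, node, blocked={treatment})
    }

found_mediators = mediators(dag, "titling", "deforestation")
found_confounders = confounders(dag, "titling", "deforestation")
print("Mediators:", found_mediators)
print("Confounders:", found_confounders)
```

Writing the TOC in this form forces each hypothesized link to be stated explicitly, which in turn indicates which variables need to be measured.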

A TOC should also include a ‘situation analysis’ to explain the current state of insecure conditions and justify why a formal intervention is needed. Additionally, it should lay out the set of assumptions required to move from program inputs to final impacts. In many contexts where tenure interventions are implemented, customary land tenure systems are in place. A situation analysis should highlight current land tenure arrangements (often informal) and how these will be affected (positively or negatively) by formal interventions (Dubois, 1997; Bromley, 2009). These customary systems will interact with and influence both how a formal land tenure intervention is implemented and the outcomes of interest (Dubois, 1997; Bromley, 2009).

Collecting Data

A TOC will help to identify the data that need to be collected for an impact evaluation and when those data need to be collected. Regarding the timing of data collection, final impacts may take years to materialize. Therefore, it may be appropriate to start with evaluating intermediate outcomes hypothesized to lead to final impacts. As for what data to collect, at a minimum, the researcher needs to collect data on the intervention, outcomes of interest, and any observable confounding factors that are correlated to both the intervention and the outcomes of interest. So, for example, in the case of an evaluation of land titling on deforestation, data is needed on land titling, deforestation, and confounders such as contemporaneous technical assistance interventions that might affect land titling effectiveness and deforestation decisions (Fig. 14.1b). In addition, in order to test specific mediators or moderators, data also needs to be collected on these factors. To continue with our example, mediators and moderators may relate to the biophysical and socioeconomic characteristics of titled properties.

Using metrics and indicators that are similar to those used in other studies can allow for future comparison and synthesis of evidence of tenure security interventions in systematic reviews. Alternatively, global comparative analyses of similar tenure interventions in different contexts can provide valuable information on how contextual factors influence impacts (e.g., Sunderlin et al., 2018). The use of longitudinal data in impact evaluation is, unfortunately, less common than the use of cross-sectional data, undoubtedly because the costs of collecting longitudinal data are relatively high. However, longitudinal data is much preferred to cross-sectional data because, as discussed below, it facilitates more reliable and more credible analysis. Most evaluations of land tenure interventions are one-off studies (Higgins et al., 2018), providing a static measure of tenure effects. However, most land tenure security interventions lead to a series of intermediate outcomes that are necessary to reach the final impact, and the identification of outcomes in one time period does not necessarily imply that these outcomes will persist over time.

Impact Evaluation: Key Concepts

Conceptually, measuring the effect of an intervention on an intended outcome requires first identifying the group that gets the intervention and then, for that same group, comparing outcomes with the intervention and without it. For example, understanding the effect of land titling on household income requires first identifying the group that receives title and then comparing (i) their income with title and (ii) their income without title. The core challenge in rigorous causal analysis is that of course we cannot observe the outcomes for the group that gets the intervention without the intervention, a scenario termed the counterfactual. In a nutshell, rigorous impact evaluation entails using different strategies for constructing the unobserved counterfactual through use of real-world proxy groups. A number of books and articles discuss these strategies in detail (e.g., Abadie & Cattaneo, 2018; Samii, 2016; Gertler et al., 2016; Imbens & Rubin, 2015; Imbens & Wooldridge, 2009). Here we introduce just a few key concepts and approaches.

Two important types of problems crop up when evaluators construct a counterfactual: selection bias and contemporaneous bias (Ferraro & Pattanayak, 2006; Greenstone & Gayer, 2009). Selection bias becomes an issue when impact evaluations assess outcomes for units of analysis (e.g., households and settlements) that have not received the intervention to represent the counterfactual, but these other units of analysis have systematic differences from the treatment group. For example, a study of the effects of a land titling program on deforestation might use as the counterfactual deforestation on forest management units (FMUs) that were not titled. That is, they might define the effect of titling on deforestation as the difference between the average rates of deforestation on titled versus untitled FMUs. Selection bias would be a concern if the FMUs that were titled were not randomly selected and tended to have pre-existing socioeconomic and/or biophysical characteristics either positively or negatively correlated with deforestation. For example, say, titled FMUs were located closer to cities where deforestation rates were relatively high. In that case, the titled FMUs would very likely have more deforestation than untitled FMUs, but that difference could be due to differences in land-use pressures and completely unrelated to titling. Here, selection bias could result in the misleading conclusion that titling exacerbates deforestation.
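The counterfactual and the selection bias problem can be stated compactly in potential-outcomes notation. This notation is standard in the evaluation literature cited above but is not used elsewhere in this chapter, so the symbols below are assumptions introduced only for illustration.

```latex
% Y_i(1), Y_i(0): outcome of unit i with and without the intervention
% T_i = 1 if unit i receives the intervention, 0 otherwise
% Effect of the intervention on those who receive it:
\text{ATT} = E[\,Y_i(1) \mid T_i = 1\,]
           - \underbrace{E[\,Y_i(0) \mid T_i = 1\,]}_{\text{counterfactual, never observed}}

% A simple comparison of treated and untreated units recovers the ATT
% plus a selection bias term:
E[\,Y_i \mid T_i = 1\,] - E[\,Y_i \mid T_i = 0\,]
  = \text{ATT}
  + \underbrace{\big( E[\,Y_i(0) \mid T_i = 1\,] - E[\,Y_i(0) \mid T_i = 0\,] \big)}_{\text{selection bias}}
```

In the titling example, the selection bias term is positive if titled FMUs would have had more deforestation than untitled FMUs even without titles, for instance because they lie closer to cities.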

Contemporaneous bias occurs when impact evaluations use pre-intervention outcomes for units that received the intervention as the counterfactual. To continue the above example, this counterfactual would be deforestation rates on titled FMUs, but before they received titles. In other words, the evaluation relies on a before-after comparison. Contemporaneous bias is a concern when events that coincide with the intervention affect outcomes. So, for our example, this might be a problem if titling coincided with, say, technical assistance in sustainable forest management or a drop in timber prices over the same period. A before-after comparison could misleadingly conclude that titling reduced deforestation, when the reduction was actually due to technical assistance or a drop in the market value of timber. Both selection bias and contemporaneous bias can ‘confound’ the results of a land tenure security evaluation. Impact evaluation designs typically account for potential confounding due to selection bias and contemporaneous bias by carefully selecting a comparison (or ‘control’) group to serve as a counterfactual, and by using data on outcomes from both before the intervention (‘baseline’ data) and after the intervention (‘endline’ data) (Greenstone & Gayer, 2009; Imbens & Wooldridge, 2009).

Two more terms often encountered in impact evaluation are ‘additionality’ and ‘spillover.’ Additionality refers to the changes in outcomes that can be attributed solely to the intervention and not to confounding factors. In essence, additionality is the goal of all impact evaluation methods: to be able to determine what effects are due to the project itself, over and beyond what would have happened anyway. Spillovers are impacts of the intervention on units not in the treatment group (e.g., Robalino et al., 2017). These impacts could be positive or negative. For example, providing land title to FMUs could cause illegal logging to shift to other nearby FMUs. Spillovers are important to identify because they could contaminate the control group and/or influence the estimated program impact.

Impact Evaluation: Key Strategies

Here we provide a brief overview of the principal strategies for rigorous impact evaluation, each of which entails a different approach to constructing the unobserved counterfactual. Experimental designs, or randomized controlled trials (RCTs), are considered the gold standard of rigorous impact evaluation. They randomly assign an intervention among a population of potential recipients and then define the counterfactual as outcomes for those who did not receive it. The key logic is that if the intervention is randomly assigned, then (if the study population is large enough) the group that does not receive it should have the same average characteristics as the group that did, so that the control group’s outcomes are a suitable proxy for the unobserved counterfactual. Put slightly differently, random assignment ensures that selection bias will not be an issue, that is, that any correlation between the intervention and the intended outcome will be due to the intervention and not to a spurious correlation with confounding factors.
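A small simulation can illustrate why random assignment removes selection bias. The sketch below uses entirely hypothetical numbers: an assumed true effect of titling on deforestation, an assumed higher baseline deforestation near cities, and an assumed rule under which near-city units are more likely to be titled when assignment is not random.

```python
import random

TRUE_EFFECT = -2.0  # assumption: titling lowers deforestation by 2 points

def difference_in_means(n, randomized, seed=0):
    """Simulate n units and return mean deforestation, titled minus untitled."""
    rng = random.Random(seed)
    titled, untitled = [], []
    for _ in range(n):
        near_city = rng.random() < 0.5
        if randomized:
            p_title = 0.5                         # coin flip, unrelated to location
        else:
            p_title = 0.8 if near_city else 0.2   # titling concentrated near cities
        gets_title = rng.random() < p_title
        # Baseline deforestation is higher near cities regardless of titling.
        baseline = 10.0 + 5.0 * near_city + rng.gauss(0.0, 1.0)
        if gets_title:
            titled.append(baseline + TRUE_EFFECT)
        else:
            untitled.append(baseline)
    return sum(titled) / len(titled) - sum(untitled) / len(untitled)

selective_estimate = difference_in_means(20_000, randomized=False)
randomized_estimate = difference_in_means(20_000, randomized=True)

print(f"True effect:                      {TRUE_EFFECT:+.2f}")
print(f"Estimate, selective assignment:   {selective_estimate:+.2f}")
print(f"Estimate, randomized assignment:  {randomized_estimate:+.2f}")
```

Under these assumptions the selective comparison has the wrong sign, suggesting that titling increases deforestation, while the randomized comparison lies close to the assumed true effect.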

RCTs have been used in a handful of land tenure evaluations (Higgins et al., 2018). While interventions are sometimes assigned to individuals, in the case of tenure security interventions, assignment to clusters or groups (e.g., communities, regions) will often be more politically feasible and more likely to avoid spillovers. Box 14.1 illustrates the use of an RCT assigned at village level to evaluate the impact of a land registration program in Benin.

Box 14.1 An Experimental Study of the Effect of Land Demarcation on Investment

In rural sub-Saharan Africa, only a small portion of farmers have formal titles for the land to which they claim ownership, a situation that in principle could result in under-investment and a low agricultural productivity trap. Existing rigorous evidence on the effect on investment of land tenure interventions aimed at formalizing land tenure is mixed. To help fill that gap, Goldstein et al. (2015) report on results of a randomized controlled trial aimed at identifying the effect of a land formalization program in Benin, the first such study. The program they evaluate entailed two key steps, each of which required extensive input from treated communities: first, mapping and demarcating with stone markers all parcels in each treated community and, second, formally and legally documenting ownership. The formalization program was randomly assigned at the village level, and data were collected from individual households within these villages on each of the multiple land parcels claimed by the household. In total, 289 villages participated in the study, of which 191 were treated and 98 were not. Secondary baseline and primary endline survey data were compiled or collected for 6064 land parcels claimed by 2972 households in these study villages. The authors develop a formal analytical model that serves as a theory of change. They use OLS regressions to estimate treatment effects. These regressions control for differences in observable characteristics between the treated and control parcels, while randomization is assumed to control for differences in unobserved characteristics. The authors find that two years after the start of implementation, treated households were 23–43 percent more likely to grow perennial cash crops and to invest in trees on their parcels. They also find that household characteristics moderated investment effects. For example, treated female-headed households invested more in fallowing and tended to shift investment away from demarcated land to less secure parcels in order to secure their claims on them.

Despite their advantages, a number of constraints often make it challenging to implement RCTs in a land tenure context. First, local stakeholders are often justifiably resistant to randomly selecting a group of participants to not receive a tenure security intervention. As a result, RCTs are often used when resources for the initial phase of an intervention are limited, so that only a subset of potential units can receive it at one time; the subset that receives it first is then randomly selected. Second, in some cases randomization is simply not feasible, e.g., when tenure security interventions are applied to all landholders at regional or national scales. Third, the timing of interventions and outcomes can complicate implementation. RCTs need to be designed before an intervention takes place, which facilitates the collection of baseline data prior to any implementation of the intervention. RCTs are therefore impractical for interventions already underway.

When random assignment to treatment and control groups is not practical, a quasi-experimental design can be used to estimate causal effects (e.g., Abadie & Cattaneo, 2018; Gertler et al., 2016). Quasi-experimental designs rely on statistical techniques rather than random assignment of the treatment to construct a valid counterfactual and to estimate the effect of treatment. Here we provide a very brief introduction to the most important causal identification strategies. The first three, namely matching, difference-in-differences (DID), and the synthetic control method (SCM), are so-called conditioning strategies. In these designs, one assumes that bias in the causal analysis comes only from observable variables or from unobservable variables that do not vary over time and that affect the treated and control groups equally. Observable variables are those that can be easily measured and controlled for explicitly in statistical analysis (e.g., parcel size), whereas unobservable variables are those for which data are nonexistent or too costly to collect (e.g., farmer management capacity). By conditioning on a set of variables, confounding factors can be blocked, and the true relationship between T and Y can be estimated (Fig. 14.1a). The last two methods, regression discontinuity design (RDD) and instrumental variables (IV), rely on naturally occurring sources of variation in assignment to the intervention. Causal effects are estimated by identifying an exogenous observable variable that is only related to potential outcomes through its effect on the treatment.

The first of the conditioning strategies, matching, entails constructing a counterfactual group by ‘matching’ treated units to untreated units with similar observable characteristics. Since matching relies on observable data, it is susceptible to bias if important confounding factors are unobservable (i.e., there is no data available) to the researcher. A variety of statistical techniques can be used to help ‘match’ each treatment unit to observationally similar control units (Abadie & Imbens, 2006; Rosenbaum & Rubin, 1983). One of the most widely used matching algorithms is propensity score matching (PSM). In PSM, a probit or logit regression is used to estimate the probability that each unit in the study sample gets the treatment, conditional on its observed characteristics. The estimated parameters of that regression are then used to predict the probability of receiving the treatment for each unit—the so-called propensity score. Propensity scores can be interpreted as univariate weighted indices of observable characteristics. These scores are then used to match treated and untreated observations. That is, each treated observation is matched to the control observation(s) with the closest propensity score(s). For example, Melesse and Bulte (2015) match households in Ethiopia that received land certification to households that did not based on the following observable variables: age, education, livestock, distance to market, parcel size, distance to parcel, parcel fertility, and parcel slope.
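The two PSM steps described above, estimating propensity scores and then matching on them, can be sketched on simulated data. Everything in the example is hypothetical: a single standardized covariate stands in for the observable characteristics, the assumed true effect is 1.0, and the logit is fitted with a few lines of gradient ascent rather than a statistical package, purely to keep the sketch self-contained.

```python
import math
import random

rng = random.Random(1)
TRUE_EFFECT = 1.0  # assumed effect of the intervention on the outcome

# Simulated households: x is one standardized observable (e.g., distance to
# market) that raises both the chance of treatment and the outcome itself.
units = []
for _ in range(1000):
    x = rng.gauss(0.0, 1.0)
    treated = rng.random() < 1.0 / (1.0 + math.exp(-x))
    y = 2.0 * x + TRUE_EFFECT * treated + rng.gauss(0.0, 1.0)
    units.append((x, treated, y))

# Step 1: logit of treatment on x, fitted by gradient ascent on the
# average log-likelihood.
b0, b1 = 0.0, 0.0
for _ in range(300):
    g0 = g1 = 0.0
    for x, treated, _ in units:
        p = 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))
        g0 += treated - p
        g1 += (treated - p) * x
    b0 += g0 / len(units)
    b1 += g1 / len(units)

def propensity(x):
    """Predicted probability of treatment given x."""
    return 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))

# Step 2: match each treated unit to the control unit with the closest
# propensity score (nearest neighbor, with replacement).
treated_units = [(propensity(x), y) for x, t, y in units if t]
control_units = [(propensity(x), y) for x, t, y in units if not t]

gaps = []
for score, y in treated_units:
    _, y_match = min(control_units, key=lambda c: abs(c[0] - score))
    gaps.append(y - y_match)
matched_estimate = sum(gaps) / len(gaps)

mean_treated = sum(y for _, y in treated_units) / len(treated_units)
mean_control = sum(y for _, y in control_units) / len(control_units)
naive_estimate = mean_treated - mean_control

print(f"True effect:      {TRUE_EFFECT:.2f}")
print(f"Naive difference: {naive_estimate:.2f}")
print(f"Matched estimate: {matched_estimate:.2f}")
```

The matched estimate is only credible here because the sole confounder is observed. If an unobserved variable also drove treatment and outcomes, matching on x would not remove the resulting bias.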

A variety of strategies are available for using matched samples to identify the effects of an intervention. The simplest is to calculate the difference in mean outcomes for the treatment group and matched control group. A more sophisticated method is regression adjustment, that is, running a regression using a matched sample that includes treated observations and matched control observations (Imbens & Wooldridge, 2009). Santos et al. (2014) use PSM to match Indian households that were selected for a land-allocation and registration program with households that were not selected. The authors use their matched sample along with a weighted regression analysis to estimate the effect of the program on food security and a number of intermediate outcomes that might influence food security. While matching is one of the easier quasi-experimental methods to employ in terms of data collection needs, it also requires stronger assumptions and is therefore more susceptible to bias (Ho et al., 2007).

DID, sometimes referred to as before-after-control-impact (BACI), compares before-after changes in outcomes for a treatment group and a control group. Instead of statistically constructing a control group as in matching, this technique uses both the temporal (within) variation and cross-sectional (between) variation to construct the counterfactual outcome. This method can control for selection bias and contemporaneous bias, but only to the extent that these biases apply to both groups in all time periods. DID strategies require data on outcomes from at least two time periods. Economists typically use fixed effects panel regression to estimate the treatment effect in a DID design (Jones & Lewis, 2015). Box 14.2 illustrates the use of DID in evaluating the impact of a land titling campaign on forest clearing and degradation in indigenous communities in Peru. An increasingly common strategy is to combine matching and DID to reduce potential selection bias. In this case, matching is first used to ‘trim’ the sample and DID is used to estimate the effect of the intervention on the trimmed sample.
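In its simplest form, with two groups and two periods, the DID estimate is the change in the treatment group minus the change in the control group. The numbers below are hypothetical group means, chosen only to show how DID differs from an after-only comparison and from a before-after comparison.

```python
# Hypothetical mean annual deforestation rates (percent of forest area).
means = {
    ("titled", "before"): 4.0,
    ("titled", "after"): 2.5,
    ("untitled", "before"): 3.0,
    ("untitled", "after"): 2.0,
}

# After-only comparison: exposed to selection bias, since titled units
# already had higher deforestation before titling.
after_only = means[("titled", "after")] - means[("untitled", "after")]

# Before-after comparison for titled units: exposed to contemporaneous bias,
# since deforestation also fell on untitled units over the same period.
before_after = means[("titled", "after")] - means[("titled", "before")]

# DID: change for titled units minus change for untitled units.
change_titled = means[("titled", "after")] - means[("titled", "before")]
change_untitled = means[("untitled", "after")] - means[("untitled", "before")]
did_estimate = change_titled - change_untitled

print(f"After-only comparison:   {after_only:+.1f}")
print(f"Before-after comparison: {before_after:+.1f}")
print(f"DID estimate:            {did_estimate:+.1f}")
```

The DID estimate attributes the change on untitled units to factors common to both groups, which is valid only if the two groups would have followed parallel trends in the absence of titling.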

Box 14.2 A Quasi-Experimental Study of the Effect of Titling Indigenous Communities on Forest Cover Change

Blackman et al. (2017) analyze the effect on both forest clearing and degradation of a program in the early 2000s that provided formal legal title to indigenous communities in the Peruvian Amazon. As noted in the main body of this chapter, ex ante the direction of effect of titling on forest cover change is unclear: it could stem clearing by, for example, improving monitoring and enforcement of land-use restrictions, or could spur it, by, for example, improving land managers’ access to credit. Blackman et al. develop a detailed theory of change that maps out hypothesized causal links between inputs into titling (community meetings, interactions with external stakeholders, and territorial demarcation), their treatment (the award of title), intermediate outcomes (changes in regulatory pressure, internal governance, public sector interactions, private sector interactions, and livelihoods), and the final outcomes (forest clearing and degradation). Their study sample consists of all 51 communities in the Peruvian Amazon that received title between 2002 and 2005. They use contemporaneous remotely sensed data on their outcomes and GIS data from an indigenous community NGO on the timing and location of the award of title. In addition, they use secondary data from a variety of sources to control for confounding factors (crop prices, rainfall, and temperature) and to test for the effect of moderating factors. They use an ‘event-study’ quasi-experimental design that entails estimating fixed effects panel data models similar to a DID panel data model except that the regression sample consists only of treated observations, a necessity in this case as GIS data on untitled communities does not exist. In essence, this approach identifies the effect of titling by comparing changes in forest cover before and after title is awarded, controlling for observed time-varying confounding factors (crop prices, rainfall, and temperature) as well as all unobserved time-invariant factors. The authors find that titling reduces clearing by more than three-quarters and forest disturbance by roughly two-thirds in a two-year window spanning the year title is awarded and the year afterward. In addition, they find that these effects are more pronounced in communities that are smaller and closer to sizable population centers.

Both matching and DID work best when a large number of units have received the intervention and a large number of potential control units (e.g., households, parcels of land) are available. In that sense, quasi-experimental methods are considered ‘data hungry’ because we need large samples to find the necessary exogenous identifying variation (Caliendo & Kopeinig, 2008). This requirement poses a challenge for evaluating some types of land tenure interventions, particularly those more likely to have been implemented at large geographic scales, such as a state or country, rather than at the household or parcel level.

SCM is a conditioning strategy that can be employed for causal analysis when only a single unit (e.g., one state), or very few units, is exposed to the intervention. It uses both observed characteristics and historical data on outcomes to determine the ‘similarity’ of potential control units. It then estimates a treatment effect by comparing outcomes for the treated unit to a weighted average of outcomes for control units. Thus, it incorporates elements of matching, in that it conditions on similar observable characteristics, and of DID, in that it takes into account changes over time. This method has been promoted as a complement to comparative case studies used in political science, providing a more transparent and robust method of constructing a comparison group (Abadie et al., 2010; Abadie et al., 2015).
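The core idea of SCM, a weighted average of control units chosen to track the treated unit before the intervention, can be sketched with a toy example. The data are hypothetical, only pre-intervention outcomes (not other observed characteristics) are used to pick weights, and the weights are found by a coarse grid search rather than the constrained optimization used in published applications.

```python
# Hypothetical outcome series for one treated unit and three control ("donor") units.
donor_names = ["A", "B", "C"]
donors_pre = {"A": [10, 12, 14, 16], "B": [20, 18, 16, 14], "C": [5, 5, 6, 7]}
donors_post = {"A": [18, 20], "B": [12, 10], "C": [8, 9]}
treated_pre = [15, 15, 15, 15]
treated_post = [12, 11]

def synthetic(weights, series):
    """Weighted average of donor outcomes in each period."""
    periods = len(series[donor_names[0]])
    return [
        sum(w * series[name][t] for w, name in zip(weights, donor_names))
        for t in range(periods)
    ]

def pre_period_error(weights):
    """Sum of squared gaps between treated and synthetic unit before treatment."""
    fitted = synthetic(weights, donors_pre)
    return sum((actual - fit) ** 2 for actual, fit in zip(treated_pre, fitted))

# Candidate weights: non-negative, summing to one, in steps of 0.05.
STEPS = 20
candidates = [
    (i / STEPS, j / STEPS, (STEPS - i - j) / STEPS)
    for i in range(STEPS + 1)
    for j in range(STEPS + 1 - i)
]
best_weights = min(candidates, key=pre_period_error)

# Estimated effect in each post-intervention period: treated minus synthetic.
effects = [
    actual - counterfactual
    for actual, counterfactual in zip(treated_post, synthetic(best_weights, donors_post))
]

print("Donor weights:", dict(zip(donor_names, best_weights)))
print("Estimated effects by post-period:", effects)
```

In this constructed example the treated unit's pre-intervention path is reproduced exactly by an equal mix of donors A and B, so the post-intervention gap is attributed to the intervention.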

RDD relies on the naturally occurring variation in assignment of the intervention to identify a control group. RDD can be used only in situations in which the evaluator can identify a clearly defined threshold for eligibility to receive the intervention that effectively determines assignment into the treatment and control groups (Abadie & Cattaneo, 2018). An example might be a land titling program available only to landholdings at least 10 ha in size. RDD defines treatment and control groups as those just above and below this threshold. The key assumption is that the observable and unobservable characteristics of these groups are the same on average near the threshold of discontinuity (here, households with just under and just over 10 ha of land) so that difference in intended outcomes can be safely attributed to the intervention. This design is considered as good as an experimental approach in terms of controlling for selection bias. A limitation, however, is that the results are only applicable to the sample of the population that is represented at that cut-off point. Ali et al. (2014) apply a spatial RDD in Rwanda to evaluate the impact of a land tenure regularization pilot program, aimed at clarifying rights to land parcels, on gender equality, agricultural investment, and land markets. They use the spatial discontinuity (boundary) between land parcels that were part of the pilot program and land parcels on both sides of the pilot parcels’ borders to identify the causal effects.
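The 10 ha eligibility example can be simulated to show how an RDD estimate is formed. The sketch below is illustrative only: the outcome equation, the size of the jump at the threshold, and the 2 ha bandwidth are assumptions, and the estimator is a simple local linear fit on each side of the cut-off.

```python
import random

rng = random.Random(2)
THRESHOLD = 10.0  # hectares; eligibility cut-off from the example in the text
BANDWIDTH = 2.0   # assumed window on either side of the cut-off
TRUE_JUMP = 1.0   # assumed effect of titling on the outcome at the cut-off

# Simulated landholdings: the outcome rises smoothly with size and jumps
# by TRUE_JUMP for holdings that are eligible for (and receive) title.
parcels = []
for _ in range(20_000):
    size = rng.uniform(5.0, 15.0)
    titled = size >= THRESHOLD
    outcome = 2.0 + 0.3 * size + TRUE_JUMP * titled + rng.gauss(0.0, 1.0)
    parcels.append((size, outcome))

def fitted_value_at_threshold(points):
    """Fit outcome on (size - THRESHOLD) by OLS and return the intercept,
    i.e., the predicted outcome exactly at the threshold."""
    xs = [size - THRESHOLD for size, _ in points]
    ys = [outcome for _, outcome in points]
    x_bar = sum(xs) / len(xs)
    y_bar = sum(ys) / len(ys)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sum(
        (x - x_bar) ** 2 for x in xs
    )
    return y_bar - slope * x_bar

below = [(s, y) for s, y in parcels if THRESHOLD - BANDWIDTH <= s < THRESHOLD]
above = [(s, y) for s, y in parcels if THRESHOLD <= s <= THRESHOLD + BANDWIDTH]

rdd_estimate = fitted_value_at_threshold(above) - fitted_value_at_threshold(below)
print(f"True jump at threshold: {TRUE_JUMP:.2f}")
print(f"RDD estimate:           {rdd_estimate:.2f}")
```

The estimate applies only to holdings near 10 ha, which mirrors the limitation noted above: it says nothing about the effect of titling on much smaller or much larger holdings.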

Finally, an IV design requires that the researcher identify an exogenous variable that affects the treatment but not the potential outcomes, except for its effect through the treatment variable (Angrist et al., 1996). The IV approach controls for selection bias in program assignment by using an instrument (a third variable) to isolate the variation in the treatment that is unrelated to confounding factors, thereby avoiding the bias that can arise when the effect of the treatment on the outcome is estimated directly. A two-step procedure is employed, where the researcher first estimates the effect of the instrument on the treatment and then uses the predicted value of the treatment from this first step to estimate the effect on the outcome of interest. Selection of a ‘good’ instrument is the most challenging part of an IV approach, and common weaknesses include selecting an instrument that is only weakly correlated with the treatment or is correlated with omitted variables. Similar to RDD, the IV approach can lead to unbiased estimates of the treatment effect, but only for the sample that is represented by the exogenous variable. In a systematic review by Higgins et al. (2018), the IV approach was the most common quasi-experimental design used to measure the impact of tenure security on development outcomes. Common instruments used for tenure security interventions included a household’s distance to land offices or parcels, mode of land acquisition, exposure to information on reforms, and tenure status of neighboring parcels (Higgins et al., 2018).
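The two-step procedure can be illustrated with simulated data in which an unobserved factor drives both the treatment and the outcome. All quantities in the sketch are hypothetical: the instrument is a generic standardized variable, the treatment is a continuous tenure-security index, and the assumed true effect is 1.5.

```python
import random

rng = random.Random(3)
TRUE_EFFECT = 1.5  # assumed effect of the treatment on the outcome

z_vals, t_vals, y_vals = [], [], []
for _ in range(20_000):
    instrument = rng.gauss(0.0, 1.0)   # exogenous; affects the outcome only via treatment
    unobserved = rng.gauss(0.0, 1.0)   # confounder the researcher cannot measure
    treatment = 1.0 * instrument + unobserved + rng.gauss(0.0, 1.0)
    outcome = TRUE_EFFECT * treatment + 2.0 * unobserved + rng.gauss(0.0, 1.0)
    z_vals.append(instrument)
    t_vals.append(treatment)
    y_vals.append(outcome)

def mean(values):
    return sum(values) / len(values)

def cov(a, b):
    a_bar, b_bar = mean(a), mean(b)
    return sum((ai - a_bar) * (bi - b_bar) for ai, bi in zip(a, b)) / len(a)

def ols_slope(x, y):
    """Slope from a regression of y on x with an intercept."""
    return cov(x, y) / cov(x, x)

# Direct regression of outcome on treatment: biased by the unobserved factor.
ols_estimate = ols_slope(t_vals, y_vals)

# Step 1: regress the treatment on the instrument and predict the treatment.
first_stage_slope = ols_slope(z_vals, t_vals)
first_stage_intercept = mean(t_vals) - first_stage_slope * mean(z_vals)
predicted_t = [first_stage_intercept + first_stage_slope * z for z in z_vals]

# Step 2: regress the outcome on the predicted treatment.
iv_estimate = ols_slope(predicted_t, y_vals)

print(f"True effect:  {TRUE_EFFECT:.2f}")
print(f"OLS estimate: {ols_estimate:.2f}")
print(f"IV estimate:  {iv_estimate:.2f}")
```

The sketch reports point estimates only. Standard errors from a manually run second stage are not valid, so applied work typically relies on a dedicated two-stage least squares routine.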

All of the evaluation methods discussed above have strengths and weaknesses, and each of the quasi-experimental approaches rests on a set of assumptions that must be met in order to measure the ‘true’ impact. A well-articulated TOC can help identify when the assumptions of each method are likely to be met. Combining any of the above methods with qualitative data from focus groups, interviews, or ethnographic methods can also help elucidate the causal pathways, or mediators, that are operating; the effect of moderators on heterogeneity in outcomes; and the extent to which confounding factors may be masking impacts. Box 14.3 illustrates how qualitative information can be used alongside impact evaluation to shed light on the effects, or lack thereof, of tenure interventions and to reveal unintended outcomes. In the context of participatory research, in which the researcher returns to the field to clarify questions and resolve anomalies, qualitative observations can be combined with quantitative data to elicit knowledge about motivations to participate in specific programs and to verify estimated impacts (see Rao & Woolcock, 2003; Arriagada et al., 2009).

Box 14.3 A Mixed Methods Evaluation of the Impact of Land Titling on Deforestation

Holland et al. (2017) combine the quasi-experimental impact evaluation method of DID with focus group discussions to elucidate the causal pathways (i.e., mediators) of a land titling intervention in Ecuador. They evaluate the impact of receiving formal land titles in 2009 on avoided deforestation around a national forest reserve in the Ecuadorian Amazon. They compare 1067 land parcels that receive a formal land title to 268 land parcels that do not receive one but that are located in the same 61 communities. The authors use data on annual changes in forest cover between 2000 and 2014 and estimate the effect of titling on avoided deforestation using fixed effects panel regression methods. They find that, on average, receiving a formal land title decreases deforestation by 37 percentage points. They combine this analysis with 15 community focus group discussions. Through these discussions, they learn that de facto tenure security was already strong before formal land titles were received, a finding that has been documented in other studies of rural communities in Ecuador. Individuals were asked about specific mechanisms or pathways through which land titling had changed their forest behaviors, but respondents gave no indication that titles had led to abatement of forest harvesting. Instead, the impact of land titling appeared to occur only because pressure on lands left untitled spiked during the evaluation period. It is not clear whether this spike was due to illegal activity or other pressures. Unintended consequences of the land titling campaign were also discovered in the focus group interviews, since many respondents were dissatisfied with restrictions placed on subdividing their land for future generations. These nuances in the impact of formal land titles on forest conservation would not have been discovered without the use of mixed methods.
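The DID logic referred to in this box can be illustrated with a minimal two-period sketch on simulated parcels. It is not the fixed effects panel model estimated by Holland et al. (2017), and none of the numbers relate to their data; the parcel counts, effect size, and variable names are hypothetical. With only two periods, differencing each parcel's outcome removes time-invariant parcel characteristics, and comparing the average change across groups removes shocks common to both.

```python
import random
from statistics import mean

rng = random.Random(2)
TRUE_EFFECT = -3.0   # hypothetical effect of titling on annual forest loss
COMMON_SHOCK = 2.0   # change over time that hits titled and untitled alike

changes_titled, changes_untitled = [], []
after_titled, after_untitled = [], []

for i in range(2000):
    titled = i < 1000
    # Time-invariant parcel characteristics; titled parcels start out
    # with higher forest loss, which mimics selection into the program.
    parcel_effect = rng.gauss(0.0, 2.0) + (1.5 if titled else 0.0)
    before = 10.0 + parcel_effect + rng.gauss(0.0, 1.0)
    after = (10.0 + parcel_effect + COMMON_SHOCK
             + (TRUE_EFFECT if titled else 0.0) + rng.gauss(0.0, 1.0))
    if titled:
        changes_titled.append(after - before)
        after_titled.append(after)
    else:
        changes_untitled.append(after - before)
        after_untitled.append(after)

# Naive comparison of post-period levels mixes the effect with selection.
naive_effect = mean(after_titled) - mean(after_untitled)

# DID: change among titled parcels minus change among untitled parcels.
did_effect = mean(changes_titled) - mean(changes_untitled)

print(f"naive: {naive_effect:.2f}  DID: {did_effect:.2f}")
```

The sketch builds in parallel trends by construction. The box shows why that assumption deserves scrutiny: a spike in pressure on untitled parcels alone would also produce a negative DID estimate.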

For all of the approaches described above, it is important to consider the internal and external validity of the results. Results are said to be internally valid when the evaluation has controlled for biases, including selection bias, contemporaneous bias, and bias due to spillovers. Internal validity, that is, estimating the true impact of the program for the population studied, is what impact evaluation designs strive for. Results are said to be externally valid when they can be generalized to the population of all eligible units. External validity can be harder to achieve in impact evaluation. It requires that the treatment and control groups be representative of the population of all eligible units. Certain research designs lend themselves better to this, in particular those that randomly assign treatment and control units from the population of eligible units. Others, in particular IV and RDD, are more problematic in this regard because their estimates apply only to a subset of units. Measuring potential moderators and estimating sub-group impacts, in addition to average impacts, can increase the ability to extrapolate results to all eligible units (Sills & Jones, 2018).
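Estimating sub-group impacts alongside the average impact can be sketched as follows, assuming a randomized intervention and a single measured moderator. The moderator (market access), the group labels, and the effect sizes are hypothetical and serve only to show the calculation.

```python
import random
from statistics import mean

rng = random.Random(3)
# Hypothetical moderator: the intervention works better near markets.
EFFECT_BY_GROUP = {"near_market": 4.0, "remote": 1.0}

rows = []
for _ in range(6000):
    group = rng.choice(["near_market", "remote"])
    treated = rng.random() < 0.5          # random assignment
    baseline = 55.0 if group == "near_market" else 50.0
    effect = EFFECT_BY_GROUP[group] if treated else 0.0
    outcome = baseline + effect + rng.gauss(0.0, 3.0)
    rows.append((group, treated, outcome))


def diff_in_means(sample):
    """Mean outcome of treated units minus mean outcome of controls."""
    treated = [y for _, d, y in sample if d]
    control = [y for _, d, y in sample if not d]
    return mean(treated) - mean(control)


average_impact = diff_in_means(rows)
subgroup_impacts = {
    g: diff_in_means([r for r in rows if r[0] == g]) for g in EFFECT_BY_GROUP
}

print(f"average impact: {average_impact:.2f}")
for g, impact in subgroup_impacts.items():
    print(f"{g}: {impact:.2f}")
```

If a new setting has a different mix of the two groups, the sub-group estimates can be reweighted to that mix, which the single average impact does not allow.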

Advancing Our Understanding of Tenure Security

The growing attention to the potential benefits of strengthening tenure security for sustainable development outcomes is a welcome advance. Evidence on where, when, and why these impacts occur would help ensure more efficient use of financial investments. As noted, tenure security interventions and their links to intended final impacts are often complicated, which makes it difficult to measure and isolate the impact of tenure security alone. This underscores the need for well-designed impact evaluations. That, in turn, requires developing a TOC for interventions that clearly articulates the links between interventions and final outcomes and that identifies confounding factors, moderators, and potential mediators. It also requires collecting the requisite data and selecting experimental and quasi-experimental impact evaluation methods that, to the extent possible, will control for selection bias, contemporaneous bias, and spillover.

While the number of impact evaluations of tenure security interventions is increasing, their focus is limited to a small set of potential outcomes (Higgins et al., 2018). More research is needed that measures the effects of tenure security on sustainable development goals such as gender equality, public health, and environmental degradation. Additionally, impact evaluations of tenure security interventions would benefit from prioritizing comparative and longitudinal studies. Comparative studies can ensure similar metrics are used in multiple contexts, shedding light on where tenure interventions work and why. Longitudinal studies would help advance knowledge on the dynamics of strengthening land tenure and take into account how exogenous changes may influence the sustained impacts of secure tenure. Any increase in the evidence base on land tenure needs to acknowledge multiple ways of knowing (Tengö et al., 2014) and should ensure that research questions and assessments are co-developed with the practitioners and decision makers who have on-the-ground understanding of the local tenure context.

It is important to note that not all tenure security interventions will be conducive to rigorous impact evaluation. Evaluations may not be feasible where project conditions are not generalizable to larger areas and where the costs of conducting an evaluation are high. But not all tenure interventions need to be evaluated to advance our knowledge on what works, where, and why. Increasing the use of impact evaluation methods to assess tenure security interventions by even a fraction would greatly enhance the evidence base and provide decision makers with better knowledge on what interventions will work in different contexts.

References

Abadie, A., & Cattaneo, M. D. (2018). Econometric methods for program evaluation. Annual Review of Economics, 10, 465–503.

Abadie, A., Diamond, A., & Hainmueller, J. (2010). Synthetic control methods for comparative case studies: Estimating the effect of California’s tobacco control program. Journal of the American Statistical Association, 105(490), 493–505.

Abadie, A., Diamond, A., & Hainmueller, J. (2015). Comparative politics and the synthetic control method. American Journal of Political Science, 59(2), 495–510.

Abadie, A., & Imbens, G. W. (2006). Large sample properties of matching estimators for average treatment effects. Econometrica, 74(1), 235–267.

Ali, D. A., Deininger, K., & Goldstein, M. (2014). Environmental and gender impacts of land tenure regularization in Africa: pilot evidence from Rwanda. Journal of Development Economics, 110, 262–275.

Angrist, J. D., Imbens, G. W., & Rubin, D. B. (1996). Identification of causal effects using instrumental variables. Journal of the American Statistical Association, 91(434), 444–455.

Arnot, C. D., Luckert, M. K., & Boxall, P. C. (2011). What is tenure security? Conceptual implications for empirical analysis. Land Economics, 87(2), 297–311.

Arriagada, R., Sills, E., Pattanayak, S. K., & Ferraro, P. (2009). Combining qualitative and quantitative methods to evaluate participation in Costa Rica's Program of Payments for Environmental Services. Journal of Sustainable Forestry, 28(3-5), 343–367.

Blackman, A., Corral, L., Lima, E., & Asner, G. (2017). Titling indigenous communities protects forests in the Peruvian Amazon. Proceedings of the National Academy of Sciences of the United States of America, 114(16), 4123–4128.

Bromley, D. W. (2009). Formalising property relations in the developing world: the wrong prescription for the wrong malady. Land Use Policy, 26(1), 20–27.

Buntaine, M. T., Hamilton, S. E., & Millones, M. (2015). Titling community land to prevent deforestation: an evaluation of a best-case program in Morona-Santiago, Ecuador. Global Environmental Change, 33, 32–43.

Caliendo, M., & Kopeinig, S. (2008). Some practical guidance for the implementation of propensity score matching. Journal of Economic Surveys, 22(1), 31–72.

Cameron, D. B., Mishra, A., & Brown, A. N. (2015). The growth of impact evaluation for international development: how much have we learned? Journal of Development Effectiveness, 8(1), 1–21.

Dubois, O. (1997). Rights and wrongs of rights to land and forest resources in Subsaharan Africa: Bridging the gap between customary and formal rules. IIED Forest Participation Series No. 10. IIED, London, UK.

Ferraro, P. J., & Hanauer, M. M. (2015). Through what mechanisms do protected areas affect environmental and social outcomes? Philosophical Transactions of the Royal Society B, 370(20140267), 1–11.

Ferraro, P. J., & Pattanayak, S. K. (2006). Money for nothing? A call for empirical evaluation of biodiversity conservation investments. PLoS Biology, 4(4), e105.

Funnell, S. C., & Rogers, P. J. (2011). Purposeful program theory: Effective use of theories of change and logic models. Jossey-Bass.

Gertler, P. J., Martinez, S., Premand, P., Rawlings, L. B., & Vermeersch, M. J. (2016). Impact evaluation in practice (2nd ed.). World Bank.

Goldstein, M., Houngbedji, K., Kondylis, F., O’Sullivan, M., & Selod, H. (2015). Formalization without certification? Experimental evidence on property rights and investment. Journal of Development Economics, 132, 57–74.

Greenstone, M., & Gayer, T. (2009). Quasi-experimental and experimental approaches to environmental economics. Journal of Environmental Economics and Management, 57(1), 21–44.

Higgins, D., Balint, T., Liversage, H., & Winters, P. (2018). Investigating the impacts of increased rural land tenure security: a systematic review of the evidence. Journal of Rural Studies, 61, 34–62.

Ho, D. E., Imai, K., King, G., & Stuart, E. A. (2007). Matching as nonparametric preprocessing for reducing model dependence in parametric causal inference. Political Analysis, 15(3), 199–236.

Holden, S. T., & Ghebru, H. (2016). Land tenure reforms, tenure security and food security in poor agrarian economies: causal linkages and research gaps. Global Food Security, 10, 21–28.

Holland, M. B., Jones, K. W., Naughton-Treves, L., Freire, J. L., Morales, M., & Suárez, L. (2017). Titling land to conserve forests: the case of Cuyabeno Reserve in Ecuador. Global Environmental Change, 44, 27–38.

Imbens, G. W., & Rubin, D. B. (2015). Causal inference for statistics, social, and biomedical sciences: An introduction. Cambridge University Press.

Imbens, G. W., & Wooldridge, J. M. (2009). Recent developments in the econometrics of program evaluation. Journal of Economic Literature, 47(1), 5–86.

Jones, K. W., & Lewis, D. J. (2015). Estimating the counterfactual impact of conservation programs on land cover outcomes: The role of matching and panel regression techniques. PLoS ONE, 10(10), e0141380.

Lawry, S., Samii, C., Hall, R., Leopold, A., Hornby, D., & Mtero, F. (2016). The impact of land property rights interventions on investment and agricultural productivity in developing countries: a systematic review. Journal of Development Effectiveness, 9(1), 61–81.

Liscow, Z. D. (2013). Do property rights promote investment but cause deforestation? Quasi-experimental evidence from Nicaragua. Journal of Environmental Economics and Management, 65, 241–261.

Lisher, J. W. (2019). Guidelines for impact evaluation of land tenure and governance interventions. UN Human Settlements Programme.

Melesse, M. B., & Bulte, E. (2015). Does land registration and certification boost farm productivity? Evidence from Ethiopia. Agricultural Economics, 46, 757–768.

Persha, L., & Protik, A. (2019). Evaluation of the “Supporting Deforestation Free Cocoa in Ghana” Project Bridge Phase: Evaluation Design Report. USAID, Washington, DC. Accessed at https://www.land-links.org/wp-content/uploads/2019/05/Evaluation-of-the-Supporting-Deforestation-Free-Cocoa-in-Ghana-Project-Bridge-Phase-Evaluation-Design-Report.pdf

Qiu, J., Game, E. T., Tallis, H., Olander, L. P., Glew, L., Kagan, J. S., Kalies, E. L., Michanowicz, D., Phelan, J., Polasky, S., Reed, J., Sills, E. O., Urban, D., & Weaver, S. K. (2018). Evidence-based causal chains for linking health, development and conservation actions. BioScience, 68(3), 182–193.

Rao, V., & Woolcock, M. (2003). Integrating qualitative and quantitative approaches in program evaluation. In F. Bourguignon & L. P. da Silva (Eds.), The impact of economic policies on poverty and income distribution: Evaluation techniques and tools (pp. 165–190). Oxford University Press.

Robalino, J., Pfaff, A., & Villalobos, L. (2017). Heterogeneous local spillovers from protected areas in Costa Rica. Journal of the Association of Environmental and Resource Economists, 4(3), 795–820.

Robinson, B. E., Holland, M. B., & Naughton-Treves, L. (2014). Does secure land tenure save forests? A meta-analysis of the relationship between land tenure and tropical deforestation. Global Environmental Change, 29, 281–293.

Rosenbaum, P. R., & Rubin, D. B. (1983). The central role of the propensity score in observational studies for causal effects. Biometrika, 70(1), 41–55.

Samii, C. (2016). Causal empiricism in quantitative research. The Journal of Politics, 78(3), 941–955.

Santos, F., Fletschner, D., Savath, V., & Peterman, A. (2014). Can government-allocated land contribute to food security? Intrahousehold analysis of West Bengal's Microplot Allocation program. World Development, 64, 860–872.

Sills, E. O., & Jones, K. W. (2018). Causal inference in environmental conservation: the role of institutions. Handbook of Environmental Economics, 4, 395–437.

Sunderlin, W. D., de Sassi, C., Sills, E. O., Duchelle, A. E., Larson, A. M., Resosudarmo, I. A. P., Awono, A., Kweka, D. L., & Huynh, T. B. (2018). Creating an appropriate tenure foundation for REDD+: The record to date and prospects for the future. World Development, 106, 376–392.

Tengö, M., Brondizio, E. S., Elmqvist, T., Malmer, P., & Spierenburg, M. (2014). Connecting diverse knowledge systems for enhanced ecosystem governance: the multiple evidence base approach. Ambio, 43(5), 579–591.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this chapter

Cite this chapter

Jones, K.W., Blackman, A., Arriagada, R. (2022). Methods to Advance Understanding of Tenure Security: Impact Evaluation for Rigorous Evidence on Tenure Interventions. In: Holland, M.B., Masuda, Y.J., Robinson, B.E. (eds) Land Tenure Security and Sustainable Development. Palgrave Macmillan, Cham. https://doi.org/10.1007/978-3-030-81881-4_14

DOI: https://doi.org/10.1007/978-3-030-81881-4_14

Publisher Name: Palgrave Macmillan, Cham

Print ISBN: 978-3-030-81880-7

Online ISBN: 978-3-030-81881-4

eBook Packages: Earth and Environmental Science (R0)