Abstract

The EQ-VT protocol for valuing the EQ-5D-5L offered the opportunity to develop a standardised experimental design to elicit EQ-5D-5L values. This chapter sets out the various aspects of the EQ-VT design and the basis on which methodological choices were made in regard to the stated preference methods used, i.e., composite time trade-off (cTTO) and discrete choice experiments (DCE). These choices include the sub-set of EQ-5D-5L health states to value using these methods; the number of cTTO and DCE valuation tasks per respondent; the minimum sample size needed; and the randomisation schema. This chapter also summarises the research studies developing and testing alternative experimental designs aimed at generating a “Lite” version of the EQ-VT design. This “Lite” version aimed to reduce the number of health states in the design, and thus the sample size, to increase the feasibility of undertaking valuation studies in countries with limited resources or recruitment possibilities. Finally, this chapter outlines remaining methodological issues to be addressed in future research, focusing on refinement of current design strategies, and identification of new designs for novel valuation approaches.

You have full access to this open access chapter, Download chapter PDF

Similar content being viewed by others

3.1 Introduction

As explained in Chap. 2, having decided that the protocol for valuing EQ-5D-5L would include both composite time trade-off (cTTO) and discrete choice experiment (DCE) as elicitation techniques, the next step was to provide a study design that would enable the researchers to identify a model that would appropriately predict values for all 3125 potential health states. For this, choices needed to be made about:

-

1.

the selection of health states in the cTTO part of the study,

-

2.

the selection of pairs of health states in the DCE tasks,

-

3.

the number of respondents and

-

4.

the number of tasks per respondent.

It was envisaged that not all respondents needed to value the same health states and that respondents could be randomised over different blocks of health states. The aim of this chapter is to describe the basis for the protocol designs and the factors that were considered in developing them. In addition, alternative designs and directions for future research with respect to designs will be addressed.

Valuation studies do not test hypotheses, and as such there is no classic power calculation as with randomised clinical trials. Generally, the more subjects and the more data per health state decreases the standard errors around the value for each health state, decreases the standard errors around the model estimates and one would expect it to decrease the probability of misspecification. A traditional method to test different designs is by simulating experiments (i.e., simulate respondents’ answers to the tasks – informed by prior evidence on how people respond) and compare the simulated means with the means one would expect. The simulated experiments are analysed to determine whether the model that is being estimated corresponds with the model which underlies the simulations (the true model) and what the width is of the confidence intervals surrounding the estimates.

Within the above considerations it was also decided that both the cTTO task and the DCE task needed to be designed such that the data would allow for estimating separate models without the need for data from the other part of the study. This would leave room for the scientists conducting such studies to estimate models using only cTTO data, or only DCE data, or hybrid models combining the two sets of data. The EQ-VT designs were developed using a staged approach. Designs were created for the pilot studies that informed the development of the EQ-VT protocol. These pilot studies also informed refinements with respect to the experimental design.

As described in Chap. 1, for EQ-5D-3L valuation studies, the study protocols and experimental designs were not standardised, although most studies followed some or all of the protocol used in the first time trade-off (TTO) study for EQ-5D-3L: the Measurement and Valuation of Health (MVH) study conducted in the United Kingdom (Dolan 1997). In the end, different countries produced EQ-5D-3L value sets based on different elicitation tasks. Some used a visual analogue scale (VAS) based elicitation technique, while others used TTO. In addition, different numbers of health states were used (e.g., 43 health states in total for MVH, while others used e.g., 17 states or 24 states, or a more saturated 196 health state approach), and a different selection of health states. These methodological differences between studies hampered comparison between countries: it is unknown to what extent differences in results obtained between countries were due to differences in the preferences of the study populations or due to differences in the study protocol and experimental design. Therefore, for the valuation of the EQ-5D-5L, the EuroQol Group decided to create a standardised study protocol including an experimental design (see Chap. 2 for more details on this standardisation).

3.2 EQ-VT Designs

3.2.1 cTTO Design

The states selected for the design of the cTTO need to be optimised for model estimation. This means the objective is to avoid introducing a bias in the model that originates from the selection of the health states included in the design. For example, if mild or moderate states are highly overrepresented in the design, this could lead to a bias in the model estimation. In addition, there should be enough states included, so that the model can be specified. For example, since there are 20 main effect parameters (i.e., the four dummy parameters for each of the five EQ-5D dimensions) the theoretical minimum number of health states to be included would be 21 (20 main effects +1 error term). For the main pilot study, also referred to as the core multinational pilot study (Oppe et al. 2014), the number of states that would be required for estimating an EQ-5D-5L value set was expected to be around 100. It was considered that a main effects model would have 21 parameters (5*4 dummy variables for the main effects + intercept) leaving 79 degrees of freedom. Such a number of states would allow estimation of random coefficient models, and inclusion of different kinds of interactions and/or the effects of background variables.

When regarding the number of observations per EQ-5D-5L health state included in the cTTO tasks, we found that in the cTTO pilot study with 121 observations per state, the standard errors for the severe states were around 0.056, while those for the mild states were around 0.01 (Janssen et al. 2013). This suggested we would achieve adequate average precision of the mean observed values with 100 observations per cTTO state. This was based on the assumption that with the standard errors at those levels, a repetition of the sample would result in observed mean values that would very likely fall within the bounds provided by these standard errors.

From the pilot studies, as well as the valuation studies undertaken for the EQ-5D-3L, it was clear that respondents would be able to complete at least 17 cTTO tasks each without negatively impacting on data quality (Tsuchiya et al. 2002; Lamers et al. 2006). However, since we also wanted to include a DCE task for the same respondents, it was decided that we should limit the number of cTTO tasks per respondent to ten (excluding warm-up and practice tasks). In order to counteract biases due to framing effects, a blocked design was chosen to achieve a balanced mix of states with respect to where they are expected to lie on the overall value scale. That is to say, each respondent should complete a good balance of health states, covering the entire range from mild to severe. Therefore, each block was designed to include one of the five very mild states (i.e., states 21111, 12111, 11211, 11121, 11112) and the worst state (i.e., state 55555, sometimes referred to as the “pits” state). It was decided to include ten blocks with two fixed states in each block such that eight states per block would need to be generated, i.e. 80 states. This implied that we would have (10*8 + 5 + 1=) 86 states in total, which is a little less than in the main pilot study, but still more than four times the number of parameters for a typical main effects model.

Putting the above together, ten blocks of ten EQ-5D-5L states each, with 100 observations per block lead to a required sample size of 1000 respondents. This leaves the final part of the design for the cTTO part of the EQ-VT: selecting the set of 80 EQ-5D-5L states to be included.

We selected the 80 states from the total set of 3119 (i.e., the 3125 states in the EQ-5D-5L, minus the six states that were already included in the design, namely the five mildest states and the “pits” state) using Monte Carlo simulation (see Box 3.1). First, values for all 3125 states for a sample of n=1000 respondents were simulated using a simulation programme implemented in R. Details of the simulation programme can be found in (Oppe and van Hout 2010). For the simulation as well as the optimisation algorithm, a main effects model (without constant) was used. This was decided based on the pilot studies and on two previous studies using the EQ-5D-3L. In the first EQ-5D-3L study, OLS models including main effects and the N3 term (an interaction parameter which takes the value of 1 if any dimension is at level 3, or 0 otherwise) were estimated on the full data set of the MVH study, which resulted in an adjusted R2 of 0.43, and on a data set that included only the mean observed values of the 42 states included in the MVH study (thereby removing the within state variance), which resulted in an adjusted R2 of 0.97 (Oppe et al. 2013). These results indicate that the main contributor to the uncertainty is the within state variance, not the between state variance; that there is very little to gain by adding interaction terms (i.e., R2 can only increase marginally from 0.97); that you run the risk of overfitting if interactions are added. The EQ-5D-5L pilot valuation studies showed that interactions similar to N3 or D1 (a parameter which corresponds to the number of impaired dimensions beyond the first) from the EQ-5D-3L models did not improve the EQ-5D-5L models. Lastly, in a DCE study for EQ-5D-3L using a design optimised for main effects plus all two-way interactions the (pseudo) R2 increased from 0.266 for main effects to 0.277 for a model including interactions. In total there were 12 model parameters, but three of the main effects were no longer included (Stolk et al. 2010). Therefore, it becomes an issue of parsimony: Is adding interactions – consequently making the model less interpretable – worth a small increase in model fit?

A random design of 80 states was generated from the simulated data. An OLS main effects regression model (without constant) was estimated on the simulated set of cTTO data comprising the 80 states and 1000 respondents. Next, the sum of the mean squared errors (MSE) was calculated between the parameters that were used to create the simulated preference data and the parameters resulting from the OLS model. The difference between perfect level balance and achieved level balance of the 80 generated states was also calculated. The construction of the level balance criterion can be found in Appendix A. The regression procedure was repeated 10,000 times and an iterative procedure was used where designs that had either worse level balance or worse MSE were discarded.

The “optimal” set of 80 states was divided over the ten blocks using the blocking algorithm included in the “AlgDesign” package in R (Wheeler 2004). The blocking algorithm divides the states over the blocks in such a way that the within block variance is maximised (i.e., the full severity range is more or less covered within a block), while the between block variance is minimised (i.e., all blocks are more or less the same with respect to the mean severity per block).

Box 3.1: Algorithm for Selection of cTTO Health States Used for the EQ-VT Design

start | |

step 1 | simulate dataset with 1000 respondents and all 3125 states |

step 2 | split the data set into six “fixed states” and 3119 “selection states” |

step 3 | randomly select 80 health states from the “selection states” of the simulated data set and add the six states |

step 4 | calculate level balance |

step 5 | calculate main effects OLS regression model |

step 6 | calculate difference with parameter estimates used to create simulated data |

step 7 | repeat steps 3 to 6 10,000 times and keep the design with best level balance, and smallest difference of parameter estimates |

step 8 | block the design of 80 states found in step 7 into ten blocks of eight health states |

step 9 | add the worst state and one of the five mildest states to each block |

end | |

In summary, the design of the cTTO experiment consists of 86 states divided over ten blocks with 100 observations per block, leading to about 10,000 observations in total, where the five very mild states and state 55555 were oversampled compared to the other 80 states. For a main effects model this means that there will be about 400 observations per model parameter (8000 observations/20 parameters). The required sample size was determined to be 1000 (i.e., 10 blocks * 100 observations per block). The 86 states of the cTTO design can be found in Appendix B.

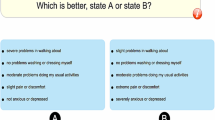

3.2.2 DCE Design

A Bayesian efficient design algorithm was used to select the pairs for the DCE. The priors were based on the results of a main effects model (without intercept) estimated on the data of an EQ-5D-3L DCE study (Stolk et al. 2010). We assumed that the levels 1, 2 and 3 from the EQ-5D-3L study corresponded to the levels 1, 3 and 5 for the EQ-5D-5L, while the levels 2 and 4 were assumed to be the mid-points between the levels 1 and 3, and 3 and 5 respectively. The standard errors of the parameters of the model we estimated on the EQ-5D-3L DCE data varied between 0.06 and 0.08. Conservatively, these were increased to 0.10 for the priors. The priors that were used can be found in Table 3.1.

Similar to the cTTO design there was an interest in making sure that at least some pairs of health states containing only mild states would be included in the DCE design. Therefore, ten such pairs were created manually. Pilot studies showed that the sample size of 1000 respondents determined for the cTTO would also be sufficient for estimating a DCE model (Krabbe et al. 2014; Oppe et al. 2014). In order to put limits on respondent burden, the number of DCE pairs per respondent was set to seven. The minimum number of observations needed per pair was deemed to be 35. This was based on being slightly more conservative than Hensher and colleagues, who refer to a minimum of 30 responses per set, based on the law of large numbers as stated by Bernoulli (Hensher et al. 2005). Putting these numbers together, a 196 pair design divided over 28 blocks of seven pairs was created using a Bayesian D-efficient design algorithm (see Box 3.2).

First, the set of ten mild pairs was manually selected. Next, a set of 186 pairs was randomly generated. For this set of 186 pairs, the Bayesian D-error of the design was determined using 1000 randomly drawn sets of priors. This process was repeated 10,000 times and the 186 pair design with the best D-error was kept. The ten mild pairs were added to this design, and the total set of 196 pairs was then blocked into 28 blocks of seven pairs each.

The Bayesian D-efficient design algorithm was implemented in R and we used the blocking algorithm included in the “AlgDesign” package in R (Wheeler 2004).

Box 3.2: Algorithm for Selection of DCE Pairs of Health States Used for the EQ-VT Design

start | |

start outer loop | |

step 1 | a set of 186 pairs of states is randomly generated |

start inner loop | |

step 2 | a set of priors is drawn |

step 3 | the D-error of the design is computed |

step 4 | steps 2 and 3 are repeated |

end inner loop | |

step 5 | the overall D error is calculated (i.e., the combined D error from the inner loops) |

step 6 | repeat steps 1 to 5 10,000 times and keep the design with the best overall D error |

end outer loop | |

step 7 | add the ten fixed pairs of mild health states to the set of 186 pairs from step 7 |

step 8 | block the design from step 7 into 28 blocks of seven pairs each |

end | |

In summary, the DCE designs consists of 196 pairs divided over 28 blocks of seven pairs each. With the same sample size as the cTTO, this leads to a total of 7000 observations, meaning about 350 observations per parameter for a main effects model. The 196 pair DCE design can be found in Appendix C.

3.2.3 Other Considerations

Apart from the sample size, and the selection of the set of health states for the cTTO and pairs for the DCE, another consideration for the experimental design of the EQ-VT was the randomisation schema needed. This is important, as a proper randomisation schema can counteract potential biases. For the cTTO, each respondent is randomly allocated one block of ten health states. The order in which the ten health states appear for each respondent is also randomised. For the DCE, each respondent is randomly assigned to one of the 28 blocks of pairs. The order of appearance of the seven pairs allocated to each respondent is also randomised, and for each pair of health states the order of appearance on the screen of the two health states comprising a pair (i.e., left versus right) is randomised. The order of appearance of the dimensions was not randomised, because the EQ-5D-5L instrument itself has a fixed order of appearance with respect to the dimensions (see Chap. 1).

3.3 Alternative cTTO Designs

As noted above, the cTTO design of the EQ-VT protocol includes the selection of 86 different EQ-5D-5L health states and a minimal sample size of 1000. While this sample size is considered sufficient and achievable for most countries, reducing the number of states in the design, and thus the sample size, has the appeal that it could increase the feasibility of a valuation study in countries with limited resources or difficulty recruiting such a large number of respondents.

An important criterion for using a small design is that the accuracy of the estimated health state values should not be compromised (i.e., bias should be minimized). Following the study design established by Yang et al. in comparing EQ-5D-3L designs in a saturated VAS study (Yang et al. 2018), this process was replicated for the EQ-5D-5L. First, an EQ-5D-5L saturated VAS dataset was collected from a Chinese university student sample, with 100 VAS values for all 3125 EQ-5D-5L health states. Next, 100 variants of an orthogonal designFootnote 1 with 25 health states were created and modelled. Their predictive performances were quantified by calculating the Root Mean Squared Error (RMSE) against the observed VAS values from the saturated dataset. 25 health states were chosen as it is the minimal number for an orthogonal design in a five-dimension five-level classification system. For comparison, 100 variants of a random design and 100 variants of a D-efficient design were created, also with 25 states in each design. The EQ-VT design was also included as a reference (Yang et al. 2019a). The results showed that the RMSE was 3.44 for the EQ-VT design and 3.40 for the orthogonal design on the VAS scale (from 0 to 100). Little variance is observed among the 100 variants of the orthogonal design. Nevertheless, the inclusion of 11111 in the orthogonal design degraded the overall prediction performance. When extending the orthogonal design with the five mildest states and the “pits” state (to counteract biases due to framing effects), the RMSE was 3.87. These results showed that the orthogonal design extended with five mildest and the “pits” state could allow robust and precise estimations of EQ-5D-5L VAS values, as the RMSE was only slightly increased compared with the RMSE of 3.44 for EQ-VT design (i.e., the difference was 0.43 on VAS scale).

Considering the data distribution characteristics of the cTTO values from EQ-5D-5L valuation studies using the EQ-VT (e.g., they are not normally distributed, their distribution was separated by death into two parts, they displayed large heterogeneity etc.), a second study was performed validating the performance of orthogonal designs using cTTO data (Yang et al. 2019b). Following the EQ-VT protocol version 1.1 (as described in Chap. 2) cTTO data were collected from a sample of Chinese university students. In total, three designs were included in the study, i.e., (1) the EQ-VT design; (2) the best performing orthogonal design variant from the VAS saturated study; (3) a D-efficient design with 25 states. In total, 100 observations per health state were collected for the three designs of a total 136 health states (i.e., 86 + 25 + 25). Next, the value sets were modelled by design and their prediction accuracy was evaluated for the 136 states. The RMSEs of the (1) EQ-VT, (2) orthogonal + five mildest states + the “pits” state and (3) D-efficient designs + five mildest states + the “pits” state were 0.053, 0.066 and 0.063 on the value scale (0-1) respectively. Based on the findings of these two studies, the use of the EQ-VT design was confirmed as a default design choice for EQ-5D-5L valuation studies. However, the orthogonal design with 25 states + five mildest states + one “pits” state can be used in some specific contexts, e.g., when resources are not available for a standard EQ-VT study. Peru is the first country to use the orthogonal design + five mildest states + one “pits” state (referred as ‘Lite’ protocol; see Appendix D) for establishing its EQ-5D-5L value set (see Chap. 4). In that study, the modelling results suggested the ‘Lite’ protocol could produce logical consistent coefficients, but some coefficients were not significant. Additionally, the DCE coefficients and cTTO coefficients were found to be inequivalent in that study and a hybrid model of combining both responses was not used for the final Peruvian EQ-5D-5L value set. For the above-mentioned reasons, the authors suggest more research is needed to further explore the feasibility of such ‘Lite’ protocol (Augustovski et al. 2020).

3.4 Future Research

Regarding design principles employed in EQ-5D-5L valuation studies, ongoing work focuses on refinement of current design strategies, and identification of new designs for emerging valuation approaches. Regarding the design used in the EQ-VT, evidence to date suggests that the design is fit for purpose. Across the range of studies already conducted with the EQ-VT (see Chap. 1 for details), the design has allowed precise estimation of health state values. However, there are a number of issues that require addressing in future.

First, it is apparent that the ten pairs of relatively mild health states appended to the DCE design are potentially problematic, and may cause bias in the parameter estimates. One plausible explanation for this is that the values for these health states are likely to be similar (as they are all close to full health and to each other), but the choice probabilities are not necessarily close to 50/50 since there may be a small but consistent preference for accepting a particular dimension at level two over another. Second, it may be that using a broader set of EQ-5D-5L health states yields a more accurate value set as the value of health states not directly observed in the data are more likely to have a near neighbour health state valued. For instance, the ongoing Indian EQ-5D-5L valuation study is exploring the use of an expanded set of 150 health states as part of the cTTO (Jyani et al. 2020). Third, the number of DCE choice pairs typically asked in the standard EQ-VT (i.e., seven) is limiting in terms of the models we might seek to estimate using the resultant data. For example, if we are interested in preference heterogeneity of the DCE data, then only having a small amount of DCE data precludes reliable estimation of more sophisticated latent class, mixed logit or generalised multinomial logit models (Fiebig et al. 2010), particularly if we are concerned with estimating correlations.

One more novel valuation approach that is under consideration currently is the use of DCE as a stand-alone task, a concept which has been growing in popularity in the health preference literature more generally (Mulhern et al. 2019). There are a range of potential advantages of a stand-alone DCE. Most importantly, the task can be undertaken without an interviewer (hence reducing the cost significantly) (Mulhern et al. 2013). Further, as it does not require interviewer travel, a smoother geographical distribution of respondents can be achieved (assuming that the surveying approach is equally accessible across regions). However, if we are reliant on the DCE alone (rather than as a component of the EQ-VT alongside cTTO tasks), then there is a need for more than seven choice observations per person, particularly if we want to move beyond estimation of population mean preferences, which is useful if we want to identify population sub-groups with specific views. Further, there is a need to anchor the data so they can be used to populate cost-utility analysis, for instance through including one or more of a duration attribute, a ‘dead’ health state, or some other external anchor. Regarding design strategy for a stand-alone DCE, there has been particular focus on generator-type approaches and efficient designs. The relative merits of each have been widely discussed in the literature. For example, EuroQol-funded work has conducted a large DCE in Peru looking at different composite approaches to anchoring and design; these results have been reported as part of a larger study including cTTO and latent DCE tasks (Augustovski et al. 2020). Ongoing analysis of these data, and similar data collected in Denmark (Jensen et al. 2021), will explore whether there is clear enough evidence of superiority of one or the other design approach for this specific purpose, and then to identify a design (or design approach) which can be used across countries conducting such a valuation survey.

This chapter has described the design strategies that have been used in existing EQ-5D-5L valuation projects, and their relative advances on those used for the EQ-5D-3L. The designs have been selected to balance statistical efficiency with respondent ease (and hence data quality), and the current approach appears to reflect a good trade-off between the two, with good completion rates, precise model estimates, and face validity of the final value sets across a number of languages, countries and cultures. The approaches used to this point are flexible, and can be adapted to meet the challenge of novel valuation approaches which may become more prominent in future years, and give policy makers confidence that the valuation surveys have accurately captured the attitudes of the general public without bias.

Notes

- 1.

An orthogonal design satisfies the criterion that all severity levels and all severity level combinations are equally prevalent and therefore balanced.

References

Augustovski F, Belizán M, Gibbons L, Reyes N, Stolk E, Craig BM, Tejada RA (2020) Peruvian valuation of the EQ-5D-5L: a direct comparison of time trade-off and discrete choice experiments. Value Health 23(7):880–888

Dolan P (1997) Modeling valuations for EuroQol health states. Med Care 35(11):1095–1108

Fiebig DG, Keane MP, Louviere J, Wasi N (2010) The generalized multinomial logit model: accounting for scale and coefficient heterogeneity. Mark Sci 29(3):393–421

Hensher DA, Rose JM, Greene WH (2005) Applied choice analysis: a primer. Cambridge University Press, New York

Janssen BM, Oppe M, Versteegh MM, Stolk EA (2013) Introducing the composite time trade-off: a test of feasibility and face validity. Eur J Health Econ 14(Suppl 1):S5–S13

Jensen CE, Sørensen SS, Gudex C, Jensen MB, Pedersen KM, Ehlers LH (2021) The Danish EQ-5D-5L value set: a hybrid model using cTTO and DCE data. Appl Health Econ Health Policy. https://doi.org/10.1007/s40258-021-00639-3

Jyani G, Prinja S, Kar SS, Trivedi M, Patro B, Purba F, Pala S, Raman S, Sharma A, Jain S, Kaur M (2020) Valuing health-related quality of life among the Indian population: a protocol for the development of an EQ-5D value set for India using an extended design (DEVINE) study. BMJ Open 10(11):e039517. https://doi.org/10.1136/bmjopen-2020-039517

Krabbe PFM, Devlin NJ, Stolk EA, Shah KK, Oppe M, van Hout B, Quik EH, Pickard AS, Xie F (2014) Multinational evidence of the applicability and robustness of discrete choice modeling for deriving EQ-5D-5L health-state values. Med Care 52(11):935–943

Lamers LM, McDonnell J, Stalmeier PFM, Krabbe PFM, Busschbach JJ (2006) The Dutch tariff: results and arguments for an effective design for national EQ-5D valuation studies. Health Econ 15(10):1121–1132

Mulhern B, Longworth L, Brazier J, Rowen J, Rowen D, Bansback N, Devlin N, Tsuchiya A (2013) Binary choice health state valuation and mode of administration: head-to-head comparison of online and CAPI. Value Health 16(1):104–113

Mulhern B, Norman R, Street DJ, Viney R (2019) One method, many methodological choices: a structured review of discrete-choice experiments for health state valuation. PharmacoEconomics 37(1):29–43

Oppe M, van Hout B (2010) The optimal hybrid: Experimental design and modelling of a combination of TTO and DCE. In: Proceedings of the 27th Scientific Plenary Meeting of the EuroQol Group, Athens, Greece, September 2010. https://eq-5dpublications.euroqol.org/download?id=0_53738&fileId=54152. Accessed 26 June 2021

Oppe M, van Hout B (2017) The “power” of eliciting EQ-5D-5L values: the experimental design of the EQ-VT. EuroQol Working paper 17003. https://euroqol.org/wp-content/uploads/2016/10/EuroQol-Working-Paper-Series-Manuscript-17003-Mark-Oppe.pdf Accessed 12 July 2021

Oppe M, Oppe S, de Charro F (2013) Statistical uncertainty in TTO derived utility values. In: Oppe M. Mathematical approaches in economic evaluations. Dissertation, Erasmus University Rotterdam. https://repub.eur.nl/pub/41260. Accessed 26 Jun 2021

Oppe M, Devlin NJ, van Hout B, Krabbe PF, de Charro F (2014) A program of methodological research to arrive at the new international EQ-5D-5L valuation protocol. Value Health 17(4):445–453

Stolk EA, Oppe M, Scalone L, Krabbe PFM (2010) Discrete choice modeling for the quantification of health states: the case of the EQ-5D. Value Health 13(8):1005–1013

Tsuchiya A, Ikeda S, Ikegami N, Nishimura S, Sakai I, Fukuda T, Hamashima C, Hisashige A, Tamura M (2002) Estimating an EQ-5D population value set: the case of Japan. Health Econ 11(4):341–353

Wheeler RE (2004) AlgDesign: algorithmic experimental design. The R project for statistical computing. https://CRAN.R-project.org/package=AlgDesign. Accessed 26 Jun 2021

Yang Z, Luo N, Bonsel G, Busschbach J, Stolk E (2018) Selecting health states for EQ-5D-3L valuation studies: statistical considerations matter. Value Health 21(4):456–461

Yang Z, Luo N, Bonsel G, Busschbach J, Stolk E (2019a) Effect of health state sampling methods on model predictions of EQ-5D-5L values: small designs can suffice. Value Health 22(1):38–44

Yang Z, Luo N, Oppe M, Bonsel G, Busschbach J, Stolk E (2019b) Toward a smaller design for EQ-5D-5L valuation studies. Value Health 22(11):1295–1302

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Appendices

Appendices

3.1.1 Appendix A: Construction for Level Balance Optimisation Criterion

-

Step 1: A matrix (labelled “EQ lvl mat”) with the counts for each level-domain combination is constructed (note that the example tables below contain hypothetical data using ten EQ-5D-5L states for illustrative purposes):

MO | SC | UA | PD | AD | |

|---|---|---|---|---|---|

lvl 1 | 2 | 2 | 1 | 3 | 1 |

lvl 2 | 1 | 2 | 2 | 2 | 3 |

lvl 3 | 3 | 2 | 2 | 1 | 2 |

lvl 4 | 2 | 2 | 3 | 1 | 1 |

lvl 5 | 2 | 2 | 2 | 3 | 3 |

-

Step 2: Using the data from “EQ lvl mat” a second matrix, containing the squares of the differences between the presence of levels per dimension is created (labelled “lvl dist mat”):

MO | SC | UA | PD | AD | |

|---|---|---|---|---|---|

(lvl 1 - lvl 2)^2 | 1 | 0 | 1 | 1 | 4 |

(lvl 1 - lvl 3)^2 | 1 | 0 | 1 | 4 | 1 |

(lvl 1 - lvl 4)^2 | 0 | 0 | 4 | 4 | 0 |

(lvl 1 - lvl 5)^2 | 0 | 0 | 1 | 0 | 4 |

(lvl 2 - lvl 3)^2 | 4 | 0 | 0 | 1 | 1 |

(lvl 2 - lvl 4)^2 | 1 | 0 | 1 | 1 | 4 |

(lvl 2 - lvl 5)^2 | 1 | 0 | 0 | 1 | 0 |

(lvl 3 - lvl 4)^2 | 1 | 0 | 1 | 0 | 1 |

(lvl 3 - lvl 5)^2 | 1 | 0 | 0 | 4 | 1 |

(lvl 4 - lvl 5)^2 | 0 | 0 | 1 | 4 | 4 |

-

Step 3: The elements of “lvl dist mat” are summed and the square root is taken over the sum to obtain the optimisation parameter (labelled “lvl bal check”):

-

“lvl bal check” = square root ( sum ( “lvl dist mat” ) ) = 7.75

-

A value for “lvl bal check” = 0 indicates perfect level balance (i.e. each level-domain combination occurs twice).

-

A value for “lvl bal check” = 44.72 indicates the worst possible level balance: for each domain only 1 level is included. In this case “EQ lvl mat” contains one 10 and four 0’s for each domain; “lvl dist mat” contains four 100’s and six 0’s, and the sum of “lvl dist mat” = 2000, with a square root = 44.72.

-

Note that perfect level balance is not a requirement (and might actually be undesirable in some cases). Small deviations can be allowed by e.g., setting a maximum allowable value for “lvl bal check” and letting the algorithm sample designs until it finds one for which “lvl bal. check” is lower than this pre-set maximum.

-

3.1.2 Appendix B: The cTTO design of the EQ-VT

3.1.3 Appendix C: The DCE design of the EQ-VT

3.1.4 Appendix D: The Design Used in the Peruvian EQ-5D-5L Valuation Study

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this chapter

Cite this chapter

Oppe, M., Norman, R., Yang, Z., van Hout, B. (2022). Experimental Design for the Valuation of the EQ-5D-5L. In: Devlin, N., Roudijk, B., Ludwig, K. (eds) Value Sets for EQ-5D-5L. Springer, Cham. https://doi.org/10.1007/978-3-030-89289-0_3

Download citation

DOI: https://doi.org/10.1007/978-3-030-89289-0_3

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-89288-3

Online ISBN: 978-3-030-89289-0

eBook Packages: Biomedical and Life SciencesBiomedical and Life Sciences (R0)