Abstract

Simulation of AM products can capture a number of aspects. Apart from the traditional types of simulation of the end product, such as mechanical, thermal and fluid analyses, it is possible to simulate the AM build process. While simulating products intended for AM can sometimes be performed in exactly the same way as with products intended for traditional manufacturing, there are several aspects that may require a specialized workflow.

Simulation of AM products can capture a number of aspects. Apart from the traditional types of simulation of the end product, such as mechanical, thermal and fluid analyses, it is possible to simulate the AM build process. While simulating products intended for AM can sometimes be performed in exactly the same way as with products intended for traditional manufacturing, there are several aspects that may require a specialized workflow. In particular, the great benefit of AM in offering “complexity for free” is also a major challenge in simulating the part performance. This chapter reviews the various tools and methods for simulating AM products.

This chapter will be structured according to the basic workflow of setting up a simulation, that is, a geometry needs to be defined and most often discretized, material properties need to be defined, boundary conditions need to be applied, analysis settings need to be defined and results need to be interpreted, as illustrated in Fig. 5.1.

5.1 Simulation

5.1.1 Geometry Definition

The task of defining the geometry for analysis is obviously a part of the design process, which is dealt with in other chapters of this book, but some aspects are worth noting regarding simulation.

Simplification

A geometry intended for production is often not ideal for simulation. More often than not, simplification steps need to be taken to make it feasible to analyse the geometry. However, since many use cases of AM rely on exploiting “complexity for free”, it is not always straightforward to exclude complex features from the analysis. Traditionally, features such as non-structural rounds, lettering, screw threads, small holes etc. are eliminated from the geometry before analysis (as seen in Fig. 5.2). This can be done either in the original modelling software or in specialized pre-processing defeaturing software. This process is largely manual and often requires considerable effort if the model is complex. Moreover, it is important not to oversimplify the model by removing features that may initially look irrelevant to the performance of the product.

When dealing with models intended for AM, it may not even be possible to simplify away features such as lattice structures or organic shapes with many rounds. Although computing power is ever increasing, many simulations of highly complex lattice structures may not be currently feasible to run, at least not if several iterations of the design are expected. There are approaches, such as homogenization, where complex small-scale structures are replaced by a solid material that replicates the properties of the structure. These approaches, however, require additional work to characterize the material response and are not easily applicable when the small-scale structure varies in, for instance, lattice member diameter.

Facet-Based and Parametric Models

Traditionally, the geometry intended for simulation is represented in a parametric CAD format, such as STEP. Parametric formats represent curved surfaces as, for instance, NURBS (non-uniform rational basis splines), which can in turn be discretized into finite elements or volumes with varying density. As parametric models are represented by surfaces, it is straightforward to determine aspects such as curvature, which simplifies discretization and application of boundary conditions. Moreover, parametric models enable non-geometric data to be linked to the model, such as surface-ids and material data, which can help reduce some of the modelling effort when analysing several variants of the same basic concept.

However, within AM, it is common to have geometry represented as facetted polygon meshes in, for instance, the STL-format. As the facetted representation is already discretized and does not easily allow for the identification of surfaces such as planes and cylinders, facet-based models pose several challenges for simulation pre-processing. While there are some tools that simplify the selection of connected areas of triangles, these selections will typically only be valid for that particular mesh. If a slightly modified version of the model is to be analysed, all selections will have to be updated again manually.

There are several tools for transforming a facet-based representation into a parametric model, sometimes called reverse engineering tools. These tools analyse the facetted mesh to identify areas that can be represented as, for instance, cylindrical or planar surfaces and can fit NURBS geometry to the underlying facets. This makes discretizing and applying boundary conditions easier, but the process is computationally expensive, often requires manual adjustment and will need to be redone if the mesh is altered.

5.1.2 Discretization

For discretizing a facetted model without first translating it into a NURBS-representation, the main approach is to voxelise it. This process generates a number of volumetric elements, or voxels, approximating the facet-based model. The voxels are usually aligned with the coordinate system and may also be aligned to the face of the mesh, to better approximate the shape of the mesh, as shown in Fig. 5.3.

This is a robust method for making a facet-based model compatible with simulation and is less computationally expensive than re-interpreting the mesh as surfaces. Table 5.1 gives a summary of the advantages and disadvantages of the different methods.

If you are doing the analysis for validation and will not be analysing several iterations, re-interpreting the geometry is probably a good option. If you are analysing several concepts or will be iterating on the design, voxel-based meshing is probably a good option.

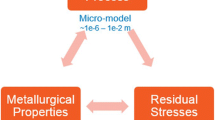
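
The axis-aligned voxelisation described in this section can be sketched in a few lines. This is only a minimal illustration under stated assumptions: the function names are hypothetical, the solid is given by a point-in-solid test (`inside`) rather than by an STL mesh, and the boundary-conforming voxels mentioned above are not covered. For a real facetted model the inside test would typically be a ray-casting routine, which is not shown.

```python
import math


def voxelise(inside, lower, upper, voxel_size):
    """Return the set of (i, j, k) voxel indices whose centre lies inside the solid.

    `inside` is a hypothetical point-in-solid test supplied by the caller.
    """
    counts = [max(1, math.ceil((hi - lo) / voxel_size))
              for lo, hi in zip(lower, upper)]
    filled = set()
    for i in range(counts[0]):
        for j in range(counts[1]):
            for k in range(counts[2]):
                # Voxel centre in model coordinates.
                centre = tuple(lo + (n + 0.5) * voxel_size
                               for lo, n in zip(lower, (i, j, k)))
                if inside(centre):
                    filled.add((i, j, k))
    return filled


def in_unit_sphere(p):
    # Stand-in geometry for the example: a sphere of radius 1 at the origin.
    return p[0] ** 2 + p[1] ** 2 + p[2] ** 2 <= 1.0


voxels = voxelise(in_unit_sphere, (-1.0, -1.0, -1.0), (1.0, 1.0, 1.0), 0.5)
voxel_volume = len(voxels) * 0.5 ** 3
exact_volume = 4.0 / 3.0 * math.pi
print(len(voxels), voxel_volume, round(exact_volume, 3))
```

With this deliberately coarse grid the voxel model under-estimates the sphere volume, which illustrates why the voxel size has to be chosen with the smallest relevant feature in mind.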

5.1.3 Material Properties

While many research teams are very actively characterizing the material properties of AM parts, it is still hard to know beforehand what material properties the finished part will have. This is in principle due to the large number of parameters that affect the part properties, such as type of energy source (e.g. electron or laser beam), energy density, scanning strategy, powder properties, heat treatment, part geometry, part orientation, etc. While build process simulation tools can take many of these factors into account on a small scale, it is not currently feasible to simulate an entire product in such detail. Instead, most simulations are based on estimated properties calibrated from printed samples. Moreover, factors such as yield and ultimate strength, fatigue life, surface roughness, and thermal and electrical conductivity can only be estimated and need to be tested to ensure reliable results. As AM is inherently anisotropic, the material properties also vary in the different directions and need to be tested individually. Moreover, many AM methods produce parts with considerable residual stresses, which may need to be accounted for through, for instance, build process simulation. The produced geometry will also differ from the CAD-model, not only due to the tolerances of the machine, but more importantly due to the residual stresses and deformations. This effect will be more or less pronounced depending on the production method, part geometry, support strategy and heat treatment. Part warpage can be compensated for by build process simulation.

5.1.4 Boundary Conditions

Applying boundary conditions to AM parts is, in principle, no different from parts intended for traditional manufacturing. The only new challenge is if the model is facet-based, and thus lacks information about underlying surfaces and volumes, as shown in Fig. 5.4. Depending on the type of analysis, it may be beneficial to convert the facet-based model into a parametric model using reverse-engineering tools such as SpaceClaim. Otherwise, the boundary conditions will have to be applied to individual elements after discretization. As this type of selection does not typically automatically update if the facet-based model is modified, applying boundary conditions for repeated analysis of similar designs can be time-consuming and is not easily automated.

5.1.5 Post-processing Results

Results need, as always, to be analysed with care. This is particularly true for AM products, which are often complex and contain many small-scale structures such as lattices. Stress concentrations are not always immediately obvious and mesh independence should, as always, be checked. However, due to the limitations of facet-based models in terms of resolving small features, it may not always be possible in practice to simulate the model as precisely as needed. Therefore, predictions about maximum stresses and fatigue life are not always accurate and should be validated through physical testing if the design is intended for critical applications. For concept design evaluation and comparison, however, the results should yield usable numbers.

5.2 AM Build Process Simulation

There are now several commercial vendors of build process simulation software. While they differ in several respects in terms of capabilities and material libraries, the basic principles and main features are similar. A build process simulation can take into account the layer-by-layer build process of a part including the machine-specific parameters, support material, heat treatment and the removal of support material (Fig. 5.5).

5.2.1 Geometry Definition

As geometry is commonly available in facetted formats, the simulation software supports both parametric and facet-based formats. To fully capture the effects of parameters such as build-plate heat conduction and the effects of surrounding loose powder, the part needs to be positioned in the same position and orientation as it will be built in. Apart from the product geometry, the geometry for the support material also needs to be defined or imported. Some software can automatically create support material based on the part geometry, but it is often more convenient to generate the support material geometry in a dedicated build preparation software.

5.2.2 Discretization

As models are often facet-based, voxel-based meshing methods are common for build-process simulation. This is a robust and convenient way of discretizing complex non-parametric models, but the amount of detailed control over the discretization is limited. Separate discretization settings for support and part geometries are usually possible, which helps reduce the computation time. As voxel-based discretization often has the same voxel size throughout the model, it may not be possible in practice to fully resolve small features such as lattice structures. Instead, a sort of homogenization of the small-scale features is possible in some software. Homogenization in this case means that the properties of each voxel are based on how much of its volume is filled by the actual geometry, as illustrated in Fig. 5.6. It is, however, important to make sure that the voxel size is chosen with respect to wall thicknesses and minimum feature sizes in the model.
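
The volume-fraction idea behind this kind of homogenization can be illustrated with a small sketch. It is not taken from any particular vendor: the function names are hypothetical, the fill fraction is estimated by sampling points inside the voxel, and the linear scaling of a property by the fill fraction is an assumption made purely for illustration (how a given solver maps fill fraction to properties is software-specific).

```python
def fill_fraction(inside, origin, voxel_size, samples_per_axis=4):
    """Estimate the fraction of a voxel's volume occupied by the geometry.

    `inside` is a hypothetical point-in-solid test; the voxel is sampled on a
    regular grid of samples_per_axis**3 points.
    """
    step = voxel_size / samples_per_axis
    hits = 0
    for i in range(samples_per_axis):
        for j in range(samples_per_axis):
            for k in range(samples_per_axis):
                point = (origin[0] + (i + 0.5) * step,
                         origin[1] + (j + 0.5) * step,
                         origin[2] + (k + 0.5) * step)
                if inside(point):
                    hits += 1
    return hits / samples_per_axis ** 3


def in_thin_wall(p):
    # Hypothetical feature: a wall 0.25 units thick, thinner than the voxel.
    return 0.0 <= p[0] <= 0.25


fraction = fill_fraction(in_thin_wall, (0.0, 0.0, 0.0), 1.0)

# Assumption for illustration only: scale a solid-material property linearly.
solid_modulus = 1.0  # normalised placeholder, not a measured value
effective_modulus = fraction * solid_modulus
print(fraction, effective_modulus)
```

The wall is thinner than the voxel, so it cannot be resolved geometrically, but it still contributes a quarter of the voxel's volume to the homogenized properties.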

5.2.3 Material Definition

The material properties are typically provided by the software vendor.

5.2.4 Build-Process Parameters

To fully represent the build process, the intended AM machine needs to be described. This is typically done by inputting information such as energy densities, scan speeds, layer heights and scan strategies. However, unless a full thermo-mechanical simulation of the beam path is done, other approaches for approximating the material behaviour during printing are needed. Typically, this is achieved by printing and measuring a set of calibration specimens, designed to capture information about the residual strains in the material in different directions, as shown in Fig. 5.7. This type of calibration data, however, is only valid for a particular set of build-process parameters, and the calibration thus needs to be repeated if, for instance, the laser power or scan strategy is altered. Using calibrated data like this allows several layers to be simulated in bulk, greatly reducing the computation time.

5.2.5 Post-processing

Commonly available results include stresses, strains and deformations, but most software can also display plots of the likelihood of part failure, the likelihood of recoater impact, and missing layers due to deformation of the part during printing. Additionally, it is common to be able to export a deformation-compensated version of the original model, where the predicted deformations of the part due to the build process are used to compensate the geometry in the opposite direction. As there is no direct relationship between the deformations and the correct compensation, several iterations of simulation, compensation and re-simulation may be needed to reach a geometry that is sufficiently close to the original model to meet the dimensional specifications.
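
The simulate, compensate and re-simulate loop can be sketched as follows. This is a toy illustration, not the method of any specific software: `simulate_build` is a hypothetical one-dimensional stand-in for a build-process simulation, its distortion law and the dimensions are invented for the example, and real tools operate on full 3D meshes.

```python
def simulate_build(x):
    """Hypothetical stand-in for a build simulation: as-built size in mm."""
    return 0.98 * x + 0.0005 * x * x


def compensate(nominal, tolerance=1e-3, max_iterations=20):
    """Pre-distort the geometry until the simulated result matches nominal."""
    compensated = list(nominal)
    for iteration in range(1, max_iterations + 1):
        built = [simulate_build(x) for x in compensated]
        errors = [b - n for b, n in zip(built, nominal)]
        if max(abs(e) for e in errors) < tolerance:
            return compensated, iteration
        # Shift the input geometry opposite to the predicted deviation.
        compensated = [c - e for c, e in zip(compensated, errors)]
    raise RuntimeError("compensation did not converge")


nominal = [10.0, 25.0, 50.0]  # illustrative target dimensions in mm
compensated, iterations = compensate(nominal)
residual = max(abs(simulate_build(c) - n)
               for c, n in zip(compensated, nominal))
print(compensated, iterations, residual)
```

Because the distortion depends on the geometry that is actually built, a single correction is not enough here; the loop needs more than one pass before the simulated part falls within tolerance.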

5.2.6 Limitations

While build-process simulation is becoming more common and its accuracy and computational efficiency are increasing, it is still best used for a rough estimate of problems that may arise during printing and to rule out completely unfeasible build orientations or designs.

5.3 Optimization

As with any design, optimizing the part performance is crucial. Design optimization can be done in a number of ways, such as size optimization and topology optimization. Size optimization is often done on parametric models to fine-tune dimensions and is a relatively well-established field with many commercial software options. While size optimization is a very general method that can be applied to almost any optimization problem where constraints and objectives can be quantified, it quickly becomes unfeasible as more parameters are added. On the other hand, topology optimization can quickly indicate where material should be added or removed from a design to optimize its performance. However, topology optimization is limited in the types of constraints and objectives that can be handled and is thus not as general as size optimization.

5.3.1 Topology Optimization

Methods for topology optimization (TO) started being developed around the same time as additive manufacturing. Due to the organic and often quite complex shapes produced through topology optimization, the usefulness of the approach for products intended for traditional manufacturing methods has been limited, but with AM the number of feasible applications has grown. The number of commercial software packages intended for TO has grown quickly in recent years, as has the number of applications. At its core, topology optimization is used to minimize or redistribute material within a design space according to certain constraints and objectives, such as minimizing the compliance or deflection of the part. While TO has mainly been used for structural applications where light weight is of importance, other types of physics are possible (e.g. thermal, fluid flow, eigenfrequencies). Topology optimization for AM is not limited to applications where the end product needs to be as light as possible, for instance within the aerospace industry, but is also beneficial for reducing the print cost and production time.

TO can largely be divided into 8 steps.

1. Define design space
2. Define non-design space
3. Define boundary conditions
4. Define constraints and objectives
5. Define optimization settings
6. Solve
7. Interpret the results
8. Validate

5.3.2 Define Design Space

The design space is the volume within which the TO algorithm can redistribute material. In order to give the optimization algorithm the best possible starting point, the design space should be as large as possible. An overly constrained design space will not allow the algorithm to find the optimal load paths, as illustrated in Fig. 5.8. A good starting point can be to look at the interfaces to other components that need to be respected. It is also beneficial to reduce the complexity of the design space as much as possible, that is, to remove unnecessary details like small rounds, threads, decorative elements or holes. Another factor in determining the design space is the build size limitation of the intended machine. As with any optimization decision, iteration may be necessary to find the optimal design space if the results indicate that the optimal load-carrying path exists outside of the design space.

5.3.3 Define Non-design Space

As surfaces where the product interfaces with other parts, such as fasteners or bearings, should remain unaltered by the optimization, these should be marked as non-design space. While it sometimes is possible to simply exclude them entirely from the design space, it is often convenient to be able to apply boundary conditions on the non-design spaces, as shown in Fig. 5.9.

5.3.4 Define Boundary Conditions

As with any analysis, the boundary conditions need to be chosen with care. They should represent the real loading of the part as closely as possible. It is easy to, for instance, add a constraint that will take up forces in all directions, whereas in reality the support might only take up forces in compression. However, many topology optimization packages are limited to conducting linear analyses, where, for instance, compression-only supports may not be available. In those cases, special care needs to be taken during validation to ensure that the supporting area is sufficient for the loads.

As most structures will be subject to several different loads during operation, it is important to make sure that all major loads that may act on the part are represented. If one is missing, the optimization will typically recommend a design that is weak in that direction. A good strategy may be to add a new load case for each force acting on the structure.

5.3.5 Define Constraints and Objectives

There are a number of possible constraints and objectives that may be set up for TO. The most commonly available objectives include minimizing the compliance (i.e., maximizing the stiffness) and minimizing the mass. These objectives will obviously not generate useful designs by themselves and need to be coupled to constraints. For instance, minimizing the compliance is often linked to a constraint on the percentage of the volume that should be retained. Minimizing the mass is often linked to a constraint on the stresses or deformation of the part. As knowing beforehand what the optimal design should weigh is impossible, it is recommended to experiment with several percentages. It is also worth mentioning that stresses calculated during TO are only rough estimates, and do not necessarily correspond to the results calculated during validation. The topology optimization can remove or change the mechanical properties of individual elements, which makes it difficult to accurately predict the stresses. Moreover, the mesh density that is suitable for TO is not necessarily suitable for stress calculations. While research is being done into how to get more accurate stress values, it is recommended to add additional constraints to the optimization, such as deflection, to get more robust results.
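
For reference, the pairing of a compliance objective with a volume constraint is commonly written in the following density-based form. This is a generic textbook-style statement, not the formulation of any particular software, and all symbols are introduced here as assumptions: $\rho_e$ is the relative density of element $e$, $v_e$ its volume, $V_0$ the volume of the design space, $f$ the retained volume fraction, $\mathbf{K}$ the stiffness matrix, $\mathbf{U}$ the displacement vector and $\mathbf{F}$ the load vector.

```latex
\begin{aligned}
\min_{\boldsymbol{\rho}} \quad & c(\boldsymbol{\rho}) = \mathbf{F}^{\mathsf{T}} \mathbf{U}(\boldsymbol{\rho}) \\
\text{subject to} \quad & \mathbf{K}(\boldsymbol{\rho})\, \mathbf{U} = \mathbf{F}, \\
& \sum_{e=1}^{N} \rho_e v_e \le f\, V_0, \\
& 0 < \rho_{\min} \le \rho_e \le 1, \qquad e = 1, \dots, N.
\end{aligned}
```

Experimenting with several percentages, as recommended above, corresponds to re-solving this problem for different values of $f$.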

Apart from structural constraints, many software vendors have added support for taking AM-specific manufacturing constraints into account. One can, for instance, reduce the need for support material by adding an overhang constraint, which limits the number of surfaces with an angle below the defined support angle relative to the build direction. As the build direction may not initially be known, however, it may be beneficial to run optimizations in several build directions to evaluate the difference in build height, amount of support material, and part performance. It is common for the overhang constraint to conflict with the structural objectives, and thus there is often a need to compromise between the amount of support material and the part performance, depending on whether cost or performance is more important.
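
The geometric test behind an overhang constraint can be sketched for a single facet. This is a simplified illustration with hypothetical names: the inclination is assumed to be measured from the build plate, and the 45 degree default is an assumed placeholder rather than a value from any machine or software. Commercial tools apply comparable checks inside the optimization itself, which is not shown here.

```python
import math


def needs_support(normal, build_direction, support_angle_deg=45.0):
    """Return True if a facet is a downward-facing overhang below the support angle.

    Inclination is measured from the build plate: 0 degrees is a horizontal
    downward-facing surface, 90 degrees is a vertical wall.
    """
    n_len = math.sqrt(sum(c * c for c in normal))
    b_len = math.sqrt(sum(c * c for c in build_direction))
    cos_nb = sum(n * b for n, b in zip(normal, build_direction)) / (n_len * b_len)
    if cos_nb >= 0.0:
        return False  # facet faces sideways or upwards
    inclination = math.degrees(math.acos(min(1.0, -cos_nb)))
    return inclination < support_angle_deg


build_z = (0.0, 0.0, 1.0)
shallow = (math.sin(math.radians(30)), 0.0, -math.cos(math.radians(30)))
steep = (math.sin(math.radians(60)), 0.0, -math.cos(math.radians(60)))

print(needs_support((0.0, 0.0, -1.0), build_z))  # flat downward-facing surface
print(needs_support(shallow, build_z))           # 30 degrees from the plate
print(needs_support(steep, build_z))             # 60 degrees from the plate
print(needs_support((1.0, 0.0, 0.0), build_z))   # vertical wall
```

Re-running the same check with a different `build_direction` changes which facets are flagged, which is why the choice of build direction conditions the optimized result.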

5.3.6 Define Optimization Settings

Most software will allow the user to define parameters such as mesh density and minimum feature size. The mesh size affects the level of detail that the resulting geometry will have, and of course the computation time. In theory, the finer the mesh, the more optimal the results (as for instance the Michell structures shown in Fig. 5.10). However, computational limits and production constraints will typically mean that the mesh size is fairly coarse compared to traditional FEA meshes. This also means that traditional TO is not suitable for generating typical lattice structures, and other approaches are needed for generating them.

Fig. 5.10 A Michell structure (Courtesy of Arek Mazurek). https://commons.wikimedia.org/wiki/File:MichellCantilever.jpg

5.3.7 Solve

Solution time will go up with the number of load cases and the mesh density. While reducing the number of load cases to reduce the computational time may be tempting, doing so will yield results that are not useful. Reducing the number of elements, on the other hand, will not typically affect the overall distribution of material, but will only have local effects. For initial optimization of concepts, it is therefore recommended to adjust the number of elements.

5.3.8 Interpret the Results

The results from a topology optimization are most commonly in the form of a mesh-based model, although there are a number of experimental methods that aim to make the process from optimization to manufacturing more streamlined. While it could be possible to directly print this part, doing so is often not preferred, as the mesh from the TO is quite coarse, may have many stress concentrations, and may not fit well with interfacing components. Due to this, there is a need to remodel the mesh-based result to make it suitable for manufacturing and use. There are three main approaches for this, as illustrated in Fig. 5.11:

1. Mesh-based smoothing
2. NURBS interpretation
3. Manual re-design

Mesh-Based Smoothing

There are several tools for smoothing the facet-based result and many smoothing algorithms. While this may produce satisfactory results for one-off prints, the facet-based model will not have any link to the initial CAD model, and it will be difficult and time consuming to do parametric changes to it, such as increasing dimensions.

NURBS Interpretation

There are several tools for reverse engineering a facet-based model into a parametric model, as discussed earlier. Some require manual input, and some are more automated, but all will require some amount of manual manipulation to get good results. Although these tools generate parametric models, the complexity of them is often very high, with few planar or cylindrical/spherical surfaces. Moreover, the model is “dead” and there is no information about the features or design intent. This makes it difficult and time-consuming to continue working with the model in standard CAD-packages, and the models are not easily parametrically controllable, if changes to the dimensions need to be done or if one wants to conduct a size optimization.

Manual Re-design

This method is obviously the most time-consuming, but also generates a model with high parametric controllability and design intent. If the model is to be further adapted for manufacturing or optimization, manual re-design is usually the most straight-forward option.

5.3.9 Validate

A design validation always needs to be done on the reinterpreted model as the results reported during the TO are not usually very accurate.

5.3.10 Topologic Design with Altair Software

1. Software Selection

Once the initial geometry evaluation for PBF-EB/M technology is completed, it is time to optimize the geometry using the computer tools. The software selected is Altair Inspire and the main reasons for this selection are explained below:

- It is an easy tool to navigate, with a very intuitive interface.
- It is useful for obtaining a first approximation of the optimized shape. The optimization process is complex, and the model needs to be able to evolve through different phases, taking diverse factors into account.
- It gives fast results, avoiding the difficulties of selecting element types and meshing. As will be seen, this is both an advantage and a handicap when there are problems associated with the automatic mesh.

Figure 5.12 shows the workflow of Altair Inspire: geometry setup, load case assignment, analysis and optimization, results evaluation and CAD model translation.

Fig. 5.12 Workflow of Altair Inspire (https://www.altair.com/inspire/) (Courtesy of Altair)

2. Geometry

First of all, it is important to learn the tools available for creating and modifying models. It is crucial to develop the initial geometry and widen the software's optimization options. Generally, all the space available inside the maximum volume of the part should be included in the optimization process unless there are technical reasons not to, such as mounting space or interference with other parts in normal operation (Fig. 5.13).

Fig. 5.13 Altair Inspire geometry tools (https://www.altair.com/inspire/) (Courtesy of Altair)

Simplifications are also required with these kinds of tools. To speed up the calculations, it is recommended to delete all small fillets, rounds or chamfers that do not contribute to the structural behaviour. Care should be taken not to introduce simplifications that cause significant errors in the results. Nonetheless, the final optimized shape should be validated afterwards through a new analysis.

Linked to the optimization process and the software layout, a distinction between design parts and non-design parts is required. All parts that carry loads or constraints must therefore be defined as non-design parts. To meet this requirement, the user should adapt the model using the geometry tools.

3. Load Cases Setup

After completing the geometry modifications, the load cases that drive the optimization must be set up. The typical tools for applying loads such as forces, pressures or torques, and constraints in any degree of freedom, can be easily found and applied in this software (Fig. 5.14), as in many other CAE packages. Furthermore, in most cases, it is also necessary to set fasteners, joints or connectors to bond the parts.

Fig. 5.14 Altair Inspire case setup tools (https://www.altair.com/inspire/) (Courtesy of Altair)

Other less usual conditions that the selected software (Altair Inspire) allows us to add are imposed displacements, accelerations, temperature conditions and concentrated masses.

Contact definition between parts can also be found in this section and it offers us the possibility to define the faces in contact as bonded, contacting or without contact.

In this section, the material properties of the components also need to be set. The more accurate these properties are, the more trustworthy the results will be. However, it is also important to know that this software does not allow anisotropic materials to be defined, which is a significant deficiency because additively manufactured materials normally exhibit this behaviour. The way to compensate for this deficiency and ensure safety is to use the weaker properties; that is, the structural problem is solved with an isotropic material. Again, it should be mentioned that this optimization is a first stage and the final design will need to be validated.

4. Analysis/Optimization

On the one hand, an option to perform structural analysis is included. Before the analysis can be launched, the user has to set the analysis parameters. The software chosen exposes only a few parameters that can be modified: mesh size, contact type and accuracy. This reflects the software's emphasis on simplicity and ease of navigation.

The analysis can be run before and after the optimization. It is good to run it prior to the optimization to learn the stress level or the model behaviour, which helps the user set the parameters in the optimization setup. The analysis is performed after the optimization to check the proposed shape under the different load cases. However, this second analysis can display high levels of stress concentrated at specific points because the raw model shape has imperfections that will be removed in the final model.

On the other hand, the optimization analysis offers different approaches depending on the objective. It can maximize the stiffness or minimize the mass. For the stiffness maximization, the program needs the mass target. For the mass minimization, the safety factor is required.

All of these analyses require the parameters of mesh size (defined as thickness constraints), level of accuracy (faster/more accurate) and contact assumption (sliding only/with separation). In case of error, the user can modify the mesh size. Reducing it can help avoid meshing problems due to geometry imperfections. An alternative is to check and correct the geometry imperfections, which avoids the additional time that a smaller mesh size would require.

Finally, the user can select what load cases are used in the optimization. It is not possible to assign different weights to each load case directly. Different weight assignments need to be made through new load cases modifying the load.
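
One way to reason about how much to scale a load in order to emulate a weight is sketched below. It rests on two assumptions that are not confirmed for this software: that the analysis is linear static, and that the optimizer aggregates the compliances of the selected load cases as a plain sum. Under those assumptions compliance grows with the square of the load, so a weight w corresponds to scaling the load by the square root of w. The single-spring model and all numbers are illustrative only.

```python
import math


def compliance(force, stiffness):
    """Compliance F*u of a single linear spring, with u = F / k."""
    return force * force / stiffness


stiffness = 200.0  # illustrative value
force = 50.0       # illustrative value
weight = 4.0       # desired relative importance of this load case

# New load case that emulates the weight by modifying the load.
scaled_force = math.sqrt(weight) * force

weighted = weight * compliance(force, stiffness)
emulated = compliance(scaled_force, stiffness)
print(weighted, emulated)
```

If the optimization instead targets minimum mass with a safety factor, stresses scale linearly with the load, so the same reasoning does not carry over and the scaled load case changes the design requirement itself.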

5. Results/Comparison

The results should be evaluated visually first. Some cases can give incomplete shapes with reinforcing linking elements that are not fully developed. If this happens, there are two options: (1) the user can modify the result directly, increasing the mass with a slider tool included in the software, or (2) the optimization can be recalculated, increasing the level of mass or the safety factor.

The junction between design and non-design spaces is usually a weak point and also needs to be evaluated to decide whether it meets the structural requirements. These issues can be noted as difficulties; even if they are accepted, they should be checked in the next phases (Fig. 5.15).

Fig. 5.15 Optimization issues (https://community.altair.com/community) (Courtesy of Altair)

The next step is to establish the goals and to select the results that allow the evaluation and comparison in the different optimized models. The most used results are the stress level, the safety factor and the displacement. The software has utilities to assist us in the comparison process, by displaying the selected outcomes in every optimization case (Fig. 5.16).

Fig. 5.16 Comparison results (https://community.altair.com/community) (Courtesy of Altair)

The user has to be aware that models include the imperfections generated in the optimization process and that the surface roughness is not considered. These two points can lead to an overestimation of the model in fatigue cases. This is a common issue in PBF-EB/M technologies because they have considerable surface roughness. A validation check is strongly suggested to be carried out considering this factor after the optimization.

6. Conversion From Optimized Result to Buildable Geometry Model

The conversion of the selected optimized model to a buildable geometry model can be done in the proposed software by three means:

- Save as an STL model and design it in the CAD software.

Normally, it is not possible to save the optimized model directly as a parametric model. Therefore, it can be saved as an STL model, which can then be processed in the CAD software. The user can build a smooth and buildable model following the shape of the STL model.

- Use the PolyNURBS tools to obtain a Parasolid model.

The software used (Altair Inspire) includes the option to build a parametric model directly. It does not offer the usual tools of CAD software, and sometimes the process can be time-consuming and does not offer the same freedom to modify the model. However, this option is recommended if you do not have other software or if the model is small and simple.

- Use the automatic adjustment.

The new version of this software offers the option to automatically fit a PolyNURBS model to the resulting shape. It is fast and produces a buildable parametric model. However, it sometimes creates a large number of faces to adapt to the initial shape. This can make the model difficult to manage or modify, but it saves time in producing the initial adaptation (Fig. 5.17).

Fig. 5.17 Automatic adjustment tool (https://community.altair.com/community) (Courtesy of Altair)

7. Key Observations

The use of the proposed software offers a good approximation of an optimized structure in a reasonable time. However, depending on the use, the final design may need to pass an additional structural analysis.

The user should take into account that AM may leave considerable surface roughness and that fatigue, where it is relevant, is not considered in this optimization process.

There are multiple ways to set up an optimization, and it can be difficult to manage and evaluate all the results. It is recommended to define the measurable factors used for the comparison first.

The tools for adapting the optimized shape to a CAD model are being improved. Nonetheless, the adaptation may still be complex when the user tries to transfer the model between different software packages.

Lattice structures can help lighten models intended for SLS, but they may make the models more difficult to handle in the CAD software and on the 3D printer.

5.3.11 Topologic Design with Altair Software for PBF-EB/M

As PBF-EB/M is a technology that needs supports, the software has a specific tool to set a limit and optimize the geometry for this manufacturing process. The tool, called Overhang shape control in Altair Inspire, is applied if the model exceeds the slope limit measured from the powder bed. It allows users to set a maximum angle from the baseline prior to the analysis. However, this requires a decision on the building direction, and this decision will condition the result (Fig. 5.18).

Fig. 5.18 Altair Inspire overhang tool (https://www.altair.com/inspire/) (Courtesy of Altair)
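
To illustrate what a slope limit relative to the build direction means geometrically, the following sketch flags downward-facing triangles of a mesh whose inclination from the build plate is below a chosen limit. It is a generic geometric check under stated assumptions, not the algorithm used by Altair Inspire; the function names and the 45° default are hypothetical.

```python
import math


def unit_normal(a, b, c):
    """Unit normal of triangle (a, b, c) using the right-hand rule."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    length = math.sqrt(sum(x * x for x in n))
    if length == 0.0:
        raise ValueError("degenerate triangle")
    return [x / length for x in n]


def overhang_angle_deg(triangle, build_dir=(0.0, 0.0, 1.0)):
    """Inclination of a downward-facing triangle from the build plate.

    Returns None for faces that point upwards or are vertical.
    0 degrees is a flat ceiling, values near 90 degrees are almost vertical.
    """
    n = unit_normal(*triangle)
    b_len = math.sqrt(sum(x * x for x in build_dir))
    d = sum(n[i] * build_dir[i] for i in range(3)) / b_len
    if d >= 0.0:
        return None
    return math.degrees(math.acos(min(1.0, -d)))


def needs_support(triangle, min_angle_deg=45.0, build_dir=(0.0, 0.0, 1.0)):
    # Assumed rule: downward faces flatter than the limit need support.
    angle = overhang_angle_deg(triangle, build_dir)
    return angle is not None and angle < min_angle_deg


ceiling = ((0.0, 0.0, 1.0), (0.0, 1.0, 1.0), (1.0, 0.0, 1.0))
print(needs_support(ceiling))  # a flat downward-facing face
```

Changing `build_dir` changes which faces are flagged, which is why the building direction has to be decided before the analysis.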

This tool is very useful for PBF-EB/M because the use of supports can be avoided. Its main limitations are that the user has to define the building direction and that, once set, the tool will not consider the use of supports, which can entail a heavier result. If one of the main goals is mass reduction, this option will probably not be the appropriate approach: in that case, material is better spent on building supports than on reinforcing the model.

A suggested workflow to make the best use of this tool is to try each part with and without the tool and subsequently check whether adding supports uses less material.
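
The check suggested above amounts to comparing two material totals. A minimal sketch, assuming the masses of both optimization results and an estimate of the support mass are available (the function name and the example values are hypothetical):

```python
def compare_strategies(mass_with_overhang_control_g: float,
                       mass_without_control_g: float,
                       support_mass_g: float) -> str:
    """Return the option that consumes less material (all inputs in grams)."""
    # Without overhang control, the supports have to be printed as well.
    material_with_supports = mass_without_control_g + support_mass_g
    if mass_with_overhang_control_g <= material_with_supports:
        return "overhang control"
    return "supports"


# Hypothetical masses: the self-supporting result is heavier than the
# unconstrained result plus its supports.
print(compare_strategies(120.0, 90.0, 20.0))
```

Note that this compares material consumption only; the supports are removed after the build, so the mass of the finished part is a separate criterion.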

Example 1. Topological optimization of a demo bracket for Ti6Al4V processed by PBF-EB/M

This is an example of topological optimization for a structural AM part suitable for the aeronautical sector. Figure 5.19 shows the original bracket, the optimization result and the PolyNURBS geometry.

Different options of topological optimization are represented in Fig. 5.20.

5.3.12 Topologic Design with Altair Software for PBF-LB/P (SLS)

Since PBF-LB/P technologies do not need supports in the building process, they offer flexibility with regard to the model shape. The only shape restriction is to provide the openings required for powder removal. The available utility for creating lattice structures can be of interest for this type of manufacturing technology because it can lighten the model even further.

The process of optimization with lattice geometries follows the same premises as described above, but adds a second optimization that uses the model generated by the first result. Apart from the objective and contact parameters, the user has to set the percentage of lattice structure, the target length of the bar elements and the minimum and maximum values of the bar elements’ diameter. The software then provides a result in which the base material is substituted by bar elements according to the given lattice structure percentage (Fig. 5.21).

Fig. 5.21 Altair Inspire lattice optimization (https://community.altair.com/community) (Courtesy of Altair)

On the other hand, lattice structures with too many bars may be a problem for the CAD software or for file management on the 3D printer.

As a reminder, the user has to be aware that the results do not take the surface roughness into account in the case of fatigue loads. Therefore, the model needs to be validated properly.
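
The lattice settings listed above (lattice percentage, target bar length, minimum and maximum bar diameter) can be collected and sanity-checked before an optimization is started. The sketch below is only an illustration of such a record; the class and field names are hypothetical and do not correspond to the Altair Inspire interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LatticeSettings:
    """User inputs for a lattice optimization (hypothetical names)."""
    lattice_percentage: float      # share of material replaced by lattice, in %
    target_bar_length_mm: float
    min_bar_diameter_mm: float
    max_bar_diameter_mm: float

    def __post_init__(self):
        if not 0.0 < self.lattice_percentage <= 100.0:
            raise ValueError("lattice percentage must be in (0, 100]")
        if self.target_bar_length_mm <= 0.0:
            raise ValueError("target bar length must be positive")
        if not 0.0 < self.min_bar_diameter_mm <= self.max_bar_diameter_mm:
            raise ValueError("bar diameters must satisfy 0 < min <= max")


# Example values are placeholders, not recommendations.
settings = LatticeSettings(lattice_percentage=50.0, target_bar_length_mm=5.0,
                           min_bar_diameter_mm=0.5, max_bar_diameter_mm=2.0)
print(settings)
```

Rejecting inconsistent inputs early avoids spending solver time on a lattice that could not be built or exported anyway.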

Example 1. Topological optimization of a demo bracket for PA12 processed by PBF-LB/P (SLS)

This is the same example as in the PBF-EB/M case. Figure 5.22 shows the original bracket volume together with three optimization results, which achieve a volume reduction of up to 87.62 %.

Optimized AM parts processed in polyamide by PBF-LB/P technology can be observed in Fig. 5.23. After production, mechanical tests are performed to evaluate the mechanical behaviour.

5.4 Lattice-Based Topology Optimization

As traditional TO is typically not suitable for generating very fine structures with high complexity, hybrid methods have been developed. There are different approaches: some create a free lattice structure from the underlying geometry, and some rely on a cell-based approach. The cell-based approaches first determine an optimal pseudo-density field within the design space, and a lattice geometry is then generated based on this density field. The general workflow is to define the lattice type, define the cell size, define the wall/strut thickness, define the minimum/maximum density, solve, interpret the results and validate.

5.4.1 Lattice Type

The lattice type can range from simple lattices like cubic trusses to more complex shapes like gyroidal or other triply periodic minimal surfaces. The lattice shape should be chosen with respect to the type of loading the part will be subject to, and with respect to printability and build orientation. One should be especially careful to avoid lattice types which require support material in larger cell sizes.

5.4.2 Define the Cell Size

The cell size will determine the dimensions of each lattice cell. While small cell sizes may be useful for creating self-supporting structures, larger cell sizes will be computationally more efficient to generate and validate. Moreover, large cell sizes may be beneficial for getting loose powder out after printing.

5.4.3 Define the Shell Thickness

As many applications require a smooth outer surface, it is usually possible to define a shell thickness of the geometry where the lattice structure is excluded. The choice of thickness depends on the application, but in order for the lattice structure to have any effect, it should be chosen as small as possible.

5.4.4 Define the Minimum/Maximum Density

The density will affect how thick the walls/struts in each lattice cell are. As a very low density may result in non-buildable wall thicknesses, it is important to set a minimum density requirement. Exactly what the lower limit should be depends on the machine and on the software, so some experimentation may be required. Additionally, a very high density will lead to lattice cells with very small openings, which, in turn, make powder removal difficult. Therefore, a maximum density should also be set. A good starting point may be 20% minimum density and 80% maximum density.
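
The effect of the density limits on strut thickness can be sketched for one simple case. The example below assumes a simple cubic truss cell with circular struts along the cell edges and uses the thin-strut approximation ρ ≈ 3·(π·d²/4)·L / L³, which ignores the material overlap at the nodes and therefore becomes inaccurate at high densities. The function names are hypothetical; the 20% and 80% defaults are the starting values suggested above.

```python
import math


def clamp_density(density, min_density=0.20, max_density=0.80):
    """Limit a pseudo-density to the buildable range."""
    return max(min_density, min(max_density, density))


def strut_diameter(cell_size_mm, density, min_density=0.20, max_density=0.80):
    """Approximate strut diameter of a simple cubic cell for a given density.

    Thin-strut approximation: each cell holds three strut lengths L of
    diameter d, so density ~= 3 * (pi * d**2 / 4) * L / L**3.
    """
    rho = clamp_density(density, min_density, max_density)
    return cell_size_mm * math.sqrt(4.0 * rho / (3.0 * math.pi))


# Densities below the minimum are raised to it, so very thin struts are avoided.
for rho in (0.05, 0.20, 0.50, 0.95):
    print(rho, round(strut_diameter(10.0, rho), 2))
```

For other lattice types, such as gyroids, the relation between density and wall thickness is different, so the printed values should only be read as an order-of-magnitude check against the minimum wall thickness of the machine.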

5.4.5 Interpret the Results

The result of the optimization is either a geometry or a density field, but there will typically be built-in tools for converting the density field into a facet-based model. As the lattice structure itself should not need any further manipulation, it does not need to be converted into a parametric format. Depending on the software and the output lattice structure, some form of mesh smoothing may be beneficial to reduce stress concentrations.

5.4.6 Validate

Validation of lattice structures is difficult as the complexity is very high, the models are typically facet-based, and depending on the lattice shape, there could be many stress concentrations (Fig. 5.24). Moreover, as lattice structures do not lend themselves to easy post-processing, the surface finish can be rough and detrimental to fatigue life. Therefore, the use of lattice structures in critical applications, especially where cyclic loading will occur, is not recommended without physical validation.

Fig. 5.24 Stress concentrations in lattice-based design (https://community.altair.com/community). (Courtesy of Axel Nordin)

5.5 Non-parametric Mesh Modelling

Non-parametric modelling normally starts from the 3D data obtained from a scanned object. A laser scans an object in three dimensions. The result is points in space, or rather a “point cloud”. This point cloud is triangulated into a mesh before the non-parametric modelling starts. Common formats are OBJ (object) and STL (stereolithography). The mesh may later be cleaned, repaired and altered according to the operator's demands. The scanners that are the easiest and fastest to operate are normally those intended for the consumer market. Some of these are handheld and can, for example, be attached to an iPad (see Fig. 5.25). Football-sized objects can easily be scanned by turning the object by hand or by simply walking around the object. The disadvantage of these scanners is their low scanning precision, which in practice is at best ± 2 mm. High-end handheld scanners operate in a similar fashion but with higher precision; however, they are more complex to operate and the entry-level price is about 7000 €.

The number of different types of scanners on the market is immense; they may scan everything from wear and tear on small mechanical parts to the topography of entire landscapes. Another type of scanner is the kind that is not handheld. What these all have in common is that the scanning unit is fixed or mounted on an axis controlled by, for example, a stepper motor. These scanners can offer precision of up to about ± 0.01 mm depending on the circumstances. Some of these scanners have the appearance of a box with a door and, at the bottom, a rotating plate on which the object to be scanned is placed. Naturally, they come in different sizes. One drawback is that they need to be rather large if one, for example, intends to scan a human head or a human limb for later prosthesis manufacture.

Non-parametric manipulation of a mesh lets the user change the density of the mesh according to his or her demands. Ideally, the mesh is made as light as possible, with as few vertices and triangles as possible, without jeopardizing the description of the surface at hand. The minimum number of triangles needed to describe a perfectly flat rectangular surface is two. Doubly curved surfaces require a far greater number of triangles, and hence a denser and more complex mesh, to be described with sufficient accuracy. Non-parametric mesh modelling will, however, let a user increase or reduce the complexity of the mesh locally according to the requirements on surface accuracy.
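
Why curved surfaces need many more facets than flat ones can be illustrated with a two-dimensional analogy: approximating a circle of radius r by n straight segments leaves a maximum gap (chord error) of e = r·(1 − cos(π/n)) between the segments and the true curve. The sketch below, with hypothetical function names and example values, inverts this relation to estimate how many segments a given tolerance requires.

```python
import math


def chord_error(radius_mm, segments):
    """Largest gap between a circle and its inscribed regular polygon."""
    return radius_mm * (1.0 - math.cos(math.pi / segments))


def segments_for_tolerance(radius_mm, tolerance_mm):
    """Smallest number of straight segments that keeps the gap within tolerance."""
    if radius_mm <= 0.0 or tolerance_mm <= 0.0:
        raise ValueError("radius and tolerance must be positive")
    if tolerance_mm >= radius_mm:
        return 3  # any closed polygon is within tolerance
    n = math.ceil(math.pi / math.acos(1.0 - tolerance_mm / radius_mm))
    return max(3, n)


# A tighter tolerance quickly increases the segment count.
for tol in (1.0, 0.1, 0.01):
    print(tol, segments_for_tolerance(50.0, tol))
```

On a doubly curved surface the same effect applies in two directions at once, so the triangle count grows roughly with the square of the segment count, which is why local refinement only where accuracy is needed keeps the mesh light.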

Normally, when an object has been scanned, or a CAD file has been saved in a format for printing, some of the information is lost. The resulting mesh is no longer identical to the fully defined measurements of the product and its original CAD/STEP file. This causes problems if one wants to continue to manipulate a scanned object or wants to return a 3D print file to parametric software. A solution to this shortcoming is to deploy non-parametric modelling. Exact measurements may, in some circumstances, be of marginal importance. The purpose may, for example, be to print a human bone, or an object with a purely aesthetic purpose. In these cases, only the scale, level of detail and quality of the surfaces are important. Another example is if one wants to print or make a mould from, for example, a scanned branch from a tree, or a seashell.

When one has turned such objects into digital data for further form manipulation, one has, as mentioned, converted the original surface into a mesh. This mesh can be kept as a mesh surface or be converted into a solid mesh body. There are, however, several mesh formats. What they all have in common is that they describe the surface of a volume as a triangular mesh. Only when this has been done can 3D printer software cut the mesh into slices. This slicing is, however, an obligatory last step for parametric CAD models as well before they can be 3D printed.
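
The slicing step mentioned above can be illustrated by intersecting each triangle of the mesh with a horizontal plane. The following is a minimal sketch under stated assumptions, not the method of any particular slicer: the function names are hypothetical, triangles are given as three (x, y, z) tuples, and vertices lying exactly on the cutting plane are not handled, which is why the planes are placed at mid-layer heights.

```python
def layer_heights(z_min, z_max, layer_thickness):
    """Mid-layer cutting heights between z_min and z_max."""
    count = int(round((z_max - z_min) / layer_thickness, 9))
    return [z_min + (i + 0.5) * layer_thickness for i in range(count)]


def slice_triangle(triangle, z):
    """Segment where a triangle crosses the plane at height z, or None."""
    points = []
    for i in range(3):
        p, q = triangle[i], triangle[(i + 1) % 3]
        dp, dq = p[2] - z, q[2] - z
        if dp * dq < 0.0:  # the edge passes strictly through the plane
            t = dp / (dp - dq)
            points.append((p[0] + t * (q[0] - p[0]),
                           p[1] + t * (q[1] - p[1])))
    return tuple(points) if len(points) == 2 else None


def slice_mesh(triangles, z):
    """All intersection segments of a triangle mesh with the plane at z."""
    segments = (slice_triangle(t, z) for t in triangles)
    return [s for s in segments if s is not None]


triangle = ((0.0, 0.0, 0.0), (2.0, 0.0, 2.0), (0.0, 2.0, 2.0))
for z in layer_heights(0.0, 2.0, 0.5):
    print(z, slice_mesh([triangle], z))
```

A real slicer additionally joins the segments of each layer into closed contours and generates the tool or beam paths from them.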

Non-parametric CAD programs such as Meshmixer and MeshLab let the user manipulate a mesh directly, as in Fig. 5.26, unlike parametric CAD software such as Creo and SolidWorks, which normally produce the mesh only as a last conversion step to facilitate 3D printing. Nowadays one may, however, import a mesh into some traditional parametric CAD software, but it is still a delicate, restricted process that requires a mesh of limited complexity.

The actual manipulation of the mesh can in some cases be described as working in real clay, but on a two-dimensional computer screen. The main tools in Meshmixer and MeshLab can be divided into three main types. One type is filters. MeshLab has a great selection of different mesh filters. A typical function of a filter is to repair a mesh with undesired holes, reduce mesh complexity with a mathematical formula, or make a “rough” mesh more complex or smoother. Another type is the various sculpt and brush tools that let the operator manipulate the mesh locally. A typical function of such a tool lets the operator drag, inflate, smooth, flatten or move a selected part of the mesh, as in Fig. 5.27. The last type is tools that let the operator offset, duplicate, transform, shell, solidify and merge/subtract different mesh bodies from each other. There are numerous software packages on the market for the manipulation of meshes. Some focus on repairing and “cleaning up” damaged meshes, some focus on optimizing mesh complexity, whilst others let the operator create new interesting forms with, for example, “un-proportional scale” and “sculpt” tools.
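
As an illustration of what a smoothing filter does, the sketch below implements basic Laplacian smoothing, in which every vertex is moved part of the way towards the average position of its neighbours. This is a generic textbook technique shown under stated assumptions and is not claimed to be the implementation used in Meshmixer or MeshLab; the function name and the default values are hypothetical.

```python
def laplacian_smooth(vertices, triangles, factor=0.5, iterations=1):
    """Move each vertex towards the average of its edge-connected neighbours.

    vertices:  list of (x, y, z) tuples
    triangles: list of (i, j, k) index triples into vertices
    factor:    0 leaves the mesh unchanged, 1 moves fully to the average
    """
    # Collect the neighbours of every vertex from the triangle list.
    neighbours = [set() for _ in vertices]
    for a, b, c in triangles:
        neighbours[a].update((b, c))
        neighbours[b].update((a, c))
        neighbours[c].update((a, b))

    current = [tuple(v) for v in vertices]
    for _ in range(iterations):
        updated = []
        for i, v in enumerate(current):
            if not neighbours[i]:
                updated.append(v)  # isolated vertex: nothing to average
                continue
            avg = [sum(current[j][k] for j in neighbours[i]) / len(neighbours[i])
                   for k in range(3)]
            updated.append(tuple(v[k] + factor * (avg[k] - v[k])
                                 for k in range(3)))
        current = updated  # all vertices are updated simultaneously
    return current


# A flat ring of four vertices with a spike in the middle (vertex 0).
verts = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0),
         (-1.0, 0.0, 0.0), (0.0, -1.0, 0.0)]
tris = [(0, 1, 2), (0, 2, 3), (0, 3, 4), (0, 4, 1)]
print(laplacian_smooth(verts, tris)[0])  # the spike is lowered
```

Repeated application flattens detail and shrinks the mesh, so the factor and the number of iterations have to be chosen with the required surface accuracy in mind.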

The drawback of the mesh format is that it will always represent a smooth doubly curved surface as a number of flat triangles, and the clay-like manipulation will not result in fully defined surfaces as in parametric software. The strength is that a user may create amazing organic forms that would be next to impossible, or very time consuming, to create with parametric modelling based on the extrusion of two-dimensional sketches. This is exemplified in Fig. 5.28.

Change history

06 January 2023

In the original version of the book, the co-author name “Bernardo Vicente Morell” was mistakenly omitted and has now been inserted in the Chapter “General Process Simulations”. The chapter and book have been updated with the changes.

References

Bendsøe, M.P., Sigmund, O.: Topology Optimization: Theory, Methods, and Applications. Springer, Berlin, Heidelberg (2004)

Bendsøe, M.P., Kikuchi, N.: Generating optimal topologies in structural design using a homogenization method. Comput. Methods Appl. Mech. Eng. 71(2), 197–224 (1988)

Brackett, D., Ashcroft, I., Hague, R.: Topology optimization for additive manufacturing. In: 22nd Annual International Solid Freeform Fabrication Symposium—An Additive Manufacturing Conference, SFF 2011, pp. 348–362 (2011)

Cai, S., Zhang, W., Zhu, J., Gao, T.: Stress constrained shape and topology optimization with fixed mesh: A B-spline finite cell method combined with level set function. Comput. Methods Appl. Mech. Eng. 278, 361–387 (2014). https://doi.org/10.1016/j.cma.2014.06.007

Chacón, J.M., Bellido, J.C., Donoso, A.: Integration of topology optimized designs into CAD/CAM via an IGES translator. Struct. Multidiscip. Optim. 50(6), 1115–1125 (2014). https://doi.org/10.1007/s00158-014-1099-6

Gaynor, A.T., Guest, J.K.: Topology optimization considering overhang constraints: eliminating sacrificial support material in additive manufacturing through design. Struct. Multidiscip. Optim. 54(5), 1157–1172 (2016). https://doi.org/10.1007/s00158-016-1551-x

Langelaar, M.: Topology optimization of 3D self-supporting structures for additive manufacturing. Addit. Manuf. 12, 60–70 (2016). https://doi.org/10.1016/j.addma.2016.06.010

Liu, J., Gaynor, A.T., Chen, S., Kang, Z., Suresh, K., Takezawa, A., et al.: Current and future trends in topology optimization for additive manufacturing. Struct. Multidiscip. Optim. 57(6) (2018)

Liu, J., Ma, Y.: A survey of manufacturing oriented topology optimization methods. Adv. Eng. Softw. 100, 161–175 (2016). https://doi.org/10.1016/j.advengsoft.2016.07.017

Mirzendehdel, A.M., Suresh, K.: Support structure constrained topology optimization for additive manufacturing. Comput.-Aided Des. 81, 1–13 (2016). https://doi.org/10.1016/j.cad.2016.08.006

Seo, Y.D., Kim, H.J., Youn, S.K.: Isogeometric topology optimization using trimmed spline surfaces. Comput. Methods Appl. Mech. Eng. 199 (49-52), 3270–3296 (2010). https://doi.org/10.1016/j.cma.2010.06.033

Sigmund, O., Maute, K.: Topology optimization approaches. Struct. Multidiscip. Optim. 48(6), 1031–1055 (2013). https://doi.org/10.1007/s00158-013-0978-6

SKIN project: Improvement of the performance of processed materials with additive manufacturing through post-processes. AIDIMME (2017–2018)

Wang, X., Xu, S., Zhou, S., Xu, W., Leary, M., Choong, P., et al.: Topological design and additive manufacturing of porous metals for bone scaffolds and orthopaedic implants: a review. Biomaterials 83, 127–141 (2016)

Zegard, T., Paulino, G.H.: Bridging topology optimization and additive manufacturing. Struct. Multidiscip. Optim. 53(1), 175–192 (2016). https://doi.org/10.1007/s00158-015-1274-4

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this chapter

Cite this chapter

Nordin, A. et al. (2022). General Process Simulations. In: Godec, D., Gonzalez-Gutierrez, J., Nordin, A., Pei, E., Ureña Alcázar, J. (eds) A Guide to Additive Manufacturing. Springer Tracts in Additive Manufacturing. Springer, Cham. https://doi.org/10.1007/978-3-031-05863-9_5

DOI: https://doi.org/10.1007/978-3-031-05863-9_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-05862-2

Online ISBN: 978-3-031-05863-9

eBook Packages: Engineering, Engineering (R0)