Abstract

The long run behaviour of linear dynamical systems is often studied by looking at eventual properties of matrices and recurrences that underlie the system. A basic problem in this setting is as follows: given a set of pairs of rational weights and matrices \(\{(w_1, A_1), \ldots , (w_m, A_m)\}\), does there exist an integer N s.t for all \(n \ge N\), \(\sum _{i=1}^m w_i\cdot A_i^n \ge 0\) (resp. \(> 0\)). We study this problem, its applications and its connections to linear recurrence sequences. Our first result is that for \(m\ge 2\), the problem is as hard as the ultimate positivity of linear recurrences, a long standing open question (known to be \(\mathsf {coNP}\)-hard). Our second result is that for any \(m\ge 1\), the problem reduces to ultimate positivity of linear recurrences. This yields upper bounds for several subclasses of matrices by exploiting known results on linear recurrence sequences. Our third result is a general reduction technique for a large class of problems (including the above) from diagonalizable case to the case where the matrices are simple (have non-repeated eigenvalues). This immediately gives a decision procedure for our problem for diagonalizable matrices.

This work was partly supported by DST/CEFIPRA/INRIA Project EQuaVE and DST/SERB Matrices Grant MTR/2018/000744.

Author names are in alphabetical order of last names.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

The study of eventual or asymptotic properties of discrete-time linear dynamical systems has long been of interest to both theoreticians and practitioners. Questions pertaining to (un)-decidability and/or computational complexity of predicting the long-term behaviour of such systems have been extensively studied over the last few decades. Despite significant advances, however, there remain simple-to-state questions that have eluded answers so far. In this work, we investigate one such problem, explore its significance and links with other known problems, and study its complexity and computability landscape.

The time-evolution of linear dynamical systems is often modeled using linear recurrence sequences, or using sequences of powers of matrices. Asymptotic properties of powers of matrices are therefore of central interest in the study of linear differential systems, dynamic control theory, analysis of linear loop programs etc. (see e.g. [26, 32, 36, 37]). The literature contains a rich body of work on the decidability and/or computational complexity of problems related to the long-term behaviour of such systems (see, e.g. [15, 19, 27, 29, 36, 37]). A question of significant interest in this context is whether the powers of a given matrix of rational numbers eventually have only non-negative (resp. positive) entries. Such matrices, also called eventually non-negative (resp. eventually positive) matrices, enjoy beautiful algebraic properties ([13, 16, 25, 38]), and have been studied by mathematicians, control theorists and computer scientists, among others. For example, the work of [26] investigates reachability and holdability of non-negative states for linear differential systems – a problem in which eventually non-negative matrices play a central role. Similarly, eventual non-negativity (or positivity) of a matrix modeling a linear dynamical system makes it possible to apply the elegant Perron-Frobenius theory [24, 34] to analyze the long-term behaviour of the system beyond an initial number of time steps. Another level of complexity is added if the dynamics is controlled by a set of matrices rather than a single one. For instance, each matrix may model a mode of the linear dynamical system [23]. In a partial observation setting [22, 39], we may not know which mode the system has been started in, and hence have to reason about eventual properties of this multi-modal system. This reduces to analyzing the sum of powers of the per-mode matrices, as we will see.

Motivated by the above considerations, we study the problem of determining whether a given matrix of rationals is eventually non-negative or eventually positive and also a generalized version of this problem, wherein we ask if the weighted sum of powers of a given set of matrices of rationals is eventually non-negative (resp. positive). Let us formalize the general problem statement. Given a set \(\mathfrak A= \{(w_1, A_1), \ldots (w_m, A_m)\}\) , where each \(w_i\) is a rational number and each \(A_i\) is a \(k\times k\) matrix of rationals, we wish to determine if \(\sum _{i=1}^m w_i\cdot A_i^n\) has only non-negative (resp. positive) entries for all sufficiently large values of n . We call this problem Eventually Non-Negative (resp. Positive) Weighted Sum of Matrix Powers problem, or \(\mathsf {ENN_{SoM}}\) (resp. \(\mathsf {EP_{SoM} }\)) for short. The eventual non-negativity (resp. positivity) of powers of a single matrix is a special case of the above problem, where \(\mathfrak A= \{(1, A)\}\). We call this special case the Eventually Non-Negative (resp. Positive) Matrix problem, or \(\mathsf {ENN_{Mat}}\) (resp. \(\mathsf {EP_{Mat} }\)) for short.

Given the simplicity of the \(\mathsf {ENN_{SoM}}\) and \(\mathsf {EP_{SoM} }\) problem statements, one may be tempted to think that there ought to be simple algebraic characterizations that tell us whether \(\sum _{i=1}^m w_i\cdot A_i^n\) is eventually non-negative or positive. But in fact, the landscape is significantly nuanced. On one hand, a solution to the general \(\mathsf {ENN_{SoM}}\) or \(\mathsf {EP_{SoM} }\) problem would resolve long-standing open questions in mathematics and computer science. On the other hand, efficient algorithms can indeed be obtained under certain well-motivated conditions. This paper is a study of both these aspects of the problem. Our primary contributions can be summarized as follows. Below, we use \(\mathfrak A= \{(w_1, A_1), \ldots (w_m, A_m)\}\) to define an instance of \(\mathsf {ENN_{SoM}}\) or \(\mathsf {EP_{SoM} }\).

-

1.

If \(|\mathfrak A| \ge 2\), we show that both \(\mathsf {ENN_{SoM}}\) and \(\mathsf {EP_{SoM} }\) are as hard as the ultimate non-negativity problem for linear recurrence sequences (\(\mathsf {UNN_{LRS} }\), for short). The decidability of \(\mathsf {UNN_{LRS} }\) is closely related to Diophantine approximations, and remains unresolved despite extensive research (see e.g. [31]).

Since \(\mathsf {UNN_{LRS} }\) is \(\mathsf {coNP}\)-hard (in fact, as hard as the decision problem for universal theory of reals), so is \(\mathsf {ENN_{SoM}}\) and \(\mathsf {EP_{SoM} }\), when \(|\mathfrak A| \ge 2\). Thus, unless \(\mathsf {P}\) = \(\mathsf {NP}\), we cannot hope for polynomial-time algorithms, and any algorithm would also resolve long-standing open problems.

-

2.

On the other hand, regardless of \(|\mathfrak A|\), we show a reduction in the other direction from \(\mathsf {ENN_{SoM}}\) (resp. \(\mathsf {EP_{SoM} }\)) to \(\mathsf {UNN_{LRS} }\) (resp. \(\mathsf {UP_{LRS} }\), the strict version of \(\mathsf {UNN_{LRS} }\)). As a consequence, we get decidability and complexity bounds for special cases of \(\mathsf {ENN_{SoM}}\) and \(\mathsf {EP_{SoM} }\), by exploiting recent results on recurrence sequences [30, 31, 35]. For example, if each matrix \(A_i\) in \(\mathfrak A\) is simple, i.e. has all distinct eigenvalues, we obtain \(\mathsf {PSPACE}\) algorithms.

-

3.

Finally, we consider the case where \(A_i\) is diagonalizable (also called non-defective or inhomogenous dilation map) for each \((w_i,A_i)\in \mathfrak A\). This is a practically useful class of matrices and strictly subsumes simple matrices. We present a novel reduction technique for a large family of problems (including eventual non-negativity/positivity, everywhere non-negativity/positivity etc.) over diagonalizable matrices to the corresponding problem over simple matrices. This yields effective decision procedures for \(\mathsf {EP_{SoM} }\) and \(\mathsf {ENN_{SoM}}\) for diagonalizable matrices. Our reduction makes use of a novel perturbation analysis that also has other interesting consequences.

As mentioned earlier, the eventual non-negativity and positivity problem for single rational matrices are well-motivated in the literature, and \(\mathsf {EP_{Mat} }\) (or \(\mathsf {EP_{SoM} }\) with \(|\mathfrak A| = 1\)) is known to be in \(\mathsf {PTIME}\) [25]. But for \(\mathsf {ENN_{Mat}}\), no decidability results are known to the best of our knowledge. From our work, we obtain two new results about \(\mathsf {ENN_{Mat}}\): (i) in general \(\mathsf {ENN_{Mat}}\) reduces to \(\mathsf {UNN_{LRS} }\) and (ii) for diagonalizable matrices, we can decide \(\mathsf {ENN_{Mat}}\). What is surprising (see Sect. 5) is that the latter decidability result goes via \({\mathsf {ENN_{SoM}}}\), i.e. the multiple matrices case. Thus, reasoning about sums of powers of matrices, viz. \(\mathsf {ENN_{SoM}}\), is useful even when reasoning about powers of a single matrix, viz. \(\mathsf {ENN_{Mat}}\).

Potential Applications of \(\mathsf {ENN_{SoM}}\) and \(\mathsf {EP_{SoM} }\). A prime motivation for defining the generalized problem statement \(\mathsf {ENN_{SoM}}\) is that it is useful even when reasoning about the single matrix case \(\mathsf {ENN_{Mat}}\). However and unsurprisingly, \(\mathsf {ENN_{SoM}}\) and \(\mathsf {EP_{SoM} }\) are also well-motivated independently. Indeed, for every application involving a linear dynamical system that reduces to \(\mathsf {ENN_{Mat}}\)/\(\mathsf {EP_{Mat} }\), there is a naturally defined aggregated version of the application involving multiple independent linear dynamical systems that reduces to \(\mathsf {ENN_{SoM}}\)/\(\mathsf {EP_{SoM} }\) (e.g., the swarm of robots example in [3]).

Beyond this, \(\mathsf {ENN_{SoM}}\)/\(\mathsf {EP_{SoM} }\) arise naturally and directly when solving problems in different practical scenarios. Due to lack of space, we detail two applications here and describe more in the longer version of the paper [3].

Partially Observable Multi-modal Systems. Our first example comes from the domain of cyber-physical systems in a partially observable setting. Consider a system (e.g. a robot) with m modes of operation, where the \(i^{th}\) mode dynamics is given by a linear transformation encoded as a \(k \times k\) matrix of rationals, say \(A_i\). Thus, if the system state at (discrete) time t is represented by a k-dimensional rational (row) vector \(\mathbf {{u_t} }\), the state at time \(t+1\), when operating in mode i, is given by \(\mathbf {{u_t} }A_i\). Suppose the system chooses to operate in one of its various modes at time 0, and then sticks to this mode at all subsequent time. Further, the initial choice of mode is not observable, and we are only given a probability distribution over modes for the initial choice. This is natural, for instance, if our robot (multi-modal system) knows the terrain map and can make an initial choice of which path (mode) to take, but cannot change its path once it has chosen. If \(p_i\) is a rational number denoting the probability of choosing mode i initially, then the expected state at time n is given by \(\sum _{i=1}^m p_i\cdot \mathbf {{u_0} }A_i^n\) \(= \mathbf {{u_0} }\big (\sum _{i=1}^m p_i\cdot A_i^n\big )\). A safety question in this context is whether starting from a state \(\mathbf {{u_0} }\) with all non-negative (resp. positive) components, the system is expected to eventually stay locked in states that have all non-negative (resp. positive) components. In other words, does \(\mathbf {{u_0} }\big (\sum _{i=1}^m p_i\cdot A_i^n\big )\) have all non-negative (resp. positive) entries for all sufficiently large n? Clearly, a sufficient condition for an affirmative answer to this question is to have \(\sum _{i=1}^n p_i\cdot A_i^n\) eventually non-negative (resp. positive), which is an instance of \(\mathsf {ENN_{SoM}}\) (resp. \(\mathsf {EP_{SoM} }\)).

Commodity Flow Networks. Consider a flow network where m different commodities \(\{c_1,\ldots , c_m\}\) use the same flow infrastructure spanning k nodes, but have different loss/regeneration rates along different links. For every pair of nodes \(i,j\in \{1,\ldots ,k\}\) and for every commodity \(c\in \{c_1,\ldots ,c_m\}\), suppose \(A_c[i,j]\) gives the fraction of the flow of commodity c starting from i that reaches j through the link connecting i and j (if it exists). In general, \(A_c[i,j]\) is the product of the fraction of the flow of commodity c starting at i that is sent along the link to j, and the loss/regeneration rate of c as it flows in the link from i to j. Note that \(A_c[i,j]\) can be 0 if commodity c is never sent directly from i to j, or the commodity is lost or destroyed in flowing along the link from i to j. It can be shown that \(A_c^n[i,j]\) gives the fraction of the flow of c starting from i that reaches j after n hops through the network. If commodities keep circulating through the network ad-infinitum, we wish to find if the network gets saturated, i.e., for all sufficiently long enough hops through the network, there is a non-zero fraction of some commodity that flows from i to j for every pair i, j. This is equivalent to asking if there exists \(N\in \mathbb {N}\) such that \(\sum _{\ell =1}^m A_{c_\ell }^n>0\). If different commodities have different weights (or costs) associated, with commodity \(c_i\) having the weight \(w_i\), the above formulation asks if \(\sum _{\ell =1}^m w_\ell .A_{c_\ell }^n\) is eventually positive, which is effectively the \(\mathsf {EP_{SoM} }\) problem.

Other Related Work. Our problems of interest are different from other well-studied problems that arise if the system is allowed to choose its mode independently at each time step (e.g. as in Markov decision processes [5, 21]). The crucial difference stems from the fact that we require that the mode be chosen once initially, and subsequently, the system must follow the same mode forever. Thus, our problems are prima facie different from those related to general probabilistic or weighted finite automata, where reachability of states and questions pertaining to long-run behaviour are either known to be undecidable or have remained open for long ([6, 12, 17]). Even in the case of unary probabilistic/weighted finite automata [1, 4, 8, 11], reachability is known in general to be as hard as the Skolem problem on linear recurrences – a long-standing open problem, with decidability only known in very restricted cases. The difference sometimes manifests itself in the simplicity/hardness of solutions. For example, \(\mathsf {EP_{Mat} }\) (or \(\mathsf {EP_{SoM} }\) with \(|\mathfrak A| = 1\)) is known to be in \(\mathsf {PTIME}\) [25] (not so for \(\mathsf {ENN_{Mat}}\) however), whereas it is still open whether the reachability problem for unary probabilistic/weighted automata is decidable. It is also worth remarking that instead of the sum of powers of matrices, if we considered the product of their powers, we would effectively be solving problems akin to the mortality problem [9, 10] (which asks whether the all-0 matrix can be reached by multiplying with repetition from a set of matrices) – a notoriously difficult problem. The diagonalizable matrix restriction is a common feature in in the context of linear loop programs (see, e.g., [7, 28]), where matrices are used for updates. Finally, logics to reason about temporal properties of linear loops have been studied, although decidability is known only in restricted settings, e.g. when each predicate defines a semi-algebraic set contained in some 3-dimensional subspace, or has intrinsic dimension 1 [20].

2 Preliminaries

The symbols \(\mathbb {Q}, \mathbb {R}\), \(\mathbb {A}\) and \(\mathbb {C}\) denote the set of rational, real, algebraic and complex numbers respectively. Recall that an algebraic number is a root of a non-zero polynomial in one variable with rational coefficients. An algebraic number can be real or complex. We use \(\mathbb {R}\mathbb {A}\) to denote the set of real algebraic numbers (which includes all rationals). The sum, difference and product of two (real) algebraic numbers is again (real) algebraic. Furthermore, every root of a polynomial equation with (real) algebraic coefficients is again (real) algebraic. We call matrices with all rational (resp. real algebraic or real) entries rational (resp. real algebraic or real) matrices. We use \(A \in \mathbb {Q}^{k \times l}\) (resp. \(A \in \mathbb {R}^{k\times l}\) and \(A \in \mathbb {R}\mathbb {A}^{k\times l}\)) to denote that A is a \(k\times l\) rational (resp. real and real algebraic) matrix, with rows indexed 1 through k, and columns indexed 1 through l. The entry in the \(i^{th}\) row and \(j^{th}\) column of a matrix A is denoted A[i, j]. If A is a column vector (i.e. \(l = 1\)), we often use boldface letters, viz. \(\mathbf {A}\), to refer to it. In such cases, we use \(\mathbf {A}[i]\) to denote the \(i^{th}\) component of \(\mathbf {A}\), i.e. A[i, 1]. The transpose of a \(k \times l\) matrix A, denoted \({A}^{\mathsf T}\), is the \(l \times k\) matrix obtained by letting \({A}^{\mathsf T}[i,j] = A[j,i]\) for all \(i \in \{1, \ldots l\}\) and \(j \in \{1, \ldots k\}\). Matrix A is said to be non-negative (resp. positive) if all entries of A are non-negative (resp. positive) real numbers. Given a set \(\mathfrak A= \{(w_1, A_1), \ldots (w_m, A_m)\}\) of (weight, matrix) pairs, where each \(A_i \in \mathbb {Q}^{k\times k}\) (resp. \(\in \mathbb {R}\mathbb {A}^{k\times k}\)) and each \(w_i \in \mathbb {Q}\), we use \(\sum {\mathfrak A}^{{n}}\) to denote the weighted matrix sum \(\sum _{i=1}^m w_i\cdot A_i^n\), for every natural number \(n > 0\). Note that \(\sum \mathfrak A^n\) is itself a matrix in \(\mathbb {Q}^{k\times k}\) (resp. \(\mathbb {R}\mathbb {A}^{k\times k}\)).

Definition 1

We say that \(\mathfrak A\) is eventually non-negative (resp. positive) iff there is a positive integer N s.t., \(\sum {\mathfrak A}^{{n}}\) is non-negative (resp. positive) for all \(n \ge N\).

The \(\mathsf {ENN_{SoM}}\) (resp. \(\mathsf {EP_{SoM} }\)) problem, described in Sect. 1, can now be re-phrased as: Given a set \(\mathfrak A\) of pairs of rational weights and rational \(k\times k\) matrices, is \(\mathfrak A\) eventually non-negative (resp. positive)? As mentioned in Sect. 1, if \(\mathfrak A= \{(1, A)\}\), the \(\mathsf {ENN_{SoM}}\) (resp. \(\mathsf {EP_{SoM} }\)) problem is also called \(\mathsf {ENN_{Mat}}\) (resp. \(\mathsf {EP_{Mat} }\)). We note that the study of \(\mathsf {ENN_{SoM}}\) and \(\mathsf {EP_{SoM} }\) with \(|\mathfrak A| = 1\) is effectively the study of \(\mathsf {ENN_{Mat}}\) and \(\mathsf {EP_{Mat} }\) i.e., wlog we can assume \(w=1\).

The characteristic polynomial of a matrix \(A \in \mathbb {R}\mathbb {A}^{k \times k}\) is given by \( det (A-\lambda I)\), where I denotes the \(k\times k\) identity matrix. Note that this is a degree k polynomial in \(\lambda \). The roots of the characteristic polynomial are called the eigenvalues of A. The non-zero vector solution of the equation \(A\mathbf {x} = \lambda _i \mathbf {x}\), where \(\lambda _i\) is an eigenvalue of A, is called an eigenvector of A. Although \(A \in \mathbb {R}\mathbb {A}^{k\times k}\), in general it can have eigenvalues \(\lambda \in \mathbb {C}\) which are all algebraic numbers. An eigenvector is said to be positive (resp. non-negative) if each component of the eigenvector is a positive (resp. non-negative) rational number. A matrix is called simple if all its eigenvalues are distinct. Further, a matrix A is called diagonalizable if there exists an invertible matrix S and diagonal matrix D such that \(S D S^{-1}= A\).

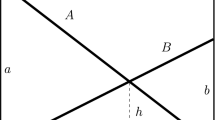

The study of weighted sum of powers of matrices is intimately related to the study of linear recurrence sequences (LRS), as we shall see. We now present some definitions and useful properties of LRS. For more details on LRS, the reader is referred to the work of Everest et al. [14]. A sequence of rational numbers \(\langle u \rangle \) = \(\langle u_n \rangle _{n=0}^{\infty }\) is called an LRS of order \(k ~(> 0)\) if the \(n^{th}\) term of the sequence, for all \(n \ge k\), can be expressed using the recurrence: \(u_n = a_{k-1}u_{n-1} + \ldots + a_1u_{n-k-1} + a_0u_{n-k}.\) Here, \(a_0 ~(\ne 0), a_1, \ldots , a_{k-1} \in \mathbb {Q}\) are called the coefficients of the LRS, and \(u_0, u_1, \ldots , u_{k-1} \in \mathbb {Q}\) are called the initial values of the LRS. Given the coefficients and initial values, an LRS is uniquely defined. However, the same LRS may be defined by multiple sets of coefficients and corresponding initial values. An LRS \(\langle u \rangle \) is said to be periodic with period \(\rho \) if it can be defined by the recurrence \(u_n = u_{n-\rho }\) for all \(n \ge \rho \). Given an LRS \(\langle u \rangle \), its characteristic polynomial is \(p_{\langle u \rangle }(x) = x^k - \sum _{i=0}^{k-1}a_{i}x^{i}\). We can factorize the characteristic polynomial as \(p_{{\langle u \rangle }}(x) = \prod _{j=1}^d(x-\lambda _j)^{\rho _j}\), where \(\lambda _j\) is a root, called a characteristic root of algebraic multiplicity \(\rho _j\). An LRS is called simple if \(\rho _j = 1\) for all j, i.e. all characteristic roots are distinct. Let \(\{\lambda _1, \lambda _2, \ldots , \lambda _d \}\) be distinct roots of \(p_{\langle u \rangle }(x)\) with multiplicities \(\rho _1, \rho _2, \ldots , \rho _d\) respectively. Then the \(n^{th}\) term of the LRS, denoted \(u_n\), can be expressed as \(u_n = \sum _{j=1}^{d}q_j(n)\lambda _j^n\), where \(q_j(x) \in \mathbb {C}(x)\) are univariate polynomials of degree at most \(\rho _j - 1\) with complex coefficients such that \(\sum _{j=1}^d\rho _j = k\). This representation of an LRS is known as the exponential polynomial solution representation. It is well known that scaling an LRS by a constant gives another LRS, and the sum and product of two LRSs is also an LRS (Theorem 4.1 in [14]). Given an LRS \(\langle u \rangle \) defined by \( u_n = a_{k-1}u_{n-1} + \ldots + a_1u_{n-k-1} + a_0u_{n-k}\), we define its companion matrix \(M_{\langle u \rangle }\) to be the \(k \times k\) matrix shown in Fig. 1.

When \(\langle u \rangle \) is clear from the context, we often omit the subscript for clarity of notation, and use M for \(M_{\langle u \rangle }\). Let \(\mathbf {u} = (u_{k-1}, \ldots , u_0)\) be a row vector containing the k initial values of the recurrence, and let \(\mathbf {e_k} = (0, 0, \ldots 1)^T\) be a column vector of k dimensions with the last element equal to 1 and the rest set to 0s. It is easy to see that for all \(n \ge 1\), \(\mathbf {u} M^n \mathbf {e_k}\) gives \(u_n\). Note that the eigenvalues of the matrix M are exactly the roots of the characteristic polynomial of the LRS \(\langle u \rangle \).

For \(\mathbf {u} = (u_{k-1}, \ldots , u_0)\), we call the matrix \(G_{\langle u \rangle }= \begin{bmatrix} 0 &{} \mathbf {u} \\ \mathbf {0}^T &{} M_{\langle u \rangle } \end{bmatrix}\) the generator matrix of the LRS \(\langle u \rangle \), where \(\mathbf {0}\) is a k-dimensional vector of all 0s. We omit the subscript and use G instead of \(G_{\langle u \rangle }\), when the LRS \(\langle u \rangle \) is clear from the context. It is easy to show from the above that \(u_n = G^{n+1}[1,k+1]\) for all \(n \ge 0\).

We say that an LRS \(\langle u \rangle \) is ultimately non-negative (resp. ultimately positive) iff there exists \(N > 0\), such that \(\forall n \ge N\), \(u_n \ge 0\) (resp. \(u_n > 0\))Footnote 1. The problem of determining whether a given LRS is ultimately non-negative (resp. ultimately positive) is called the Ultimate Non-negativity (resp. Ultimate Positivity) problem for LRS. We use \(\mathsf {UNN_{LRS} }\) (resp. \(\mathsf {UP_{LRS} }\)) to refer to this problem. It is known [19] that \(\mathsf {UNN_{LRS} }\) and \(\mathsf {UP_{LRS} }\) are polynomially inter-reducible, and these problems have been widely studied in the literature (e.g., [27, 31, 32]). A closely related problem is the Skolem problem, wherein we are given an LRS \(\langle u \rangle \) and we are required to determine if there exists \(n \ge 0\) such that \(u_n = 0\). The relation between the Skolem problem and \(\mathsf {UNN_{LRS} }\) (resp. \(\mathsf {UP_{LRS} }\)) has been extensively studied in the literature (e.g., [18, 19, 33]).

3 Hardness of Eventual Non-negativity and Positivity

In this section, we show that \(\mathsf {UNN_{LRS} }\) (resp. \(\mathsf {UP_{LRS} }\)) polynomially reduces to \(\mathsf {ENN_{SoM}}\) (resp. \(\mathsf {EP_{SoM} }\)) when \(|\mathfrak A| \ge 2\). Since \(\mathsf {UNN_{LRS} }\) and \(\mathsf {UP_{LRS} }\) are known to be \(\mathsf {coNP}\)-hard (in fact, as hard as the decision problem for the universal theory of reals Theorem 5.3 [31]), we conclude that \(\mathsf {ENN_{SoM}}\) and \(\mathsf {EP_{SoM} }\) are also \(\mathsf {coNP}\)-hard and at least as hard as the decision problem for the universal theory of reals, when \(|\mathfrak A| \ge 2\). Thus, unless \(\mathsf {P=NP}\), there is no hope of finding polynomial-time solutions to these problems.

Theorem 1

\(\mathsf {UNN_{LRS} }\) reduces to \(\mathsf {ENN_{SoM}}\) with \(|\mathfrak A| \ge 2\) in polynomial time.

Proof

Given an LRS \(\langle u \rangle \) of order k defined by the recurrence \(u_n = a_{k-1}u_{n-1} + \ldots + a_1u_{n-k-1} + a_0u_{n-k}\) and initial values \(u_0, u_1, \ldots , u_{k-1}\), construct two matrices \(A_1\) and \(A_2\) such that \(\langle u \rangle \) is ultimately non-negative iff \((A_1^n + A_2^n)\) is eventually non-negative. Consider \(A_1= \begin{bmatrix} 0 &{} \mathbf {u} \\ \mathbf {0}^T &{} M \end{bmatrix}\), the generator matrix of \(\langle u \rangle \) and \(A_2= \begin{bmatrix} 0 &{} \mathbf {0} \\ \mathbf {0}^T &{} P \end{bmatrix}\), where \(P \in \mathbb {Q}^{k \times k}\) is constructed such that : \(P[i,j] \ge |M[i,j]|\). For example P can be constructed as: \(P[i,j] = M[i,j]\) for all \(j \in [2,k]\) and \(i \in [1,k]\) and \(P[i,j] = max(|a_0|,|a_1|, \ldots , |a_{k-1}|) + 1\) for \(j=1\). Now consider the sequence of matrices defined by \(A_1^n + A_2^n\), for all \(n \ge 1\). By properties of the generator matrix, it is easily verified that \( A_1^n = \begin{bmatrix} 0 &{} \mathbf {u}M^{n-1} \\ \mathbf {0}^T &{} M^n \end{bmatrix}\). Similarly, we get \(A_2^n = \begin{bmatrix} 0 &{} \mathbf {0} \\ \mathbf {0}^T &{} P^n \end{bmatrix}\). Therefore, \(A_1^n + A_2^n =\) \(\begin{bmatrix} 0 &{} \mathbf {u}M^{n-1} \\ \mathbf {0}^T &{} P^n + M^n \end{bmatrix}\), for all \(n \ge 1\). Now, we can observe that \(P^n + M^n\) is always non-negative, since \(P[i,j] \ge |M[i,j]| \ge 0\) for all \(i, j \in \{1, \ldots k\}\) and hence \(P^n[i,j] + M^n[i,j] \ge 0\) for all \(i, j \in \{1, \ldots k\}\) and \(n \ge 1\). Thus we conclude that \(A(n) = A_1^n + A_2^n \ge 0\) (\(n \ge 1\)) iff \(\langle u \rangle \) is ultimately non-negative, since the elements \(A(n)[1,1] \ldots , A(n)[1,k+1]\) consists of \((u_{n+k-2} \ldots ,u_{n},u_{n-1})\) and the rest of the elements are non-negative. \(\square \)

Observe that the same reduction technique works if we are required to use more than 2 matrices in \(\mathsf {ENN_{SoM}}\). Indeed, we can construct matrices \(A_3, A_4, \ldots , A_m\) similar to the construction of \(A_2\) in the reduction above, by having the \(k \times k\) matrix in the bottom right (see definition of \(A_2\)) to have positive values greater than the maximum absolute value of every element in the companion matrix.

A simple modification of the above proof setting \(A_2 = \begin{bmatrix} 1 &{} \mathbf {0} \\ \mathbf {1}^T &{} P \end{bmatrix}\), where \(\mathbf {1}\) denotes the k-dimensional vector of all 1’s gives us the corresponding hardness result for \(\mathsf {EP_{SoM} }\) (see [3] for details).

Theorem 2

\(\mathsf {UP_{LRS} }\) reduces to \(\mathsf {EP_{SoM} }\) with \(|\mathfrak A| \ge 2\) in polynomial time.

We remark that for the reduction technique used in Theorems 1 and 2 to work, we need at least two (weight, matrix) pairs in \(\mathfrak A\). For explanation of why this reduction doesn’t work when \(|\mathfrak A|=1\), we refer the reader to [3]. Having shown the hardness of \(\mathsf {ENN_{SoM}}\) and \(\mathsf {EP_{SoM} }\) when \(|\mathfrak A| \ge 2\), we now proceed to establish upper bounds on the computational complexity of these problems.

4 Upper Bounds on Eventual Non-negativity and Positivity

In this section, we show that \(\mathsf {ENN_{SoM}}\) (resp. \(\mathsf {EP_{SoM} }\)) is polynomially reducible to \(\mathsf {UNN_{LRS} }\) (resp. \(\mathsf {UP_{LRS} }\)), regardless of \(|\mathfrak A|\).

Theorem 3

\(\mathsf {ENN_{SoM}}\), reduces to \(\mathsf {UNN_{LRS} }\) in polynomial time.

The proof is in two parts. First, we show that for a single matrix A, we can construct a linear recurrence \(\langle a \rangle \) such that A is eventually non-negative iff \(\langle a \rangle \) is ultimately non-negative. Then, we show that starting from such a linear recurrence for each matrix in \(\mathfrak A\), we can construct a new LRS, say \(\langle a^\star \rangle \), with the property that the weighted sum of powers of the matrices in \(\mathfrak A\) is eventually non-negative iff \(\langle a^\star \rangle \) is ultimately non-negative. Our proof makes crucial use of the following property of matrices.

Lemma 1

Adapted from Lemma 1.1 of [19]). Let \(A \in \mathbb {Q}^{k\times k}\) be a rational matrix with characteristic polynomial \(p_A(\lambda ) = det(A - \lambda I)\). Suppose we define the sequence \(\langle a^{ij} \rangle \) for every \(1 \le i,j \le k\) as follows: \(a^{i,j}_n = A^{n+1}[i,j]\), for all \(n \ge 0\). Then \(\langle a^{i,j} \rangle \) is an LRS of order k with characteristic polynomial \(p_A(x)\) and initial values given by \(a^{ij}_0 = A^1[i,j], \ldots a^{ij}_{k-1} = A^k[i,j]\).

This follows from the Cayley-Hamilton Theorem and the reader is referred to [19] for further details. From Lemma 1, it is easy to see that the LRS \(\langle a^{i,j} \rangle \) for all \( 1 \le i, j \le k\) share the same order and characteristic polynomial (hence the defining recurrence) and differ only in their initial values. For notational convenience, we say that the LRS \(\langle a^{i,j} \rangle \) is generated by A[i, j].

Proposition 1

A matrix \(A \in \mathbb {Q}^{k \times k}\) is eventually non-negative iff all LRS \(\langle a^{i,j} \rangle \) generated by A[i, j] for all \(1 \le i,j \le k\) are ultimately non-negative.

The proof follows from the definition of eventually non-negative matrices and the definition of \(\langle a^{ij} \rangle \). Next we define the notion of interleaving of LRS.

Definition 2

Consider a set \(S = \{ \langle u^i \rangle : 0 \le i < t \}\) of t LRSes, each having order k and the same characteristic polynomial. An LRS \(\langle v \rangle \) is said to be the LRS-interleaving of S iff \(v_{tn + s} = u^s_n\) for all \(n \in \mathbb {N}\) and \(0 \le s < t\).

Observe that, the order of \(\langle v \rangle \) is tk and its initial values are given by the interleaving of the k initial values of the LRSes \(\langle u^i \rangle \). Formally, the initial values are \(v_{tj+i} = u^i_j\) for \(0 \le i < t \) and \( 0 \le j < k\). The characteristic polynomial \(p_{\langle v \rangle }(s)\) is equal to \(p_{\langle u^i \rangle }(x^t)\).

Proposition 2

The LRS-interleaving \(\langle v \rangle \) of a set of LRSes \(S = \{ \langle u^{i} \rangle : 0 \le i < t \}\) is ultimately non-negative iff each LRS \(\langle u^i \rangle \) in S is ultimately non-negative.

Now, from the definitions of LRSes \(\langle a^{i,j} \rangle \), \(\langle u^i \rangle \) and \(\langle v \rangle \), and from Propositions 1 and 2, we obtain the following crucial lemma.

Lemma 2

Given a matrix \(A \in \mathbb {Q}^{k \times k}\), let \(S = \{\langle u^{i} \rangle \mid u_n^{i} = a_n^{pq},\) where \(p = \lfloor i/k \rfloor +1, ~q = i\mod k + 1, ~0 \le i < k^2\}\) be the set of \(k^2\) LRSes mentioned in Lemma 1. The LRS \(\langle v \rangle \) generated by LRS-interleaving of S satisfies the following:

-

1.

A is eventually non-negative iff \(\langle v \rangle \) is ultimately non-negative.

-

2.

\(p_{\langle v \rangle }(x) = \prod _{i=1}^k (x^{k^2} - \lambda _i)\), where \(\lambda _1, \ldots \lambda _k\) are the (possibly repeated) eigenvalues of A.

-

3.

\(v_{rk^2 + sk + t} = u_r^{sk+t} = a_r^{s+1,t+1} = A^{r+1}[s+1,t+1]\) for all \(r \in \mathbb {N}\), \(0 \le s,t < k\).

We lift this argument from a single matrix to a weighted sum of matrices.

Lemma 3

Given \(\mathfrak A= \{(w_1, A_1), \ldots , (w_m, A_m)\}\), there exists a linear recurrence \(\langle a^\star \rangle \), such that \(\sum _{i=1}^m w_i A_i^n\) is eventually non-negative iff \(\langle a^\star \rangle \) is ultimately non-negative.

Proof

For each matrix \(A_i\) in \(\mathfrak A\), let \(\langle v^i \rangle \) be the interleaved LRS as constructed in Lemma 2. Let \(w_i \langle v^i \rangle \) denote the scaled LRS whose \(n^{th}\) entry is \(w_i v^i_n\) for all \(n \ge 0\). The LRS \(\langle a^\star \rangle \) is obtained by adding the scaled LRSes \(w_1\langle v^1\rangle , w_2\langle v^2 \rangle , \ldots \) \(w_m\langle v^m \rangle \). Clearly, \(a^\star _n\) is non-negative iff \(\sum _{i=1}^m w_i v^i_n\) is non-negative. From the definition of \(v^i\) (see Lemma 2), we also know that for all \(n \ge 0\), \(v^i_n = A_i^{r+1}[s+1,t+1]\), where \(r = \lfloor n/k^2 \rfloor \), \(s = \lfloor (n \mod k^2)/k \rfloor \) and \(t = n \mod k\). Therefore, \(a^\star _n\) is non-negative iff \(\sum _{i=1}^m w_i A_i^{r+1}[s+1,t+1]\) is non-negative. It follows that \(\langle a^\star \rangle \) is ultimately non-negative iff \(\sum _{i=1}^m w_i A_i^n\) is eventually non-negative. \(\square \)

From Lemma 3, we can conclude the main result of this section, i.e., proof of Theorem 3. The following corollary can be shown mutatis mutandis.

Corollary 1

\(\mathsf {EP_{SoM} }\) reduces to \(\mathsf {UP_{LRS} }\) in polynomial time.

We note that it is also possible to argue about the eventual non-negativity (positivity) of only certain indices of the matrix using a similar argument as above. By interleaving only the LRS’s corresponding to certain indices of the matrices in \(\mathfrak A\), we can show this problem’s equivalence with \(\mathsf {UNN_{LRS} }\) (\(\mathsf {UP_{LRS} }\)).

5 Decision Procedures for Special Cases

Since there are no known algorithms for solving \(\mathsf {UNN_{LRS} }\) in general, the results of the previous section present a bleak picture for deciding \(\mathsf {ENN_{SoM}}\) and \(\mathsf {EP_{SoM} }\). We now show that these problems can be solved in some important special cases.

5.1 Simple Matrices and Matrices with Real Algebraic Eigenvalues

Our first positive result follows from known results for special classes of LRSes.

Theorem 4

\(\mathsf {ENN_{SoM}}\) and \(\mathsf {EP_{SoM} }\) are decidable for \(\mathfrak A= \{(w_1, A_1), \ldots (w_m, A_m)\}\) if one of the following conditions holds for all \(i \in \{1, \ldots m\}\).

-

1.

All \(A_i\) are simple. In this case, \(\mathsf {ENN_{SoM}}\) and \(\mathsf {EP_{SoM} }\) are in \(\mathsf {PSPACE}\). Additionally, if the rank k of all \(A_i\) is fixed, \(\mathsf {ENN_{SoM}}\) and \(\mathsf {EP_{SoM} }\) are in \(\mathsf {PTIME}\).

-

2.

All eigenvalues of \(A_i\) are roots of real algebraic numbers. In this case, \(\mathsf {ENN_{SoM}}\) and \(\mathsf {EP_{SoM} }\) are in \(\mathsf {coNP^{PosSLP}}\) (a complexity class in the Counting Hierarchy, contained in \(\mathsf {PSPACE}\)).

Proof

Suppose each \(A_i \in \mathbb {Q}^{k\times k}\), and let \(\lambda _{i,1}, \ldots \lambda _{i,k}\) be the (possibly repeated) eigenvalues of \(A_i\). The characteristic polynomial of \(A_i\) is \(p_{A_i}(x) = \prod _{j=1}^k (x-\lambda _{i,j})\). Denote the LRS obtained from \(A_i\) by LRS interleaving as in Lemma 2 as \(\langle a^{i} \rangle \). By Lemma 2, we have (i) \(a^{i}_{rk^2 + sk + t} = A_i^{r+1}[s+1,t+1]\) for all \(r \in \mathbb {N}\) and \(0 \le s, t < k\), and (ii) \(p_{\langle a^{i} \rangle }(x) = \prod _{j=1}^k \big (x^{k^2}-\lambda _{i,j}\big )\). We now define the scaled LRS \(\{\langle b^{i} \rangle \), where \(\mid \) \(b^{i}_n = w_i~a^{i}_n\) for all \(n \in \mathbb {N}\). Since scaling does not change the characteristic polynomial of an LRS (refer [3] for a simple proof), we have \(p_{\langle b^{i} \rangle }(x) = \prod _{j=1}^k \big (x^{k^2}-\lambda _{i,j}\big )\). Once the LRSes \(\langle b^1 \rangle , \ldots \langle b^m \rangle \) are obtained as above, we sum them to obtain the LRS \(\langle b^\star \rangle \). Thus, for all \(n \in \mathbb {N}\), we have \(b^\star _n = \sum _{i=1}^m b^{i}_n = \sum _{i=1}^m w_i~a^{i}_n\) \(= \sum _{i=1}^m w_i~A_i^r[s,t]\), where \(n = rk^2 + sk + t\), \(r \in \mathbb {N}\) and \(0 \le s, t < k\). Hence, \(\mathsf {ENN_{SoM}}\) (resp. \(\mathsf {EP_{SoM} }\)) for \(\{(w_1, A_1), \ldots (w_m, A_m)\}\) polynomially reduces to \(\mathsf {UNN_{LRS} }\) (resp. \(\mathsf {UP_{LRS} }\)) for \(\langle b^\star \rangle \).

By [14], we know that the characteristic polynomial \(p_{\langle b^\star \rangle }(x)\) is the LCM of the characteristic polynomials \(p_{\langle b^i \rangle }(x)\) for \(1 \le i \le m\). If \(A_i\) are simple, there are no repeated roots of \(p_{\langle b^i \rangle }(x)\). If this holds for all \(i \in \{1, \ldots m\}\), there are no repeated roots of the LCM of \(p_{\langle b^1 \rangle }(x), \ldots p_{\langle b^m \rangle }(x)\) as well. Hence, \(p_{\langle b^\star \rangle }(x)\) has no repeated roots. Similarly, if all eigenvalues of \(A_i\) are roots of real algebraic numbers, so are all roots of \(p_{\langle b^i \rangle }(x)\). It follows that all roots of the LCM of \(p_{\langle b^1 \rangle }(x), \ldots p_{\langle b^m \rangle }(x)\), i.e. \(p_{\langle b^\star \rangle }(x)\), are also roots of real algebraic numbers.

The theorem now follows from the following two known results about LRS.

-

1.

\(\mathsf {UNN_{LRS} }\) (resp. \(\mathsf {UP_{LRS} }\)) for simple LRS is in \(\mathsf {PSPACE}\). Furthermore, if the LRS is of bounded order, \(\mathsf {UNN_{LRS} }\) (resp. \(\mathsf {UP_{LRS} }\)) is in \(\mathsf {PTIME}\) [31].

-

2.

\(\mathsf {UNN_{LRS} }\) (resp. \(\mathsf {UP_{LRS} }\)) for LRS in which all roots of characteristic polynomial are roots of real algebraic numbers is in \(\mathsf {coNP^{PosSLP}}\) [2]. \(\square \)

Remark: The technique used in [31] to decide \(\mathsf {UNN_{LRS} }\) (resp. \(\mathsf {UP_{LRS} }\)) for simple rational LRS also works for simple LRS with real algebraic coefficients and initial values. This allows us to generalize Theorem 4(1) to the case where all \(A_i\)’s and \(w_i\)’s are real algebraic matrices and weights respectively.

5.2 Diagonalizable Matrices

We now ask if \(\mathsf {ENN_{SoM}}\) and \(\mathsf {EP_{SoM} }\) can be decided if each matrix \(A_i\) is diagonalizable. Since diagonalizable matrices strictly generalize simple matrices, Theorem 4(1) cannot answer this question directly, unless one perhaps looks under the hood of the (highly non-trivial) proof of decidability of non-negativity/positivity of simple LRSes. The main contribution of this section is a reduction that allows us to decide \(\mathsf {ENN_{SoM}}\) and \(\mathsf {EP_{SoM} }\) for diagonalizable matrices using a black-box decision procedure (i.e. without knowing operational details of the procedure or details of its proof of correctness) for the corresponding problem for simple real-algebraic matrices.

Before we proceed further, let us consider an example of a non-simple matrix (i.e. one with repeated eigenvalues) that is diagonalizable.

Specifically, matrix A in Fig. 2 has eigenvalues 2, 2 and \(-1\), and can be written as \(S D S^{-1}\), where D is the \(3\times 3\) diagonal matrix with \(D[1,1] = D[2,2] = 2\) and \(D[3,3] = -1\), and S is the \(3\times 3\) matrix with columns \({(-4, 1, 0)}^{\mathsf T}\), \({(2, 0, 1)}^{\mathsf T}\) and \({(-1, 1, 1)}^{\mathsf T}\).

Interestingly, the reduction technique we develop applies to properties much more general than \(\mathsf {ENN_{SoM}}{}\) and \(\mathsf {EP_{SoM} }{}\). Formally, given a sequence of matrices \(B_n\) defined by \(\sum _{i=1}^m w_i A_i^n\), we say that a property \(\mathcal {P}\) of the sequence is positive scaling invariant if it stays unchanged even if we scale all \(A_i\)s by the same positive real. Examples of such properties include \(\mathsf {ENN_{SoM}}{}\), \(\mathsf {EP_{SoM} }{}\), non-negativity and positivity of \(B_n\) (i.e. is \(B_n[i,j] \ge 0\) or \(< 0\), as the case may be, for all \(n\ge 1\) and for all \(1 \le i,j \le k\)), existence of zero (i.e. is \(B_n\) equal to the all 0-matrix for some \(n \ge 1\)), existence of a zero element (i.e. is \(B_n[i,j] = 0\) for some \(n \ge 1\) and some \(i,j \in \{1, \ldots k\})\), variants of the r-non-negativity (resp. r-positivity and r-zero) problem, i.e. does there exist at least/exactly/at most r non-negative (resp. positive/zero) elements in \(B_n\) for all \(n \ge 1\), for a given \(r \in [1,k]\)) etc. The main result of this section is a reduction for deciding such properties, formalized in the following theorem.

Theorem 5

The decision problem for every positive scaling invariant property on rational diagonalizable matrices effectively reduces to the decision problem for the property on real algebraic simple matrices.

While we defer the proof of this theorem to later in the section, an immediate consequence of Theorem 5 and Theorem 4(1) (read with the note at the end of Sect. 5.1) is the following result.

Corollary 2

\(\mathsf {ENN_{SoM}}\) and \(\mathsf {EP_{SoM} }\) are decidable for \(\mathfrak A= \{(w_1, A_1), \ldots (w_m, A_m)\}\) if all \(A_i\)s are rational diagonalizable matrices and all \(w_i\)s are rational.

It is important to note that Theorem 5 yields a decision procedure for checking any positive scaling invariant property of diagonalizable matrices from a corresponding decision procedure for real algebraic simple matrices without making any assumptions about the inner working of the latter decision procedure. Given any black-box decision procedure for checking any positive scaling property for a set of weighted simple matrices, our reduction tells us how a corresponding decision procedure for checking the same property for a set of weighted diagonalizable matrices can be constructed. Interestingly, since diagonalizable matrices have an exponential form solution with constant coefficients for exponential terms, we can use an algorithm that exploits this specific property of the exponential form (like Ouaknine and Worrell’s algorithm [31], originally proposed for checking ultimate positivity of simple LRS) to deal with diagonalizable matrices. However, our reduction technique is neither specific to this algorithm nor does it rely on any special property the exponential form of the solution.

The proof of Theorem 5 crucially relies on the notion of perturbation of diagonalizable matrices, which we introduce first. Let A be a \(k \times k\) real diagonalizable matrix. Then, there exists an invertible \(k \times k\) matrix S and a diagonal \(k \times k\) matrix D such that \(A = S D S^{-1}\), where S and D may have complex entries. It follows from basic linear algebra that for every \(i \in \{1, \ldots k\}\), D[i, i] is an eigenvalue of A and if \(\alpha \) is an eigenvalue of A with algebraic multiplicity \(\rho \), then \(\alpha \) appears exactly \(\rho \) times along the diagonal of D. Furthermore, for every \(i \in \{1, \ldots k\}\), the \(i^{th}\) column of S (resp. \(i^{th}\) row of \(S^{-1}\)) is an eigenvector of A (resp. of \({A}^{\mathsf T}\)) corresponding to the eigenvalue D[i, i], and the columns of S (resp. rows of \(S^{-1}\)) form a basis of the vector space \(\mathbb {C}^k\). Let \(\alpha _1, \ldots \alpha _m\) be the eigenvalues of A with algebraic multiplicities \(\rho _1, \ldots \rho _m\) respectively. Wlog, we assume that \(\rho _1 \ge \ldots \ge \rho _m\) and the diagonal of D is partitioned into segments as follows: the first \(\rho _1\) entries along the diagonal are \(\alpha _1\), the next \(\rho _2\) entries are \(\alpha _2\), and so on. We refer to these segments as the \(\alpha _1\)-segment, \(\alpha _2\)-segment and so on, of diagonal of D. Formally, if \(\kappa _i\) denotes \(\sum _{j=1}^{i-1} \rho _j\), the \(\alpha _i\)-segment of diagonal of D consists of entries \(D[\kappa _i+1, \kappa _i+1], \ldots D[\kappa _i+\rho _i, \kappa _i + \rho _i]\), all of which are \(\alpha _i\).

Since A is a real matrix, its characteristic polynomial has all real coefficients and for every eigenvalue \(\alpha \) of A (and hence of \({A}^{\mathsf T}\)), its complex conjugate, denoted \(\overline{\alpha }\), is also an eigenvalue of A (and hence of \({A}^{\mathsf T}\)) with the same algebraic multiplicity. This allows us to define a bijection \({h}_{{D}}\) from \(\{1, \ldots , k\}\) to \(\{1, \ldots k\}\) as follows. If D[i, i] is real, then \({h}_{{D}}(i) = i\). Otherwise, let \(D[i,i] = \alpha \in \mathbb {C}\) and let D[i, i] be the \(l^{th}\) element in the \(\alpha \)-segment of the diagonal of D. Then \({h}_{{D}}(i) = j\), where D[j, j] is the \(l^{th}\) element in the \(\overline{\alpha }\)-segment of the diagonal of D. The matrix A being real also implies that for every real eigenvalue \(\alpha \) of A (resp. of \({A}^{\mathsf T}\)), there exists a basis of real eigenvectors of the corresponding eigenspace. Additionally, for every non-real eigenvalue \(\alpha \) and for every set of eigenvectors of A (resp. of \({A}^{\mathsf T}\)) that forms a basis of the eigenspace corresponding to \(\alpha \), the component-wise complex conjugates of these basis vectors serve as eigenvectors of A (resp. of \({A}^{\mathsf T}\)) and form a basis of the eigenspace corresponding to \(\overline{\alpha }\).

Using the above notation, we choose matrix \(S^{-1}\) (and hence S) such that \(A = S D S^{-1}\) as follows. Suppose \(\alpha \) is an eigenvalue of A (and hence of \({A}^{\mathsf T}\)) with algebraic multiplicity \(\rho \). Let \(\{i+1, \ldots i +\rho \}\) be the set of indices j for which \(D[j, j] = \alpha \). If \(\alpha \) is real (resp. complex), the \(i+1^{st}, \ldots i+{\rho }^{th}\) rows of \(S^{-1}\) are chosen to be real (resp. complex) eigenvectors of \({A}^{\mathsf T}\) that form a basis of the eigenspace corresponding to \(\alpha \). Moreover, if \(\alpha \) is complex, the \({h}_{{D}}(i+s)^{th}\) row of \(S^{-1}\) is chosen to be the component-wise complex conjugate of the \(i+s^{th}\) row of \(S^{-1}\), for all \(s \in \{1, \ldots \rho \}\).

Definition 3

Let \(A = S D S^{-1}\) be a \(k\times k\) real diagonalizable matrix. We say that \(\mathcal {E}= (\varepsilon _1, \ldots \varepsilon _k) \in \mathbb {R}^k\) is a perturbation w.r.t. D if \(\varepsilon _i \ne 0\) and \(\varepsilon _i = \varepsilon _{{h}_{{D}}(i)}\) for all \(i \in \{1, \ldots k\}\). Further, the \(\mathcal {E}\)-perturbed variant of A is the matrix \(A' = S D' S^{-1}\), where \(D'\) is the \(k\times k\) diagonal matrix with \(D'[i,i] = \varepsilon _i D[i,i]\) for all \(i \in \{1, \ldots k\}\).

In the following, we omit "w.r.t. D" and simply say "\(\mathcal {E}\) is a perturbation", when D is clear from the context. Clearly, \(A'\) as defined above is a diagonalizable matrix and its eigenvalues are given by the diagonal elements of \(D'\).

Recall that the diagonal of D is partitioned into \(\alpha _i\)-segments, where each \(\alpha _i\) is an eigenvalue of \(A = SDS^{-1}\) with algebraic multiplicity \(\rho _i\). We now use a similar idea to segment a perturbation \(\mathcal {E}\) w.r.t. D. Specifically, the first \(\rho _1\) elements of \(\mathcal {E}\) constitute the \(\alpha _1\)-segment of \(\mathcal {E}\), the next \(\rho _2\) elements of \(\mathcal {E}\) constitute the \(\alpha _2\)-segment of \(\mathcal {E}\) and so on.

Definition 4

A perturbation \(\mathcal {E}= (\varepsilon _1, \ldots \varepsilon _k)\) is said to be segmented if the \(j^{th}\) element (whenever present) in every segment of \(\mathcal {E}\) has the same value, for all \(1\le j \le \rho _1\). Formally, if \(i = \sum _{s=1}^{l-1} \rho _s + j\) and \(1 \le j \le \rho _l \le \rho _1\), then \(\varepsilon _i = \varepsilon _j\).

Clearly, the first \(\rho _1\) elements of a segmented perturbation \(\mathcal {E}\) define the whole of \(\mathcal {E}\). As an example, suppose \((\alpha _1, \alpha _1, \alpha _1, \alpha _2, \alpha _2, \overline{\alpha _2}, \overline{\alpha _2}, \alpha _3)\) is the diagonal of D, where \(\alpha _1, \alpha _2, \overline{\alpha _2}\) and \(\alpha _3\) are distinct eigenvalues of A. There are four segments of the diagonal of D (and of \(\mathcal {E}\)) of lengths 3, 2, 2 and 1 respectively.

Example segmented perturbations in this case are \((\varepsilon _1, \varepsilon _2, \varepsilon _3, \varepsilon _1, \varepsilon _2, \varepsilon _1, \varepsilon _2, \varepsilon _1)\) and \((\varepsilon _3, \varepsilon _1,\) \(\varepsilon _2, \varepsilon _3, \varepsilon _1, \varepsilon _3, \varepsilon _1, \varepsilon _3)\). If \(\varepsilon _1 \ne \varepsilon _2\) or \(\varepsilon _2 \ne \varepsilon _3\), a perturbation that is not segmented is \(\widetilde{\mathcal {E}} = (\varepsilon _1, \varepsilon _2, \varepsilon _3, \varepsilon _2, \varepsilon _3, \varepsilon _2, \varepsilon _3, \varepsilon _1)\).

Definition 5

Given a segmented perturbation \(\mathcal {E}= (\varepsilon _1, \ldots \varepsilon _k)\) w.r.t. D, a rotation of \(\mathcal {E}\), denoted \(\tau _{D}(\mathcal {E})\), is the segmented perturbation \(\mathcal {E}' = (\varepsilon _1', \ldots \varepsilon _k')\) in which \(\varepsilon _{(i \mod \rho _1)+1}' = \varepsilon _i\) for \(i \in \{1, \ldots \rho _1\}\), and all other \(\varepsilon _i'\)s are as in Definition 4.

Continuing with our example, if \(\mathcal {E}= (\varepsilon _1, \varepsilon _2, \varepsilon _3, \varepsilon _1, \varepsilon _2, \varepsilon _1, \varepsilon _2, \varepsilon _1)\), then \(\tau _{D}(\mathcal {E}) =\) \((\varepsilon _3, \varepsilon _1, \varepsilon _2, \varepsilon _3, \varepsilon _1, \varepsilon _3, \varepsilon _1, \varepsilon _3)\), \(\tau _{D}^2(\mathcal {E})\) \(=\) \((\varepsilon _2, \varepsilon _3, \varepsilon _1,\) \(\varepsilon _2, \varepsilon _3, \varepsilon _2, \varepsilon _3, \varepsilon _2)\) and \(\tau _{D}^3(\mathcal {E}) = \mathcal {E}\).

Lemma 4

Let \(A = S D S^{-1}\) be a \(k\times k\) real diagonalizable matrix with eigenvalues \(\alpha _i\) of algebraic multiplicity \(\rho _i\). Let \(\mathcal {E}= (\varepsilon _1, \ldots \varepsilon _k)\) be a segmented perturbation w.r.t. D such that all \(\varepsilon _j\)s have the same sign, and let \(A_u\) denote the \(\tau _{D}^{u}(\mathcal {E})\)-perturbed variant of A for \(0 \le u < \rho _1\), where \(\tau ^0(\mathcal {E}) = \mathcal {E}\). Then \(A^n ~=~ \frac{1}{\big (\sum _{j=1}^{\rho _1} \varepsilon _j^n\big ) }\sum _{u=0}^{\rho _1-1} A_u^n\), for all \(n \ge 1\).

Proof

Let \(\mathcal {E}_u\) denote \(\tau _{D}^u(\mathcal {E})\) for \(0 \le u < \rho _1\), and let \(\mathcal {E}_u[i]\) denote the \(i^{th}\) element of \(\mathcal {E}_u\) for \(1 \le i \le k\). It follows from Definitions 4 and 5 that for each \(i, j \in \{1, \ldots \rho _1\}\), there is a unique \(u \in \{0, \ldots \rho _1-1\}\) such that \(\mathcal {E}_u[i] = \varepsilon _j\). Specifically, \(u = i-j\) if \(i \ge j\), and \(u = (\rho _1-j)+i\) if \(i < j\). Furthermore, Definition 4 ensures that the above property holds not only for \(i \in \{1, \ldots \rho _1\}\), but for all \(i \in \{1, \ldots k\}\).

Let \(D_u\) denote the diagonal matrix with \(D_u[i,i] = \mathcal {E}_u[i] D[i,i]\) for \(0 \le i < \rho _1\). Then \(D_u^n\) is the diagonal matrix with \(D_u^n[i,i] = \big (\mathcal {E}_u[i] D[i,i]\big )^n\) for all \(n \ge 1\). It follows from the definition of \(A_u\) that \(A_u^n = S~ D_u^n ~S^{-1}\) for \(0 \le u < \rho \) and \(n \ge 1\). Therefore, \(\sum _{u=0}^{\rho _1-1} A_u^n\) \(=\) \(S ~\big (\sum _{u=0}^{\rho _1-1} D_u^n\big )~S^{-1}\). Now, \(\sum _{u=0}^{\rho _1-1} D_u^n\) is a diagonal matrix whose \(i^{th}\) element along the diagonal is \(\sum _{u=0}^{\rho _1-1} \big (\mathcal {E}_u[i] D[i,i]\big )^n\) \(=\) \(\big (\sum _{u=0}^{\rho _1-1} \mathcal {E}_u^n[i]\big )~D^n[i,i]\). By virtue of the property mentioned in the previous paragraph, \(\sum _{u=0}^{\rho _1-1} \mathcal {E}_u^n[i] = \sum _{j=1}^{\rho _1} \varepsilon _j^n\) for \(1 \le i \le k\). Therefore, \(\sum _{u=0}^{\rho _1-1} D_u^n\) \(=\) \(\big (\sum _{j=1}^{\rho _1} \varepsilon _j^n\big ) ~D^n\), and hence, \(\sum _{u=0}^{\rho _1-1} A_u^n\) \(=\) \(\big (\sum _{j=1}^{\rho _1} \varepsilon _j^n\big )~ S~D^n~S^{-1}\) \(=\) \(\big (\sum _{j=1}^{\rho _1} \varepsilon _j^n\big )~ A^n\). Since all \(\varepsilon _j\)s have the same sign and are non-zero, \(\big (\sum _{j=1}^{\rho _1} \varepsilon _j^n\big )\) is non-zero for all \(n \ge 1\). It follows that \(A^n ~=~ \frac{1}{\big (\sum _{j=1}^{\rho _1} \varepsilon _j^n\big ) }\sum _{u=0}^{\rho _1-1} A_u^n\). \(\square \)

We are now in a position to present the proof of the main result of this section, i.e. of Theorem 5. Our proof uses a variation of the idea used in the proof of Lemma 4 above.

Proof of Theorem 5. Consider a set \(\{(w_1, A_1), \ldots (w_i, A_i)\}\) of (weight, matrix) pairs, where each matrix \(A_i\) is in \(\mathbb {Q}^{k \times k}\) and each \(w_i \in \mathbb {Q}\). Suppose further that each \(A_i = S_i D_i S_i^{-1}\), where \(D_i\) is a diagonal matrix with segments along the diagonal arranged in descending order of algebraic multiplicities of the corresponding eigenvalues. Let \(\nu _i\) be the number of distinct eigenvalues of \(A_i\), and let these eigenvalues be \(\alpha _{i,1}, \ldots \alpha _{i, \nu _i}\). Let \(\mu _i\) be the largest algebraic multiplicity among those of all eigenvalues of \(A_i\), and let \(\mu = lcm(\mu _1, \ldots \mu _m)\). We now choose positive rationals \(\varepsilon _1, \ldots \varepsilon _\mu \) such that (i) all \(\varepsilon _j\)s are distinct, and (ii) for every \(i \in \{1, \ldots m\}\), for every distinct \(j, l \in \{1, \ldots \nu _i\}\) and for every distinct \(p, q \in \{1, \ldots \mu \}\), we have \(\frac{\varepsilon _p}{\varepsilon _q} \ne |\frac{\alpha _{i,j}}{\alpha {i,l}} |\). Since \(\mathbb {Q}\) is a dense set, such a choice of \(\varepsilon _1, \ldots \varepsilon _\mu \) can always be made once all \(|\frac{\alpha _{i,j}}{\alpha _{i,l}}|\)s are known, even if within finite precision bounds.

For \(1 \le i \le m\), let \(\eta _i\) denote \(\mu /\mu _i\). We now define \(\eta _i\) distinct and segmented perturbations w.r.t. \(D_i\) as follows, and denote these as \(\mathcal {E}_{i,1}, \ldots \mathcal {E}_{i, \eta _i}\). For \(1 \le j \le \eta _i\), the first \(\mu _i\) elements (i.e. the first segment) of \(\mathcal {E}_{i,j}\) are \(\varepsilon _{(j-1)\mu _i + 1}, \ldots \varepsilon _{j\mu _i}\) (as chosen in the previous paragraph), and all other elements of \(\mathcal {E}_{i,j}\) are defined as in Definition 4. For each \(\mathcal {E}_{i,j}\) thus obtained, we also consider its rotations \(\tau _{D_i}^u(\mathcal {E}_{i,j})\) for \(0 \le u < \mu _i\). For \(1 \le j \le \eta _i\) and \(0 \le u < \mu _i\), let \(A_{i,j,u} = S_i ~D_{i,j,u}~S_i^{-1}\) denote the \(\tau _{D_i}^u(\mathcal {E}_{i,j})\)-perturbed variant of \(A_i\). It follows from Definition 3 that if we consider the set of diagonal matrices \(\{D_{i,j,u} \mid 1 \le j \le \eta _i\), \(0 \le u < \mu _i\}\), then for every \(p \in \{1, \ldots k\}\) and for every \(q \in \{1, \ldots \mu \}\), there is a unique u and j such that \(D_{i,j,u}[p,p] = \varepsilon _q\). Specifically, \(j = \lfloor q/\mu _i\rfloor \). To find u, let \(\mathcal {E}_{i,j}[p]\) be the \(\widehat{p}^{th}\) element in a segment of \(\mathcal {E}_{i,j}\), where \(1 \le \widehat{p} \le \mu _i\), and let \(\widehat{q}\) be \(q\mod \mu _i\). Then, \(u = (\widehat{p} - \widehat{q})\) if \(\widehat{p} \ge \widehat{q}\) and \(u = (\mu _i - \widehat{q}) + \widehat{p}\) otherwise. By our choice of \(\varepsilon _t\)s, we also know that for all \(i \in \{1, \ldots m\}\), for all \(j, l \in \{1, \ldots \nu _i\}\) and for all \(p, q \in \{1, \ldots \mu \}\), we have \(\varepsilon _p \alpha _{i,l} \ne \varepsilon _q \alpha _{i,j}\) unless \(p=q\) and \(j=l\). This ensures that all \(D_{i,j,u}\) matrices, and hence all \(A_{i, j, u}\)s matrices, are simple, i.e. have distinct eigenvalues.

Using the reasoning in Lemma 4, we can now show that \(A_i^n = \frac{1}{\big (\sum _{j=1}^\mu \varepsilon _j^n\big )}\times \big (\sum _{j=1}^{\eta _i} \sum _{u=0}^{\mu _i-1} A_{i,j,u}^n\big )\) and so, \(\sum _{i=1}^m w_i A_i^n\) \(=\) \(\frac{1}{\big (\sum _{j=1}^\mu \varepsilon _j^n\big )}\times \big (\sum _{i=1}^m\sum _{j=1}^{\eta _i} \sum _{u=0}^{\mu _i-1} w_i A_{i,j,u}^n\big )\). Since all \(\varepsilon _j\)s are positive reals, \(\sum _{j=1}^\mu \varepsilon _j^n\) is a positive real for all \(n \ge 1\).

Hence, for each \(p, q \in \{1, \ldots k\}\), \(\sum _{i=1}^m w_i A_i^n[p,q]\) is \(> 0\), \(< 0\) or \(= 0\) if and only if \(\big (\sum _{i=1}^m\sum _{j=1}^{\eta _i}\sum _{u=0}^{\mu _i-1} w_i A_{i,j,u}^n[p,q]\big )\) is \(> 0\), \(< 0\) or \(= 0\), respectively. The only remaining helper result that is now needed to complete the proof of the theorem is that each \(A_{i,j,u}\) is a real algebraic matrix. This is shown in Lemma 5, presented at the end of this section to minimally disturb the flow of arguments.

\(\square \)

The reduction in proof of Theorem 5 can be easily encoded as an algorithm, as shown in Algorithm 1.

Further, in addition to Corollary 2, there are other consequences of our reduction. One such result (with proof in [3]) is below.

Corollary 3

Given \(\mathfrak A= \{(w_1, A_1), \ldots (w_m, A_m)\}\), where each \(w_i \in \mathbb {Q}\) and \(A_i \in \mathbb {Q}^{k\times k}\) is diagonalizable, and a real value \(\varepsilon > 0\), there exists \(\mathfrak B= \{(v_1, B_1),\) \(\ldots (v_M, B_M)\}\), where each \(v_i \in \mathbb {Q}\) and each \(B_i \in \mathbb {R}\mathbb {A}^{k\times k}\) is simple, such that

\( \left| \sum _{i=0}^m w_i A_i^n[p,q] - \sum _{j=0}^{M} v_j B_j^n[p,q]\right| < \varepsilon ^n\) for all \(p, q \in \{1, \ldots k\}\) and all \(n \ge 1\).

We end this section with the promised helper result used at the end of the proof of Theorem 5.

Lemma 5

For every real (resp. real algebraic) diagonalizable matrix \(A = S D S^{-1}\) and perturbation \(\mathcal {E}~\in \mathbb {R}^k\) (resp. \(\mathbb {R}\mathbb {A}^k\)), the \(\mathcal {E}\)-perturbed variant of A is a real (resp. real algebraic) diagonalizable matrix.

Proof

We first consider the case of \(A \in \mathbb {R}^{k\times k}\) and \(\mathcal {E}\in \mathbb {R}^k\). Given a perturbation \(\mathcal {E}\) w.r.t. D, we first define k simple perturbations \(\mathcal {E}_i ~(1 \le i \le k)\) w.r.t. D as follows: \(\mathcal {E}_i\) has all its components set to 1, except for the \(i^{th}\) component, which is set to \(\varepsilon _i\). Furthermore, if D[i, i] is not real, then the \({h}_{{D}}(i)^{th}\) component of \(\mathcal {E}_i\) is also set to \(\varepsilon _i\). It is easy to see from Definition 3 that each \(\mathcal {E}_i\) is a perturbation w.r.t. D. Moreover, if \(j = {h}_{{D}}(i)\), then \(\mathcal {E}_j = \mathcal {E}_i\).

Let \(\widehat{\mathcal {E}} = \{\mathcal {E}_{i_1}, \ldots \mathcal {E}_{i_u}\}\) be the set of all unique perturbations w.r.t D among \(\mathcal {E}_1, \ldots \mathcal {E}_k\). It follows once again from Definition 3 that the \(\mathcal {E}\)-perturbed variant of A can be obtained by a sequence of \(\mathcal {E}_{i_j}\)-perturbations, where \(\mathcal {E}_{i_j} \in \widehat{\mathcal {E}}\). Specifically, let \(A_{0,\widehat{\mathcal {E}}} = A\) and \(A_{v, \widehat{\mathcal {E}}}\) be the \(\mathcal {E}_{i_v}\)-perturbed variant of \(A_{v-1, \widehat{\mathcal {E}}}\) for all \(v \in \{1, \ldots u\}\). Then, the \(\mathcal {E}\)-perturbed variant of A is identical to \(A_{u, \widehat{\mathcal {E}}}\). This shows that it suffices to prove the lemma only for simple perturbations \(\mathcal {E}_i\), as defined above. We focus on this special case below.

Let \(A' = S D' S^{-1}\) be the \(\mathcal {E}_i\)-perturbed variant of A, and let \(D[i,i] = \alpha \). For every \(p \in \{1, \ldots k\}\), let \(\mathbf {e_p}\) denote the p-dimensional unit vector whose \(p^{th}\) component is 1. Then, \(A'\mathbf {e_p}\) gives the \(p^{th}\) column of \(A'\). We prove the first part of the lemma by showing that \(A'~\mathbf {e_p} = (S ~D~' S^{-1})~ \mathbf {e_p} \in \mathbb {R}^{k\times 1}\) for all \(p \in \{1, \ldots k\}\).

Let \(\mathbf {T}\) denote \(D' ~S^{-1}~ \mathbf {e_p}\). Then \(\mathbf {T}\) is a column vector with \(\mathbf {T}[r] = D'[r,r] ~S^{-1}[r,p]\) for all \(r \in \{1, \ldots k\}\). Let \(\mathbf {U}\) denote \(S \mathbf {T}\). By definition, \(\mathbf {U}\) is the \(p^{th}\) column of the matrix \(A'\). To compute \(\mathbf {U}\), recall that the rows of \(S^{-1}\) form a basis of \(\mathbb {C}^k\). Therefore, for every \(q \in \{1, \ldots k\}\), \(S^{-1}~\mathbf {e_q}\) can be viewed as transforming the basis of the unit vector \(\mathbf {e_q}\) to that given by the rows of \(S^{-1}\) (modulo possible scaling by real scalars denoting the lengths of the row vectors of \(S^{-1}\)). Similarly, computation of \(\mathbf {U} = S \mathbf {T}\) can be viewed as applying the inverse basis transformation to \(\mathbf {T}\). It follows that the components of \(\mathbf {U}\) can be obtained by computing the dot product of \(\mathbf {T}\) and the transformed unit vector \(S^{-1}~\mathbf {e_q}\), for each \(q \in \{1, \ldots k\}\). In other words, \(\mathbf {U}[q] = \mathbf {T}\cdot (S^{-1}~\mathbf {e_q})\). We show below that each such \(\mathbf {U}[q]\) is real.

By definition, \(\mathbf {U}[q] = \sum _{r=1}^k (\mathbf {T}[r]~S^{-1}[r,q])\) \(= \sum _{r=1}^k (D'[r,r] ~S^{-1}[r,p]~S^{-1}[r,q])\). We consider two cases below.

-

If \(D[i,i] = \alpha \) is real, recalling the definition of \(D'\), the expression for \(\mathbf {U}[q]\) simplifies to \(\sum _{r=1}^k (D[r,r] ~S^{-1}[r,p] ~S^{-1}[r,q])\) \(+\) \((\varepsilon _i - 1)~ \alpha ~S^{-1}[i,p]~ S^{-1}[i,q]\). Note that \(\sum _{r=1}^k (D[r,r] ~S^{-1}[r,p] ~S^{-1}[r,q])\) is the \(q^{th}\) component of the vector \((S D S^{-1}) ~\mathbf {e_p} = A ~\mathbf {e_p}\). Since A is real, so must be the \(q^{th}\) component of \(A ~\mathbf {e_p}\). Moreover, since \(\alpha \) is real, by our choice of \(S^{-1}\), both \(S^{-1}[i,p]\) and \(S^{-1}[i,q]\) are real. Since \(\varepsilon _i\) is also real, it follows that \((\varepsilon _i - 1)~\alpha ~S^{-1}[i,p] ~S^{-1}[i,q]\) is real. Hence \(\mathbf {U}[q]\) is real for all \(q \in \{1, \ldots k\}\).

-

If \(D[i,i] = \alpha \) is not real, from Definition 3, we know that \(D'[i,i] = \varepsilon _i~\alpha \) and \(D'[{h}_{{D}}(i),{h}_{{D}}(i)] = \varepsilon _i~\overline{\alpha }\). The expression for \(\mathbf {U}[q]\) then simplifies to \(\sum _{r=1}^k \big (D[r,r] ~S^{-1}[r,p] ~S^{-1}[r,q]\big )\) \(+\) \((\varepsilon _i - 1) ~(\beta + \gamma )\), where \(\beta = \alpha ~S^{-1}[i,p] ~S^{-1}[i,q]\) and \(\gamma = \overline{\alpha }~ S^{-1}[{h}_{{D}}(i),p]~ S^{-1}[{h}_{{D}}(i),q]\). By our choice of \(S^{-1}\), we know that \(S^{-1}[{h}_{{D}}(i),p] = \overline{S^{-1}[i,p]}\) and \(S^{-1}[{h}_{{D}}(i),q] = \overline{S^{-1}[i,q]}\). Therefore, \(\beta = \overline{\gamma }\) and hence \((\varepsilon _i - 1)~ (\beta + \gamma )\) is real. By a similar argument as in the previous case, it follows that \(\mathbf {U}[q]\) is real for all \(q \in \{1, \ldots k\}\).

The proof when \(A \in \mathbb {R}\mathbb {A}^{k \times k}\) and \(\mathcal {E}\in \mathbb {Q}^k\) follows from a similar reasoning as above, and from the following facts about real algebraic matrices.

-

If A is a real algebraic matrix, then every eigenvalue of A is either a real or complex algebraic number.

-

If A is diagonalizable, then for every real (resp. complex) algebraic eigenvalue of A, there exists a set of real (resp. complex) algebraic eigenvectors that form a basis of the corresponding eigenspace. \(\square \)

6 Conclusion

In this paper, we investigated eventual non-negativity and positivity for matrices and the weighted sum of powers of matrices (\(\mathsf {ENN_{SoM}}\)/\(\mathsf {EP_{SoM} }\)). First, we showed reductions from and to specific problems on linear recurrences, which allowed us give complexity lower and upper bounds. Second, we developed a new and generic perturbation-based reduction technique from simple matrices to diagonalizable matrices, which allowed us to transfer results between these settings.

Most of our results, that we showed in the rational setting, hold even with real-algebraic matrices by adapting the complexity notions and depending on corresponding results for ultimate positivity for linear recurrences and related problems over reals. As future work, we would like to extend our techniques for other problems of interest like the existence of a matrix power where all entries are non-negative or zero. Finally, the line of work started here could lead to effective algorithms and applications in varied areas ranging from control theory systems to cyber-physical systems, where eventual properties of matrices play a crucial role.

Notes

- 1.

Ultimately non-negative (resp. ultimately positive) LRS, as defined by us, have also been called ultimately positive (resp. strictly positive) LRS elsewhere in the literature [31]. However, we choose to use terminology that is consistent across matrices and LRS, to avoid notational confusion.

References

Akshay, S., Antonopoulos, T., Ouaknine, J., Worrell, J.: Reachability problems for Markov chains. Inf. Process. Lett. 115(2), 155–158 (2015)

Akshay, S., Balaji, N., Murhekar, A., Varma, R., Vyas, N.: Near optimal complexity bounds for fragments of the Skolem problem. In: Paul, S., Bläser, M. (eds.) 37th International Symposium on Theoretical Aspects of Computer Science, STACS 2020, 10–13 March 2020, Montpellier, France, volume 154 of LIPIcs, pp. 37:1–37:18. Schloss Dagstuhl - Leibniz-Zentrum für Informatik (2020)

Akshay, S., Chakraborty, S., Pal, D.: On eventual non-negativity and positivity for the weighted sum of powers of matrices. arXiv preprint arXiv:2205.09190 (2022)

Akshay, S., Genest, B., Karelovic, B., Vyas, N.: On regularity of unary probabilistic automata. In: 33rd Symposium on Theoretical Aspects of Computer Science, STACS 2016, 17–20 February 2016, Orléans, France, volume 47 of LIPIcs, pp. 8:1–8:14. Schloss Dagstuhl - Leibniz-Zentrum für Informatik (2016)

S. Akshay, Blaise Genest, and Nikhil Vyas. Distribution based objectives for Markov decision processes. In: 33rd Symposium on Logic in Computer Science (LICS 2018), vol. IEEE, pp. 36–45 (2018)

Almagor, S., Boker, U., Kupferman, O.: What’s decidable about weighted automata? Inf. Comput. 282, 104651 (2020)

Almagor, S., Karimov, T., Kelmendi, E., Ouaknine, J., Worrell, J.: Deciding \(\omega \)-regular properties on linear recurrence sequences. Proc. ACM Program. Lang. 5(POPL), 1–24 (2021)

Barloy, C., Fijalkow, N., Lhote, N., Mazowiecki, F.: A robust class of linear recurrence sequences. In: Fernández, M., Muscholl, A. (eds.) 28th EACSL Annual Conference on Computer Science Logic, CSL 2020, 13–16 January 2020, Barcelona, Spain, volume 152 of LIPIcs, pp. 9:1–9:16. Schloss Dagstuhl - Leibniz-Zentrum für Informatik (2020)

Bell, P.C., Hirvensalo, M., Potapov, I.: Mortality for 2 \(\times \) 2 matrices is NP-hard. In: Rovan, B., Sassone, V., Widmayer, P. (eds.) MFCS 2012. LNCS, vol. 7464, pp. 148–159. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-32589-2_16

Bell, P.C., Potapov, I., Semukhin, P.: On the mortality problem: from multiplicative matrix equations to linear recurrence sequences and beyond. Inf. Comput. 281, 104736 (2021)

Bell, P.C., Semukhin, P.: Decision questions for probabilistic automata on small alphabets. arXiv preprint arXiv:2105.10293 (2021)

Blondel, V.D., Canterini, V.: Undecidable problems for probabilistic automata of fixed dimension. Theory Comput. Syst. 36(3), 231–245 (2003). https://doi.org/10.1007/s00224-003-1061-2

Naqvi, S.C., McDonald, J.J.: Eventually nonnegative matrices are similar to seminonnegative matrices. Linear Algebra Appl. 381, 245–258 (2004)

Everest, G., van der Poorten, A., Shparlinski, I., Ward, T.: Recurrence Sequences. Mathematical Surveys and Monographs, American Mathematical Society, United States (2003)

Fijalkow, N., Ouaknine, J., Pouly, A., Sousa-Pinto, J., Worrell, J.: On the decidability of reachability in linear time-invariant systems. In: Ozay, N., Prabhakar, P. (eds.) Proceedings of the 22nd ACM International Conference on Hybrid Systems: Computation and Control, HSCC 2019, Montreal, QC, Canada, 16–18 April 2019, pages 77–86. ACM (2019)

Friedland, S.: On an inverse problem for nonnegative and eventually nonnegative matrices. Isr. J. Math. 29(1), 43–60 (1978). https://doi.org/10.1007/BF02760401

Gimbert, H., Oualhadj, Y.: Probabilistic automata on finite words: decidable and undecidable problems. In: Abramsky, S., Gavoille, C., Kirchner, C., Meyer auf der Heide, F., Spirakis, P.G. (eds.) ICALP 2010. LNCS, vol. 6199, pp. 527–538. Springer, . Probabilistic automata on finite words: Decidable and undecidable problems (2010). https://doi.org/10.1007/978-3-642-14162-1_44

Halava, V., Harju, T., Hirvensalo, M.: Positivity of second order linear recurrent sequences. Discrete Appl. Math. 154(3), 447–451 (2006)

Halava, V., Harju, T., Hirvensalo, M., Karhumäki, J.: Skolem’s Problem-on the Border Between Decidability and Undecidability. Technical report, Citeseer (2005)

Karimov, T., et al.: What’s decidable about linear loops? Proc. ACM Program. Lang. 6(POPL), 1–25 (2022)

Korthikanti, V.A., Viswanathan, M., Agha, G., Kwon, Y.: Reasoning about MDPs as transformers of probability distributions. In: QEST 2010, Seventh International Conference on the Quantitative Evaluation of Systems, Williamsburg, Virginia, USA, 15–18 September 2010, pp. 199–208. IEEE Computer Society (2010)

Lale, S., Azizzadenesheli, K., Hassibi, B., Anandkumar, A.: Logarithmic regret bound in partially observable linear dynamical systems. Adv. Neural Inf. Process. Syst. 33, 20876–20888 (2020)

Lebacque, J.P., Ma, T.Y., Khoshyaran, M.M.: The cross-entropy field for multi-modal dynamic assignment. In: Proceedings of Traffic and Granular Flow 2009 (2009)

MacCluer, C.R.: The many proofs and applications of Perron’s theorem. Siam Rev. 42(3), 487–498 (2000)

Noutsos, D.: On Perron-Frobenius property of matrices having some negative entries. Linear Algebra Appl. 412(2), 132–153 (2006)

Noutsos, D., Tsatsomeros, M.J.: Reachability and holdability of nonnegative states. SIAM J. Matrix Anal. Appl. 30(2), 700–712 (2008)

Ouaknine, J.: Decision problems for linear recurrence sequences. In: Gąsieniec, L., Wolter, F. (eds.) FCT 2013. LNCS, vol. 8070, pp. 2–2. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-40164-0_2

Ouaknine, J., Pinto, J.S., Worrell, J.: On termination of integer linear loops. In: Indyk, R. (ed.) Proceedings of the Twenty-Sixth Annual ACM-SIAM Symposium on Discrete Algorithms, SODA 2015, San Diego, CA, USA, 4–6 January 2015, pp. 957–969. SIAM (2015)

Ouaknine, J., Worrell, J.: Decision problems for linear recurrence sequences. In: Finkel, A., Leroux, J., Potapov, I. (eds.) RP 2012. LNCS, vol. 7550, pp. 21–28. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-33512-9_3

Ouaknine, J., Worrell, J.: Positivity problems for low-order linear recurrence sequences. In: Chekuri, C. (ed.) Proceedings of the Twenty-Fifth Annual ACM-SIAM Symposium on Discrete Algorithms, SODA 2014, Portland, Oregon, USA, 5–7 January 2014, pp. 366–379. SIAM (2014)

Ouaknine, J., Worrell, J.: Ultimate positivity is decidable for simple linear recurrence sequences. In: Esparza, J., Fraigniaud, P., Husfeldt, T., Koutsoupias, E. (eds.) ICALP 2014. LNCS, vol. 8573, pp. 330–341. Springer, Heidelberg (2014). https://doi.org/10.1007/978-3-662-43951-7_28

Ouaknine, J., Worrell, J.: On linear recurrence sequences and loop termination. ACM Siglog News 2(2), 4–13 (2015)

Pan, V.Y., Chen, Z.Q.: The complexity of the matrix eigenproblem. In: Proceedings of the Thirty-First Annual ACM Symposium on Theory of Computing, STOC 2099, pp. 507–516, New York, NY, USA. Association for Computing Machinery (1999)

Rump, S.M.: Perron-Frobenius theory for complex matrices. Linear Algebra Appl. 363, 251–273 (2003)

Akshay, S., Balaji, N., Vyas, N.: Complexity of Restricted Variants of Skolem and Related Problems. In Larsen, K.G., Bodlaender, H.L., Raskin, J.F. (eds.) 42nd International Symposium on Mathematical Foundations of Computer Science (MFCS 2017), volume 83 of Leibniz International Proceedings in Informatics (LIPIcs), pp. 78:1–78:14, Dagstuhl, Germany. Schloss Dagstuhl-Leibniz-Zentrum fuer Informatik (2017)

Tiwari, A.: Termination of linear programs. In: Alur, R., Peled, D.A. (eds.) CAV 2004. LNCS, vol. 3114, pp. 70–82. Springer, Heidelberg (2004). https://doi.org/10.1007/978-3-540-27813-9_6

Zaslavsky, B.G.: Eventually nonnegative realization of difference control systems. Dyn. Syst. Relat. Top. Adv. Ser. Dynam. Syst. 9, 573–602 (1991)

Zaslavsky, B.G., McDonald, J.J.: Characterization of Jordan canonical forms which are similar to eventually nonnegative matrices with the properties of nonnegative matrices. Linear Algebra Appl. 372, 253–285 (2003)

Zhang, A., et al.: Learning causal state representations of partially observable environments. arXiv preprint arXiv:1906.10437 (2019)

Author information

Authors and Affiliations

Corresponding authors

Editor information

Editors and Affiliations

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this paper

Cite this paper

Akshay, S., Chakraborty, S., Pal, D. (2022). On Eventual Non-negativity and Positivity for the Weighted Sum of Powers of Matrices. In: Blanchette, J., Kovács, L., Pattinson, D. (eds) Automated Reasoning. IJCAR 2022. Lecture Notes in Computer Science(), vol 13385. Springer, Cham. https://doi.org/10.1007/978-3-031-10769-6_39

Download citation

DOI: https://doi.org/10.1007/978-3-031-10769-6_39

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-10768-9

Online ISBN: 978-3-031-10769-6

eBook Packages: Computer ScienceComputer Science (R0)