Abstract

In this chapter, the authors outline the characteristics of the contemporary information ecosystem and highlight the particular challenges that make the problem of misinformation even more difficult to solve: the asynchronous nature of the information environment; the difficulties of researching the information environment because of the limited data made available by the platforms; and the fact that disinformation flows across borders while responses are organised by nation state.

Keywords

- Information ecosystem

- Misinformation

- Disinformation

- Malinformation

- Information disorder

- Internet platforms

- Information environment

4.1 Introduction

By early 2019, social media platforms had started to make some tentative changes to their content moderation policies around vaccine-related health misinformation in response to the measles outbreaks in the USA (DiResta and Wardle 2019). However, the COVID-19 pandemic created an unprecedented situation where stronger action was required. As a result, many of the platforms instituted a range of new policy changes designed to mitigate the impact of COVID-19-related misinformation. Although these policy changes have resulted in key anti-vaccine misinformation accounts being de-platformed, as well as egregious falsehoods being labelled or removed, health misinformation remains a problem on all platforms (Krishnan et al. 2021).

Health misinformation is not only a platform issue. The past two years have demonstrated the impact of low-quality research, as well as the spreading of conspiracy theories by political elites, particularly when these are amplified through newspapers, television networks, and radio stations. In parallel, health misinformation continues to proliferate through conversations around the dinner table and at the school gate. In this chapter, we will focus on explaining the complexity of the current information environment and the challenges that have been exposed during the COVID-19 global public health crisis for those working to mitigate the impact of the infodemic.

4.2 The Information Environment

The information environment is a term used frequently to describe the infodemic but with no clear or agreed definition. There are a number of characteristics within the concept, however, that are critical to an understanding of the current crisis. Firstly, information is transferred through communication, which can be understood through answering five questions: Who? Says what? In which channel? To whom? With what effect? (Lasswell 1948). Certainly, the final question is very difficult to measure (as we discuss below) and, as a result, sweeping generalisations are too often made about the impact of different messages. In order to capture these five questions, Neil Postman (1970) used the metaphor of a media ecology, focusing on understanding the relationship between people and their communications technologies through the study of media structures, content, and impact. More recently, Luciano Floridi (2010) attempted to emphasise the ways in which the information environment constitutes ‘all informational processes, services, and entities, thus including informational agents as well as their properties, interactions, and mutual relations’ (p. 9, emphasis in original).

Terminology around the problem of misinformation has also multiplied and two major analogies are frequently used: that of information warfare (Schwartau 1993), using militarised language and metaphors; and that of information pollution (Phillips and Milner 2021). Wardle and Derakhshan (2017) coined the term ‘information disorder’ as a way of capturing the different characteristics of the current information environment, with an emphasis on types, elements, and phases.

The modern information environment is complex. In 2022, 62.5% of the world’s 7.9 billion people are reported to be internet users and 58.4% of the world population are reported to be using social media (We Are Social 2022). Media outlets are increasingly using paywalls for their business models, and artificial intelligence and advertising to grow their audience and attract traffic to their content (Reuters Institute 2022). Audiences are also increasingly using closed messaging apps to consume and share news. In Brazil alone, 38% of the population uses WhatsApp to share news (Kalogeropoulos 2021).

The COVID-19 pandemic struck in this complex information environment and, as a result, created an infodemic (WHO 2020). With the uncertainty that came with the pandemic, alongside the increasing public demand for information, conspiracy theories and misinformation found fertile ground in which to flourish. One data point for the scale of misinformation is the work done by fact-checkers during this period. The Coronavirus Facts Alliance, coordinated by the International Fact-Checking Network, produced more than 16,000 fact-checks in over 40 languages, covering more than 86 countries since the onset of the pandemic (Poynter 2022).

The real-world harm of these rumours and falsehoods quickly became clear when claims started to lead to property damage, serious injury, and loss of life. In Iran, misinformation directly led to the death of a number of people who drank toxic methanol thinking it would protect them from COVID-19 (Al Jazeera 2020). In Nigeria, the USA, and a number of countries in South America, cases of chloroquine poisoning were reported, linked to the statement by former US president Donald Trump that it could treat COVID-19 (Busari and Adebayo 2020). In the UK, the Republic of Ireland, and the Netherlands, numerous 5G towers were torched because vigilantes believed they were spreading the coronavirus (AP News 2020).

It is important to remember that misinformation has impeded public health responses in the past. For example, in 2003, there was a boycott of the polio vaccine in five northern Nigerian states because it was perceived by some religious leaders to be a plot to sterilise Muslim children (Ghinai et al. 2013). That action led to one of the worst polio outbreaks on the continent and set back wild polio eradication in Africa by nearly two decades. The information environment back then, however, was different from the one we are living in today. Information now travels far faster, and its real-life impact is much more acute.

4.3 Challenges Posed by the Modern Information Ecosystem

The networked information ecosystem provides innumerable benefits, most notably giving previously unheard voices a platform and a mechanism to connect (Shirky 2008). However, as has been witnessed over the past few years, this is also leading to a number of serious unintended consequences, particularly in terms of false or misleading information resulting in confusion and dangerous behaviours (Office of the Surgeon General 2021). Over the past few years, it has become increasingly clear that there is no quick fix.

As we consider the long-term work necessary for understanding and responding to these consequences, we face a number of significant challenges, with three in particular that require consideration within the specific infodemic context: (1) the asynchronous nature of information environments; (2) the difficulties associated with researching these issues due to the complexity of the information environment; and finally (3) the fact that disinformation flows across borders seamlessly, whereas responses are too often organised by nation states.

4.3.1 Asynchronous Nature of Information Environments

The pre-internet design of official communications was top-down, linear, and hierarchical. Limited numbers of news outlets played an inflated role in shaping the way people understood the world. It was designed so that a few trusted messengers – spokespeople, politicians, and news anchors – had the authority to disseminate messages to audiences. While communication theorists in the 1970s (Morley 1974) and 1980s (Hall 1980; Hartley 1987; Katz 1980) challenged the idea that this was a purely passive relationship, emphasising that audiences were active and able to read texts in an oppositional way, the restricted number of outlets, channels, and spaces where people could access information significantly limited the amount of information conveyed and, in almost all cases, guaranteed that only accurate information was being shared.

The advent of the internet transformed this status quo, allowing audiences to become active participants in the creation and dissemination of information. Critically, however, those in official positions today still rely heavily on the traditional model of communication, thinking of the internet as a way to distribute messages more quickly, and to more people, rather than as an opportunity to truly take advantage of the participatory nature of the technology. So, while a news outlet or health authority will use Facebook, Twitter, or Instagram to reach audiences, its use is too often restricted to that of a simple ‘broadcast’ mechanism (Dotto et al. 2020).

In contrast, disinformation actors fundamentally understand the mechanics of the internet and the characteristics that make people feel part of something (Starbird et al. 2021). The most effective disinformation actors have understood that community is at the heart of effective communications. Therefore, they have spent time cultivating communities, often by infiltrating existing ones (Dodson et al. 2021), and creating content designed to appeal to people’s emotions (Freelon and Lokot 2020). They also provide opportunities for people to manifest their identification within that community by creating authentic content and messaging. Through that process, they become trusted messengers to recruit and build up the community further. The result is engaging, authentic, dynamic communication spaces, where people feel heard and experience a sense of agency.

Comparing official information environments with communities where disinformation flourishes provides a stark contrast. Official environments are ostensibly more traditional in the sense of being built on facts, science and reason, and rely heavily on text. They are also often structured top-down and rely on people continuing to trust official messengers. Disinformation communities, by contrast, are built on community, emotion, anecdotes and personal stories, and tend to be far more visual and aural. The characteristics of these spaces align perfectly with the ways in which communities connect offline. They also align closely with the design of social platforms where algorithms privilege emotion and engagement (Schreiner et al. 2021).

Perhaps what is most critical to recognise here is that disinformation actors continue to find vulnerabilities in the traditional information environment. They are also aware that official messengers have less understanding of the dynamics of a networked environment and, unfortunately, still prepare as if it were 1992 rather than 2022. For example, disinformation actors will search for statistics or headlines that can be shared without context to tell a worrying or dangerous story, knowing that while the material is accurate within its full context, when there is only a visual or headline (which is often all that is shown on social platforms), it will be the misleading impression that takes hold (Yin et al. 2018).

Disinformation actors instigate dialogues in order to create opportunities to advance their opinions; for example, they pose a simple question on Facebook, such as asking whether people are concerned about vaccines impacting their fertility, and then use the comments to push bogus or misleading research that can lead people to reach false conclusions (DiResta 2021). Alternatively, disinformation actors can target journalists by pretending to be trusted sources while pushing false anecdotes or content in the hope that it will be covered by an outlet with a larger audience than that which they personally have access to (McFarland and Somerville 2020).

4.3.2 Difficulties of Researching the Information Environment

As already stated in Sect. 4.2, the information environment today is incredibly complex. Those studying media effects have continued to struggle with the challenges of measuring audience consumption of different media products (Allen 1981). While there have been ways of measuring television and radio exposure, understanding levels of engagement has always been problematic. For example, if someone has the television news on in the background all day, does it have the same impact as someone sitting down to watch their favourite hour-long soap opera in the evening? More challenging, of course, is an understanding of the intersection between traditional media content and offline conversations with peers. In the 1940s and 1950s, Paul Lazarsfeld and Elihu Katz (Lazarsfeld et al. 1944; Katz and Lazarsfeld 1955) described a two-step flow theory, which held that ideas were rarely transmitted directly to audiences and that, instead, people were persuaded when those same ideas were passed through opinion leaders.

The problems emphasised by communication scholars for decades are now complicated further by the intersection between off-line communications and professional broadcast media with online spaces, whether they are websites accessed via search engines, posts on social networks or closed groups on Facebook, or messaging apps such as WhatsApp, Telegram, or WeChat (de Vreese and Neijens 2016).

Globally, people are spending, on average, 170 minutes online every day, with an additional 145 minutes on social media (Statista 2021). For the majority, this time is being spent on smartphones rather than desktops. In addition, everyone’s daily diet of online activity and consumption is different. No two people’s search histories, newsfeeds, or chat histories look the same. As such, there is no effective method for collecting an accurate picture of what people are consuming, and from where, which makes measuring the direct impact of messages a seemingly impossible task.

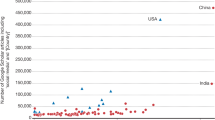

Researchers are doing their best to unpick these dynamics, but they face serious challenges. It is incredibly difficult to access data from social media platforms. The one exception is Twitter, where the platform either releases particular datasets or researchers are able to access the ‘firehose’ of tweets relatively easily (Tornes 2021). As a result, the vast majority of research on misinformation focuses on Twitter. While better than nothing, Twitter is, however, not the most popular platform and is rarely used in many countries (Mejova et al. 2015).

While it is possible to conduct some research with Facebook and Instagram data, it is limited by what is available via CrowdTangle, a tool owned by Facebook. However, researchers and journalists attempting to use the tool have documented a number of limitations. YouTube research is also possible, but again not easy. Those who have studied the platform have focused more on the impact of the algorithm on search results.

In many parts of the world, the most popular digital platform is WhatsApp (Statista 2022). However, the encrypted nature of the platform means research is seriously limited and reliant on tiplines or joining groups, both of which have significant limitations in terms of sampling. More importantly, the absence of engagement data means it is impossible to see how many people have viewed a particular post.

Much work has been done in terms of attempting to pressure platforms into sharing data (EDMO 2022). Certainly, there are very significant issues around privacy that have to be addressed. The ability to identify someone via the information they search for or consume is disturbingly easy. As such, platforms have pushed back on ethical grounds with regard to sharing data without the required protections in place. Social Science One, a project in partnership with Facebook, is one example of a comprehensive and sophisticated attempt at providing necessary protections. However, although the data was shared after a complex de-identification platform was built, problems with the data were revealed in 2021 that undermined the whole exercise (Timberg 2021).

There have also been interesting attempts at citizen science approaches to studying the platforms. For example, ProPublica and The Markup, two US-based non-profit newsrooms, built browser extensions, the ‘Political Ad Collector’ (Merrill 2018) and the Citizen Browser (The Markup 2020), respectively. These browser plugins require user agreement to share the results of the content that appears on platforms via their browsers. It is a potentially promising avenue, but building an acceptance of ‘donating your data’ to science seems to be a long way off.

4.3.3 Cross-Border Disinformation Flows

The networked information environment is borderless. While language differences act as something of a barrier, diaspora communities encourage the flow of information across borders (Longoria et al. 2021). In a world of visuals, memes, diagrams, and videos (with automatically translated closed captions), a rumour can travel from Sao Paulo to Istanbul to Manila in seconds. For example, researchers have been able to track the transnational flow of rumours between Francophone countries (Smith et al. 2020). Genuine information can also travel but, without context or with mistakes in translation, it can turn into a rumour or piece of misleading information just as fast.

Disinformation actors use this situation to their advantage. The anti-vaccine movement, in particular, has been seen to build momentum in one place before taking advantage of personal connections in other countries via closed groups and large accounts. For example, research by First Draft analysed the ways in which anti-vaccine disinformation narratives flowed from the USA to western African countries (Dotto and Cubbon 2021). That such a process was taking place also became clear during the measles outbreak in Samoa in spring 2019. US-based anti-vaccine activists were infiltrating Facebook groups in the island nation to push rumours and falsehoods about the efficacy of vaccines against the disease. This activity was judged to have directly impacted subsequent vaccine uptake (BBC News 2019).

Over the past two years, there has been significant evidence of anti-mask and anti-vaccine activists based in the USA pushing narratives in western Europe and Australia. The conspiracy theory QAnon, which started as a specifically US phenomenon, has also been transported to many locations around the world, with different countries and cultures focusing on the parts of the conspiracy that resonate most strongly. Unfortunately, while disinformation flows across borders, accurate information does so far less often. Anti-disinformation initiatives such as fact-checking groups or media literacy programs, government regulation, and even funding mechanisms are almost entirely organised around nation-states.

Finally, while platform content moderation is starting to catch problematic content in English, we are aware that it falls short in other languages and cultures (Horwitz 2021; Wong 2021). Other than those headquartered in China, all social media platforms are based in Silicon Valley in the USA. As such, most of the research is being undertaken in the USA, and many of the initiatives are US based and funded by US philanthropists. This disproportionate response around one language, and one country, means the complexity of this truly global, networked problem is being overlooked and misunderstood.

4.4 Conclusion

We need to build an information environment where those relying on disseminating accurate messaging recognise the need to understand the networked, dynamic attributes of today’s communication infrastructure. There need to be new ways of making communication peer-to-peer, engaging, and participatory, so that people feel they are being heard and have a part to play. Content needs to be much more visual, engaging and authentic to different communities, rather than designed top-down for mass broadcast and dissemination.

All those working in the information environment, from journalists, to health authority spokespeople, to healthcare practitioners, need to be trained in the mechanics of the modern communication environment so they are prepared for all the mechanisms that are being utilised.

While social media platforms should continue to be pressured to build systems for independent research that protect the privacy of users, there also need to be more creative mechanisms for building research questions with impacted communities so that consent can be built in from the very beginning. Bringing people into the research process not only allows for more innovative research to take place, but asking people to be involved in the collection and sharing of their data will also play an important role in educating people about the ways in which algorithms impact what they see. This should also help kick-start a conversation about the type of information people are seeing on their social media feeds, what they think is appropriate, and what is not.

Disinformation actors generally think globally, either from the start of their campaigns, or by taking advantage once they see that disinformation has taken off and crossed borders. Platforms, too, are globally focused, potentially avoiding individual jurisdictions. Yet our responses to disinformation are too often at the national level and have a disproportionate focus on the USA. The response needs to be as global as the problem.

References

Al Jazeera (2020) Iran: over 700 dead after drinking alcohol to cure coronavirus. https://www.aljazeera.com/news/2020/4/27/iran-over-700-dead-after-drinking-alcohol-to-cure-coronavirus

Allen R (1981) The reliability and stability of television exposure. Commun Res 8(3):233–256

AP News (2020) Conspiracy theorists burn 5G towers claiming link to virus. https://apnews.com/article/health-ap-top-news-wireless-technology-international-news-virus-outbreak-4ac3679b6f39e8bd2561c1c8eeafd855

BBC News (2019) Samoa arrests vaccination critic amid deadly measles crisis, 6 December. https://www.bbc.com/news/world-asia-50682881

Busari S, Adebayo B (2020) Nigeria records chloroquine poisoning after Trump endorses it for coronavirus treatment. CNN, 23 March. https://www.cnn.com/2020/03/23/africa/chloroquine-trump-nigeria-intl/index.html

de Vreese CH, Neijens P (2016) Measuring media exposure in a changing communications environment. Commun Methods Meas 10(2–3):69–80

DiResta R (2021) The anti-vaccine influencers who are merely asking questions. The Atlantic, 24 April. https://www.theatlantic.com/ideas/archive/2021/04/influencers-who-keep-stoking-fears-about-vaccines/618687/

DiResta R, Wardle C (2019) Online misinformation around vaccines. In: Meeting the challenge of vaccine hesitancy. The Sabin-Aspen Vaccine Science & Policy Group, pp 137–173. https://www.sabin.org/sites/sabin.org/files/sabin-aspen-report-2020_meeting_the_challenge_of_vaccine_hesitancy.pdf

Dodson K, Mason J, Smith R (2021) Covid-19 vaccine misinformation and narratives surrounding Black communities on social media. First Draft. https://firstdraftnews.org/long-form-article/covid-19-vaccine-misinformation-black-communities/

Dotto C, Cubbon S (2021) Disinformation exports: how foreign anti-vaccine narratives reached West African communities online. First Draft. https://firstdraftnews.org/long-form-article/foreign-anti-vaccine-disinformation-reaches-west-africa/

Dotto C, Smith R, Looft C (2020) We’ve relied for too long on an outdated top-down view of disinformation. First Draft. https://firstdraftnews.org/articles/the-broadcast-model-no-longer-works-in-an-era-of-disinformation/

EDMO (2022) Report of the European Digital Media Observatory’s Working Group on Platform-to-Researcher Data Access. European Digital Media Observatory. https://edmoprod.wpengine.com/wp-content/uploads/2022/02/Report-of-the-European-Digital-Media-Observatorys-Working-Group-on-Platform-to-Researcher-Data-Access-2022.pdf

Floridi L (2010) Information: a very short introduction, vol 225. Oxford University Press, Oxford

Freelon D, Lokot T (2020) Russian disinformation campaigns on Twitter target political communities across the spectrum. Collaboration between opposed political groups might be the most effective way to counter it. Harvard Kennedy School Misinf Rev 1(1). http://nrs.harvard.edu/urn-3:HUL.InstRepos:42401973

Ghinai I, Willott C, Dadari I, Larson HJ (2013) Listening to the rumours: what the northern Nigeria polio vaccine boycott can tell us ten years on. Glob Public Health 8(10):1138–1150. https://doi.org/10.1080/17441692.2013.859720

Hall S (1980) Encoding/decoding. In: Hall S, Hobson D, Lowe A, Willis P (eds) Culture, media, language: working papers in cultural studies. Hutchinson, London, pp 128–138

Hartley J (1987) Invisible fictions: television audiences, paedocracy, pleasure. Text Pract 1(2):121–138

Horwitz J (2021) The Facebook files. The Wall Street Journal. https://www.wsj.com/articles/the-facebook-files-11631713039

Kalogeropoulos A (2021) Who shares news on mobile messaging applications, why and in what ways? A cross-national analysis. Mobile Media Commun 9(2):336–352. https://doi.org/10.1177/2050157920958442

Katz E (1980) On conceptualising media effects. Stud Commun 1:119–141

Katz E, Lazarsfeld PF (1955) Personal influence. Free Press, New York

Krishnan N, Gu J, Tromble R, Abroms LC (2021) Research note: examining how various social media platforms have responded to COVID-19 misinformation. Harvard Kennedy School Misinf Rev 2(6). https://doi.org/10.37016/mr-2020-85

Lasswell HD (1948) The structure and function of communication in society. Commun Ideas 37(1):136–139

Lazarsfeld PF, Berelson B, Gaudet H (1944) The people’s choice: how the voter makes up his mind in a presidential campaign. Columbia University Press, New York

Longoria J, Acosta-Ramos D, Urbani S, Smith R (2021) A limiting lens: how vaccine misinformation has influenced Hispanic conversations online. First Draft. https://firstdraftnews.org/long-form-article/covid19-vaccine-misinformation-hispanic-latinx-social-media/

McFarland K, Somerville A (2020) How foreign influence efforts are targeting journalists. The Washington Post, 29 October. https://www.washingtonpost.com/politics/2020/10/29/how-foreign-influence-efforts-are-targeting-journalists/

Mejova Y, Weber I, Macy M (2015) Twitter: a digital socioscope. Cambridge University Press, Cambridge

Merrill J (2018) New partnership will help us hold Facebook and campaigns accountable. ProPublica, 8 October. https://www.propublica.org/nerds/new-partnership-will-help-us-hold-facebook-and-campaigns-accountable

Morley D (1974) Reconceptualising the media audience: towards an ethnography of audiences. Centre for Contemporary Cultural Studies, University of Birmingham

Office of the Surgeon General (2021) Confronting health misinformation: the surgeon general’s advisory on building a healthy information environment. https://www.hhs.gov/sites/default/files/surgeon-general-misinformation-advisory.pdf

Phillips W, Milner RM (2021) You are here: a field guide for navigating polarized speech, conspiracy theories, and our polluted media landscape. MIT Press, Cambridge, MA

Postman N (1970) The reformed English curriculum. In: Eurich AC (ed) High school 1980: the shape of the future in American secondary education. Pitman, New York, pp 160–168

Poynter (2022) CoronaVirusFacts Alliance. https://www.poynter.org/coronavirusfactsalliance/

Reuters Institute (2022) Journalism, media, and technology trends and predictions 2022. Reuters Institute for the Study of Journalism. https://reutersinstitute.politics.ox.ac.uk/journalism-media-and-technology-trends-and-predictions-2022

Schreiner M, Fischer T, Riedl R (2021) Impact of content characteristics and emotion on behavioral engagement in social media: literature review and research agenda. Electron Commer Res 21(2):329–345

Schwartau W (1993) Information warfare: chaos on the electronic superhighway. Thunder’s Mouth Press, New York

Shirky C (2008) Here comes everybody: the power of organizing without organizations. Penguin Press, New York

Smith R, Cubbon S, Wardle C (2020) Under the surface: Covid-19 vaccine narratives, misinformation and data deficits on social media. First Draft. https://firstdraftnews.org/wp-content/uploads/2020/11/FirstDraft_Underthesurface_Fullreport_Final.pdf?x58095

Starbird K, Arif A, Wilson T (2021) Disinformation as collaborative work: surfacing the participatory nature of strategic information operations. University of Washington. https://faculty.washington.edu/kstarbi/StarbirdArifWilson_DisinformationasCollaborativeWork-CameraReady-Preprint.pdf

Statista (2021) Internet usage worldwide – statistics & facts, 3 September. https://www.statista.com/topics/1145/internet-usage-worldwide/#dossierKeyfigures

Statista (2022) Most popular social networks worldwide as of January 2022, ranked by number of monthly active users. https://www.statista.com/statistics/272014/global-social-networks-ranked-by-number-of-users/

The Markup (2020) The Citizen Browser Project – auditing the algorithms of disinformation, 16 October. https://themarkup.org/citizen-browser

Timberg C (2021) Facebook made big mistake in data it provided to researchers, undermining academic work. The Washington Post, 10 September. https://www.washingtonpost.com/technology/2021/09/10/facebook-error-data-social-scientists/

Tornes A (2021) Enabling the future of academic research with the Twitter API. Twitter Blog, 26 January. https://blog.twitter.com/developer/en_us/topics/tools/2021/enabling-the-future-of-academic-research-with-the-twitter-api

Wardle C, Derakhshan H (2017) Information disorder: toward an interdisciplinary framework for research and policy making. Council of Europe. https://rm.coe.int/information-disorder-toward-an-interdisciplinary-framework-for-researc/168076277c

We Are Social (2022) Digital 2022: another year of bumper growth. We Are Social UK, 26 January. https://wearesocial.com/uk/blog/2022/01/digital-2022-another-year-of-bumper-growth-2/

Wong JC (2021) Revealed: the Facebook loophole that lets world leaders deceive and harass their citizens. The Guardian, 12 April. https://www.theguardian.com/technology/2021/apr/12/facebook-loophole-state-backed-manipulation?CMP=Share_iOSApp_Other

World Health Organization (2020) Dr. Tedros Munich conference speech. https://www.who.int/director-general/speeches/detail/munich-security-conference

Yin L, Roscher F, Bonneau R, Nagler J, Tucker J (2018) Your friendly neighborhood troll: the Internet Research Agency’s use of local and fake news in the 2016 presidential campaign. SMaPP Data Report:2018:01. New York University. https://smappnyu.wpcomstaging.com/wp-content/uploads/2018/11/SMaPP_Data_Report_2018_01_IRA_Links_1.pdf

Acknowledgments

We would like to thank Elisabeth Wilhelm for acting as a wonderful sounding board for a number of the ideas featured in this chapter.

Rights and permissions

Open Access Some rights reserved. This chapter is an open access publication, available online and distributed under the terms of the Creative Commons Attribution-NonCommercial 3.0 IGO license, a copy of which is available at (http://creativecommons.org/licenses/by-nc/3.0/igo/). Enquiries concerning use outside the scope of the licence terms should be sent to Springer Nature Switzerland AG.

The designations employed and the presentation of the material in this publication do not imply the expression of any opinion whatsoever on the part of WHO concerning the legal status of any country, territory, city or area or of its authorities, or concerning the delimitation of its frontiers or boundaries. Dotted and dashed lines on maps represent approximate border lines for which there may not yet be full agreement.

The mention of specific companies or of certain manufacturers' products does not imply that they are endorsed or recommended by WHO in preference to others of a similar nature that are not mentioned. Errors and omissions excepted, the names of proprietary products are distinguished by initial capital letters.

Copyright information

© 2023 WHO: World Health Organization

About this chapter

Cite this chapter

Wardle, C., AbdAllah, A. (2023). The Information Environment and Its Influence on Misinformation Effects. In: Purnat, T.D., Nguyen, T., Briand, S. (eds) Managing Infodemics in the 21st Century. Springer, Cham. https://doi.org/10.1007/978-3-031-27789-4_4

DOI: https://doi.org/10.1007/978-3-031-27789-4_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-27788-7

Online ISBN: 978-3-031-27789-4

eBook Packages: Medicine, Medicine (R0)