Abstract

It is argued that the concepts of mission-oriented innovation policy and of the entrepreneurial state will lead to the implementation of policies that are highly vulnerable to behavioral biases and the inefficient use of heuristics. In political practice, we can therefore not expect efficient mission-oriented policies. In particular, I argue that missions as a political commitment mechanism intended to devote massive resources to a specific cause will often only work if biases like the availability bias and loss aversion are deliberately used in order to secure voter consent. Furthermore, I argue that the reasoning of Mazzucato (Mission Economy: A Moonshot Guide to Changing Capitalism. London: Penguin UK, 2021) itself reflects several behavioral biases.



Introduction

The concepts of the entrepreneurial state and of mission-oriented policymaking have been subjected to thorough criticism from innovation economics and also from political economics. The latter criticism recognizes specifically how policymakers may be driven by motives other than promoting welfare-enhancing innovation projects. From this perspective, the power given to governments in defining and executing missions likely is another lever for special interest policies with adverse effects on overall economic efficiency.

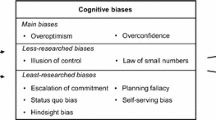

What is missing so far, however, appears to be a reading of these concepts from the point of view of behavioral economics. With this contribution, I make a first attempt to narrow this gap. I begin by giving a brief overview of the emerging field of behavioral political economy, with some emphasis on typical biases and heuristics that matter for innovation policy in general. This is followed by a discussion of why the concept of a mission-oriented innovation policy is particularly susceptible to behavioral biases, and why an efficient application of this concept is unlikely. I then argue that Mazzucato’s (2021) argument for mission-oriented policies itself suffers from behavioral biases. In other words, not only will the application fail due to biases, but the very concept itself, as it emerged on the market for ideas, contains major biases. The last section concludes.

Behavioral Political Economy in Innovation Policy

What Is Behavioral Political Economy?

Before taking a closer look at Mazzucato’s specific understanding of the state as an entrepreneur from a behavioral perspective, it is useful to briefly discuss on a more general level how departures from fully rational decision-making can have an influence on policymakers when deciding on innovation policy. In addition to the behavioral element, I also depart from the still popular assumption of welfare-maximizing policymakers and follow the standard public choice assumption of politicians, voters, and bureaucrats pursuing self-interested motives (see Schnellenbach and Schubert 2015, 2019).

Systematic deviations from full rationality are empirically well-established, and their existence is now also widely accepted in mainstream economics. Being heavily influenced by psychologists and their research methodology (Camerer and Loewenstein 2004), behavioral economics does not focus on deductive, axiomatic reasoning as theoretical microeconomics does, but on the empirical identification of typical patterns in individual decision-making. This has led to a more realistic but also a more complicated understanding of how decisions are formed and what influences them. Even a short glance into current textbooks on behavioral economics (e.g., Wilkinson and Klaes 2018; Angner 2021) shows that the established catalogue of observed biases, heuristics, and other deviations from neoclassical rationality is large. And it is not always clear which of these deviations are active or even dominant in a particular setting.

The many degrees of freedom that one often has in applying behavioral approaches to a particular decision-making situation often make it difficult to predict ex ante how an individual will behave. There may, after all, be different decision-making biases at work; they may even be counteracting each other; and they may be of different relative importance in different individuals. But nevertheless, there often are typical patterns of behavior. In explaining observed behavior (both in the laboratory and in the field) ex post, behavioral economics can be very powerful. And if a certain bias or a certain heuristic is consistently observed to matter in a certain decision situation, then the behavioral approach also gains predictive power (Angner 2021, pp. 252–254).

There is no reason to assume that innovation policy is not susceptible to the same behavioral influences that also affect decisions in other areas. If anything, the presence of complexity and uncertainty in innovation policy leaves room for a relatively greater impact of simple heuristics and behavioral biases on decision-making (Schnellenbach and Schubert 2019). Consider, for example, the discussion on national and regional systems of innovation, defined by Freeman (1987) as a network of private and public sector institutions that facilitate the interaction of individuals and organizations in innovation processes. These networks are complex, and while a comparative analysis of different systems of innovation may lead to hints at underdeveloped links within a particular system of innovation, it is far from clear that a single political intervention will causally improve its performance. For example, Frenken (2017) argues that the claim by Mazzucato that Europe should emulate government funding schemes from the United States may be unwarranted, because other important elements of the American innovation system, such as strong private research universities and a large military sector, are missing.

This high degree of complexity of innovation policy, combined with the frequent lack of clear-cut causal evidence on the effectiveness and efficiency of single policy measures, often invites reliance on intuitive reasoning, as well as the use of heuristics. It also leaves room for giving preference to policies that are in line with broader political prejudices and biases that every individual harbors to a certain extent. This is not a new insight, and not even one specific to behavioral political economy. In an influential paper, Denzau and North (1994) already argued that under conditions of uncertainty and complexity, what they called shared mental models influence and facilitate decision-making. Communication, not only face-to-face but also through mass media, allows large groups to develop shared perceptions of how the world works and which policies may be successful or not. Recently, this line of research has been rejuvenated as narrative economics (Shiller 2019). Roos and Reccius (2023) argue that collective narratives are often the basis of economic policymaking, both in terms of agreeing upon policy objectives, and in terms of making sense of causal relationships in policymaking.

It is important to note that such narratives develop in a path-dependent fashion. They ought not to be expected to be the result of unbiased deliberation and Bayesian updating according to incoming new information. Rather, the collective nature of the process of finding a common narrative and the mutual expectation among individuals to stick to a narrative once it has been agreed upon often lead to persistence of interpretations of the world even if they could already be identified as factually wrong with available data (Schnellenbach 2005). In stabilizing narratives once they have emerged, not only interpersonal influences such as peer pressure play a role but also intrapersonal mechanisms.

Caplan (2005) coined the term “rational irrationality” to describe this phenomenon. He argues that individuals can have a preference for holding beliefs that are irrational in the sense that they are objectively false. The reason for a rational demand for irrational beliefs is the very limited damage they do individually in the political sphere. While inaccurate beliefs are likely to be quickly punished in terms of individual income losses in private decisions, an individual who reckons that she is one of millions with virtually no immediate influence on the collective decision can harbor false beliefs at virtually no cost. Why should she do so? Because this negligible cost is outweighed by positive benefits. These may consist in being in line with her peer group. But they may also consist in the pleasure of holding beliefs with expressive value (see Hillman 2010; Hamlin and Jennings 2011). An individual who generally considers herself to be a supporter of free markets would therefore attempt to hold and defend beliefs that underpin this general orientation. They have expressive value for her, because they signal support for policies that are in line with her general personal and political orientation.
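The cost-benefit logic behind rational irrationality can be illustrated with a small numerical sketch. It is not taken from Caplan (2005); the function name and all numbers are hypothetical and chosen purely for illustration. The sketch assumes that the expected private cost of a false belief is the damage it would cause, multiplied by the probability that the individual's own choice is decisive for the outcome.

```python
# Minimal sketch of the rational-irrationality trade-off.
# All names and numbers are hypothetical illustrations, not estimates.

def net_benefit_of_belief(expressive_benefit, cost_if_wrong, prob_decisive):
    """Expressive benefit of holding a belief minus its expected private cost.

    prob_decisive is the probability that the individual's own decision
    determines the outcome (1.0 in a private choice, close to zero for
    one voter among millions).
    """
    return expressive_benefit - prob_decisive * cost_if_wrong

# Same false belief, same expressive benefit, same potential damage.
EXPRESSIVE_BENEFIT = 10.0
COST_IF_WRONG = 1000.0

# Private decision: the individual bears the full cost of the error.
private = net_benefit_of_belief(EXPRESSIVE_BENEFIT, COST_IF_WRONG, 1.0)

# Political decision: the individual is almost never decisive.
voter = net_benefit_of_belief(EXPRESSIVE_BENEFIT, COST_IF_WRONG, 1e-7)

print("private decision:", private)   # negative: the false belief is costly
print("voting decision: ", voter)     # positive: the false belief pays off
```

Under these assumed numbers, holding the false belief is unattractive in a private decision but attractive in the voting booth, although the belief and the damage it could cause are identical in both settings.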

In sum, the coincidence of complexity and the absence of unambiguous, uncontested evidence of causal relations on the one hand, and low to no immediate punishment for individual errors in judgment on the other, makes it easy for citizens/voters to be guided by faulty or oversimplifying narratives and to act according to other behavioral biases. One might argue that the situation is different for professional politicians, who are much more likely to be punished, e.g., at the ballot, for bad decisions with unsatisfactory outcomes. But politicians themselves are constrained in their actions by dominating public narratives. And they can even use them deliberately to their advantage, for example, by framing policies that actually serve influential vested interests in accordance with some popular narrative (Schnellenbach and Schubert 2015). It is therefore highly unlikely that we will observe benevolent, rational welfare-maximizers in the political arena. Rather, we will observe voters and political professionals who are both influenced by behavioral biases and who deliberately use behavioral biases to their own advantage.

Behavioral Political Economy in Innovation Policy

It can be argued that in practical innovation policy, behavioral biases and rational irrationality frequently play a role. Clearly, I cannot give an exhaustive overview here, but a few examples, drawing to a great extent from Schnellenbach and Schubert (2019), can serve as an illustration. One example is the overconfidence bias. Since early experimental studies by Alpert and Raiffa (1969), we know that under uncertainty, individuals tend to have too high confidence in their own judgments. There is also evidence indicating that individuals are particularly overconfident in areas where they have some expertise (e.g., Liu et al. 2017). Angner (2006) discusses a case study of economists acting as experts in policy advice and finds supporting evidence for the hypothesis that overconfidence matters in economic policy consulting, and learning from experience is imperfect. He argues that overconfidence in this area may be amplified because only experts who are very confident in their own judgment decide to enter the business of policy consulting in the first place.

One immediate effect of overconfidence in expert judgments is, plain and simple, bad policy advice. If a choice between different projects is to be made for subsidizing innovation with public funds, experts or politicians involved in the decision may be subconsciously driven by their own prejudices and preferences and decide accordingly in favor of supporting projects that a completely independent and unbiased individual would not have chosen. If overconfidence occurs, it may also present itself as a willingness to overpay once a decision has been made (Massey and Thaler 2013). Individuals become so convinced of the choice they have made that they begin to overestimate the returns associated with their choice drastically.

In the realm of innovation policy, this implies that the overconfidence bias is particularly threatening if the discretionary leeway of politicians and bureaucrats is large. While a broad and rule-based system of subsidizing innovation, e.g., through amplified tax credits for R&D-spending, would be largely immune to the overconfidence bias, a system relying on experts picking winners for discretionary subsidies would be extremely susceptible. Anecdotal evidence of subsidized innovation projects that eventually failed is abundant. But this is not a problem in itself: Clearly, not every subsidized project can succeed. However, evidence indicates that politicians and bureaucrats are not more successful in picking winners than private investors (Elert and Henrekson 2022, pp. 360–361; Kirchherr et al. 2023). If anything, they are less successful (Murtinu et al. 2022). One important explanation for this may be that private venture capital firms risk significant economic losses if they do not learn to de-bias their process of decision-making to some extent.

Another question is how decisions on the winners to be picked are made. Real-world selection processes can often be convincingly criticized with standard political economy arguments. The risk of rent seeking and other types of favoritism granted to well-connected interest groups obviously exists. In some empirical studies, it is found that a Matthew effect in receiving innovation grants exists. Firms that already have received a number of grants are more likely to receive another one (Czarnitzki and Hussinger 2018). The explanations for this phenomenon are diverse. One is the establishment of a stable rent-seeking relationship between firms and politicians. Another explanation is that firms learn to specialize in writing successful grants (Karlson et al. 2020). This can be problematic, because those firms that are successful grant writers are not necessarily also the most efficient in putting subsidies to good use, as many third-party-funded academics also know. A third explanation is that politicians and bureaucrats may use the success of past grant applications as a heuristic to gauge the expected success of future projects (Antonelli and Crespi 2013). This does not mean that these firms have also been extremely successful in actually producing innovations with past grants. Political decision-makers normally have no means to evaluate the efficiency of past grant usage relative to the hypothetical performance of other firms. Rather, having received a grant and not having failed (or at least not having failed too miserably) serves as a heuristic for future grant-worthiness.

Clearly, using this heuristic does not systematically lead to an efficient allocation of grants, but primarily to a very defensive, risk-averse allocation: Those who have not done too much damage in the past are likely to receive money in the future. It can be politically rational to act in such a way if the political cost of large errors in picking winners is significant; in this case, one rather aims at avoiding picking losers. And there is another problem in this process: Whether a subsidized firm has failed or not is sometimes determined not in an objective evaluation, but in the creation of a positive narrative. Collin et al. (2022) find a strong positive bias in a sample of 110 evaluations for Swedish innovation policy and cast strong doubt on the objectivity of these evaluations.

Political costs associated with acknowledging failure may also exacerbate a pre-existing sunk-cost fallacy. An unchecked overconfidence bias will also lead to attempts to deny failure as long as denial is still possible. Projects are continued over an inefficiently long period of time, burning public funds. This well-known bias in decision-making is also more likely to remain uncorrected if public rather than private funds finance the continuation of a failed project. A famous example in economic history is the development and production of the Concorde airplane, which also led to the use of the term “Concorde fallacy” in this context. According to Bletschacher and Klodt (1992), it was clear from relatively early on that, with permanently increasing kerosene prices, the project was most likely to be economically unsuccessful. Nevertheless, backed by the soft budget constraints secured through industrial policy and a political reluctance to write off sunk investments, the development was continued, and, once the planes were produced, they remained in service for decades even though employing them was profitable only for short periods.

These are only a few examples of how biases and heuristics can negatively influence innovation policy if it is characterized by a large scope for discretionary decision-making. I will discuss further examples when engaging with the entrepreneurial state and mission-oriented policymaking directly in the following two sections. An important takeaway thus far is that from a narrow behavioral perspective, rule-based and broad innovation policies that do not aim to define and implement single missions or pick winners to receive subsidies would be preferred (Schnellenbach and Schubert 2019).

Is the Mission-Oriented Entrepreneurial State Susceptible to Behavioral Biases?

Mission Orientation as a Political Commitment

Behavioral biases in political decisions are ubiquitous, and not only in innovation policy. The discussion so far shows that limiting discretionary scope and implementing rule-based policies instead may limit the damage done by biases, although it is not always possible to rely solely on these rule-based types of programs. The ideas of an entrepreneurial state and of mission-oriented innovation policy, however, propose a particularly far-reaching, active role for politicians and bureaucrats. It is therefore an interesting question whether, and if so in which way, these concepts are particularly prone to behavioral influences that limit their expected economic efficiency.

It is useful to start with the basics: What exactly is mission orientation in innovation policy? Interestingly, Mazzucato (2021) does not rely on a formal, dry academic definition of mission orientation, but primarily uses historical examples to illustrate her understanding of a mission economy. In general, mission-oriented policies have the objective to “…target the development of specific technologies in line with state-defined goals (mission)” (Robinson and Mazzucato 2019, p. 938; emphases as in the original). In doing so, governments or government agencies are supposed to actively create new markets, for example, by introducing new goods or by guaranteeing a demand for new products that the private sector needs to develop. But if we look at the Apollo program, which is the case study Mazzucato (2021) chiefly uses to motivate her concept, another important characteristic becomes clear: Missions serve as a commitment device for governments.

When Kennedy declares that he will put Americans on the moon, regardless of the cost of doing so, he makes a political commitment to dedicate all resources necessary to reach this goal. And he knows that the political cost, in terms of a loss of reputation, will be tremendous if he (or his successor in office) fails. But we cannot be sure that this kind of commitment always works. It did in the case of the Apollo program, and one reason might have been the peculiar situation of the Cold War. Many ordinary American citizens demanded a big success story for their space program to signal technological superiority after the Sputnik shock. Being able to beat the Soviet Union in the race to the moon had an extraordinarily high symbolic value, and a large majority of the population was willing to devote substantial resources to this cause. Under those circumstances, the government’s commitment had a binding effect. The negative effect of failure would have been too high.

Would the commitment mechanism also work without such strongly aligned preferences? Not necessarily. A recent example is the 2022 announcement by the German government, in the face of the new Russian threat, to set up a fund of EUR 100 billion to acquire and develop better military material and to sustainably reach the NATO goal of spending 2 percent of GDP on defense annually. Even with Russian military aggressiveness presenting itself as a threat in the immediate neighborhood, this self-commitment has recently been watered down in terms of reaching the 2 percent goal after a general tightening of the fiscal leeway available to the German federal government. Due to a lack of salience and popularity of the issue in the German political debate, reneging on the earlier commitment was possible at low (if any) political cost for the government.

A change of priorities within government is one possible reason for a mission to be abandoned. A change of political priorities among voters is another. It is unlikely that governments will pursue a mission-oriented innovation policy against strong opposition within a population in any democracy.

Loss Aversion

In addition to the Apollo program, Mazzucato (2021, p. 92) also uses Covid vaccine development as an example of a successful innovation mission. It is interesting that she does so without mentioning the BioNTech-Pfizer Covid vaccine anywhere in her book, while Moderna being helped and guided by the US government’s DARPA agency is used to illustrate the potential of mission-oriented innovation policy. In a sense, BioNTech is a good counterexample: The firm had existed as a research firm for over a decade before producing its Covid vaccine. Until then, it had focused on using the mRNA technology to treat cancer, and while it had produced important knowledge in basic research, it had no market-ready product before the pandemic. BioNTech had also never been a part of any mission-oriented scheme of innovation policy. It had received some government research grants, but these were on a similar scale as normal research grants received by university researchers, and they were granted for well-defined, smaller projects, not for missions. BioNTech never received a large grant before the fall of 2020. At this stage, the vaccine development had already been completed, and the purpose of the grant was to speed up the final stages of clinical trials and to enable the rapid buildup of production facilities. At this point in time, the German government did not conduct a mission-oriented innovation policy; it simply rewarded the massive positive externalities of a quick and broad vaccine rollout. BioNTech is an example that shows how private entrepreneurship and serendipity, rather than government planning, can result in a highly successful innovation.

What is more interesting in our context, however, is what Moderna/DARPA and the Apollo program have in common. They were both started in what behavioral economists call a loss frame. Within the framework of prospect theory, a behavioral and empirically founded alternative to neoclassical decision theory (see Kahneman and Tversky 1979), expected losses and gains are evaluated differently, starting from any given status quo reference point. Supported by extensive empirical research, the theory assumes that losses are generally associated with a larger marginal disutility compared to the positive marginal utility of gains. Individuals are loss averse. This empirical regularity in individual decision-making can be deliberately exploited, if individuals are put in a loss frame (Tversky and Kahneman 1981). One way of doing so is to present them with a decision situation in a way that strongly emphasizes losses, as well as the potential to avoid these losses by decisive action. Putting individuals in a loss frame will increase the willingness to take risks if the risks are associated with a chance to avoid the strongly negative outcome.
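The asymmetry between gains and losses, and the resulting willingness to take risks in a loss frame, can be made concrete with a minimal sketch of a prospect-theory value function. The functional form (a power function that is steeper for losses) and the parameter values below are illustrative assumptions of the kind often used in the literature; they are not taken from this chapter. For simplicity, the sketch also ignores probability weighting, so it captures only the value-function part of the theory.

```python
# Minimal sketch of a prospect-theory value function.
# ALPHA and LAMBDA are assumed, illustrative parameter values.

ALPHA = 0.88   # curvature: diminishing sensitivity to larger gains and losses
LAMBDA = 2.25  # loss aversion: losses weigh more than equal-sized gains

def value(x, alpha=ALPHA, lam=LAMBDA):
    """Subjective value of outcome x relative to the reference point 0."""
    if x >= 0:
        return x ** alpha
    return -lam * (-x) ** alpha

def prospect_value(outcomes):
    """Value of a prospect given as a list of (probability, outcome) pairs.

    Probabilities enter linearly here (no probability weighting).
    """
    return sum(p * value(x) for p, x in outcomes)

# Gain frame: a sure gain of 50 versus a 50% chance of gaining 100.
sure_gain = prospect_value([(1.0, 50)])
risky_gain = prospect_value([(0.5, 100), (0.5, 0)])

# Loss frame: a sure loss of 50 versus a 50% chance of losing 100.
sure_loss = prospect_value([(1.0, -50)])
risky_loss = prospect_value([(0.5, -100), (0.5, 0)])

print("gain frame prefers the sure option: ", sure_gain > risky_gain)
print("loss frame prefers the risky option:", risky_loss > sure_loss)
```

With these assumed parameters, the same individual avoids the gamble when outcomes are framed as gains but accepts it when they are framed as losses. This is the pattern that a loss frame exploits: the risky option becomes attractive once it offers a chance to avoid the loss altogether.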

This was the case both in the Moderna/DARPA and in the Apollo cases. Enduring a longer pandemic without a vaccine would have been associated with extremely high losses in terms of health, lives, and economic outcomes. Losing the space race against the Soviet Union similarly would not only have led to a reputation loss but also been interpreted as an indicator of technological backwardness and ineffectiveness relative to the socialist Soviet Union, i.e., of negative real effects. In both cases, making the case for a loss frame was plausible, and strong political support for investing large amounts of resources into the proposed missions could be mobilized. This is different in the third example mentioned above. Contrary to fears present in 2022, the war started by Russia in Ukraine now seems to be limited to Ukraine. The risk of the conflict spreading over to Western Europe is generally perceived as very low. Accordingly, it is not surprising that the German political debate seems to gradually leave the loss frame, and political support for investing heavily into a mission in defense policy is now much smaller than it was a year before.

In Mazzucato’s 2021 book, the creation of loss frames is a central means to motivate the analysis. In Chap. 2, entitled “Capitalism in Crisis” she discusses, inter alia, distributional issues, the supposed fragility of the financial sector, the supposed short-sightedness of private business decisions, and global warming. Some of these issues have little or nothing to do with innovation policy. The purpose of that chapter appears to be the establishment of a loss frame: Markets are leading us to negative, potentially even catastrophic outcomes, and only strong interventionist governments can prevent those outcomes. The same argumentative pattern is used when the so-called Green New Deal is discussed (Mazzucato 2021, pp. 99–104). And in fact, this allows Mazzucato to argue in a very simplistic fashion: If we do nothing, the outcomes are catastrophic, therefore we must do something, and in this case that something is the Green New Deal, which she uses as another example for mission-oriented policymaking. What she does not do, however, is to establish that the Green New Deal is the most efficient policy choice, or the one leading to success with a higher probability than others. For her argument, once loss aversion is triggered in the reader, it suffices to argue that this is something that can be done.

Picking Missions

Another interesting question concerns which innovation policy missions should be selected, and which will be selected. Larsson (2022) has already discussed the problem critically from a standard point of view. In particular, he highlights the difficulty politicians have in accounting for the opportunity cost of projects and argues that, often, missions that do the least good are chosen. His contribution allows us to focus on the behavioral side of the problem. Under the rubric “Selecting a mission,” Mazzucato (2021, p. 91) gives only very few criteria for picking a mission that is worth pursuing:

First and foremost, a mission has to be bold and inspirational while having wide societal relevance. It must be clear in its intention to develop ambitious solutions that will directly improve people’s daily lives, and it should appeal to the imagination.

There is a relatively large intellectual distance between these criteria and standard economic thinking. From a normal economic point of view, we would expect a criterion such as expected cost-effectiveness to play a role, or the extent of positive externalities associated with the successful implementation of such a mission. That a policy should be bold and inspirational, and appeal to the imagination of citizens, is obviously not standard economic thinking, and these criteria are probably also difficult to operationalize. And in the rest of the paragraph on selecting missions, Mazzucato rather elaborates on the design of missions: They should, for example, allow for different technological pathways to the defined goal, and they should cut across different disciplines and economic sectors.

Given this very limited advice on how missions should be picked, it may be more interesting to ask how they actually will be picked. Will governments reliably address the most pressing societal needs by picking the corresponding missions? Some behavioral arguments lead to skepticism in this respect. In general, politicians are not very good at identifying the most pressing societal needs that need the most urgent political attention. This may be surprising at first, since this is often assumed to be a core competence of politicians. But the problem follows from a well-documented pervasive difficulty that people have with estimating low but important risks.

Research on the availability bias dates back to Tversky and Kahneman (1973). This bias leads individuals to overestimate small risks if examples where these risks have materialized in the past are available to their memories. A simple example is that I will overestimate the likelihood of a plane crash for a while if I have only recently read about a plane crash in the newspaper. On the individual level, the damage that is typically done by the bias is not too large. On the contrary, it can even be useful as it leads otherwise not very risk averse individuals to behave as if they were more risk averse, and this may lead them to avoid dangerous decisions. On the collective level, however, Kuran and Sunstein (1999) have shown how the availability bias may lead to mass scares about risks that are negligible. On the other hand, more important risks that may warrant regulatory attention often go unnoticed when all political attention is focused on availability cascades.

Cascades occur when an upward biased individual risk perception becomes amplified through media coverage and collective communication. Interest groups can deliberately trigger cascades in order to pursue their own self-interest through risk regulation. Kuran and Sunstein show how, once established, availability cascades are difficult to neutralize, even if clear scientific evidence contradicting them surfaces. They discuss cases where it has simply become socially unacceptable to state the correct, lower risks in public and where people who attempted to correct biased public risk perceptions became ostracized, for example, for supposedly showing too little empathy with the (imaginary) victims of (imagined) risks.

Mazzucato makes no attempt to propose any strategy that might help decision-makers to identify missions that are actually worth pursuing. And more importantly, she does not propose any mechanism to avoid a huge waste of resources on missions that in fact should not be pursued. This is a major gap in her approach.

Relatedly, Kirchherr et al. (2023) criticize a normativity bias underlying the mission-oriented policies. They argue that there is a danger that these policies are pursued if their stated objectives sound normatively appealing, without any detailed regard for the efficacy of the proposed measures, and for unintended side effects of the mission pursued. Any trade-offs between competing goals are often largely ignored. This is certainly also a major problem. Who would not want to stop climate change, or end poverty, or cure diseases? But with limited resources and possible trade-offs between different individually worthwhile and maybe even inspirational goals, Mazzucato gives no advice on how to prioritize competing missions. And again, the even more important question may be how to introduce safeguards that avoid resources being wasted on missions oriented toward goals that sound normatively appealing but are problematic on closer inspection.

Throughout her book, Mazzucato (2021) talks repeatedly about mission-oriented policy being inspirational, visionary, even about “imagining a better future” (p. 18), as well as about aligning public and private interests between broad societal goals. Sympathetically, one might call such an approach charismatic; critically, one might rather point toward the danger of inducing society-wide groupthink and the danger of discrediting dissent and criticism of mission-oriented policies. The conjunction of a normativity bias with the self-declared impetus to save the world, to present grand visions rather than strive for piecemeal progress, is not entirely riskless in itself. The economic and social damage inflicted by charismatic leaders who refuse to be questioned and criticized is large enough in single projects (see, e.g., the case of “Ethanol Jesus” in Sandström and Alm 2022) but may be significantly larger in a mission-oriented framework.

The Cognitively Biased Argument for the Mission-Oriented Approach

In several of Mazzucato’s publications, in particular in her popular 2015 and 2021 books, a strong narrative is created that is now shared by a substantial number of scholars, policymakers, and also by interested laymen, who, as engaged citizens, actively think about political solutions to current problems. But as I will argue in this section, that narrative itself is not evidence-based, but the result of an interpretation of the data that is influenced by cognitive biases.

Mazzucato’s concept of the entrepreneurial state has at its core a very positive perspective on the ability and willingness of politicians and bureaucrats to design and implement effective innovation policies. The word effective, rather than efficient, is used here on purpose, because for efficiency, a clear-cut normative benchmark such as welfare maximization or at least cost minimization of policies would need to be explicated. But in her entire book on The Entrepreneurial State, Mazzucato (2015) does not do this. She does not claim that her concept of innovation policy is efficient in any meaningful way. Rather, she repeatedly claims that it is effective in the sense that it yields the politically desired results, such as helping to invent technologies that are deemed politically important (Mazzucato 2015, p. 153), or becoming a co-owner of patents and administering the dissemination of innovation-related knowledge (Mazzucato 2015, p. 203).

The same pattern is found in Mazzucato (2021), where she again tells a story of how governments supposedly get things done in the realm of innovation policy. But she does not discuss in any detail what the costs are, either fiscally or in terms of unintended side effects in the form of inefficient incentives. In this sense, her works do not offer a careful weighing of the countervailing arguments that can be found in the literature. Rather, she presents the result of her own confirmation bias to the reader, i.e., a one-sided presentation of those cases and arguments that lead the reader to believe that governments could achieve nearly anything, if they only wanted to and if their leaders were only sufficiently inspired, inspirational, and visionary.

Again, this approach is uncharacteristic of an economist. Economists normally tend to advocate rational institutions that direct self-interested individuals to make decisions that increase general social welfare. This has been a central theme in economics at least since Adam Smith argued that we expect to be able to eat dinner thanks to the self-interest of the butcher and the baker, not thanks to their altruism. Mazzucato presents no theory of good institutions. Rather, she appeals to policymakers to become bold, visionary, inspirational political entrepreneurs. Certainly, we sometimes find similar voices also on the other side of the political spectrum, for example, when classical liberals praise reform-savvy politicians such as Margaret Thatcher (Kirchgässner 2002). But this is nevertheless not how economists typically reason: their focus is on institutions. And it is very difficult to find empirical foundations for the belief that, once mission-oriented policymaking becomes common, politicians will act in a manner so different from what is typical in contemporary democracies.

We have discussed the normativity bias in the application of mission-oriented policymaking briefly above. It is noteworthy that Mazzucato (2021) extensively exploits the normativity bias of her readers in making her argument. Applying the mission-oriented approach to climate change is justified, because it is normatively justified to stop climate change. Hardly anyone would disagree with the second statement. But is a government mission in innovation policy the most efficient way to reach this goal? Could not simply setting the right incentives for private entrepreneurs by emission trading, together with internalizing positive externalities through subsidies for basic research, be more efficient? What does the mission orientation really add, compared to standard bread-and-butter innovation policy, apart from lofty calls for vision, leadership, and inspiration? Such questions are not answered conclusively; rather, the shortcut from the laudable goal to the justification of the political approach is taken.

A normativity bias on steroids appears when Mazzucato (2021, pp. 75–112) uses the 17 UN development goals to justify a number of worldwide missions. These goals have reached high status in activist academic circles because they can be used to justify almost every policy one desires. Again, it is not necessary to discuss the actual efficiency of policy proposals, as justification seamlessly spills over from the goal to the proposed means. More importantly, with 17 UN development goals, trade-offs and the need to prioritize are unavoidable. None of this is discussed, and a false harmony of all kinds of desirable goals that should be pursued through missions is assumed. Opportunity costs of missions are largely ignored, and this is a behavioral bias in itself.

Moreover, Mazzucato frequently appeals to the availability bias of her readers and puts them in a loss frame, as we have already seen above. Mission orientation is the universal tool (and the only one discussed) to fend off the nearing apocalypse. Do you fear climate change? Then governments should organize a mission to fight it. Do you worry that you might suffer from dementia in your old age? Mission orientation will help. Do you want to save the dolphins from plastic waste in the sea? A mission will do. The so-called mission maps in Chap. 5 of her book tend to confuse, rather than clarify. Everything is somehow connected with everything, and everyone needs to be mobilized for the mission. It is difficult not to see snake oil being sold here, but once enough fear has been spread and the loss frame made sufficiently vivid, some people seem to buy anything.

Conclusions

As in any field of policymaking, innovation policy can be significantly influenced, and its quality significantly impaired, by behavioral biases that affect decision-makers on a subconscious level. As we have seen, politicians, interest groups, or even social scientists can also deliberately appeal to cognitive biases of their respective target audiences in order to promote their preferred policies. The complexity of innovation policy and the lack of clear empirical evidence on causal relations in this field increase the likelihood that behavioral influences work in both of these ways.

A central problem with mission-oriented policy is that it increases the discretionary leeway given to policymakers and bureaucrats. In a more rule-based framework for innovation policy, involving, for example, tax credits for R&D spending, the influence of biases will be low. In a framework where individuals define missions, pick policy instruments, decide which firms receive grants, and which subsidies should be continued, the door for behavioral influences to distort decisions and make them worse than they would have to be is wide open.

I have also argued that implicitly, behavioral biases matter a lot for the internal argument supporting Mazzucato’s concept of mission-oriented policymaking. Firstly, she is influenced by heuristics and biases herself. Among them are an extensive ignorance and/or intentional disregard of opportunity cost, a normativity bias where policy measures are justified by virtue of the goals they are supposed to implement, and a reliance on the quality of persons in office, rather than good institutions. But secondly, I have argued that Mazzucato also appeals to biases herself to influence her audience. An example is the frequent appeal to loss aversion, by depicting catastrophic scenarios, for which mission orientation is advertised as a solution.

In sum, from a behavioral perspective, mission-oriented policymaking appears unlikely to be consistently successful. Even if some spectacular “missions” such as the Apollo program may have been effective in the sense that they reached their goal, generalizing them into a new approach to policy cannot be expected to work equally well. A more rule-based and broad innovation policy with less scope for behavioral biases to have an effect seems preferable.

References

Alpert, M., & Raiffa, H. (1969). A progress report on the training of probability assessors. Reprinted in D. Kahneman, P. Slovic, & A. Tversky (Eds.), Judgment under Uncertainty: Heuristics and Biases (pp. 294–304). New York, NY: Cambridge University Press.

Angner, E. (2006). Economists as experts: Overconfidence in theory and practice. Journal of Economic Methodology, 13(1), 1–24.

Angner, E. (2021). A Course in Behavioral Economics. 3rd edition. London: Palgrave Macmillan.

Antonelli, C., & Crespi, F. (2013). The Matthew effect in R&D public subsidies: The Italian evidence. Technological Forecasting and Social Change, 80(8), 1523–1534.

Bletschacher, G., & Klodt, H. (1992). Strategische Handels- und Industriepolitik. Theoretische Grundlagen, Branchenanalysen und Wettbewerbspolitische Implikationen. Kieler Studien 244. Tübingen: Mohr Siebeck.

Camerer, C. F., & Loewenstein, G. (2004). Behavioral economics: Past, present and future. In C. F. Camerer, G. Loewenstein, & M. Rabin (Eds.), Advances in Behavioral Economics (pp. 3–51). Princeton, NJ: Princeton University Press.

Caplan, B. C. (2005). Rational irrationality and the microfoundations of political failure. Public Choice, 107(3–4), 311–331.

Collin, E., Sandström, C., & Wennberg, K. (2022). Evaluating evaluations of innovation policy: Exploring reliability, methods, and conflicts of interest. In K. Wennberg & C. Sandström (Eds.), Questioning the Entrepreneurial State: Status-quo, Pitfalls, and the Need for Credible Innovation Policy (pp. 157–173). Cham: Springer.

Czarnitzki, D., & Hussinger, K. (2018). Input and output additionality of R&D subsidies. Applied Economics, 50(12), 1324–1341.

Denzau, A. T., & North, D. C. (1994). Shared mental models: Ideologies and institutions. Kyklos, 47(1), 3–31.

Elert, N., & Henrekson, M. (2022). Collaborative innovation blocs and mission-oriented innovation policy: An ecosystem perspective. In K. Wennberg & C. Sandström (Eds.), Questioning the Entrepreneurial State: Status-quo, Pitfalls, and the Need for Credible Innovation Policy (pp. 345–367). Cham: Springer.

Freeman, C. (1987). Technology Policy and Economic Performance. Lessons from Japan. London: Pinter.

Frenken, K. (2017). A complexity-theoretic perspective on innovation policy. Complexity, Governance & Networks, 3(1), 35–47.

Hamlin, A., & Jennings, C. (2011). Expressive political behavior: Foundations, scope and implications. British Journal of Political Science, 41(3), 645–670.

Hillman, A. L. (2010). Expressive behavior in economics and politics. European Journal of Political Economy, 26(4), 403–418.

Kahneman, D., & Tversky, A. (1979). Prospect theory: An analysis of decision under risk. Econometrica, 47(2), 263–291.

Karlson, N., Sandström, C., & Wennberg, K. (2020). Bureaucrats or markets in innovation policy? A critique of the entrepreneurial state. Review of Austrian Economics, 34(1), 81–95.

Kirchgässner, G. (2002). On the role of heroes in political and economic processes. Kyklos, 55(2), 179–196.

Kirchherr, J., Hartley, K., & Tukker, A. (2023). Missions and mission-oriented innovation policy for sustainability: A review and critical reflection. Environmental Innovation and Societal Transitions, 47(June), Article 100721.

Kuran, T., & Sunstein, C. R. (1999). Availability cascades and risk regulation. Stanford Law Review, 51(4), 683–768.

Larsson, J. P. (2022). Innovation without entrepreneurship: The pipe dream of mission-oriented innovation policy. In K. Wennberg & C. Sandström (Eds.), Questioning the Entrepreneurial State: Status-quo, Pitfalls, and the Need for Credible Innovation Policy (pp. 77–91). Cham: Springer.

Liu, X., Stoutenborough, J., & Vedlitz, A. (2017). Bureaucratic expertise, overconfidence, and policy choice. Governance, 30(4), 705–725.

Massey, C., & Thaler, R. H. (2013). The loser’s curse. Decision-making and market efficiency in the NFL draft. Management Science, 59(7), 1479–1495.

Mazzucato, M. (2015). The Entrepreneurial State. Debunking Public vs. Private Sector Myths. Revised Edition. New York: Public Affairs.

Mazzucato, M. (2021). Mission Economy: A Moonshot Guide to Changing Capitalism. London: Penguin UK.

Murtinu, S., Foss, N. J., & Klein, P. G. (2022). The entrepreneurial state: An ownership competence perspective. In K. Wennberg & C. Sandström (Eds.), Questioning the Entrepreneurial State: Status-quo, Pitfalls, and the Need for Credible Innovation Policy (pp. 57–75). Cham: Springer.

Robinson, D. K. R., & Mazzucato, M. (2019). The evolution of mission-oriented policies: Exploring changing market creating policies in the US and European space sector. Research Policy, 48(4), 936–948.

Roos, M., & Reccius, M. (2023). Narratives in economics. Journal of Economic Surveys, published online.

Sandström, C., & Alm, C. (2022). Directionality in innovation policy and the ongoing failure of green deals: Evidence from biogas, bio-ethanol, and fossil-free steel. In K. Wennberg & C. Sandström (Eds.), Questioning the Entrepreneurial State: Status-quo, Pitfalls, and the Need for Credible Innovation Policy (pp. 251–271). Cham: Springer.

Schnellenbach, J. (2005). Model uncertainty and the rationality of economic policy. Journal of Evolutionary Economics, 15(1), 101–116.

Schnellenbach, J., & Schubert, C. (2015). Behavioral political economy: A survey. European Journal of Political Economy, 40(Part B), 395–417.

Schnellenbach, J., & Schubert, C. (2019). A note on the behavioral political economy of innovation policy. Journal of Evolutionary Economics, 29(5), 1399–1414.

Shiller, R. J. (2019). Narrative Economics. How Stories Go Viral and Drive Major Economic Events. Princeton, NJ: Princeton University Press.

Tversky, A., & Kahneman, D. (1973). Availability: A heuristic for judging frequency and probability. Cognitive Psychology, 5(2), 207–232.

Tversky, A., & Kahneman, D. (1981). The framing of decisions and the psychology of choice. Science, 211, 453–458.

Wilkinson, N., & Klaes, M. (2018). An Introduction to Behavioral Economics. 3rd edition. London: Palgrave Macmillan.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2024 The Author(s)

About this chapter

Cite this chapter

Schnellenbach, J. (2024). A Behavioral Economics Perspective on the Entrepreneurial State and Mission-Oriented Innovation Policy. In: Henrekson, M., Sandström, C., Stenkula, M. (eds) Moonshots and the New Industrial Policy. International Studies in Entrepreneurship, vol 56. Springer, Cham. https://doi.org/10.1007/978-3-031-49196-2_4

DOI: https://doi.org/10.1007/978-3-031-49196-2_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-49195-5

Online ISBN: 978-3-031-49196-2

eBook Packages: Economics and Finance (R0)