Abstract

A comparative analysis of distance methods, kriging and stochastic simulation is conducted for evaluating their capabilities for predicting fluctuations in uncertainty due to changes in spatially correlated samples. It is concluded that distance methods lack the most basic capabilities to assess reliability despite their wide acceptance. In contrast, kriging and stochastic simulation offer significant improvements by considering probabilistic formulations that provide a basis on which uncertainty can be estimated in a way consistent with practices widely accepted in risk analysis. Additionally, using real thickness data of a coal bed, it is confirmed once more that stochastic simulation outperforms kriging.

You have full access to this open access chapter, Download chapter PDF

Similar content being viewed by others

1 Introduction

In any form of sampling, there is always significant interest in establishing the reliability that may be placed on any conclusions extracted from a sample of certain size. In the earth sciences and engineering, such conclusions can be the extension of a contamination plume or the in situ resources of a mineral commodity. Increases in sample size result in monotonic improvements with diminishing returns: up to measuring the entire population, the benefits increase with the number of observations. In the classical statistics of independent random variables, the number of observations is all that counts. In spatial statistics, however, the locations of the data are also important.

Early on in spatial sampling, it was recognized that sampling distance was a factor in determining the reliability of estimations. However, insurmountable difficulties of incorporating other factors led to the reliability of spatial samplings being determined solely by geographical distance, particularly for the public disclosure of mineral resources (e.g., USBM and USGS 1976).

Significant advances in the determination of spatial uncertainty did not take place until the advent of digital computers and the formulation of geostatistics (e.g., Matheron 1965). Geostatistics introduced the concept of kriging variance, which was a significant improvement over the relatively simplistic distance criteria for determining reliability. The third generation of methods to determine reliability of spatial sampling came with the development of spatial stochastic simulation shortly after the formulation of kriging (Journel 1974).

Although there are several reports in the literature about applications of distance methods (e.g., USGS 1980; Wood et al. 1983; Rendu 2006) and kriging (e.g., Olea 1984; Bhat et al. 2015), the mere fact that distance methods are still being used indicates that the merits of the geostatistical methods remain unappreciated. This chapter is an application of the three families of methods for conducting sensitivity analyses on the reliability of the assessment of geologic resources due to variations in sample spacing. The simulation formulation given here is novel as it is an illustrative example used for comparing all three approaches.

2 Data

The data in Fig. 19.1 and Table 19.1 of the Appendix will be used to anchor the presentation. They are thickness measurements for the Anderson coal bed in a central part of the Gillette coal field of Wyoming taken from a more extensive study (Olea and Luppens 2014). A conversion factor could have been used to transform all the thickness values to tonnage, but it was decided to perform the analysis in terms of the attribute actually measured. The reader may want to know, however, that a density of 1,770 short tons per acre-foot for subbituminous coal is a good average value to estimate tonnage values and that the cell size used here is 400 ft by 400 ft.

With resources of more than 200 billion short tons of coal in place, the Gillette coal field is one of the largest coal deposits in the United States (Luppens et al. 2008). There are eleven beds of importance in the field. The Anderson coal bed, in the Paleocene Tongue River Member of the Fort Union Formation, is the thickest and most laterally continuous of the six most economically significant beds. This low sulfur, subbituminous coal has a field average thickness of 45 ft. Hence, it is the main mining target.

3 Traditional Uncertainty Assessment

For a long time, the prevailing practice has been the determination of uncertainty in mining assessments based on distance between drill holes. Figure 19.2 shows an example following U.S. Geological Survey Circular 891 (Wood et al. 1983), hereafter referred to as Circular 891. This example uses the drill holes in Fig. 19.1a after eliminating the holes along the diagonal. Circular 891 classifies resources into four categories according to the distance from the estimation location to the closest drill hole:

Classification of in situ resources according to Circular 891 for the data in Fig. 19.1a after eliminating the drill holes along the diagonal

-

0 to ¼ mi: measured

-

¼ to ¾ mi: indicated

-

¾ to 3 mi: inferred

-

More than 3 mi: hypothetical

Classification schemes like this are fairly simple and gained popularity prior to the advent of computers. Evaluating the degree of uncertainty of a magnitude or an event is the domain of statistics (e.g., Caers 2011). The standard approach for analyzing uncertainty consists of listing all possible values or events and then assigning a relative frequency of occurrence. A simple example is the tossing of a coin, where the outcomes are head and tail. For a fair coin, these two events occur with the same frequency, which is called probability when normalized to vary from 0 to 1. The same concept can be applied to any event or attribute, including coal bed thickness. For example, the outcome at a site not yet drilled could be modeled as the following random variable:

-

5–10 ft, probability 0.3

-

10–15 ft, probability 0.4

-

15–21 ft, probability 0.2

-

21–28 ft, probability 0.1

Note that the sum of the probabilities of all possible outcomes is 1.0. Random variables rigorously allow answering multiple questions about unknown magnitudes, in this case, the likely thickness to penetrate. A sample of just three assertions would be: (a) coal will certainly be intersected because the value zero is not listed among the possibilities; (b) it is more likely that the intersected thickness will be less than 15 ft than greater than 15 ft; and (c) odds are 6 to 4 that the thickness will be between 10 and 21 ft, or to put it differently, the 11 ft interval between 10 and 21 ft has a probability of 0.6 of containing the true thickness. These are the standard concepts and tools used universally in statistics to characterize uncertainty.

The classification system established by Circular 891 does not use probabilities and lacks the predictive power of a random variable approach. In particular,

-

The classification uses an ordinal scale (e.g., Urdan 2017), supposedly ranked, but the classification does not indicate how much more uncertain one category is relative to another. In practice, it has been found that errors may not be significantly different among categories (Olea et al. 2011).

-

The results of a distance classification are difficult to validate. The tonnage in a class denotes an accumulated magnitude over an extensive volume of the deposit. The entire portion of the deposit comprising a class would have to be mined in order to determine the exact margin of error in the classification for such a class. In practical terms, the classification is not falsifiable, thus it is unscientific (Popper 2000). Moreover, there is little value in determining the reliability of a prediction post mining.

-

The classification fails to consider the effect of geologic complexity. Coal deposits ordinarily contain several geologically different beds that may be penetrated drilling a single hole. When all beds are penetrated by the same vertical drill holes, the drilling pattern is the same for all beds. Using the Circular 891 classification method, the areal extension of each category is the same for the resources of each coal bed separately and for the accumulated resources considering all coal beds, while logic indicates that the extension of true reliability classes should be all different.

-

For similar reasons, in a multi-seam deposit, increasing the drilling density results in the same reduction in uncertainty for all coalbeds, which is also unrealistic.

-

The number of methods for estimating resources is continuously growing, hopefully for the better. Considering that not all methods are equally powerful, independently of the data, different methods offer varying degrees of reliability. The uncertainty denoted by the Circular 891 classification is insensitive to the methods used in the calculation of the tonnage. For example, inferred resources remain as inferred resources independently of the nature and quality of the methods used in the assessment.

Despite these drawbacks and the formulation of the superior alternatives below, Circular 891 and similar approaches remain the prevailing methods worldwide for the public disclosure of uncertainty in the assessment of mineral resources and reserves (JORC 2012; CRIRSCO 2013).

4 Kriging

Kriging is a family of spatial statistics methods formulated for the improvement in the reporting of uncertainty and in the estimation of the attributes of interest themselves. Although it is possible to establish links between kriging and other older estimation methods in various disciplines, mining was the driving force behind the initial developments of kriging and other related methods collectively known today as geostatistics (Cressie 1990).

Kriging is basically a generalization of minimum mean square error estimation taking into account spatial correlation. Kriging provides two numbers per location \( ({\mathbf{s}}_{o} ) \) conditioned to some sample of the attribute \( (z({\mathbf{s}}_{i} ),i = 1,2, \ldots ,N) \): an estimate of the unknown value \( (z^{*} ({\mathbf{s}}_{o} )) \) and a standard error \( (\sigma ({\mathbf{s}}_{o} )) \). The exact expression for these results depends on the form of kriging. For ordinary kriging, the most commonly applied form and the one used here, the equations are:

where:

- \( n \le N \) :

-

is a subset of the sample consisting of the observations closest to \( {\mathbf{s}}_{o} \);

- \( \gamma ({\mathbf{d}}) \) :

-

is the semivariogram, a function of the distance d between two locations;

- \( \lambda_{i} \) :

-

is a weight determined by solving a system of linear equations comprising semivariogram terms; and

- \( \mu \) :

-

is a Lagrange multiplier, also determined by solving the same system of equations.

The method presumes knowledge of the function characterizing the spatial correlation between any two points, which is never the case. A structural analysis must be conducted before running kriging to estimate this function: a covariance or semivariogram. The semivariogram can be regarded as a scaled distance function. The weights and the Lagrange multiplier depend on the semivariogram for multiple drill-hole to drill-hole distances and estimation location to drill-hole distances. For details, see for example Olea (1999).

The two terms, \( z^{*} ({\mathbf{s}}_{o} ) \) and \( \sigma^{2} ({\mathbf{s}}_{o} ) \), are the mean and the variance of the random variable modeling the uncertainty of the true value of the attribute \( z({\mathbf{s}}_{o} ) \), terms that are compatible with all that is known about the attribute through the sample of size N. Variance is a measure of dispersion, in this case, dispersion of possible values around the estimate, which is the most likely value. Hence, changing the sample, a sensitivity analysis of kriging variance is a sensitivity analysis of variations in uncertainty due to changes in the sampling scheme. From Eq. 19.2, the kriging variance does not depend directly on the observations. The dependence is only indirect through the semivariogram, which is based on the data. Considering that there is one true semivariogram per attribute, changes in adequate sampling should not result in significant changes in the estimated semivariogram, which is kept constant. This independence between data and standard error facilitates the application of kriging to the sensitivity analysis in the reliability of an assessment due to changes in sampling strategy because mathematically actual measurements are not necessary to calculate standard errors; the modeler only has to specify the semivariogram and the sampling locations.

Figure 19.3 shows the set of estimated semivariogram values obtained using the sample in Fig. 19.1 plus a model fitting the points for the purpose of having valid semivariogram values for any distance. In this case, the fitted curve is called a spherical model with a nugget of 20 sq ft, sill of 595 sq ft and a spatial correlation range of 88,920 ft. Geologically, the nugget is related to the variance of short scale fluctuations; the sill is of the same order of magnitude as the sample variance, and the correlation range is equal to half the average geographical size of the anomalies. For details on structural analysis, see for example Olea (2006).

Figure 19.4 shows the results of applying ordinary kriging to the sample in Fig. 19.1a and Table 19.1 in the Appendix. As expected, the standard error is zero at the drill holes because there is no uncertainty where measurements have been taken.

Ordinary kriging maps for the Anderson coal bed in a central part of the Gillette coal field (Wyoming) using the sample in Fig. 19.1: a thickness; b standard error

Although kriging can analyze any configuration, Fig. 19.5 only relates to additions or eliminations to the basic sample in Fig. 19.1a. Values along the diagonal were used only for modeling the semivariogram and producing Fig. 19.4. Figure 19.5a also has every other row and column eliminated. Estimates could be produced for the first two configurations because thickness is known at each drill hole. The other maps were produced by interpolating locations in the sample with the next largest spacing; it is only possible to produce the standard error map for Fig. 19.5c–e.

Ordinary kriging standard error for the same configuration in Fig. 19.2 for several average spacings: a 6 mi; b 3 mi; c 1.5 mi; d 3/4 mi; e 3/8 mi

The similarity between Figs. 19.2 and 19.5b may lead to incorrect conclusions. Although the location and extension of similar colors are approximately the same, what is important is the meaning of the colors. Figure 19.2 does not provide any numerical information that can be associated with the accuracy and the precision of the estimated values. In Fig. 19.5b the numbers are standard errors, a direct measurement of estimation reliability. In other more irregular configurations, there will not be similarity in color patterns no matter how the colors are selected. For example, by expanding the boundary of the study area, Fig. 19.6 shows how the Circular 891 classification is totally insensitive to the fact that, along the periphery, there is an increase in uncertainty because the data are now to one side, not surrounding the estimation locations. Instead, kriging accounts for the fact that extrapolation is always a more uncertain operation than interpolation, an important capability when accounting for boundary effects.

Kriging is able to provide random variables for the statistical characterization of uncertainty if the modeler is willing to introduce a distributional assumption. \( z^{*} ({\mathbf{s}}_{o} ) \) and \( \sigma^{2} ({\mathbf{s}}_{o} ) \) are the mean and the variance of the distribution of the random variable providing the likely values for \( z({\mathbf{s}}_{o} ) \). These parameters are necessary but not sufficient to fully characterize any distribution. However, this indetermination can be eliminated by assuming a distribution that is fully determined with these two parameters. Ordinarily, the distribution of choice is the normal distribution, followed by the lognormal. The form of the distribution does not change by subtracting \( z({\mathbf{s}}_{o} ) \) from all estimates. As the difference \( z^{*} ({\mathbf{s}}_{o} ) - z({\mathbf{s}}_{o} ) \) is the estimation error, the distributional assumption also allows characterizing the distribution for the error at \( {\mathbf{s}}_{o} \).

Kriging with a distribution for the errors overcomes all the disadvantages of the distance methods listed in the previous section:

-

It is possible to calculate the probability that the true value of the attribute lies in any number of intervals. Probabilities are a form of a ratio variable, for which zero denotes an impossible event and, say, a 0.2 probability denotes twice the likelihood of occurrence of an event than 0.1.

-

Validation is modular. An adequate theory assures that, on average, \( z^{*} ({\mathbf{s}}_{o} ) \) and \( \sigma^{2} ({\mathbf{s}}_{o} ) \) are good estimates of reality. Yet, as illustrated by an example in the last Section, if going ahead with validation of the uncertainty modeling primarily to check the adequacy of the normality assumption, it is not necessary to validate all possible locations throughout the entire deposit to evaluate the quality of the modeling.

-

The effect of complexity in the geology is taken into account by the semivariogram.

-

In general, the thickness of every coal bed or the accumulated values of thickness for several coal beds has a different semivariogram. Thus, even if the sampling configuration is the same, the standard error maps will be different.

-

The characterization of uncertainty is specific to the estimation method because the results are valid only for estimated values using the same form of kriging used to generate the standard errors.

Figure 19.7 summarizes the results of the maps in Fig. 19.5. Display of the 95th percentile is based on the assumption that all random variables follow normal distributions. The curves clearly outline the consequences of varying the spacing in a square sampling pattern from 2,000 to 32,000 ft. So, for example, if it is required that all estimates in the study area must have a standard error less than 10 ft, then the maximum spacing must be at most 12,500 ft. The validity of the results, however, is specific to the attribute and sampling pattern: thickness of the Anderson coal bed investigated with a square grid. Any change in these specifications requires preparation of another set of curves.

Sensitivity of ordinary kriging to spacing of mean standard error, its 95th percentile, and the maximum standard error based on the Fig. 19.5 configurations

5 Stochastic Simulation

Despite limited acceptance, the kriging variance has been in use for a while in the sensitivity analysis of uncertainty to changes in sampling distances and configurations (e.g., Olea 1984; Cressie et al. 1990). Kriging, like any mathematical method, has been open to improvements. One result has been the formulation of another family of methods: stochastic simulation.

Relative to the topic of this chapter, stochastic simulation offers two improvements: (a) it is no longer necessary to assume the form for the distribution providing all possible values for the true value of the attribute \( z({\mathbf{s}}_{o} ) \); and (b) the standard error is sensitive to the data.

As seen in Fig. 19.4, for every attribute and sample, kriging produces two maps, a map of the estimate and a map of the standard error. The idea of stochastic simulation is to characterize uncertainty by producing instead multiple attribute maps, all compatible with the data at hand and each representing one possible outcome of reality—realization, for short. From among the many available methods of geostatistical simulation, sequential Gaussian simulation has been chosen for this study because of its simplicity, versatility and efficiency (Pyrcz and Deutsch 2014). Figure 19.8 shows four simulated realizations, each of which is a possible reality in the sense that the values have the same statistics and spatial statistics (semivariogram) and the simulation reproduces the known sample values (i.e., the sample used to prepare Fig. 19.5b).

A sample of four sequential Gaussian realizations using the same data used in the preparation of Fig. 19.5b

Generation of significant results needs preparation of more realizations than the four in Fig. 19.8. An estimation of uncertainty requires summarizing the fluctuations from realization to realization, either at local or global scales. Figure 19.9 is an example of local fluctuation summarizing all values of thickness at the same location for 100 realizations. This histogram is the numerical characterization of uncertainty through a random variable. There is one random variable for each of the 57,528 pixels (cells) comprising each realization. As clearly implied by the selected values in the tabulation, this collection of 100 maps provides multiple predictions of the true thickness value that should be expected at this location. For example, the most likely value (mean) is 65.75 ft; the standard error is 13.47 ft; and there is a 0.95 probability that the coal bed will be less than 87.8 ft thick.

Maps can be generated for various statistics across the study area to display fluctuations in their values. Figure 19.10 shows a map of the mean and a map of the standard error. Note that the map for the mean is quite similar to the ordinary kriging map in Fig. 19.4a. More importantly, the maps for the standard errors in Figs. 19.5b and 19.10b are significantly different. The differences in the standard errors are primarily the result of the dependency of the standard error not only on the semivariogram and the drill hole locations, but also on the values of thickness as well. For example, comparing Figs. 19.1a and 19.10b, despite the regularity in the drilling, there is less uncertainty in the southwest corner where all values are low as well as in the south central part where all values are consistently high.

Production of a display of the standard error equivalent to that in Fig. 19.5 is more challenging now that the standard deviation must be extracted from multiple realizations and the preparation of each realization requires a value at each drill hole in the configuration of interest to complete the analysis. Figure 19.11 shows the equivalent results to Fig. 19.5 for the same drill holes, but now produced after applying sequential Gaussian simulation. The additional data necessary to prepare the maps in Fig. 19.11c–e where obtained by randomly selecting 10 of the 100 realizations used to prepare the maps in Figs. 19.8 and 19.10. The data for the hypothetical drill holes were taken from the values at the collocated nodes in these selected 10 realizations, thus obtaining 10 datasets consisting partly of the 48 actual data in Fig. 19.11b plus the artificial data obtained by “drilling” the realizations. Finally, each dataset was used to generate 100 realizations, for a total of 1,000 realizations per configuration. As mentioned for Fig. 19.10b, despite the regularity of the drill hole pattern, the fluctuations in standard error are no longer completely determined by the drilling pattern.

Sequential Gaussian simulation standard error for the same configuration on Fig. 19.2 for several average spacings: a 6 mi; b 3 mi; c 1.5 mi; d 3/4 mi; e 3/8 mi

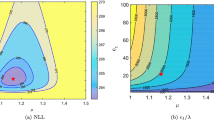

Figure 19.12 is the summary equivalent to that in Fig. 19.7. Considering the completely different methodologies behind both sets of curves, the results are quite similar, particularly the curves for the mean standard error, which are almost identical. The more extreme standard errors of the sequential Gaussian simulation are larger than those for ordinary kriging in the case of the 95th percentile and the maximum value. The remaining question is: Which approach produces the most realistic forecasts of uncertainty?

Sequential Gaussian simulation sensitivity to spacing of mean standard error, its 95th percentile, and the maximum standard error based on the configurations in Fig. 19.11

6 Validation

Figure 19.13 provides an answer to the question above in terms of percentiles. A percentile is a number that separates a set of values into two groups, one below and the other one above the percentile. The percentage of values below gives the name to the percentile. For example, in Fig. 19.9, the value 46.22 ft separates the 100 values of thickness into two classes, those below and those equal to or above 46.22 ft. It turns out that only 5 of the 100 values are below 46.22 ft. Hence, 46.22 ft is the 5th percentile of that dataset. Accepting only integer values of percentages, there are 99 percentiles in any dataset. The quality of a model of uncertainty can be validated by checking the proportion of true values that are actually below the percentiles of the prediction random variables collocated with data not used in the modeling. One of the reasons for selecting the Anderson coal thickness for the study is that there are much more data than the 48 values used to generate the realizations, a generous set of 2,136 additional values to be precise. This larger number of values has been used for checking the accuracy of the percentiles, not only the 5th percentile, but all 99 percentiles. In the graphs, the actual percentage shows, on average, the proportion of times the true value was below the percentile of a random variable at the location of a censored measurement. For example, in Fig. 19.13a, 641 times out of 2,136 (i.e., 30%) the true value was indeed below the 35th percentile. Ideally, all dots should lie along the main diagonal. The clear winner is sequential Gaussian simulation.

7 Conclusions

Distance methods, kriging and stochastic simulation rank, in that order, in terms of increasing detail and precision of the information that they are able to provide concerning the uncertainty associated to any spatial resource assessment.

The resource classification provided by distance methods is completely independent of the geology of the deposit and the method applied to calculate the mineral resources. The magnitude of the resource per class has no associated quantitative measure of the deviation that could be expected between the calculated resource and the actual amount in place.

The geostatistical methods of kriging and stochastic simulation base the modeling on the concept of random variable used in statistics, which allows the same type of probabilistic forecasting used in other forms of risk assessments. Censored data were used for validating the accuracy of the probabilistic predictions that can be made using the geostatistical methods. The results were entirely satisfactory, particularly in the case of stochastic simulation.

References

Bhat S, Motz LH, Pathak C, Kuebler L (2015) Geostatistics-based groundwater-level monitoring network design and its application to the Upper Floridan aquifer, USA. Environ Monit Assess 187(1):4183, 15

Caers J (2011) Modeling uncertainty in the earth sciences. Wiley-Blackwell, Chichester, UK, p 229

Cressie N (1990) The origins of kriging. Math Geol 22(3):239–252

Cressie N, Gotway CA, Grondona MO (1990) Spatial prediction from networks. Chemometr Intell Lab Syst 7(3):251–271

CRIRSCO (Combined Reserves International Reporting Standards Committee) (2013) International reporting template for the public reporting of exploration results, mineral resources and mineral reserves, pp 41. http://www.crirsco.com/templates/international_reporting_template_november_2013.pdf

JORC (Joint Ore Reserves Committee) (2012) Australasian code for reporting of exploration results, mineral resources and ore reserves. http://www.jorc.org/docs/jorc_code2012.pdf

Journel AG (1974) Geostatistics for conditional simulation of ore bodies. Econ Geol 69(5):673–687

Luppens JA, Scott DC, Haacke JE, Osmonson LM, Rohrbacher TJ, Ellis MS (2008) Assessment of coal geology, resources, and reserves in the Gillette coalfield, Powder River Basin, Wyoming. U.S. Geological Survey, Open-File Report 2008-1202, pp 127. http://pubs.usgs.gov/of/2008/1202/

Matheron G (1965) Les variables régionalisées et leur estimation; Une application de la théorie des fonctions aléatories aux Sciences de la Nature. Masson et Cie, Paris, pp 305

Olea RA (1984) Sampling design optimization for spatial functions. J Int Assoc Math Geol 16(4):369–392

Olea RA (1999) Geostatistics for engineers and earth scientists. Kluwer Academic Publishers, Norwell, MA, p 303

Olea RA (2006) A six-step practical approach to semivariogram modeling. Stoch Env Res Risk Assess 39(5):453–467

Olea RA, Luppens JA (2014) Modeling uncertainty in coal resource assessments, with an application to a central area of the Gillette coal field, Wyoming. U.S. Geological Survey Scientific Investigations Report 2014-5196, pp 46. http://pubs.usgs.gov/sir/2014/5196/

Olea RA, Luppens JA, Tewalt SJ (2011) Methodology for quantifying uncertainty in coal assessments with an application to a Texas lignite deposit. Int J Coal Geol 85(1):78–90

Popper KR (2000) The logic of scientific discovery. Routledge, London, p 480

Pyrcz MJ, Deutsch CV (2014) Geostatistical reservoir modeling, 2nd edn. Oxford University Press, New York, p 433

Rendu JM (2006) Reporting mineral resources and mineral reserves in the United States of America—Technical and regulatory issues. In: Proceedings, Sixth International Mining Geology Conference: Australasian Institute of Mining and Metallurgy, pp 11–20

Urdan TC (2017) Statistics in plain English. Routledge, New York, p 265

USBM and USGS (U.S. Bureau of Mines and U.S. Geological Survey) (1976) Coal resource classification system of the U.S. Bureau of Mines and U.S. Geological Survey. Geological Survey Bulletin 1450-B, pp 7. https://pubs.usgs.gov/bul/1450b/report.pdf

USGS (U.S. Geological Survey) (1980) Principles of resource/reserve classification for minerals. U.S. Geological Survey Circular 831, pp 5. http://pubs.usgs.gov/circ/1980/0831/report.pdf

Wood GH Jr, Kehn TM, Carter MD, Culbertson WC (1983) Coal resources classification system of the U.S. Geological Survey. U.S. Geological Survey Circular 891, pp 65. http://pubs.usgs.gov/circ/1983/0891/report.pdf

Acknowledgements

This contribution completed a required review and approval process by the U.S. Geological Survey (USGS) described in Fundamental Science Practices (http://pubs.usgs.gov/circ/1367/) before final inclusion in this volume. I wish to thank Brian Shaffer and James Luppens (USGS), Peter Dowd (University of Adelaide) and Josep Antoni Martín-Fernández (Visiting Fulbright Scholar, USGS) for suggestions leading to improvements to earlier versions of the manuscript.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Appendix

Appendix

See Table 19.1.

Rights and permissions

<SimplePara><Emphasis Type="Bold">Open Access</Emphasis> This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.</SimplePara> <SimplePara>The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.</SimplePara>

Copyright information

© 2018 The Author(s)

About this chapter

Cite this chapter

Olea, R.A. (2018). Advances in Sensitivity Analysis of Uncertainty to Changes in Sampling Density When Modeling Spatially Correlated Attributes. In: Daya Sagar, B., Cheng, Q., Agterberg, F. (eds) Handbook of Mathematical Geosciences. Springer, Cham. https://doi.org/10.1007/978-3-319-78999-6_19

Download citation

DOI: https://doi.org/10.1007/978-3-319-78999-6_19

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-78998-9

Online ISBN: 978-3-319-78999-6

eBook Packages: Earth and Environmental ScienceEarth and Environmental Science (R0)