Abstract

Cutting-edge deep-Earth research requires global-scale collaborative observation on land and sea. Because oceans and seas cover about 70% of the Earth’s surface, high-quality seismic observation in marine areas is the fundamental prerequisite for obtaining complete information about the deep Earth.



4.1 Geophysical Exploration Technologies for Deep Earth

4.1.1 Theories and Technologies for Seismic Surveys

Development of sea floor detection and distributed optical fiber sensing seismic survey technologies

Cutting-edge deep-Earth research requires global-scale collaborative observation on land and sea. Because oceans and seas cover about 70% of the Earth’s surface, high-quality seismic observation in marine areas is the fundamental prerequisite for obtaining complete information about the deep Earth. In recent years, intensive research and development (R&D) has made effective submarine observation possible, supporting a series of deep geophysical exploration projects that deploy broadband ocean bottom seismographs (OBS) (PLUME, SAMPLE, Pacific Array, and others). Distributed optical fiber sensing technologies have achieved meter-scale spatial sampling of seismic waves; they are widely applied in petroleum exploration as well as in natural earthquake observation, and are gradually becoming established as a new generation of seismic survey technology. They make long-term, continuous seismic surveys possible in the deep sea, in sub-zero regions, and even on extraterrestrial bodies, all of which are difficult to cover with traditional networks of seismographic stations. New technologies such as big data, cloud computing, and artificial intelligence may support the development of new imaging methods based on distributed optical fiber sensing. Large-scale, high-density surveys now produce terabyte- and even petabyte-scale datasets that can be processed with big data mining, which is driving a revolution in seismic surveying. Meanwhile, the construction of data sharing platforms helps ensure maximum use of seismic survey data.
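To give a sense of why distributed optical fiber surveys reach terabyte and petabyte volumes, the short Python sketch below estimates the raw data rate of a single distributed acoustic sensing (DAS) interrogator. The fiber length, 1 m channel spacing, 1 kHz sampling rate, and 4-byte samples are illustrative assumptions rather than parameters of any particular survey, and the function name is hypothetical.

```python
def das_bytes_per_day(fiber_length_m: float, channel_spacing_m: float,
                      sample_rate_hz: float, bytes_per_sample: int) -> float:
    """Raw, uncompressed bytes recorded per day by one DAS interrogator."""
    channels = fiber_length_m / channel_spacing_m  # number of sensing channels
    seconds_per_day = 86_400
    return channels * sample_rate_hz * bytes_per_sample * seconds_per_day


# Hypothetical survey: 50 km of fiber, 1 m channels, 1 kHz sampling, 32-bit samples.
per_day = das_bytes_per_day(50_000, 1.0, 1_000, 4)
print(f"{per_day / 1e12:.1f} TB per day")
print(f"{per_day * 365 / 1e15:.1f} PB per year")
```

With these assumed settings the estimate is about 17 TB per day, or roughly 6 PB per year of continuous recording before compression.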

Emphasis on breaking through survey system limitations, and drawing lessons from multi-disciplinary thinking

Because it relies on surface observation systems, deep exploration has to depend on simple remote measurement (‘telemetry’) to acquire data. The uneven distribution of seismic stations and of earthquakes constrains the accuracy and resolution of structural imaging of the deep Earth. Breaking through these technical bottlenecks is an important objective for future deep exploration. For example, data from current observation systems need to be supplemented and expanded by using wavefield interferometry to extract structural signals from continuous noise records and existing seismic records. With wavefield interferometry (or continuation), surface observation systems can be virtually migrated to regions near deep targets, turning ‘telemetry’ into ‘short-distance measurement’ and significantly improving the accuracy and resolution of structural imaging. However, the concepts and methods of seismology, interferometry theory, signal extraction, and analytical technology have yet to be applied effectively in actual deep exploration.
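The idea of extracting structural signals from continuous noise can be illustrated with a toy example. In the simplified Python sketch below, which assumes a single synthetic noise wavefield that passes station A and then station B along one path, cross-correlating the two noise records recovers the inter-station travel time as the lag of the correlation peak; in interferometry this correlation is treated as if station A were a virtual source recorded at station B. Real ambient-noise workflows add preprocessing and stack correlations over long records; the function names and parameters here are hypothetical.

```python
import random


def cross_correlate(a, b, max_lag):
    """Return {lag: sum_t a[t] * b[t + lag]} for lag in [-max_lag, max_lag]."""
    n = len(a)
    result = {}
    for lag in range(-max_lag, max_lag + 1):
        total = 0.0
        # Only sum over samples where both a[t] and b[t + lag] exist.
        for t in range(max(0, -lag), min(n, n - lag)):
            total += a[t] * b[t + lag]
        result[lag] = total
    return result


def estimate_delay(a, b, max_lag):
    """Lag (in samples) at which the noise cross-correlation peaks."""
    cc = cross_correlate(a, b, max_lag)
    return max(cc, key=cc.get)


# Synthetic data: one noise wavefield reaches station A, then station B
# TRUE_DELAY samples later; each station also records independent local noise.
rng = random.Random(42)
N, TRUE_DELAY = 4000, 25
source = [rng.gauss(0.0, 1.0) for _ in range(N + TRUE_DELAY)]
station_a = [source[t + TRUE_DELAY] + 0.5 * rng.gauss(0.0, 1.0) for t in range(N)]
station_b = [source[t] + 0.5 * rng.gauss(0.0, 1.0) for t in range(N)]

print(estimate_delay(station_a, station_b, max_lag=60))
```

With the fixed random seed, the correlation peak falls at the imposed 25-sample delay; dividing the station spacing by that lag (converted to seconds) would give an apparent propagation velocity.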

Enhanced survey methods for the lower mantle and core, and promotion of comprehensive, quantitative, and multi-scale research

Research on the structure of the lower mantle and core is currently weak and must be strengthened to achieve the goal of understanding deep-Earth processes and the habitable Earth. Deep exploration will be required to build a relatively unified and complete understanding of the Earth system from its deepest zones to the surface. Studies of deep structures and properties need greater precision to reduce uncertainty. A comprehensive understanding of deep structures, physical and chemical properties, dynamic processes, deep-shallow coupling, and interactions among Earth’s spheres requires more precise geophysical exploration and multi-disciplinary observation (e.g. geophysics, geology, and rock geochemistry). Dynamic simulations and experiments should also be carried out as part of a comprehensive, systematic, multi-scale research program.

4.1.2 Quantum Sensing and Deep Geophysics

Quantum gravity and magnetic sensing technologies can deliver measurements several orders of magnitude more accurate and finer-grained than traditional methods (Peters et al. 2001). If the precision of quantum gravity measurement can be improved to 10⁻¹ µGal (0.1 µGal), it may become possible to record surface gravity changes caused by motion of the core (surface gravity changes of approximately 0.1 µGal are currently thought to be generated by decade-scale core movements). Innovation in quantum gravity and magnetic sensing means that we will soon be able to observe delicate structures in the deep Earth, their characteristics, and their dynamic variations with high accuracy. The mechanisms of medium- and long-term variations in the inner core will be revealed by acquiring new evidence on deep core-mantle coupling, the movement patterns of the solid inner core and liquid outer core, and density differences at the core-mantle boundary. Patterns of interaction among Earth’s spheres on different spatiotemporal scales will be determined from new information about mass transfer within the Earth and crustal movements. The new technology will also greatly improve our capacity to monitor and predict earthquakes and volcanic activity, to quantitatively monitor mass transfer and changes in surface systems (e.g. continental water, sea water, and glaciers), and to evaluate the influence of climate change and human activity on water resources (Fig. 4.1). Improving the accuracy and resolution of quantum geomagnetic measurement will also help us better understand the motion of the liquid core and further explore the mechanisms of decade-scale Earth variations.
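For scale, the 0.1 µGal target can be written in SI units and compared with mean surface gravity (taken here as g ≈ 9.81 m s⁻²). This short worked conversion uses only the definition 1 Gal = 1 cm s⁻²:

```latex
\begin{aligned}
0.1\,\mu\mathrm{Gal} &= 10^{-1}\times 10^{-6}\,\mathrm{Gal}
  = 10^{-7}\,\mathrm{cm\,s^{-2}} = 10^{-9}\,\mathrm{m\,s^{-2}},\\
\frac{\Delta g}{g} &\approx \frac{10^{-9}\,\mathrm{m\,s^{-2}}}{9.81\,\mathrm{m\,s^{-2}}}
  \approx 1.0\times 10^{-10}.
\end{aligned}
```

Detecting core-driven signals of this size therefore means resolving changes of roughly one part in 10¹⁰ of Earth’s surface gravity.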

4.2 Deep-Earth Geochemical Tracer and High-Precision Dating Techniques

To better understand the formation and evolution of the habitable Earth, new techniques are needed to constrain Earth’s differentiation processes, to develop new proxies that are sensitive to Earth’s habitability and its rates of change, and to establish the timelines of major geological events. Geochemical tracing methods and high-precision dating techniques will play vital roles.

4.2.1 Geochemical Technologies for Tracing Early-Stage Earth Evolution and Core-Mantle Differentiation

‘Early-stage’ Earth evolution refers to the important geological events that occurred during roughly the first billion years (ca. 1 Ga), from planet formation to crust formation (4.56–3.6 Ga). Cutting-edge issues include the composition and sources of primitive Earth materials, giant impacts and their effects on Earth’s composition, core-mantle differentiation, core formation and the evolution of the geodynamo, crust-mantle differentiation, the ancient magma ocean, primitive continental crust, early-stage Earth structure types, the timing of the emergence of pre-plate tectonics and plate tectonics, the formation of the early hydrosphere and atmosphere, and the origins of life. However, samples from the early Earth are extremely rare and valuable, and current traditional geochemical analysis technologies are inadequate for understanding these ancient processes and features. New theories and technologies for isotope dating and geochemical tracing are urgently required and should be based on multi-disciplinary cooperation. Time is the basis for studying geological processes. Besides traditional radioactive isotope dating methods (e.g. zircon U–Pb and single-mineral ⁴⁰Ar/³⁹Ar), dating with short-lived extinct nuclides has considerable advantages in establishing the chronology of early Earth geological events. High-precision extinct nuclide dating (e.g. the ⁵³Mn–⁵³Cr, ²⁶Al–²⁶Mg, ¹⁸²Hf–¹⁸²W, and ¹⁴⁶Sm–¹⁴²Nd systems) constrains the relative timing of the separation of the metallic core from the silicate mantle, and of the crust from the mantle, and can also trace core-mantle differentiation and heterogeneous mantle processes. Developing analytical theories and technologies for high-precision measurement of stable metal isotopes (Ti, V, Zr, Ni, Al, Ca, Cr, Zn, Cd, In, Pd, Os, Ru, etc.) can reveal the distribution of elements during core-mantle differentiation and the factors controlling high-temperature isotope fractionation in that process (e.g. temperature, pressure, oxygen fugacity, and chemical composition). This will help shed light on the process of core-mantle differentiation and on the mechanisms that formed mantle heterogeneity in the early Earth.
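The advantage of short-lived systems can be made concrete with the decay law R(t) = R₀·e^(−λt), where λ = ln 2 / t½. Comparing the initial parent ratios recorded by two reservoirs gives the time between their closures, Δt = ln(R_early / R_late) / λ. The Python sketch below applies this to ¹⁸²Hf, assuming a half-life of about 8.9 Myr; the ratios are hypothetical, and real Hf–W work infers them from measured W isotope anomalies and Hf/W ratios, which this sketch does not model.

```python
import math

HF182_HALF_LIFE_MYR = 8.9  # approximate half-life of 182Hf (assumed round value)


def relative_age_myr(initial_ratio_early, initial_ratio_late, half_life_myr):
    """Time between two closure events recorded by an extinct nuclide system.

    Each ratio is the inferred initial parent ratio (e.g. 182Hf/180Hf) when a
    reservoir closed. Since R(t) = R0 * exp(-lambda * t), the interval between
    closures is ln(R_early / R_late) / lambda, with lambda = ln 2 / half-life.
    """
    decay_constant = math.log(2.0) / half_life_myr
    return math.log(initial_ratio_early / initial_ratio_late) / decay_constant


# Hypothetical ratios: the later reservoir closed when the initial ratio had
# fallen to one quarter of the earlier value, i.e. two half-lives later.
print(relative_age_myr(1.0e-4, 0.25e-4, HF182_HALF_LIFE_MYR))
```

The example yields about 17.8 Myr, two half-lives. Because the parent is effectively gone after a few tens of millions of years, such systems resolve early events finely but cannot date later ones.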

During core-mantle differentiation, a relatively small amount of light elements entered the core, and their separation under high-pressure, high-temperature (HPHT) conditions after core formation could have provided the energy needed to initiate the geomagnetic field. Developing high-precision analytical technology for light element isotopes (Si, C, S, N, and O), particularly low-abundance isotopes (expressed, e.g., as Δ¹⁷O and δ³⁴S), is essential for understanding how light elements were distributed during core-mantle differentiation and for understanding the process itself.

4.2.2 Index System of Earth Habitability Elements

Understanding how the factors that make Earth habitable have evolved over time is a prerequisite for studying the formation of Earth’s habitability and the controlling role of the deep Earth. It is also an essential basis for comprehending the nature of every crucial geological event, their systemic driving forces, the connections between the circulation of different systems, and their feedback mechanisms. Only with this information can the story of the habitable Earth be fully told and the reasons for Earth’s habitability dissected, ultimately laying the foundation for a systematic theory of the habitable Earth. Nevertheless, many questions about the formation and mechanisms of Earth’s habitability remain unresolved to date. Temperature, water, and oxygen are three essential elements of the habitable Earth, and their relative stability or dynamic balance in surface and near-surface systems is key to maintaining habitability. Early life consisted mainly of bacteria, archaea, and other microorganisms, but it is still unclear how these microorganisms interacted with Earth’s environment and how they contributed to the present configuration of these three basic elements. For the Great Oxidation Event, neither the initiation nor the termination has been clearly explained, and even the timing of its episodes has not been accurately dated. Its temporal relationship to, and position in the evolutionary sequence relative to, a series of other vital events (continental crust evolution, including growth, abrupt compositional changes, and uplift; global glaciation; the flourishing of cyanobacteria; and the deposition of banded iron formations) has still not been established. In essence, none of its interactions and correlations with other major events are fully understood.

There are many methods for reconstructing water temperatures (e.g. oxygen isotopes in calcareous organisms such as foraminifers, bivalves, and brachiopods; foraminiferal Mg/Ca; coral skeletal Sr/Ca; foraminiferal δ⁴⁴Ca; and TEX₈₆), but all of these proxies have significant shortcomings. For instance, both the oxygen isotope method and the trace element method are biased because the pH and salinity of seawater affect the isotopic and trace element composition of planktonic foraminifer shells. Such shortcomings hinder the confirmation or rebuttal of scientific hypotheses and also limit our ability to reconstruct the evolution of the habitable, oxygen-bearing atmosphere and to predict its future. An index system should therefore be constructed starting from the critical elements of the habitable Earth and the material basis necessary for life. This will help us reach a deeper understanding of the formation and evolution of our habitable environment and will also be a focus of future research.
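To see how a non-thermal bias propagates into a temperature estimate, consider the exponential form commonly used for foraminiferal Mg/Ca thermometry, Mg/Ca = B·exp(A·T). The Python sketch below assumes illustrative values A = 0.09 per °C and B = 0.38 mmol/mol (published calibrations differ by species and study). Because the calibration is exponential, a multiplicative bias in Mg/Ca, such as one caused by salinity or pH, shifts the inferred temperature by a constant ln(1 + bias)/A.

```python
import math

# Illustrative calibration constants (assumed): Mg/Ca = B * exp(A * T),
# with Mg/Ca in mmol/mol and T in degrees Celsius.
A_PER_DEGC = 0.09
B_MMOL_MOL = 0.38


def temperature_from_mgca(mg_ca):
    """Invert the exponential calibration to get temperature (deg C)."""
    return math.log(mg_ca / B_MMOL_MOL) / A_PER_DEGC


def temperature_bias(relative_mgca_bias):
    """Apparent temperature error from a multiplicative Mg/Ca bias.

    A non-thermal effect that scales Mg/Ca by (1 + relative_mgca_bias)
    shifts the inferred temperature by ln(1 + bias) / A, whatever the true T.
    """
    return math.log1p(relative_mgca_bias) / A_PER_DEGC


measured = B_MMOL_MOL * math.exp(A_PER_DEGC * 20.0)  # shell grown at 20 deg C
print(round(temperature_from_mgca(measured), 6))      # recovers 20.0
print(round(temperature_bias(0.10), 2))               # about 1.06 deg C
```

Under these assumptions, a 10% salinity- or pH-driven increase in Mg/Ca would be misread as roughly 1 °C of warming.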

4.2.3 High-Precision Dating Technique

The application of secondary ion mass spectrometry (SIMS) and laser ablation inductively coupled plasma mass spectrometry (LA-ICP-MS) in geochronology has been a great success in China and has reached a high level by international standards. These techniques enable precise in-situ analysis of isotope ratios at the micrometer to sub-micrometer scale and have thus markedly improved analytical efficiency. The combination of chromatography with accelerator mass spectrometry has extended ¹⁴C analysis from milligram-scale samples down to the molecular scale. Under the international Earth time scheme (EARTHTIME), whose key objective is to improve time resolution, the accuracy of U–Pb and Ar/Ar dating has improved from roughly 1% to 0.1%, and discrepancies between laboratories and between earlier dating systems have largely been eliminated. This has enabled the leap from merely determining the ages of geological events to tracing the rates of geological processes. By comparison, no significant breakthrough in high-precision isotope dilution thermal ionization mass spectrometry (ID-TIMS) dating has yet been achieved in China. The core technologies for high-precision chronology, all of which are now urgently needed, are the development and calibration of isotope spikes and standard samples, the measurement of fundamental parameters such as decay constants, and the development of data processing software.
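The practical meaning of moving from about 1% to 0.1% can be illustrated with the ²⁰⁶Pb/²³⁸U system, t = ln(1 + ²⁰⁶Pb*/²³⁸U)/λ₂₃₈, using the conventional decay constant λ₂₃₈ = 1.55125 × 10⁻¹⁰ yr⁻¹. The Python sketch below propagates only the uncertainty of the measured ratio for a hypothetical 250 Ma zircon; it deliberately ignores decay-constant and spike-calibration uncertainties, which are among the fundamental parameters named above as core technologies.

```python
import math

LAMBDA_238U = 1.55125e-10  # decay constant of 238U, per year


def u_pb_age_years(pb206_u238):
    """206Pb*/238U age: t = ln(1 + ratio) / lambda."""
    return math.log1p(pb206_u238) / LAMBDA_238U


def age_uncertainty_years(pb206_u238, relative_ratio_error):
    """1-sigma age uncertainty from the ratio measurement alone.

    dt/dR = 1 / (lambda * (1 + R)), so sigma_t = R * rel_err / (lambda * (1 + R)).
    Decay-constant and tracer-calibration uncertainties are ignored here.
    """
    sigma_ratio = pb206_u238 * relative_ratio_error
    return sigma_ratio / (LAMBDA_238U * (1.0 + pb206_u238))


ratio = math.expm1(LAMBDA_238U * 250e6)  # ratio expected for a 250 Ma zircon
for rel_err in (0.01, 0.001):
    sigma_myr = age_uncertainty_years(ratio, rel_err) / 1e6
    print(f"ratio precision {rel_err:.1%}: age {u_pb_age_years(ratio) / 1e6:.1f} Ma "
          f"+/- {sigma_myr:.2f} Myr")
```

For this hypothetical sample, the age uncertainty shrinks from about ±2.5 Myr to about ±0.25 Myr, the scale at which the rates of geological processes, not just their ages, start to become resolvable.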

4.2.4 HPHT Experiments and Computing Simulation Techniques

As a bridge between geochemistry and geophysics, HPHT simulation is a crucial foundation for elucidating and testing new geoscientific theories, ideas, and concepts. The future development of HPHT experimental and simulation techniques lies in combining geophysics, microstructural analysis, numerical calculation, and simulation. The temperatures and pressures achieved in simulations so far cover conditions from the crust to the core. In particular, combining synchrotron radiation with HPHT experimental techniques paves the way for significant breakthroughs in the study of the deep Earth interior.
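For a sense of the pressures involved, a first-order overburden estimate P ≈ ρgh can be computed as below. The mean density (3600 kg m⁻³) and gravity (9.9 m s⁻²) are assumed round values for the crust and upper mantle, and the function name is hypothetical.

```python
def lithostatic_pressure_gpa(mean_density_kg_m3, gravity_m_s2, depth_km):
    """First-order overburden pressure P = rho * g * h, returned in GPa."""
    pressure_pa = mean_density_kg_m3 * gravity_m_s2 * depth_km * 1e3
    return pressure_pa / 1e9


# Assumed round averages for the crust plus upper mantle down to ~660 km depth.
p_660 = lithostatic_pressure_gpa(3600.0, 9.9, 660.0)
print(f"about {p_660:.0f} GPa near the base of the upper mantle")
```

This gives roughly 23–24 GPa near the base of the upper mantle. The constant-density approximation breaks down deeper in the Earth; integrating a radial Earth model such as PREM gives about 136 GPa at the core-mantle boundary and about 360 GPa at the center, which is the range that crust-to-core simulations must span.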

Geoscientific research in China has long suffered from emphasizing observation at the expense of simulation and integration. This situation has recently been improving as a new generation of scientists carries out simulation experiments on dynamic processes (e.g. mineral phase changes, physical property testing, partial melting, and rheological properties) over the entire range of temperatures and pressures of the upper mantle. Of particular note is the diamond anvil cell (DAC) technology developed at the Center for High Pressure Science and Technology Advanced Research (HPSTAR), which is now capable of simulating conditions in the lower mantle and even in the core and has made China a significant force in global research on the ultra-deep Earth. China’s capabilities in other technologies also need to be strengthened, particularly fluid studies, in-situ micro-measurement under HPHT conditions, simulation of even higher temperatures and pressures, and interdisciplinary integration in the use of HPHT simulation technology.

4.3 Deep-Sea Observation and Survey

4.3.1 Detection Technologies for Ocean Laser Profile

Understanding subsurface vertical structure in the global ocean plays an important role in climate change research, underwater vehicle detection, and marine fishery development. However, mature technologies for collecting high-resolution, high-precision subsurface profiles over the global ocean are currently limited. To achieve full-parameter, high-resolution, integrated remote sensing of ocean profiles that combines marine optics, thermodynamics, dynamics, and ecology, it is necessary to study the mechanisms of high-sensitivity single-photon detection and identification, the coupling between marine dynamics and optics, and the coupling between sub-mesoscale sea-surface topography and subsurface vertical structure.
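Single-photon ocean lidar retrieves a profile by timing returning photons: a photon that returns after an in-water round-trip time t came from a depth of about (c/n)·t/2. The Python sketch below converts timing bins to depth and histograms hypothetical photon arrival times, assuming a seawater refractive index of n = 1.34 and ignoring the air path and refraction at the sea surface; it illustrates the principle and is not a lidar processing chain.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0
N_SEAWATER = 1.34  # assumed refractive index of seawater


def photon_depth_m(round_trip_time_s):
    """Depth for a photon whose in-water round trip took round_trip_time_s."""
    return SPEED_OF_LIGHT_M_S / N_SEAWATER * round_trip_time_s / 2.0


def depth_histogram(arrival_times_s, bin_width_s, n_bins):
    """Count single-photon returns per timing (and hence depth) bin."""
    counts = [0] * n_bins
    for t in arrival_times_s:
        k = int(t // bin_width_s)
        if 0 <= k < n_bins:
            counts[k] += 1
    return counts


bin_width = 1e-9  # 1 ns timing bins
print(f"depth resolution per bin: {photon_depth_m(bin_width):.3f} m")
# Hypothetical photon arrival times (seconds after the pulse enters the water).
arrivals = [0.2e-9, 0.7e-9, 1.1e-9, 1.3e-9, 1.9e-9, 3.4e-9]
print(depth_histogram(arrivals, bin_width, n_bins=4))
```

With 1 ns timing bins the depth resolution is about 0.11 m, so the timing precision of the photon detector directly sets the vertical resolution of the profile.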

4.3.2 Detection Technologies for Marine Neutrino

The scarcity of real-time observations of seafloor sediment and polar ice caps is a major bottleneck for the development of polar science. Neutrinos can pass through seawater almost freely. They can also interact weakly with the quarks in the nuclei of hydrogen and oxygen atoms in seawater, producing charged particles that travel at extremely high velocities and emit faint light. Studying the mechanisms of weak interactions between neutrinos and seawater, the mechanisms of seafloor neutrino flux detection, and ocean neutrino telescope technology will enable the extraction of parameters such as neutrino oscillation and mixing angles from deep-sea observations. Furthermore, such detection can help invert for deep-sea environmental properties, detect the vertical structure of sea ice, and explore seafloor resources.
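In water-based neutrino telescopes the neutrino is not seen directly; a charged secondary particle (for example, a muon) moving faster than the phase velocity of light in water, c/n, emits Cherenkov light on a cone whose half-angle satisfies cos θ = 1/(nβ). The Python sketch below evaluates that relation, assuming n = 1.34 for seawater; it illustrates only the emission geometry that track reconstruction relies on.

```python
import math


def cherenkov_angle_deg(beta, refractive_index=1.34):
    """Cherenkov emission angle from cos(theta) = 1 / (n * beta).

    Returns None below threshold (beta <= 1/n), where no light is emitted.
    refractive_index = 1.34 is an assumed value for seawater.
    """
    cos_theta = 1.0 / (refractive_index * beta)
    if cos_theta >= 1.0:
        return None
    return math.degrees(math.acos(cos_theta))


print(cherenkov_angle_deg(1.0))   # ultra-relativistic particle: about 41.7 degrees
print(cherenkov_angle_deg(0.70))  # below the ~0.746 threshold: None
```

For an ultra-relativistic particle the cone angle is about 42°, and no light is emitted below β ≈ 0.75.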

4.3.3 Deep-Sea and Transoceanic Communication Technologies

All wireless communication technologies based on radio frequencies, optics, and underwater acoustics are subject to limitations in seawater, making it extremely urgent to explore new technologies for transmitting marine information in the deep and open sea. The complex ocean environment requires a new, fundamental theoretical framework for satellite-based communication with high spatiotemporal resolution to support investigation of the deep sea. This framework involves satellite-sea collaborative coupling communication mechanisms, laser-induced sound and acousto-optic conversion mechanisms, and quantum communication modes for underwater platforms. The R&D aims to integrate laser, underwater acoustic, and quantum technologies into communication systems, and further breakthroughs are expected in these revolutionary new-generation technologies. These systems will lay the foundation for developing deep-sea laser investigation satellites, neutrino detection satellites, and ocean quantum communication satellites.
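Two of the physical limits mentioned above can be quantified with textbook formulas. Radio waves in seawater, a good conductor, decay over the skin depth δ = 1/√(πfμ₀σ), and acoustic signals travel at only about 1500 m/s. The Python sketch below assumes a seawater conductivity of 4 S/m and a nominal sound speed of 1500 m/s; both are typical round values rather than measured ones.

```python
import math

MU_0 = 4e-7 * math.pi          # vacuum permeability, H/m
SEAWATER_CONDUCTIVITY = 4.0    # S/m, assumed typical value
SOUND_SPEED = 1500.0           # m/s, assumed nominal sound speed in seawater


def rf_skin_depth_m(frequency_hz, conductivity=SEAWATER_CONDUCTIVITY):
    """Good-conductor skin depth: delta = 1 / sqrt(pi * f * mu0 * sigma)."""
    return 1.0 / math.sqrt(math.pi * frequency_hz * MU_0 * conductivity)


def acoustic_delay_s(distance_m, sound_speed=SOUND_SPEED):
    """One-way propagation delay of an acoustic signal."""
    return distance_m / sound_speed


for f in (1e3, 1e4, 1e6):
    print(f"{f:>9.0f} Hz: field falls by 1/e every {rf_skin_depth_m(f):.2f} m")
print(f"acoustic delay over 15 km: {acoustic_delay_s(15_000):.0f} s")
```

Under these assumptions the radio field falls by a factor of e every few meters at kilohertz frequencies (about 2.5 m at 10 kHz), while an acoustic message needs 10 s to cross 15 km.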

4.3.4 Underwater Observation and Survey

Ultra-high-speed unmanned platforms that can meet the stringent demands of underwater observation and survey have rarely been reported in China or elsewhere; the Russian “Poseidon” nuclear-powered unmanned underwater vehicle is reported to be the only one to have achieved a top underwater speed of around 200 km/h. It is therefore necessary to develop ultra-high-speed, unmanned, multi-state, invisible, and intelligent vehicles equipped with high-precision, intelligent navigation, positioning, and communication systems, as well as self-adaptive sensors for sampling and observation. By integrating underwater acoustic, optical, and quantum communication technologies, the onboard systems should provide survey, communication, and navigation functions. These vehicles will be capable of cross-medium cruising with unlimited endurance in all weather and at all depths, using self-sustaining and adaptive transformation technologies that enable rapid environmental awareness and decision making based on machine learning and artificial intelligence.

4.3.5 Sea Floor In-Situ Surveys

Ocean drilling ships currently in service need high-capacity mud pumps and marine risers. Their enormous size and high operating costs may be crucial factors in explaining why the Moho has not yet been penetrated. A possible alternative for the future is to place drilling rigs on the sea floor, powered from support ships at the surface. Drill cores would be collected and transported automatically, and the rigs would be equipped with ROVs for maintenance. The following technological systems must first be developed: (1) automatic AI-driven drilling and exploration systems, (2) automatic coring technology, (3) core storage and transportation technology, and (4) sea floor anti-corrosion technology.

The crustal lithosphere, the oceanic benthic layer, the near-bottom hydrosphere, and the atmosphere form a single interconnected unit. From an Earth-system perspective, it is vital to carry out long-term, in-situ observation of multispheric interactions (atmosphere, hydrosphere, biosphere, and lithosphere) at the sea-atmosphere, sea-land, and sea floor boundaries (Fig. 4.2). In-situ, full-depth topographic sensors must operate underwater without calibration and be highly resistant to environmental damage. Long-term, stable, and comprehensive in-situ surveys of key physical and chemical parameters require combining acoustic, optical, spectroscopic, mass spectrometric, and other technologies and methods. The areas to be surveyed include material and energy transport pathways (such as boreholes created by ocean drilling), deep-sea cold seeps and hydrothermal fluids, and large-scale oceanic physical and chemical fields. Breakthrough technologies needed for such a system include: comprehensive multi-parameter survey technologies for the sea-atmosphere, sea-land, and sea floor boundaries; quantitative analytical methods for extreme deep-sea environments; automatic, intelligent power generation and management technologies for underwater environments; long-term, calibration-free, stable operation of in-situ sensors; and automatic storage, protection, and long-distance, real-time transmission of bulk data.

4.4 Deep-Sea Mobility and Residence

Deep-sea mobility is predicated on the development of ultra-high-speed, ultra-long distance, ultra-deep water, ultra-invisible and ultra-large size mobile platforms. The following key theories are required.

Ultra-high-speed: The relationship between microscopic mechanisms (e.g. the growth, collapse, and interaction of cavitation bubbles and supercavities) and macroscopic behavior must be established. The mechanisms that generate cavitation and cavity instability during navigation must be studied, as well as the characteristics and mechanisms of cavity collapse. Physical models of collapse pressure should be constructed. The effects of ventilation on cavity stability, and the characteristics and mechanisms of ventilated cavity shedding, should also be studied (Wu 2016).
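A standard dimensionless measure for the regimes discussed here is the cavitation number σ = (p∞ − p_v)/(½ρV²), where p∞ is the ambient pressure at depth, p_v the vapor pressure, and V the speed: the lower σ, the more extensive the cavity, and supercavitation requires very low values. The Python sketch below assumes a seawater density of 1025 kg/m³ and a vapor pressure of 2339 Pa (water near 20 °C); the function names are hypothetical.

```python
import math

RHO_SEAWATER = 1025.0  # kg/m^3, assumed
G = 9.81               # m/s^2
P_ATM = 101_325.0      # Pa
P_VAPOR = 2_339.0      # Pa, vapor pressure of water near 20 deg C (assumed)


def ambient_pressure_pa(depth_m):
    """Hydrostatic pressure at depth, including the atmosphere."""
    return P_ATM + RHO_SEAWATER * G * depth_m


def cavitation_number(depth_m, speed_m_s):
    """sigma = (p_ambient - p_vapor) / (0.5 * rho * V^2)."""
    return (ambient_pressure_pa(depth_m) - P_VAPOR) / (0.5 * RHO_SEAWATER * speed_m_s ** 2)


def speed_for_cavitation_number(depth_m, sigma):
    """Speed at which the flow reaches a target cavitation number."""
    return math.sqrt(2.0 * (ambient_pressure_pa(depth_m) - P_VAPOR) / (RHO_SEAWATER * sigma))


for depth in (10.0, 100.0):
    print(f"{depth:5.0f} m: sigma at 100 m/s = {cavitation_number(depth, 100.0):.3f}")
```

At 100 m/s, σ is about 0.04 at 10 m depth but about 0.22 at 100 m, because ambient pressure grows with depth. This is one reason ventilation, which raises the cavity pressure in place of p_v, is used to sustain cavities at depth.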

Super-cavitation drag reduction: Key considerations are the generation and annihilation mechanisms of vapor bubbles in water. The rules governing the generation, evolution, and annihilation of vapor bubbles must be studied under low-temperature, high-pressure conditions, and the factors affecting drag reduction by vapor bubble curtains should be identified. Fluid–gas–solid coupling mechanical models and simulation methods for high-velocity underwater vehicles must be studied. Revealing the drag reduction patterns of fluid–gas–solid three-phase coupling, and simulating resistance and control forces, will provide strategies and algorithms for controlling ultra-high-velocity navigation.

Ultra-deep water: New materials with high durability, low density, abrasion resistance, and strong mechanical properties must be developed. These might include carbon fiber, ceramics, titanium alloys, other new nonmetallic materials, and composite metallic materials with suitable mechanical and corrosion-resistance properties, to address the problems of pressure resistance and encapsulation in ultra-deep water.

Ultra-invisible: Understanding noise mechanisms and noise reduction in cavitating flow is essential. Empirical formulae for noise pressure and vibration velocity should be derived from the noise generated during the initiation and collapse of cavities. Noise throughout the entire process, from the generation of free gas to cavity collapse, and the factors affecting it must be examined and understood. Noise reduction strategies should then be developed from these factors.
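Noise pressure in underwater acoustics is conventionally reported as a sound pressure level in decibels relative to 1 µPa, SPL = 20·log₁₀(p_rms / 1 µPa). The Python sketch below applies that convention; the helper names are hypothetical.

```python
import math

P_REF_WATER = 1e-6  # Pa: 1 micropascal, the usual reference in underwater acoustics


def spl_db(rms_pressure_pa, p_ref=P_REF_WATER):
    """Sound pressure level: 20 * log10(p_rms / p_ref), in dB re 1 uPa."""
    return 20.0 * math.log10(rms_pressure_pa / p_ref)


def pressure_reduction_factor(db_reduction):
    """Factor by which RMS noise pressure must fall to cut SPL by db_reduction."""
    return 10 ** (db_reduction / 20.0)


print(f"{spl_db(1.0):.0f} dB re 1 uPa")          # 1 Pa RMS -> 120 dB re 1 uPa
print(f"{pressure_reduction_factor(20):.0f}x")    # 20 dB quieter = 10x lower pressure
```

A 20 dB reduction corresponds to a tenfold drop in RMS noise pressure, a convenient way to express noise reduction targets.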

Ultra-large size: The dynamic properties and fluid–solid coupling mechanisms of ultra-large mobile platforms must be established, as well as the dynamic responses of such platforms navigating in complex ocean environments. The mechanical laws governing fluid–solid coupling in ultra-large mobile structures must be revealed, and the interactions of nonlinear mechanical processes in these structures must also be studied.

The deep sea offers strategic opportunities to expand the space available for human habitation (Fig. 4.3). Nevertheless, several crucial scientific problems must first be solved. Theories are required to predict the dynamic responses and strength of complex ocean engineering structures under extreme loading. Specific issues include: fluid–solid coupling problems caused by slamming, green water, sloshing, and other phenomena that affect machinery and structures in the extreme deep-ocean environment and will influence the engineering of human submarine residences; the occurrence, evolution, and description of extreme ocean environmental events; and theories and methods for suppressing the responses of ocean structures and equipment. Deep-sea environmental protection must also be taken into account, particularly the influence of human activity in underwater cities on marine ecology.

4.5 Exoplanet Exploration

The three basic conditions essential for the existence and survival of all life on Earth are energy, certain fundamental elements (C, H, N, O, P, and S), and liquid water. The first two are commonplace throughout the universe. The third condition is therefore the key constraint on Earth’s habitability and also the most important reference index for identifying habitable exoplanets (Meadows and Barnes 2018; Pierrehumbert 2010; Zhang 2020). The long-term existence of liquid water on a planet’s surface depends on three sets of factors: the properties of the planet itself, those of its star, and those of its planetary system. The planet’s own properties include its size, volume, and mass, its composition at the time of formation, internal structure, geological activity (volcanic activity, plate movement, mantle plumes, etc.), magnetic field, orbit (semi-major axis, eccentricity, and inclination), rotation rate, atmospheric composition and mass, clouds and aerosols, etc. The crucial properties of its star are temperature, activity, metallicity, rotation rate, and early-stage luminosity. Planetary system properties include interactions between planets, the star’s gravitational and tidal effects on its planets, and the influence of other celestial bodies on the system. Exoplanet exploration will benefit from rapid advances in observing technologies, for example wide-field photometric surveys and high-precision optical spectroscopy. In the future, it will be essential to develop new theories and to carry out simulations, experiments, and observations to determine whether planetary surfaces can sustain liquid water over long periods.
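How the star, the orbit, and the planet jointly set surface conditions can be illustrated with the radiative equilibrium temperature, T_eq = T_star·√(R_star/2a)·(1 − A)^(1/4), where A is the Bond albedo. The Python sketch below assumes full heat redistribution, a circular orbit, and no greenhouse effect, and uses round solar and terrestrial values.

```python
import math


def equilibrium_temperature_k(t_star_k, r_star_m, a_m, bond_albedo):
    """T_eq = T_star * sqrt(R_star / (2a)) * (1 - A)^(1/4).

    Assumes full heat redistribution over the planet, a circular orbit,
    and no greenhouse effect.
    """
    return t_star_k * math.sqrt(r_star_m / (2.0 * a_m)) * (1.0 - bond_albedo) ** 0.25


# Present-day Earth: solar T_eff ~5772 K, R_sun ~6.957e8 m, a = 1 AU, albedo ~0.3.
t_earth = equilibrium_temperature_k(5772.0, 6.957e8, 1.496e11, 0.30)
print(f"Earth T_eq ~ {t_earth:.0f} K")
```

For present-day Earth this gives about 255 K, below the freezing point of water; the observed mean surface temperature of about 288 K is higher because of the greenhouse effect, which is why atmospheric properties appear among the planetary factors above.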

4.5.1 Exploration of Atmospheres and Life on Exoplanets

Theories for observing exoplanet atmospheres are relatively mature; however, observing capabilities are limited by the size of current telescopes. Coronagraphy and starshade technology are used for direct imaging. Coronagraphy is technologically mature but has not yet been proven for the atmospheric observation of exoplanets, while starshades are still in the R&D stage, being developed exclusively by NASA, and are currently being prepared for atmospheric observation of exoplanets (National Academies of Sciences, Engineering, and Medicine 2018).
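The difficulty of direct imaging follows from the planet-to-star contrast in reflected light, roughly A_g·(R_p/a)²·Φ, where A_g is the geometric albedo and Φ ≤ 1 the phase function. The Python sketch below evaluates this for an Earth twin at 1 AU, assuming A_g = 0.3 and full phase (Φ = 1) as an upper bound.

```python
R_EARTH_M = 6.371e6
AU_M = 1.496e11


def reflected_contrast(geometric_albedo, planet_radius_m, orbit_radius_m, phase_function=1.0):
    """Planet-to-star flux ratio in reflected light: A_g * (R_p / a)^2 * Phi."""
    return geometric_albedo * (planet_radius_m / orbit_radius_m) ** 2 * phase_function


# Earth twin at 1 AU, assumed geometric albedo 0.3, full phase as an upper bound.
c = reflected_contrast(0.3, R_EARTH_M, AU_M)
print(f"contrast ~ {c:.1e}")
```

The result is of order 10⁻¹⁰ (lower still at other phases), so a coronagraph or starshade must suppress starlight by roughly ten orders of magnitude at a very small angular separation from the star.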

Current observation technologies can only probe the atmospheres of large planets with relatively extensive atmospheres, such as ‘hot Jupiters’ and ‘hot Neptunes’. Observing the atmospheres of terrestrial planets is very difficult: the apertures of current telescopes are too small, and atmospheric detection on terrestrial planets is more complex. For instance, the effects of clouds on starlight, and of Earth’s own atmosphere on the observed signals, pose enormous challenges to observation precision. Coronagraphs and starshades address the problem of blocking starlight. Clouds, however, require ultra-long exposure times to raise signal-to-noise ratios sufficiently. Large-aperture space telescopes (apertures of several meters) must therefore be developed specifically for exoplanet observation. As an initial step to build experience, observations of giant planets such as hot Jupiters have been attempted with small-aperture telescopes (roughly 1 m).
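The trade-off between exposure time and aperture can be sketched under an idealized photon-noise limit, in which SNR = √N and the number of detected photons N grows with collecting area and exposure time. The Python sketch below uses a hypothetical planet photon flux and ignores background, residual starlight, and detector noise, so the absolute times are not meaningful; only the scaling is.

```python
import math


def exposure_time_s(target_snr, photon_flux_per_m2_s, aperture_diameter_m):
    """Photon-noise-limited exposure time: SNR = sqrt(N), N = flux * area * t."""
    collecting_area = math.pi * (aperture_diameter_m / 2.0) ** 2
    return target_snr ** 2 / (photon_flux_per_m2_s * collecting_area)


flux = 0.01  # hypothetical planet photon flux, photons per m^2 per second
for d in (1.0, 6.0):
    hours = exposure_time_s(10.0, flux, d) / 3600.0
    print(f"D = {d:.0f} m: {hours:.2f} h for SNR = 10")
```

Exposure time scales as SNR²/D², so moving from a 1 m to a 6 m aperture cuts the required time by a factor of 36, and doubling the target SNR quadruples it.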

The investigation of life-related gaseous components in the atmospheres of exoplanets is currently accepted as the most promising approach for searching for exolife. New technologies will be developed to achieve the investigation objectives and study will be carried out in combination with a full deep space investigation plan, which is expected to accomplish great breakthroughs in the future.

4.5.2 Climate Environment on Oceanic Planets

Liquid water on the Earth’s surface accounts for only about 0.1% of the planet’s total volume (roughly 0.02% of its mass). On one type of exoplanet, water may account for 5% or more of the overall volume, which means that ocean depths on such planets could reach tens or even thousands of kilometers. Planets of this type are called ocean planets. If a planet is entirely covered by ocean, there will be no weathering processes like those on Earth. Carbon dioxide from volcanic eruptions will therefore simply accumulate in the atmosphere. Ultimately, a runaway greenhouse effect is likely to develop, potentially driving the atmosphere of an ocean planet into an escaping state. In addition, the distribution and intensity of ocean circulation, the surface climate, and the capacity of ocean planets to support life will require in-depth research.
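The link between water fraction and ocean depth is simple geometry. Assuming an Earth-sized planet whose water forms a uniform outer shell holding a fraction f of the planet’s volume (and ignoring compression), the solid interior has radius R(1 − f)^(1/3) and the ocean depth is R[1 − (1 − f)^(1/3)], as in the Python sketch below.

```python
def ocean_shell_depth_km(planet_radius_km, water_volume_fraction):
    """Depth of a uniform global water shell holding a fraction f of the planet's volume.

    The solid interior keeps (1 - f) of the volume, so its radius is
    R * (1 - f)^(1/3) and the ocean depth is R - R * (1 - f)^(1/3).
    """
    return planet_radius_km * (1.0 - (1.0 - water_volume_fraction) ** (1.0 / 3.0))


R_EARTH_KM = 6371.0
for f in (0.001, 0.05, 0.25):
    print(f"{f:6.1%} water by volume -> ~{ocean_shell_depth_km(R_EARTH_KM, f):.0f} km deep")
```

Under these assumptions, 0.1% water by volume gives a global ocean about 2 km deep, 5% gives about 108 km, and 25% gives nearly 600 km; larger planets or water fractions push the depth higher still.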

4.5.3 Ocean Currents and Heat Transportation on Lava Planets

Observations indicate that certain rocky, extremely hot planets have surfaces covered by molten seas of magma, much like the early Earth. Magma is more viscous than water but can still flow, redistributing heat and mass around the entire planet. The Spitzer and Hubble space telescopes have both observed the heat distribution on such molten planets, especially tidally locked ones, where rock may be molten on one hemisphere and solid on the other, with a major influence on the configuration of the entire planet. Nevertheless, research in this area is still scant.

Every country should coordinate its scientific and technological forces to address leading-edge international themes in exoplanet detection, develop large, high-precision space telescopes, develop new technologies and methods, and promote exoplanet detection. In China, the CHES project is searching for habitable terrestrial planets in the habitable zones of nearby stars using astrometric observation. The super Kepler project is searching for an ‘Earth 2.0’ using wide-field, small-aperture telescope arrays. The 1.2 m ExIST spectroscopic telescope, dedicated to the “Tianlin” (Chinese for ‘Sky Neighbors’) project, is searching for relatively nearby exoplanets to study planetary properties in habitable zones and to search for life on suitable candidate planets. In addition, the “Miyin” (Chinese for ‘Voice Searching’) project uses space interferometry with large space telescopes to search for terrestrial planets in habitable zones and image them directly.

4.6 Infrastructure Framework and Theories of Positioning, Navigation, and Timing (PNT) Services

The traditional technology for maintaining the geocentric space–time datum, and the corresponding PNT technology based on the Global Navigation Satellite System (GNSS), suffer from inherent vulnerability, regional limitations, and other practical constraints. GNSS signals cannot reach deep space or penetrate water bodies, nor can they provide the high-precision, high-sensitivity observations needed to detect variations in deep Earth materials. GNSS can therefore hardly meet the requirements of deep space, deep sea, and deep Earth exploration. Space–time datums based on geometric and optical observations can provide PNT services only in local areas. The new challenge for future space–time datum construction and maintenance is therefore to seek technologies based on new physical principles that support research on the “three deep and one system” and on livable environments.

A comprehensive national PNT system refers to a PNT service infrastructure that uses a variety of PNT information sources based on different principles under the control of a cloud platform (Yang 2016). In the future, pulsars may serve as the PNT information source for deep space exploration; a satellite constellation at the Lagrange points may provide radio PNT information for the solar system and the Earth-Moon system with the same source and datum as the BeiDou system; low Earth orbit (LEO) communication satellites could serve as the information source for low-orbit space; and a PNT reference network, similar to the geodetic network and satellite constellation, could be built on the seafloor. In this way, a PNT infrastructure network with seamless coverage from deep space to the deep sea could be fully established (Fig. 4.4).

The comprehensive PNT system will be an important direction of space–time datum construction for China and some developed countries. The key issue is to form a unified space–time datum from various information sources based on different physical principles. First, a radio celestial reference frame will be established through stellar and occultation observations, and its relationship with the optical celestial reference frame and other reference frames must be determined. Second, through high-precision measurement and modeling of Earth orientation parameters (EOP), the relationship between the geodetic datum and the deep space datum will be established. Third, joint observations of land-surface, sea-surface, and seafloor datum points will relate the geodetic and marine datums. In this way, the deep space, geodetic, deep Earth, and deep sea datums will be unified.

4.6.1 Collaborative PNT Systems in High, Medium, and Low Orbits

For deep-space PNT satellite constellations, the Lagrange points of the Sun-Earth and Earth-Moon systems are the optimum relay stations for PNT services, and navigation satellite constellations (high-orbit constellations) can be deployed there. Lagrange satellites may be equipped with upper and lower antennae pointing toward deep space and the Earth, respectively. The upper antenna can observe pulsars and broadcast ephemerides to provide PNT services for deep space vehicles (Fig. 4.5). The lower antenna can receive medium- and low-orbit GNSS signals and provide PNT services for near-space vehicles. Signals from the Lagrange constellation and GNSS can be combined and operated synchronously (Li and Fan 2016; Gao et al. 2016; Lu 2012).

The main challenges facing the Sun-Earth and Earth-Moon Lagrange navigation constellations are the stability and autonomous controllability of the constellation itself, the autonomous determination of the constellation’s orbits and clock biases, and the compatibility and interoperability of deep-space and low-altitude constellations.

Medium Earth orbit (MEO) and LEO communication and remote sensing satellite constellations are suitable navigation augmentation constellations. They can not only provide enhanced PNT services but also determine the Earth’s gravity field (using high-low or low-low satellite-to-satellite tracking) and probe the atmosphere (using radio occultation). LEO navigation constellations can work in two modes. In the first, LEO satellites carrying small on-board atomic clocks broadcast navigation signals directly; however, it is relatively difficult to build such a constellation, obtain operating frequencies, and control costs. In the second, ranging and positioning information is derived from time-scale data carried along with the LEO communication signals; no on-board atomic clock is needed, because time information can be obtained by relaying GNSS time. This mode, however, cannot provide independent high-precision PNT services and can only supplement and enhance medium- and high-orbit GNSS services.

As a supplement to and enhancement of medium- and high-orbit GNSS constellations, low-cost LEO Earth observation and communication satellites would considerably increase the number of satellites visible to users, optimize the observation geometry, and improve the precision of GNSS satellite orbits (Reid et al. 2016; Yang et al. 2020). In addition, LEO satellite signals are relatively strong and have better anti-interference ability (except against deliberate interference). As a navigation augmentation constellation, LEO satellites could broadcast correction information, shorten the initialization time of precise point positioning, and improve computational efficiency (Zhang and Ma 2019; Tian et al. 2019). Collaborative PNT service systems combining high-, medium-, and low-orbit satellites will become a new focus of PNT services.
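The claim that extra LEO satellites improve observation geometry can be made concrete with dilution of precision (DOP). The sketch below is a minimal illustration, not a constellation design tool. The satellite azimuths and elevations are hypothetical, geometry is expressed in a local east-north-up frame, and the usual single-epoch pseudorange design matrix (three position unknowns plus one receiver clock bias) is assumed.

```python
import math


def unit_vector(az_deg, el_deg):
    """Line-of-sight unit vector (east, north, up) from azimuth/elevation in degrees."""
    az, el = math.radians(az_deg), math.radians(el_deg)
    return (math.cos(el) * math.sin(az), math.cos(el) * math.cos(az), math.sin(el))


def invert(m):
    """Gauss-Jordan inversion with partial pivoting for a small square matrix."""
    n = len(m)
    a = [row[:] + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("singular geometry")
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [v / p for v in a[col]]
        for r in range(n):
            if r != col:
                factor = a[r][col]
                a[r] = [vr - factor * vc for vr, vc in zip(a[r], a[col])]
    return [row[n:] for row in a]


def dop(sky):
    """Return (GDOP, PDOP) for a list of (azimuth, elevation) pairs in degrees."""
    # Design matrix rows: negative line-of-sight vector, then 1 for the clock bias.
    g = [[-c for c in unit_vector(az, el)] + [1.0] for az, el in sky]
    gtg = [[sum(row[i] * row[j] for row in g) for j in range(4)] for i in range(4)]
    q = invert(gtg)  # cofactor matrix (G^T G)^-1
    gdop = math.sqrt(sum(q[i][i] for i in range(4)))
    pdop = math.sqrt(sum(q[i][i] for i in range(3)))
    return gdop, pdop


# Hypothetical sky: four GNSS satellites, then two extra LEO satellites in view.
gnss = [(0, 60), (90, 30), (200, 25), (300, 40)]
leo = [(45, 15), (250, 70)]
print("GNSS only  :", dop(gnss))
print("GNSS + LEO :", dop(gnss + leo))
```

Adding rows to the design matrix can only add information to G^T G, so the DOP values with the extra LEO satellites are never larger than for the GNSS-only sky; how much they shrink depends on where the additional satellites appear.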

4.6.2 Pulsar Space–Time Datum

Pulsars are rapidly rotating neutron stars with extremely stable rotation frequencies. Like star catalogues, a pulsar catalogue constitutes a high-precision inertial reference frame. Pulsars emit periodic pulse signals, and the long-term stability of the rotation frequency of some millisecond pulsars is comparable to that of the most stable caesium atomic clocks. Pulsars can therefore serve as natural clocks and beacons in the universe, providing a stable and reliable space–time reference for navigation in deep space.

Evenly distributed pulsars can form a constellation similar to that of a navigation satellite system and provide high-precision, high-security autonomous navigation for deep spacecraft. They can also serve as a precise ensemble of natural clocks, establishing a new time and frequency datum with better stability. Such a datum could further be used to identify drifts and unknown variations in atomic time and to study tiny variations in the Earth’s rotation and in solar system ephemerides.
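The navigation principle can be illustrated with a deliberately simplified model. Suppose each pulse reaches the spacecraft earlier than predicted at a reference point by Δt_i. The spacecraft’s offset r from that point then satisfies n_i · r ≈ c Δt_i, where n_i is the unit vector toward pulsar i, and three or more well-separated pulsars fix r by least squares. The sketch assumes plane wavefronts, already-resolved pulse-phase ambiguities, and no relativistic or dispersion corrections. The pulsar directions and test position are illustrative values, not catalogue data, so this shows the geometry only and is not a working pulsar navigation filter.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def direction(ra_deg, dec_deg):
    """Unit vector toward a source from right ascension and declination in degrees."""
    ra, dec = math.radians(ra_deg), math.radians(dec_deg)
    return (math.cos(dec) * math.cos(ra), math.cos(dec) * math.sin(ra), math.sin(dec))


def det3(m):
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve_position(dirs, delays):
    """Least-squares r from n_i . r = c * dt_i (normal equations, Cramer's rule)."""
    a = [[sum(n[i] * n[j] for n in dirs) for j in range(3)] for i in range(3)]
    b = [sum(n[i] * C * dt for n, dt in zip(dirs, delays)) for i in range(3)]
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("pulsar directions are degenerate")
    r = []
    for k in range(3):
        mk = [[b[i] if j == k else a[i][j] for j in range(3)] for i in range(3)]
        r.append(det3(mk) / d)
    return r


# Illustrative directions and a hypothetical spacecraft offset (metres).
pulsars = [direction(83.6, 22.0), direction(128.8, -45.2),
           direction(299.9, 20.8), direction(350.0, -60.0)]
r_true = (1.2e11, -4.0e10, 2.5e10)
# Arrival-time advances (s) that this offset would produce.
delays = [sum(n[i] * r_true[i] for i in range(3)) / C for n in pulsars]
print(solve_position(pulsars, delays))
```

With noise-free delays the recovered position matches the assumed one. In practice, timing noise, phase ambiguity, and the pulsar catalogue’s own errors dominate, which is why a high-precision catalogue is emphasized below.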

The difficulties in constructing a pulsar space–time datum lie in generating a high-precision pulsar catalogue that satisfies deep space PNT service requirements and in developing low-power sensors for receiving and processing pulsar signals in deep space.

4.6.3 Marine PNT System

The establishment of a marine datum requires a unified land-sea geodetic datum network that combines coastal and island continuously operating reference stations (CORS), sea-surface GNSS buoys, and seafloor datum points. Land and marine space–time datums are unified through joint measurement of geodetic, sea-surface, and seafloor control points and through the fusion of multi-source information (Fig. 4.6).

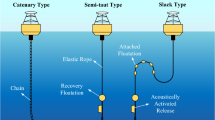

A seafloor geodetic datum network is generally established by deploying a well-designed network of sonar beacons on the seafloor. To prolong their operational life, seafloor beacons can be designed to be purely passive or to operate in a ‘wake-up’ mode: they normally remain dormant but can be activated remotely at any time to provide navigation and positioning services.
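Recovering a seafloor beacon’s position from surface measurements can be sketched as follows. The example assumes a constant sound speed of 1500 m/s (real surveys ray-trace through a measured sound-speed profile), GNSS-fixed ship positions at zero height on a flat sea surface, and a hypothetical survey geometry. The function names are illustrative and are not taken from any particular software.

```python
import math

SOUND_SPEED = 1500.0  # m/s; assumed constant for this sketch


def slant_range(two_way_time_s):
    """One-way slant range (m) from a two-way acoustic travel time (s)."""
    return SOUND_SPEED * two_way_time_s / 2.0


def locate_beacon(ship_xy, two_way_times):
    """Estimate beacon (x, y, depth) from surface fixes and two-way travel times.

    Subtracting the first range equation from the others cancels the unknown
    depth and the quadratic terms, leaving linear equations in (x, y) that are
    solved by least squares through 2x2 normal equations.
    """
    ranges = [slant_range(t) for t in two_way_times]
    (x0, y0), r0 = ship_xy[0], ranges[0]
    s11 = s12 = s22 = b1 = b2 = 0.0
    for (xi, yi), ri in zip(ship_xy[1:], ranges[1:]):
        a1, a2 = 2.0 * (xi - x0), 2.0 * (yi - y0)
        rhs = r0**2 - ri**2 + xi**2 - x0**2 + yi**2 - y0**2
        s11 += a1 * a1
        s12 += a1 * a2
        s22 += a2 * a2
        b1 += a1 * rhs
        b2 += a2 * rhs
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-9:
        raise ValueError("ship positions are collinear; geometry is too weak")
    x = (b1 * s22 - s12 * b2) / det
    y = (s11 * b2 - s12 * b1) / det
    depth = math.sqrt(max(r0**2 - (x - x0) ** 2 - (y - y0) ** 2, 0.0))
    return x, y, depth


# Hypothetical survey: beacon at (120, -80) m, 3000 m deep; ship at five fixes.
true_beacon = (120.0, -80.0, 3000.0)
fixes = [(0.0, 0.0), (1000.0, 0.0), (0.0, 1000.0), (-800.0, -600.0), (600.0, -900.0)]
times = []
for fx, fy in fixes:
    slant = math.dist((fx, fy, 0.0), (true_beacon[0], true_beacon[1], -true_beacon[2]))
    times.append(2.0 * slant / SOUND_SPEED)
print(locate_beacon(fixes, times))
```

The ship fixes must not be collinear, which is one simple reason why the observation geometry at the sea surface appears among the key problems below.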

Several key problems are involved. For marine geophysics, marine geodynamic effects, subsea plate motion, subsea dynamic environmental effects, and the optimal geometry of the seafloor datum network must be considered. For seafloor beacon development, the beacons and their protective housings must resist pressure, corrosion, and turbulence. For PNT information transmission, the issues include sea-surface observation geometry, seafloor datum network geometry, and the establishment of observation equations and fusion of observations based on different physical principles. For marine geophysical inversion, fundamental theories and practical scientific approaches must be developed to maintain the coordinate accuracy of seafloor datum networks and datum points.

4.6.4 Miniaturized and Chip-Size PNT

Comprehensive PNT information sources are crucial for an intelligent Earth, intelligent oceans, and intelligent living. Because many information sources coexist, comprehensive PNT sensor integration and information fusion are unavoidable; otherwise, large, heavy, power-hungry user terminals will be incompatible with the principles of ‘intelligent’ cities and living. PNT application development must therefore aim at chip-sized, modular, low-power, integrated, miniaturized PNT terminals (Yang and Li 2017).

Micro-PNT means not only miniaturized PNT equipment but also “high precision + stability + reliability” in operation. Miniaturization relies on optimized design, delicate manufacturing techniques, and deep integration of multiple micro-components. It also requires autonomous calibration of each measurement component, with both active and passive calibration capabilities, and adaptive fusion of the output information from the various components of the PNT system (Yang and Li 2017).

Using sensor integration and miniaturization technology, Micro-PNT aims to develop highly available, anti-interference, portable, stable, low-power integrated sensors. The key challenge is to develop ultra-stable inertial navigation components with small accumulated error (e.g., quantum inertial navigation assemblies) based on super-stable chip-size atomic clocks.
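The importance of small accumulated error and ultra-stable clocks shows up in simple error growth. The sketch assumes the textbook idealization in which an uncorrected constant accelerometer bias integrates twice into position error (½bt²), and a constant fractional clock frequency offset integrates once into a timing error that maps to range through the speed of light. The bias and offset values are hypothetical, and real error budgets contain many more terms.

```python
C = 299_792_458.0  # speed of light, m/s


def ins_position_error_m(accel_bias_ms2, seconds):
    """Position error from an uncorrected constant accelerometer bias: 0.5 * b * t**2."""
    return 0.5 * accel_bias_ms2 * seconds**2


def clock_range_error_m(fractional_offset, seconds):
    """Range error from a constant fractional frequency offset y: c * y * t."""
    return C * fractional_offset * seconds


# Hypothetical values: 1e-4 m/s^2 accelerometer bias, 1e-12 clock frequency offset.
for minutes in (1, 10, 60):
    t = 60.0 * minutes
    print(f"{minutes:>3} min: INS drift {ins_position_error_m(1e-4, t):9.1f} m, "
          f"clock-induced range error {clock_range_error_m(1e-12, t):6.3f} m")
```

The quadratic growth of inertial error compared with the linear growth of clock error is why stand-alone inertial navigation needs frequent external updates unless its components are exceptionally stable.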

4.6.5 Theory and Technology of Quantum Satellite Positioning

Pulse signals with entanglement and squeezing properties can be applied in quantum positioning. The pulse width and power (the number of photons in each pulse) are key factors affecting positioning accuracy: the more photons each pulse contains (equivalent to redundant observations), the higher the achievable accuracy in measuring the pulse delay (Bahder 2004).
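The dependence of ranging precision on photon number can be illustrated with the idealized scaling laws commonly quoted in quantum metrology. With N independent photons, the timing uncertainty of a pulse of width τ shrinks roughly as τ/√N (the standard quantum limit). Suitably entangled photons can in principle approach τ/N (the Heisenberg limit). The sketch drops all order-one prefactors and losses and converts a round-trip timing uncertainty into a one-way range uncertainty as cΔt/2. It illustrates scaling only and does not model any particular quantum positioning system.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def range_sigma_sql(pulse_width_s, photons):
    """One-way range uncertainty (m) at the standard quantum limit, prefactors dropped."""
    return C * (pulse_width_s / math.sqrt(photons)) / 2.0


def range_sigma_heisenberg(pulse_width_s, photons):
    """One-way range uncertainty (m) at the Heisenberg limit, prefactors dropped."""
    return C * (pulse_width_s / photons) / 2.0


tau = 1e-12  # hypothetical 1 ps pulse width
for n in (1, 100, 10_000):
    print(f"N={n:>6}: SQL {range_sigma_sql(tau, n) * 1e3:.4f} mm, "
          f"Heisenberg {range_sigma_heisenberg(tau, n) * 1e3:.6f} mm")
```

The two limits coincide for a single photon, and the entanglement advantage grows as √N, which is the sense in which more photons per pulse act like redundant observations.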

Because satellites with quantum entanglement capability can send entangled photon signals only to a designated position, they cannot provide PNT services in broadcast mode as BeiDou or GPS do. The entangled photons should therefore be transmitted not from the satellites but from the user terminals. LEO satellites with known orbits receive and reflect the pulse signals transmitted by the user’s multi-antenna transmitters; the user then calculates the distance between the transmitter and each satellite from the entangled round-trip pulse signals and, from these distances, the user’s position (Fig. 4.7). Offering high measurement accuracy together with strong anti-interference and anti-spoofing capability, quantum positioning technology can provide high-precision, high-reliability navigation and positioning services for key carriers in adversarial environments. At present, quantum ranging accuracy using single-photon entanglement is better than 1 mm, and double-photon measurement accuracy is expected to reach 1 μm, which could provide sufficiently precise data for real-time monitoring of plate motion (Pan et al. 1998, 2003; Liao et al. 2017; Giovannetti et al. 2001, 2002; Jozsa et al. 2000; Peters et al. 2001).

The key fundamental theories of quantum satellite positioning include quantum entanglement theory and devices for the user terminal, antenna design for the user terminal, the pointing accuracy of laser transmission and its influence, energy consumption modeling, and the design of the quantum positioning constellation.

4.6.6 Datum Measurement Technology for Optical Clocks and Elevations

Traditional leveling and gravity measurement methods for height datum construction are inefficient and labor-intensive, accumulate errors, and cannot be used in mountainous or sea areas. As a result, the height datums obtained are regional and difficult to unify globally.

According to Einstein’s theory of general relativity, clocks are affected by gravity: measured against a common time scale, clocks run at different rates in different gravitational potentials, so the frequency shift between atomic clocks is closely related to the gravitational potential difference between them. Thus, the frequency shift between local and remote (optical) clocks can be determined from the gravitational potential and height differences between the two points and, conversely, the gravitational potential difference can be determined from the clock frequency shift (Shen et al. 2016, 2017, 2019a, b; Mehlstäubler et al. 2018; Chen et al. 2016; Freier et al. 2016). By precisely monitoring the frequencies of high-precision atomic clocks at different locations, spatial variations in gravitational potential and height can be calculated, enabling direct measurement of gravitational potential differences and unification of the global height datum (Fig. 4.8).

Height measurement using optical clocks is a revolutionary technology for establishing spatial datums. The key factors involved are high-precision atomic clock technology and long-distance frequency comparison technology. At present, the fractional frequency instability of optical atomic clocks has reached 6.6 × 10⁻¹⁹ over one hour of averaging, which is sensitive to elevation variations of about 7 mm. If frequency comparison accuracy reaches 1 × 10⁻¹⁸, elevation can be measured to within 1 cm. A small optical clock will be installed on China’s space station to explore the feasibility of a large-scale unified height datum (Fig. 4.9).
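The conversion between clock frequency shift and height follows from the weak-field relation Δf/f ≈ ΔW/c², with ΔW ≈ gΔh near the Earth’s surface. The sketch assumes a constant g = 9.81 m/s² and ignores its variation with location and height, which a real height-datum computation would have to model.

```python
C = 299_792_458.0  # speed of light, m/s
G_SURFACE = 9.81   # m/s^2; assumed constant near the surface


def height_from_fractional_shift(delta_f_over_f):
    """Height difference (m) implied by a fractional frequency shift.

    A positive shift means the clock being compared runs fast, i.e. sits higher.
    """
    delta_w = delta_f_over_f * C**2  # gravitational potential difference, m^2/s^2
    return delta_w / G_SURFACE


def fractional_shift_from_height(delta_h_m):
    """Fractional frequency shift produced by a height difference (m)."""
    return G_SURFACE * delta_h_m / C**2


print(f"1e-18 -> {height_from_fractional_shift(1e-18) * 100:.2f} cm")
print(f"1 m   -> {fractional_shift_from_height(1.0):.3e}")
```

At 1 × 10⁻¹⁸ this conversion gives just under 1 cm, consistent with the centimeter-level figure above. The same arithmetic explains why the frequency comparison between distant clocks must be at least as good as the clocks themselves.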

The fundamental theories for establishing a height datum with optical clocks include precise theoretical relations and models linking gravitational potential difference and clock frequency; high-precision time transfer strategies and algorithms for optical clocks at different sites (the transfer error must be much smaller than the error of the optical clocks themselves); models of how the frequency errors of various optical clocks affect the derived gravitational potential difference; and the mechanisms and quantitative evaluation of other disturbances to optical clock frequencies.

4.6.7 Technology and Fundamental Theory of Resilient PNT

Based on comprehensive PNT information, resilient PNT uses optimized, integrated multi-source PNT sensors as its platform, applies resilient adjustment of functional models and resilient optimization of stochastic models, and integrates and generates usable PNT information across a range of complex settings. This ensures the high availability, continuity, and reliability of PNT information and hence the safe and reliable application of PNT in critical infrastructure (Yang 2019).

The PNT services involved in operating national critical infrastructure and in personal security must be safe, reliable, and continuous. However, any single PNT information source risks becoming unavailable, which may compromise the safety and integrity of the PNT service. Resilient PNT is therefore proposed to achieve “resilient” selection of PNT information by aggregating redundant information, improving the reliability, security, and robustness of PNT services for various carriers.

Resilient PNT is a revolutionary technology for PNT integration and application. The fundamental theories involved are resilient integration of PNT sensors, resilient functional modeling, resilient data fusion theories and methods (Yang 2019), and resilient application of PNT infrastructure. The key technologies include adaptive identification of observation models and environments, resilient observation modeling adaptable to different environments, and theoretical models of resilient data fusion, among others.
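The idea of aggregating redundant sources while protecting against a faulty one can be sketched with a robust weighted mean. The example below is an assumption-laden illustration, not the resilient fusion model cited above. A Huber weight function (a standard robust M-estimation choice) stands in for whatever adaptive weighting a real resilient PNT system would use, the sources report a scalar coordinate with a claimed standard deviation, and the numbers are hypothetical.

```python
def robust_fuse(estimates, sigmas, k=1.5, iterations=20):
    """Fuse scalar estimates from several PNT sources, down-weighting outliers.

    Starts from the inverse-variance weighted mean, then iteratively reweights
    each source with a Huber factor: full weight while its standardized residual
    |e - x| / sigma is at most k, and weight scaled by k / residual beyond that.
    """
    if not estimates or len(estimates) != len(sigmas):
        raise ValueError("need matching, non-empty estimates and sigmas")
    base = [1.0 / s**2 for s in sigmas]
    x = sum(w * e for w, e in zip(base, estimates)) / sum(base)
    for _ in range(iterations):
        weights = []
        for w0, e, s in zip(base, estimates, sigmas):
            u = abs(e - x) / s  # standardized residual of this source
            weights.append(w0 * (1.0 if u <= k else k / u))
        x = sum(w * e for w, e in zip(weights, estimates)) / sum(weights)
    return x


# Hypothetical: three sources agree near 100 m; a fourth is spoofed but claims 1 m accuracy.
est = [100.2, 99.8, 100.1, 130.0]
sig = [1.0, 1.0, 2.0, 1.0]
plain = sum(e / s**2 for e, s in zip(est, sig)) / sum(1 / s**2 for s in sig)
print(f"weighted mean {plain:.2f} m, robust fusion {robust_fuse(est, sig):.2f} m")
```

The plain inverse-variance mean is pulled by roughly 9 m toward the spoofed value, while the reweighted estimate stays within about a meter of the consistent sources. A fully resilient design would also adapt the functional and stochastic models, not just the weights.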

4.7 Big Data and Artificial Intelligence

The digital revolution, represented by big data and artificial intelligence, is profoundly altering our lives and ways of thinking and will inevitably change scientific research paradigms. Over the past two or three centuries, a series of industrial revolutions has significantly accelerated the progress of civilization and the development of science and technology. The digital revolution is widely regarded as the main driving force for current and near-future scientific and technological breakthroughs and for the development of a sustainable society. Indeed, society has entered the fourth industrial revolution, and scientific research has entered a new paradigm of data-intensive knowledge discovery. The principal digital technologies, including artificial intelligence, the internet of things, 3D printing, virtual reality, machine learning, blockchain, robotics, and quantum data processing, will create unprecedented opportunities to solve many major and complex problems facing modern science and society.

Many examples demonstrate two distinctive advantages of big data analysis and artificial intelligence. The first is that ever larger volumes of data become usable, because data collection, arrangement, cleaning, analysis, and other preparatory steps can be simplified and optimized so that data acquisition is no longer time- and labor-intensive. The second is the discovery of previously unrecognized processes and scientific patterns or rules through data and knowledge mining, driving a rapid shift from the paradigm of ‘searching for answers to known scientific questions’ to ‘searching for unknown answers to unknown questions’ (Cheng et al. 2020).

Machine learning, machine reading, complex networks, and knowledge graphs can collate and integrate relevant data from multiple sources in diverse disciplines to achieve effective digital association and visual presentation of complex systems and of the interactions among the Earth’s numerous spheres, scales, media, and processes. Every significant extreme event in the Earth’s complex system can be analyzed in depth, linked to remote processes, simulated quantitatively, and predicted statistically through knowledge mining based on deep machine learning, artificial intelligence, and complex systems analysis. Big data and artificial intelligence will be used in the geosciences to facilitate vital data-driven geoscientific discoveries.

4.7.1 Data Integration, Data Assimilation, and Knowledge Sharing

To study Earth’s habitability under the vision of the “three deep and one surface” systems (deep Earth, deep ocean, deep space, and surface systems), geoscientific data must be collected from diverse disciplines, on multiple spatiotemporal scales, across enormous ranges, and in vast volumes. An integrated Earth monitoring system must be built with strong observation and data processing capacity, linking the diverse elements and processes covered by land-, space-, and ocean-based monitoring systems. Data assimilation technology should be developed to produce reusable datasets that are continuous in time and space, internally consistent, and capable of describing the co-evolution of the sea-land-atmosphere system. To name a few examples: databases such as a sediment geochemical database that links sedimentological, biological, and environmental information in space and time are needed for a holistic reconstruction of the evolution of surface processes in the Earth system; a fundamental dataset of global environmental change is needed for comprehensive study of the environment and associated hazards; deep space exploration needs panoramic detection around the entire Sun using measurements from solar-terrestrial observation networks; a ground-platform-based “digital space brain” system should be developed; and for deep Earth research, one focus should be establishing a globally unified time scale by combining global geologic time data with other relevant criteria.
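At its simplest, data assimilation blends a model background with an observation in proportion to their uncertainties. The scalar sketch below is the textbook optimal-interpolation (Kalman) update and is shown only to illustrate the principle. Operational assimilation systems work with large state vectors, observation operators, and error covariance matrices, and the numbers here are hypothetical.

```python
def assimilate(background, sigma_b, observation, sigma_o):
    """Scalar analysis update: x_a = x_b + K (y - x_b), K = sigma_b^2 / (sigma_b^2 + sigma_o^2).

    Returns the analysis value and its standard deviation.
    """
    gain = sigma_b**2 / (sigma_b**2 + sigma_o**2)
    analysis = background + gain * (observation - background)
    sigma_a = ((1.0 - gain) * sigma_b**2) ** 0.5  # analysis error is below both inputs
    return analysis, sigma_a


# Hypothetical: model background 15.0 +/- 2.0, observation 16.0 +/- 1.0 (same units).
print(assimilate(15.0, 2.0, 16.0, 1.0))
```

The analysis lands closer to the more precise observation and carries a smaller uncertainty than either input. Repeated in space and time, this is what lets assimilation turn scattered observations into the continuous, internally consistent datasets described above.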

The explosive growth in geoscientific data resources has produced a variety of large-scale, distributed professional databases and driven the rapid development of data analysis and computational infrastructure. For instance, engineering databases can provide structured spatial data in machine-readable form, whereas scientific data that are collected, preserved, maintained, and partially published by scientists are dispersed across various media (e.g., journal papers, books, research reports, and web platforms) as text, figures, photographs, tables, audio, and video. Most of these unstructured data cannot easily be read, queried, or integrated by machines. These two types of scientific data are complementary and must be integrated to describe the entire Earth. Technically, the principal difficulties in using geoscience data are data assimilation and database interoperability and compatibility. For data that are not yet shared, are awaiting release, or are not machine-readable, global cooperation is required to promote data standardization, unification, and integration. A fundamental step in promoting data sharing is to ensure that data meet the FAIR (Findable, Accessible, Interoperable, and Reusable) principles and standards set by the international scientific community.

4.7.2 Geoscientific Knowledge Graphs and Knowledge Engines

Extracting information from data by scientific, rational, and effective methods is a principal concern of geoscientists seeking to understand and interpret geoscientific issues. An important criterion for evaluating the results of big data processing is therefore how much useful information and knowledge can be extracted from the data.

The Earth is a complex system whose spheres are in continual dynamic interaction and mutual influence. A fundamental objective of current geoscience is to study the operating mechanisms, relationships, and controlling and regulating factors of the co-evolution of the spheres. Multidisciplinary efforts are required to address this fundamental and universal geoscientific question. Driven by this and other fundamental questions, the geosciences are shifting from seeking answers to specific questions within individual disciplines to addressing universal, inclusive questions that cut across disciplines. Deep integration of modern disciplines (e.g., data science and mathematical geosciences) with traditional geoscience disciplines is required to break down current interdisciplinary barriers and to construct a new theoretical knowledge system connecting all geoscience disciplines. New data analysis technologies are essential for processing and analyzing big data to obtain new knowledge. Platforms that connect knowledge systems from multiple disciplines through digital pattern detection will achieve full integration of disciplines and provide the data needed for multidimensional geoscientific practice (Fig. 4.10).

Fig. 4.10 Integration of geosciences, computer technologies, engineering and mathematics (Cheng et al. 2020)

Swift and efficient big data processing methods and technologies are required to integrate massive volumes of data from various disciplines and to handle multi-source, heterogeneous, and rapidly accumulating data. Advanced information technologies (e.g., cloud and parallel computing, supercomputing, and complex networks) can provide crucial support. Knowledge resources can be described visually using knowledge graphs, and knowledge engines can provide intelligent knowledge management systems. Both can be applied to mine, analyze, construct, and display knowledge associations to achieve multidisciplinary integration. Modern AI technology and advanced semantic knowledge engines can be used jointly to develop knowledge graphs that help achieve automatic integration of human thinking and machine learning, and even extend to integrating machine learning, information management, and infrastructure networking. Knowledge engines can be built using specific programming languages or natural language and are editable, amendable, expandable, and reusable, so knowledge graph and engine models can be continuously improved and optimized through positive feedback. Integrating big data and computing technologies will promote abductive reasoning driven by both data and physical models, organically combining essential computing resources and human intelligence to meet the challenges presented by complex geoscientific problems.
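The idea of mining associations in a knowledge graph can be illustrated with a toy triple store and a breadth-first search for the shortest chain of relations linking two entities. The triples below are hypothetical placeholders chosen only to show the mechanics, not curated geoscientific statements. A production knowledge engine would use a graph database and a formal ontology rather than Python dictionaries.

```python
from collections import defaultdict, deque

# Hypothetical (subject, predicate, object) triples for illustration only.
TRIPLES = [
    ("sediment geochemical database", "records", "seawater chemistry"),
    ("seawater chemistry", "constrains", "atmospheric oxygen history"),
    ("atmospheric oxygen history", "informs", "Great Oxidation Event"),
    ("global geologic time scale", "dates", "Great Oxidation Event"),
]


def build_graph(triples):
    """Adjacency list that can be walked in both directions."""
    graph = defaultdict(list)
    for s, p, o in triples:
        graph[s].append((p, o))
        graph[o].append((f"inverse:{p}", s))
    return graph


def association_path(graph, start, goal):
    """Shortest chain of (subject, predicate, object) hops from start to goal, or None."""
    previous = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while previous[node] is not None:
                parent, predicate = previous[node]
                path.append((parent, predicate, node))
                node = parent
            return list(reversed(path))
        for predicate, neighbour in graph[node]:
            if neighbour not in previous:
                previous[neighbour] = (node, predicate)
                queue.append(neighbour)
    return None


graph = build_graph(TRIPLES)
for hop in association_path(graph, "sediment geochemical database", "global geologic time scale"):
    print(hop)
```

Storing inverse edges lets the search connect a dataset to a time scale even when the triples were asserted in opposite directions, a small instance of the cross-disciplinary linking described above.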

4.7.3 Deep Machine Learning and Complex Artificial Intelligence

Artificial intelligence is the simulation of human intelligence and behavior by computer software. The concept of machine learning was first presented by the American computer scientist and artificial intelligence pioneer Arthur Samuel, and it has since been an important field of AI research and development. Its general objective is to extract characteristics and information automatically from raw data using computers. Artificial intelligence and machine learning are rooted in cross-fertilization between applied mathematics, applied statistics, and computing technology, and they have developed rapidly alongside big data technology and the recent exponential growth in computer processing power. In the past five years, the AlphaGo program developed by Google DeepMind has defeated the world’s top human Go players, and artificial intelligence has attracted widespread attention and general approval in society. In 2019, “quantum supremacy” was demonstrated by Google, a major milestone in computing that heralds an entirely new development space for supercomputers. The computing power of supercomputers, together with big data, will boost the development of complex artificial intelligence, deep machine learning, and machine reading.

Big data analysis and supercomputing can simulate, calculate, and predict the Earth’s development, evolution, and sphere interactions. The internal mechanisms of, and connections among, important geological events can be revealed, and future trends in the Earth’s systemic processes can be analyzed and predicted. In the deep sea, breakthrough research has already been carried out on prediction and forecasting based on big data and artificial intelligence technologies. Technological support from artificial intelligence and machine learning is necessary for research on the evolutionary laws of habitable environments on terrestrial planets, for developing intelligent technologies that identify natural catastrophes through analysis of disaster mechanisms and data synthesis, for constructing a digital model of the ionosphere system, and for constructing digital terrestrial space models with high spatiotemporal precision.

The capabilities of artificial intelligence and machine learning programs depend on mathematical models, algorithms, and computer processing power. Methods commonly used in machine learning at present include logistic regression, artificial neural networks, naïve Bayes classifiers, random forests, and adaptive boosting (AdaBoost), all of which are at the forefront of research, development, and application in mathematical geosciences. For instance, neural network models are becoming mainstream machine learning tools for quantitative prediction and assessment of mineral and energy resources in academia, government, and industry. However, complex geoscientific problems involve nonintegrable or nondifferentiable functions (e.g., fractal curves or surfaces) that current neural networks cannot approximate to arbitrary accuracy. Approximation theorems guarantee this only for well-behaved (e.g., Lebesgue-integrable) functions, which ensures that geological problems can be approximated to arbitrary accuracy as long as they can be represented by smooth, continuous, integrable functions. Geological problems represented by more complex functions require wider and deeper artificial neural networks, which usually involve many more model parameters and therefore need more data and computing power; the results may also be unstable or non-convergent, with markedly reduced repeatability and precision. These are significant constraints on the use of artificial intelligence in research on Earth complexity. The currently fragmentary observational database is another obstacle to applying artificial intelligence in the geosciences. Innovation in theory and methods is still imperative in designing and modelling neural networks targeted at extreme Earth events caused by abrupt system changes. This type of artificial intelligence can be called ‘complex artificial intelligence’ or ‘local precision artificial intelligence’.
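Of the methods listed, logistic regression is the simplest to show end to end. The sketch below fits a logistic model by batch gradient descent to a tiny, hypothetical table of grid cells. Each cell is described by two binary evidence layers (for example, presence of a favorable host rock and of a geochemical anomaly) and carries a known deposit or no-deposit label. Real prospectivity studies use many more layers, spatial cross-validation, and established libraries.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def fit_logistic(features, labels, lr=0.5, epochs=2000):
    """Batch gradient descent on the mean log-loss; returns (weights, bias)."""
    n_feat, m = len(features[0]), len(features)
    w, b = [0.0] * n_feat, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_feat, 0.0
        for x, y in zip(features, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for j in range(n_feat):
                grad_w[j] += err * x[j] / m
            grad_b += err / m
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    """Predicted deposit probability for one cell."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


# Hypothetical training cells: (host rock present, anomaly present) -> deposit known?
X = [(1, 1), (1, 1), (1, 0), (0, 1), (0, 0), (1, 0), (0, 0), (0, 1)]
y = [1, 1, 0, 0, 0, 1, 0, 0]
w, b = fit_logistic(X, y)
print(f"P(deposit | both layers) = {predict(w, b, (1, 1)):.2f}")
print(f"P(deposit | neither)     = {predict(w, b, (0, 0)):.2f}")
```

Because logistic regression is a generalized linear model, its behavior on such small, well-behaved inputs is stable and interpretable, which contrasts with the width, depth, and data demands of the neural networks discussed above.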

4.7.4 Data-Driven Geoscientific Research Paradigms Transformation

The digital revolution will promote two major transitions in geoscientific research: the transition from expert learning to machine learning and artificial intelligence, and the transition of the scientific paradigm from problem-driven to data-driven research.

Human beings obtain new knowledge and experience by relearning and re-practicing previous experience and knowledge. Machine learning and artificial intelligence can effectively drive positive feedback processes that improve the performance of the learning system itself. The primary objectives of machine learning and artificial intelligence are to extract patterns hidden in data and to uncover new knowledge through data analysis. New functions and advanced robot behaviors can be achieved by integrating technologies such as computer vision and natural language processing into machine learning and artificial intelligence. These technologies have been rapidly adopted in many fields of the natural and social sciences and engineering, and they are profoundly affecting industry. Geoscientists have also used machine learning and artificial intelligence successfully in the quantitative analysis, simulation, prediction, and evaluation of extreme phenomena such as severe weather, volcanic activity, and earthquakes, and of mineral and energy resources. This indicates that machine learning and artificial intelligence have huge potential for detecting, simulating, and predicting extreme events from geoscientific big data. Developments in big data and artificial intelligence must be leveraged to promote the integration of, and cooperation among, machine learning, human intelligence, and artificial intelligence (Fig. 4.11).

Fig. 4.11 Major components of integrated data, models, algorithms, data mining processes, computer networks and personnel to support data-driven knowledge discovery (Cheng et al. 2020)

From the perspectives of Earth system science and a habitable Earth, the main issues confronting geoscientists fall into two groups. One group consists of fundamental scientific issues relating to the interaction and co-evolution of the multiple spheres of the Earth system. The other consists of applied issues related to the mechanisms, prediction, and spatiotemporal distribution of natural resources (e.g., mineral resources, energy, and water) and of geological hazards (e.g., earthquakes, volcanoes, landslides, floods, and tsunamis). Using geoscientific big data, supercomputing capacity, and modern artificial intelligence technologies, researchers can correlate and analyze the lithosphere, biosphere, hydrosphere, and atmosphere on a unified geological “deep time scale”, exploring the variations, internal correlations, and co-evolution of every relevant sphere across the Earth’s spatiotemporal scales. For example, applying time series analysis or complex network techniques to global datasets can quantitatively describe trends in the Earth’s biodiversity, the chemical indices of the atmosphere, the chemical composition of seawater, and the evolution of certain mineral phases. Reconstructing Earth’s evolution in this way can reveal complex correlations between important and extreme geological events (e.g., supercontinent formation, mass extinctions, banded iron formation (BIF) deposition, the Great Oxidation Event, snowball Earth events, and large-scale mineralization). Several crucial discoveries may follow, including the identification of previously unrecognized long-distance interdependencies (symmetric or asymmetric) between vital events and an understanding of mutual cascading cycles and progressively amplifying effects. Data-driven research can address these unanswered questions, providing breakthroughs in the research paradigm of ‘finding unknown answers to known questions’.

References

Bahder TB (2004) Quantum positioning system. In: Proceedings of the 36th annual precise time and time interval systems and applications meeting, Washington, D.C., December 2004, pp 53–76

Chen LL, Luo Q, Deng XB et al (2016) Precision gravity measurements with cold atom interferometer (in Chinese). Sci Sin-Phys Mech Astron 46:073003. https://doi.org/10.1360/SSPMA2016-00156

Cheng QM, Oberhänsli R, Zhao M (2020) A new international initiative for facilitating data-driven Earth science transformation. Geol Soc Lond Spec Publ 499(1):225–240

Freier C, Hauth M, Schkolnik V et al (2016) Mobile quantum gravity sensor with unprecedented stability. J Phys: Conf Ser 723:012050

Gao YT, Xu B, Zhou JH et al (2016) Lagrangian navigation constellation seamlessly covering lunar space and its construction method (in Chinese). PRC Patent, CN105486314A, 2016-04-13

Giovannetti V, Lloyd S, Maccone L (2001) Quantum-enhanced positioning and clock synchronization. Nature 412(6845):417–419

Giovannetti V, Lloyd S, Maccone L (2002) Positioning and clock synchronization through entanglement. Phys Rev A 65(2):022309

Jozsa R, Abrams DS, Dowling JP et al (2000) Quantum clock synchronization based on shared prior entanglement. Phys Rev Lett 85(9):2010–2013

Li M, Fan JJ (2016) Analysis of the lunar navigation constellation scheme (in Chinese). Geomatics Sci Eng 36(5):20–23

Liao SK, Cai WQ, Liu WY et al (2017) Satellite-to-ground quantum key distribution. Nature 549:43–47

Lu Y (2012) Design and analysis of lunar communication relay and navigation constellation (in Chinese). Univ Electron Sci Technol China, Chengdu, p 126

Meadows VS, Barnes RK (2018) Factors affecting exoplanet habitability. In: Deeg HJ, Belmonte JA (eds) Handbook of exoplanets. Springer, Cham, Switzerland

Mehlstäubler TE, Grosche G, Lisdat C et al (2018) Atomic clocks for geodesy. Rep Prog Phys 81(6):064401

National Academies of Sciences, Engineering, and Medicine (2018) Exoplanet science strategy. The National Academies Press, Washington, DC. https://doi.org/10.17226/25187

Pan JW, Bouwmeester D, Weinfurter H et al (1998) Experimental entanglement swapping: entangling photons that never interacted. Phys Rev Lett 80(18):3891–3894

Pan JW, Gasparoni S, Ursin R et al (2003) Experimental entanglement purification of arbitrary unknown states. Nature 423:417–422

Peters A, Chung KY, Chu S (2001) High-precision gravity measurements using atom interferometry. Metrologia 38(1):25–61

Pierrehumbert RT (2010) Principles of planetary climate. Cambridge University Press, Cambridge, p 674

Reid TG, Neish AM, Walter TF et al (2016) Leveraging commercial broadband LEO constellation for navigation. In: Proceedings of the 29th international technical meeting of the satellite division of the institute of navigation (ION GNSS+ 2016). ION, Portland, Oregon, pp 2300–2314

Shen ZY, Shen WB, Zhang SX (2016) Formulation of geopotential difference determination using optical-atomic clocks onboard satellites and on ground based on Doppler cancellation system. Geophys J Int 206(2):1162–1168

Shen ZY, Shen WB, Zhang SX (2017) Determination of gravitational potential at ground using optical-atomic clocks on board satellites and on ground stations and relevant simulation experiments. Surv Geophys 38(4):757–780

Shen WB, Sun X, Cai C et al (2019a) Geopotential determination based on a direct clock comparison using two-way satellite time and frequency transfer. Terr Atmos Ocean Sci 30:21–31

Shen ZY, Shen WB, Peng Z et al (2019b) Formulation of determining the gravity potential difference using ultra-high precise clocks via optical fiber frequency transfer technique. J Earth Sci 30(2):422–428

Tian Y, Zhang L, Bian L (2019) Design of LEO satellites augmented constellation for navigation (in Chinese). Chin Space Sci Technol 39:55–61

Wu Z (2016) Research on control of ventilated supercavitating vehicles based on water tunnel experiment (in Chinese). Master's thesis, Harbin Engineering University, Harbin, p 73

Yang YX (2016) Concepts of comprehensive PNT and related key technologies (in Chinese). Acta Geodaetica et Cartographica Sinica 45(5):505–510

Yang YX (2019) Resilient PNT concept frame. J Geodesy Geoinf Sci 2(3):1–7

Yang YX, Li XY (2017) Micro-PNT and comprehensive PNT (in Chinese). Acta Geodaetica et Cartographica Sinica 46(10):1249–1254

Yang YF, Yang YX, Xu JY et al (2020) Navigation satellites orbit determination with the enhancement of low Earth orbit satellites (in Chinese). Geomatics Inf Sci Wuhan Univ 45:46–52

Zhang X (2020) Atmospheric regimes and trends on exoplanets and brown dwarfs. Res Astron Astrophys 7:1–92

Zhang XH, Ma FJ (2019) Review of the development of LEO navigation-augmented GNSS (in Chinese). Acta Geodaetica et Cartographica Sinica 48(9):1073–1087


Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License (http://creativecommons.org/licenses/by-nc-nd/4.0/), which permits any noncommercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if you modified the licensed material. You do not have permission under this license to share adapted material derived from this chapter or parts of it.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 Science Press

About this chapter

Cite this chapter

The Research Group on Development Strategy of Earth Science in China. (2022). Scientific and Technological Support: Fundamental Theoretical Issues with Revolutionary Technologies. In: Past, Present and Future of a Habitable Earth. SpringerBriefs in Earth System Sciences. Springer, Singapore. https://doi.org/10.1007/978-981-19-2783-6_4


DOI: https://doi.org/10.1007/978-981-19-2783-6_4


Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-2782-9

Online ISBN: 978-981-19-2783-6

eBook Packages: Earth and Environmental Science, Earth and Environmental Science (R0)