Abstract

This paper examines the use of machine learning in creating digitally integrated design-to-fabrication workflows. As computational design allows for new methods of material specification and fabrication, it enables direct functional grading of material at high detail, thereby tuning the design in response to performance criteria. However, the generation of fabrication data is often cumbersome and relies on in-depth knowledge of the fabrication processes. Parametric models that are set up for the automatic detailing of incremental changes unfortunately do not accommodate larger topological changes to the material setup. The paper presents the speculative case study KnitVault. Based on the earlier research projects Isoropia and Ombre, the study examines the use of machine learning to train models for fabrication data generation in response to desired performance criteria. KnitVault demonstrates and validates methods for shortcutting parametric interfacing and explores how the trained model can be employed in design cases that exceed the topology of the training examples.

1 Introduction

New interfaces between design and fabrication have fundamentally changed and extended the practice of architects, expanding our design agency from the designers of artefacts to the designers of material systems [17]. The direct design interface to digital fabrication at the material scale allows a new degree of material address and enables the steering of material behaviour with respect to performance criteria. In contrast to composites, functionally graded materials are singular multi-materials that vary their consistency gradually over their volume [8]. Due to their optimized material composition, they respond better to given conditions and to a particular set of design and environmental constraints.

Although the integration of graded material properties into design is advantageous, these systems require a deep understanding of fabrication processes and depend on detailed parametric and simulation models to steer the material composition. This in-depth knowledge of dedicated fabrication systems often requires specialist training, and manufacturing setups can be unintuitive and repetitive. The current solution is to set up parametrically defined interfaces that link the specification of geometry and material with fabrication steering data [5, 6, 9, 14]. These workflows employ a simulation-model-validation loop, where the performance of the graded materials is validated through simulation and physical testing of fabricated probes. However, the predefined, fixed-topology modelling logics are often linear and inflexible and thus cannot readily be changed on the fly [4].

2 Machine Learning for Complex Graded Material Systems

The integration of machine learning into design systems enables new measures for feedback in the architectural design chain. These methods make it possible to rethink the organisation of the parametric model and break its inherent reductionism [17, 18]. While machine learning is finding application in design generation, design optimisation and analysis [20], special interest lies in its application to fabrication. Here, training models to detect and automate simpler parts of the steering process could lead to more intuitive specification processes. The process of design specification for fabrication lends itself well to machine learning. Because it is inherently data-heavy, training data can be drawn from an ever-expanding data set. In this way the quality of the model’s outcome increases with each fabrication cycle, evolving its mapping, complexity and scope while remaining intuitive and design integrated. The second potential of employing machine learning for manufacturing is its adaptability. The promise here is a more versatile design process in which trained models can predict fabrication data across a higher degree of topological variance than parametric models. The following case study examines the training of a fabrication model, its validation through physical prototyping and its application to topologically different situations that would otherwise be impossible through traditional parametric interfaces.

3 Knit as a Model for Material Specification

KnitVault takes its point of departure in the research project Isoropia [16]. Developed for the 2018 Venice Biennale, Isoropia is a CNC-knitted membrane architecture based on a system of arches pulled into tension by a cable-net system. As a site-specific installation, it required the bespoke variation of each membrane through functional material grading [12]. To increase the structural performance of the arches, the knit was varied through the methodical evaluation of fibre length and its deduced extendability in order to increase the depth of the tensioned cones. The design model incorporated a parametric interpretation of a series of stitch pattern boundaries and their translation into encoded pixel images as a means of automatic material specification.

The difficulty of evaluating the performative effect of the patterns and the inflexibility of the parametric setup are the starting point for this study. Specifying for CNC knitting is highly cumbersome and relies on an in-depth understanding of the fabrication parameters and their limitations. Design protocols remain non-intuitive and require special expertise for programming the fabrication files, complicated by many parameters such as yarn friction and tensioning, knit speed, roll-down settings and the performance of the resulting fabric. Additionally, the material specification encoding is limited to typical garment templates, such as sweaters and trousers. The lack of integrated simulation tools means that the material outcome, that is the shape, size and performance of knitted materials, is still mainly evaluated and tuned through physical prototyping [17]. The use of functionally graded knit structures increases design complexity, which inevitably leads to extensive prototyping. Only recently has related research in computer graphics investigated more user-friendly design-to-manufacturing interfaces for users with little or no knowledge of knitting [2, 11, 13]. As a consequence, state-of-the-art design-to-manufacture workflows for knit production do not meet current architectural design and fabrication paradigms, in which predictability and the simulation of a design’s performance on all levels before making and construction are key [19]. The challenge of knit simulation lies in the intricate structure of the loops and their interaction at multiple scales. Although quite precise yarn-to-yarn modelling methods exist in textile engineering, these are only applicable at sample scale because of limits in computational capacity. Moreover, methods for abstracting knit behaviour through digital models are not yet fully understood.

Machine learning offers an alternative approach for specifying graded knitted membranes. Here, learning the relations between fabricated surfaces and the corresponding design data replaces both the simulation of highly detailed knit surfaces and their specification through the challenging encoding of knit parameters. As knit interfaces with the machine through an image-based file, image-based machine learning algorithms can be applied to generate CNC fabrication data. KnitVault builds on and develops methods first examined in the probe Ombre [15], where the machine learning approach was first tested. The following sections describe how the methodology established in Ombre was developed further in KnitVault.

4 Ombre - Model for Light Filtering Textile Specification

Ombre investigates the specification of a light-filtering textile. Constructed from two yarn types, a reflective monofilament and an absorbent elastomer, Ombre is knitted in a single knit jacquard (see Note 1). Here the technique is used to create a complex non-binary pattern, as the floats affect and change the translucency of the knitted textile. The shifting between the two yarns creates complex non-linear correlations between light and shadow that are not readily intuited by the designer. To explore the correlation between the shadow and the material specification, Ombre uses image-based sensing, photographing the textiles under an artificial sun to record their shadow effects. This data is then used to determine the textiles’ light-filtering performance and map it to the corresponding fabrication bitmap patterns (Fig. 1). In Ombre, GANs [7] are employed to train the model and build the link between the shadow effect, fabric density and the corresponding fabrication bitmaps. Machine learning here makes it possible to construct relations between sets of data that cannot be described in a linear, straightforward manner. The study proved the viability of predicting fabrication data for complex graded materials and demonstrated the relevance of image-based machine learning methods for training direct design-to-fabrication models. However, as the study focussed on the simple performance criterion of light filtering, the further question of transferring the method to other performance criteria prompted the development of the project into KnitVault.

5 KnitVault - Geometrical Performance Through Machine Learning

5.1 Experiment Description and Setup

In KnitVault the machine-learning-for-fabrication approach is extended to work on three-dimensional configurations. Rooted in the Isoropia project, the aim is to study the functional grading of knitted textiles to control the deformation of the membrane under stress. The method is used to explore how the membrane can be better understood and how the trained model can be used on cases that exceed the topology of the training data.

The model is trained on a set of predesigned flat knitted textiles with varying patterns and the fixed topology of a central node. All the textiles were knitted on an industrial knitting machine (STOLL 730T E7.2) with the same tension settings, in a single Dyneema® yarn (see Note 2), employing three knit stitch types (Fig. 2). In this way the pattern geometry is the only changing parameter. The panels are then tensioned on a frame and loaded with 15 kg in the centre, creating a 3D cone-like shape of varying depth. The resulting shape is documented with a FARO 3D laser scanner, producing detailed point clouds that capture the real-world geometrical performance. Pattern shapes were drawn manually as vector files, with the intention of creating diversity in the patterns, and were in turn transformed to bitmap files for fabrication (Fig. 3).

The loading tests show that the piquet material is more stretchy (B expands by 13 cm) than piquet lacoste (A expands by 12 cm). Stretch also increases when interlock is present in the stretched area (C–E), with E being the most stretchy of the investigated samples. The geometry of the patterns further influences the stretch (C–I) (Fig. 4).

5.2 Description of Neural Network (NN) Workflow

The training of the neural network is based on the Pix2Pix architecture [10], implemented in TensorFlow [1]. Here the NN learns a relationship between two sets of images, a training input and an output: the 3D scans of the samples under stress are used as input, and the pixel-based knit fabrication files as output. Seven samples are used for training and one (D) for validation. To interface the 3D data from the point clouds with the 2D image-based machine learning, the clouds are translated into grayscale height maps (Fig. 2), where the darkness of the colour corresponds to the depth of the geometry (12–25 cm). To expand the limited training data, image augmentation techniques [19] (flip, mirror, rotate, stretch and scale) are performed on the input and output files in parallel [3] (Fig. 5).
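The translation from point cloud to height map can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the grid resolution, the choice of keeping the deepest point per cell and the linear mapping of the 12–25 cm range onto 8-bit grey values are all assumptions made for illustration.

```python
# Hypothetical sketch: rasterise a scanned point cloud into a grayscale height map.
# Assumptions (not from the paper): points are (x, y, depth_cm) with x, y in [0, 1),
# the deepest point per grid cell is kept, and depth maps linearly to grey values.

D_MIN, D_MAX = 12.0, 25.0  # depth range in cm, as stated in the text


def depth_to_grey(depth_cm, d_min=D_MIN, d_max=D_MAX):
    """Map a depth to 0..255 so that deeper geometry gives a darker pixel."""
    t = (depth_cm - d_min) / (d_max - d_min)
    t = min(1.0, max(0.0, t))        # clamp depths outside the range
    return round(255 * (1.0 - t))    # 255 = white (shallow), 0 = black (deep)


def height_map(points, size=4, background=255):
    """Bin points into a size x size grid and keep the deepest value per cell."""
    deepest = [[None] * size for _ in range(size)]
    for x, y, depth in points:
        col = min(size - 1, int(x * size))
        row = min(size - 1, int(y * size))
        if deepest[row][col] is None or depth > deepest[row][col]:
            deepest[row][col] = depth
    return [[background if d is None else depth_to_grey(d) for d in row]
            for row in deepest]


if __name__ == "__main__":
    # Toy point cloud, not scan data from the study.
    cloud = [(0.1, 0.1, 12.0), (0.6, 0.6, 25.0), (0.6, 0.65, 18.5)]
    for row in height_map(cloud):
        print(row)
```

A real scan would need a much finer grid and some interpolation for empty cells; the sketch leaves empty cells at the background value.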

The output images represent different pixel distributions, where each colour represents a particular knitting instruction for the machine. After training for 150 epochs (see Note 3) on augmented fragments, the NN is applied to the seven full-size input images and then verified on the “unseen” height map (Fig. 6).
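Because each height map and its fabrication bitmap form a pair, every augmentation has to be applied identically to both images, otherwise the pixel correspondence between input and output is lost. The following sketch illustrates this principle only. It assumes images stored as nested lists, covers flips and a 90° rotation (stretch and scale are omitted), and does not use the augmentation library cited in the text; all function names are hypothetical.

```python
import random


def flip_horizontal(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def flip_vertical(img):
    """Mirror the image top to bottom."""
    return [row[:] for row in img[::-1]]


def rotate_90(img):
    """Rotate the image clockwise by 90 degrees."""
    return [list(col) for col in zip(*img[::-1])]


OPS = [flip_horizontal, flip_vertical, rotate_90]


def augment_pair(height_map, knit_bitmap, rng):
    """Apply the same randomly chosen transforms to input and output image."""
    for op in OPS:
        if rng.random() < 0.5:
            # The decision is drawn once and used for both images.
            height_map, knit_bitmap = op(height_map), op(knit_bitmap)
    return height_map, knit_bitmap


if __name__ == "__main__":
    depth = [[10, 20], [30, 40]]            # toy height map
    stitches = [["a", "b"], ["c", "d"]]     # toy fabrication bitmap
    print(augment_pair(depth, stitches, random.Random(0)))
```

Drawing the random decision once per transform and reusing it for both images is what keeps the pair aligned.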

5.3 Results

The application of the neural network shows that it correctly predicts five of the samples (A, G, E, F, I), while two of the predictions (B, C) are ambiguous (Fig. 6). This stems from a non-linear relationship between the pattern and the material stretching: similar samples show dissimilar stretching behaviours and vice versa.

Although a 62% success rate is low compared to the performance of neural networks in other knowledge domains, it nonetheless seems reasonable if one assumes that ambiguity in the training data, rather than the method itself, is the source of the error.

A reassessment of the training data shows that its one-dimensionality leads to logical errors, where highly similar input files correspond to very different output files. This is visible in the similar physical depths obtained for different knitting patterns (F/C “oval vs. cross” and I/B “oval vs. grey square” in Fig. 6).
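This kind of ambiguity could in principle be screened for before training by searching for sample pairs whose inputs are nearly identical while their outputs differ strongly. The sketch below is a simplified illustration under several assumptions: the input is reduced to a single cone depth, the sample names, depths and bitmaps are placeholders rather than measurements from the study, and both thresholds are arbitrary.

```python
from itertools import combinations


def pattern_difference(a, b):
    """Fraction of pixels whose knitting instruction differs between two bitmaps."""
    cells = [(p, q) for row_a, row_b in zip(a, b) for p, q in zip(row_a, row_b)]
    return sum(p != q for p, q in cells) / len(cells)


def ambiguous_pairs(samples, depth_tol_cm=1.0, min_pattern_diff=0.5):
    """Return name pairs with similar depth but clearly different patterns."""
    flagged = []
    for (name_a, (depth_a, bmp_a)), (name_b, (depth_b, bmp_b)) in combinations(
            sorted(samples.items()), 2):
        similar_input = abs(depth_a - depth_b) <= depth_tol_cm
        different_output = pattern_difference(bmp_a, bmp_b) >= min_pattern_diff
        if similar_input and different_output:
            flagged.append((name_a, name_b))
    return flagged


if __name__ == "__main__":
    # Placeholder data: name -> (cone depth in cm, tiny stitch bitmap).
    samples = {
        "P": (18.0, [[0, 0], [0, 0]]),
        "Q": (18.4, [[1, 1], [1, 0]]),
        "R": (24.0, [[1, 1], [1, 0]]),
    }
    print(ambiguous_pairs(samples))
```

Pairs flagged in this way point to cases where the training data alone cannot tell the network which of several patterns to produce for a given depth.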

5.4 Verification Through the Physical Prototyping

To verify the fidelity of the trained model, the pattern predicted for the 8th, “unseen” sample is physically knitted with the previously established machine settings and tested on the loading rig (Fig. 7). In the following, it is referred to as the ML sample.

Loading the 8th sample results in a depth of 25.1 cm (Fig. 8), which is relatively far from the predicted depth of 18.4 cm, as in D. The percentages of stitch types differ between D and ML, and we conclude that the ratio of stitches and their visual distribution across the surface affect the expandability of the textile under load. The conflation of inputs and outputs during training noted above may also contribute to the depth difference.
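The comparison of stitch percentages can be computed directly from the fabrication bitmaps, since each pixel encodes one knitting instruction. The sketch below assumes bitmaps stored as nested lists of stitch labels; the labels follow the stitch names mentioned in the text, while the toy bitmap and the function names are hypothetical. Only the two depth values are taken from the paper.

```python
from collections import Counter


def stitch_percentages(bitmap):
    """Return the share of each stitch type in a bitmap, in per cent."""
    counts = Counter(pixel for row in bitmap for pixel in row)
    total = sum(counts.values())
    return {stitch: 100.0 * n / total for stitch, n in counts.items()}


def depth_deviation(measured_cm, predicted_cm):
    """Signed difference between measured and predicted cone depth in cm."""
    return measured_cm - predicted_cm


if __name__ == "__main__":
    # Toy bitmap, not a fabrication file from the study.
    toy = [
        ["piquet", "piquet", "interlock"],
        ["piquet", "piquet_lacoste", "interlock"],
    ]
    for stitch, share in sorted(stitch_percentages(toy).items()):
        print(f"{stitch}: {share:.1f} %")

    # Depths as reported in the text: ML sample measured vs. depth of D.
    print(f"deviation: {depth_deviation(25.1, 18.4):.1f} cm")
```

Percentages alone ignore where the stitches sit in the surface, which the text identifies as a second factor, so they can only serve as a first comparison.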

A visual examination was then conducted to find patterns within the range of samples that are more similar to the ML sample in material composition and performance. I and E are the selected candidates for discussion (Fig. 9). Sample I is visually similar to ML (asymmetric oval-like geometry), but the depth of its physical sample does not align (25 cm in ML vs. 16 cm in I). The closest in physical depth is sample E; however, the reasons remain challenging to explain, as it has different stitch ratio proportions and a pattern geometry unlike that of ML. In general, however, the machine learning sample did achieve the predicted cone geometry, albeit with a tolerance of 6 cm.

We conclude that none of the identified parameters, such as the relations between stitch ratios or the visual configuration of the pattern (oval, cross, free shape etc.), alone determines the stretch of the material. This demonstrates the complexity of the problem and confirms the expediency of exploring machine learning techniques for predicting fabrication files for challenging material behaviours.

5.5 Testing the Neural Network on the New Design Topology

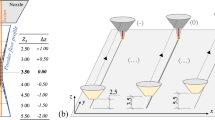

The second part of the KnitVault experiment tests the ability of our trained neural network to predict outside of its learning scope. Whereas the training data was based on the same single-cone topology, the second test explores how double- and triple-cone configurations lead to novel fabrication files (Fig. 10).

The test shows the capacity of a neural network to translate from single to double and triple cones while keeping the logic of the colour encoding. This confirms the possibility of using an NN to overcome the limitations of parametric workflows. While a parametric model needs to be restructured to deal with new inputs or new output typologies, a neural network is more flexible and can transfer from one problem domain to another without further specification. This approach is especially useful for modelling environments where input and output types are fixed but their degree varies over the course of design-to-production.

6 Conclusion

While the results of the first test, predicting the fabrication file from a desired stretching depth, remain ambiguous, the success of the method’s adaptability across different topologies is promising for future digital workflows. Generally, the project shows the possibility of bypassing domain-specific limitations of parametric models. Nevertheless, the data set used for training was small and ambiguous, and the training results of the neural network are therefore equally indistinct. Even though the heavy augmentation of a small data set is not advised in machine learning communities, it is symptomatic of real-world problems in architectural applications, where big data sets are not always available. The question is how much data will be needed to train neural networks in architectural contexts so that they can help inform design decisions.

The second test, on the adaptability of the method to different topologies, showed the possibility of transferring from one problem domain to another. Further probes are needed to determine how far from the training data a neural network can be used to predict outputs.

The general approach of skipping the simulation-model-validation loop by training neural networks directly on the fabrication data can have useful implications for other design-to-production workflows where a non-linear relation between design intent and fabrication data exists. For example, CNC milling usually includes cumbersome manual procedures when 3D models are translated to G-code. Nonetheless, there is a relationship between model and G-code which can potentially be learned by a neural network. Sharing fabrication data and design models for different fabrication machines (robots, CNC milling, 3D printing, knitting, etc.) can potentially help to overcome the limitations of small data sets and excessive data augmentation for machine learning approaches in architectural digital fabrication.

Finally, the presented image-based approach could in the future be extended to parallel image-based approaches (optimizing for different problem domains simultaneously), voxel-based GANs or potentially even time-based voxel GANs.

Notes

1. Knitting technique often used in garments and fashion, in which two or more types of yarn are knitted together, allowing the intermittent foregrounding of one yarn while the other yarns “float” behind the knitted surface [21]. The technique allows the designer to create highly complex patterns, while “hiding” the unused yarns on the backside of the fabric.

2. High-tenacity and ultra-high molecular weight polyethylene (UHMwPE).

3. Cycles through the training data.

References

1. Abadi, M., et al.: TensorFlow: Large-Scale Machine Learning on Heterogeneous Distributed Systems (2015). https://www.tensorflow.org/

2. Albaugh, L., Hudson, S., Yao, L.: Digital fabrication of soft actuated objects by machine knitting. In: Proceedings of the 2019 Conference on Human Factors in Computing Systems. Glasgow, UK, pp. 1–13 (2019)

3. Bloice, M.D., Roth, P.M., Holzinger, A.: Biomedical image augmentation using augmentor. Bioinformatics 35(21), 4522–4524 (2019)

4. Davis, D., Burry, J., Burry, M.: The flexibility of logic programming. In: Circuit Bending, Breaking and Mending: Proceedings of the 16th International Conference on Computer-Aided Architectural Design Research in Asia, Association for Computer-Aided Architectural Design Research in Asia (CAADRIA), pp. 29–38 (2011)

5. Deleuran, A.H., Schmeck, M., Quinn, G., Gengnagel, C., Tamke, M., Ramsgaard Thomsen, M.: The tower: modelling, analysis and construction of bending active tensile membrane hybrid structures. In: Proceedings of the International Association for Shell and Spatial Structures (IASS): Future Visions, Amsterdam, The Netherlands (2015)

6. Dörstelmann, M., Parascho, S., Prado, M., Menges, A., Knippers, J.: Integrative computational design methodologies for modular architectural fiber composite morphologies. In: Design Agency, Los Angeles, pp. 219–228 (2014)

7. Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., Bengio, Y.: Generative adversarial nets. In: Advances in Neural Information Processing Systems, pp. 2672–2680 (2014)

8. Grigoriadis, K.: Computational blends: the epistemology of designing with functionally graded materials. J. Archit. 24(2), 160–192 (2019)

9. Hensel, M.: Material and digital design synthesis: integrating material self-organisation, digital morphogenesis, associative parametric modelling and computer-aided manufacturing. AD Archit. Des. 76(2), 88–97 (2006)

10. Isola, P., Zhu, J.-Y., Zhou, T., Efros, A.A.: Image-to-image translation with conditional adversarial networks. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017). https://doi.org/10.1109/cvpr.2017.632

11. Kaspar, A., Oh, T.-H., Makatura, L., Kellnhofer, P., Aslarus, J., Matusik, W.: Neural inverse knitting: from images to manufacturing instructions. In: Proceedings of International Conference on Machine Learning (ICML), Long Beach, California (2019)

12. La Magna, R., Längst, P., Lienhard, J., Fragkia, V., Noël, R., Baranovskaya, Y., Tamke, M., Ramsgaard Thomsen, M.: Isoropia: an encompassing approach for the design, analysis and form-finding of bending-active textile hybrids. In: Proceedings of the IASS Symposium 2018 Creativity in Structural Design (2018)

13. McCann, J., Albaugh, L., Narayanan, V., Grow, A., Matusik, W., Mankoff, J., Hodgins, J.: A compiler for 3D machine knitting. ACM Trans. Graph. 35, 1–11 (2016)

14. Mendez Echenagucia, T., Pigram, D., Liew, A., Van Mele, T., Block, P.: Full-scale prototype of a cable-net and fabric formed concrete thin-shell roof. In: Proceedings of the IASS Symposium 2018, Boston (2018)

15. Ramsgaard Thomsen, M., Nicholas, P., Tamke, M., Gatz, S., Sinke, Y.: Predicting and steering performance in architectural materials. In: Proceedings of eCAADe 37 - Material Studies and Innovation. Bd 2, pp. 485–494 (2019)

16. Ramsgaard Thomsen, M., Sinke Baranovskaya, Y., Monteiro, F., Lienhard, J., La Magna, R., Tamke, M.: Systems for transformative textile structures in CNC knitted fabrics – Isoropia. In: Proceedings of the TensiNet Symposium 2019, pp. 95–110 (2019)

17. Ramsgaard Thomsen, M., et al.: Knit as bespoke material practice for architecture. In: Proceedings of ACADIA 2016: Posthuman Frontiers, Michigan, USA (2016)

18. Ramsgaard Thomsen, M., Tamke, M., Nicholas, P., Ayres, P.: Information rich design. CITA Complex Modelling, pp. 254–255. Denmark, Copenhagen (2020)

19. Sheil, B., Ramsgaard Thomsen, M., Tamke, M., Hanna, S.: Design Transactions: Rethinking Information Modelling for a New Material Age. UCL Press (2020)

20. Tamke, M., Nicholas, P., Zwierzycki, M.: Machine learning for architectural design: practices and infrastructure. IJAC 16(2), 123–143 (2018)

21. Spencer, D.: Knitting Technology: A Comprehensive Handbook and Practical Guide. Woodhead Publishing, Lancaster (1996)

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2021 The Author(s)

About this paper

Cite this paper

Sinke, Y., Gatz, S., Tamke, M., Ramsgaard Thomsen, M. (2021). Machine Learning for Fabrication of Graded Knitted Membranes. In: Yuan, P.F., Yao, J., Yan, C., Wang, X., Leach, N. (eds) Proceedings of the 2020 DigitalFUTURES. CDRF 2020. Springer, Singapore. https://doi.org/10.1007/978-981-33-4400-6_29

DOI: https://doi.org/10.1007/978-981-33-4400-6_29

Publisher Name: Springer, Singapore

Print ISBN: 978-981-33-4399-3

Online ISBN: 978-981-33-4400-6

eBook Packages: Intelligent Technologies and Robotics (R0)