Abstract

The problem of linearly predicting a scalar response Y from a functional (random) explanatory variable \(X=X(t),\ t\in I\) is considered. It is argued that the term “linearly” can be interpreted in several meaningful ways. Thus, one could interpret that (up to a random noise) Y could be expressed as a linear combination of a finite family of marginals \(X(t_i)\) of the process X, or a limit of a sequence of such linear combinations. This simple point of view (which has some precedents in the literature) leads to a formulation of the linear model in terms of the RKHS space generated by the covariance function of the process X(t). It turns out that such RKHS-based formulation includes the standard functional linear model, based on the inner product in the space \(L^2[0,1]\), as a particular case. It includes as well all models in which Y is assumed to be (up to an additive noise) a linear combination of a finite number of linear projections of X. Some consistency results are proved which, in particular, lead to an asymptotic approximation of the predictions derived from the general (functional) linear model in terms of finite-dimensional models based on a finite family of marginals \(X(t_i)\), for an increasing grid of points \(t_j\) in I. We also include a discussion on the crucial notion of coefficient of determination (aimed at assessing the fit of the model) in this setting. A few experimental results are given.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Linear regression is a topic of leading interest in statistics. The general paradigm is well-known: one aims to predict a response variable Y in the best possible way as a linear (or affine) function of some explanatory variable X. In the classical case of multivariate regression, where Y is a real random variable and X takes values in \({\mathbb R}^d\) there is little doubt about the meaning of “linear”. However, this is not that obvious when X is a more complex object that can be modelled via different mathematical structures. The most important example arises perhaps in the field of Functional Data Analysis (FDA) where \(X=X(t)\) is a real function; see e.g., Cuevas (2014) for a general survey on FDA and Horváth and Kokoszka (2012) for a more detailed account, including a short overview of functional linear models.

1.1 Some notation

More precisely, we will deal here with the scalar-on-function regression problem where the response Y is a real random variable and X is a random function (i.e., a trajectory of a stochastic process). In formal terms, let \((\Omega ,\mathcal {F},\mathbb {P})\) be a probability space and denote by \(L^2(\Omega ) = L^2(\Omega ,\mathcal {F},\mathbb {P})\) the space of square integrable random variables defined on \((\Omega ,\mathcal {F},\mathbb {P})\). Denote by \(\langle X, Y\rangle _{L^2(\Omega )} =\mathbb {E}(XY)\) the inner product in this space and by \(\Vert \cdot \Vert _{L^2(\Omega )}\) the corresponding norm. For \(Y_1, Y_2\in L^2(\Omega )\) the notation \(Y_1\bot Y_2\) will stand for \(\mathbb {E}(Y_1Y_2)=0\).

Consider a response variable \(Y\in L^2(\Omega )\) and a family of regressors \(\{X(t):\, t\in I\}\subset L^2(\Omega )\), where I is an arbitrary index set. For the sake of simplicity we will assume both the response and the explanatory variable are centred, so that \(\mathbb {E}(Y)=\mathbb {E}(X(t))=0\), for all \(t\in I\). The covariance function \(K:I\times I\rightarrow \mathbb {R}\) of \(\{X(t):\, t\in I\}\) is the symmetric, positive semidefinite function given by \(K(s,t)=\langle X(s), X(t)\rangle _{L^2(\Omega )} =\mathbb {E}(X(s)X(t))\). In what follows we will assume that \(I=[0,1]\) and K(s, t) is continuous on \(I\times I\). We will also assume that all involved processes are separable. This can always be done without loss of generality, since Separability Theorem (Ash and Gardner 1975, p. 166) establishes that any process \(\{X(t),\ t\in [0,1]\}\) has a separable version.

The trajectories \(X(\cdot )=X(t)\) of the underlying process are assumed to live in the space \(L^2[0,1]\) of square integrable real functions. Denote by \(\langle \cdot ,\cdot \rangle _2\) and \(\Vert \cdot \Vert _2\), respectively, the usual inner product and norm in \(L^2[0,1]\). The norm in the finite-dimensional Euclidean space \({\mathbb R}^p\) will be simply denoted by \(\Vert \cdot \Vert \).

1.2 The aim of this work. Some motivation

Our purpose is to show that the term “linear” admits several interpretations in the functional case; all of them could be useful, depending on the considered context. We will provide a general formulation of the linear model and we will show that several useful formulations of functional linear models appear as particular cases. Some basic consistency results will be given regarding the estimation of the slope (possibly functional) parameter. The theory of Reproducing Kernel Hilbert Spaces (RKHS) will be an important auxiliary tool in our approach; see Berlinet and Thomas-Agnan (2004).

In order to give some perspective and motivation, let us consider, for \(I=[0,1]\), the classical \(L^2\)-based functional regression model, as given by

where \(X=X(t)\) is a process, \(\varepsilon \) is an error variable, with \(\mathbb {E}(\varepsilon \vert X)=0\) and \(\text{ Var }(\varepsilon \vert X)=\sigma ^2\), and \(\beta \in L^2[0,1]\) is the slope function. The usual aim in such a model is estimating \(\beta \) and \(\sigma ^2\) from an iid sample \((X_i,Y_i)\), \(i=1,\ldots ,n\). As we are assuming \(\mathbb {E}(X(t))=0\) we omit as well the additional intercept additive term \(\beta _0\) in the theoretical developments involving model (1). This term will be incorporated in the numerical examples of Sect. 6.

The vast majority of literature on functional linear regression is concerned with model (1); see, e.g., the pioneering book by Ramsay and Silverman (2005) (whose first edition dates back to 1997), as well as the paper by Cardot et al. (1999), the book by Horváth and Kokoszka (2012) and references therein. Though this model is, in several aspects, natural and useful, we argue here that this is not the only sensible approach to functional linear regression.

There are several reasons for this statement: first, unlike the finite dimensional model \(Y=\beta _1X_1+\ldots +\beta _dX_d+\varepsilon \), there is no obvious, easy to calculate, estimator for \(\beta \) under model (1). The simple, elegant least squares theory is no longer available here. The optimality properties (Gauss–Markov Theorem) of the finite-dimensional least squares estimator do not directly apply to (1) either. Second, note that in the finite-dimensional situation, where \(X=(X_1,\ldots ,X_d)\), there is a strong case in favour of a model of type \(Y=\beta _1X_1+\ldots +\beta _dX_d+\varepsilon \). The reason is that, as it is well-known, when the joint distribution of all the involved variables is Gaussian, the best approximation of Y in terms of \(X=(X_1,\ldots ,X_d)\) is necessarily of type \(\beta _1X_1+\ldots +\beta _dX_d\). A similar motivating property does not hold for model (1). Third, some natural, linear-like functional approaches do not appear as particular instances of (1); this is the case, for example, with an approach of type the response Y is (up to an additive noise) a linear combination of a finite subset of variables \(\{X(t),\ t\in I\}\).

Our goal here is to analyse a more general linear model which partially addresses these downsides and includes model (1) as a particular case. Perhaps more importantly, the finite dimensional models of type \(Y=\beta _1X(t_1)+\ldots +\beta _pX(t_p)+\varepsilon \), with \(t_j\in I\), \(\beta _j\in {\mathbb R}\) and \(p\in {\mathbb N}\) are also included. This is particularly relevant in practice since, in some cases, the predictive power of such models may be larger than that of the \(L^2\)-based model (1); see the experiments in Sect. 6. The special points \(t_i\) used to define these finite-dimensional models are often called “impact points”. Some interesting references on impact points-based functional regression (with no explicit use of an RKHS approach) are McKeague and Sen (2010), Kneip et al. (2016), Poß et al. (2020)

1.3 Some literature on RKHS methods in functional regression

The book by Hsing and Eubank (2015) is a good reference for the mathematical basis of functional data analysis, including the use of RKHS theory in this field.

If we focus on RKHS methods in functional regression models, we should refer to the papers by Hsing and Ren (2009) and Kneip and Liebl (2020).

The RKHS-based linear model (2) we will consider below has been previously analysed by other authors, from slightly different points of view. Thus (Shin and Hsing 2012, Eq. (2.3)), use that model with a particular emphasis in prediction. In fact, our Theorem 2 below provides a result similar to that Theorem 3.1 in Shin and Hsing (2012) under quite different conditions. Also, the RKHS formulation of the functional linear model explicitly appears in Hsing and Ren (2009) from a rather general perspective. Some closely related ideas appear as well in Kneip and Liebl (2020) focussing on the topic of reconstructing partially observed functional data.

The linear model is also considered from the RKHS perspective by Berrendero et al. (2019). Still, this work is only focussed in variable selection problems (i.e., on the estimation of the impact points) with no further theoretical development of the RKHS-based model.

From a completely different point of view, the RKHS methodology in functional regression has been previously addressed by Yuan and Cai (2010) and Shin and Lee (2016). Let us note, however, that these authors in fact deal with the classical \(L^2\)-model (1); the RKHS techniques are used in these papers to define the penalization term in a penalized approach to the estimation of the slope function \(\beta \). See also Shang and Cheng (2015) in the context of generalized linear models.

1.4 The organization of this paper

In Sect. 2 our general linear RKHS-based model is defined. Section 3 is devoted to prove that some relevant examples of practical interest appear just as particular cases of such a model. Two results of consistent estimation of the slope function are given in Sect. 4. A discussion of the coefficient of determination on this setting is given in Sect. 5. Some experimental results are discussed in Sect. 6. Some conclusions are summarized in Sect. 7. The proof of Theorem 2 is given in the final Appendix.

2 A general formulation of the functional linear model

In the functional framework introduced in the previous section, a linear model might be defined as any suitable linear expression of the variables X(t) aiming to explain (predict) the response variable Y. The \(L^2\)-model (1) is just one possible formulation of such idea.

In the present work, by “linear expression” we mean an element of the closed linear subspace \(L_X\) of \(L^2(\Omega )\) spanned by the variables in \(\{X(t):\, t\in I\}\). In other words, \(L_X\) is the closure of the linear subspace of all finite linear combinations of variables in the collection. Hence, \(L_X\) includes both finite linear combinations of the form \(\sum _{j=1}^p \beta _j X(t_j)\) (where \(p\in \mathbb {N}\), \(\beta _1,\ldots ,\beta _p\in \mathbb {R}\), and \(t_1,\ldots ,t_p \in I\)) and rv’s U such that there exists a sequence \(U_n\) of these linear combinations with \(\Vert U_n-U\Vert _{L^2(\Omega )}\rightarrow 0\), as \(n\rightarrow \infty \).

In more precise terms, our general linear model will be defined by assuming that Y and \(\{X(t):\, t\in I\}\) are related by

where \(U_X\in L_X\), and \(\varepsilon \) is a random variable with \(\mathbb {E}(\varepsilon \vert X)=0\) and \(\text{ Var }(\varepsilon \vert X)=\sigma ^2\) (a positive constant). Note that \(\mathbb {E}(\varepsilon \vert X)=0\) entails that \(\varepsilon \) belongs to \(L_X^{\perp }\), the orthogonal complement of \(L_X\), that is \(\mathbb {E}(\varepsilon U)=0\) for all \(U\in L_X\). Let us also assume throughout, by simplicity, that \(\mathbb {E}(X(t))=0\) for all \(t\in I\); see Remark 1 below.

Since \(L_X\) is closed, we know that \(L^2(\Omega )=L_X\oplus L_X^{\perp }\), and then the elements in the model are unambiguously given by the orthogonal projections \(U_X=\text{ Proj}_{L_X}(Y)\) and \(\varepsilon =\text{ Proj}_{L_X^{\perp }}(Y).\) Given a linear continuous operator \(\mathcal {T}\), \(\Vert \mathcal {T}\Vert _{op}\) stands for the usual operator norm \(\Vert \mathcal {T}x\Vert _{op}=\sup _{\Vert x\Vert =1}\Vert \mathcal {T}x\Vert \).

2.1 An RKHS formulation of the proposed linear model

The aim of this subsection is to give a fairly natural parametrization of model (2), based on the RKHS theory. As a consequence of this alternative formulation we will show that several useful linear models appear as particular cases of (2).

Let us begin by briefly defining the notion of RKHS associated with a symmetric, positive semidefinite function \(K:[0,1]^2\rightarrow {\mathbb R}\); in our case, K will be the (continuous) covariance function of the process X whose trajectories provide the functional data. We first define the space \(H^0_K\) of functions \(g:[0,1]\rightarrow {\mathbb R}\) of the form \(g(\cdot )=\sum _{j=1}^n \beta _j K(\cdot ,t_j)\), for all possible choices of \(n\in {\mathbb N}\), \(t_1,\ldots ,t_n\in [0,1]\) and \(\beta _1,\ldots ,\beta _n\in {\mathbb R}\). If \(f\in H_K^0\) with \(f(\cdot )=\sum _{i=1}^m \alpha _i K(\cdot ,s_i)\), the inner product \(\langle f,g\rangle _K=\sum _{i,j}\alpha _i\beta _j K(s_i,t_j)\) provides \(H^0_K\) with a structure of pre-Hilbert space. Then \(H_K\) is defined as the completion of \(H^0_K\), obtained by incorporating the pointwise limits of all Cauchy sequences of functions in \(H^0_K\). The “completed” RKHS inner product fulfills the so-called reproducing property \(\langle f,K(\cdot .t)\rangle _K=f(t)\), for all \(t\in [0,1]\), \(f\in H_K\). See Berlinet and Thomas-Agnan (2004), Cucker and Zhou (2007) and Janson (1997) for more details.

In the following paragraphs we recall a deep interpretation of the RKHS theory in statistical terms. Denote by \(\mathbb {R}^I\) the set of all real functions defined on \(I=[0,1]\). We are going to define a map \(\Psi _X: L_X \rightarrow \mathbb {R}^I\) that will play a key role in the sequel: given \(U\in L_X\), \(\Psi _X(U)\) is just the function

As we will next show, this transformation defines an isometry (often called Loève’s isometry; see Lemma 1.1 in Lukić and Beder (2001)) between \(L_X\) and \(\Psi _X(L_X)\). We will also see that \(\Psi _X(L_X)\) coincides in fact with the RKHS generated by K, that we have denoted \(H_K\).

Let us recall here, for the sake of completeness, a simple lemma collecting two elementary properties of \(\Psi _X\):

Lemma 1

Let \(\Psi _X(U)\) be as defined in (3). Then,

-

(a)

\(\Psi _X\) is injective.

-

(b)

\(\Psi _X(X(t))(\cdot )=K(\cdot , t)\), for all \(t\in I\). Equivalently, \(\Psi _X^{-1}[K(\cdot ,t)]=X(t)\).

Proof

To show (a), let \(U,V\in L_X\) be such that \(\Psi _X(U)(t)=\Psi _X(V)(t)\), for all \(t\in I\). Then, \(\mathbb {E}[(U-V)X(t)]=0\), for all \(t\in I\), what implies \(U-V\in L_X^{\perp }\). Since we also have \(U-V\in L_X\), we get \(U=V\); recall that in \(L^2\) spaces we identify those functions that coincide almost surely. Property (b) is obvious from the definition. \(\square \)

As a consequence of this result \(\Psi _X: L_X \rightarrow \Psi _X(L_X)\) is a bijection (Loève’s isometry). Observe that by Lemma 1(b), all the finite linear combinations \(\sum _{j=1}^p \beta _j K(\cdot , t_j)\) belong to \(\Psi _X(L_X)\).

The inner product in \(L_X\) induces an inner product in \(\Psi _X(L_X)\): given \(f, g\in \Psi _X(L_X)\), define

It turns out that \(\Psi _X(L_X)\), endowed with \(\langle \cdot , \cdot \rangle \), is a Hilbert space. Once we endow \(\Psi _X(L_X)\) with this structure, the mapping \(\Psi _X\) is a linear, bijective, and inner product preserving operator between \(L_X\) and \(\Psi _X(L_X)\); this accounts for the word “isometry”.

On the other hand, it is well-known (see, e.g., Appendix F in Janson (1997) for details) that, given a positive semidefinite function \(K:I\times I\rightarrow {\mathbb R}\) (called “reproducing kernel”), there is a unique Hilbert space, generated by the linear combinations of the form \(\sum _j\beta _jK(\cdot ,t_j)\). This space is usually called the Reproducing Kernel Hilbert Space associated with K.

Let us recall also the following simple result, that shows that \(\Psi _X(L_X)\) coincides in fact with the RKHS \(H_K\) generated by the covariance function K of the process \(X=X(t)\).

Proposition 1

The Hilbert space \(\Psi _X(L_X)\) and the covariance function K satisfy the following two properties:

-

(a)

For all \(t\in I\), \(K(\cdot , t)\in \Psi _X(L_X)\).

-

(b)

Reproducing property: for all \(f\in \Psi _X(L_X)\) and \(t\in I\), \(\langle f, K(\cdot ,t)\rangle = f(t)\).

Proof

(a) follows directly from Lemma 1(b). To prove (b),

The first equality is also due to Lemma 1(b). \(\square \)

Finally, from the uniqueness result mentioned above, we conclude that the space (\(\Psi _X(L_X),\langle \cdot ,\cdot \rangle )\) coincides with the RKHS \((H_K,\langle \cdot ,\cdot \rangle _K)\) defined at the beginning of this subsection. We are now in a position to recast the general model (2) into a sort of parametric formulation, where the “parameter” belongs to the RKHS generated by the covariance function K of the process \(X=X(t)\). As we will see, this reformulation will be particularly useful to encompass several particular cases of practical relevance.

Theorem 1

Model (2) can be equivalently established in the form

where \(\alpha \in H_K\) and \(\varepsilon \) is a random variable with \(\mathbb {E}(\varepsilon \vert X)=0\) and \(Var (\varepsilon \vert X)=\sigma ^2\).

In addition the “parameter” \(\alpha \) is the cross-covariance function \(\alpha (t)=\mathbb {E}(YX(t))\).

Proof

Formulation (4) follows directly from the definition of \(\Psi _X\) and the fact that this transformation is a bijection between \(L_X\) and \(H_K\); hence \(U_X\in L_X\) if and only if there exists a (unique) \(\alpha \in H_K\) such that \(U_X=\Psi _X^{-1}(\alpha )\).

To prove the second assertion note that, by the reproducing property, \(\alpha (t) = \langle \alpha , K(\cdot ,t)\rangle _K \) for all \(t\in I\), and hence

because \(\varepsilon \in L_X^{\perp }\). \(\square \)

As a consequence, the RKHS \(H_K\) is a fairly natural parametric space for our general linear regression model.

Remark 1

Let us note that model (4) was already considered, with a different notation, in the paper by Berrendero et al. (2019). Indeed, the inverse Loève transformation \(\Psi _X^{-1}(\alpha )\) is sometimes denoted \(\langle \alpha , X\rangle _K\) (or \(\langle X,\alpha \rangle _K\)). This is somewhat of a notational abuse, as typically the trajectories of the process do not belong to the RKHS \(H_K\); see Berrendero et al. (2019) for details. Still, the notation is often convenient, so that we can also use the following expression to formulate the RKHS-model

As mentioned above, we assume throughout, by simplicity, \(\mathbb {E}(X(t))=0\). The general case can be treated by adding an intercept term \(\beta _0\) on the right-hand side of (6) and using the estimator \(\alpha \in H_K\) derived from (6) to estimate the additional parameter \(\beta _0\) by a standard minimum squares procedure.

3 Some important particular cases

The above mentioned work by Berrendero et al. (2019) focusses in the model (4)–(6) from the point of view of its application to variable selection topics. In the present section, we go further in the study of such model by showing that other several commonly used models appear just as particular cases. In Sect. 4 we address the problem of estimating the “regression parameter” \(\alpha \) and in Sect. 6 we carry out some numerical experiments.

3.1 Finite dimensional models: a setup for variable selection problems

When there are infinitely many regressors (which is the case in functional regression problems), several procedures of variable selection are available (see Berrendero et al. (2019) for details) for replacing the whole set of explanatory variables with a finite, carefully chosen, subset of these variables. The following proposition characterizes when it is possible to apply these procedures without any loss of information at all.

Proposition 2

Under model (4)–(6), there exist \(X(t^*_1),\ldots ,X(t_p^*)\in \{X(t):\, t\in I\}\) and \(\beta _1,\ldots ,\beta _p\in \mathbb {R}\) such that \(Y=\beta _1X(t^*_1)+\cdots + \beta _pX(t^*_p)+\varepsilon \) if and only if for all \(t\in I\), \(\alpha (t) = \beta _1K(t,t_1^*) + \cdots + \beta _pK(t,t_p^*)\).

Proof

By Lemma 1(b), \(Y=\beta _1X(t^*_1)+\cdots + \beta _pX(t^*_p)+\varepsilon \) if and only if \(\Psi _X^{-1}(\alpha ) = \beta _1\Psi _X^{-1}(K(\cdot , t^*_1))+\cdots + \beta _p\Psi _X^{-1}(K(\cdot , t^*_p))\), what in turn happens if and only if \(\alpha (t) = \beta _1K(t,t_1^*) + \cdots + \beta _pK(t,t_p^*)\). \(\square \)

3.2 The classical \(L^2\)-model

For \(I=[0,1]\) assume that \(X=\{X(t):\, t\in I\}\) is an \(L^2\) random process and Y a response variable such that the RKHS linear model (2) or, equivalently (4) or (6), holds. To gain some insight, let us illustrate this with an example, beyond the finite-dimensional models considered in the previous subsection.

Example (Brownian regressors): When \(X=\{X(t):\, t\in [0,1]\}\) is a standard Brownian Motion (\(K(s,t)=\min \{s,t\}\)) it can be shown

and \(\langle \alpha _1,\alpha _2\rangle _K=\langle \alpha '_1,\alpha '_2\rangle _2\). It can also be proved that \(\Psi _X^{-1}(\alpha )\) is given by Itô’s stochastic integral, \(\Psi _X^{-1}(\alpha ) = \int _0^1\alpha '(t) dX(t)\); for these results see Janson (1997), Example 8.19, p. 122. Thus, in this case, the linear model (2) or (4) reduces to \(Y= \int _0^1\alpha '(t) dX(t) + \varepsilon \).

Our goal in this subsection is to analyse under which conditions the RKHS model (2) or (4) entails the \(L^2\)-model (1). To do this, we need to recall some basic facts about the RKHS space associated with K. Let us denote by \(\mathcal {K}:L^2[0,1]\rightarrow L^2[0,1]\) the integral operator defined by K, that is

Recall that we are assuming throughout that K is continuous. Under this condition, it is well-known that \(\mathcal {K}\) is a compact and self-adjoint operator. The following proposition is a standard result in the RKHS theory. See, e.g., (Cucker and Zhou 2007, Corollary 4.13) for a proof and additional details.

Proposition 3

Assume \(I=[0,1]\) and K is continuous. Let \(\lambda _1\ge \lambda _2\ge \cdots \) be the non-null eigenvalues of \(\mathcal {K}\) and let \(e_1, e_2,\ldots \) be the corresponding unit eigenfunctions. Then, the RKHS corresponding to K is

endowed with the inner product \(\langle f,g\rangle _{K}=\sum _{i=1}^\infty \langle f,e_i\rangle _2 \langle g,e_i\rangle _2/\lambda _i\).

Thus, the membership to \(H_K\) can be understood as a “regularity property” established in terms of a very fast convergence to zero of the Fourier coefficients \(\langle f,e_i\rangle _2\). This is just an alternative, equivalent formulation for the definition of \(H_K\) given at the beginning of Sect. 2.1. When the kernel K is continuous, both \(\mathcal {K}\) and \({\mathcal K}^{1/2}\) can be considered as operators from \(L^2[0,1]\) to the space \(\mathcal {C}[0,1]\) of continuous functions. Expression (7) must be understood in this sense; see (Cucker and Zhou 2007, Th. 2.9 and Corollary 4.13).

Now, let us go back to the classical functional linear regression model (1). We will show that (1) appears as a particular case of our general model (4) if and only if the “slope function” \(\alpha \) in (4) is regular enough to belong to the image subspace \(\mathcal {K}(L^2[0,1])\) which, by Proposition 3, is a subset of \(H_K\). The formal statement is given in the following proposition.

Proposition 4

If the \(L^2\)-based model (1) holds for some slope function \(\beta \in L^2[0,1]\), then it can be formulated as an RKHS-based model (4) whose slope function is \(\alpha = \mathcal {K}\beta \). Conversely, if the RKHS model (4) holds for some \(\alpha \in H_K\) and there exists \(\beta \in L^2[0,1]\) such that \(\alpha = \mathcal {K}\beta \), then the model can be reformulated as an \(L^2\)-model such as (1) with slope function \(\beta \).

Proof

The proof is essentially the same as that of Th. 1 in Berrendero et al. (2022), an analogous result in the framework of the functional logistic regression model.

\(\square \)

Observe that the difference between (4) and (1) is not just a minor technical question. There are important values of the parameter \(\alpha \) such that \(\alpha \in H_K\) but \(\alpha \ne \mathcal {K}\beta \) for all \(\beta \). This is the case, for example, of finite linear combinations of the form \(\beta _1K(\cdot , t_1)+\cdots + \beta _pK(\cdot ,t_p)\), which are important because they allow us to include finite dimensional regression models (also called impact point models in the literature on functional regression) as particular cases of the general model (see Proposition 2 above).

The procedures to fit model (1) very often involve to project \(X=\{X(t):\, t\in [0,1]\}\) on a convenient subspace of \(L^2[0,1]\). More precisely, given \(\{u_j:\, j = 1,2,\ldots \}\), an arbitrary orthonormal basis of \(L^2[0,1]\), it is quite common to use as regressor variables the projections of \(X=\{X(t):\, t\in [0,1]\}\) on the finite dimensional subspace spanned by the first p elements of the basis. This amounts to replace the whole trajectory with \(\langle X, u_1\rangle _2 u_1 +\cdots +\langle X, u_p\rangle _2 u_p\). This method will work fine whenever \(\int _0^1 X(t)\beta (t) dt \approx \sum _{j=1}^p \beta _j \langle X, u_j\rangle _2\), where \(\beta _j = \langle \beta , u_j\rangle _2\). More precisely, this projection-based model would be as follows,

where \(\beta _1,\ldots ,\beta _p \in \mathbb {R}\) and \(\varepsilon \in L_X^{\perp }\). A natural question to ask is when there is no loss in using the projection instead of the whole trajectory, and how is this situation characterized in terms of the parameter \(\alpha \) in (4). The answer is given by Proposition 5 below. Its proof is completely similar to that of the analogous result Theorem 2 (b) in Berrendero et al. (2022).

Proposition 5

If model (8) holds, then model (4) also holds. Conversely, if (4) holds and \(\alpha \) belongs to the subspace spanned by \(\{\mathcal {K}u_1,\ldots ,\mathcal {K}u_p\}\) then (8) holds.

An important particular case is functional principal component regression (FPCR). In FPCR, the orthonormal basis is given by \(u_j = e_j\), the eigenfunctions of \(\mathcal {K}\). Then, \(\mathcal {K}e_j = \lambda _j e_j\), for \(j=1,\ldots ,p\), and the condition in Proposition 5 simply states that \(\alpha \) must belong to the subspace spanned by \(\{e_1,\ldots ,e_p\}\).

4 Estimation and prediction in the RKHS-model

We now focus on the main target of this work, that is, the RKHS-based functional model defined (with three alternative notations) in (2), (4) or (6). Our aim will be to estimate the functional parameter \(\alpha \in H_K\) based on a sample of iid observations \((X_i,Y_i)\), \(i=1,\ldots ,n\) with \(X_i=\{X_i(t):\, t\in [0,1]\}\). We will also address the prediction of the response variable based on the estimation of \(\alpha \).

4.1 Different approaches to the estimation of \(\alpha \)

In short, our aim is to explore two “natural ways” of estimating \(\alpha \). We will first consider, in Sect. 4.2, an estimator based on regularization, denoted \(\check{\alpha }\). Though this method is conceptually meaningful and has some practical interest, it suffers from the serious limitation of assuming the knowledge of the covariance structure of the underlying process. Then, in Sect. 4.3 we will consider our main proposal, denoted \(\hat{\alpha }_p\), which relies on the idea of approximating our model (4)–(6) by a sequence of finite-dimensional linear models. Its consistency is analysed in Theorem 2, the main result of this work.

4.2 An estimator based on regularization

The interpretation of \(\alpha \) as a covariance given by Eq. (5) suggests a natural way to estimate it. We could just use the sample covariance function,

Unfortunately, \(\mathbb {P}(\tilde{\alpha } \in H_K)=0\) (see Lukić and Beder (2001)) whereas we are assuming \(\alpha \in H_K\). Also, the natural idea of projecting \(\tilde{\alpha }\) on \(H_K\) (to obtain the element in \(H_K\) “closest” to \(\tilde{\alpha }\)) does not work since \(H_K\) is not in general closed and might even be dense in \(L^2[0,1]\); in fact, this is the case when all the eigenvalues of \(\mathcal {K}\) are strictly positive, see (Cucker and Zhou 2007, Remark 4.9, p. 59). A common way of circumventing this problem is to get a sort of “quasi-projection”, by penalizing the \(L^2\)-distance with a term accounting for the “roughness” of the quasi-projection, as measured by the norm in \(H_K\). This idea is often referred to as Tikhonov regularization. It leads to the following estimator of \(\alpha \):

where \(\gamma _n>0\) is a sequence of regularization parameters depending on the sample size. It turns out that \(\check{\alpha }\) has the following explicit expression:

where \(\mathcal {K}\) is the integral operator defined by the kernel K; see (Cucker and Zhou 2007, p. 139).

Note that (9) relies on the previous knowledge of the true covariance operator \(\mathcal {K}\). This could be the case in some particular models (e.g., Lindquist and McKeague (2009)) but, in general, such assumption is somewhat restrictive. In any case, the consistency in the RKHS norm of this estimator is established in the following result.

Proposition 6

Let \(\gamma _n\rightarrow 0\) such that \(n\gamma ^2_n\rightarrow \infty \), then \(\Vert \check{\alpha }-\alpha \Vert _K^2\rightarrow 0\) in probability.

Proof

To prove that \(\Vert \check{\alpha }-\alpha \Vert _K\rightarrow 0\) in probability, observe that since \(\alpha \in H_K\), for all \(\epsilon >0\) there exists \(N=N(\epsilon )\) such that

For this value of N we have

We will look at each term in the expression above. For the first one, we have:

Now, observe that from Mourier’s SLLN (see e.g. Theorem 4.5.2 in Laha and Rohatgi (1979) p. 452) \(\Vert \tilde{\alpha }-\alpha \Vert _2\rightarrow 0\) almost surely (a.s.), and let us define

Then, for large enough n, with probability one,

We have used Cauchy–Schwarz inequality in the first inequality above. Similarly, we also have, for large enough n,

The second term in (11) satisfies \(\Vert \sum _{j=1}^N \langle \tilde{\alpha }-\alpha ,e_j\rangle _2e_j\Vert ^2_K \le N\lambda _N^{-1}\Vert \tilde{\alpha }-\alpha \Vert ^2_2<\epsilon ,\) for large enough n, with probability one. For the third term in (11), let \(\sum _{j=1}^\infty \lambda _j:=C<\infty \). Then,

in probability, since we are assuming \(n\gamma _n^2\rightarrow \infty \) and \(n\Vert \tilde{\alpha }-\alpha \Vert _2^2\) is bounded in probability by the Central Limit Theorem. Finally, the fourth term in (11) is also bounded by \(\epsilon \) using (10):

\(\square \)

4.3 RKHS-consistent estimation and prediction

In the previous subsection we have considered a penalized estimator \(\check{\alpha }\) for the slope parameter \(\alpha \) and we have established its RKHS-consistency. In this subsection we address the RKHS-based estimation of \(\alpha \) using a different strategy: we will use a discrete approximation of the linear model itself, taking advantage of the RKHS structure. As it turns out, predictions based on such estimator can be made in the natural way with no need of knowing the covariance function, thanks to Loève’s isometry. The idea is to approximate the RKHS model (4) by a sequence of finite dimensional models of type of those considered in Proposition 2, based on \(p_n\)-dimensional marginals \((X(t_{1,p}),\ldots ,X(t_{p,p}))\), obtained by evaluating the process \(X=\{X(t):t\in [0,1]\}\) at the grid points \(T_p=\{t_{j,p}\}\), where \(p=p_n\). The corresponding sequence of least squares estimators of the slope function \(\hat{\alpha }_p\) will hopefully provide a consistent sequence of estimators of the true slope function \(\alpha \) in (4). This idea is next formalized.

We will use the following lemma (which follows from Theorem 6E in Parzen (1959)), that states that the function \(\alpha \) can be approximated (in the RKHS norm) by a finite linear combination of the kernel function K, evaluated at points of a partition of [0, 1].

Lemma 2

Let \(\alpha \in H_K\). Let us consider \(T_p=\{t_{j,p}:\, j=1,\ldots ,p\}\) where \(0\le t_{1,p}\le \dots \le t_{p,p}\le 1\), is an increasing sequence of partitions of [0, 1], i.e, \(T_p\subset T_{p+1}\), such that \(\overline{\cup _pT_p}=[0,1]\). Then, there exist \(\beta _{1,p},\dots ,\beta _{p,p}\) such that, \(\big \Vert \alpha (\cdot )-\sum _{j=1}^p \beta _{j,p} K(t_{j,p},\cdot )\big \Vert _K^2\rightarrow 0,\ \text{ as } p\rightarrow \infty .\)

Now our estimator is defined by the ordinary least squares estimator of the coefficients \(\beta _{1,p},\dots ,\beta _{p,p}\). To be more precise, let us denote

where \(t_{1,p},\dots ,t_{p,p}\) are chosen as indicated in Lemma 2 and, for \(j=1,\dots ,p\), \(\hat{\beta }_{j,p}\) are the ordinary least squares estimators (based on a sample of size n) of the regression coefficients in the p-dimensional linear model

To prove the almost sure consistency of the estimator we will need to impose a condition of sub-Gaussianity. Let us recall that a random variable Y with \(\mathbb {E}(Y)=0\) is said to be sub-Gaussian with (positive) proxy constant \(\sigma ^2\) (we will denote \(Y\in SG(\sigma ^2)\)) if the moment generating function of Y satisfies \(\mathbb {E}\left( \exp (sY)\right) \le \exp (\sigma ^2s^2/2)\), for all \(s\in {\mathbb R}\). It can be seen that the tails of a random variable \(Y\in SG(\sigma ^2)\) are lighter than or equal to those of a Gaussian distribution with variance \(\sigma ^2\), i.e. \({\mathbb P}(\vert Y\vert >t)\le 2\exp (-t^2/(2\sigma ^2))\) for all \(t>0\). A p-dimensional random vector \(\textbf{X}\) is said to be sub-Gaussian with proxy constant \(\sigma ^2\) if \(\textbf{X}^\prime v\in SG(\sigma ^2)\) for all \(v\in {\mathbb R}^d\) with \(\Vert v\Vert =1\). Observe that if \(\textbf{X}\) is a p-dimensional random vector \(\textbf{X}=(X_1,\ldots ,X_p)\) and the \(X_i\) are independent with \(X_i\in SG(\sigma ^2)\) and sub-Gaussian then \(\textbf{X}\) is sub-Gaussian with proxy constant \(\sigma ^2\) as well. See (Rigollet and Hütter 2017, Ch. 1) for details.

Theorem 2

Assume the RKHS-based linear model \(Y_i=\langle X,\alpha \rangle _K+ \epsilon _i\) for \(i=1,\ldots ,n\), as defined in (2), (4) or (6). Let us consider a sequence of approximating p-dimensional models (with \(p=p_n\)) as defined in (13). Assume that

-

(i)

The error variables \(e_{i,p}\) in the p-dimensional models are iid and sub-Gaussian, \(SG(\sigma ^2_p)\) with \( \sigma ^2_p\ge C_0\) for all p and some \(C_0>0\).

-

(ii)

The random variable \(\sup _{t\in [0,1]} X(t)\) has sub-exponential tails, that is \(\mathbb {P}(\sup _{t\in [0,1]} X(t)> s)\le C_1\exp (-C_2s^2)\) for some constants \(C_1,C_2>0\) and for all \(s>0\).

-

(iii)

We have \(p\rightarrow \infty \), as \(n\rightarrow \infty \), in such a way that there exists \(C_3>0\) such that \(n(\gamma _{p,p})^2/(p^2\log ^3 n)\rightarrow C_3\), where \(\gamma _{p,p}\) is the smallest eigenvalue of the covariance matrix, \(K_{T_p}\), of \((X(t_{1,p}),\ldots ,X(t_{p,p}))\).

Then, \(\nu _n\Vert \hat{\alpha }_p-\alpha _p\Vert _K^2\rightarrow 0\) a.s., for all \(\nu _n\rightarrow \infty \) such that \(n\gamma _{p,p}/(p^2\nu _n\log n)\rightarrow C_4>0\). In addition, as a consequence of Lemma 2, \(\Vert \hat{\alpha }_p-\alpha \Vert _K^2=\max \{\nu _n^{-1},\mathcal {O}(\Vert \alpha -\alpha _p\Vert _K^2)\}\ \text{ a.s. }\)

The proof of this theorem is deferred to the appendix as it is a bit more technical than those of the previous results in the paper. Let us now discuss the real extent of this result by analysing how restrictive are the required assumptions.

4.4 Some remarks on the assumptions of Theorem 2

Clearly assumption (i) holds if the errors \(e_i\) are Gaussian, which is a common assumption in regression theory. But it is also satisfied by many other usual centred distributions such as those of compact support or finite mixtures of centred Gaussian distributions.

Regarding assumption (ii), it is fulfilled, for example, when the process \(X=\{X(t):t\in [0,1]\}\) is Gaussian. To see this, define \(Y=\sup _{s\in [0,1]}\vert X(s)\vert \) and note that, for any \(t>0\), \({\mathbb P}(\sup _{s\in [0,1]}X(s)>t)\le {\mathbb P}(Y>t)\). Now, according to Theorem 5 in Landau and Shepp (1970), there is some \(\epsilon >0\) such that \({\mathbb E}(e^{\epsilon Y^2})<\infty \). But this entails

Therefore, condition (ii) is fulfilled with \(C_1={\mathbb E}(e^{\epsilon Y^2})\) and \(C_2=\epsilon \).

Finally, hypothesis (iii) in Theorem 2 is satisfied for the case of processes with stationary and independent increments and equispaced impact points \(t_{j,p}\), as stated in the following proposition.

Proposition 7

Let \(\{W(t):t\in [0,1]\}\) be a stochastic process with stationary and independent increments, such that \(\mathbb {E}(W^2(t))<\infty \) and \(\mathbb {E}(W(t))=0\) for all \(t\in [0,1]\). Then for all \(\delta >0\), \(p^{1+\delta }\gamma _{p,p}\rightarrow \infty \), \(\gamma _{p,p}\) being the smallest eigenvalue of \(K_{T_p}\), the covariance matrix of the random vector \((W(1/p),\dots ,W(1))\).

Proof

Let us denote, with some notational abuse, \(W=(W(1/p),\dots ,W(1))\), \(t_i=i/p\), and \(v=(v_1,\dots ,v_p)\). Let us introduce the \(p\times p\) matrix A, such that WA is the \(1\times p\) row vector \((W(1/p),W(2/p)-W(1/p),\dots , W(1)-W(1-1/p))\), that is \(A=(a_{ij})\) where \(a_{ii}=1\) for \(i=1,\dots ,p\), \(a_{i-1,i}=-1\) for \(i=2,\dots ,p\) and \(a_{ij}=0\) otherwise. The coordinates of WA are independent random variables. Then, for all v,

Since A is invertible there exists v with \(\Vert v\Vert =1\) such that \(w:=Av\) fulfils \(K_{T_p}w=\gamma _{p,p}w\). Then

From where it follows that \(\gamma _{p,p}\ge \Vert A\Vert _{op}^{-2} \min _{v:\Vert v\Vert =1}\mathbb {E}\vert WAv\vert ^2.\) Since \(\text {Var}(W_{t+s}-W_t)=\sigma ^2\,s\) for all \(0\le t,s\le 1\), such that \(s+t\le 1\), and for some \(\sigma >0\), then, if \(\Vert v\Vert =1\), it follows that

Then \(\gamma _{p,p}\ge \sigma ^2/(p\Vert A\Vert ^2_{op})\). Lastly, \(\Vert A\Vert ^2_{op}=1/p+ (4/p)(p-1)\), (because the maximum of \(\Vert Av\Vert \) subject to \(\Vert v\Vert =1\) is attained at \(v_i=(-1)^{i+1}/\sqrt{p}\)). \(\square \)

Remark 2

The class of processes with stationary independent increments includes many counting processes and the Brownian Motion. Putting together the condition \(n(\gamma _{p,p})^2/(p^2\log ^3 n)\rightarrow C_3\) for some \(C_3>0\), imposed in Theorem 2 and the conclusion obtained in Proposition 7, it turns out that a choice of type \(p=(n/\log ^3 n)^{1/5}\) for p would be sufficient to ensure the applicability of Theorem 2.

To conclude this section, we provide an interesting interpretation of Theorem 2 in terms of prediction.

Theorem 3

Under the conditions of Theorem 2, we have that the general regression function of Y with respect to X, \(m(X)=\mathbb {E}(Y\vert X)\), can be approximated in \(L^2(\Omega )\) from the prediction functions derived from the finite-dimensional models, that is as \(p=p_n\rightarrow \infty \).

Proof

As a consequence of the assumed RKHS model, we have \(m(X)=\mathbb {E}(Y\vert X)=\Psi _X^{-1}(\alpha ):=\langle \alpha ,X\rangle _K\). Now, the result follows from Loève’s isometry, since

and \(\Vert \hat{\alpha }_p-\alpha \Vert _K\rightarrow 0\), a.s., as a consequence of Theorem 2. \(\square \)

In order to properly interpret this result, let us recall that the conditional expectation \(g(X)={\mathbb E}(Y\vert X)\) is known to be the projection of Y on the Hilbert subspace of \(L^2(\Omega )\) of random variables Z of the form \(Z=h(X)\) with \({\mathbb E}(Z^2)<\infty \); see e.g. (Laha and Rohatgi 1979, p. 382). In other words, \({\mathbb E}(Y \vert X)\) is the minimizer in Z of \(\Vert Y-Z\Vert ^2_{L^2(\Omega )}={\mathbb E}(Y-Z)^2\) when Z is in the space of all rv’s of type \(Z=h(X)\) with \({\mathbb E}(Z^2)<\infty \). In this sense \(m(X)={\mathbb E}(Y \vert X)\) could be considered in a very precise way as the “best possible prediction (in the sense of quadratic mean error) of Y in terms of X”. In Theorem 3 it is shown that we are able to asymptotically approach such m(X). Note that these finite-dimensional predictions considered here do not require the knowledge of the covariance function K.

5 The coefficient of determination in the functional case

The coefficient of determination, often denoted \(R^2\), is commonly used in regression analysis as a measure of the goodness of fit for the regression model under consideration. In this section we will define and motivate, in population terms, the notion of coefficient of determination for our RKHS-based regression model (2)–(4)–(6). We will show as well how this coefficient can be consistently approximated from a natural statistic, analogous to that used in the standard multivariate cases. Let us start by briefly recalling some essentials about the coefficient of determination in more classical situations.

5.1 The linear finite-dimensional case

In multivariate linear regression, the \(X_i\) are random vectors in \({\mathbb R}^d\). If \({\hat{Y}}_i\) stands for the prediction of \(Y_i\) obtained from the usual linear model \(Y_i=\beta _0+\beta _1X_{i1}+\cdots +\beta _pX_{ip}\), the coefficient of determination is given by

which can be interpreted as the portion of total variability explained by the model.

A thorough study of \(R^2\) can be found in (Rencher and Schaalje 2008, Sect. 7.7, 10.4, 10.5). In summary, it can be seen that \(R^2\) is the square (sample) linear correlation coefficient between the observations \(Y_i\) and the predictions \({\hat{Y}}_i\) (obtained with the standard least squares estimations of the parameters). It is as well the maximum (sample) square linear correlation coefficient that can be obtained between the \(Y_i\) and all linear functions of the coordinates of the \(X_i\).

This suggests a population version of \(R^2\), not depending on the sample data, but on the underlying random variable (X, Y) with values in \({\mathbb R}^d\times {\mathbb R}\). It could be defined as the maximum linear correlation coefficient (denoted “Corr") between the response variable Y and linear functionals of X, of type \(\beta 'X=\beta _1X_1+\cdots +\beta _dX_d\). Thus

where the subscript \(\mathcal {L}\) emphasizes that we are considering linear functions of X to predict Y.

5.2 The fully nonparametric case

By analogy with the linear multivariate case, one could consider the approximation of the scalar random variable Y in terms of a general measurable function of X. It is well-known that, if we assume \(\mathbb {E}(Y^2)<\infty \), the function m(x) which minimizes the square prediction error \(\mathbb {E}[(Y-g(X))^2]\) within the class \(\mathcal {G}\) of real measurable functions such that \(\mathbb {E}(g^2(X))<\infty \) is \(m(x)=\mathbb {E}(Y\vert X=x)\). Thus, by analogy with (16), one might define

In Doksum and Samarov (1995) the coefficient of determination is considered in this nonparametric setting under the name of Pearson’s correlation ratio, and it is defined as the following quotient of variances

In view of the ANOVA identity \(\text{ Var }(Y)=\text{ Var }(m(X))+\mathbb {E}(\text{ Var }(Y\vert X)))\), \(\eta ^2\) is nothing but the proportion of total variability explained by the regression model m(X). But, actually, this interpretation is compatible with that behind definition (17), since it is easy to see that \(\eta ^2=\rho ^2_{\mathcal {G},y\vert X}\). Indeed, we have, for any \(g\in \mathcal {G}\),

Note that (*) holds from the fact that \(\mathbb {E}(Y)=\mathbb {E}(m(X))\) and \(Y-m(X)\) is (by the projection properties of the conditional expectation) orthogonal to all functions in \(\mathcal {G}\) and (**) holds from the Cauchy–Schwartz inequality. This shows \(\eta ^2\ge \rho ^2_{\mathcal {G},y\vert X}\). The converse inequality readily follows from the fact that \(m(\cdot )\in \mathcal {G}\).

Left panel: prediction errors under Scenario 1 for the RKHS-method, plotted as a function of the number of explanatory variables. The horizontal lines correspond to the prediction errors for the principal components (PC) regression method for different choices of the number of components. Right panel: the dual graph for the PC method as a function of the number of components versus the RKHS-based method for a few choices of the number of components

Left panel: prediction errors under Scenario 2a for the RKHS-method, plotted as a function of the number of explanatory variables. The horizontal lines correspond to the prediction errors for the principal components (PC) regression method for different choices of the number of components. Right panel: the dual graph for the PC method as a function of the number of components versus the RKHS-based method for a few choices of the number of components

5.3 The coefficient of determination in the context of our RKHS-based linear functional model

Now, coming back to the linear functional model (2)–(4)–(6), as a consequence of the above discussion, we define the coefficient of determination by

The following proposition, gives a simple expression for \(\rho ^2_{Y\vert X}\) in terms of the model (6),

Proposition 8

Let us assume the validity of the RKHS-based regression model (2)–(4)–(6), i.e. \(Y=\Psi _X^{-1}(\alpha )+\varepsilon \), with \(\mathbb {E}(\varepsilon \vert X)=0\) and \(\text{ Var }(\varepsilon \vert X)=\sigma ^2\). Then, the coefficient of determination (19) can be expressed as

Proof

For any \(U\in L_X\),

Now, observe that the RKHS-model entails \(\mathbb {E}(Y\vert X)=\Psi _X^{-1}(\alpha )\) and

Indeed, \(\text{ Var }[\mathbb {E}(Y\vert X)]=\text{ Var }[\Psi _X^{-1}(\alpha )]=\mathbb {E}(\Psi _X^{-1}(\alpha )^2)\) which, from Loève’s isometry, equals \(\Vert \alpha \Vert _K^2\) and \(\mathbb {E}[\text{ Var }(Y\vert X)]=\text{ Var }(\varepsilon \vert X)=\sigma ^2\).

Using again Loève’s isometry in (21), we have

where \(\alpha _U\) denotes the image of U in \(H_K\) by Loève’s isometry, that is, \(\alpha _U=\Psi _X(U)\). Finally, from Cauchy–Schwartz inequality

and the bound in the right-hand side is attained when U is such that \(\alpha _U=\alpha \). \(\square \)

Note that, in view of expression (20), \(\rho ^2_{Y\vert X}\) can be interpreted again as the proportion of variance explained by the RKHS linear model (4).

We now address the problem of approximating the (population) functional coefficient of determination \(\rho ^2_{Y\vert X}\) with the corresponding quantities (that we will denote \(\rho _T^2\) for simplicity) in the approximating finite-dimensional models based on the observations \(X(t_1),\dots ,X(t_p)\) on a grid \(T_p:=T=\{t_1,\dots ,t_p\}\subset [0,1]\), with \(t_1<\ldots <t_p\). Denote \(\alpha _T=(\alpha (t_1),\dots , \alpha (t_p))'\) and \(K_{T}\equiv K(t_i,t_j)\) the covariance matrix of \((X(t_1),\ldots ,X(t_p))\). Let \(L_T^2\equiv \text {sp}\{X(t_1),\dots ,X(t_p)\}\), the space of all possible linear combinations of \(X(t_1),\dots ,X(t_p)\).

Left panel: prediction errors under Scenario 2b for the RKHS-method, plotted as a function of the number of explanatory variables. The horizontal lines correspond to the prediction errors for the principal components (PC) regression method for different choices of the number of components. Right panel: the dual graph for the PC method as a function of the number of components versus the RKHS-based method for a few choices of the number of components

Left panel: prediction errors under Scenario 3 for the RKHS-method, plotted as a function of the number of explanatory variables. The horizontal lines correspond to the prediction errors for the principal components (PC) regression method for different choices of the number of components. Right panel: the dual graph for the PC method as a function of the number of components versus the RKHS-based method for a few choices of the number of components

In accordance to the previous discussion, the finite dimensional coefficient of determination, can be defined as

Then, the random variable U attaining this maximum is the best possible predictor of Y using a linear combination of the variables \(X(t_1),\dots , X(t_p)\). The following proposition establishes the convergence of \(\rho _T^2\) to \(\rho ^2_{Y\vert X}\), as \(p=p_n\rightarrow \infty \).

Proposition 9

Under the indicated RKHS-linear model (2), assume that the covariance function K is continuous in \([0,1]^2\) and the matrix \(K_T\) defined above is invertible. Then,

-

(a)

$$\begin{aligned} \rho _T^2=\frac{\alpha _T'K_T^{-1}\alpha _T}{\sigma ^2+\Vert \alpha \Vert _K^2}, \end{aligned}$$(24)

where \(\alpha _T=(\alpha (t_1),\ldots ,\alpha (t_p))'\) and \(\alpha (t)=\mathbb {E}(YX(t))\).

-

(b)

Assume further that the sequence \(T_p\) is increasing (\(T_p\subset T_{p+1}\)) and the set \(\bigcup _{p=1}^\infty T_p\) is dense in [0, 1]. Then

$$\begin{aligned} \lim _{p\rightarrow \infty }\rho _T^2=\rho _{Y\vert X}^2. \end{aligned}$$(25)

Proof

(a) Recall that \(\rho _T^2\equiv \max _{U\in L_T^2} \text {Corr}^2(Y,U)\). Since \(U\in L_T^2\), there exists \(\beta =(\beta _1,\dots ,\beta _p)\) such that \(U=\sum _{j=1}^p \beta _j X(t_j)\). Then, from \(\alpha (t)=\mathbb {E}(YX(t))\) and (22),

We have to maximize on \(\beta \) the quotient \((\beta '\alpha _T)^2/(\beta 'K_T\beta )\). Using (Mardia et al. 2021, Cor. A.9.2.2, p. 480) we get \(\max _{\beta } (\beta '\alpha _T)^2/(\beta 'K_T\beta )=\alpha '_TK_T^{-1}\alpha _T\), and the result follows.

(b) If \(\alpha \in H_K\) is the maximizer of the expression (19) defining \(\rho ^2_{Y \vert X}\). Since \(K_T:=K(t_i,t_j)_{t_i,t_j\in T}\) is an invertible matrix, we have that, for each \(p=p_n\) and \(t_j\) in the grid T, there exist constants \(\beta _k^p:=\beta _k\) such that

so that \(\alpha _T=K_T\beta _T\) and \(\beta _T=K_T^{-1}\alpha _T\).

Now, the result is a consequence of (Parzen 1959, Th. 6E). Indeed, using expression (6.26) in that paper (for the particular case \(f=g=\alpha \)), we have

(note, that in Parzen’s notation \(\langle \alpha ,\alpha \rangle _p:=\sum _{k=1}^p \beta _k\alpha (t_k)\)). Thus, from (26),

which proves (25).

\(\square \)

We now consider the problem of estimating \(\rho _{Y\vert X}^2\) from the sample data \((X_i(\cdot ),Y_i)\), \(i=1,\ldots ,n\). We will show that the sample versions of the coefficients of determination (23) corresponding to the “approximating models” provide a consistent estimation of \(\rho _{Y\vert X}^2\).

First, recall that under the assumed model (4), \(Y_i=\langle X_i,\alpha \rangle _K+\varepsilon _i\), we have \(\text{ Var }(Y_i)=\Vert \alpha \Vert ^2_K+\sigma ^2\). Then, a natural estimator of \(\rho _{Y\vert X}^2\) would be

where \({\hat{Y}}_i:={\hat{Y}}_{ip}\) is the standard prediction of \(Y_i\) obtained form the finite-dimensional approximating linear model based on \(X(t_1),\ldots ,X(t_p)\). We next show the almost sure consistency of this estimator.

Theorem 4

Under the hypotheses of Theorem 2,

as \(p=p_n\rightarrow \infty \).

Proof

From (22), \(\mathbb {E}(Y_i^2)=\sigma ^2+\Vert \alpha \Vert _K^2\). So, from the Strong Law of Large Numbers,

Let us now prove that \((1/n)\sum _{i=1}^n {\hat{Y}}_i^2\rightarrow \Vert \alpha \Vert _K^2\) a.s. Indeed, following the notation in the proof of Theorem 2 (see the Appendix below),

From Theorem 3, \(\Vert \hat{\alpha }_p\Vert ^2_K\rightarrow \Vert \alpha \Vert ^2_K\) a.s. Let \(\epsilon >0\), from Lemma 5 (see the proof of Theorem 2 in the Appendix),

It follows that

So it is enough to prove that \(\Vert \hat{\beta }_p\Vert ^2 \gamma _{p,p}\) is bounded from above independently of p. But \(\Vert \hat{\beta }_p\Vert ^2 \gamma _{p,p}\le 2 \Vert \hat{\beta }_p-\beta _p\Vert ^2 \gamma _{p,p}+2\Vert \beta _p\Vert ^2 \gamma _{p,p}\). Then, since \(\Vert \hat{\beta }_p-\beta _p\Vert ^2\rightarrow 0\) a.s., it is enough to bound \(\Vert \beta _p\Vert ^2\gamma _{p,p}\) from above. But \(\Vert \alpha _p\Vert _K^2=\beta _p'K_{T_p}\beta _p\ge \Vert \beta _p\Vert ^2\gamma _{p,p}\). This, together with the conclusion of Theorem 2, \(\Vert \hat{\alpha }_p\Vert _K^2\rightarrow \Vert \alpha \Vert _K^2\), and (28), concludes the proof.

\(\square \)

6 Some empirical results

We will consider here different examples of functional regression problems in which the goal is to predict a real random variable Y from a functional explanatory variable \(X=\{X(t):\, t\in I\}\). Hence, our sample information is given by n pairs \((X_i,Y_i)\), \(i=1,\ldots ,n\), where \(X_i=\{X_i(t):\, t\in I\}\) are sample trajectories of the process X, and \(Y_i\) are the corresponding response variables.

The overall aim of this section is to check the performance of different finite-dimensional models, based on a few one-dimensional marginals \(X(t_1),\ldots ,X(t_p)\), such as those whose asymptotic behaviour has been analysed in the previous section, versus that of a functional \(L^2\)-based counterpart. More precisely, we will compare the performance of a model of type

with that of

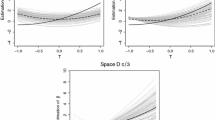

see the beginning of Sect. 6.1 for details. The word “performance” must be mostly understood in terms of “prediction capacity”, as measured by appropriate estimations of the prediction error \(\mathbb {E}[({\hat{Y}}-Y)^2]\), \({\hat{Y}}\) being the predicted value for the response obtained from the fitted model; see Figs. 1, 2, 3, 4.

It is very important to note that the finite-dimensional models of type (29) are viewed here as functional models, in the sense that they are all considered as particular cases of the RKHS-model (4). In practice, this means that we do not assume any prior knowledge about the “impact points” \(t_i\) or the number p of variables. So, in principle, the whole trajectory is available in order to pick up the impact points \(t_i\) we will use. However, given the grid points \(t_i\), the model (29) is handled as a problem of finite-dimensional multiple regression.

Left panel: prediction errors under Scenario 1 for the RKHS-method, plotted as a function of the number of explanatory variables. The horizontal lines correspond to the prediction errors for the principal components (PC) regression method for different choices of the number of components. Right panel: the dual graph for the PC method as a function of the number of components versus the RKHS-based method for a few choices of the number of components

Left panel: prediction errors under Scenario 2a for the RKHS-method, plotted as a function of the number of explanatory variables. The horizontal lines correspond to the prediction errors for the principal components (PC) regression method for different choices of the number of components. Right panel: the dual graph for the PC method as a function of the number of components versus the RKHS-based method for a few choices of the number of components

Left panel: prediction errors under Scenario 2b for the RKHS-method, plotted as a function of the number of explanatory variables. The horizontal lines correspond to the prediction errors for the principal components (PC) regression method for different choices of the number of components. Right panel: the dual graph for the PC method as a function of the number of components versus the RKHS-based method for a few choices of the number of components

Left panel: prediction errors under Scenario 3 for the RKHS-method, plotted as a function of the number of explanatory variables. The horizontal lines correspond to the prediction errors for the principal components (PC) regression method for different choices of the number of components. Right panel: the dual graph for the PC method as a function of the number of components versus the RKHS-based method for a few choices of the number of components

6.1 Simulation experiments

6.2 The models we use to generate the data

We analyse here three scenarios: the first scenario is more or less “neutral” in the comparison of a model based on finite-dimensional marginals versus a \(L^2\)-model. The second one is somewhat favourable to the finite-dimensional models, in the sense that one of these models is the “true one”, though we have no advanced knowledge about the impact points \(t_i\) and the number of them. Finally, the third scenario clearly favours the \(L^2\)-choice since the data are generated according to a model of type (30). In all cases, the aim is to compare the prediction errors obtained with our RKHS-based approach (based on p discretization points), with those corresponding to the classical \(L^2\) method or, more precisely, the popular version of this method based on q principal components; this is the so-called “principal components method” and will be denoted \(L_q^2\) in what follows. We now define these scenarios in precise terms.

- Scenario 1.:

-

We use the function rproc2fdata of the R-package fda.usc (Febrero-Bande and Oviedo de la Fuente 2012) to generate random trajectories according to a fractional Brownian Motion (fBM) \(X=\{X(t):t\in [0,1]\}\) and the aim is to predict \(Y\equiv X(1)\) from the observation of the sample trajectories X(t) for \(t\in [0,0.95]\). Let us recall that the fBM is a Gaussian process whose covariance function is \(K(s,t)=0.5(\vert t\vert ^{2\,H}+\vert s\vert ^{2\,H}-\vert t-s\vert ^{2\,H})\), H being the so-called “Hurst exponent”. We have taken \(H=0.8\).

- Scenario 2.:

-

We have generated the responses \(Y_i\) according to the following two finite-dimensional models (previously considered in Berrendero et al. (2019)), Model 2a: \(Y=2X(0.2)-5X(0.4)+X(0.9)+\varepsilon .\) Model 2b: \(Y=2.1X(0.16)-0.2X(0.47)-1.9X(0.67)+5X(0.85)+4.2X(0.91)+\varepsilon ,\) where in both cases the error variable \(\varepsilon \) has a distribution \(N(0,\sigma )\) with standard deviation \(\sigma =0.2\). The process \(\{X(t):\, t\in [0,1]\}\) follows a centred fBM with \(H=0.8\).

- Scenario 3.:

-

The response variable Y is generated according to a \(L^2\)-based linear model with \( Y=\int _0^1\log (1+4s)X(s)ds+\varepsilon , \) where, again, the trajectories \(\{X(t):\, t\in [0,1]\}\) are drawn from the same fBM indicated above and \(\varepsilon \) has a \(N(0,\sigma =0.2)\) distribution.

Note that these models are only used to generate the data, so that none of the regression models we will compare in our simulations below will incorporate any prior knowledge on the true distribution of \((X(\cdot ), Y)\) whatsoever.

6.3 The specific regression models and estimation methods we compare

Let us go back to our basic question: to what extent the finite-dimensional models (based on marginals \(X(t_i)\)) of type (29) are competitive against a standard, \(L^2\)-based regression model of type (30)? Since we do not assume any previous knowledge on the underlying models generating the data, we will take the “impact points” \(t_1,\ldots ,t_p\) equispaced in the observation interval [0, 1] (or, in the interval [0, 0.95] in Scenario 1 above). The coefficients \(\beta _i\) in this model are estimated by the ordinary least squares method for multiple regression, using the R-function lm. We will check several values of p, from 10 to 60.

As for the \(L^2\)-regression model (30), we will estimate the slope function \(\beta \) and the intercept \(\beta _0\) by the so-called functional principal components (PC) method; this essentially amounts to approximate the model (30) with another finite-dimensional model obtained by projecting the functional data on a given number q of principal functions (i.e., eigenfunctions of the covariance operator). We use the function fregre.pc of the R-package fda.usc. The considered sample sizes are \(n=100\) (black solid lines in the figures), and 200 (red dotted lines). We report, under the different scenarios, the mean over 1000 replications of the “prediction errors” \(\sqrt{(1/k)\sum (Y_i-\hat{Y}_i)^2}\), where \(k=0.2 \,n\) is the size of the random “test sub-samples” we use to evaluate the predictions \({\hat{Y}}_i\). The “training subsamples”, made of the remaining 80% of observations, are used to estimate the regression coefficients for \({\hat{Y}}_i\).

6.4 The simulation outputs

Our results are summarized in Figures 1-4 whose interpretation is as follows: in the left-hand panels, the wiggly curves show the prediction errors of our RKHS-based models as a function of the number p of explanatory variables (which are just one-dimensional marginals of the underlying process). The horizontal lines correspond to the errors obtained with the standard regression model based on different numbers of principal components, including the “optimal one”, assessed by simulation. The graphics in the right-hand panels, are, in some sense, dual: the curves show in this case the estimated prediction errors obtained with the principal components-based regression, as a function of the number of considered components. The horizontal lines correspond to the prediction errors obtained in the RKHS-based model with various “standard" choices of the number of explanatory one-dimensional marginals, including the “optimal" one in the considered equispaced grids. The small square legends in the lower right-hand side of the panels give the corresponding numbers of components (or variables) for the horizontal lines.

These graphics are, hopefully, self-explanatory. The RKHS-based models provide smaller prediction errors in those cases where the underlying model is of RKHS type. In any case, the differences are not very large. As a final, important, remark, let us point out that these comparisons are not completely fair for the RKHS-based models. Indeed, there is a considerable room for optimality in the grids \(t_1,\ldots ,t_p\), without any restriction of equispaced points; see Berrendero et al. (2019). However, this “variable selection approach" entails a heavier computation load and involves some theoretical challenges outside the scope of this work.

Overall, the results are to be expected: in Figs. 2 and 3, corresponding to Scenarios 2a and 2b (favourable to the finite-dimensional approximations) the predictions based on \(\hat{\alpha }_p\) are better than those based on the \(L^2\)-based functional linear model, except for very large values of p where the collinearity effect hampers the estimation. In Scenario 3, see Fig. 4, the situation reverses but, still the finite-dimensional models appear to be competitive for small values of p and large sample sizes.

In Scenario 1 (Fig. 1) the U-shape of the curves of estimated prediction errors is more evident. Still, the finite-dimensional proposals are better than \(L^2_3\) and \(L^2_6\) for the central range of considered values of p. A similar example, included in Appendix B, considers the case of the standard Brownian Motion. Here the conclusions are not far from those of the fractional Brownian Motion (with Hurst index H=0.8) but the more irregular nature of the trajectories is reflected in a larger sensitivity with respect to the specific location of the “design points” \(t_i\).

As a final remark, let us point out that our aim here is not to prove an overall superiority of the RKHS-based models in terms of prediction errors. This would require a much more exhaustive numerical study. Still, our experimental results suggest that the interpretability advantages of the RKHS-based models do not necessarily entail any serious loss in efficiency.

6.5 Real data examples

This is another natural playing field for a fair comparison on the prediction capacity of different regression models.

In all considered cases the sample is randomly divided in two parts: 80% of the observations is used for training (i.e. for parameter estimation) and the remaining 20% is used in order to check the accuracy of the predictions. This random splitting is repeated 100 times. Figures 5, 6 and 7 report the average prediction errors and the (average) adjusted coefficients of determination. In all cases we show in the left panel the average prediction errors of the RKHS model for different values of p across 100 replications. The horizontal line represents the error of the \(L^2\) model, which was fitted by projecting onto the three principal components (this value has been chosen just as a reference). The right panel shows the adjusted \(R^2\) values for both the RKHS and \(L^2\) models, represented by horizontal lines.

6.6 The data sets under study

(a) The Tecator data set. This data set has been used and described many times in papers and textbooks; see, e.g., Ferraty and Vieu (2006). It is available in the R-package fda.usc, see Febrero-Bande and Oviedo de la Fuente (2012). After removing some duplicated data, we have 193 functions obtained from a spectrometric study performed on meat samples in which the near infrared absorbance spectrum is recorded. The response variable is the fat content of the meat pieces. The functions are observed at a grid of 100 points.

An important aspect of this data set is the fact that the derivatives of the sample functions seem to be more informative than the original data themselves. Thus, we have taken into account this feature, using the second derivatives to predict the response variable (obtained by preliminary smoothing of the data. Figure 5 displays the results. All the considered values of p are checked in every run.

(b) The sugar data set. This data set has been previously considered in functional data analysis by several authors; see e.g. Aneiros and Vieu (2014) for additional details. The functions X(t) are fluorescence spectra obtained from sugar samples and the response Y is the ash content, in percentage of the sugar samples. The comparison results of finite-dimensional models versus the \(L^2\)-functional counterpart are shown in Fig. 6. Again the outputs correspond to the averages over 100 replications obtained by randomly selecting 214 (\(80\%\)) data for training and 54 (\(20\%\)) for testing, from the original data.

(c) Population data. For 237 countries and geographical areas, the percentage of population under 14 years for the period 1968-2018 (one datum per year) is recorded. In our experiment, we consider longitudinal data consisting of vectors \((X(1960), X(1961),\ldots ,X(2010))\); the aim is to predict the value eight years ahead. Thus, the response variable is \(Y=X(2018)\). Several theoretical assumptions (for example, independence), commonly used in the linear model, are violated here but, still, our comparisons make sense at an exploratory data level. The outputs can be found in Fig. 7 below. As in the previous examples, they correspond to 100 runs based on random partitions of the data set into 80% training data and 20% test data. Again p denotes the number of years (equispaced in the interval 1960-2010) used as explanatory variables in the finite-dimensional models. Thus for \(p=10\) we consider the years 1960, 1965,...,2010; for \(p=8\) we take 1960, 1967, 1974,...,2009.

7 Conclusions

We explore a mathematical framework, different from the classical \(L^2\)-approach (30), for the problem of linear regression with functional explanatory variable X and scalar response Y. This mathematical formulation includes, as particular cases, the finite-dimensional models (29) obtained by considering as explanatory variables a finite set of marginals \(X(t_i)\), with \(i=1,\ldots ,p\). This would allow us, for example, to compare such models for variable selection purposes (Berrendero et al. 2019) or considering, within a unified framework, the study of asymptotic behaviour of models as the number p of covariates grows to infinity; see e.g. Sur and Candès (2019) for a recent analysis in the logistic regression model. Note also that in the functional case the asymptotic analysis as \(p\rightarrow \infty \) appears more naturally than in the case of general regression studies, since all co-variables \(X(t_i)\) come from the unique, predefined reservoir of the one-dimensional marginals of the process \(X=\{X(t):t\in [0,1]\}\).

While this model, based on the theory of RKHS spaces, has been considered (explicit or implicitly) in several other papers, as mentioned above, we contribute some insights and some new theory that, hopefully, will consolidate this RKHS option as a useful alternative.

From a practical point of view, the fact of encompassing all the finite-dimensional models under a unique super-model (4)–(6) is also relevant in view of the empirical results of Sect. 6: indeed, the outputs of the simulations and the real data examples there show that, somewhat surprisingly, there is often little gain in considering the \(L^2\) functional model (30) instead of the simpler finite-dimensional alternatives (29).

Of course, we do not claim that the \(L^2\)-based regression model (30) should be abandoned in favour of the finite-dimensional alternatives of type (29), since the \(L^2\) model is now well-understood and has proven useful in many examples. We are just suggesting that there are perhaps some reasons to consider the problem of linear functional regression under a broader perspective. In addition, note that the \(L^2\) model appears as a particular case of the general formulation (4)–(6).

In any case, even if we are willing to incorporate the finite-dimensional models (29), according to our suggested approach, the functional character of the regression problem is not lost at all as the proposed global general formulation is unequivocally functional. In practice, this means that, according to our assumptions, the explanatory variables are still functions and we cannot get rid of this fact in the formulation of our problem.

References

Aneiros G, Vieu P (2014) Variable selection in infinite-dimensional problems. Stat Prob Lett 94:12–20

Ash R, Gardner M (1975) Topics in stochastic processes. Academic Press, Cambridge

Berlinet A, Thomas-Agnan C (2004) Reproducing kernel Hilbert spaces in probability and statistics. Kluwer Academic Publishers, New York

Berrendero J, Bueno-Larraz B, Cuevas A (2022) On functional logistic regression: some conceptual issues. Test 32:321–349

Berrendero J, Bueno-Larraz B, Cuevas A (2019) An RKHS model for variable selection in functional linear regression. J Multivar Anal 170:25–45

Bosq D (1991) Modelization, nonparametric estimation and prediction for continuous time processes. In: Roussas G (ed) Nonparametric functional estimation and related topics, NATO ASI Series. Mathematical and physical sciences series C. Springer, New York, pp 509–529

Cardot H, Ferraty F, Sarda P (1999) Functional linear model. Stat Prob Lett 45:11–22

Cucker F, Zhou DX (2007) Learning theory: an approximation theory viewpoint. Cambridge University Press, Cambridge

Cuevas A (2014) A partial overview of the theory of statistics with functional data. J Stat Plan Inference 147:1–23

Doksum K, Samarov A (1995) Nonparametric estimation of global functionals and a measure of the explanatory power of covariates in regression. Ann Stat 23:1443–1473

Febrero-Bande M, Oviedo de la Fuente M (2012) Statistical computing in functional data analysis: the R package fda.usc. J Stat Softw 51:1–28

Ferraty F, Vieu P (2006) Nonparametric functional data analysis: theory and practice. Springer Science and Business Media, New York

Horváth L, Kokoszka P (2012) Inference for functional data with applications. Springer Science and Business Media, New York

Hsing T, Eubank R (2015) Theoretical foundations of functional data analysis. Wiley, New York

Hsing T, Ren H (2009) An RKHS formulation of the inverse regression dimension-reduction problem. Ann Stat 37:726–755

Janson S (1997) Gaussian Hilbert spaces, vol 129. Cambridge University Press, Cambridge

Kneip A, Liebl D (2020) On the optimal reconstruction of partially observed functional data. Ann Stat 48:1692–1717

Kneip A, Poß D, Sarda P (2016) Functional linear regression with points of impact. Ann Stat 44:1–30

Landau HJ, Shepp LA (1970) On the supremum of a Gaussian process. Sankhyā 32:369–378

Laha RG, Rohatgi VK (1979) Probability theory. Wiley, New York

Lindquist MA, McKeague IW (2009) Logistic regression with Brownian-like predictors. J Am Stat Assoc 104:1575–1585

Lukić M, Beder J (2001) Stochastic processes with sample paths in reproducing kernel Hilbert spaces. Trans Am Math Soc 353:3945–3969

Mackey L, Jordan MI, Chen RY, Farrell B, Tropp JA (2014) Matrix concentration inequalities via the method of exchangeable pairs. Ann Prob 42:906–945

Mardia K, Kent J, Bibby J (2021) Multivariate analysis. Probability and mathematical statistics. Academic Press Inc, Cambridge

McKeague IW, Sen B (2010) Fractals with point impact in functional linear regression. An Stat 38:25–59

Parzen E (1959) Statistical inference on time series by Hilbert space methods. CA applied mathematics and statisticas labs. I. Stanford Univ, Stanford

Poß D, Liebl D, Kneip A, Eisenbarth H, Wager TD, Barrett LF (2020) Superconsistent estimation of points of impact in non-parametric regression with functional predictors. J R Stat Soc Ser B 82:1115–1140

Ramsay JO, Silverman BW (2005) Functional data analysis. Springer, New York

Rencher AC, Schaalje GB (2008) Linear models in statistics. Wiley, New York

Rigollet P, Hütter J (2017) High dimensional statistics. Lecture notes. Massachusetts Institute of Technology, Cambridge,Cambridge

Shang Z, Cheng G (2015) Nonparametric inference in generalized functional linear models. Ann Stat 43:1742–1773

Shin H, Hsing T (2012) Linear prediction in functional data analysis. Stoch Process Appl 122:3680–3700

Shin H, Lee S (2016) An RKHS approach to robust functional linear regression. Stat Sin 26:255–272

Sur P, Candès EJ (2019) A modern maximum-likelihood theory for high-dimensional logistic regression. Proc Nat Acad Sci 116:14516–14525

Yuan M, Cai TT (2010) A reproducing kernel Hilbert space approach to functional linear regression. Ann Stat 38:3412–3444

Acknowledgements

This research has been partially supported by Grants PID2019-109387GB-I00 from the Spanish Ministry of Science and Innovation, Grant CEX2019-000904-S funded by MCIN/AEI/ 10.13039/501100011033 and FCE_1_2019_1_156054 from ANII, Uruguay. The comments and criticisms from two reviewers and the Editors are gratefully acknowledged.

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature.

Author information

Authors and Affiliations

Corresponding authors

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Proof of Theorem 2

In what follows, we denote \(\mathcal {X}_p\) the \(n\times p\) data matrix whose (i, j)-entry is \(X_i(t_{j,p})\), \(i=1,\ldots ,n\), \(j=1,\ldots ,p\). Denote also by \(K_{T_p}\), the covariance matrix of \((X(t_{1,p}),\ldots ,X(t_{p,p}))\). Finally, we denote \(\textbf{Y}=(Y_1,\dots ,Y_n)'\) and \(\textbf{e}=(e_{1,p},\dots ,e_{n,p})'\), where \('\) stands for the transpose.

The proof of Theorem 2 relies on the three lemmas stated below.

Lemma 3

Let \(\gamma _{1,p}\ge \gamma _{2,p}\ge \dots \ge \gamma _{p,p}\) be the eigenvalues of \(K_{T_p}\) and \(\hat{\gamma }_{1,p}\ge \hat{\gamma }_{2,p}\ge \dots \ge \hat{\gamma }_{p,p}\) the eigenvalues of \((1/n)(\mathcal {X}_p^\prime \mathcal {X}_p)\). Then, for \(j=1,\dots ,p\), \(\vert \gamma _{j,p}-\hat{\gamma }_{j,p}\vert \le \Vert (1/n)(\mathcal {X}_p^\prime \mathcal {X}_p)-K_{T_p}\Vert _{op}.\)

Proof

This result follows as a direct application of Lemma 3.1 in Bosq (1991), (this is also sometimes called Weyl’s inequality in the literature). \(\square \)

Lemma 4

Let K be a continuous covariance function and let \(T_p\) be a set of grid points as in Lemma 2. Assume that all the eigenvalues of the covariance operator \(\mathcal {K}\) are strictly positive. Then \(\lim _{p\rightarrow \infty }\frac{1}{p}\Vert K_{T_p}\Vert _{op}=0\).

Proof

Assume by contradiction that \(\lim _{k\rightarrow \infty } \Vert K_{T_{p_k}}\Vert _{op}=\lim _{k\rightarrow \infty } \gamma _{1,p_k}=\infty \) for some sequence \(p_k\rightarrow \infty \). Let us denote for simplicity \(p_k=p\). Let the p-dimensional vector \(f_p=(f(t_{1,p}),\dots ,f(t_{p,p}))\) be an eigenvector of \((1/p)K_{T_p}\) associated to \(\gamma _{1,p}\) the largest eigenvalue of \(K_{T_p}\), such that \(\Vert f_p\Vert _{\max } =1\) for all p. Let us define a polygonal function \(g_p:[0,1]\rightarrow \mathbb {R}\) such that \(g_p(t_{i,p})=f(t_{i,p})\), observe that \(\Vert g_p\Vert _{\infty }=\Vert f_p\Vert _{\max }=1\). Let us prove that \(\{g_p\}_p\) is an equicontinuous sequence. Since K(s, t) is continuous, it is also uniformly continuous on \([0,1]^2\). Then, for all \(\epsilon >0\), there exists \(\delta =\delta (\epsilon )>0\) such that \( \vert K(x,y)-K(x',y') \vert <\epsilon \) if \(\Vert (x,y)-(x',y')\Vert _{\max } <\delta \). Let us denote \(\Vert T_p\Vert =\max _{i=1,\dots ,p-1} \vert t_{i+1,p}-t_{i,p} \vert \). Let \(\epsilon >0\) and p large enough such that \(\Vert T_p\Vert <\delta \). Then, we have

where the last inequality follows from \( \vert K(t_{i,p},t_{j,p})-K(t_{i+k,p},t_{j,p}) \vert <\epsilon \) and \(\Vert f_p\Vert _{\max } =1\) for all i, k such that \(1\le i \le p\), \(1\le i+k\le p\) and \(\vert t_{i,p}-t_{i+k,p} \vert <\delta \).