Abstract

Analysis of binary matched pairs data is problematic due to infinite maximum likelihood estimates of the log odds ratio and potentially biased estimates, especially for small samples. We propose a penalised version of the log-likelihood function based on adjusted responses which always results in a finite estimator of the log odds ratio. The probability limit of the adjusted log-likelihood estimator is derived and it is shown that in certain settings the maximum likelihood, conditional and modified profile log-likelihood estimators drop out as special cases of the former estimator. We implement indirect inference to the adjusted log-likelihood estimator. It is shown, through a complete enumeration study, that the indirect inference estimator is competitive in terms of bias and variance in comparison to the maximum likelihood, conditional, modified profile log-likelihood and Firth’s penalised log-likelihood estimators.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Consider a series of q independent pairs of independent binomial random variables \((Y_{i1},Y_{i2})\), with \(Y_{i1}\sim \textrm{Bi}(1,\pi _{i1})\) and \(Y_{i2}\sim \textrm{Bi}(m_{i},\pi _{i2})\) as in Section 4 of Lunardon (2018). Let the success probabilities satisfy \(\pi _{i1}=\exp (\psi +\lambda _{i})/\{1+\exp (\psi +\lambda _{i})\}\) and \(\pi _{i2}=\exp (\lambda _{i})/\{1+\exp (\lambda _{i})\}\), where \(\psi =\log \{\pi _{i1}/(1-\pi _{i2})\}-\log \{\pi _{i2}/(1-\pi _{i2})\}\), the log odds ratio, is the parameter of interest and \(\lambda _{i}=\log \{\pi _{i2}/(1-\pi _{i2})\}\) is the nuisance parameter, \(i=1,\ldots ,q\). This is a stratified setting as in Sartori (2003) where the sample size is \(n=\sum _{i=1}^{q}m_{i}\) and where q is the number of strata and \(m_{i}\) is the ith stratum sample size. We will refer to this exponential family model in canonical form as the binomial matched pairs model, and to the model with \(m_{i}=m=1\) as the binary matched pairs model. This model often arises in case–control studies in medical contexts, where \(y_{i1}\) and \(y_{i2}\) may represent for example the numbers of exposed or experimental persons among one case and \(m_{i}\) controls in the ith stratum and where interest lies in studying the influence of some risk factor or the effect of some treatment. For example, suppose that we have data from a clinical trial evaluating the effectivness of a new Covid vaccine in preventing Covid disease. The data was collected according to a matched case–control design with one case and \(m_{i}\) controls per stratum, where \(i=1,\ldots ,10\), is the number of strata and \(m_{i}\), which is the number of control patients, takes on various values from 3 to 7. On each of 10 days patients testing negative for Covid at a specified time in a hospital were served as subjects. On each day one patient chosen at random formed the experimental group and the remainder were controls. The binary response was whether the patient tested positive or not at the end of a specified period, where testing negative is taken as a “success" and the observed numbers \(y_{i1}\) and \(y_{i2}\) are therefore the numbers of patients in the two groups testing negative for Covid. The object of the analysis is to assess the effect of the drug on the probabilities of success \(\pi _{i1}\) and \(\pi _{i2}\) in the case and control groups, respectively. The parameter of interest \(\psi \) is the difference in the log odds ratio of the case and control groups (for more examples, see Section 1.2 of Cox and Snell 1989).

It is well known, since Neyman and Scott (1948), that in stratified modelling settings the maximum likelihood estimator, derived from the profile log-likelihood, is not in general, a consistent or unbiased estimator of the parameter of interest as the dimension of the nuisance parameter increases while the stratum sample size is kept fixed; this is known as the incidental parameter problem. It is possible to solve this problem, in some cases when the model has a particular structure, like in exponential families in canonical form, using conditional or marginal log-likelihoods (see Sections 4.4 and 4.5 of Pace and Salvan 1997). However, these are not always available and so an alternative is to work with the approximate conditional profile log-likelihood of Cox and Reid (1987), which requires orthogonality of the parameter of interest and the nuisance parameter, or the modified profile log-likelihood of Barndorff-Nielson (1983) whose computation requires a sample space derivative. These are often simple to compute for exponential and composite group families and only involve the observed information matrix for the components of the nuisance parameter which is readily available from direct differentiation (see Section 4.7 of Pace and Salvan 1997) and often provide accurate approximations to conditional or marginal log-likelihoods when they exist. It has been shown through many examples that when the profile log-likelihood performs poorly, approximate conditional and modified profile log-likelihoods can perform much better (see Section 3.1, Example 1 of McCullagh and Tibshirani 1990).

An alternative family of estimators in regular parametric problems was developed in Firth (1993) where the first order bias term is removed from the asymptotic bias of the maximum likelihood estimator by solving a set of adjusted score equations. Firth (1993) considered the case of exponential families with canonical parametrisation, amongst others, and showed that for this family of models, his method is equivalent to maximising a penalised likelihood were the penalty function is the Jeffreys invariant prior. Lunardon (2018) showed that the bias reduction approach of Firth (1993) provides an inferential framework which is, from an asymptotic perspective, equivalent to that for the approximate conditional and modified profile log-likelihoods when dealing with nuisance parameters. The advantage of bias reduction of Firth (1993) is that it can handle the problem of monotone likelihoods for stratified models with categorical responses. Nevertheless, the approach of Firth (1993) is not in general invariant under interest-respecting reparameterizations.

Indirect inference is another class of inferential procedures that appeared in the Econometrics literature in Gourieroux et al. (1993) and has been used for bias reduction of the maximum likelihood estimator and of other estimators. Its simplest version proceeds by subtracting from the maximum likelihood estimator its full bias and evaluating it at the new indirect inference estimator which therefore becomes the solution of an implicit equation. Kuk (1995) describes a simulation based approach for indirect inference by implementing an iterative bias correction of any suitably defined initial estimator, to yield an estimator which is asymptotically unbiased and consistent.

In this paper, we review in Sect. 2 the profile, conditional, modified profile and Firth (1993) penalised likelihood estimators of the log odds ratio. Since the maximum likelihood, conditional and modified profile likelihood estimators inherit the problem of infinite estimates of the log odds ratio, we propose in Sect. 3 a penalised log-likelihood function based on adjusted responses which always yields finite point estimates of the parameter of interest. The probability limit of the adjusted log-likelihood estimator is derived and it is shown that in certain settings the maximum likelihood, conditional and modified profile log-likelihood estimators drop out as special cases of the former estimator. In Sect. 4 we implement indirect inference to reduce the bias of the adjusted log-likelihood estimator, by adapting the method of Kuk (1995) to nuisance parameter settings. The finite-sample properties of all the above estimators are compared through a complete enumeration study in Sect. 5, as in Lunardon (2018) where no simulation is required, followed by a discussion. Finally, a real data set is analysed in Sect. 6.

2 Review of point estimation of the log odds ratio

2.1 Maximum likelihood

Several estimators of the common log odds ratio \(\psi \) have been proposed in the literature. These include the Mantel and Haenszel, empirical logit and Birch estimators (see Breslow 1981; Gart 1971, for a review of these estimators and of their properties). In this section, however, we only consider estimators of the log odds ratio that depend on the data only through the sufficient statistic.

The log-likelihood function for \(\theta =(\psi ,\lambda _{1},\ldots ,\lambda _{q})^{\intercal }\) for the above binomial matched pairs model is (Lunardon 2018, Section 4.1)

This is a linear full exponential family in canonical form (Pace and Salvan 1997, Section 5) with jointly sufficient statistics \(t=\sum _{i=1}^{q}y_{i1}\) and \(s_{i}=y_{i1}+y_{i2}\) for \(\psi \) and \(\lambda _{i}\), respectively. Throughout this section, we consider for simplicity \(m_{i}=m\) with totals \(s_{i}=(m+1)/2\) as in (Sartori 2003, Example 3). In this setting, the constrained maximum likelihood estimator of \(\lambda _{i}\) for a fixed value of \(\psi \), denoted by \({\hat{\lambda }}_{i,\psi }\), will be identical for all \(i=1,\ldots ,q\) and so we set \({\hat{\lambda }}_{i,\psi }={\hat{\gamma }}_{\psi }\). Equivalently, denote the constrained maximum likelihood estimator of \(\psi \) for a fixed value of \(\gamma \) by \({\hat{\psi }}_{\gamma }\). The score equations for the log-likelihood function with respect to \(\gamma \) and \(\psi \) are respectively

The solution of (3) is \({\hat{\psi }}_{\gamma }=\log \{t/(q-t)\}-\gamma \), and on substituting this in (2) and solving for \(\gamma \) we get the maximum likelihood estimator of the nuisance parameter \({\hat{\gamma }}=\log \big [\{q(m+1)-2t\}/\{q(m-1)+2t\}\big ]\). Substituting \({\hat{\gamma }}\) in \({\hat{\psi }}_{\gamma }\) we get the maximum likelihood estimator of the parameter of interest

Note that when \(t=0\) or \(t=q\), \({\hat{\psi }}\) is \(-\infty \) or \(+\infty \), respectively. This is problematic because it means in these extreme situations were all cases ‘succeed’ or ‘fail’ we cannot estimate \(\psi \). Since \(\psi \) is defined as a logarithm of odds ratio, infinite estimates arise when the argument of the logarithm, i.e. the odds ratio is zero. Therefore, a better estimator of \(\psi \) that avoids infinity needs to avoid zero as a possibility for the argument of the log function.

Using the weak law of large numbers, Slutsky’s theorem and the Continuous mapping theorem (Florescu 2014, Section 7), we find that \({\hat{\psi }}\) converges in probability to \(\psi +\log [\{(m+1)\exp (\psi )+m-1\}/\{(m-1)\exp (\psi )+m+1\}]\) as \(q\rightarrow \infty \), and so it is inconsistent. When m is also allowed to increase to \(\infty \), \({\hat{\psi }}\) will tend to \(\psi \). This means that \({\hat{\psi }}\) will be consistent only when both m and q diverge.

Given that the totals \(s_{i}\) are fixed, the maximum likelihood estimator of \(\psi \) depends on the data only through the sufficient statistic \(T=\sum _{i=1}^{q}Y_{i1}\) and so its bias and variance can be calculated exactly using

2.2 Conditional maximum likelihood

The conditional log-likelihood function is based on the distribution of \(Y_{i1}\) given \(S_{i}=s_{i}\) in each stratum. Davison (1988) and Gart (1970) noted that the conditional density of \(Y_{i1}\) given \(S_{i}\) is

This is the noncentral hypergeometric distribution which is obtained by rewriting the left hand side of (7) as \(\textrm{Pr}(Y_{i1}=y_{i1},S_{i}=s_{i})/\textrm{Pr}(S_{i}=s_{i})=\textrm{Pr}(Y_{i1}=y_{i1})\textrm{Pr}(Y_{i2}=s_{i}-y_{i1})/\textrm{Pr}(Y_{i1}+Y_{i2}=s_{i})\), by independence of \(Y_{i1}\) and \(Y_{i2}\), and noting that \(\textrm{Pr}(Y_{i1}+Y_{i2}=s_{i})=\sum _{u=0}^{s_{i}}\textrm{Pr}(Y_{i1}=u)\textrm{Pr}(Y_{i2}=s_{i}-u)\). Because \(y_{i1}\) can only be 0 or 1, the right hand side of (7) can be further simplified to

This shows that \(Y_{i1}\vert S_{i}\) has a Bernoulli distribution with success probability the first term inside the bracket of the right hand side of (8). Taking the logarithm of the product of (8) gives the conditional log-likelihood function which simplifies to

Letting \(m_{i}=m\), \(s_{i}=(m+1)/2\) and differentiating (9) gives the conditional maximum likelihood estimator

When \(t=0\) or \(t=q\), \({\hat{\psi }}_{c}\) is \(-\infty \) or \(+\infty \), respectively. In the setting of Lunardon (2018), i.e. when \(m_{i}=m\) and \(s_{i}=(m+1)/2\), the success probability of the Bernoulli random variable \(Y_{i1}\vert S_{i}\) simplifies to \(\pi =\exp (\psi )/\{\exp (\psi )+1\}\). The distribution of the sufficient statistic T given \(S_{i}\) is therefore Binomial with denominator q and success probability \(\pi \). The conditional distribution of T can also be obtained using the convolution method following (Butler and Stephens 2017, Section 2). This will be particularly useful for general \(m_{i}\) and \(s_{i}\) where the Binomial conditional distribution of T no longer holds. In fact, the conditional distribution of T will be Poisson binomial.

By noting that T converges in probability to \(q\pi \) by the weak law of large numbers, we find that \(\log \{t/(q-t)\}\xrightarrow {p}\psi \) by Slutsky’s theorem and the Continuous mapping theorem, so \({\hat{\psi }}_{c}\) is consistent. As the conditional maximum likelihood estimator depends on the data only through the sufficient statistic, its bias and variance can be calculated using (5) and (6) but replacing \({\hat{\psi }}(T)\) by \({\hat{\psi }}_{c}(T)\).

2.3 Modified profile maximum likelihood

(Davison 2003, Section 12) showed that for a linear exponential family in canonical form, the modified profile log-likelihood function of Barndorff-Nielson (1983) reduces to

where \(l(\psi ,{\hat{\lambda }}_{\psi })\) is the profile log-likelihood obtained from (1) by substituting the constrained maximum likelihood estimator of \(\lambda \) and where \(j_{\lambda \lambda }(\psi ,\lambda )\) is the observed information per observation for the \(\lambda \) components and is given by the negative of the second derivative of the log-likelihood function with respect to \(\lambda \). In the setting \(m_{i}=m\) and \(s_{i}=(m+1)/2\), \(j_{\lambda \lambda }(\psi ,\lambda )\) becomes the \(q\times q\) matrix with ith diagonal element

and zero elsewhere, and where we observed in Sect. 2.1 that the solution of (2), \({\hat{\lambda }}_{\psi }={\hat{\gamma }}_{\psi }\), is not available in closed form. This means that there is no closed form expression for (11) and we calculate the maximum modified profile log-likelihood estimator, \({\hat{\psi }}_{mp}\), numerically and evaluate its bias and variance using (5) and (6), respectively, by replacing \({\hat{\psi }}(T)\) with \({\hat{\psi }}_{mp}(T)\).

2.4 Firth penalized likelihood

When \(\theta =(\psi , \lambda _{1},\ldots ,\lambda _{q})\) is the canonical parameter of an exponential family model like in the model considered here, Firth (1993) showed that the adjusted score equations estimator of \(\theta \) is equivalent to the maximiser of the penalised log-likelihood function

where \(i(\theta )=\textrm{E}\{j(\theta )\}\) is the Fisher information matrix. In the setting \(m_{i}=m\) and \(s_{i}=(m+1)/2\), the second order partial derivatives of \(l(\psi ,\lambda _{i})\) are

Since the above derivatives do not depend on the data, the Fisher information matrix coincides with the observed information and is given by

where \(V_{i1}=\big (\exp (\psi +\lambda _{i})\big )/\big (1+\exp (\psi +\lambda _{i})\big )^2\) and \(V_{i2}=m\big (\exp (\lambda _{i})\big )/\big (1+\exp (\lambda _{i})\big )^2\), \(i=1,\ldots ,q\). The determinant of \(i(\psi ,\lambda _{i})\) is obtained using the standard identity (see, Magnus and Neudecker 2019, Chapter 1, page 28) and simplifies to

Therefore the penalty function that needs to be added to the log-likelihood function is

The score equations for the penalised log-likelihood of Firth (1993), \(l_{*}(\psi ,\lambda _{i})\), with respect to \(\lambda _{i}\) and \(\psi \) involve cumbersome expressions and have no closed form solution so the penalised log-likelihood of Firth (1993) estimator of \(\psi \), denoted by \({\hat{\psi }}_{*}\) is obtained numerically. This estimator is always finite as shown in Section 2.1 of Kosmidis and Firth (2021). The bias and variance of \({\hat{\psi }}_{*}\) are calculated using (5) and (6), respectively.

2.5 Binary matched pairs model

The binary matched pairs model is a special case of the binomial matched pairs model when \(m=1\). This implies that in the setting of Lunardon (2018), \(s_{i}=1\), and so \(a=d=0\), where a, b, c and d denote the number of pairs of the form (0, 0), (0, 1), (1, 0) and (1, 1) respectively, with \(a+b+c+d=q\). Note that \(\sum _{i=1}^{q}y_{i1}=c+d\), \(\sum _{i=1}^{q}y_{i2}=b+d\) and \(\sum _{i=1}^{q}(y_{i1}+y_{i2})=b+c+2d\). We call pairs of the form (0, 0) and (1, 1) concordant, while pairs of the form (0, 1) and (1, 0) are called discordant. In this case, \({\hat{\gamma }}_{\psi }=-\psi /2\) and so the profile log-likelihood for \(\psi \) is (see Davison 2003, Example 12.23)

which is maximised at

Alternatively, \({\hat{\psi }}\) can be obtained by substituting \(m=1\) in (4). Davison (2003) showed that \({\hat{\psi }}\) converges in probability to \(2\psi \) as \(q\rightarrow \infty \), thus it is inconsistent.

Only discordant pairs enter the conditional log-likelihood and it is given by

which is maximised at

which converges in probability to \(\psi \) as \(q\rightarrow \infty \), as noted by (Davison 2003, Example 12.23), so it is consistent.

Substituting \({\hat{\gamma }}_{\psi }=-\psi /2\) in (12) gives \( j_{\lambda \lambda }(\psi ,{\hat{\lambda }}_{\psi })=2\big (\exp (\psi /2)\big )/\big (1+\exp (\psi /2)\big )^2\), and in this case (Davison 2003, Example 12.23) showed that

which is maximized at

where the latter converges in probability to \(2\log \big [\{1+5\exp (\psi )\}/\{5+\exp (\psi )\}\big ]\) as \(q\rightarrow \infty \). Note that when \(c=0\) or \(b=0\), \({\hat{\psi }}_{mp}\) is \(2\log (1/5)\) or \(2\log (5)\), respectively, i.e. \({\hat{\psi }}_{mp}\) is finite. Although \({\hat{\psi }}_{mp}\) is inconsistent, Davison (2003) showed that it is less biased than \({\hat{\psi }}\).

3 Adjusted likelihood method

3.1 Penalised likelihood based on adjusted responses

In order to avoid infinite estimates of \(\psi \), as is the case with \({\hat{\psi }}\), \({\hat{\psi }}_{c}\) and \({\hat{\psi }}_{mp}\) (for \(m\ne 1\)), when all of the \(y_{i1}\) observations are zero or one, we propose to adjust the log-likelihood function by adding a small number \(\delta >0\) to each success, \(y_{i1}\) and \(y_{i2}\), and to each failure, \(1-y_{i1}\) and \(m_{i}-y_{i2}\). The penalised log-likelihood function based on adjusted responses for \(\theta =(\psi ,\lambda _{1},\ldots ,\lambda _{q})^{\intercal }\) becomes

When \(m_{i}=m\) and \(s_{i}=(m+1)/2\), the score equations for the above log-likelihood function with respect to \(\gamma \) and \(\psi \) simplify respectively to

where we set the constrained penalised maximum likelihood estimator of \(\lambda _{i}\), based on adjusted responses, for a fixed value of \(\psi \), denoted by \({\hat{\lambda }}_{i,\psi ,a}\), to be \({\hat{\gamma }}_{\psi ,a}\) because it will be identical for all \(i=1,\ldots ,q\). The simultaneous solution of (27) and (28) give the penalised maximum likelihood estimators of \(\gamma \) and \(\psi \), based on adjusted responses

Note that when \(\delta =0\), \({\hat{\psi }}_{a}={\hat{\psi }}\). Note also that when \(t=0\) or \(t=q\), \({\hat{\psi }}_{a}\) is finite, while when \(t=q/2\), \({\hat{\psi }}_{a}=0\). When \(m=1\),

Since \({\hat{\psi }}_{a}\) depends on the data only through the sufficient statistic t, its bias and variance are computed using (5) and (6), respectively.

3.2 Probability limit of the penalised likelihood estimator based on adjusted responses

In this section we obtain the probability limit of the penalised log-likelihood estimator based on adjusted responses and derive the relationship that \(\delta \) should satisfy in order to make this estimator consistent. We also show how the modified profile log-likelihood estimator (when \(m=1\)) and the conditional log-likelihood estimator can be recovered for particular values of \(\delta \).

When \(m=1\), as \(q\rightarrow \infty \), \({\hat{\psi }}_{a}\) converges in probability to

while for a general m, we find that as \(q\rightarrow \infty \), \({\hat{\psi }}_{a}\) converges in probability to

Similar to Table 12.3 of Davison (2003), Table 1 compares the limiting values of \({\hat{\psi }}\), \({\hat{\psi }}_{c}\), \({\hat{\psi }}_{mp}\) and \({\hat{\psi }}_{a}\) when \(m=1\) for a set of values of \(\psi \) ranging from 0 to 5 and a set of values of \(\delta \) ranging from 0.05 to 0.50. We note that for any given \(\psi \), there exists a value of \(\delta \) for which the limit of \({\hat{\psi }}_{a}\) is closer to the truth than \({\hat{\psi }}_{mp}\). In other words, there is evidence that there exists a \(\delta \) value such that \({\hat{\psi }}_{a}\) converges to the truth faster than \({\hat{\psi }}_{mp}\). These values of \(\delta \) decrease as the true value of \(\psi \) increase. Observe also that when \(\delta =0.25\), the limiting value of \({\hat{\psi }}_{a}\) coincides with that of \({\hat{\psi }}_{mp}\). In fact substituting \(\delta =0.25\) in (32), we find that \({\hat{\psi }}_{a}\) converges in probability to the same limit of \({\hat{\psi }}_{mp}\) given in Sect. 2.5. This means that the penalised log-likelihood estimator based on adjusted responses recovers the modified profile log-likelihood estimator when \(m=1\) and \(\delta =0.25\), i.e. \({\hat{\psi }}_{a}={\hat{\psi }}_{mp}\).

When \(m=1\), in order to make \({\hat{\psi }}_{a}\) consistent we need to equate the ratio inside the logarithm of (32) with \(\sqrt{\exp (\psi )}\) which simplifies to the equation

When \(\psi =0\), there is no adjustment because there is no bias so we consider the positive solution of the quadratic equation \([\delta ^2\{\exp (\psi )\}^2+\{2\delta ^2-1\}\{\exp (\psi )\}+\delta ^2]=0\) in terms of \(\delta \) which simplifies to

Substituting (35) into (31) and solving for \(\psi \) gives the same estimate as \({\hat{\psi }}_{c}\). This means that the value of \(\delta \) that achieves consistency of \({\hat{\psi }}_{a}\) is the one that recovers \({\hat{\psi }}_{c}\). This is disadvantageous because we inherit exactly the same problems with conditional log-likelihood (i.e. infinite estimates) if we attempt to tune \(\delta \) to make \({\hat{\psi }}_{a}\) consistent. The value of \(\delta \) in terms of t and q that recovers \({\hat{\psi }}_{c}\) is obtained by equating (31) with \({\hat{\psi }}_{c}\) and simplifies to

Observe that when \(t=0\) or \(t=q\), \(\delta =0\) and so \({\hat{\psi }}_{a}={\hat{\psi }}\), while when \(t=q/2\), \(\delta \) is infinite.

For a general m, the relationship that \(\delta \) should satisfy in order to make \({\hat{\psi }}_{a}\) consistent is found by equating the ratio inside the logarithm of (33) with \(\exp (\psi )\) which simplifies to the equation

with no closed form solution. The value of \(\delta \) in terms of t, q and m that recovers \({\hat{\psi }}_{c}\) satisfies the equation

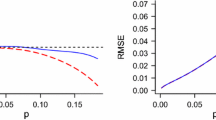

Fig. 1 shows a plot of \(\delta \), the root of (37), against \(\psi \) for \(m=1\), \(m=3\), \(m=11\) and \(m=39\). This plot shows that there is a scaled logistic relationship between \(\delta \) and \(\psi \) and that the best choice of \(\delta \) lies in the range \(0<\delta <0.5\). Given the true value of \(\psi \), as m increases, the value of \(\delta \) that makes \({\hat{\psi }}_{a}\) consistent decreases to zero. This is expected because we know from standard asymptotic theory that the maximum likelihood estimator is asymptotically consistent.

4 Indirect inference estimation of the log odds ratio

Suppose that \({\hat{\psi }}\) is some initial estimator of \(\psi \), not necessarily the maximum likelihood estimator, then the simplest method of bias reduction of \({\hat{\psi }}\) via indirect inference relies on solving the equation (see, Kuk 1995)

with respect to \({\tilde{\psi }}\) where \(\textrm{B}_{{\hat{\psi }}}({\tilde{\psi }},\lambda )=\textrm{E}_{{\tilde{\psi }},\lambda }({\hat{\psi }})-{\tilde{\psi }}\) is the bias function of \({\hat{\psi }}\) evaluated at \({\tilde{\psi }}\) and \(\lambda =\lambda _{1},\ldots ,\lambda _{q}\). We call \({\tilde{\psi }}\) the indirect inference estimator of \(\psi \). Alternatively, (39) can be written as

Since we want to reduce the bias of \({\hat{\psi }}_{a}\) when \(m_{i}=m\) and \(s_{i}=(m+1)/2\), our indirect inference estimator \({\tilde{\psi }}\) is the solution of

As the expectation of \({\hat{\psi }}_{a}\) can be obtained using complete enumeration, one version of \({\tilde{\psi }}\), \({\tilde{\psi }}_{a*}\), which is independent of \(\gamma \), can be defined by using the conditional density \(\textrm{Pr}(T=u\vert S_{i};\psi )\) which is binomial with denominator q and success probability \(\exp (\psi )/\{\exp (\psi )+1\}\),

No closed form solution exists for \({\tilde{\psi }}_{a*}\), so we solve the above equation numerically and calculate the expectation and variance of \({\tilde{\psi }}_{a*}\) using (5) and (6). For \(t\in \{0,q\}\), \({\tilde{\psi }}_{a*}\) has no solution. In fact the expectation in the right hand side of (42) is bounded below by \({\hat{\psi }}_{a}(0)\) and above by \({\hat{\psi }}_{a}(q)\). This means that when the binary observations are all zero or one the indirect inference estimator is not defined which is unfortunate because even though we overcome the problem of infinite estimates at \(t=0\) and \(t=q\) by introducing a penalised likelihood estimator based on adjusted responses, when we attempt to reduce the bias of the later the same problem appears again at those boundary values of t.

5 Complete enumeration study

We reproduce the complete enumeration study in (Lunardon 2018, Table 1) which compares the finite sample bias and variance of estimators derived from profile, conditional, modified profile and penalized ( Firth (1993)) likelihoods, denoted by \({\hat{\psi }}\), \({\hat{\psi }}_{c}\), \({\hat{\psi }}_{mp}\) and \({\hat{\psi }}_{*}\), respectively. We enrich this study by adding the penalised maximum likelihood estimator based on adjusted responses, \({\hat{\psi }}_{a}\), and the indirect inference estimator based on \({\hat{\psi }}_{a}\) using the conditional model, denoted by \({\tilde{\psi }}_{a*}\), for a set of 20 values of \(\delta \) ranging from 0.05 to 1.00. The comparison in (Lunardon 2018, Table 1) also assesses the coverage probability and length of 95% confidence intervals for \(\psi \) based on the chi-squared approximation to the distribution of \(W_{*}(\psi )\) and to the distributions of the profile, conditional and modified profile log-likelihood ratios, denoted by \(W(\psi )\), \(W_{c}(\psi )\) and \(W_{mp}(\psi )\), respectively. We extend this comparison by adding the coverage probability and length of 95% confidence intervals for \(\psi \) based on the chi-squared approximation to the distribution of the penalised log-likelihood ratio based on adjusted responses, denoted by \(W_{a}(\psi )\). The exact bias and variance of estimators and the exact coverage probability and average length of confidence intervals is obtained through complete enumeration because the estimators and confidence intervals all depend on the sufficient statistic \(T=\sum _{i=1}^{q}Y_{i1}\) and so the distribution of T given \(S_{1}=s_{1},\ldots ,S_{q}=s_{q}\) can be computed numerically following Butler and Stephens (2017). These summaries however, are computed only for \(t\in \{1,\ldots ,q-1\}\) since when \(t\in \{0,q\}\), \({\hat{\psi }}\), \({\hat{\psi }}_{c}\) and \({\hat{\psi }}_{mp}\) (for \(m\ne 1\)) are infinite.

Tables 5, 6, 7, 8, 9 and 10 report the bias and variance of estimators, while Tables 11, 12, 13, 14, 15 and 16 report the coverage probability and average length of confidence intervals with nominal level 95% when the true log odds ratio \(\psi \) is unity, with \(m\in \{1,3,11,39\}\) and \(q\in \{30,100,1000\}\).

Overall, for fixed \(\delta \) the numerical value of the bias and variance of the estimator \({\hat{\psi }}_{a}\) decrease as m increases. In many cases, however this means that the bias becomes more negative, i.e. the magnitude of the bias increases with m. This suggests that for any combination of q and m, there exists a particular value of \(\delta \), above which the bias of \({\hat{\psi }}_{a}\) does not improve. In fact for any combination of m and q, there exists a value of \(\delta \) such that \({\hat{\psi }}_{a}\) has minimum bias which is smaller than the bias of the estimators \({\hat{\psi }}\), \({\hat{\psi }}_{c}\), \({\hat{\psi }}_{mp}\) and \({\hat{\psi }}_{*}\); for example, for \(q=30\), \(m=1\) this optimal \(\delta \) in terms of bias is 0.45, for the combinations \(q=30, m=39\) and \(q\ge 100, m\ge 11\), the optimal value of \(\delta \) that gives minimum bias becomes smaller than 0.05. For \(q=30\), at the optimum \(\delta \) value, the estimator \({\hat{\psi }}_{a}\) has smaller bias and variance than \({\hat{\psi }}_{c}\). This is also true for other values of q, except that it is not very clear from Tables 5, 6, 7, 8, 9 and 10 because we consider a specific set of values for \(\delta \); for example, for \(q=100\) and \(m=11\), at \(\delta =0.045\), \({\hat{\psi }}_{a}\) has bias and variance − 0.03 and 0.47, respectively (both multiplied by 10), while for \(q=1000\) and \(m=1\), at \(\delta =0.444\), \({\hat{\psi }}_{a}\) has bias and variance 0.00 and 0.04, respectively (both multiplied by 10). This optimal value of \(\delta \) decrease as m increases, but for a fixed m, as q increase above 30, the optimum \(\delta \) value remain constant. This is expected because the theoretical derivations in equations (35) and (37) tell us that the optimum value of \(\delta \) in terms of consistency is independent of q. For example, the optimum value of \(\delta \) when \(m=1\) and \(\psi =1\) is given by (35) and is equal to \(\delta =0.4434\). Similarly, (37) gives the optimum value of \(\delta \) in terms of consistency for a general value of m and \(\psi \), for example, when \(m=3\) and \(\psi =1\), the optimum \(\delta \) is 0.1566.

So in terms of the incidental parameter problem, for a fixed value of m and increasing q, there is an optimum value of \(\delta \) that makes \({\hat{\psi }}_{a}\) less biased and has smaller variance than all the other estimators. This behaviour can be seen more clearly from Table 17 where the optimum value of \(\delta \) that minimizes the bias of \({\hat{\psi }}_{a}\) was chosen from a finer set of \(\delta \) values. For the values of m and q chosen in Table 17, the effect of fixing m and allowing q to increase is a larger optimal \(\delta \), while fixing q and increasing m decreases this optimal \(\delta \) value. However, the pattern in Table 17 suggest that as both q and m are allowed to diverge, the optimal \(\delta \) value becomes close to zero and this makes intuitive sense because we know that \(\delta =0\) gives rise to the maximum likelihood estimator \({\hat{\psi }}\) which is asymptotically unbiased when both m and q tend to \(\infty \).

Nevertheless, the bias results for the estimator \({\tilde{\psi }}_{a*}\) show a marked improvement over \({\hat{\psi }}\), \({\hat{\psi }}_{mp}\) and \({\hat{\psi }}_{c}\), for all values of q and m. When \({\tilde{\psi }}_{a*}\) is compared with \({\hat{\psi }}_{*}\), the former has smaller bias and variance for \(m=1\) and \(m=3\) for all values of q. When compared with \({\hat{\psi }}_{a}\), the bias of \({\tilde{\psi }}_{a*}\) is reduced for all values of \(\delta \) except the optimal one. The interesting result to note here is that as \(\delta \) increases, the bias and variance of \({\tilde{\psi }}_{a*}\) approach that of \({\hat{\psi }}_{c}\) for all combinations of q and m considered. To wrap up this comparison of estimators, if we were to choose a \(\delta \) based on \({\hat{\psi }}_{a*}\), then it will be the one that is close to (but not equal to) zero because it will give the smallest bias and variance. However, \({\hat{\psi }}_{a*}\) does not perform better than \({\hat{\psi }}_{a}\) all the time. In fact, if we were to choose a \(\delta \) based on \({\hat{\psi }}_{a}\), then it will be the optimal \(\delta \) that minimizes the bias of \({\hat{\psi }}_{a}\) because the bias and variance of \({\hat{\psi }}_{a}\) at this optimal \(\delta \) is smaller than that of any other estimator in the table for any combination of q and m. Having said that, in practice we are only given a data set and we don’t know the particular \(\delta \) for that data set so \({\tilde{\psi }}_{a*}\) will be a good choice because its bias and variance are very competitive regardless of the value of \(\delta \).

Concerning the coverage probability and average length of 95% confidence intervals, Lunardon (2018) noted that intervals derived from \(W_{mp}(\psi )\) and \(W_{*}(\psi )\) are consistent with those from \(W_{c}(\psi )\) for \(m\ge 3\). The coverage probability and average length of confidence intervals derived from \(W_{a}(\psi )\) show an improvement over those derived from \(W_{c}(\psi )\) for particular values of \(\delta \) (shown in bold face in Tables 11, 12, 13, 14, 15, 16). We considered 20 values of \(\delta \) ranging from 0.31 to 0.50 for \(m=1\), 0.06 to 0.25 for \(m=3\) and 0.01 to 0.20 for \(m=11\) and \(m=39\). For those particular values of \(\delta \) in bold face, the coverage probability derived from \(W_{a}(\psi )\) is closer to the nominal coverage of 95% than that derived from \(W_{c}(\psi )\). This agrees with the fact that \({\hat{\psi }}_{a}\) performs better than \({\hat{\psi }}_{c}\) in terms of bias and variance for some optimal value of \(\delta \). As the value of \(\delta \) increase the average length of confidence intervals derived from \(W_{a}(\psi )\) decreases which is expected because the variance (and hence standard error) of \({\hat{\psi }}_{a}\) becomes smaller as \(\delta \) becomes larger.

In conclusion it has been shown how, in the binomial matched pairs model, finite estimates of the log odds ratio are produced in cases where the observations are either all equal to zero or all equal to one by penalising the log-likelihood function through the additive adjustment of a tuning parameter \(\delta >0\) to each success and failure. This value \(\delta \) was then used as a parameter that could be tuned to improve the bias and/or variance of the penalised log-likelihood estimator based on adjusted responses. The indirect inference method was applied to further reduce the bias of \({\hat{\psi }}_{a}\). The method can be applied in principle to any parametric model. We are also free to use estimators other than \({\hat{\psi }}_{a}\) as initial estimates. Indeed the setting considered here where \(m_{i}\) and \(s_{i}\) are fixed to m and \((m+1)/2\), respectively is a special case and perhaps a large scale simulation study would be useful to account for different stratum sizes and totals.

6 Analysis of crying babies data

In this section we illustrate the methods discussed above in a general setting by providing a real-data example with different stratum sizes. We re-analyse the crying of babies data set given in (Cox and Snell 1989, Example 1.2). The data come from an experiment intended to assess the effectiveness of rocking motion on the crying of babies and were collected according to a matched case–control design with one case and \(m_{i}\) controls per stratum, where \(i=1,\ldots ,18\) and \(m_{i}\) takes on various values from 5 to 9. On each of 18 days babies not crying at a specified time in a hospital were served as subjects. On each day one baby chosen at random formed the experimental group and the remainder were controls. The binary response was whether the baby was crying or not at the end of a specified period. In (Cox and Snell 1989, Example 1.2), not crying is taken as a "success" and the observed numbers \(y_{i2}\) and \(y_{i1}\) are therefore the numbers of babies in the two groups not crying. The number of non crying babies in the experimental group is \(t=15\).

The estimates of the log odds ratio \(\psi \) and its standard error are reported in Table 2. Davison (1988) obtained the maximum likelihood and conditional maximum likelihood estimates while Lunardon (2018) obtained the estimates of \(\psi \) derived from the modified profile and penalised (Firth) log-likelihoods. We found the penalised based on adjusted responses log-likelihood and indirect inference estimates for 20 values of \(\delta \) ranging from 0.05 to 1.00. As the true log odds ratio \(\psi \) is unknown, it is difficult to decide which estimator should be preferred however, there is an important observation to note. As \(\delta \) approaches 1, the indirect inference estimates of \(\psi \) approach the conditional log-likelihood estimates and the standard errors of \({\hat{\psi }}_{a*}\) approach that of \({\hat{\psi }}_{c}\). This observation has been noted before in the complete enumeration study in terms of bias and variance in the specific setting where the ith stratum sample size was fixed. Even though this observation has not been proved analytically, the results based on the crying babies data show that, at least numerically, it may also hold for a general binary matched pairs with different stratum sizes.

The standard errors of the estimates in Table 2, except for \({\hat{\psi }}_{c}\), are obtained using the Fisher information matrix evaluated at the given estimate of \(\psi \). In particular, for the maximum likelihood estimator the standard error is obtained using \(\sqrt{\textrm{diag}\{i^{-1}({\hat{\psi }},{\hat{\lambda }}_{1},\ldots ,{\hat{\lambda }}_{18})\}}\), where i is the Fisher information matrix. For the conditional maximum likelihood estimator the standard error is obtained using \(\sqrt{(-\partial ^{2}l_{c}(\psi )/\partial \psi ^{2})^{-1}}\), evaluated at \({\hat{\psi }}_{c}\). For the modified profile likelihood estimator, \({\hat{\psi }}_{mp}\), the standard error is obtained using \(\sqrt{(\partial ^{2}l_{mp}(\psi )/\partial \psi ^{2})^{-1}}\), evaluated at \({\hat{\psi }}_{mp}\), i.e. using the Hessian. For the penalised (Firth) and penalised based on adjusted responses likelihood estimators, the standard errors are obtained using \(\sqrt{\textrm{diag}\{i^{-1}({\hat{\psi }}_{*},{\hat{\lambda }}_{1,*},\ldots ,{\hat{\lambda }}_{18,*})\}}\) and \(\sqrt{\textrm{diag}\{i^{-1}({\hat{\psi }}_{a},{\hat{\lambda }}_{1,a},\ldots ,{\hat{\lambda }}_{18,a})\}}\), respectively. Finally, for the indirect inference estimator the standard error is obtained using \(\sqrt{(-\partial ^{2}l_{c}(\psi )/\partial \psi ^{2})^{-1}}\), evaluated at \({\tilde{\psi }}_{a*}\). However according to Kuk (1995), to obtain the estimated standard error for the indirect inference estimator we need a further correction of the Fisher information using sandwich estimators of the variance based on the Godambe information because the second Bartlett identity no longer holds. Similarly, a Godambe information matrix would be better to use for the estimated standard error of the modified profile likelihood estimator. The estimated standard error reported in Lunardon (2018) for \({\hat{\psi }}_{*}\) is obtained using the Hessian matrix and is slightly different to our result.

To assess the reliability of the estimators we compute their actual bias and variance, conditioning on the observed totals as in (Lunardon 2018, Table 2), for a set of values of \(\psi \) ranging from -3 to 3. The conditional distribution of the sufficient statistic \(T=\sum _{i=1}^{18}Y_{i1}\), which represents the total number of babies not crying in the experimental group, is the distribution of the sum of independent Bernoulli random variables with different probabilities. To calculate this distribution we use the R function dkbinom which gives the mass function of the sum of k independent Binomial random variables, with possibly different probabilities. This function implements the convolution algorithm of k binomials described in Butler and Stephens (2017).

The results reported in Table 2 of Lunardon (2018) are incorrect because Lunardon (2018) computes the conditional bias and variance of \({\hat{\psi }}_{c}\), \({\hat{\psi }}\), \({\hat{\psi }}_{mp}\) and \({\hat{\psi }}_{*}\) for \(t\in \{1,\ldots ,17\}\) but without rescaling and normalizing the conditional distribution of T. The corrected conditional summaries are reported in Table 3 with the addition of the penalised log-likelihood estimator based on adjusted responses, \({\hat{\psi }}_{a}\), and the indirect inference estimator, \({\hat{\psi }}_{a*}\), for various values of \(\delta \). We observe that for \(\psi \in \{-2,-1,0,1,2\}\) there exists a value of \(\delta \) in the range \(0.01<\delta <0.20\) such that \({\hat{\psi }}_{a}\) is less biased than any of \({\hat{\psi }}\), \({\hat{\psi }}_{c}\), \({\hat{\psi }}_{mp}\) and \({\hat{\psi }}_{*}\). In fact the effect of increasing the absolute value of \(\psi \) is to decrease the optimal \(\delta \) value in terms of the bias of \({\hat{\psi }}_{a}\). For \(\psi =3\), the maximum likelihood estimator seems to be the least biased but this is due to the effect of infinite estimates at \(t=0\) and \(t=18\). In other words, removing these infinite estimates significantly lowers the average of the estimates for \(t\in \{1,\ldots ,17\}\). This is also true for the conditional log-likelihood estimator, \({\hat{\psi }}_{c}\).

Overall, for \(\psi \in \{-2,-1,0,1\}\) the indirect inference estimator is an improvement over the penalised log-likelihood estimator based on adjusted responses in terms of bias for all values of \(\delta \) considered. We notice that there exists a \(\delta \) value in the range \(0.01<\delta <0.20\) such that \({\hat{\psi }}_{a*}\) is less biased than \({\hat{\psi }}_{a}\). In fact the indirect inference estimator for \(\psi \in \{-2,-1,0,1\}\) is competitive for all \(\delta \) values considered, which makes it the best choice amongst estimators. However, for \(\psi \in \{-3,2,3\}\) the best choice of \(\delta \) is the largest possible, in this case \(\delta =1\). It is worth noting that as in the complete enumeration study in Tables 5, 6, 7, 8, 9 and 10, it is also the case here that we observe that the bias and variance of the indirect inference estimator approaches that of the conditional log-likelihood estimator as \(\delta \) increase to 1.

Table 4 reports the unconditional bias and variance of the modified profile, penalised (Firth) and penalised based on adjusted responses log-likelihood estimators for \(t\in \{0,\ldots ,18\}\). Even though \({\hat{\psi }}_{*}\) has smaller bias and variance than \({\hat{\psi }}_{mp}\) for all value of \(\psi \), we again observe as in Table 3 that there exists a value of \(\delta \) such that \({\hat{\psi }}_{a}\) is less biased than \({\hat{\psi }}_{*}\) for all values of \(\psi \). We may conclude that \({\hat{\psi }}_{a}\) is preferable over \({\hat{\psi }}_{*}\) as its variance is also decreased at the optimal \(\delta \) value for all values of \(\psi \) considered (except for \(\psi =0\)). The relationship between the optimal \(\delta \) value and the true value of \(\psi \) coincides with that in the conditional case (Table 3), i.e. the optimal \(\delta \), in terms of bias, decreases as the absolute value of \(\psi \) increases. Note that the indirect inference estimator has no solution at \(t=0\) and \(t=18\), and so it is excluded from the unconditional summaries in Table 4.

7 Conclusions

For the binomial matched pairs model, we evaluated the performance of a new penalised log-likelihood estimator of the log odds ratio which is based on an additive adjustment, \(\delta >0\), to the responses so as to avoid infinite estimates which is inherited by the maximum likelihood and conditional likelihood estimators. We calculated the probability limit of this estimator and showed that the maximum likelihood, conditional and modified profile log-likelihood estimators, when \(m=1\) for the latter, can be retrieved from this new estimator for certain values of \(\delta \). It was found that indirect inference estimation based on the new estimator is competitive for a wide range of values of \(\delta \).

It is worth investigating numerically whether, for a general value of m, there exists a \(\delta \) that recovers the modified profile log-likelihood estimator of \(\psi \) from the penalised log-likelihood estimator based on adjusted responses, because this will imply that \({\hat{\psi }}_{mp}\) could be retrieved from \({\hat{\psi }}_{a}\) for any value of m not just in the special case of the binary matched pairs model where \(m=1\). One future direction is to investigate the performance of the estimators of the log odds ratio outside the setting of Lunardon (2018) for a general \(m_{i}\) and \(s_{i}\). This is possible since in this general setting the distribution of the sufficient statistic \(T=\sum _{i=1}^{q}y_{i1}\) can be obtained using the convolution method of Butler and Stephens (2017) and is Poisson binomial. Obtaining the probability limit of the indirect inference estimator in the setting of Lunardon (2018) is desired. The complete enumeration study in Sect. 5 may be expanded by considering other values of \(\psi \), e.g. \(\psi \in \{-3,-2,-1,0,1,2,3\}\). Finally, yet another possible future direction would be to investigate the performance of an alternative adjustment to the log-likelihood function where a small number \(\delta >0\) is added to each success but subtracted from each failure.

References

Barndorff-Nielson OE (1983) On a formula for the distribution of the maximum likelihood estimator. Biometrika 70(2):343–365. https://doi.org/10.1093/biomet/70.2.343

Breslow N (1981) Odds ratio estimators when the data are sparse. Biometrika 68(1):73–84. https://doi.org/10.1093/biomet/68.1.73

Butler K, Stephens MA (2017) The distribution of a sum of independent binomial random variables. Methodol. Comp. Appl. Prob. 19:557–571. https://doi.org/10.1007/s11009-016-9533-4

Cox DR, Reid N (1987) Parameter orthogonality and approximate conditional inference. Journal of the Royal Statistical Society 49(1):1–39. https://doi.org/10.1111/j.2517-6161.1987.tb01422.x

Cox DR, Snell EJ (1989) Analysis of Binary Data. Chapman and Hall, London

Davison AC (1988) Approximate conditional inference in generalized linear models. Journal of the Royal Statistical Society 50(3):445–461. https://doi.org/10.1111/j.2517-6161.1988.tb01740.x

Davison AC (2003) Statistical Models. Cambridge University Press, Cambridge

Firth D (1993) Bias reduction of maximum likelihood estimates. Biometrika 80(1):27–38. https://doi.org/10.1093/biomet/80.1.27

Florescu I (2014) Probability and Stochastic Processes. John Wiley and Sons, New Jersey

Gart JJ (1970) Point and interval estimation of the common odds ratio in the combination of 2\(\times \)2 tables with fixed marginals. Biometrika 57(3):471–475. https://doi.org/10.2307/2334765

Gart JJ (1971) The comparison of proportions: A review of significance tests, confidence intervals and adjustments for stratification. Int. Statist. Rev. 39(2):148–169. https://doi.org/10.2307/1402171

Gourieroux C, Monfort A, Renault E (1993) Indirect inference. Journal of Applied Econometrics 8:85–118. https://doi.org/10.1002/jae.3950080507

Kosmidis I, Firth D (2021) Jeffreys-prior penalty, finiteness and shrinkage in binomial-response generalized linear models. Biometrika 108(1):71–82. https://doi.org/10.1093/biomet/asaa052

Kuk AYC (1995) Asymptotically unbiased estimation in generalized linear models with random effects. Journal of the Royal Statistical Society 57(2):395–407. https://doi.org/10.1111/j.2517-6161.1995.tb02035.x

Lunardon N (2018) On bias reduction and incidental parameters. Biometrika 105(1):233–238. https://doi.org/10.1093/biomet/asx079

Magnus JR, Neudecker H (2019) Matrix Differential Calculus with Applications in Statistics and Econometrics, 3rd edn. John Wiley and Sons, New Jersey

McCullagh P, Tibshirani R (1990) A simple method for the adjustment of profile likelihoods. Journal of the Royal Statistical Society 52(2):325–344. https://doi.org/10.1111/j.2517-6161.1990.tb01790.x

Neyman J, Scott EL (1948) Consistent estimates based on partially consistent observations. Econometrika 16(1):1–32. https://doi.org/10.2307/1914288

Pace L, Salvan A (1997) Principles of Statistical Inference: from a Neo-Fisherian Perspective. World Scientific Publishing Co, Singapore

Sartori N (2003) Modified profile likelihoods in models with stratum nuisance parameters. Biometrika 90(3):533–549. https://doi.org/10.1093/biomet/90.3.533

Acknowledgements

The author is deeply indebted to Professor Ioannis Kosmidis for his many insightful remarks and critical comments and suggestions which have led to significantly improving the quality and presentation of the results.

Funding

This work was supported by the University College London EPSRC funded studentship in Mathematical Sciences.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Supplementary information

Not applicable

Conflict of interest

The author declares that she has no Conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A: Results tables for complete enumeration study

Appendix A: Results tables for complete enumeration study

See Tables 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16 and 17.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Saleh, A. Reduced bias estimation of the log odds ratio. Stat Papers (2024). https://doi.org/10.1007/s00362-024-01593-7

Received:

Revised:

Published:

DOI: https://doi.org/10.1007/s00362-024-01593-7

Keywords

- Bias reduction

- Binary matched pairs

- Indirect inference

- Maximum likelihood

- Modified profile likelihood

- Adjusted responses