Abstract

The present study was designed to understand and optimize self-assessment accuracy in cognitive skill acquisition through example-based learning. We focused on the initial problem-solving phase, which follows after studying worked examples. At the end of this phase, it is important that learners are aware whether they have already understood the solution procedure. In Experiment 1, we tested whether self-assessment accuracy depended on whether learners were prompted to infer their self-assessments from explanation-based cues (ability to explain the problems’ solutions) or from performance-based cues (problem-solving performance) and on whether learners were informed about the to-be-monitored cue before or only after the problem-solving phase. We found that performance-based cues resulted in better self-assessment accuracy and that informing learners about the to-be-monitored cue before problem-solving enhanced self-assessment accuracy. In Experiment 2, we again tested whether self-assessment accuracy depended on whether learners were prompted to infer their self-assessments from explanation- or performance-based cues. We furthermore varied whether learners received instruction on criteria for interpreting the cues and whether learners were prompted to self-explain during problem-solving. When learners received no further instructional support, like in Experiment 1, performance-based cues yielded better self-assessment accuracy. Only when learners who were prompted to infer their self-assessments from explanation-based cues received both cue criteria instruction and prompts to engage in self-explaining during problem-solving did they show similar self-assessment accuracy as learners who utilized performance-based cues. Overall, we conclude that it is more efficient to prompt learners to monitor performance-based rather than explanation-based cues in the initial problem-solving phase.


The worked-example approach is a well-established method to foster initial cognitive skill acquisition (e.g., Sweller et al., 2019). This approach usually involves four stages (Renkl, 2014; see also van Gog et al., 2020). First, learners are provided with instructional explanations that communicate basic knowledge on new principles and concepts. In terms of models of cognitive skill acquisition (e.g., Anderson, 1982; VanLehn, 1996), this stage corresponds with the early phase, in which learners need to gain a basic understanding of the learning content. Second, learners receive multiple worked examples that illustrate how the principles and concepts can be used to solve problems and are engaged in self-explaining the examples. After they have studied and self-explained the worked examples, learners turn to problem-solving in the third stage. As does studying worked examples, initial problem-solving also serves the theoretical function of supporting learners in understanding the rationale of the solutions. Hence, both studying worked examples and initial problem-solving correspond with the intermediate phase of cognitive skill acquisition, which focuses on learning how the principles and concepts can be used to solve concrete problems (see Renkl, 2014). In the fourth stage, learners practice problem-solving extensively. This stage, which corresponds with the late phase of cognitive skill acquisition, is mainly focused on enhancing problem-solving speed and accuracy.

The transition from the third to the fourth stage is hence accompanied by a change in the main learning goal. It changes from understanding the rationale of solutions (Stages 1–3) to enhancing the accuracy and speed of problem-solving (Stage 4). Thus, at least when learners navigate through these stages in a self-regulated manner, it is crucial that learners accurately self-assess their level of understanding after the initial problem-solving, because they should proceed to Stage 4 only when their level of understanding is sufficient. There is evidence that learners use their initial problem-solving performance to diagnose their level of understanding (e.g., Foster et al., 2018). It has also been found that learners decide to engage in further problem-solving (i.e., proceeding to Stage 4) too often (see Foster et al., 2018), which likely reflects the widespread finding that learners’ self-assessments tend to be inaccurate (for recent meta-analytical findings, see León et al., 2023).

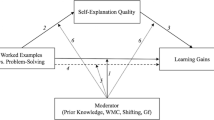

The present study’s main goal was to investigate how self-assessment accuracy in the initial problem-solving phase of cognitive skill acquisition through learning from worked examples could be optimized. Specifically, we were interested in exploring whether self-assessment accuracy increased when learners were prompted to infer their self-assessments from their ability to explain the rationale of the problems’ solutions (i.e., explanation-based cues) rather than from their problem-solving performance (i.e., performance-based cues). Furthermore, we tested whether informing learners about to-be-monitored cues already before the problem-solving phase enhanced self-assessment accuracy.

Cognitive Skill Acquisition Through the Worked-Example Approach

Based on the worked example effect (Sweller & Cooper, 1985)―one of the first effects postulated by cognitive load theory (see Sweller et al., 1998)―the worked-example approach has been developed and become one of the prominent and widely used procedures to foster cognitive skill acquisition (e.g., Atkinson, 2002; Foster et al., 2018; Hefter et al., 2014; Kölbach & Sumfleth, 2013; Rawson et al., 2020; Schworm & Renkl, 2007; van Gog et al., 2008; for an overview, see also Renkl, 2014). This approach usually involves four stages and relates to two important learning goals (see Fig. 1).

The first important learning goal is to understand the rationale of solutions for the problems to be solved with the respective cognitive skill. This goal is pursued in the first three stages―the present study focuses on these stages. In the first stage, learners receive instructional explanations that communicate basic knowledge concerning the principles and concepts on which the cognitive skill is based (see Wittwer & Renkl, 2010). For instance, when learners acquire the cognitive skill of solving mathematical urn model problems, which relate to the calculation of probabilities of combinations of random events (e.g., events in repeated dice rolling or drawing objects from a vessel such as an urn), instructional explanations could explain stochastic principles concerning the order of events (i.e., order is relevant or irrelevant) and concerning the replacement of events after they have been removed from the urn (i.e., with or without replacement). Hence, such explanations cover the four stochastic principles of order relevant/with replacement, order relevant/without replacement, order irrelevant/with replacement, and order irrelevant/without replacement (see Berthold & Renkl, 2009; Schalk et al., 2020). In terms of models of cognitive skill acquisition (e.g., VanLehn, 1996), this first stage can be mapped on the early phase, which relates to gaining a basic understanding of the content that is to be learned.
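To make the four stochastic principles concrete, the following minimal Python sketch computes the probability of a target combination of draws under each of them. It is an illustration only and not part of the study materials: the function names `sequence_probability` and `event_probability` and the example urn (three red and two blue objects) are hypothetical, and order-irrelevant events are handled by summing over all distinct orderings of the target.

```python
from fractions import Fraction
from itertools import permutations


def sequence_probability(urn, sequence, with_replacement):
    """Probability of drawing exactly `sequence`, in this order, from `urn`.

    `urn` maps each kind of object to how many of it the urn contains.
    (Hypothetical helper for illustration; not taken from the study.)
    """
    counts = dict(urn)  # work on a copy so the caller's urn is not modified
    prob = Fraction(1)
    for item in sequence:
        total = sum(counts.values())
        if total == 0 or counts.get(item, 0) == 0:
            return Fraction(0)  # the requested object cannot be drawn
        prob *= Fraction(counts[item], total)
        if not with_replacement:
            counts[item] -= 1  # the drawn object stays outside the urn
    return prob


def event_probability(urn, target, order_relevant, with_replacement):
    """Probability of the target draws under one of the four principles."""
    if order_relevant:
        return sequence_probability(urn, target, with_replacement)
    # Order irrelevant: every distinct ordering of the target counts.
    orderings = set(permutations(target))
    return sum(
        (sequence_probability(urn, o, with_replacement) for o in orderings),
        Fraction(0),
    )


urn = {"red": 3, "blue": 2}  # assumed example urn
target = ["red", "blue"]
print(event_probability(urn, target, True, True))    # order relevant / with replacement: 6/25
print(event_probability(urn, target, True, False))   # order relevant / without replacement: 3/10
print(event_probability(urn, target, False, True))   # order irrelevant / with replacement: 12/25
print(event_probability(urn, target, False, False))  # order irrelevant / without replacement: 3/5
```

Repeated dice rolling can be treated in the same way as an urn with six distinct objects that is sampled with replacement.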

In the second stage, learners receive multiple worked examples that illustrate how the previously explained basic knowledge can be used to solve problems. This stage directly relates to supporting learners in understanding the rationale of the solution procedure. More specifically, in this step, learners engage in generative learning activities that are targeted at understanding how the basic knowledge acquired in the first stage can be used to explain or justify solution steps (e.g., Hefter et al., 2015; Nokes et al., 2011; Roelle et al., 2017). Unfortunately, learners often do not sufficiently engage in such self-explaining of worked examples on their own (e.g., Chi et al., 1989; Renkl, 1997). Consequently, worked examples are frequently provided together with self-explanation prompts that explicitly require learners to engage in relating the worked examples to the previously provided instructional explanations (e.g., Atkinson et al., 2003; Conati & VanLehn, 2000; Hefter et al., 2015; Schworm & Renkl, 2007). In the case of the cognitive skill of solving mathematical urn model problems, for example, such self-explanation prompts could require learners to explain which of the solution’s features reflect whether the sequence of events is relevant or irrelevant, and which features reflect whether events are replaced.

In the third stage, learners turn to problem-solving. Note that this initial problem-solving phase targets the same learning goal as the previous stage. That is, the theoretical function of the initial problem-solving is to support learners in deeply understanding the rationale of the solution procedures (see Renkl, 2014). Hence, both the second and third stages of the procedure relate to the intermediate phase of cognitive skill acquisition (see VanLehn, 1996), which focuses on learning how the basic principles and concepts can be used to solve concrete problems (Renkl, 2014). Other than the worked examples, however, the initial problem-solving likely serves a secondary function beyond the deepening of learners’ understanding by applying and refining the acquired schemata. Specifically, the early problem-solving may also serve the function of helping learners to self-assess their level of understanding of the to-be-learned solution rationales. If learners realize they have not understood how to solve the examples on their own, they can turn back to studying the worked examples (for a similar notion from research on learning from example-problem sequences, see van Gog et al., 2020).

In the fourth stage, like in the third stage, learners engage in problem-solving. Other than in Stage 3, however, the main learning goal is not to foster understanding the problem-solving procedure’s rationale. Rather, the main learning goal in Stage 4 is to enhance the speed and accuracy of problem-solving (see Renkl, 2014). That is, once learners have formed a quality problem-solving schema or multiple schemata through the first three stages, enhancing the efficiency of the schema(ta) is key. Accordingly, this stage corresponds with late phases of cognitive skill acquisition. Although to date it is generally unclear how many problems learners need to solve at what times to effectively consolidate the acquired schemata (e.g., Rawson et al., 2020; see also Rawson & Dunlosky, 2022), this stage is considered the end of the approach of learning from worked examples.

A wealth of previous research has dealt with optimizing this approach. These studies investigated instructional support measures that related to single stages as well as to transitions between the stages. In terms of support measures associated with single stages, previous research has, for example, investigated prompts to enhance deep processing of the basic instructional explanations (Stage 1; e.g., Roelle et al., 2017) or prompts to elicit self-explanations during processing the worked examples (i.e., Stage 2; e.g., Berthold et al., 2009). In terms of supporting the transitions between stages, previous research has, among other aspects, analyzed the degree and difficulty of required retrieval of previously processed principles and concepts from memory when learners transition from processing the instructional explanations to studying worked examples (i.e., Stage 1 → Stage 2; e.g., Roelle & Renkl, 2020; see also Hiller et al., 2020) or the fading of worked examples to transition from worked examples to (initial) problem-solving (i.e., Stage 2 → Stage 3; e.g., Foster et al., 2018; Renkl et al., 2004).

Despite this wealth of research that contributed to optimizing learning from worked examples, however, there is still room for optimization. Such optimization potential lies, for example, in the initial problem-solving phase (i.e., Stage 3). At least when learners navigate the example-based sequence in a self-regulated manner, they need to self-assess their level of understanding of the solution procedure in this phase. (Accurate) self-assessment helps to decide whether to turn back to worked-example study (Stage 2) or proceed with more problem-solving (Stage 4). A study by Foster et al. (2018), however, found that learners tend to proceed to Stage 4 too early, which detrimentally affects learning outcomes. One potential explanation for this suboptimality is that in the initial problem-solving phase, learners infer their self-assessments from cues of relatively low diagnosticity.

The Role of Cue Utilization in Self-Assessment in the Initial Problem-Solving Phase

Inaccurate student self-assessment and its consequences have attracted a great deal of attention in recent years (e.g., Alexander, 2013; de Bruin & van Gog, 2012; de Bruin & van Merriënboer, 2017; León et al., 2023; Panadero et al., 2016; Waldeyer et al., 2023). One theoretical model explaining how learners form self-assessments and why they are often inaccurate is the cue-utilization framework (Koriat, 1997). According to this framework, learners infer their self-assessments from cues, which they can monitor during learning. For example, such cues could be the fluency with which learners solve certain problems, the degree to which they can retrieve previously studied information from memory, or their interest in the topic (e.g., Thiede et al., 2010). The accuracy of learners’ self-assessments is therefore thought to depend on the degree to which the utilized cues are diagnostic (i.e., predictive) in the respective context (see de Bruin et al., 2017). Hence, inaccurate self-assessments are assumed to result from cues of low diagnosticity, which is supported by a wealth of findings concerning learners’ cue utilization in different settings (e.g., in problem-solving, see, e.g., Ackerman et al., 2013; Baars et al., 2014a, b, 2017; Oudman et al., 2022; in learning from texts, see, e.g., de Bruin et al., 2017; Endres et al., 2023; van de Pol et al., 2021a; Waldeyer & Roelle, 2023; for overviews, see Prinz et al., 2020; Thiede et al., 2010; van de Pol et al., 2021b).

Findings by Foster et al. (2018) indicate that in the initial problem-solving phase of learning from worked examples, learners infer their self-assessments from their (perceived) problem-solving performance. At first glance, using this cue appears to be sensible, as this cue is highly salient and hence likely easy to monitor during problem-solving. If a learner has acquired a high level of understanding, this should be reflected in good problem-solving performance and hence the cue of problem-solving performance should be highly diagnostic. In support of this notion, Baars et al. (2014a, b) found that self-assessments made by primary school students were more accurate when learners could monitor their performance on a practice problem after worked-example study when learning about addition and subtraction problems as compared to monitoring their performance after studying worked examples without subsequent problem-solving. However, at second glance, the diagnosticity of the cue of problem-solving performance might depend on how learners solved the problems. When learners have not yet reached a sufficient level of understanding, they search for solutions via shallow strategies, for example, a copy-and-adapt strategy (i.e., copying the solution procedure from a presumably similar problem and adapting just the numbers; VanLehn et al., 2016), a keyword strategy (i.e., selecting a solution procedure by a keyword in the cover story of a problem; Karp et al., 2019), or means-end analyses without schema construction efforts (Salden et al., 2010; see also Renkl, 2014). Such shallow problem-solving strategies often result in correct solutions (e.g., Karp et al., 2019), which, in turn, may lead to performance cues that might be misleading with respect to learning in the sense of understanding. Such shallow strategies are also very resource-intensive, and their effectiveness depends on variable features of the to-be-solved problems (e.g., on whether the to-be-solved problem is actually structurally similar to the one from which the solution is adapted). Hence, success on shallowly solved problems might not be a reliable predictor of future performance on isomorphic problems. Rather, the diagnosticity of the cue of problem-solving performance should be relatively low in this case. In support of this notion, Baars et al. (2014a, b) found that providing learners with the correct solution to a problem as a standard for comparison improved learners’ self-assessments only for identical problems and not for isomorphic problems. These findings suggest that learners might have inferred their self-assessments from surface features of the problems they practiced instead of from structural features such as their understanding of the solution procedure.

On a more general level, the outlined line of reasoning concerning the potentially suboptimal diagnosticity of problem-solving performance as a cue is in line with desirable difficulties research (e.g., Soderstrom & Bjork, 2015). This research, the core of which relates to the counterintuitive finding that learning activities that slow down improvement or even hinder performance during learning often produce superior long-term learning, indicates that learners often mix up performance (e.g., problem-solving success) and learning (e.g., the level of understanding of the to-be-learned principles and concepts) when judging their progress in studying (Soderstrom & Bjork, 2015; see also Endres et al., 2024). For example, Rohrer and Taylor (2007, Exp. 2) found in mathematics learning that learners who studied different problem types in a mixed (i.e., interleaved) sequence learned more than learners who studied blocked sequences, as indicated by a 1-week delayed posttest. Performance during learning, however, was higher among the learners with blocked sequences. Hence, like in the outlined case of shallow solution procedures in learning from worked examples, the diagnosticity of the cue of performance during learning would have been relatively low.

An alternative cue from which learners could infer the degree to which they have understood the to-be-learned knowledge or mastered the to-be-acquired cognitive skill at the end of Stage 3 of the worked-example sequence could be their ability to explain how the problems should be solved. There is evidence which suggests that the quality of self-explanations is predictive of learners’ level of understanding in example-based learning (e.g., Hefter et al., 2015; Otieno et al., 2011; Roelle & Renkl, 2020). However, like solving problems, explaining problem-solving procedures can be done in a shallow way as well. For example, when learners who used shallow strategies to solve problems are prompted to infer their self-assessments from the ability to explain how the problems should be solved, they might believe that explanations of their shallow strategies would suffice. Although this case might be relatively rare as learners likely know that their strategy is hardly aligned with how the problems should be solved ideally, explanation-based cues, like performance-based cues, might be of low diagnosticity as well in some cases.

In view of the outlined arguments concerning performance-based and explanation-based cues and the available empirical evidence, it is hard to predict with any certainty whether one or the other type of cue would result in better self-assessment accuracy in the initial problem-solving phase in learning from worked examples. Regardless of the type of cue from which the self-assessments are inferred, however, self-assessment accuracy should be higher when learners monitor the respective cue from the beginning of the problem-solving phase rather than reconstruct the cue only after problem-solving. A promising means to enhance self-assessment accuracy could hence be to inform learners about the to-be-monitored cue already at the beginning of the problem-solving phase. Surprisingly, however, to date, the effects of the point in time at which learners are informed about the cue from which they should infer their self-assessments have largely been ignored in research on self-assessment accuracy.

It is important to highlight that informing learners before the initial problem-solving phase about the cue to use for monitoring might entail not only beneficial effects but could have negative side effects as well. There is evidence that monitoring requires mental effort (e.g., Froese & Roelle, 2022; Panadero et al., 2016). When the task of problem-solving imposes high cognitive load, the additional mental effort required for monitoring could compromise learners’ performance in the initial problem-solving phase (e.g., Valcke, 2002; see also van Gog et al., 2011). This, in turn, could decrease the diagnosticity of the cue of problem-solving performance, as the low performance would be due to the requirement to attend to certain cues for monitoring and not to insufficient understanding. An alternative effect of too high cognitive load could be that learners disengage from monitoring the respective cue, which should also decrease self-assessment accuracy but not compromise problem-solving performance. A third potential consequence of high cognitive load could be that learners infer that they are not learning well. There is evidence that learners infer self-assessments from perceived mental effort such that self-assessments decrease as subjective mental effort increases (see Baars et al., 2020). If learners lowered their self-assessments because of high experienced mental effort and their problem-solving performance were compromised by the high perceived mental effort as well, informing learners about the to-be-monitored cues before problem-solving could increase self-assessment accuracy, but for unintended reasons. In investigating effects of the point in time at which learners are informed about the to-be-monitored cues, it is hence crucial to also analyze effects on cognitive load (i.e., mental effort) and problem-solving performance and relations between cognitive load and self-assessments in order to test for such unintended mechanisms (see also the Effort Monitoring and Regulation (EMR) Framework by de Bruin et al., 2020).

Experiment 1

In Experiment 1, we addressed two main research questions with respect to understanding and optimizing self-assessment accuracy in cognitive skill acquisition by the approach of learning from worked examples.

- Research Question 1: Does self-assessment accuracy at the end of the initial problem-solving phase (i.e., Stage 3), where learners need to be aware whether they have already understood the rationale behind the solution procedure, depend on whether learners were prompted to infer their self-assessments from explanation-based cues or from performance-based cues?

- Research Question 2: Does informing learners about the type of to-be-monitored cues already before the problem-solving phase increase self-assessment accuracy as compared to informing learners only after the problem-solving phase?

We also addressed the outlined potential unintended effects of informing learners about the to-be-monitored cue already before the problem-solving phase. Specifically, in Research Question 3, we pursued the following questions:

- Research Question 3: Does informing learners about the type of to-be-monitored cues already before the problem-solving phase affect mental effort and performance in the problem-solving phase? Do learners’ mental effort ratings and learners’ self-assessments correlate? Do these potential correlations differ between learners with and without up-front information about the to-be-monitored cue?

Method

Sample and Design

As we were interested in effects relevant for educational practice, we used the lower limit of a medium effect size (ηp² = 0.06; further parameters: α = 0.05, β = 0.20) as the basis for our a priori power analysis. This power analysis, which was conducted with G*Power 3.1.9.3 (Faul et al., 2007), was fitted for a 2 × 2 between-subjects ANOVA and yielded a required sample size of N = 128. Hence, we recruited N = 135 university students from different universities in Germany (73.3% female; M_age = 26.37 years, SD_age = 7.25 years) majoring in various disciplines. We excluded one participant who reported being diagnosed with dyscalculia and five participants who achieved a score higher than 90% on the pretest. Our final sample comprised 129 university students (73.6% female; M_age = 26.01 years, SD_age = 6.66 years).

All learners were instructed on the cognitive skill of solving mathematical urn model problems, which relate to the calculation of probabilities of combinations of random events (e.g., events in repeated dice rolling), by means of learning from worked examples. Hence, all learners first received basic instructional explanations about stochastic principles (Stage 1) and were then given four worked examples together with self-explanation prompts (Stage 2). In the third stage, all learners were to solve four mathematical urn model problems (Stage 3) and then self-assess how well they understood the solution rationales. The experimental manipulation took place during this initial problem-solving phase. Specifically, we varied whether the learners were prompted to infer their self-assessments from performance-based cues or from explanation-based cues (i.e., factor of prompted cue type). Furthermore, we varied whether learners were or were not informed about the to-be-monitored cue at the beginning of the problem-solving phase (i.e., factor of timing of cue prompt). Hence, the experiment followed a 2 × 2 factorial between-subject design. The participants were randomly assigned to the conditions.

The participation was compensated with online vouchers worth 15€. The experiment was approved by the ethics committee of Ruhr University Bochum (No. EPE-2022–027). We collected written informed consent from all participants.

Materials

Stages 1 and 2: Introductory Instructional Explanations and Worked Examples

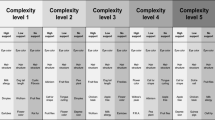

In the first stage of the example-based learning procedure, all participants were asked to carefully read basic instructional explanations that covered stochastic principles concerning mathematical urn models. Specifically, we used a slightly adapted version of the instructional explanations used by Berthold and Renkl (2009) and Schalk et al. (2020). The instructional explanations introduced the concepts of probability of (a) an event in a single-stage experiment, (b) a sequence of events in multistage experiments, and (c) multiple sequences of events in multistage experiments. Furthermore, the instructional explanations covered four stochastic principles capturing situations in which the order of an event is relevant or irrelevant and in which events are removed for the next experiment and are then either returned or kept outside of it (i.e., with/without replacement). At the end of this introduction, the participants were shown a table in which the four stochastic principles were listed and illustrated (see Fig. 2).

After reading the basic instructional explanations, that is, in the second stage, the participants were provided with four worked examples in which the four stochastic principles were embedded in different contexts. Each worked example contained a question, a colored diagram that illustrated the calculation path, and a mathematical calculation including the solution and answer. Self-explanation prompts accompanied the worked examples in order to scaffold deep processing (Chi & Bassok, 1989; Renkl, 2014; see Fig. 3 for a worked example illustrating the principle “order relevant/without replacement” and the self-explanation prompt). The worked examples were shown to all participants in the same order.

Stage 3: Initial Problem-Solving Phase

In the third stage of the example-based learning procedure, the learners turned to problem-solving. Specifically, all participants were provided with four problems to be solved. Each problem was provided on an individual page and learners had access to a tool for calculations and a box in which they could write notes. Each problem dealt with one of the four stochastic principles studied beforehand (see Fig. 4). The problems were shown to all participants in the same order.

The experimental manipulations took place during this initial problem-solving phase. After having worked on all four problems, all participants were required to self-assess their level of understanding of the rationales of the four problems’ solutions. In view of the notion that the required level of understanding at the end of the initial problem-solving phase relates to both understanding of the abstract principles and understanding of how to solve concrete problems, learners were posed two questions. First, all learners were asked “how well did you understand the four types of combinations of random events?” (i.e., the four stochastic principles) with the answer scale ranging from 0% (not at all) to 100% (very good). Second, all learners were asked “how well can you solve problems about the four types of random-event combinations?” with the answer scale ranging from 0% (not at all) to 100% (very good). Depending on the prompted cue type, learners were asked to infer their self-assessments from “how well you were able to solve the four problems you just completed” (i.e., performance-based cues) or from “how well you could explain how the four problems you just completed are solved” (i.e., explanation-based cues).

The factor timing of cue prompt related to the instruction that was provided at the beginning of the problem-solving phase. The learners in the conditions who were informed about the prompted cue type already at the beginning of the problem-solving phase received the following instruction: “In the following, you are to solve four problems on the four types of stochastic principles on your own. In doing so, please pay attention to how well you can solve the problems” (i.e., performance-based cue condition), or “[…] how well you can explain how the problems are solved” (i.e., explanation-based cue condition). “You will need this information afterwards.” By contrast, the learners in the conditions who were informed about the prompted cue type only after problem-solving were simply told that “in the following, you are to solve four problems on the four types of stochastic principles on your own.”

Instruments and Measures

Pretest

To assess the participants’ prior knowledge on stochastics, we used a pretest containing six questions. Two of these questions were multiple-choice-single-select questions and four required an open answer. The open answers were scored by two independent raters applying a scoring protocol. Interrater reliability as determined by the intraclass correlation coefficient with measures of absolute agreement was very good, ICC > 0.85. The pretest score was obtained by dividing the number of points achieved by the theoretical maximum number of points. These scores were then converted into percentage values (i.e., 0–100%). Internal consistency was acceptable, ω = 0.72.

Performance in the Initial Problem-Solving Phase

The learners’ solutions to the four problems in the initial problem-solving phase (Stage 3) were scored by two independent raters using a scoring protocol. Interrater reliability as determined by the intraclass correlation coefficient with measures of absolute agreement was very good (ICC > 0.85).

Self-Assessments

In learning from worked examples, the required level of understanding at the end of the initial problem-solving phase relates to both understanding of the abstract principles and to understanding of how to solve concrete problems (see Renkl, 2014). Accordingly, we asked learners to self-assess how well they thought they understood the four types of combinations of random events and how well they thought they could solve problems about the four types of random-event combinations (0% = not at all to 100% = very good). These two self-assessments correlated strongly (r = 0.87, p < 0.001). Hence, we aggregated them to determine the self-assessed level of understanding (0–100%).

Posttest

To assess the participants’ level of actual understanding concerning the four types of stochastics problems, we designed a posttest containing ten questions. Four of these questions were problem-solving tasks that were isomorphic to the problems provided in the initial problem-solving phase. Of the further six questions, two related to transfer problems that went beyond the problems provided in the initial problem-solving phase and four were designed to assess the learners’ level of understanding on an abstract level (e.g., “You roll a dice three times in a row and aim to roll two sixes and a single one in random order. How would you characterize this problem in terms of ‘order relevance’ and ‘replacement’?”).

All questions were scored by two independent raters applying a scoring protocol. Interrater reliability was very good for all tasks (all ICCs > 0.85). The final posttest scores were obtained by averaging the scores of all ten questions (i.e., 0–100%; ω = 0.83).

Self-Assessment Accuracy

We calculated absolute accuracy to determine the accuracy of learners’ self-assessments. Specifically, we used the formula proposed by Schraw (2009) and computed the squared deviation between learners’ self-assessments and their performance on the posttest. It is important to highlight that our original plan was to use the deviation between learners’ self-assessments and the overall posttest performance to determine absolute accuracy. A thoughtful comment of an anonymous reviewer, however, highlighted that as the learners’ self-assessments were explicitly related to the four types of problems (see above), the accuracy measure would only make sense when a posttest performance of 100% would reflect fully accurate performance on the four types of problems. For determining absolute accuracy, we hence used only the learners’ performance on the four problems that were isomorphic to the problems that were provided in the initial problem-solving phase. Scores closer to zero indicate better absolute accuracy (theoretical minimum, 0; theoretical maximum, 10,000).
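The computation described above can be illustrated with a minimal sketch. It assumes that the two self-assessment ratings are aggregated by taking their mean (the text only states that they were aggregated) and that both the self-assessment and the performance score are expressed on the 0–100% scale. The function names and the example values are hypothetical and serve illustration only.

```python
def aggregate_self_assessment(understanding: float, problem_solving: float) -> float:
    """Aggregate the two self-assessment ratings (0-100%).

    Assumption: aggregation is done by taking the mean of the two ratings.
    """
    return (understanding + problem_solving) / 2.0


def absolute_accuracy(self_assessment: float, performance: float) -> float:
    """Squared deviation between self-assessment and performance (both 0-100%).

    0 means a perfectly accurate self-assessment; 10,000 is the theoretical
    maximum (e.g., self-assessment of 100% with a performance of 0%).
    """
    for value in (self_assessment, performance):
        if not 0.0 <= value <= 100.0:
            raise ValueError("scores must lie between 0 and 100")
    return (self_assessment - performance) ** 2


# Hypothetical learner: ratings of 80% and 70%, 50% on the isomorphic posttest problems.
judgment = aggregate_self_assessment(80.0, 70.0)   # 75.0
print(absolute_accuracy(judgment, 50.0))           # 625.0
```

Because the deviation is squared, the measure does not distinguish overestimation from underestimation; it only captures how far the self-assessment is from the actual performance.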

Mental Effort

Subjective mental effort invested in the problem-solving phase was assessed by asking participants to judge the mental effort they invested in the problem-solving phase after each problem on a 9-point Likert scale (i.e., higher scores represent higher mental effort). The question’s wording was adapted from scales for assessing mental effort (see Paas, 1992; Paas et al., 2003). For the later analyses, the four ratings were averaged (ω = 0.95).

Procedure

This experiment was conducted in an online learning environment on https://www.unipark.com, which participants accessed from their own devices. First, participants gave general information on gender, age, grade point average in high school (HSGPA), and dyscalculia, and then worked on the pretest. Afterwards, they went through the example-based learning sequence in a self-paced manner. The experimental manipulation took place during the initial problem-solving phase (i.e., Stage 3 of the sequence). That is, learners in the conditions who were informed about the prompted cue type before problem-solving were informed about the to-be-monitored cue type at the beginning of the problem-solving phase and, when they were to self-assess their level of understanding at the end of the phase, we varied whether learners were prompted to infer their self-assessments from performance-based or from explanation-based cues. Finally, all participants took the posttest.

Results

The descriptive results are found in Table 1.

Preliminary Analyses

In the first step, we tested whether the random assignment resulted in comparable groups. Applying Bayesian ANOVAs with a JZS prior, we were able to find strong evidence for non-existing differences (null hypothesis) between the groups concerning gender distribution, BF01 = 16.66, and HSGPA, BF01 = 21.71. We found anecdotal evidence for non-existing differences regarding prior knowledge, BF01 = 2.52, and age, BF01 = 1.31. Overall, these results indicate that the random assignment resulted in comparable groups.

In view of the notion that differences in self-assessment accuracy can simply result from effects of support measures on performance (e.g., when a support measure fosters performance, overestimation of performance becomes less likely, which can increase self-assessment accuracy, see Froese & Roelle, 2023), we also analyzed whether the groups differed concerning performance on the posttest. Bayesian ANOVAs showed substantial evidence for non-existing differences regarding the overall posttest score, BF01 = 4.78, as well as regarding the performance on the four problems that were isomorphic to the problems that were provided in the initial problem-solving phase, BF01 = 8.11. Hence, the effects on self-assessment accuracy found in the present study (see below) cannot be attributed to effects of the support measures on posttest performance.

Effects on Self-Assessment Accuracy

We tested whether prompting learners to infer their self-assessments from explanation-based cues or performance-based cues led to differences concerning self-assessment accuracy (Research Question 1). We addressed this research question by determining the main effect of prompted cue type in a 2 × 2 ANOVA with the factors prompted cue type and timing of cue prompt. We found a statistically significant main effect of prompted cue type, F(1, 125) = 6.45, p = 0.012, ηp2 = 0.05. The groups that were prompted to utilize performance-based cues showed better self-assessment accuracy (i.e., values closer to zero).

In Research Question 2, we were interested in whether informing learners about the type of to-be-monitored cue already before the problem-solving phase would increase self-assessment accuracy as compared to informing learners only after the problem-solving phase. We addressed this research question by determining the main effect of timing of cue prompt. In support of our assumption, the 2 × 2 ANOVA revealed that the groups that were informed about the to-be-monitored cue already before the problem-solving phase showed better self-assessment accuracy, F(1, 125) = 4.09, p = 0.046, ηp2 = 0.03.

For exploratory purposes, we also analyzed whether there was an interaction between the two factors concerning self-assessment accuracy. The ANOVA, however, did not reveal a statistically significant interaction effect, F(1, 125) = 0.10, p = 0.758, ηp2 < 0.01.
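As a plausibility check on the effect sizes reported above, partial eta squared can be recovered from an F value and its degrees of freedom via the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The following sketch is an illustration and not part of the original analysis; the function name is hypothetical.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# F values reported for self-assessment accuracy in Experiment 1 (df = 1, 125).
print(round(partial_eta_squared(6.45, 1, 125), 2))  # prompted cue type: 0.05
print(round(partial_eta_squared(4.09, 1, 125), 2))  # timing of cue prompt: 0.03
print(partial_eta_squared(0.10, 1, 125) < 0.01)     # interaction: True
```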

Effects on Mental Effort and Performance During Problem-Solving

In Research Question 3, we tested whether the point in time when learners were informed about the to-be-monitored cue would affect mental effort and performance in the problem-solving phase. Furthermore, we determined the correlations between mental effort and learners’ self-assessments, which might indicate that learners used cognitive load as a cue in self-assessments, and whether these correlations would differ between learners with and without up-front information about the to-be-monitored cue. We addressed the first part of this research question by determining the main effect of timing of cue prompt in a 2 × 2 ANOVA with the factors prompted cue type and timing of cue prompt. In terms of mental effort, the ANOVA did not reveal a statistically significant main effect of timing of cue prompt, F(1, 125) = 0.96, p = 0.330, ηp2 < 0.01. We also examined the main effect of prompted cue type and the interaction between the two factors. However, neither of these effects was statistically significant, F(1, 125) = 0.01, p = 0.906, ηp2 < 0.01, and F(1, 125) < 0.01, p = 0.958, ηp2 < 0.01, respectively.

In terms of problem-solving performance, we found a similar pattern of results. There was no main effect of timing of cue prompt, F(1, 125) = 1.03, p = 0.311, ηp2 < 0.01, and also no main effect of prompted cue type and no interaction effect, F(1, 125) < 0.01, p = 0.975, ηp2 < 0.01, and F(1, 125) = 0.56, p = 0.457, ηp2 < 0.01.

To address the second part of Research Question 3, we determined the correlations between mental effort and self-assessments for the learners with and without information on the cue at the beginning of the problem-solving phase. For both learners with and without cue information before problem-solving, there was a statistically significant negative correlation between mental effort and self-assessments, r = −0.29, p = 0.012, and r = −0.27, p = 0.048, respectively. A Fisher’s z-test did not indicate a statistically significant difference between these correlations, z = 0.17, p = 0.431. This finding suggests that informing learners about the to-be-monitored cue in advance did not affect the degree to which learners inferred their self-assessments from the unspecific cue of cognitive load during problem-solving.
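For readers unfamiliar with the comparison of two independent correlations, the following sketch shows how a Fisher’s z-test is commonly computed. The group sizes are not reported in this section, so the values of n used in the example are hypothetical placeholders and the output is not meant to reproduce the reported statistics. The reported p = 0.431 appears consistent with a one-tailed test, which is why the sketch returns both a one-tailed and a two-tailed p value.

```python
import math
from statistics import NormalDist


def fisher_z_test(r1: float, n1: int, r2: float, n2: int):
    """Compare two independent Pearson correlations via Fisher's r-to-z transformation.

    Returns the z statistic, the one-tailed p value, and the two-tailed p value.
    """
    z1, z2 = math.atanh(r1), math.atanh(r2)           # Fisher transformation
    se = math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))   # standard error of z1 - z2
    z = (z1 - z2) / se
    p_one_tailed = 1.0 - NormalDist().cdf(abs(z))
    return z, p_one_tailed, 2.0 * p_one_tailed


# Reported correlations; the group sizes (65 each) are hypothetical placeholders.
z, p_one, p_two = fisher_z_test(-0.29, 65, -0.27, 65)
print(f"z = {z:.2f}, one-tailed p = {p_one:.3f}, two-tailed p = {p_two:.3f}")
```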

Discussion

With regard to Research Question 1, we found that prompting learners to infer their self-assessments from performance-based cues fostered absolute accuracy (i.e., resulted in more accurate self-assessments) in comparison to prompting learners to infer their self-assessments from explanation-based cues. One explanation for this finding is as follows. Potentially, the learners who were prompted to utilize performance-based cues rarely solved the problems correctly by accident, that is, by means of shallow strategies without understanding the solution rationale. Hence, problem-solving performance was a highly diagnostic cue. Moreover, utilizing the explanation-based cue might have been difficult. Specifically, unlike the learners who were prompted to monitor their problem-solving performance, which is highly salient during problem-solving and probably familiar to learners, the learners who were prompted to monitor their ability to explain the solution procedures during problem-solving potentially did not know what exactly they were supposed to monitor or what type of explanation would be of high quality. Hence, the learners may not have known how to utilize the explanation-based cue.

In terms of Research Question 2, we found that the learners who were informed about the cue they were to monitor already before the problem-solving phase showed better self-assessment accuracy than their counterparts. One explanation for this finding is that when learners were informed about the to-be-monitored cue in advance, they were attending to the cue already during problem-solving, which was less error-prone than having to remember or reconstruct the cue only after having finished the problem-solving phase. The finding that informing learners about the to-be-monitored cue already before the problem-solving phase fostered self-assessment accuracy furthermore indicates that the instruction to attend to performance-based or explanation-based cues during problem-solving did not overload learners. In line with this assumption, we did not find a significant effect of the timing of the cue prompt concerning mental effort or performance in the problem-solving phase (Research Question 3). Also, the timing of the cue prompt did not affect the correlation between mental effort and self-assessments. Given that the level of mental effort was generally relatively low (i.e., M = 5.37 on a 9-point Likert scale), this pattern of results suggests that the learners were not overwhelmed by the requirement to monitor certain cues during problem-solving, even when the learners were informed in advance about the to-be-monitored cue.

Experiment 2

The main purpose of Experiment 2 was to understand why prompting learners to infer their self-assessments from explanation-based cues results in lower self-assessment accuracy than prompting learners to infer their self-assessments from performance-based cues, as well as to investigate how learners could be supported to exploit the potential of explanation-based cues in learning from worked examples. As mentioned above, one explanation for the inferiority of explanation-based cues in Experiment 1 could be that learners lack knowledge about criteria for good or poor explanations of problem-solving procedures. In Experiment 2, we varied whether learners were provided with information that clarifies criteria for good or poor explanations or good or poor problem-solving performance, respectively (i.e., factor of cue criteria instruction), as suggested by research on rubrics that transparently communicate assessment criteria to learners (for a recent overview, see Panadero et al., 2023; see also Panadero et al., 2024).

However, the beneficial effects of communicating assessment criteria to learners reported in rubrics research were all found in settings in which learners were explicitly engaged in the task or activity whose quality they were to assess with the help of rubrics. In the initial problem-solving phase of example-based learning, by contrast, it is reasonable to assume that learners hardly engage in explaining. Hence, learners may not have engaged, or may have engaged only scarcely, in the activity whose quality they should assess with the help of rubrics. The guidance offered by rubrics, which communicate criteria for good or poor explanations, could thus be insufficient for improving the effects of explanation-based cues on self-assessment accuracy. Rather, to exploit the potential of explanation-based cues, learners might additionally need to be engaged in explanation activities during problem-solving. In Experiment 2, we hence also varied whether learners received self-explanation prompts in the problem-solving phase. Against this background, we addressed the following research questions in Experiment 2.

Research Question 1: Does the effect of the prompted cue type (performance-based vs. explanation-based) on self-assessment accuracy depend on whether learners are provided with criteria that help them interpret the cues and engaged in self-explanation activities during problem-solving?

In particular, we were interested in whether learners who are prompted to infer their self-assessments from explanation-based cues would exploit the potential of these cues and hence catch up with or even outperform learners who are prompted to infer their self-assessments from performance-based cues only when they are both informed about criteria and engaged in self-explanation activities during problem-solving. Based on the findings of Experiment 1, we assumed that learners who are prompted to infer their self-assessments from performance-based cues would already be familiar with these cues and hence scarcely benefit from being informed about criteria for good problem-solving performance; they might even be hindered by being engaged in self-explanation activities, because these could distract them from monitoring their performance. We hence expected a three-way interaction between type of cue prompt, cue criteria instruction, and explanation prompts during problem-solving.

Like in Experiment 1, we were furthermore interested in whether the support measures that were implemented before or during the problem-solving phase affect mental effort and performance in the problem-solving phase.

Research Question 2: Does cue criteria instruction affect mental effort and performance in the problem-solving phase?

Research Question 3: Do self-explanation prompts affect mental effort and performance in the problem-solving phase?

Method

Sample and Design

The effect of prompted cue type was of small-to-medium size in Experiment 1 (i.e., ηp2 = 0.05). A power analysis using this effect size, an α-level of 0.05, and a β-level of 0.20 yielded a required sample size of N = 152. This power analysis was fitted for detecting main effects of the above-mentioned size in a 2 × 2 × 2 ANOVA, which corresponded with the design of Experiment 2 (see below). To increase statistical power, we decided to oversample and recruited N = 268 German university students (72.4% female, 0.7% diverse; MAge = 23.93 years, SDAge = 4.03 years) majoring in various disciplines. We excluded one participant who reported having dyscalculia and 15 participants who achieved a score higher than 90% in the pretest. Our final sample comprised 252 university students (72.6% female; MAge = 23.90 years, SDAge = 3.94 years). With this sample size, a power of 95% (i.e., β-level of 0.05) could be established in Experiment 2.
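The logic of this power analysis can be approximated with a short sketch. It is not the procedure used by the authors: it converts ηp2 into Cohen’s f2 = ηp2 / (1 − ηp2), assumes the common convention that the noncentrality parameter equals f2 × N, and replaces the exact noncentral F distribution with a normal approximation that is only reasonable for effects with one numerator degree of freedom and large error degrees of freedom. All function names are hypothetical.

```python
import math
from statistics import NormalDist


def cohens_f_squared(partial_eta_squared: float) -> float:
    """Convert partial eta squared into Cohen's f squared."""
    return partial_eta_squared / (1.0 - partial_eta_squared)


def approx_power_one_df(partial_eta_squared: float, n_total: int, alpha: float = 0.05) -> float:
    """Approximate power for an ANOVA effect with one numerator degree of freedom.

    Assumptions: noncentrality = f^2 * N, and a normal approximation that
    ignores the finite error degrees of freedom (so power is slightly overstated).
    """
    norm = NormalDist()
    delta = math.sqrt(cohens_f_squared(partial_eta_squared) * n_total)
    z_crit = norm.inv_cdf(1.0 - alpha / 2.0)
    return norm.cdf(delta - z_crit) + norm.cdf(-delta - z_crit)


for n in (152, 252):
    print(n, round(approx_power_one_df(0.05, n), 2))
```

Under these assumptions, the approximate power comes out close to the 80% targeted for N = 152 and close to the 95% reported for the final sample of N = 252; exact values would require the noncentral F distribution.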

As in Experiment 1, all learners were instructed on the cognitive skill of solving mathematical urn model problems. Again, the experimental manipulations took place during the initial problem-solving phase (i.e., Stage 3). As in Experiment 1, we varied whether the learners were prompted to infer their self-assessments from performance-based cues or from explanation-based cues (i.e., factor of prompted cue type). Unlike in Experiment 1, all learners were informed about the to-be-monitored cue already at the beginning of the problem-solving phase. We also varied (1) whether at the beginning of the problem-solving phase learners received instruction on criteria that should be met for a cue to indicate high quality (i.e., factor of cue criteria instruction) and (2) whether learners were prompted to explain their solutions while problem-solving (i.e., factor of self-explanation prompts). Hence, the experiment followed a 2 × 2 × 2 factorial between-subjects design. Participants were randomly assigned to the conditions.

Written informed consent was obtained from all participants, and their voluntary participation was compensated with 20 Euros. The experiment was approved by the ethics committee of Ruhr University Bochum (No. EPE-2022–027).

Materials

Stage 1 and 2: Introductory Instructional Explanations and Worked Examples

The introductory instructional explanations and worked examples were the same as in Experiment 1.

Stage 3: Initial Problem-Solving Phase

All participants were provided with the same four stochastic problems as in Experiment 1. The experimental manipulations took place during this initial problem-solving phase. Once the participants had worked on all four problems, they were required to self-assess their level of understanding of the rationales of the problems’ solutions with the same two questions as in Experiment 1. Dependent on the factor of prompted cue type, learners were asked to infer their self-assessments from “how well you were able to solve the four problems you just completed” (i.e., performance-based cue) or from “how well you could explain how the four problems you just completed are solved” (i.e., explanation-based cue). All participants were informed about the to-be-monitored cue at the beginning of the initial problem-solving phase.

The learners who were provided with further support on how to utilize the prompted cue received instruction on criteria of high-quality explanations/performance (i.e., cue criteria instruction). Specifically, the participants who were prompted to infer their self-assessments from performance-based cues received the following cue criteria instruction: “A complete and therefore very good solution means that you are able to successfully perform all necessary steps to solve the specific problem. A very poor solution, on the other hand, means that you are unable to perform any of the steps needed to solve the specific problem.” By contrast, the learners who were prompted to infer their self-assessments from explanation-based cues received the following cue criteria instruction: “A very good explanation means that you are able to explain why the specific task could be characterized as relevant/irrelevant and with/without replacement. Furthermore, a very good explanation shows that you are able to explain for which aspects of the solution approach, the type of stochastic principles plays a role and for what reason. Furthermore, a crucial aspect of a good explanation is the ability to clearly identify and articulate the specific areas within the solution approach where stochastic principles apply and the underlying reasons for their significance. A very poor explanation, on the other hand, would mean that you are unable to explain these aspects.”

The participants who were prompted to engage in self-explaining while problem-solving received a self-explanation prompt in conjunction with each of the four to-be-solved problems. The prompts required learners to explain “In which aspects of the solution approach does the task type play a central role, and for what reasons?” The learners were to write their responses to the self-explanation prompts in a text box.

Instruments and Measures

Pretest

To assess the participants’ prior knowledge on stochastics, we posed the same pretest questions and applied the same scoring protocol as in Experiment 1. Interrater reliability was very good (ICC > 0.85) and internal consistency was acceptable, ω = 0.67.

Performance in the Initial Problem-Solving Phase

The problem-solving performance was scored via the same scoring protocol as in Experiment 1. Interrater reliability was very good (all ICCs > 0.85).

Posttest

To assess the participants’ level of understanding concerning the four types of stochastics problems, we used the same posttest and scoring protocol as in Experiment 1. Interrater reliability was very good (all ICCs > 0.85). Internal consistency was very good as well, ω = 0.85.

Self-Assessments and Self-Assessment Accuracy

Self-assessments of learners’ level of understanding were assessed by the same two questions as in Experiment 1, which again correlated strongly (r = 0.86, p < 0.001) and were thus aggregated. Absolute accuracy was determined the same way as in Experiment 1.

Mental Effort

As in Experiment 1, we asked participants to judge their subjectively invested mental effort while problem-solving after each problem on a 9-point Likert scale (i.e., higher scores represent higher mental effort) and averaged the ratings for further analyses (ω = 0.95).

Procedure

Like Experiment 1, Experiment 2 was conducted in an online learning environment on https://www.unipark.com, which participants accessed from their own devices. Until learners entered the initial problem-solving phase, the procedure was identical to that of Experiment 1.

The experimental manipulations took place during the initial problem-solving phase (i.e., Stage 3 of the sequence). Here, the participants were prompted to focus on either performance-based cues or explanation-based cues and were or were not provided with cue criteria instruction. In the conditions with self-explanation prompts, participants were asked to generate self-explanations while problem-solving. Participants could work on each problem as long as they wanted but could not return to a problem once they had clicked a button to proceed. Participants answered the question on mental effort after each problem. They then self-assessed their level of understanding, whereby we varied whether learners were prompted to infer their self-assessments from performance-based or explanation-based cues. Finally, all participants took the posttest.

Results

The descriptive results are found in Table 2.

Preliminary Analyses

Applying Bayesian ANOVAs, we found strong evidence for non-existing differences (null hypothesis) between the groups with regard to gender distribution, BF01 = 29.72, HSGPA, BF01 = 13.30, and prior knowledge, BF01 = 39.77. We observed moderate evidence for non-existing differences with regard to age, BF01 = 6.43. Overall, these results indicate that the random assignment resulted in comparable groups.

As in Experiment 1, we also tested whether the groups differed in their posttest performance. Bayesian ANOVAs showed substantial evidence for non-existing differences regarding the overall posttest score, BF01 = 12.57, as well as regarding learner performance on the posttest tasks that were isomorphic to the tasks in the initial problem-solving phase, BF01 = 5950.11. Hence, the effects on self-assessment accuracy found in the present study (see below) cannot be attributed to effects of the support measures on posttest performance.

Effects on Self-Assessment Accuracy

To address Research Question 1, we ran a 2 × 2 × 2 ANOVA with the factors prompted cue type, cue criteria instruction, and self-explanation prompts. Most importantly, there was a statistically significant three-way interaction, F(1, 244) = 7.41, p = 0.007, ηp2 = 0.03 (all other effects of the ANOVA were not statistically significant, all p > 0.076). To explore the interaction pattern, in the first step, we analyzed the effects of prompted cue type and cue criteria instruction separately for the learners with and without self-explanation prompts.

For the learners without self-explanation prompts, a 2 × 2 ANOVA revealed a statistically significant main effect of prompted cue type, F(1, 118) = 4.01, p = 0.048, ηp2 = 0.03. The learners who were prompted to use performance-based cues showed better absolute accuracy (i.e., values closer to zero). There was no statistically significant main effect of cue criteria instruction and also no statistically significant interaction, F(1, 118) = 0.14, p = 0.705, ηp2 < 0.01, and F(1, 118) = 1.13, p = 0.291, ηp2 = 0.01. For the learners with self-explanation prompts, by contrast, a 2 × 2 ANOVA did not yield a statistically significant main effect for prompted cue type, F(1, 126) = 0.17, p = 0.678, ηp2 < 0.01. There was also no statistically significant main effect for cue criteria instruction, F(1, 126) = 0.31, p = 0.579, ηp2 < 0.01. However, there was a statistically significant interaction effect, F(1, 126) = 8.37, p = 0.005, ηp2 = 0.06 (for an illustration of the pattern of results, see Fig. 5).

In the next step, we explored the interaction effect between prompted cue type and cue criteria instruction for the learners with self-explanation prompts. We found that for the learners without cue instruction, performance-based cues yielded better absolute accuracy than explanation-based cues (p = 0.028, ηp2 = 0.08), whereas for the learners with cue instruction, explanation-based cues yielded better accuracy than performance-based cues (p = 0.044, ηp2 = 0.06).

Effects on Mental Effort and Performance During Problem-Solving

In Research Questions 2 and 3, we were interested in whether cue criteria instruction and self-explanation prompts would affect mental effort and performance in the initial problem-solving phase. We addressed these research questions by determining the main effects of these factors in a 2 × 2 × 2 ANOVA with the factors prompted cue type, cue criteria instruction, and self-explanation prompts. In terms of mental effort, the ANOVA revealed neither a statistically significant main effect of cue criteria instruction, F(1, 244) = 0.82, p = 0.368, ηp2 < 0.01, nor a statistically significant main effect of self-explanation prompts, F(1, 244) = 1.94, p = 0.165, ηp2 = 0.01. The only statistically significant effect yielded by this ANOVA was an interaction between cue criteria instruction and self-explanation prompts, F(1, 244) = 4.70, p = 0.031, ηp2 = 0.02. Self-explanation prompts increased mental effort for learners without cue criteria instruction (p = 0.016, ηp2 = 0.05) but did not significantly affect mental effort for learners with cue criteria instruction (p = 0.572, ηp2 < 0.01).

In terms of problem-solving performance, the ANOVA revealed neither a statistically significant main effect of cue criteria instruction, F(1, 244) = 1.92, p = 0.167, ηp2 = 0.01, nor a statistically significant main effect of self-explanation prompts, F(1, 244) = 2.90, p = 0.090, ηp2 = 0.01. In addition, none of the interaction effects was statistically significant, all p > 0.064.

Discussion

In terms of Research Question 1, we found that only when learners were provided with criteria that helped them to interpret the to-be-monitored cues and were engaged in self-explaining during problem-solving did the learners who were prompted to infer their self-assessments from explanation-based cues outperform their counterparts who were prompted to infer their self-assessments from performance-based cues in terms of self-assessment accuracy. When the learners who were prompted to infer their self-assessments from explanation-based cues received none or only one of these support measures, as in Experiment 1, prompting learners to use performance-based cues yielded higher self-assessment accuracy. One explanation for this pattern of results is that learners lack knowledge on how to interpret explanation-based cues and that learners scarcely engage in explaining during problem-solving of their own accord. Consequently, the learners who were prompted to monitor the highly salient cue of problem-solving performance, which likely was familiar to learners and hence easy to interpret, showed better accuracy when learners did not receive the supplementary support measures of cue criteria instruction and self-explanation prompts. Notably, even when the explanation-based learners received both support measures, their superiority over the performance-based learners in terms of self-assessment accuracy at least in part resulted from the fact that the self-explanation prompts appeared to hinder the learners who were prompted to monitor performance-based cues (see Fig. 5). Furthermore, two of the groups who were prompted to infer their self-assessments from performance-based cues, at least on a descriptive level, still showed lower absolute accuracy scores (i.e., better self-assessment accuracy) than the best-supported explanation-based group (see Table 2). Jointly, these results provide insight into how learners can be supported to exploit the potential of explanation-based cues in learning from worked examples and show that focusing learners on performance-based cues might be most efficient and effective.

General Discussion

The present study’s main goal was to investigate how self-assessment accuracy in the initial problem-solving phase of cognitive skill acquisition through learning from worked examples can be optimized. In particular, we were interested (a) in whether self-assessment accuracy would depend on whether learners were prompted to infer their self-assessments from explanation-based or performance-based cues and (b) in whether informing learners about the cue already before the problem-solving phase would matter. In view of Experiment 1’s results, we were furthermore interested (c) in whether learners can be supported in exploiting the potential of explanation-based cues through instruction on criteria for interpreting the cue and through prompting them to engage in self-explaining during problem-solving.

At least when learners did not receive further instructional support measures that helped them to monitor and utilize the cues, we found evidence in both experiments that prompting learners to infer their self-assessments from performance-based cues yields better self-assessment accuracy at the end of the initial problem-solving phase of learning from worked examples. From a theoretical view, we assumed that an advantage of performance-based cues would be that they are highly salient and that learners are familiar with them, and that a disadvantage could be that learners could in some cases solve problems correctly although they did not understand the solution rationale (i.e., based on shallow problem-solving strategies), which would result in low diagnosticity. In terms of explanation-based cues, we assumed that they could be advantageous because self-explanation quality has been found to be a reliable predictor of learning outcomes in example-based learning and that a disadvantage could be that learners would believe that shallow explanations (e.g., explanations of shallow problem-solving strategies) could suffice. Across both experiments, we found clear evidence that prompting learners to infer their self-assessments from performance-based cues has the greatest net benefit regarding self-assessment accuracy. Furthermore, in Experiment 2, we found that the inferiority of explanation-based cues mainly stems from the facts that learners do not know when an explanation is of poor or good quality and that learners scarcely engage in explaining during problem-solving. Accordingly, the rubric-like information on when an explanation is of high quality alone did not increase self-assessment accuracy. It is important to highlight, however, that this finding does not contradict the widespread finding that rubrics are helpful in student self-assessment (e.g., Krebs et al., 2022; Panadero et al., 2023). Rather, it highlights that when learners have not engaged, or have only scarcely engaged, in an activity or task, informing them about assessment criteria for the respective activity or task scarcely affects self-assessment accuracy. Importantly, our findings also highlight that engaging learners in an activity that is not aligned with the cues that are to be monitored detrimentally affects self-assessment accuracy. Evidently, at least when they also received cue criteria instruction, the learners who were to monitor performance-based cues were even hindered by the requirement to engage in self-explanation activities during problem-solving.

Jointly, these results indicate that, depending on the presence of further support measures, prompting learners to infer their self-assessments from performance-based cues and prompting learners to infer their self-assessments from explanation-based cues can result in similar levels of self-assessment accuracy. However, in terms of efficiency, focusing learners on performance-based cues seems to be preferable. At least university students can readily utilize performance-based cues in forming self-assessments. Explanation-based cues, by contrast, require at least some further instructional support to result in similar levels of self-assessment accuracy.

The original motivation of the present study was to optimize self-assessment accuracy in learning from worked examples. Specifically, based on findings by Foster and colleagues (Foster et al., 2018), which suggested that learners spontaneously use their initial problem-solving performance to diagnose their level of understanding in the initial problem-solving phase of learning from worked examples and that learners decide to engage in further problem-solving (i.e., proceeding to Stage 4) too often, we intended to investigate whether prompting learners to infer their self-assessments from explanation-based cues could be a fruitful remedy. In view of the findings of our two experiments, we can clearly state that explanation-based cues are not the cure we had hoped for. By contrast, informing learners about the to-be-monitored cue already before the problem-solving phase did function in the expected manner. Although the effect was only of small-to-medium size, we can hence recommend that, at least when it comes to utilizing performance-based or explanation-based cues, learners be informed that they need to monitor the cue already before they engage in problem-solving. An obvious explanation for this effect is that when learners are informed about the to-be-monitored cues already before problem-solving, they need to reconstruct the cue afterwards not at all or only to a lesser degree, which might make their self-assessments less error-prone. As it does not seem to affect mental effort during problem-solving and thus does not increase the risk of cognitive overload, up-front information concerning to-be-monitored cues hence beneficially affects self-assessment accuracy in learning from worked examples.

Relating the Present Findings to Effort Monitoring and Self-Regulation

This article is part of a special issue on cognitive load and self-regulation, with a special eye on the research questions outlined in the EMR framework by de Bruin et al. (2020). We discuss the contributions of the present findings to these research questions.

A basic tenet of the EMR framework by de Bruin et al. (2020) is that mental effort is a cue that is frequently used by learners in forming self-assessments and that there is typically a negative relation between mental effort and judgments of learning. This assumption was supported by the meta-analysis of Baars et al. (2020), which was inspired by this framework. We have found negative relations between mental effort and self-assessments (Exp. 1), which is in line with the assumption of de Bruin et al. (2020) that mental effort plays a role in forming self-assessments.

De Bruin et al. (2020) also stated that in text learning, students might better refer to their ability to explain the text rather than judging their reading fluency when monitoring their learning (see RQ1 by de Bruin et al., 2020). We tested in our experiments whether an analogous assumption can also be made in the case of example-based skill acquisition: It might be better in terms of self-assessment accuracy to use the ability to explain problem solutions than to refer to the ability (or fluency) to solve problems. However, we could not support this assumption, as performance-based cues were overall superior. A tentative conclusion relevant to the EMR framework is that which types of cues are especially useful for accurate self-assessment might depend on the learning method and/or the type of learning goals (declarative knowledge by learning from text; cognitive skill acquisition by example-based learning).

De Bruin et al. (2020) claimed that research should investigate which design aspects influence students’ monitoring and self-assessment (see RQ1 by de Bruin et al., 2020). Such findings are relevant in two respects: understanding context effects and informing about possible support procedures for accurate self-assessment. Our results are revealing in at least two respects in this regard. First, we found that it makes a difference for self-assessment accuracy whether or not the learners are provided with both instruction on cue interpretation and self-explanation prompts when using explanation-based cues. Such support is not a necessary condition for the productive use of performance-based cues; that is, the usefulness of performance-based cues does not depend on such design factors. An interesting general, though tentative, conclusion is that such context or design factors may not generally be relevant for cue use but that their relevance may depend on the specific type of cues to be used. Admittedly, further research has to test this claim. Second, a support factor that may be of general relevance for assessment accuracy is the timing of instruction on monitoring. We found that informing learners before a learning phase to use specific cues in monitoring their learning fostered self-assessment accuracy, irrespective of the type of cue to be used. A specific advantage of this metacognitive support procedure is that it is easy to implement. In addition, this procedure did not induce additional effort or cognitive load, which might have been a potential negative side effect. The latter finding is also relevant to de Bruin et al.’s (2020) question for further research of whether students should be made aware of issues of monitoring (see RQ3 by de Bruin et al., 2020). Our findings suggest “yes,” at least when it is done by the procedure used in our studies.

Limitations and Directions for Future Research

The present study has some limitations and raises important open questions to be addressed in future research. First, it is an open question whether the effects of utilizing explanation-based cues increase over time. As outlined above, our learners were probably very familiar with performance-based cues, but not with explanation-based cues. Although additional instructional support in the form of cue criteria instruction and self-explanation prompts enhanced the benefits of prompting learners to infer their self-assessments from explanation-based cues, those cues were likely still new for the learners. Once learners have familiarized themselves with explanation-based cues and are potentially given feedback on the accuracy of their self-assessments, the benefits of these cues might increase. This notion aligns with findings in the strategy training literature, which indicate that longer interventions are usually required to overcome a utilization deficiency (e.g., Endres et al., 2021; Nückles et al., 2020; see also Abel et al., 2024; Trentepohl et al., 2022), meaning that even strategies that are effective in principle need to be trained and partly automated before they show positive effects. Hence, the lack of superiority of explanation-based cues even in the best-supported group in Experiment 2 does not need to be the end of research on the benefits of explanation-based cues.

Another issue that could be addressed in future research concerns the fact that in the present study, the initial problem-solving phase followed immediately after the worked-examples phase and that the posttest followed immediately after the initial problem-solving phase. Hence, it is an open question whether the pattern of results between the performance-based and explanation-based cues would depend on the delay between the phases. For example, the explanation-based cues might result in overconfident self-assessments when the delay between the worked-examples phase and the initial problem-solving phase is very short, because at this time, learners likely remember very well the principles, concepts, and solution rationales that were previously explained. However, in view of the relatively high forgetting rates even after short delays (e.g., Karpicke, 2017; Rowland, 2014; see also Roelle et al., 2022, 2023), such high rates of correct retrieval from memory might not reflect learners’ actual level of understanding of the to-be-learned content. As these phases are likely frequently separated by one or even several days in authentic learning settings (e.g., in classroom lessons at high school, because learners need to engage in initial problem-solving for homework after some worked examples have been explained in the previous lesson), it would hence be fruitful to address the role of the delays between these phases for the effects found in the present study.

A third issue is how we formulated the self-assessment questions. They did not explicitly ask learners to predict how many of the given number of tasks they would be able to solve correctly. More concrete item formulations have been recommended by some researchers (e.g., Ackermann & Thompson, 2017) to help enable more valid self-assessments. In the present study, we admittedly do not know whether learners who said that they understood the solution rationales “very well” would expect to solve all problems correctly, which is why the absolute numbers of the self-assessment accuracy measure need to be interpreted cautiously. A further potential point of criticism with respect to how we measured the self-assessments is that we did not require learners to self-assess their level of understanding for each of the four types of problems separately. Consequently, we cannot rule out that, for instance, a student who assigned herself a score of 50% because she thought she understood the problems without replacement but not the problems with replacement actually correctly solved the problems with replacement but not the problems without replacement on the posttest. In this case, the student would be considered fully accurate in the present study although her self-assessments were actually inaccurate. A remedy for this potential validity threat would be to collect separate self-assessments for each type of problem in future research. Furthermore, like the above-mentioned limitation with respect to the formulation of the self-assessment items, this limitation casts doubt on the absolute numbers of the self-assessment measure. However, this limitation should be less relevant to the effects between groups we found in the present experiments. As it applied to all groups in a similar manner, this limitation cannot explain why some groups (e.g., the ones who were prompted to infer their self-assessments from performance-based cues) showed more accurate self-assessments in both experiments.
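The masking effect described in this limitation can be made concrete with a small numerical sketch. It assumes, purely for illustration, that accuracy is scored as the absolute deviation between a self-assessment and posttest performance (both in percent); this scoring, the function names, and the restriction to two of the four problem types are hypothetical simplifications and not necessarily the measure used in the experiments.

```python
# Hypothetical illustration: the scoring rule below is an assumption,
# not necessarily the accuracy measure used in the present experiments.

def absolute_deviation(self_assessment: float, performance: float) -> float:
    """Absolute difference between self-assessment and performance (in percent)."""
    return abs(self_assessment - performance)

def mean(values) -> float:
    """Arithmetic mean of an iterable of numbers."""
    values = list(values)
    return sum(values) / len(values)

# The learner believes she understood only the problems without replacement.
believed = {"without_replacement": 100.0, "with_replacement": 0.0}
# On the posttest, she solves only the problems with replacement.
actual = {"without_replacement": 0.0, "with_replacement": 100.0}

# One global self-assessment (50%) compared with overall performance (50%).
global_deviation = absolute_deviation(mean(believed.values()), mean(actual.values()))

# Separate self-assessments per problem type, averaged afterwards.
per_type_deviation = mean(
    absolute_deviation(believed[kind], actual[kind]) for kind in believed
)

print(global_deviation)    # 0.0: the global measure treats her as fully accurate
print(per_type_deviation)  # 100.0: per type, she is maximally inaccurate
```

Under these assumptions, the global score hides the mismatch entirely, whereas separate self-assessments per problem type would reveal it.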

The limitations concerning the self-assessment measure relate to another open question in the present study. As we did not implement the fourth stage of the worked-example approach of example-based learning, we do not know whether the improvement in self-assessment through the different cues and support measures would actually matter in the sense that it affects the effectiveness and efficiency of learners’ decision whether to return to studying a worked example or to begin intensive problem-solving. Future studies could hence implement the fourth stage as well as give learners the opportunity to flexibly switch between stages so as to explore cue instruction’s effects on both self-assessment and learning from worked examples.

Conclusion

The present study contributes the following main points to understanding and optimizing self-assessment accuracy in the initial problem-solving phase of cognitive skill acquisition through learning from worked examples. First, prompting learners to infer their self-assessments from performance-based cues and explanation-based cues can lead to similar levels of self-assessment accuracy. However, learners might be more readily prepared to utilize performance-based cues, which is why we consider prompting learners to utilize this cue more efficient. Second, both information on assessment criteria and actual engagement in self-explaining seem to be necessary to exploit the potential of explanation-based cues. It is an open question whether learners would become increasingly better at utilizing explanation-based cues over time; in this case, the effects on self-assessment accuracy would increase and the required support would decrease on future occasions. Future research on this topic could be very fruitful. Third, informing learners about the to-be-monitored cue already before the initial problem-solving phase is an effective and easy-to-implement means to enhance self-assessment accuracy in example-based learning. Hence, to reduce learners’ tendency to prematurely proceed to Stage 4 and thus engage in further problem-solving too early, learners should be informed about the to-be-monitored cues in advance, regardless of whether they are prompted to infer their self-assessments from performance-based or explanation-based cues.

Data Availability

Data will be made available on request.

References