Abstract

The retention of fundamental mathematical skills provides the foundation on which new skills are developed, yet educators often lament how little students retain. Cognitive scientists and educators have explored teaching methods that produce learning which endures over time. We wanted to know whether spaced recall quizzes would prevent our students from forgetting fundamental mathematical concepts at a post high school preparatory school that students attend for 1 year in preparation for entering the United States Military Academy (USMA). This approach was implemented in a Precalculus course to determine if it would improve students’ long-term retention. Our goal was to identify an effective classroom strategy that led to student recall of fundamental mathematical concepts through the end of the academic year. The concepts considered for long-term retention were 12 concepts identified by USMA’s mathematics department as being fundamental for entering students. These concepts are taught during quarter one of the Precalculus with Introduction to Calculus course at the United States Military Academy Preparatory School. It is expected that students will remember the concepts when they take the post-test 6 months later. Our research shows that spaced recall in the form of quizzing had a statistically significant impact on reducing the forgetting of the fundamental concepts while not adversely affecting performance on current instructional concepts. Additionally, these results persisted across multiple sections of the course taught at different times of the day by six instructors with varying teaching styles and years of teaching experience.

Introduction

It has long been established that memory declines over time (Ebbinghaus, 1964). Although this is a normal human condition, it is problematic in the field of education, particularly in disciplines where coursework requires students to possess knowledge accumulated from previous classes. When foundational concepts are forgotten, new learning can be stunted (Kamuche & Ledman, 2005; Taylor et al., 2017). This is particularly true in mathematics and can be seen in other courses of study that require a strong mathematical background (Pearson Jr & Miller, 2012). For this reason, we were interested in finding strategies that could be implemented in a mathematics course to help students retain and recall fundamental mathematical concepts. We were most interested in strategies that could be easily implemented and would not require a major overhaul of the current syllabus.

Based on the work of cognitive psychologists, Lang (2016) recommends several classroom strategies for improving instruction and learning that can be easily implemented within existing course structures. One of these is spending a small portion of each class asking students questions on material from any previous lesson. He considers this to be low-level interleaving and claims that asking students to regularly recall previous content, along with spaced learning, improves long-term retention.

The role that spaced learning plays in the durability of memory is also well known (Ebbinghaus, 1964). Since Ebbinghaus’ work in 1885, studies have continued to investigate the role of spaced learning on retention. It has been found that retrieval attempts are more beneficial when repeated in spaced-out sessions versus massed sessions (Cepeda et al., 2006). The benefits of quizzing as a method of asking students to regularly recall previous course content have also been studied. A meta-analysis by Rowland (2014) and a review of several laboratory and educational studies by Roediger III and Karpicke (2006a) both conclude that the benefits of quizzing outweigh the benefits of other study activities such as homework and re-reading. This phenomenon has been named the “testing effect.” There is a connection between effortful recall and memory: memory is made more durable when effort is needed to recall information. Re-reading material with the intent to increase retrieval takes little cognitive effort and is therefore less effective than retrieval activities such as quizzing (Brown et al., 2014).

However, to achieve long-term retention through quizzing it is important to space the quizzing effectively. Karpicke and Roediger III (2007) studied the effects of expanding retrieval intervals and equally spaced retrieval intervals and showed that what matters most is not whether the retrieval intervals are equally spaced or expanded but instead that the initial retrieval attempt is effortful. If, for example, a quiz on previous concepts is administered too soon after they were learned, effortful retrieval, the key to retention, is diminished, making the exercise less effective. Benjamin and Tullis (2010) identified that the benefit of spaced retrieval is maximized at a “sweet spot” where students must use effort to recall, but not so much effort that they are unable to remember. Cepeda et al. (2008) developed a model called the “retention surface” which plots student performance as a function of the study gap. The model identifies the retention interval that can be used to determine the optimal spacing interval between retrieval attempts for a desired retention interval.

Although studies that compared the effects on learning between open response (recall) versus multiple choice (recognition) quizzes found mixed results (Karpicke, 2017), we decided to use open response for our research. McDaniel et al. (2007) found that both multiple choice and open response yielded positive effects on retrieval over simply re-reading material. However, it was the open response questions that produced a greater positive effect on the retrieval of the material. No matter the mode, Roediger III et al. (2011) claim that testing leads to new retrieval routes, which increases the effort for retrieval. When the effort to retrieve information is increased, the desired difficulty needed for long-term retention has been achieved. The term “desired difficulty,” first identified by psychologists Elizabeth and Robert Bjork (Brown et al., 2014), is a key factor in establishing deeper connections so learning is more durable over time.

Some instructors feel that quizzing students too often or quizzing students when they do not have a solid grasp on the material may reinforce misunderstandings. Kornell et al. (2009) studied this concern and found that unsuccessful attempts in testing that were followed up by feedback produced a significant improvement in follow-on tests. They also found that the effort required to recall material for a test (even if the answer is wrong and feedback is given) produces deep processing, corrects the brain’s retrieval route for that information, and serves as a cue for recall in the future. Additionally, Benjamin and Tullis (2010) and Karpicke and Roediger III (2007) concluded in their research that providing feedback on the retrieval attempts helps to mitigate the encoding opportunity that is lost from an unsuccessful retrieval attempt.

The research mentioned here supports the potential for quizzing to improve students’ long-term retention. However, with the large number of factors that can influence education in classroom settings, it is not surprising that efforts to understand how quizzing influences long-term retention have been conducted in laboratory settings. In fact, of the research cited so far, the majority were conducted in laboratory settings, with only two studies being conducted in a classroom (McDaniel et al., 2007; Roediger III et al., 2011). This does not include the four reviews of existing literature (Benjamin & Tullis, 2010; Cepeda et al., 2006; Roediger III & Karpicke, 2006a; Rowland, 2014).

While the studies mentioned so far show the potential of spaced quizzing to positively impact student retention, what is needed is promising results with educationally relevant tasks in actual classroom environments. Some studies made a step in that direction by using educationally relevant tasks in a lab setting. The Roediger III and Karpicke (2006b) lab study asked students to read passages on scientific topics and then students restudied the passage or took a recall test. They found that testing, and not studying, improved retention. Additionally, Arnold and McDermott (2013) used English and Russian word associations in their lab study and found that more recall attempts increased recall ability. Finally, Rohrer and Taylor (2007) also noted benefits to long-term retention that come from spaced learning in the form of frequent quizzing. Their study used mathematical problems with college students, but again, this work was performed in a lab setting and not as a component of a regular course routine.

Recent years have seen an increase in classroom research on improving retention. Karpicke (2017) summarized 10 years of research on retention. As part of their summary, they reviewed studies that considered the effects of quizzing on retention in educational classrooms. Their research reports 14 studies conducted between 2009 and 2016 that addressed the benefits of quizzing in classrooms, all of which produced positive results. Of those studies, nine were conducted in college-level courses and five in middle schools but none in high schools. Only two of the studies they mentioned involved mathematical content, both of which were conducted in college courses (Hopkins et al., 2016; Lyle & Crawford, 2011). Yang et al. (2021) conducted a meta-analytic review of quizzing’s effect on classroom learning and looked at 222 studies that included 573 effects to more fully understand classroom moderators. They reviewed classroom research conducted in or since the year 2000. Their data is not organized in a manner that links course level to subject matter but most of the studies they reviewed were conducted in middle school and university or college courses and only 8% of the effects were obtained from high school studies while only 4.9% of the effects were obtained from studies involving mathematical content. They provide a very extensive review of 19 moderating variables and implementation considerations, but two potential moderating variables they did not report on were class hour and teacher experience.

Of the studies conducted with mathematical content in classroom settings, the study conducted by Hopkins et al. (2016) is especially notable. They evaluated massed versus spaced retrieval practice of mathematical concepts in a college introductory calculus course for engineers. They showed that spaced retrieval led to retention of concepts that persisted into the following semester. Although the calculus course did not use the traditional classroom format of lectures, their findings indicate a similar strategy used in a Precalculus course may be equally effective at improving students’ long-term retention of fundamental mathematical concepts.

To this end, the objective of this research was to explore the effectiveness of using spaced recall quizzes in a classroom setting to reduce the forgetting of fundamental mathematical concepts that usually occurs when students are not asked to revisit these concepts. Many studies on retention take place in a lab, yet we set out to investigate the use of weekly quizzes in an in-person classroom. Our classroom setting was specifically a high school level precalculus course composed of post high school students in a preparatory school environment. In addition, we sought to determine if the results persisted across multiple sections of the course taught at different times of the day by six instructors with varying teaching styles and years of teaching experience. The literature we reviewed did not contain studies that took place in a classroom with the range of teaching experience and number of instructors that we had; therefore, our results add to the body of knowledge in this area of research. These results show that the use of spaced recall quizzes can reduce forgetting regardless of the teaching experience of the educator. Using the study of Hopkins et al. (2016) as a model, we anticipated that the use of intentionally spaced recall, in the form of quizzing, would improve students’ long-term retention of fundamental mathematical concepts in a traditional classroom setting. For this study, long-term is defined as 6 months: the time between the completion of quarter one instruction and the administration of the post-test exam.

The paper is organized as follows. The method we used is put forth in Sect. 2 and includes a description of the student population and the sequence and timeline of key components. The results of the quiz’s effect on long-term retention are then introduced in Sect. 3. In Sect. 4 we discuss the results in light of moderating factors (e.g., teacher experience, class hour, etc.). Finally, in Sect. 5 we discuss practical implications related to using spaced recall quizzing in the classroom. Section 6 is left for the conclusion.

Method

Participants

We conducted our study at the United States Military Academy Preparatory School (USMAPS) located at West Point, New York during the 2020–2021 academic year. When students are not yet qualified for direct admission to the United States Military Academy (USMA) but they show great potential, they are given the opportunity to attend USMAPS. The students that are admitted to USMAPS are deficient in one or more of these three areas: academics, physical fitness, or leadership. The purpose of the school is to develop the students in those three areas to meet the rigorous admission standards of USMA. For most students, the prevalent deficiency is in academics. The students who attend USMAPS live in rooms on campus. On average, the 240 students that enter the 1 year program are demographically and geographically diverse (within the United States and its territories). In 2020–2021, 235 students attended USMAPS with approximately 25% prior service soldiers and most of the others having graduated high school the previous year. Approximately 48% were African American, 4% were Hispanic, 2% were Asian, and 1% identified as “Other Minorities.”

At USMAPS, there are three levels of mathematics courses: Calculus, Precalculus with an Introduction to Calculus (PIC), and a Precalculus course that has an emphasis on Algebra/Trigonometry (PCAT). When students arrive at USMAPS, they take a pretest and fill out a survey to identify their previous mathematical exposure and competency. The pretest, survey, and student Scholastic Assessment Test (SAT) and/or American College Testing (ACT) scores are used to place students in the Calculus, PIC, or PCAT course.

Our study began with \(N=157\) students enrolled in the PIC course. Six instructors taught this course. Five of the instructors taught three sections each. As our hypothesis was that the spaced recall quizzes would reduce forgetting, we wanted to rule out the potential that the reduction in forgetting was a result of the instructor. We designed our study to have the instructors’ sections randomly assigned as follows: one section assigned as treatment, one as control, and one section as mixed. For the sixth instructor who only taught two sections, one section was randomly assigned as treatment and the other as control. In the mixed sections, students were randomly assigned to either the treatment or control group. Enrollment numbers for each section were equally distributed (plus or minus one student). This placed \(N=76\) students in the control group and \(N=81\) students in the treatment group. The study received approval (20-115-1) from the Institutional Review Board (IRB) at USMA and was deemed exempt because it was conducted in an established educational setting and involved normal educational practices. Instructors were not informed which sections were treatment, control, or mixed or which students belonged to each group. Two of the instructors were authors and had access to which students and sections were treatment or control; however, they did not examine that information before the end of the experiment. This was to ensure that there was no unintentional bias on the part of the study leads. The potential for study lead bias was investigated during data analysis (see Sect. 4.4).
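The assignment design described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the procedure the study actually ran: the function names `assign_sections` and `assign_students`, the section and student identifiers, the fixed seeds, and the half-and-half split inside mixed sections are all assumptions introduced for illustration.

```python
import random

def assign_sections(instructor_sections, seed=0):
    """Randomly label each instructor's sections (hypothetical helper)."""
    rng = random.Random(seed)
    labels = {}
    for instructor, sections in instructor_sections.items():
        shuffled = list(sections)
        rng.shuffle(shuffled)
        # Three sections get one label each; an instructor with only two
        # sections gets treatment and control, with no mixed section.
        roles = ["treatment", "control", "mixed"][:len(shuffled)]
        for section, role in zip(shuffled, roles):
            labels[section] = role
    return labels

def assign_students(section_labels, rosters, seed=0):
    """Place each student in treatment or control (hypothetical helper)."""
    rng = random.Random(seed)
    groups = {}
    for section, students in rosters.items():
        role = section_labels[section]
        if role != "mixed":
            for student in students:
                groups[student] = role
        else:
            # Assumption: a mixed section is split roughly in half at random.
            shuffled = list(students)
            rng.shuffle(shuffled)
            half = len(shuffled) // 2
            for student in shuffled[:half]:
                groups[student] = "treatment"
            for student in shuffled[half:]:
                groups[student] = "control"
    return groups
```

With five instructors teaching three sections each and one teaching two, this yields six treatment, six control, and five mixed sections, 17 in total. Randomizing within each instructor is what lets the instructor be ruled out as an explanation for any difference between groups.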

Although we were able to randomly assign students, scheduling logistics prevented us from redistributing students to control for race, gender, standardized tests, and high school grade point average (GPA). Consequently, we had to maintain the assignment of students obtained by randomly assigning students to sections of treatment, control, or mixed, as previously explained. This was a departure from the approach employed by Hopkins et al. (2016), who balanced student assignment by also considering racial and gender composition, mean ACT score, and mean high school GPA. Nevertheless, the method we used for obtaining randomness is acceptable and has been used in other studies when additional measures were not available or could not be used to achieve balanced groups (Begolli et al., 2021; Jaeger et al., 2015; Liming & Cuevas, 2017; Shirvani, 2009; Sanchez et al., 2020).

During the academic year, some students who were originally placed in PIC demonstrated that their fundamental skills were not at the level initially expected based on ACT/SAT scores, previous course work, and pretest performance. In these cases, those students were moved to the PCAT course and thereby removed from the study. In addition, some students were separated from USMAPS during the academic year removing them from the study. There were also a few cases where students were removed due to incomplete data. Consequently, our initial study of \(N=157\) students reduced to \(N=128\) students. This left \(N=60\) students in the control group and \(N=68\) in the treatment group.

Procedure

This study was conducted within 17 sections of an in-person class that met daily. Classroom (action) research adds elements that are more challenging to control than if the research were done in a lab setting. These elements included student aptitude, instructor experience, and the hour of the day the class met. We address these in Sect. 4. Another challenge we faced when doing research in the classroom (instead of a lab) was how to handle giving feedback and how to control when and if students accessed the feedback later. We did not give feedback immediately after the quizzes, to prevent students from sharing answers between classes, as that would affect the exposure gap (Cepeda et al., 2008) and desired difficulty (Bjork & Bjork, 1992) we had designed into the treatment.

There were advantages to conducting the study in the classroom rather than in the lab. We were fortunate to belong to a mathematics department in which all instructors were interested in and willing to take part in this study, which allowed us to have a sample size of 128 students. We also had the benefit of conducting the study on the retention of mathematical concepts while using the insights on retention that were gained through studies done in a lab with Swahili-Swedish word pairs (Bertilsson et al., 2017) or loosely connected word pairs (Kornell et al., 2009).

Our experiment consisted of the progression outlined in Fig. 1. First, a pretest was administered to all students to establish a baseline comprehension of fundamental concepts. Then, the students were instructed in the fundamental concepts of mathematics in quarter one. Next, all students took weekly quizzes. The treatment group took a weekly quiz on the spaced fundamental concepts and the control students took a weekly quiz on the current topics being learned. Finally, all students were given a post-test to determine their retention of the fundamental concepts. Each of the components is discussed in detail in the following sections.

Pretest: establish a baseline comprehension of fundamental concepts

To assess long-term retention we considered 12 mathematical concepts identified by the United States Military Academy’s mathematics department as being fundamental for entering students. These topics are listed in Table 1 and are the fundamental concepts taught in quarter one of the PIC course. The expectation is that students will remember these concepts when they are assessed on the USMAPS post-test exam 6 months later.

The topics that we cycled in the treatment group come from a document published by the United States Military Academy entitled, “Required Mathematical Skills for Entering [Students].” From this document we chose 12 of the 40 skills based on the fact that those 12 skills are taught in the first 8 weeks of the academic year. This would allow us to examine the exposure gap of quizzing three of the topics every 28 days with a goal of reduced forgetting when these topics were tested again at the end of the year on a multiple choice post-test. If we chose topics taught after the first 8 weeks of school, we would run out of time in the school year to test this exposure gap that was designed based on the research of Cepeda et al. (2008). We tested three different topics in each retention quiz to give each of the twelve topics the same exposure.

When the students complete our program and are admitted to the United States Military Academy, they will take a multiple choice Fundamental Concepts Exam within the first week or so of classes. This Fundamental Concepts Exam is built from the 40 “Required Mathematical Skills for Entering [Students].” Historically, our student body scores extremely low on the Fundamental Concepts Exam despite the fact that our curriculum covers all 40 of the required skills. The frustration that we felt knowing our students continued to score poorly is what led us to research ways to improve the long-term retention of these skills. In addition, these fundamental skills are the building blocks for future course work at the United States Military Academy in the areas of advanced mathematics, physics, chemistry, and engineering. We were experiencing a dilemma similar to the one described in the study by Hopkins et al. (2016), where spaced versus massed practice was used in a course titled Introductory Calculus for Engineers.

The pretest that students took when they arrived at USMAPS in July was used as the initial measurement of knowledge for 10 of the 12 fundamental concepts. The pretest is a 50-problem multiple choice exam which the students have 80 min to complete. The post-test is the same exam given the following April. We mapped the 50 problems in the pretest against the 12 identified fundamental concepts and found that two of the topics were not directly assessed so, although those two topics were included in the weekly quizzes during the study, they were not analyzed as part of the study results.

Instruction in fundamental concepts

During quarter one, and prior to the start of the study, all students in the PIC classes experienced instruction in the same mathematical concepts including the 12 fundamental concepts. At the end of quarter one, all PIC students took a final exam that was an open response exam (no multiple choice). The final exam was “group-graded.” Each instructor was given a rubric for a specific problem on the final exam and they graded that problem for every student in the course. This is our practice for grading major exams to build in consistency and fairness in our grading procedure. Performance on this final exam was one metric used to establish the initial statistical equivalence between the treatment and control groups (see Sect. 4.1).

Weekly quizzes

Once a week during quarters two, three, and the first two weeks of quarter four, all PIC students took a retention quiz (RQ). The RQs were administered and graded using a web-based platform. The quizzes were administered during class at a time left to the instructor’s discretion. The students logged into the web-based platform and the instructor provided a password that allowed the students to begin the 10 minute timed quiz. The web-based platform displayed a timer for the student and no longer accepted submissions once the time had expired. The web-based platform automatically graded the open-response submissions and then released the students’ scores later that day (after all students had taken the RQ). It was coordinated such that all sections of PIC took the weekly RQ on the same day. For motivational purposes, the quizzes were worth points but only counted for 4% of students’ total grade in quarters two and three, with no effect on the final grade in quarter four. The students in the control group were given RQs that had three questions on the mathematical content currently being covered in the course (massed recall). The students in the treatment group were given RQs that had three questions that ONLY came from the 12 identified fundamental concepts that were taught in quarter one (spaced recall). Each quiz addressed three fundamental concepts and the concepts cycled every 28 days based on the exposure gap suggested in the study by Cepeda et al. (2008) to achieve the retention interval of 6 months. We also staggered the three concepts being quizzed each class period to prevent any loss of the desired difficulty that might come from students sharing what was on their RQ. Regardless of content, all quiz questions were formatted as open response. Scores and worked solutions were available to the student at the end of the academic day. Class time was not used to discuss quiz solutions, as our design was to find a classroom strategy that would not take too much time away from teaching new material, as Lang (2016) suggested. Each of the fundamental concepts was cycled four times over the course of the 16 RQs. Tables 2 and 3 provide an example of a treatment RQ and a control RQ.
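The cycling arithmetic of the treatment RQs (12 concepts, three per weekly quiz, 16 quizzes) can be checked with a short sketch. The function name `rq_schedule`, the placeholder concept labels `C1` to `C12`, and the `offset` parameter, which is one conceivable way to stagger concepts between class periods, are assumptions for illustration and not the study’s actual tooling.

```python
def rq_schedule(concepts, per_quiz=3, n_quizzes=16, offset=0):
    """Return the concepts quizzed each week (hypothetical helper).

    Concepts are split into fixed groups of `per_quiz`; the groups rotate
    weekly, so each concept returns after len(concepts) // per_quiz weeks.
    """
    n_groups = len(concepts) // per_quiz  # 12 // 3 = 4 groups
    groups = [concepts[i * per_quiz:(i + 1) * per_quiz] for i in range(n_groups)]
    # `offset` shifts the starting group, e.g. for a different class period.
    return [groups[(week + offset) % n_groups] for week in range(n_quizzes)]

# Placeholder labels; the real concept names are listed in Table 1.
concepts = [f"C{i}" for i in range(1, 13)]
schedule = rq_schedule(concepts)

# Weeks (0-indexed) in which concept C1 is quizzed.
weeks_c1 = [week for week, quiz in enumerate(schedule) if "C1" in quiz]
gap_days = 7 * (weeks_c1[1] - weeks_c1[0])
```

Under these assumptions each concept appears in four of the 16 quizzes and recurs every four weeks, which matches the 28-day exposure gap stated above.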

Post-test: establish retention of fundamental concepts

Six months after the quarter one final exam and after the 16 RQs were administered, all students took the post-test simultaneously in identical conditions. We conducted an analysis of each group’s post-test scores to determine if there was any significant difference in performance between the treatment and control group. Again, two of the 12 mathematical concepts that we cycled during the treatment’s RQs were not assessed on the post-test, so we did not measure those two concepts: radicals to rational exponents and distance/rate/time.

Measures (retention index)

To measure whether the spaced weekly RQs led to long-term retention, we compared the performance of all 128 students on 24 selected problems from the pre/post-test. These 24 problems assessed 10 of the fundamental concepts that cycled in the treatment group RQs. We determined the treatment to be a success or failure as shown in Fig. 2.

The pretest and post-test were identical multiple choice assessments that contained 50 questions. We analyzed only 24 of the 50 questions because those were the questions that assessed one of the 10 topics that we cycled in the treatment group. Because these questions were multiple choice, a problem was considered correct if the student selected the right choice out of the 4 options and incorrect otherwise. As our hypothesis was that the treatment of the spaced recall quizzes would help reduce forgetting, a correct answer on the post-test was considered a retention success and an incorrect answer a retention failure, no matter the student’s performance on that topic on the pretest that was given before quarter one instruction. After calculating the successes and failures for each of the 24 problems for each student, we then calculated the proportion of successes a student experienced on these 24 specific questions and named this the student’s retention index. Comparing the retention index of students in the control group to the retention index of students in the treatment group allowed us to measure the effect of spaced recall, in the form of quizzing, on long-term retention.
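As a minimal sketch of the retention index defined above, the following Python function scores one student. The name `retention_index`, the dictionary layout, and the example answers are hypothetical; only the definition (proportion of the selected post-test questions answered correctly, ignoring the pretest) comes from the text.

```python
def retention_index(post_answers, answer_key, selected_questions):
    """Proportion of selected post-test questions answered correctly.

    Pretest answers are deliberately not an input: a correct post-test
    answer counts as a retention success regardless of pretest performance.
    """
    successes = sum(
        1 for q in selected_questions
        if post_answers.get(q) == answer_key[q]  # unanswered counts as failure
    )
    return successes / len(selected_questions)

# Hypothetical data: 24 selected questions, each with correct option "A".
selected = list(range(1, 25))
key = {q: "A" for q in selected}
# This illustrative student answers the first 18 correctly and the rest wrong.
student = {q: ("A" if q <= 18 else "B") for q in selected}
index = retention_index(student, key, selected)
```

For this illustrative student the index is 18/24. The group-level comparison then uses the mean of these per-student values in each group.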

Results

It was discovered that the success in reducing forgetting was quite pronounced. To quantify these gains, we used several statistical techniques such as data visualization with density plots, t-tests, and analysis of variance (ANOVA). The results of these statistical techniques are reported in this section and can be found in Table 4. We used the 50 question post-test to establish the retention of the fundamental mathematics concepts. Figure 3 shows the performance (distribution of scores) of the treatment and control groups. The treatment group’s mean score \((72.4\%)\) is higher than the control group’s \((68.5\%)\), and the difference is statistically significant at the 0.05 level. We compared mean scores using a conservative hypothesis test that examined if the true population mean scores were the same. The p-value of 0.011 provides significant evidence that this hypothesis is implausible. The treatment group thus outperformed the control group by 3.9 percentage points in the mean score.

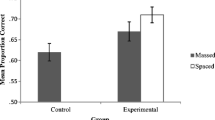

Next, we looked at the treatment and control groups’ performance on 24 specific problems within the 50-problem post-test that directly assessed 10 of the 12 fundamental concepts. We used the same conservative hypothesis test as we did in the analysis of the post-test. As shown in Fig. 4, the mean performance (retention index) of the treatment group \((81.6\%)\) is higher than that of the control group \((77.3\%)\). The p-value of 0.012 provides statistically significant evidence that the control and treatment group did not perform the same on those 24 specific problems. The mean score of the treatment group was \(4.2\%\) greater than the mean of the control group, indicating the treatment group was more successful in reducing forgetting of the fundamental concepts.
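The text does not state which t-test variant was used. As one plausible reading of a “conservative” two-sample comparison, the sketch below computes Welch’s unequal-variance t statistic and its approximate degrees of freedom using only the Python standard library. The function name `welch_t` and the sample values are hypothetical and are not the study’s data. Converting the statistic to a p-value requires the t distribution, which a statistics package provides (for example, `scipy.stats.ttest_ind` with `equal_var=False`).

```python
from math import sqrt
from statistics import mean, variance

def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    # Squared standard errors of each group's mean (sample variance / n).
    se2_a = variance(a) / len(a)
    se2_b = variance(b) / len(b)
    t = (mean(a) - mean(b)) / sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1)
    )
    return t, df

# Hypothetical scores for illustration only (not retention indices from the study).
treatment = [2, 4, 6, 8]
control = [1, 2, 3, 4]
t_stat, dof = welch_t(treatment, control)
```

Welch’s form is chosen here because it does not assume the two groups share a variance; if the authors used a pooled-variance test, the statistic and degrees of freedom would differ slightly.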

Discussion

In this section we will explore other factors that strengthened our results. We examined factors such as latent (prior) student aptitude, instructor experience level, class hour of the day, and study lead bias using common statistical techniques. A statistical summary of these factors is included in Table 4.

Student aptitude

Given that new mathematical knowledge builds on prior mathematical understanding, we wanted to verify that our random assignment resulted in two groups with relatively equal mathematical foundations prior to treatment. Without the opportunity to balance our groups for mean SAT/ACT and mean high school grade point average, two measures that might indicate the strength of a student’s mathematical foundation, we used student performance on a pretest to demonstrate that the two groups could be considered equally balanced in their mathematical preparedness. The pretest was administered in late July, simultaneously to all students and in identical conditions. Figure 5 shows the performance (distribution of scores) of the treatment and control groups. A t-test of the hypothesis that the two mean scores were the same produced a p-value of 0.841 (see Table 4). Consequently, although the treatment group’s mean score \((47.0\%)\) is slightly below the control group’s \((48.8\%)\), this difference is not statistically significant. The random assignment of students to treatment or control created two groups with relatively equal mathematical foundations.

We also examined student performance on the quarter one final exam which students took after receiving instruction in the 12 fundamental concepts and prior to the start of the study. We wanted to ensure that quarter one instruction did not favor one group over another and therefore create an imbalance between the two groups before the treatment began. Figure 6 shows the quarter one final exam performance (distribution of scores) between the treatment and control groups. Note that the mean scores for each group are almost identical with the treatment group mean score \((79.5\%)\) only slightly above the control group \((79.2\%)\). As before, we used a t-test and assumed that the means were the same. The p-value of 0.823 (see Table 4) indicates that the slight difference in means is not statistically significant. Taken together, results from the pretest and quarter one final exam establish that the initial assignment of students to the treatment and control groups produced two groups that were equally balanced in their mathematical preparedness before the treatment began.

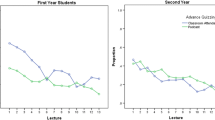

Instructor experience level

The faculty members who taught the Precalculus class have between 5 and 20 years of teaching experience. Is it possible that the more experienced instructors are more skilled at their craft and that, as a result, their students scored higher on the retention quizzes regardless of whether they were in the treatment or control group? We conducted a two-way ANOVA and found almost no evidence to suggest that student performance was affected by the instructor’s level of teaching experience (see Table 4). This result is important because it suggests that spaced recall in the form of recall quizzes can be effective for instructors regardless of their years of teaching experience.

Class hour

The hour at which students attend class is a possible concern, since it is commonly suggested that students would perform better if they did not have a mathematics class early in the morning, right after lunch, or at the end of the day. Eliasson et al. (2010) studied how earlier wake times affect student performance. Trockel et al. (2000) found that wake-up times were the biggest contributor to differences in grade point averages. There is even a recent claim that early morning classes may impede performance (Yeo et al., 2021). To explore this influence, we conducted a two-way ANOVA and found little evidence to suggest that, in this experiment, student performance varied by class hour (see Table 4). This could be because, although the students have class at different times of the day, the whole student body is required to wake up at the same time to attend a morning accountability formation. Importantly, the claim that the treatment was effective in reducing forgetting holds regardless of the time at which students attend class.

Study lead bias

Two of the six instructors for this Precalculus course were the designers of this experiment and spent significant time reading the research on the effectiveness of spaced learning techniques and mitigating factors. Both instructors taught a control, treatment, and mixed section. To determine if the instructors subconsciously changed their techniques in response to their understanding of the topic being studied, we used a t-test that assumed student performance between study leads and non-study leads was the same. A p-value of 0.899 indicated that there was almost no evidence that would suggest student performance varied as a result of being a student in a study lead’s class (see Table 4).

Classroom implications

The results of this study clearly indicate the effectiveness of spaced recall quizzing at reducing student forgetting. This is similar to the result reported by Hopkins et al. (2016); however, we obtained our results without the presence of immediate feedback. When used as a classroom strategy, spaced recall quizzing takes only a minimal amount of time away from the current curriculum. Lang (2016) describes this as a “small teaching” tool that can be added to your current syllabus without a major overhaul of your curriculum. Such a small activity had a large positive influence on our students’ ability to recall fundamental mathematical concepts at the end of the course. There are some implications worth considering when spaced recall quizzing is used as a classroom strategy: the impact on instructional time, the practice of providing feedback on student solution attempts, and the effect the quizzes may have on student affect. We discuss each of these below.

A possible argument against using spaced recall quizzes is that they take time away from the instruction of current concepts and therefore reduce the level at which students learn new material. We anticipated that we might observe this result because the content of the retention quizzes (RQs) differed for control versus treatment. The control group RQs addressed current material and the treatment group RQs did not. We anticipated that, because of the control group’s extra exposure and time with the current material, they would perform better than the treatment group on the quarter three final exam. To check for this possibility, we collected data on the quarter three final exam for both the treatment and control groups. At the end of quarter three, all PIC students took a final exam that was an open response exam (no multiple choice). The final exam was “group-graded.” Each instructor was given a rubric for a specific problem on the final exam and graded that problem for every student in the course. This is our practice for grading major exams, intended to build consistency and fairness into our grading procedure. Figure 7 shows the performance (distribution of scores) of the treatment and control groups on that exam. Although the control group’s mean score (\(76.4\%\)) is slightly above the treatment group’s (\(74.9\%\)), a t-test that examined whether the two groups performed the same produced a p-value of 0.400. This indicates no statistically significant difference in mean quarter three final exam scores between the two groups, suggesting that both groups understood the new concepts about equally well (see Table 4). So, not only did revisiting fundamental concepts on the spaced recall quizzes reduce forgetting of those concepts for the treatment group, but the lost instructional time also did not negatively affect their ability to learn new concepts. This is where our results differ from those of the Hopkins et al. (2016) study, in which spaced versus massed practice was compared in a course titled Introductory Calculus for Engineers. Although both studies found that the spaced (treatment) students outperformed the massed (control) students on the spaced material, we found that the control and treatment groups performed the same on the current material, whereas Hopkins et al. (2016) found that the spaced learners outperformed the massed learners on the current material.

As previously mentioned, several studies addressed the role that feedback plays in retrieval attempts, particularly when retrieval attempts are unsuccessful (Benjamin & Tullis, 2010; Karpicke & Roediger III, 2007; Kornell et al., 2009). These studies claim that the phenomenon by which retrieval attempts can enhance learning is strengthened when students receive feedback on their solution attempts. The design of our study prevented us from discussing the quiz solutions during class time because of the potential for students to discuss the solutions with other students who had not yet taken their retention quiz that day. Any discussion between students would interfere with the designed exposure gap informed by the research of Cepeda et al. (2008) and the “desired difficulty” as mentioned by Roediger III and Karpicke (2006b), a phrase coined by Bjork and Bjork (1992). This precluded students from receiving immediate feedback on their solution attempts. Students were able to log into the web-based platform later in the day and see their scores as well as solutions to the problems, but we could not ensure that students did so. This is worth noting because even without a way of providing immediate feedback or ensuring that students engaged with that feedback later, the RQs produced statistically significant improvements in students’ long-term retention (see Sect. 3). Consequently, even in classroom situations where providing immediate feedback is not possible, quizzing alone is still effective in improving long-term retention. This is consistent with the findings of Roediger III and Karpicke (2006b) and Karpicke and Roediger III (2007) that the testing effect occurs even when feedback is not given. RQs have been adopted as part of our curriculum because of their success in reducing forgetting. As we implement the RQs as a practice rather than a research study, we plan to provide immediate feedback and look forward to analyzing retention with immediate feedback in future studies. One reviewer noted that the success of the retention quizzes could depend on whether the student logged into the web-based platform to review the feedback. Whether feedback played a role in increasing retention is an item for further investigation.

In addition to the quantitative measures used to determine the efficacy of the RQs in reducing forgetting, we used the standard end-of-quarter surveys to ask students to comment on the utility of RQs. Although our research did not formally address student affect, what we observed is worth noting. The feelings of many students in the study can be summed up by one student who said,

“I have a ton of [n]egative feelings towards the retention quizzes. These quizzes are the dumbest thing I have ever taken in school. I think the idea of going over past knowledge that will not be on the [current exam] is not worth the wasted 10 minutes at the beginning of class. I think that the retention quizzes are a waste of time and are an easy F in the grade book. This might just be because I am forgetting the minor past stuff and trying to focus more on what we are learning now but I believe that the retention quiz is not necessary.”

Other students expressed similar sentiments, noting the difficulty of the RQs and expressing concern about their grades.

“I felt negatively about retention quizzes because I often did poorly on a subject that I had previously done very well on.”

“It was a stressful thing to jump into in class and generally brought everyone’s grade down.”

“I feel like the retention quizzes only takes away from our grade. Our minds are focused on what we are learning currently then we have to revert back to old stuff.”

These sentiments did not surprise us. Lang (2016) explained that recalling information that a student thought they had already mastered can be frustrating. He suggested informing students about the research-supported benefits of quizzing and retrieval practice. Because these quizzes are a recall attempt and not a formative assessment, the instructor can also reduce potential negative effects on students’ grades by making them low stakes, giving them less weight in the overall grade.

Another option might be to not grade the quizzes at all, as long as there is some other motivation for students to put forth effort. A key factor in the success of the RQs is effortful recall (Brown et al., 2014), so motivating students to expend effort is an important consideration. It is also possible that helping students understand the benefits that come from even unsuccessful retrieval attempts could help resolve some of their frustration and combat any potentially negative learning effects due to poor affect. Future research could investigate the influence that recall quizzes have on student affect and consider effective ways of addressing any potentially negative aspects. Based on what we observed informally, any attempt to use spaced recall in the form of quizzing to improve long-term retention in a classroom setting should include a plan to address students’ frustration with the experience. If the RQs are to be graded, student concerns regarding the impact of the quizzes on their grades should also be addressed. One reviewer suggested that we find ways to make the effort that students put into the RQs a rewarding experience that allows them to see the benefit of effortful retrieval, rather than a punishment in their grade. This is a great suggestion that we will find a way to implement, as our school plans to continue the use of RQs for all students because of the significant reduction in the forgetting of fundamental mathematical concepts.

Conclusion

This study demonstrated the effectiveness of spaced recall quizzing in reducing forgetting, thereby improving students' long-term retention. Specifically, identifying essential knowledge such as fundamental concepts and then giving students weekly spaced quizzes in which the identified concepts cycle monthly positively affects students’ ability to recall those concepts at the end of the academic year. Importantly, implementing this small teaching strategy does not reduce the level at which students learn new material, despite the loss of instructional time due to quizzing. Additionally, in situations where it is not possible to provide students with feedback on retrieval attempts, quizzing alone is still effective in improving long-term retention. The effect of quizzing on student affect could not be formally addressed, but it was evident in end-of-course surveys, which exposed student frustration with the retention quizzes. The potential for negative responses from students is not something to be ignored. Therefore, anticipating and addressing negative student feelings is an important consideration if spaced recall quizzing is to be implemented in the classroom.

Data availability

The datasets and R code generated during this study are available from the corresponding author on reasonable request.

References

Arnold, K. M., & McDermott, K. B. (2013). Test-potentiated learning: Distinguishing between direct and indirect effects of tests. Journal of Experimental Psychology: Learning, Memory, and Cognition, 39(3), 940.

Begolli, K. N., Dai, T., McGinn, K. M., & Booth, J. L. (2021). Could probability be out of proportion? Self-explanation and example-based practice help students with lower proportional reasoning skills learn probability. Instructional Science, 49, 441–473.

Benjamin, A. S., & Tullis, J. (2010). What makes distributed practice effective? Cognitive Psychology, 61(3), 228–247.

Bertilsson, F., Wiklund-Hörnqvist, C., Stenlund, T., & Jonsson, B. (2017). The testing effect and its relation to working memory capacity and personality characteristics. Journal of Cognitive Education and Psychology, 16(3), 241–259.

Bjork, R. A., & Bjork, E. L. (1992). A new theory of disuse and an old theory of stimulus fluctuation. From Learning Processes to Cognitive Processes: Essays in Honor of William K. Estes, 2, 35–67.

Brown, P. C., Roediger, H. L., III., & McDaniel, M. A. (2014). Make it stick: The science of successful learning.

Cepeda, N. J., Pashler, H., Vul, E., Wixted, J. T., & Rohrer, D. (2006). Distributed practice in verbal recall tasks: A review and quantitative synthesis. Psychological Bulletin, 132(3), 354.

Cepeda, N. J., Vul, E., Rohrer, D., Wixted, J. T., & Pashler, H. (2008). Spacing effects in learning: A temporal ridgeline of optimal retention. Psychological Science, 19(11), 1095–1102.

Ebbinghaus, H. (1964). Memory: A contribution to experimental psychology (Henry A. Ruger & Clara E. Bussenius, Trans.). New York, NY: Teachers College (Original work published as Das Gedächtnis, 1885).

Eliasson, A. H., Lettieri, C. J., & Eliasson, A. H. (2010). Early to bed, early to rise! Sleep habits and academic performance in college students. Sleep and Breathing, 14(1), 71–75.

Hopkins, R. F., Lyle, K. B., Hieb, J. L., & Ralston, P. A. (2016). Spaced retrieval practice increases college students’ short-and long-term retention of mathematics knowledge. Educational Psychology Review, 28, 853–873.

Jaeger, A., Eisenkraemer, R. E., & Stein, L. M. (2015). Test-enhanced learning in third-grade children. Educational Psychology, 35(4), 513–521.

Kamuche, F. U., & Ledman, R. E. (2005). Relationship of time and learning retention. Journal of College Teaching & Learning (TLC), 2(8), 10.

Karpicke, J. D. (2017). Retrieval-based learning: A decade of progress. Grantee Submission.

Karpicke, J. D., & Roediger, H. L., III. (2007). Expanding retrieval practice promotes short-term retention, but equally spaced retrieval enhances long-term retention. Journal of Experimental Psychology: Learning, Memory, and Cognition, 33(4), 704.

Kornell, N., Hays, M. J., & Bjork, R. A. (2009). Unsuccessful retrieval attempts enhance subsequent learning. Journal of Experimental Psychology: Learning, Memory, and Cognition, 35(4), 989.

Lang, J. M. (2016). Small teaching: Everyday lessons from the science of learning. Wiley.

Liming, M. C., & Cuevas, J. (2017). An examination of the testing and spacing effects in a middle grades social studies classroom. Georgia Educational Researcher, 14(1), 103–136.

Lyle, K. B., & Crawford, N. A. (2011). Retrieving essential material at the end of lectures improves performance on statistics exams. Teaching of Psychology, 38(2), 94–97.

McDaniel, M. A., Anderson, J. L., Derbish, M. H., & Morrisette, N. (2007). Testing the testing effect in the classroom. European Journal of Cognitive Psychology, 19(4–5), 494–513.

Pearson, W., Jr., & Miller, J. D. (2012). Pathways to an engineering career. Peabody Journal of Education, 87(1), 46–61.

Roediger, H. L., III., Agarwal, P. K., McDaniel, M. A., & McDermott, K. B. (2011). Test-enhanced learning in the classroom: Long-term improvements from quizzing. Journal of Experimental Psychology: Applied, 17(4), 382.

Roediger, H. L., III., & Karpicke, J. D. (2006a). The power of testing memory: Basic research and implications for educational practice. Perspectives on Psychological Science, 1(3), 181–210.

Roediger, H. L., III., & Karpicke, J. D. (2006b). Test-enhanced learning: Taking memory tests improves long-term retention. Psychological Science, 17(3), 249–255.

Rohrer, D., & Taylor, K. (2007). The shuffling of mathematics problems improves learning. Instructional Science, 35(6), 481–498.

Rowland, C. A. (2014). The effect of testing versus restudy on retention: a meta-analytic review of the testing effect. Psychological Bulletin, 140(6), 1432.

Sanchez, D. R., Langer, M., & Kaur, R. (2020). Gamification in the classroom: Examining the impact of gamified quizzes on student learning. Computers & Education, 144, 103666.

Shirvani, H. (2009). Examining an assessment strategy on high school mathematics achievement: Daily quizzes vs. weekly tests (pp. 34–45). American Secondary Education.

Taylor, A. T., Olofson, E. L., & Novak, W. R. (2017). Enhancing student retention of prerequisite knowledge through pre-class activities and in-class reinforcement. Biochemistry and Molecular Biology Education, 45(2), 97–104.

Trockel, M. T., Barnes, M. D., & Egget, D. L. (2000). Health-related variables and academic performance among first-year college students: Implications for sleep and other behaviors. Journal of American College Health, 49(3), 125–131.

Yang, C., Luo, L., Vadillo, M. A., Yu, R., & Shanks, D. R. (2021). Testing (quizzing) boosts classroom learning: A systematic and meta-analytic review. Psychological Bulletin, 147(4), 399.

Yeo, S. C., Lai, C. K., Tan, J., Lim, S., Chandramoghan, Y., & Gooley, J. J. (2021). Large-scale digital traces of university students show that morning classes are bad for attendance, sleep, and academic performance. BioRxiv. https://doi.org/10.1101/2021.05.14.444124

Acknowledgements

We would like to thank the USMAPS Math Department head, Dr. Alex Heidenberg, for adopting the retention quizzes into the Precalculus course. We also appreciate the support and participation of the instructors: Elizabeth Giebler, Justyna Marciniak, and Fran Teague. Because of their involvement along with the rich student feedback, the data was more robust than anticipated.

Funding

No funding was received for conducting this study.

Author information

Contributions

Lindquist, D and Sparrow, B jointly conceived the study, trained instructor participants, and collected and interpreted data findings. Lindquist, J conducted all statistical explorations.

Ethics declarations

Competing interests

The authors have no financial or proprietary interests in any material discussed in this article.

Ethical approval

This research was submitted to the West Point Institutional Review Board and the protocol was deemed to meet the requirements for exempt status under 32 CFR 219.104(d)(1). Since data involved secondary analysis of routinely collected data, consent was not required.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A

Statistical tests

While descriptive statistics may clearly show a difference between the control and treatment groups, the reader may be interested in whether the results are generalizable to a broader population, say high school students or perhaps a similar stratum of college students. For this, we rely upon inferential statistics to determine the likelihood of similar results given a different sampling of students. This section briefly outlines the tests used to assess this generalizability.

1. Data Visualization: While summary statistics (maximum, minimum, mean, variance, etc.) are useful point estimates of student performance, they may mask key features. A common tool to visualize these features is the density plot. This tool estimates and plots the underlying probability density function, resulting in a “smoothed” histogram that is not sensitive to bin size selection. This analysis is of the type shown in Fig. 4.

2. T-Test: When comparing two groups (e.g., control and treatment), one is often interested in whether an observed performance difference (\(\mu _1-\mu _2\)) is significant. The t-test examines the null hypothesis

$$H_0: \mu _1 = \mu _2$$

that the mean performances are the same, and returns a p-value: the probability of observing a difference at least as large as the one observed if the null hypothesis were true. A p-value of 0.05 indicates that, were the two means truly equal, a difference this large would arise by simple chance in just 5 out of every 100 experiments. By convention, a p-value below 0.05 is treated as an outcome unlikely enough that the null hypothesis should be rejected. In most contexts, a p-value greater than 0.05 suggests insufficient evidence to reject the null hypothesis. For this analysis, all t-tests were computed using Welch’s t-test, which requires approximately normal responses (evident from Figs. 3, 4, and others) and relaxes the assumption of equal variances between the two groups that Student’s t-test requires.

3. Analysis of Variance (ANOVA): When comparing more than two groups, one is often interested in whether an observed performance difference (e.g., among more than two instructors) is significant. ANOVA tests the null hypothesis

$$H_0: \mu _1 = \mu _2 = \ldots = \mu _n,$$

that the mean performances are the same. Here, \(\mu _n\) is the mean performance of the \(n^{th}\) group. If the test returns a statistically significant result, there is evidence to reject the null hypothesis (\(H_0\)), implying that at least one group is different from the others. One-way ANOVA tests for differences in one independent variable (say control/treatment). Two-way ANOVA tests for differences in two independent variables (say control/treatment and hour of the day taught). When reporting the degrees of freedom for ANOVA, we adopt the notation (a,b), where a represents the degrees of freedom for the “between-group” variance and b represents the degrees of freedom for the “within-group” variance.
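As an illustration of the (a,b) notation, the following minimal Python sketch computes the F statistic and both degrees of freedom for a one-way ANOVA. It is a sketch under stated assumptions rather than the R code used in this study: the function name `one_way_anova` is hypothetical, the scores are made-up values grouped by an imagined class hour, and the two-way analyses reported in the paper are not reproduced here.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F statistic, between-group df, within-group df).

    Hypothetical helper for illustration. `groups` is a list of lists of
    scores, one inner list per group; at least some within-group
    variation is assumed so that the F ratio is defined.
    """
    all_scores = [score for group in groups for score in group]
    grand_mean = mean(all_scores)

    ss_between = 0.0  # variation of group means around the grand mean
    ss_within = 0.0   # variation of scores around their own group mean
    for group in groups:
        group_mean = mean(group)
        ss_between += len(group) * (group_mean - grand_mean) ** 2
        ss_within += sum((score - group_mean) ** 2 for score in group)

    df_between = len(groups) - 1               # "a" in the (a,b) notation
    df_within = len(all_scores) - len(groups)  # "b" in the (a,b) notation
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Made-up percentage scores for three class hours, NOT data from the study
morning = [72.0, 80.0, 77.0, 69.0]
midday = [75.0, 78.0, 71.0, 82.0]
afternoon = [70.0, 79.0, 74.0, 76.0]

f_stat, a, b = one_way_anova([morning, midday, afternoon])
print(f"F({a},{b}) = {f_stat:.3f}")
```

With three groups of four scores each, the degrees of freedom are (2,9): two for the between-group variance and nine for the within-group variance. An F statistic close to or below 1 indicates that the group means differ no more than would be expected from the variation within groups.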

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lindquist, D.S., Sparrow, B.E. & Lindquist, J.M. Spaced recall reduces forgetting of fundamental mathematical concepts in a post high school precalculus course. Instr Sci (2024). https://doi.org/10.1007/s11251-024-09680-w

DOI: https://doi.org/10.1007/s11251-024-09680-w