Abstract

Paleotsunami deposits provide a compelling record of these events, including valuable insights into their recurrence and associated magnitudes. However, precisely determining the sources of these sedimentary evidence remains challenging due to the complex interplay between hydrodynamic and geological phenomena and the intricacy of the processes responsible for forming and preserving tsunami deposits. Here, we introduce a novel approach that employs Bayesian inference methods to divide the complex tsunami process into segments and independently handle uncertainties, thereby enabling more precise and comprehensive modelling of the sources. We provide a list of potential earthquake scenarios with different likelihoods instead of a single best fit. Based on this method, we calculated that the source of the 869 Jogan earthquake had a magnitude ranging from Moment Magnitude 8.84 to 9.1 (within one standard deviation) with different slip distributions along the Japan Trench. Our results reaffirm that the Jogan event had a similar order of magnitude to the 2011 Tohoku-oki tsunami and enhanced the applicability of paleotsunami deposits to hazard assessment.

Similar content being viewed by others

Introduction

Paleotsunami deposits, first documented 40 years ago1,2,3, have attracted increasing interest because they are considered to be reliable indicators of prehistoric events. Understanding paleotsunami deposits can greatly enhance tsunami hazard assessment by providing information about the hazard presence on a specific coast, tsunami magnitudes, and recurrence intervals that inform numerical, deterministic, and probabilistic tsunami hazard models, from which tsunamigenic earthquake magnitudes can be derived4,5,6,7. Consequently, its understanding represents valuable information for disaster risk assessment, especially in light of the devastating repercussions of the 2004 Indian Ocean tsunami and 2011 Tohoku-oki tsunami in Japan that were not expected in such large magnitudes8,9,10. This understanding allows for data-based risk management, reduction strategies, and preparedness efforts. However, attributing these deposits to specific sources remains challenging because of the many factors that affect the tsunami evolution and resulting tsunami deposit. Such factors include the coastal configuration during the paleotsunami; volume of sediment available, transported, and effectively deposited; number of places with sedimentary evidence; extent of deposit erosion before preservation; and post-burial processes such as compaction. These components add layers of complexity, making pinpointing the origins of these paleotsunami deposits a demanding task.

Identifying tsunami sources through historical and prehistorical deposits is primarily based on numerical modelling techniques that integrate sedimentary data as inputs, boundary conditions, or as means of verification of the tsunami simulation results. Adopted methodologies include trial-and-error forward modelling10,11,12,13, and neural network-based inverse modelling14,15. Recently, deep neural network (DNN) inverse modelling was utilised in the Odaka region of Japan to estimate tsunami parameters such as the maximum inundation distance, flow velocity, and sediment concentration16. However, physical deposits are often directly compared with modelling outputs in these methods. Considering the assumptions required for modelling, this approach may only partially reflect reality. For example, such comparisons often imply that deposit thickness and extent are directly proportional to modelling outcomes, potentially underestimating the tsunami magnitude because of the possibility of deposit erosion. Furthermore, addressing uncertainties related to coastal hydrodynamic processes, such as bed erosion, is crucial.

Is it feasible to pinpoint the exact source of a specific paleotsunami deposit? This inquiry naturally branches into two subquestions: (1) Given the high degree of uncertainty, is seeking a singular earthquake source realistic? and (2) If pursuing a single source is viable, can we adequately incorporate all uncertainties intertwined with several hydrodynamic and geological processes? In response to the first query, our proposition veers from striving to locate a source precisely, which is currently beyond our capabilities. Instead, a list of potential sources can be created, which can also be used for hazard assessment. Regarding the second question, we propose breaking down the complex paleotsunami deposit formation process into subprocesses according to the phenomena governing each stage, that is, earthquake generation, tsunami inundation, and sediment preservation17,18. We show that using Bayesian inference methods to decompose the entire process and individually manage these uncertainties forges a path toward more accurate and comprehensive paleotsunami source modelling. A similar approach was previously used in other studies to track the sources of particles such as beached plastics19, and for the probabilistic estimation of tsunami amplitude from far-field events20. The outcome is a ranked list of potential earthquake scenarios with varying locations and magnitudes. The likelihood of each scenario being the cause of the paleotsunami deposit formation was quantitatively evaluated.

The Bayesian inference method employed in our study represents a significant advancement in paleotsunami source tracing. It can be applied to a broad spectrum of Earth and disaster science research. This method excels at analysing complex phenomena, from identifying the causative events behind coseismic deposits to tracing the sources of paleotempestite formations and volcanic deposit assessments. Its ability to dissect intricate processes into manageable segments and standardise outcomes enables the comprehensive mathematical evaluation of phenomena that are otherwise governed by diverse physical laws.

Source of the 869 C.E. Jogan tsunami deposit

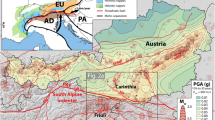

The 869 C.E. Jogan earthquake, whose deposits have been recorded from the Sendai Plain in the Miyagi Prefecture to Odaka in the Fukushima Prefecture, has gained recognition as the predecessor of the 2011 Tohoku-oki earthquake and tsunami10,21,22,23,24. It is one of the best-preserved, recorded, and most extensively studied paleotsunami deposits. The significance of this event, coupled with its well-documented deposits, makes it an excellent case study for assessing our approach to decoding the sources of paleotsunami deposits. In addition, the carefully surveyed deposit in the Sendai Plain allowed us to evaluate the feasibility of deducting the paleotsunami source by only including a single survey area. Hence, for our study, we selected the same geographical area as Sugawara et al.10, that is, the Sendai Plain (Fig. 1).

a General framework of the Bayesian hierarchical inference process. The dots along the Japan Trench represent the rupture area, which is linked to the earthquake-location probability through the coupling coefficient. The flowchart originating from the Sendai area illustrates the methodological progression from utilising modelled cumulative sediment deposit (CSD) outputs to deriving the potential sources of the paleotsunami. The coupling model distribution by [30]—GPS + Teleseismic model is included in the figure. b Logic tree mapping the sources of uncertainty within our approach. The map was created using ArcGIS Pro (2023) under a license held by the International Research Institute of Disaster Science (IRIDeS), with data from Esri, TomTom, Garmin, FAO, NOAA, USGS, and CGIAR.

The decision to use a single deposit was influenced by the practical consideration that in many regions beyond Japan, such as around the Indian Ocean25 and Chile26, paleotsunami evidence is often limited to single sites or its correlation among several places is problematic27. This makes our approach widely applicable in a global context.

To investigate potential source candidates for the Jogan paleotsunami deposit, we divided the paleotsunami deposit formation process into three hierarchical levels: earthquake occurrence model, tsunami model and deposit formation, and post-depositional effects (i.e. erosion and compaction; Fig. 1). To determine the sources of uncertainty, we employed a logic tree, a method commonly used for probabilistic tsunami hazard assessment (PTHA)28. In the first level, the informative prior, we identify primary components, such as earthquake location (EQl) and earthquake magnitude (EQm), using a stochastic process. The main factors of the second level are the initial sediment availability and grain size. Finally, we determine the post-depositional effects of erosion and compaction in the third level. Accordingly, we harnessed Bayesian hierarchical modelling29 to amalgamate all variables and their uncertainties and probabilities.

Bayesian hierarchical models, also known as multilevel models, involve parameters that are modelled as random variables forming a hierarchy. The hierarchical model allows us to express the uncertainty at multiple levels, from the individual event parameters to the broader prior distributions informed by historical evidence.

Within the framework of the Bayesian hierarchical inference, the joint posterior probability is proportional to the product of the likelihood and priors. Translating this into the context of our study, the posterior probability gives the probability that a specific paleotsunami deposit was generated by a combination of a specific earthquake magnitude and slip distribution, a specific tsunami evolution, and a specific post-depositional process. This probability is proportional to the product of the likelihood of the paleotsunami data given the tsunami’s model variables and prior probability of the parameters in the model. This can be represented as:

where p(paleoTsu∣postD, Tsu, EQ) is the likelihood, representing the probability of the observed paleotsunami deposit data given the model parameters, p(postD∣Tsu, EQ) is the prior distribution of post-depositional effects, p(Tsu∣EQ) is the prior distribution of the tsunami model variables, and p(EQ) is the informative prior distribution of earthquake parameters such as location, magnitude, and fault slip; and, the quantities involved are defined as, paleoTsu represents the observed paleotsunami deposit data, structured as an array containing deposit thickness measurements from various borehole locations, postD encompasses post-depositional effects, which can be modelled as probabilistic distributions accounting for factors like erosion rates and sediment compaction over time, Tsu refers to the tsunami model parameters, including variables directly associated with sediment transport modelling that can be assessed and compared in the framework: deposit thickness, grain size, initial sediment thickness (sediment availability), derived from numerical simulations, and EQ includes earthquake parameters such as location, magnitude, and fault slip, represented through stochastic sampling.

Results

Earthquake occurrence

We selected 30 of 1347 samples for the stochastic modelling process for earthquake scenario generation (Fig. 2). This selection process is centred on determining the proportion of a specific fault segment’s coupling relative to the overarching coupling values of the entire dataset. Note that these coupling values were normalised and ranged from 0.01 to 1. When specific segment couplings were extracted, they were further normalised to understand their relative significance within their subsets. This resulted in mean coefficient values ranging from 5.00E−5 to 1.88E−4. The 95% confidence intervals for the mean coefficients were 5.00E−5 to 8.00E−6 and 1.88E−4 to 8.00E−6. An evident location-dependent trend was observed, in which scenarios closer to the central or northern regions of the earthquake-generation area exhibited higher mean values. This spatial variance in the coefficient values aligns with the pre-2011 event coupling model introduced by Loveless et al., specifically the GPS + Teleseismic model30 (Fig. 1). Consequently, the first level constitutes an informative prior for the Bayesian framework

The probability distribution of earthquake magnitudes was based on the Gutenberg–Richter law, which states that the frequency of earthquakes decreases exponentially with increasing magnitude, adopting a b-value of 0.6 specific to the Tohoku region31. Before normalisation, these probabilities varied on a logarithmic scale from 0.001665 (with a confidence interval of 0.001461 to 0.001868) for a Moment Magnitude (Mw) 7.73 earthquake scenario to 0.000171 (with a confidence interval of 0.000150 to 0.000191) for a Mw 9.1 scenario. This pattern indicates the lower likelihood of higher-magnitude earthquakes, which is a typical feature of seismicity encapsulated in the Gutenberg–Richter law.

The sensitivity analysis revealed a significant impact of different b-values on the probability distribution. For magnitudes lower than approximately Mw 8.25, the probability increased with decreasing magnitude and higher b-values. Conversely, for magnitudes of Mw 8.25 and above, the probability decreased with higher b-values and increasing magnitude. This second case aligns with our modelling, as the 30 earthquake samples used as paleotsunami sources are above this magnitude threshold. These findings emphasise the model’s sensitivity to the choice of the b-value, underscoring the importance of accurately determining this parameter for reliable analyses Fig. 3. This uncertainty was included in the final computation of the earthquake occurrence probability).

The left panel illustrates the probability distributions for earthquake magnitudes ranging from 7.8 to 9.2, with b-values varying from 0.1 to 1.0. The right panel shows the mean probability and confidence intervals for the b-value distribution centred around 0.6 with a standard deviation of 0.1. The inset histogram represents the distribution of b-values used in the sensitivity analysis.

Tsunami model and deposit formation

The tsunami modelling outputs exhibit differences in the sediment deposition distribution inside the polygon, as depicted in Fig. 4b. In general, the larger the magnitude is, the greater the deposition. However, grain-size sensitivity analysis (SA) revealed a quasilinear correlation between the sediment grain size and cumulative sediment deposition (CSD). We selected a grain size range of 187 to 348 μm, which agrees with previous flume experiments and numerical model studies and yields a mean range of 267 μm reported in previous studies12,32. We noted a change of 8% in the CSD within the polygon across the entire range of grain sizes used in our sensitivity analysis. This variation reflects how different grain sizes influenced the CSD obtained in each case. This relationship suggests that smaller sediment particles yield a greater mean deposit thickness and overall volume, as shown in Fig. 5a.

The left map displays the reconstructed topography and bathymetry based on 1961 aerial photographs. It outlines the polygon used for goodness-of-fit computations and pinpoints the drilling locations reported in Sugawara et al.10. The right image illustrates the cumulative erosion/sedimentation modeling output based on the Mw 9.10 earthquake scenario (sample 363).

a Sensitivity analysis (SA) using grain size as a parameter for the study polygon. The mean tsunami deposit thickness and grain size are plotted on the y- and x-axes, respectively. b Normalised mean thickness for various grain sizes, including envelope distribution. c Grain size distributions of the 869 Jogan deposit, based on Sugawara et al.10. d Calibrated envelope distribution utilising actual grain sizes from the Jogan deposit. e SA for initial sediment thickness, with the y-axis indicating the mean tsunami deposit thickness and the x-axis showing the initial sediment thickness available for erosion in the model. f Normalised mean thickness for different initial sediment thicknesses, including the envelope distribution. g Probability distribution of tsunami deposit formation under the given earthquake scenario derived from Monte Carlo modelling for SA.

A distinct pattern emerged when the effects of variations in the initial sediment thickness available for transport on the CSD were examined (Fig. 5e). The CSD increases with the increase in the thickness to 3 m. However, surpassing this threshold resulted in a progressive decline in the CSD. When the effect of the sediment on the fluid density within Delft3D was turned off, the CSD remained steady, irrespective of the initial sediment availability. This finding underscores the crucial role sediment concentration plays in the flow density and, hence, in the final CSD, as demonstrated by Sugawara et al.33.

In both scenarios, the envelope distributions captured the highest expected variability in the total sediment deposition based on the input parameters for grain size and initial sediment thickness (Fig. 5b, f). We computed a 5% CSD variability for the grain size variability (within 2σ). In contrast, we calculated a 2% variability (within 2σ) for the initial sediment thickness. After calibrating the grain-size envelope distribution using actual data from the Jogan tsunami (Fig. 5c), we observed a shift in the envelope distribution towards greater CSD values. This shift is attributed to the higher proportion of finer particles in the actual grain size distribution. This calibration also resulted in a tighter confidence interval (Fig. 5d).

In the Monte Carlo simulation conducted to accommodate the inherent uncertainties in the grain size and initial sediment availability, the mean of the resulting distribution of P(Tsu∣EQ) was determined to be 0.94, with a 95% confidence interval ranging from 0.91 to 0.97 (Fig. 5g). This distribution reflects the influence of these uncertainties on the modelling of tsunami sediment transport. As these uncertainties are inherent characteristics of the system, the derived distribution was applied across all stochastic earthquake scenarios.

Post-depositional effects

The Monte Carlo simulation generated a set of 1000 potential tsunami deposits for each earthquake scenario. These results incorporate random erosion, varying between 10% and 50% of the initial value, followed by a triangular distribution with a mean of 70%.

The actual conditions for erosion are influenced by several physical, environmental, and biologically induced factors, such as slope variations due to older beach ridges, proximity to coastal areas, and pluvial-derived water flow. However, precisely quantifying the specific erosion conditions that affect unconsolidated deposits, such as paleotsunami deposits, remains a challenge34. A randomised approach is a practical solution for managing epistemic uncertainty. This methodology has been further validated by global observations, indicating that pedogenesis and hydrometeorological conditions are significant contributors to the erosion of tsunami deposits35,36. This approach yielded 30,000 potential results for 30 earthquake scenarios.

By examining the Jogan paleotsunami deposit dated to 869 C.E., with an average burial depth of 40 cm, we calculated a natural compaction factor of 0.01%. This represents a reduction of 4 mm in the original thickness, in line with the Sadler’s effect37. It is important to note that this estimate does not account for other factors that could influence compaction, such as water movement, porosity, and bioturbation. In addition, although carbonate dissolution was not a significant factor in the Sendai Plain, anthropogenic deformation, primarily due to farming activities, played a notable role.

Likelihood

By computing the normal-sampled Kling–Gupta Efficiency (KGE) metric, we assessed the model performance of the earthquake, tsunami, and erosion scenarios against the data derived from Jogan deposit (Table 1). Among 30,000 simulations, KGE values were widely dispersed, ranging from roughly −0.90 to 0.12. Negative scores primarily signal a weak correspondence between the model predictions and observed data and marked disparities in bias and variability. Conversely, higher positive KGE values reflect better, albeit not exact, agreement between the model outputs and actual observations.

Our data revealed a broad range of individual KGE components. The correlation coefficient (r) spanned from −0.21 to 0.12. Negative values indicate discordant trends between modelled and observed data. These were mainly associated with earthquakes with Mw < 8.86. In contrast, positive r values indicate concurrent increases or decreases in model and observed data. However, the highest r value of 0.12 represents a weak but positive correlation, suggesting an agreement between the model and observed data.

Regarding the bias ratio (alpha), values fluctuated considerably between 0.05 and 1.99. Values below 1 indicate instances in which the model underpredicts relative to observed data. In contrast, alpha values above 1 indicate a model propensity. Finally, the variability ratio (beta) presented a diverse range of 0 to 1.72. This extensive spread reflects the varying success of the model in accurately capturing the variability in the observed data. The ideal beta value is 1, denoting a perfect match in the variability between modelled and observed data.

Based on the KGE distribution for each earthquake scenario, a performance distribution was obtained (Fig. 6a, b). This distribution encapsulates the combined probabilities of all potential interactions between earthquakes, tsunamis, and post-depositional processes, leading to the formation of a paleotsunami deposit analogous to the Jogan deposit. In other words, the distributions represent the conditional probability of generating a determined paleotsunami, given the complex interplay of post-depositional effects, tsunami, and earthquake occurrences p(paleoTsu∣postD, Tsu, EQ). Generally, larger magnitudes tended to have a higher correlation. However, the distribution was broader for higher earthquake magnitudes.

a Likelihood of the 30 earthquake scenarios from stochastic sampling ordered by increasing magnitude. b Box plot summarising the likelihood distributions of the 30 selected earthquake scenarios, showcasing the variability and range of each distribution. c Normalised posterior distributions of the earthquake scenarios within a confidence interval of 1σ, with the probabilities adjusted using the Bayesian hierarchical model. d Normalised box plot of the posterior distributions of the earthquake scenarios within a confidence interval of 1σ, providing a visualisation of the final scenario probabilities.

Posterior distribution

The posterior distribution was computed using a point-wise approach the parameters given the observed paleotsunami deposits by evaluating the product of the likelihood and prior distributions at discrete points in the parameter space. After constraining our analysis to the first standard deviation of the earthquake scenarios, we obtained nine distinct scenarios with tightly bound distributions (Fig. 6c and Table 2). Subsequently, we calculated the posterior distribution p(posDep, Tsu, EQ∣paleoTsu)) by integrating the probabilities associated with earthquake locations, magnitudes, tsunami occurrence uncertainties, and post-depositional effects. The outcome of this analysis suggests that the likelihood that the five earthquake scenarios with magnitudes of Mw 9.01 and Mw 9.05, each in a distinct location and slip distribution, were the causative events of the Jogan paleotsunami is higher (Fig. 6d and Table 2). Close possibilities for these scenarios were earthquake sources of magnitudes Mw 8.84 and Mw 9.10, with different locations and slip distributions for each case.

Posterior predictive checks

The posterior predictive checks (PPC) assessed the model’s fit to the observed paleotsunami deposit data. The observed data and the replicated datasets generated from the posterior predictive distribution were compared using histograms with kernel density estimates (KDE) (Fig. 7).

The observed data shows a high frequency of lower deposit thickness values, with the frequency decreasing as the deposit thickness increases. The KDE overlay of the replicated model data indicates that the model captures the general trend of the observed data. However, discrepancies can be noted in specific thickness ranges. These discrepancies highlight areas where the model may require further refinement to represent the observed data more accurately. The PPC results demonstrate that the model is reasonably consistent with the observed paleotsunami deposits, suggesting that the model-generated data generally aligns with the observed data.

Discussion

Evaluating paleotsunami sources: integrating independent variables of location and magnitude

By treating location and magnitude as independent variables, we could separately evaluate probable locations and magnitude-dependent recurrence interval probabilities of paleotsunami sources. This approach is useful for constraining the locations of paleotsunami events, thereby providing insights into the locations of tsunamigenic earthquakes for hazard assessment. Drawing on the methodologies outlined by Goda et al.38, we integrate the principles of multivariate probabilistic models in our study. These models differentiate quantitatively between tsunamigenic and non-tsunamigenic earthquakes and incorporate uncertainty and dependency on multiple source parameters.This is particularly suitable for our subsampling technique, which reduced the sample space to only tsunamigenic events.

Integrating earthquake probabilities for location and magnitude directly improves paleotsunami source tracing. It allows for the analysis of multiple scenarios (Fig. 2). Specifically, this approach enables a more refined and scientifically robust understanding of potential paleotsunami sources, considering that similar tsunami sources can have different locations, slip configurations and magnitudes and generate similar deposits. After completing the analysis of other priors and likelihoods and determining the list of source candidates, the earthquake samples that ranked high can inform the PTHA input as constrained sources, enhancing the accuracy and relevance of the hazard assessment.

These inputs are widely used in PTHA assessments, emphasising the importance of accounting for paleotsunami-deposits-derived earthquake slip distributions’ spatial and temporal variability in tsunami hazard modelling for a better hazard assessment4,6.

Using the long-term probability distribution for specific events in our Bayesian framework accounts for the inherent uncertainty and variability in earthquake occurrence. By integrating the Gutenberg–Richter law into our Bayesian inference model, we leverage the statistical properties of earthquake occurrence to inform our prior distributions. This method acknowledges that higher-magnitude earthquakes have a lower probability of occurrence. Since it is challenging to precisely define the probability of recurring events, especially for large earthquakes with long recurrence intervals, using the long-term probabilities described by the Gutenberg–Richter law is the most suitable approximation. This probabilistic framework enhances the robustness of our hazard assessment by systematically incorporating the uncertainty associated with earthquake magnitudes.

However, SA revealed that the choice of the b-value significantly contributes to the uncertainty in calculated earthquake probabilities, particularly for larger magnitudes. Consequently, the uncertainty quantification analysis underscores the importance of accurately determining the b-value for seismic hazard assessment and paleotsunami source tracing. There is considerable debate regarding the appropriate b-value for subduction regimes, such as the Japan Trench. Studies have shown significant spatial and temporal variations in b-values, ranging from 0.5 to 1, especially following the 2011 Tohoku earthquake. These variations reflect the complex seismicity patterns and stress distributions along the trench39,40. This variability represents an additional challenge in paleotsunami source tracing, given the changes that could have occurred since the paleotsunami event and the potential substantial modification of calculated probabilities. It also adds a layer of epistemic uncertainty, and additional studies are needed.

Estimated coupling coefficients were used to derive the probability of earthquake nucleation. However, this approach might introduce new sources of uncertainty. For example, the coupled state of a trench during the development of a paleotsunami may have been under a different stress regime. If considered, the location probability method could assume homogeneous coupling, non-informative prior, implying equiprobability in the location of earthquake scenarios. This is also useful for other subduction areas where the coupling state must be calculated. In such cases, the locations of scenarios are not necessarily constrained by any specific coupling model. This approach permits the assessment of probable locations of paleotsunami sources, independent of the behaviour of the subduction source.

As an additional constraint, recurrence intervals established from paleotsunami deposit dating can be used to refine our understanding of potential seismic sources. Each probable source in our analysis is associated with a specific magnitude according to the principles of the Gutenberg–Richter law, which in turn has a probability of recurrence over set periods. We can more effectively constrain likely sources by aligning the recurrence intervals derived from paleotsunami deposits with specific recurrence probabilities. This procedure links paleotsunami recurrences to seismic origins. It leverages the recurrence probability of the magnitude as a critical criterion for identifying potential sources. This approach enables the targeted and informed assessment of paleotsunami origins, providing insights into geological evidence and probabilistic analysis.

Our study focused on a single survey site from the well-documented 869 Jogan paleotsunami deposit. We acknowledge that this presents limitations, as incorporating data from multiple deposits could reduce the non-uniqueness in source region determination and enhance the robustness of the Bayesian framework. This makes our approach widely applicable in a global context. However, while it is novel in its application of Bayesian methods to paleotsunami source tracing, we recognise that the method used to implement the Bayesian framework here approach has limitations regarding statistical thoroughness and needs to be strengthened in the future.

Future research should aim to integrate multiple paleotsunami deposits to validate and refine our methodology further and include a sensitivity analysis of the coupling model choices to evaluate the impact of different slip distributions on flood simulation outcomes, enhancing the robustness and reliability of the hazard assessment.

Analysing coastal morphology: navigating uncertainties in tsunami deposit formation and preservation

Utilising aerial photographs from 1961 for the morphological reconstruction of the Sendai Plain effectively reduced uncertainties related to coastal morphology changes (Fig. 5).These images depict the coastline before introducing significant artificial structures such as sea walls. Based on using topography from a period with minimal human intervention, our model more closely represents the coastal landscape during the time of the paleotsunami. Although reconstructing the exact topographic conditions of the paleotsunami era is challenging, this approach is crucial. It enables the more accurate simulation of paleotsunami or even far-in-time historical tsunami deposit formation against a landscape that has undergone minimal artificial alterations, enhancing the numerical modelling reliability. Utilising these historical images allowed for a more precise approximation of the conditions during the paleotsunami, providing a baseline representative of the natural state of the coastline. Such a baseline is required to understand sediment dynamics during the event. SA plays a critical role in accounting for uncertainties associated with environmental conditions during a paleotsunami (Fig. 5). Given the temporal distance from these events, precise knowledge of details such as the date of occurrence or sediment availability is elusive. However, the sedimentological characteristics of the paleotsunami deposits provide crucial insights into the sedimentary context of the event. The uncertainty surrounding sediment availability, which demonstrates significant seasonal variation, presents a considerable challenge for our study. This variability is well documented in the coastal areas of Australia and Japan41,42. Precisely quantifying the type and volume of sediment present during a specific paleotsunami event is nearly impossible because of limitations in accurately dating such events. This issue is particularly pertinent for most paleotsunamis, although it may be less critical for historical ones. For instance, the Jogan tsunami that occurred in July likely coincided with the typhoon season and higher precipitation, suggesting increased sediment availability. However, confirming the amount of sediment deposited during this period remains challenging. SA can be used to circumvent this obstacle, providing a route towards approximating the actual conditions during a paleotsunami (Fig. 5e).

Further precision was added through grain-size SA and the calibration of the results using empirical grain-size data gathered from actual paleotsunami deposits. For our modelling, we used Jogan deposit grain size analysis to calibrate and shift the envelope distribution from the sensitivity analysis (Fig. 5c, d). This calibration process highlights the crucial role of real-world data in fine-tuning predictive models. By aligning our models with observed evidence, we ensured that they were better suited for mirroring the conditions and outcomes of actual tsunami events.

Monte Carlo simulations for sediment availability and grain size are based on the simplified assumption that these parameters are independent of the earthquake magnitude. This assumption underpins the model’s approach to parameter variability, which suggests that uncertainties in grain size and sediment availability uniformly affect the processes across different magnitudes. Although the outputs of our model are intrinsically linked to the earthquake magnitude, the independence of the grain size and sediment availability from earthquake magnitude is a key aspect of our current methodology.

However, this approach requires further refinement. A more nuanced approach is necessary if further analysis reveals a significant interdependence between these parameters and modelled outcomes that vary with the earthquake magnitude. In such cases, conducting SA across different magnitude ranges could provide insights into the interaction of these model parameters with model outputs at varying earthquake magnitudes. This modification is crucial for improving the accuracy and reliability of our model in representing the complex dynamics of sediment transport during tsunamis.

Additional sources of uncertainty inherent in our coastal simulations must be acknowledged. Our model omits factors such as cohesive sediments, larger particles such as gravel, and variability in topographic roughness. While these elements can be incorporated in future studies, it is essential to recognise that their inclusion does not necessarily yield technical improvement. Accounting for the transport of cohesive sediments and coarse particles and introducing spatially variable roughness introduced additional layers of complexity and uncertainty into the models. These aspects are important for a more comprehensive and nuanced representation of the coastal environment during paleotsunamis. Thus, while these improvements can potentially enhance the overall reliability and applicability of the results in paleotsunami source tracing, they also require careful consideration of new uncertainties introduced into the modelling approach.

This study features the first model in which post-depositional erosion and compaction factors are integrated into computations for paleotsunami source identification. Our methodology assumes that erosion is a stochastic process that does not correlate with regional rainfall rates. The almost flat topography of the Sendai Plain (<1°) coupled with the morphology reconstruction resolution of 15 m justified our decision to refrain from incorporating slope variations into our calculations.

However, our erosion estimate, which ranged between 30% and 50%, carries uncertainties related to the specific meteorological conditions of the study region (Fig. 8). These estimates leverage insights derived from studies conducted in various locations, from Japan to diverse tropical climates such as Peru and Indonesia35,36. This wide range of applicability represents an additional uncertainty layer.

Refining earthquake magnitude estimations in paleotsunami source identification

We adopted a sophisticated approach to determine the feasibility of identifying the exact source of a specific paleotsunami deposit. Considering the high degree of uncertainty highlighted in the first subquestion (see Introduction), the emphasis shifted from identifying a singular earthquake source to generating a list of potential sources with associated probabilities (Figs. 1 and 6). This approach aids in constraining hazard assessments more realistically by providing a list of probable sources based on paleotsunami deposit analysis, including uncertainties. This quantitative method allows for considering multiple potential sources, helping to avoid underestimation or overestimation and contributing to a more data-driven assessment. Our methodology entails a comprehensive approach that manages uncertainties individually and incorporates probability variations based on the location and magnitude of earthquake scenarios and factors in morphological reconstruction alongside random erosion factors (Fig. 1). Based on this approach, we notably reduced the uncertainty and narrowed down potential earthquake magnitudes compared with previous studies. Sugawara et al.10 estimated a magnitude of Mw 8.4 and Namegaya and Satake23 reported a magnitude of Mw 8.6. In contrast, nine earthquake scenarios are estimated in our study, with magnitudes ranging from Mw 8.84 to 9.1, within a 1σ confidence interval among a pool of 1347 samples (Fig. 6c, d).

Besides the different approach, one of the reasons for this remarkable difference lies in the topography used in our model. We used reconstructed topography from aerial photographs, which firmly controlled the inundation patterns. In contrast, the studies mentioned above used contemporary topography with corrected shorelines. This difference in topographic data likely influenced the magnitude estimates.

To address the second sub-question, our study leverages Bayesian inference methods to decompose the complex process of paleotsunami deposit formation into subprocesses governed by individual phenomena (Fig. 1). This approach allowed us to trace paleotsunami sources more accurately and comprehensively. The result of our research is a ranked list of transitive earthquake scenarios, each quantitatively estimated for its likelihood of being the cause of the formation of the paleotsunami deposit (Fig. 6c, d).

Regarding the computation of the posterior distribution, the point-wise approach is suitable for models with relatively low dimensionality. However, this method has several disadvantages. As the number of parameters increases, the parameter space grows exponentially, making point-wise calculations computationally expensive and potentially infeasible for high-dimensional models. Although our PPC showed a good correlation between the posterior results and the observed paleotsunami deposits, future work is needed to evaluate using Markov Chain Monte Carlo (MCMC) algorithms to compute the posterior distribution. This also applies to the computation of all the priors. Different statistical techniques should be evaluated to assess discrepancies better and improve the model’s accuracy.

Our innovative method holds significant promise when applied to other coastal regions vulnerable to tsunami hazards, such as the Nankai Trough in Western Japan, Indonesia, and Chile, where historical paleotsunamis are evident but mostly found in single sites. The Bayesian hierarchical methodology extends beyond tsunami analysis and provides utility for studying other complex paleoevent phenomena through segmented analysis.

Future research should be focused on determining the minimum number of field samples required for a robust analysis using this approach, thereby enhancing its efficiency and application in tsunami risk assessment. Evaluating the impact of non-informative and varying informative priors at the earthquake generation level is also crucial. This evaluation will pave the way for more detailed assessments and a better understanding of how source model selection influences paleotsunami source assessments. Therefore, assessing the sensitivity of using different coupling models is essential. In this research, we employed the model by Loveless et al.30, developed before the 2011 TOT event, and assumed limited knowledge about the probability of generating an earthquake in the foreseeable future. However, based on seafloor geodetic data, more precise coupling models, such as Iinuma43, were developed after the 2011 TOT event. Consequently, evaluating the differences between models with varying levels of information will help us better understand the implications of selecting a specific informative prior. The same applies to non-informative priors, which assume a complete lack of knowledge of the subduction zone. Also, future studies should directly compare this new method with previous approaches to further validate and refine our approach. Such comparisons will help assess and quantify the differences more clearly, providing deeper insights into the impact of topographic data and methodological approaches on magnitude estimates.

New surrogate model for tsunami modelling

Our approach introduces a surrogate model for tsunami research, characterised by a unique method of sample selection and the implementation of a Bayesian hierarchical process. It involves selecting a finite number of samples from the stochastic pool, reducing the sampling space for the sources of interest, and selecting a constrained number of samples for subsequent analysis. This strategy enables a preliminary analysis of the Bayesian results to identify sources with higher potential. Hence, it reduces the sampling space for refined calculations, a feature inherent in Bayesian inference theory29,44. One significant advantage of this methodology is a consequent reduction in computational time and resources, leading to a substantial improvement in the efficiency of the process.

Methods

In this section, we introduce the Bayesian hierarchical framework we employed to identify the sources of the paleotsunami deposits. We then describe the characteristics of the paleotsunami deposit of interest and the source area. Subsequently, we discuss the levels used in the Bayesian framework and its components.

Bayesian hierarchical framework

Bayes’ theorem is a principle of probability theory and statistics that describes how to update the probabilities of hypotheses when evidence is given44.

Bayesian hierarchical models, also known as multilevel or hierarchical Bayesian models, are statistical models that are used to estimate the parameters of the posterior distribution using the Bayesian method. These models involve parameters that are modelled as random variables, forming a hierarchy of parameters29. This enables complex models to be expressed and solved in a modular manner:

where y represents the observed data, θ are the parameters of the process, and ϕ are the hyperparameters.

We postulate that the formation process of paleotsunami deposits was complex. However, it can be conceptually divided into several segments based on the processes involved, governing phenomena, and equations applicable to each. The first one pertains to the generation of earthquakes and consequent deformation of the seafloor (EQ)30,45,46. The second encompasses tsunami modelling, including hydrodynamic interactions such as sediment transport and morphological changes (Tsu)17,47,48,49. The third level involves post-depositional effects accounting for erosion and compaction (postD). These three stages encompass the main processes involved in the trajectory from the inception of the tsunami to the identification of paleotsunami deposits.

Paleotsunami deposit

We used well-documented paleotsunami deposits related to the 869 Jogan tsunami in the Sendai Plain10. A total of 330 drilling sites were distributed across seven transects (Fig. 4).

Among them, 88 sites exhibited paleotsunami deposits (Fig. 4). The mean depth of the upper contact of the paleotsunami layer is 0.4 m. The locations and recorded paleotsunami thicknesses of these sites were utilised to identify potential sources of the paleotsunami21. Notably, ‘not-found’ values were integral to our calculations because they indicate either non-deposition or erosion, both of which are relevant to the dataset.

Level 1: tsunamigenic earthquake scenarios

The Japan Trench was selected as the tsunamigenic area for the Sendai Plain, as shown in Fig. 1. Loveless et al.30 calculated interseismic coupling prior to the 2011 Tohoku-oki earthquake and tsunami. Using a systematic sampling method, stochastic earthquake samples were generated by varying the earthquake magnitude in steps of 0.2, covering a range of 7.8 to 9.2. For each magnitude, the corresponding fault length and width were calculated using the scaling relationships for earthquake source parameters, represented by the equations \(l=1{0}^{(0.55 \, \times \, {M}_{w}-2.19)}\) and \(w=1{0}^{(0.31\times {M}_{w}-0.63)}\), respectively. These dimensions were then adjusted to align them with the base unit of the fault length. This method also includes conditional checks for the feasibility of scenarios based on specific criteria such as subfault locations relative to the water depth. This resulted in 1947 samples, the maximum number the simulation could generate with the given magnitude step.

Our analysis was focused on samples that could thoroughly inundate the extent observed in the Jogan deposits in the Sendai Plain. It was determined that earthquakes with magnitudes below Mw 8.8 only led to partial inundation of this region. These smaller magnitudes did not reach the maximum lateral extent of the corrected shoreline Jogan paleotsunami. Given that such magnitudes could not generate the observed paleotsunami deposits, they were excluded from further analyses. From the initial 1947 samples generated through stochastic sampling, 162 samples had magnitudes greater than 8.78. To ensure computational feasibility while maintaining a robust representation of potential earthquake scenarios, we downscaled this number to 30 events. This selection was based on balancing computational feasibility, sensitivity and ensuring a representative sample size to capture variability in earthquake scenarios effectively. Consequently, we selected 30 samples with magnitudes ranging from Mw 8.8 to 9.2 for a more detailed examination. This selection was based on the likelihood that these samples would cause extensive inundation, which is consistent with paleotsunami deposits. By using these samples, we computed the seafloor deformation resulting from shear and tensile faults in an elastic half-space45 to gain a deeper insight into potential seismic sources of the observed paleotsunami evidence.

The sensitivity analysis indicated that 30 samples provided sufficient coverage of the variability in earthquake scenarios while remaining computationally manageable. The sensitivity analysis involved comparing the results obtained using different numbers of samples and assessing the impact on the modelled inundation patterns and seafloor deformations. The results showed that increasing the sample size beyond 30 did not significantly change the variability captured, as the normalised standard deviation remained around 0.5 (Fig. 9). However, reducing the sample size below 20 led to a noticeable decrease in the variability representation. This approach allowed us to perform a comprehensive assessment without compromising the model’s robustness.

Probability calculation

We used the Gutenberg–Richter law to characterise the frequency-magnitude distribution of the stochastic earthquake scenarios, which is often expressed as:

where N is the number of earthquakes greater or equal to magnitude M in a specified period, and a and b are constants specific to a particular time and place.

The cumulative distribution function of the Gutenberg–Richter law, which represents the probability of an earthquake with a magnitude below or equal to M, is given by:

where M is the magnitude of the earthquake, and Mmin is the minimum observed magnitude.

We used a dataset of instrumentally recorded earthquakes along the Japan Trench provided by the Japanese Meteorological Agency to calculate the occurrence probabilities of earthquake scenarios based on magnitude. Historically, earthquakes with magnitudes greater than Mw 8 include the Mw 9.1 event on March 11, 2011; Mw 8.2 event on May 16, 1968; Mw 8.4 event on March 2, 1933; and Mw 8.5 event on June 15, 1896. For our calculations, we adopted a b-value of 0.6 in line with the methodological approach employed by Nanjo et al.31. Given that the Jogan deposit, perceived as the precursor of the 2011 Tohoku-Oki tsunami, has an established age of 869 years C.E., we used a period of 1000 years for our probability calculations.

Sensitivity analysis for the frequency-magnitude distribution

To perform the sensitivity analysis of the b-value, we varied it from 0.1 to 1.0 in steps of 0.1, capturing a wide range of scenarios. For each b-value, we calculated and normalised the earthquake magnitude probabilities using the Gutenberg–Richter law. We then assessed the impact of different b-values on the probability distribution. Additionally, we quantified uncertainty by assuming a normal distribution for the b-values centred around 0.6 with a standard deviation of 0.1. We generated 1000 samples from this distribution, calculated each earthquake’s probabilities, and computed their mean and standard deviation. The results, visualised to show the distribution of probabilities and their confidence intervals, highlighted the model’s sensitivity to such differences.

Earthquake location

Regarding the earthquake’s location, we associated the probability of each scenario with the mean of the interseismic coupling coefficients and their confidence intervals as outlined by Loveless et al.30. Given that these coupling coefficients range from 0 to 1, which represent the degree of locking in the subduction zone (i.e., the higher the coefficient, the greater the locking), we can reasonably infer that these coefficients also illustrate the probability of earthquake generation contingent on the distribution of locking.

Therefore, the probability of an earthquake scenario, P(EQ), can be calculated as the product of the conditional probability of a given magnitude, assuming an earthquake occurs, and the conditional probability of a specific location, given an earthquake occurs. So, we have:

where \(P(\,{\mbox{Magnitude}}| {\mbox{Earthquake}})=1-1{0}^{(-b(M-{M}_{\min }))}\), is the probability of a certain magnitude given an earthquake, M is the magnitude of the earthquake, and \({M}_{\min }\) is the minimum observed magnitude. The probability P(Location∣Earthquake) is defined as the mean of the interseismic coupling coefficients, representing the likelihood of earthquake nucleation at specific locations along the subduction zone. The distribution of these probabilities is derived from historical seismic data and the spatial distribution of coupling coefficients, providing a probabilistic measure of earthquake likelihood across different locations.

The assumption that earthquake magnitude and location are independent events, while a simplification, plays a crucial role in our analysis by effectively incorporating inherent uncertainties about the precise locations of tsunamigenic earthquakes. This approach, albeit a simplification, assumes independence and, hence, unpredictability of magnitudes, aligns with the commonly accepted practices in seismic research. However, recent studies have sparked a debate on the feasibility of earthquake predictions based on the dependence of earthquake magnitude on past seismicity. While clustering in time and space is widely accepted, the existence of magnitude correlations remains more questionable50,51. Our model’s approach, acknowledging these complexities, is a pragmatic solution to the challenging task of earthquake uncertainty assessment.

Level 2: tsunami occurrence

Morphology reconstruction

Extending approximately 9 km between the Natori and Nanakita rivers (Fig. 4), the coastline of the Sendai Plain is marked by a series of shore-parallel beach ridges that highlight the progradation of the alluvial plain10. Because of the historical significance of this area, a considerable amount of aerial imagery dating back to the 1950s is available. This archival resource enables access to regional topographic information prior to human intervention. We reconstructed the morphology of the Sendai Plain using 33 aerial photographs taken in 1961. These photographs show areas with fewer man-made topographic modifications, such as seawalls. Simultaneously, the resolution, spacing, and number of photos allowed for the stereographic reconstruction of the area. We used the ortho mapping workflow of ArcGIS Pro to scan aerial imagery52.

The aim of this morphological reconstruction was to simulate the formation of a paleotsunami deposit against a background of minimally modified topography, thereby reducing the effects of modern topographic features on our numerical modelling. We used scanned aerial photographs from 1961 onwards. Before this time, no significant coastal structures were identified. This approach allowed for more accurate and authentic modelling of paleotsunami deposit formation.

In this reconstruction, we acknowledge that the paleocoastline during the 869 C.E. Jogan tsunami was 1 km farther inland compared with the present shoreline (Fig. 4). The decision against the adjustment of the shoreline position to reflect the Jogan-era coastline in our morphological model has two reasons: (1) uncertainties in the height and width of paleo-beach ridges, which significantly impact sediment transport processes and quantities, complicate such an adjustment, and (2) to maintain consistency with the current geographical context and for practical reasons related to available data and the coastal morphology uncertainty, we used the 1961 shoreline as a baseline. However, we corrected the position of the Jogan tsunami deposits by moving them 1 km seaward to align with the present shoreline. This adjustment ensures that our model reflects the current coastal configuration while considering historical changes, providing a balanced approach to understanding modern and historical coastal dynamics.

Tsunami modelling

By using the seafloor deformation values calculated using Okada’s model45, we simulated tsunami propagation and inundation using Delft3D-FLOW. In this method, the propagation of tsunami waves is computed based on a free-surface gradient. Delft3D-FLOW computes unsteady flow, sediment transport and feedback morphology changes, including parameters such as roughness, initial sediment thickness, grain size, and density48. Simulations were performed using structured grids and domain decompositions. Six domains were used to calculate wave propagation from the tsunami source to the survey area, with spatial resolutions of 15, 45, 135, 405, 1215, and 3645 m, respectively.

Sensitivity analysis (SA) was conducted to account for variability in the grain size and initial sediment availability in cumulative sediment deposition (CSD). We used a Mw 9.1 earthquake will run the same tsunami simulation for sediment transport and morphological changes. We conducted sensitivity analyses for both grain size and sediment availability.

Sensitivity analysis for grain size

We conducted SA for grain size utilising seven distinct sizes: 187, 214, 241, 267, 294, 320, and 348 μm (Fig. 5a). Our choice of specific grain sizes was based on the results reported by Takahashi et al.32, who demonstrated the critical role of sand grain size in sediment transport during tsunamis. Their study utilised a flume experiment in a closed rectangular channel with a wavemaker, assessing sediment transport rates for sand grains with median diameters of 166, 267, and 394 μm. Their results indicated that the coefficients for bed and rising loads in sediment transport significantly depend on the grain size, influencing the sediment transport rate32). This dependency highlights the necessity of considering a range of grain sizes to accurately model sediment dynamics in tsunami scenarios. This range was also used by Masuda et al.12 for tsunami deposit source identification. The CSD from each model was normalised using the CSD of the 267 μm sample, serving as the standard parameter across all tsunami computations in the general model. We then normalised the thickness distributions for each of the seven models and determined their envelope distributions. This approach provides the broadest possible spectrum of sediment thickness within the analysed grain size range, reflecting the influence of grain size on sediment transport characteristics, as observed in previous studies.

Calibration using the actual grain size

We calibrated our modelling outputs using real grain-size data from the Jogan deposit. We used the grain size information extracted from a previous study10 and computed the ensemble average distribution of the grain size. By multiplying this empirical distribution by the distribution obtained from the SA, we fine-tuned the output of our model to mirror the actual grain-size characteristics observed in the Jogan deposit. This process enabled the model to encapsulate the variability in sediment thickness associated with distinct grain sizes, thereby providing a more detailed description of the sediment dynamics within the study area (Fig. 5d).

Sensitivity analysis for sediment availability

To investigate the effect of the initial sediment availability on the CSD, we set the model to a uniform initial sediment thickness. This was performed using thicknesses of 2, 3, 4, 5, 6, 7, and 8 m (Fig. 5e). This range was carefully chosen to encompass the diversity of initial sediment thickness layers observed in previous studies. For example, Zeiler et al.53 reported sediment thicknesses ranging from 0.4–1.5 m in shallower zones and 2–3 m in deeper zones in the North Sea. In addition, this range accounts for uncertainties associated with sediment configurations specific to areas such as Sendai Bay. For comparison, each model’s CSD was normalised using the CSD of the 4 m sample, which was designated as the reference parameter for all tsunami calculations by using a general model. Subsequently, we computed the normalised thickness distributions for the seven models and established an envelope distribution to represent the widest conceivable range of sediment thickness in relation to the initial sediment availability.

Monte Carlo simulation to propagate sensitivity analysis uncertainty

To incorporate the uncertainties associated with the grain size and initial sediment availability into the calculation of P(Tsu∣EQ), we used a Monte Carlo simulation approach. We generated random samples from uniform distributions characterised by the mean and confidence intervals derived from the sensitivity analysis for each parameter. Specifically, grain size samples were drawn from a uniform distribution ranging from 0.95 to 1.05, with a mean of 1.01. Similarly, sediment availability samples were drawn from a uniform distribution spanning from 0.98 to 1.01, centred around a mean of 1.00. We conducted 10,000 simulations to ensure the robustness of the statistical results. For each simulation, we calculated P(Tsu∣EQ) using the sampled grain size and sediment availability values. Because the sensitivity analyses indicated that grain size variations contributed approximately twice as much to the uncertainty in P(Tsu∣EQ) than variations in sediment availability, we appropriately weighted the grain size values in this computation. The output of this procedure was a distribution of P(Tsu∣EQ) values incorporating the propagated uncertainties from the grain size and sediment availability parameters. We then computed the mean and standard deviation of this distribution to quantify the central tendency and dispersion of P(Tsu∣EQ), thereby reflecting the inherent uncertainties in these parameters. Note that this approach assumes that the relationship among grain size, sediment availability, and the modelled outcome P(Tsu∣EQ) is independent of the earthquake magnitude. This assumption is valid within the context of our sensitivity analysis, which was conducted using a fixed earthquake magnitude. This allows for the simplified but practical application of the derived P(Tsu∣EQ) distribution across all stochastic earthquake scenarios, explicitly ranging from Mw 8.8 to 9.2. This assumption represents a critical simplification that benefits the generalisation of our results. However, we acknowledge that a different analytical approach is required for various magnitude ranges if the relationship between these parameters and tsunami probability significantly depends on the earthquake magnitude.

Level 3: likelihood

In our Bayesian framework, the term ‘likelihood’ is defined as the probability of observing a specific paleotsunami deposit data under consideration, given the assumed earthquake, tsunami, and post-depositional conditions. The likelihood function is essential in Bayesian inference as it quantifies how well different model parameters explain the observed data. The procedure for calculating this likelihood comprises the following steps.

We assumed the observed data are subjected to various sources of uncertainty, including variability in the slip distribution during earthquake generation, the tsunami-induced morphodynamic process, and variability in sediment deposition and preservation. These sources of uncertainty are modelled using appropriate probability distributions.

For our model, the likelihood function can be expressed as:

where, paleoTsu represents the observed paleotsunami deposit data, postD encompasses post-depositional effects, Tsu refers to the tsunami model variables, and EQ includes earthquake variables, magnitude and location.

Here, we aimed to include the uncertainty in assessing numerical models with a relatively large horizontal resolution (5 m) versus observation points restricted to a sampler resolution of 10 cm in diameter and the implications regarding local topography changes Fig. 10. Additionally, we aimed to assess the post-depositional erosion and compaction. To achieve this, we decomposed the likelihood into two sections. Then we assumed the likelihood as a weighted goodness-of-fit, as follows.

Post-depositional effects

In our evaluation of post-depositional processes that sediments may undergo, we chose to incorporate both erosion and compaction. Post-depositional erosion was assessed using a simple Monte Carlo simulation with a triangular distribution. The bounds for the volume of eroded sediment were derived from the observational data of tsunami deposits linked to the 2004 Indian Ocean tsunami and other tsunami deposits35,36. Reports from these deposits indicate erosion of up to 50% following deposition. The simulation model reached stability after 1000 iterations, which was selected as the cutoff point. After iterating with 30 earthquake samples, 30,000 outputs were obtained. To include the effects of compaction, we estimated the compaction percentage based on estimations made on similar floodplains37. Based on the depth of the upper contact of the Jogan deposit, the amount of compaction was estimated to be 3%.

Goodness-of-fit computation

To assess the fit of our model outputs against the Jogan deposit after accounting for post-depositional effects, we relied on the Kling–Gupta Efficiency (KGE)54. This hydrological model evaluation metric deconstructs the Nash-Sutcliffe efficiency (NSE) and Mean Squared Error (MSE). KGE encapsulates three distinct elements of model performance into a singular metric: correlation, bias (expressed as the mean ratio), and variability (expressed as the standard deviation ratio). This comprehensive approach to performance assessment provides a balance that is not offered by the NSE and MSE, which both prioritise the mean error or squared differences. KGE provides a more even-handed evaluation across the complete spectrum of modelled values, mitigating the sensitivity to extreme values and edges that are inherent in other metrics. This is particularly beneficial for our model, in which the effect of extreme values is undesirable. KGE is defined as follows:

where r is the Pearson correlation coefficient between the observed and simulated values, α(alpha) is the ratio of the standard deviation of the simulated values to the standard deviation of the observed values, and β (beta) is the ratio of the mean of the simulated values to the mean of the observed values.

In our study, we derived the observed values from the thickness values of the Jogan deposit, whereas we obtained simulated values from the model, accounting for post-depositional effects. Our reference simulated value obtained from the model output was computed using the average of the nearest data points within a 15 m radius of the observed point, a strategy designed to accommodate the grid resolution inherent in the modelling process. We normalised the KGE for each scenario using the total number of samples. This normalisation process enabled us to compute the distribution of KGE values for each earthquake scenario, with these distributions subsequently depicted using box plots.

To provide a better representation of uncertainty related to local topographic discrepancies, we calculated the normal-sampled KGE as follows:

where KGEi represents the KGE value for the i-th sample. We then computed the mean and standard deviation of these KGE values to construct confidence intervals, assuming a normal distribution:

Finally, we constructed the 95% confidence intervals for the mean KGE:

These illustrations serve as visual representations of the goodness of fit in relation to the paleotsunami layer. Hence, we gathered 1000 KGE values from every earthquake source to calculate the probability distribution for each corresponding earthquake scenario. In this particular context, the KGE quantifies the alignment between the output of our deposition-erosion-compaction model and the paleotsunami deposit, with a potential range of 0 to 1. Consequently, the distribution of all outputs per earthquake scenario signifies the probability that a specific scenario can generate a paleotsunami deposit of a distinct nature. This corresponds to our definition of ‘likelihood’ within the Bayesian framework, which is as follows:

where yi represents the observed deposit thickness at location i, μi is the expected deposit thickness at location i based on the model parameters, σi is the standard deviation representing the measurement error and natural variability at location i, and n is the number of observations. This explicit form of the likelihood function incorporates the KGE as a weighting factor, which enhances the likelihood of scenarios that better fit the observed data, thereby justifying its use within our Bayesian framework.

Posterior distribution: finding potential paleotsunami sources

Finally, we computed the product of the likelihood, tsunami occurrence model, and earthquake probability (prior) to account for the posterior distribution. It represents the probability that a specific scenario responsible for a particular paleotsunami deposit is proportional to the product of the likelihood of the paleotsunami data given the model parameters and the prior probability of the parameters in the model.

We use a point-wise approach to compute the posterior distribution of the parameters given the observed paleotsunami deposits by evaluating the product of the likelihood and prior distributions at discrete points in the parameter space. This involves iterating over all combinations of the parameters. The posterior distribution is defined as follows:

where p(paleoTsu∣posDep, Tsu, EQ) is the likelihood, p(Tsu∣EQ) is the prior distribution of the tsunami process, and p(EQ) is the prior distribution of the earthquakes.

The posterior is then normalised to ensure that the sum of the probabilities over all parameter combinations equals 1.

Posterior predictive checks

To assess the model’s fit to the observed data, we conducted posterior predictive checks (PPC). PPC involves generating new samples of paleotsunami deposits from the posterior predictive distribution and comparing these to the actual observed data. This process evaluates if the generated datasets are consistent with the observed paleotsunami deposits.

Replicated datasets are generated from the posterior predictive distribution. First, each replication randomly selects an erosion percentage from a predefined set. The selected erosion percentage is then applied to the original model data to generate a replicated model dataset:

The replicated model data is adjusted to ensure that all values are non-negative. Subsequently, the adjusted replicated model dataset is added to a list of replicated datasets. This iterative process is performed for the specified number of replications. Consequently, summary statistics for a given dataset are calculated, including the mean, standard deviation, minimum, and maximum deposit thickness values. Jogan’s paleotsunami data and model data files are loaded, and histograms with KDE overlays, respectively, are generated to facilitate comparison. These comparisons help assess how well the model-generated replicated data aligns with the observed data.

Data availability

The datasets generated and analysed during the current study are available in the Zenodo repository, accessible via the following https://doi.org/10.5281/zenodo.13319447. These datasets contain all the necessary information to replicate the results presented in this study.

Code availability

The calculations described in the ‘Methods’ section utilised shared Fortran libraries (for stochastic sampling) and Python libraries such as NumPy, SciPy, Pandas, Matplotlib, PyMC3 and Seaborn. Numerical modelling was conducted using the Delft3D 4 Suite (structured), available at https://www.deltares.nl/en/software-and-data/products/delft3d-4-suite. For further information or specificities about the coding, please contact the corresponding author.

References

Minoura, K., Nakaya, S. & Sato, H. Traces of tsunamis recorded in lake deposits—an example from Jusan, Shiura-mura, Aomori. J. Seismol. Soc. Jpn. 2, 183–196 (1987).

Atwater, B. F. Evidence for great Holocene earthquakes along the outer coast of Washington State. Science 236, 942–944 (1987).

Reinhart, M. & Bourgeois, J. Distribution of anomalous sand at Willapa Bay, Washington: evidence for large-scale landward-directed processes. EOS 1469 (1989).

Grezio, A. et al. Probabilistic tsunami hazard analysis: multiple sources and global applications. Rev. Geophys. 55, 1158–1198 (2017).

Cisternas, M., Garrett, E., Wesson, R., Dura, T. & Ely, L. L. Unusual geologic evidence of coeval seismic shaking and tsunamis shows variability in earthquake size and recurrence in the area of the giant 1960 Chile earthquake. Mar. Geol. 396, 54–66 (2018).

Behrens, J. et al. Probabilistic tsunami hazard and risk analysis: a review of research gaps. Front. Earth Sci. 9, 1-28 (2021).

Ramírez-Herrera, M. T. et al. Tsunami deposits highlight high-magnitude earthquake potential in the Guerrero seismic gap Mexico. Commun. Earth Environ. 5, 198 (2024).

Cummins, P. R., Kong, L. S. & Satake, K. Tsunami Science Four Years after the 2004 Indian Ocean Tsunami. Part II* Observation and Data Analysis 1 edn (Birkhäuser, 2009).

Goto, K., Chagué-Goff, C., Goff, J. & Jaffe, B. The future of tsunami research following the 2011 Tohoku-oki event. Sediment. Geol. 282, 1–13 (2012).

Sugawara, D., Goto, K., Imamura, F., Matsumoto, H. & Minoura, K. Assessing the magnitude of the 869 Jogan tsunami using sedimentary deposits: prediction and consequence of the 2011 Tohoku-oki tsunami. Sediment. Geol. 282, 14–26 (2012).

Ioki, K. & Tanioka, Y. Re-estimated fault model of the 17th century great earthquake off Hokkaido using tsunami deposit data. Earth Planet. Sci. Lett. 433, 133–138 (2016).

Masuda, H., Sugawara, D., Abe, T. & Goto, K. To what extent tsunami source information can be extracted from tsunami deposits? Implications from the 2011 Tohoku-oki tsunami deposits and sediment transport simulations. Prog. Earth Planet. Sci. 9, 65 (2022).

Sato, K., Yamada, M., Ishimura, D., Ishizawa, T. & Baba, T. Numerical estimation of a tsunami source at the flexural area of Kuril and Japan trenches in the fifteenth to seventeenth century based on paleotsunami deposit distributions in northern Japan. Prog. Earth Planet. Sci. 9, 72 (2022).

Mitra, R., Naruse, H. & Abe, T. Estimation of tsunami characteristics from deposits: inverse modeling using a deep-learning neural network. J. Geophys. Res. Earth Surf. 125, 1–22 (2020).

Mitra, R., Naruse, H. & Fujino, S. Reconstruction of flow conditions from 2004 Indian Ocean tsunami deposits at the Phra Thong island using a deep neural network inverse model. Nat. Hazards Earth Syst. Sci. 21, 1667–1683 (2021).

Mitra, R., Naruse, H. & Abe, T. Understanding flow characteristics from tsunami deposits at Odaka, Joban coast, using a deep neural network (DNN) inverse model. Nat. Hazards Earth Syst. Sci. 24, 429–444 (2024).

Jaffe, B., Goto, K., Sugawara, D., Gelfenbaum, G. & Selle, S. P. L. Uncertainty in tsunami sediment transport modeling. J. Disaster Res. 11, 647–661 (2016).

Sugawara, D. Numerical modeling of tsunami: advances and future challenges after the 2011 Tohoku earthquake and tsunami. Earth Sci. Rev. 214, 103498 (2021).

van Duinen, B., Kaandorp, M. L. & van Sebille, E. Identifying marine sources of beached plastics through a bayesian framework: application to southwest Netherlands. Geophys. Res. Lett. 49, e2021GL097214 (2022).

Boumis, G., Geist, E. L. & Lee, D. Bayesian hierarchical modeling for probabilistic estimation of tsunami amplitude from far-field earthquake sources. J. Geophys. Res. Oceans 128, e2023JC020002 (2023).

Minoura, K., Imamura, F., Sugawara, D., Kono, Y. & Iwashita, T. The 869 Jogan tsunami deposit and recurrence interval of large scale tsunami on the pacific coast of northeast Japan. J. Nat. Disaster Sci. 23, 83–88 (2001).

Sawai, Y., Namegaya, Y., Okamura, Y., Satake, K. & Shishikura, M. Challenges of anticipating the 2011 Tohoku earthquake and tsunami using coastal geology. Geophys. Res. Lett. 39, 21309 (2012).

Namegaya, Y. & Satake, K. Reexamination of the a.d. 869 Jogan earthquake size from tsunami deposit distribution, simulated flow depth, and velocity. Geophys. Res. Lett. 41, 2297–2303 (2014).

Tamura, T., Sawai, Y. & Ito, K. OSL dating of the AD 869 Jogan tsunami deposit, northeastern Japan. Quat. Geochronol. 30, 294–298 (2015).

Sangode, S. J. & Meshram, D. C. A comparative study on the style of paleotsunami deposits at two sites on the west coast of India. Nat. Hazards 66, 463–483 (2013).

Kempf, P. et al. Paleotsunami record of the past 4300 years in the complex coastal lake system of Lake Cucao, Chiloé Island, south central Chile. Sediment. Geol. 401, 105644 (2020).

Tetsuka, H., Goto, K., Ebina, Y., Sugawara, D. & Ishizawa, T. Historical and geological evidence for the seventeenth-century tsunamis along Kuril and Japan trenches: implications for the origin of the ad 1611 Keicho earthquake and tsunami, and for the probable future risk potential. Geol. Soc. Lond. Spec. Publ. 501, 269–288 (2020).

The Japan Society of Civil Engineers (JSCE). Tsunami assessment method for nuclear power plants in Japan 2016. https://committees.jsce.or.jp/ceofnp/node/140 (2016).

Haining, R. & Guangquan, L. Modelling Spatial and Spatial-Temporal Data: A Bayesian Approach 1st edn (CRC Press, 2020).

Loveless, J. P. & Meade, B. J. Spatial correlation of interseismic coupling and coseismic rupture extent of the 2011 Mw = 9.0 Tohoku-oki earthquake. Geophys. Res. Lett. 38, 1–5 (2011).

Nanjo, K. Z., Hirata, N., Obara, K. & Kasahara, K. Decade-scale decrease in b value prior to the M9-class 2011 Tohoku and 2004 Sumatra quakes. Geophys. Res. Lett. 39, 1–4 (2012).

Takahashi, T., Kurokawa, T., Fujita, M. & Shimada, H. Hydraulic experiment on sediment transport due to tsunamis with various sand grain size. J. Jpn. Soc. Civ. Eng. Ser. B2 Coast. Eng. 67, I_231–I_235 (2011).

Sugawara, D., Takahashi, T. & Imamura, F. Sediment transport due to the 2011 Tohoku-oki tsunami at Sendai: results from numerical modeling. Mar. Geol. 358, 18–37 (2014).

Wahlstrom, E. Rocks and Unconsolidated Deposits: Origin, Composition, and Properties, Vol. 6, 31–51 (Elsevier, 1974).

Szczuciński, W. The post-depositional changes of the onshore 2004 tsunami deposits on the Andaman Sea coast of Thailand. Nat. Hazards 60, 115–133 (2012).

Szczuciński, W. Post-Depositional Changes to Tsunami Deposits and their Preservation Potential 1st edn, 443–469 (Elsevier, 2020).

Sadler, P. M. Sediment accumulation rates and the completeness of stratigraphic sections. Source J. Geol. 89, 569–584 (1981).

Goda, K., Yasuda, T., Mori, N. & Maruyama, T. New scaling relationships of earthquake source parameters for stochastic tsunami simulation. Coast. Eng. J. 58, 1650010-1–1650010-40 (2016).

Nishikawa, T. & Ide, S. Earthquake size distribution in subduction zones linked to slab buoyancy. Nat. Geosci. 7, 904–908 (2014).

Bürgmann, R., Uchida, N., Hu, Y. & Matsuzawa, T. Tohoku rupture reloaded? Nat. Geosci. 9, 183 (2016).