Abstract

Background

There is no standardized EEG duration guideline for detecting epileptiform abnormalities in patients, and research on this topic is scarce. This study aims to determine an optimal EEG duration for efficient detection of epileptiform abnormalities across different patient groups.

Methods

A retrospective analysis was performed on the EEG recordings and clinical data of patients with a first seizure or epilepsy. Patients were categorized by several factors, including the interval between the last seizure and EEG recording, use of anti-seizure medication (ASM), and seizure frequency. The detection ratio (DR) of epileptiform abnormalities and the latency to their first detection were calculated. Statistical analyses, including chi-square tests, logistic regression, and survival analysis, were used to compare DRs and describe latency times.

Results

In whole-night EEG recordings, the DR was 37.6% in the first seizure group and 57.4% in the epilepsy group. Although the maximum latency was 720 min in both groups, the yield in the first seizure group declined markedly beyond 300 min. Factors significantly influencing the DR were the use of ASM in the first seizure group (P < 0.05) and seizure frequency in the epilepsy group (P < 0.001). In epilepsy patients who had seizures at least once a month or who underwent timely EEG recording (within 24 h after a seizure), the DR was significantly higher and the maximum latency was reduced to 600 min (P < 0.001). In epilepsy patients who had been seizure-free for more than one year, few epileptiform abnormalities were detected after 240 min.

Conclusions

In this retrospective study, we observed a maximum latency of 720 min for detecting epileptiform abnormalities in whole-night EEG recordings. Notably, epilepsy patients with a higher seizure frequency or timely EEG recordings demonstrated both a higher detection ratio and a shorter maximum latency time. For patients exhibiting a low detection ratio, such as those experiencing their first seizure or those with epilepsy who have been seizure-free for more than a year, a shorter EEG duration is recommended. These findings underscore the importance of implementing customized EEG strategies to meet the specific needs of different patient groups.


Introduction

Background

According to the consensus of the International League Against Epilepsy (ILAE) and the International Bureau for Epilepsy, an epileptic seizure is defined as a transient occurrence of signs and/or symptoms resulting from abnormal, excessive, or synchronous neuronal activity in the brain [1]. Epilepsy is a brain disorder characterized by an enduring predisposition to generate epileptic seizures. The diagnosis of epilepsy typically relies on clinical evaluation and electroencephalography (EEG) [1]. Scalp EEG not only captures epileptic seizures but also identifies interictal epileptiform discharges (IEDs), which reflect synchronous activation of neurons over extensive cortical areas. The American Academy of Neurology guideline on the management of an unprovoked first seizure in adults identifies epileptiform abnormalities on EEG as a marker of increased seizure recurrence risk [2]. These abnormalities therefore play a crucial role in the diagnosis and treatment of epilepsy [3, 4].

There is no standardized guideline on the EEG duration needed to detect epileptiform abnormalities. Guidelines from the American Clinical Neurophysiology Society and the Canadian Society of Clinical Neurophysiologists recommend only a minimum of 20 min for routine EEGs and 30 min for sleep EEGs [5,6,7]. Numerous studies have explored ways to improve the detection of epileptiform abnormalities by EEG. Tutkavul K. et al. showed that extending the recording time from 20 to 45 min increased the likelihood of detecting IEDs [8]. Craciun L. et al. observed a higher incidence of interictal EEG abnormalities when the recording was prolonged from 30 to 180 min [9]. Another study found a higher detection ratio with 6-hour than with 30-minute EEG monitoring in adults with a first unprovoked seizure [10]. Nevertheless, strong evidence on the optimal duration of EEG monitoring is still lacking [11]. Although the sensitivity of EEG for detecting epileptiform abnormalities may increase with recording time, longer recordings burden technicians, increase patient costs, and lengthen appointment waiting times.

Objectives

This raises the question of whether EEG duration can be reduced without compromising the detection of epileptiform abnormalities. Sleep has proven more effective for detecting epileptiform abnormalities than other activation procedures such as photic stimulation and hyperventilation [12]. Given the risk of seizures associated with sleep deprivation [13] and the comparable detection ratios of sleep EEG recordings obtained during spontaneous and sleep-deprived states [14], overnight EEG monitoring is attracting interest and warrants further study. In addition, previous studies have investigated factors that influence the detection ratio of epileptiform abnormalities, but few have used these factors to guide EEG duration. Our study aimed to determine an optimal, shorter EEG monitoring duration that effectively detects epileptiform abnormalities in different patient groups, defined by the factors that influence detection ratios.

Methods

Study design and setting

This retrospective study analyzed EEG recordings and clinical data from adolescent and adult patients who presented with a first seizure or were treated for epilepsy at the epilepsy center of the Department of Neurology, Xingtai People's Hospital, between June 2021 and July 2022. The study is reported in accordance with the STROBE guidelines.

Participants

Epileptic seizures were defined according to the ILAE 2005 conceptual definition [1], that is, a transient occurrence of signs and/or symptoms resulting from abnormal, excessive, or synchronous neuronal activity in the brain. Patients in the epilepsy group met the ILAE clinical definition of epilepsy: at least two unprovoked (or reflex) seizures occurring > 24 h apart, or a diagnosis of an epilepsy syndrome [15].

The exclusion criteria were as follows: (1) paroxysmal events due to convulsive syncope, parasomnias, movement disorders, transient ischemic attacks, or other non-epileptic causes, differentiated by clinical presentation and additional tests [16]; (2) suspected psychogenic non-epileptic seizures, identified through detailed medical histories, review of previous medical records, and psychometric assessment [17]; and (3) epilepsy syndromes sensitive to activation procedures, in which photic stimulation or hyperventilation during EEG can trigger epileptiform abnormalities.

Data sources

The International Federation of Clinical Neurophysiology and the ILAE recommend video-EEG as essential in all patients with suspected epilepsy or clinical events [11]. Video-EEG recordings (Nihon Kohden, Japan) in first seizure and epilepsy patients started at 4:00–5:00 p.m. on the first day and ended at 8:00–9:00 a.m. the following day, covering wakefulness and whole-night sleep. Silver chloride cup electrodes were placed according to the international 10–20 system. All EEG recordings were retrospectively reviewed by an experienced EEG technologist (JW) and board-certified attending clinical epileptologists (Y-x X and S-q L), who hold intermediate-level certification in EEG and epilepsy from the China Association Against Epilepsy. The digital EEGs were displayed in bipolar, referential, and average montages, typically filtered at 0.5–70 Hz, and reviewed at a sensitivity of 10 µV/mm and a display speed of 30 mm/s. Ictal epileptic EEG and IEDs were identified according to established clinical EEG practice and published consensus [18, 19]. Ictal epileptic EEG was identified by its evolution in morphology and distribution. IEDs, including spikes, polyspikes, and sharp waves with or without slow waves, were recognized by their morphology and amplitude, standing out from the normal EEG background. Ictal epileptic EEG and IEDs were together considered epileptiform abnormalities (EAs). For each patient, we recorded whether EAs were detected and the latency to the first EA.

Normal EEG variants, such as small sharp spikes, wicket spikes, and 14- and 6-Hz positive spikes, were not classified as EAs. In addition, physiological sleep transients resembling spike-wave discharges often occur in whole-night EEG recordings and can be mistaken for epileptiform discharges [20]; experienced reviewers therefore carefully identified these transients across different montages to avoid false positives.

Variables

The patients were first divided into two groups: the first seizure group and the epilepsy group. Clinical data, including gender, age, time of the last seizure, time of EEG recording, use of anti-seizure medication (ASM), and seizure frequency, were collected for both groups.

Patients experiencing their first seizure were classified into three categories: a single epileptic seizure, a cluster of seizures within 24 h, and a first episode of status epilepticus [21], with the latter two requiring ASM for management. Subsequently, these patients were subdivided into two subgroups based on the use of ASM. Previous research [22, 23] categorized the interval time between the last seizure and the EEG recording as either within 24 h or between 2 and 7 days. In our study, we refined this into three subgroups: ≤24 h (subgroup S1), between 24 h and 7 days (subgroup S2), and > 7 days (subgroup S3).
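The interval subgrouping above can be written as a small classification rule. The sketch below is a hypothetical Python helper, not the authors' procedure. It assumes that the interval is measured from the last seizure to the start of the EEG recording, and it assigns an interval of exactly 24 h to S1 and exactly 7 days to S2, because the text gives only the ranges ≤24 h, 24 h to 7 days, and > 7 days.

```python
from datetime import datetime, timedelta


def interval_subgroup(last_seizure: datetime, eeg_start: datetime) -> str:
    """Assign subgroup S1, S2, or S3 from the seizure-to-EEG interval.

    Hypothetical helper. Assumptions: the interval runs from the last
    seizure to the start of the EEG recording; exactly 24 h counts as S1
    and exactly 7 days counts as S2.
    """
    gap = eeg_start - last_seizure
    if gap < timedelta(0):
        raise ValueError("EEG start precedes the last seizure")
    if gap <= timedelta(hours=24):
        return "S1"  # interval <= 24 h
    if gap <= timedelta(days=7):
        return "S2"  # interval between 24 h and 7 days
    return "S3"  # interval > 7 days


# A seizure at 08:00 followed by an EEG starting at 16:30 the same day.
print(interval_subgroup(datetime(2022, 3, 1, 8, 0), datetime(2022, 3, 1, 16, 30)))  # S1
```

Using `datetime` arithmetic rather than hand-computed hour counts keeps the rule correct across midnight and month boundaries.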

In the epilepsy group, the interval time was grouped in the same way as in the first seizure group. Based on the ILAE definition of drug-resistant epilepsy [24], that is, failure of adequate trials of two tolerated, appropriately chosen and used ASM schedules to achieve sustained seizure freedom, ASM use was divided into three subgroups: no ASM (subgroup M0), a single ASM (subgroup M1), and two or more ASMs (subgroup M2). Following prior grouping methods for seizure frequency [25, 26], patients were categorized into four subgroups by the number of seizures per unit time: daily to weekly seizures (subgroup F1), one seizure per week to one per month (subgroup F2), one seizure per month to one per year (subgroup F3), and seizure-free for more than one year (subgroup F4).
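The ASM and seizure-frequency subgroups can be sketched the same way. In this hypothetical Python sketch, seizure frequency is expressed as the number of seizures in the 12 months before the EEG, and the numeric cutoffs (at least 52 per year for F1, 12 to 51 for F2, 1 to 11 for F3, none for F4) are our assumed translation of the verbal categories. Zero seizures in the preceding 12 months only approximates "seizure-free for more than one year", and the text does not say how boundary cases such as exactly one seizure per week were assigned.

```python
def asm_subgroup(n_asms: int) -> str:
    """Map the number of anti-seizure medications to M0, M1, or M2."""
    if n_asms < 0:
        raise ValueError("number of ASMs cannot be negative")
    if n_asms == 0:
        return "M0"  # without ASM
    if n_asms == 1:
        return "M1"  # single ASM
    return "M2"  # two or more ASMs


def frequency_subgroup(seizures_last_12_months: int) -> str:
    """Map seizures in the preceding 12 months to F1-F4.

    Hypothetical cutoffs (assumption, not from the study):
    >= 52 per year -> F1 (daily to weekly), 12-51 -> F2 (weekly to monthly),
    1-11 -> F3 (monthly to yearly), 0 -> F4 (seizure-free, approximated).
    """
    if seizures_last_12_months < 0:
        raise ValueError("seizure count cannot be negative")
    if seizures_last_12_months >= 52:
        return "F1"
    if seizures_last_12_months >= 12:
        return "F2"
    if seizures_last_12_months >= 1:
        return "F3"
    return "F4"


print(asm_subgroup(2), frequency_subgroup(24))  # M2 F2
```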

Study size

Based on prior research [23], EEG recordings obtained within 24 h and more than 2 or 7 days after a seizure showed epileptiform abnormality detection rates of 77% and 33%–41%, respectively, giving an estimated sample size of 28 cases per subgroup. For seizure frequencies above and below 12 per year, the yields of epileptiform abnormalities were 68% and 41%, respectively, giving an estimated sample size of approximately 53 cases per subgroup.
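The text does not state the formula, significance level, or power behind these sample sizes. As a hedged check, the sketch below applies the standard normal-approximation formula for comparing two independent proportions, assuming a two-sided α of 0.05 and 80% power. Under these assumptions it gives about 52.2 (rounded up to 53) per subgroup for 68% versus 41%, matching the stated 53, and about 28.1 for 77% versus 41%, close to the stated 28.

```python
from math import ceil, sqrt
from statistics import NormalDist


def n_per_group(p1: float, p2: float, alpha: float = 0.05, power: float = 0.80) -> float:
    """Per-group sample size for comparing two independent proportions.

    Normal-approximation formula with pooled variance under H0. The alpha
    and power defaults are assumptions, not values stated in the study.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return numerator / (p1 - p2) ** 2


print(ceil(n_per_group(0.68, 0.41)))  # 53
print(round(n_per_group(0.77, 0.41), 1))  # 28.1
```

Rounding up with `ceil` is the usual convention, since a fractional patient cannot be enrolled and rounding down would leave the study slightly underpowered.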

Statistical methods

The detection ratio (DR) of EAs and the latency to the first EA were calculated for all groups and subgroups. Statistical analysis was performed with SPSS 23.0. DRs were compared with the chi-square test, and multivariate logistic regression was used to identify factors influencing DR. Latency times were described with Kaplan-Meier analysis. Because a median latency can be estimated only when the cumulative DR reaches 50%, only the maximum latency was considered for groups with a DR below 50%; for groups with a DR above 50%, both median and maximum latencies were evaluated. The time point beyond which EA detection declined markedly (the decreased cutoff point) was also noted. The log-rank test was used to compare cumulative DR over time. The significance threshold was set at P < 0.05, with Bonferroni correction for multiple subgroup comparisons.
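To make the latency rules concrete, the sketch below computes a Kaplan-Meier cumulative detection curve (1 minus the Kaplan-Meier probability of no EA detected yet) and reads off the median and maximum latencies. It is an illustrative Python sketch, not the SPSS procedure used in the study. The patient records are hypothetical, and patients without EAs are treated as censored at the end of their recording. Exact fractions are used so that the 50% check is not affected by floating-point rounding.

```python
from fractions import Fraction
from typing import List, Optional, Tuple

# (minutes to first EA or to end of recording, EA detected?)
Record = Tuple[int, bool]


def cumulative_detection(records: List[Record]) -> List[Tuple[int, Fraction]]:
    """Kaplan-Meier cumulative detection ratio at each time a first EA occurred."""
    surv = Fraction(1)  # probability that no EA has been detected yet
    curve = []
    for t in sorted({time for time, detected in records if detected}):
        at_risk = sum(1 for time, _ in records if time >= t)
        events = sum(1 for time, detected in records if detected and time == t)
        surv *= 1 - Fraction(events, at_risk)
        curve.append((t, 1 - surv))
    return curve


def median_latency(curve: List[Tuple[int, Fraction]]) -> Optional[int]:
    """First time the cumulative DR reaches 50%; None if it never does."""
    for t, dr in curve:
        if dr >= Fraction(1, 2):
            return t
    return None


def max_latency(records: List[Record]) -> Optional[int]:
    """Longest latency to a first EA among patients in whom one was detected."""
    times = [time for time, detected in records if detected]
    return max(times) if times else None


# Hypothetical group of 10 patients: 6 with EAs, 4 censored at 720 min.
group = [(30, True), (30, True), (60, True), (120, True), (300, True),
         (600, True), (720, False), (720, False), (720, False), (720, False)]
curve = cumulative_detection(group)
print(median_latency(curve), max_latency(group), float(curve[-1][1]))  # 300 600 0.6
```

When censoring occurs only after the last detection, as in this hypothetical example, the final cumulative value equals the crude DR (6/10 here). A group whose DR stays below 50% makes `median_latency` return `None`, which is why only the maximum latency is informative for such groups.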

Results

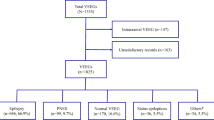

This study included 360 patients: 109 first seizure patients (73 males and 36 females; mean age 46.05 ± 21.07 years) and 251 epilepsy patients (152 males and 99 females; mean age 37.32 ± 18.71 years). On review of the EEG recordings, EAs were identified in 185 of the 360 patients (51.4%). Specifically, IEDs were detected in 171 patients, and ictal epileptic EEG was recorded in 14 patients.

Differences between the first seizure and epilepsy groups

In the first seizure group, EAs were detected in 41 patients (DR 37.6%), and the maximum latency to EA detection was 720 min. The 109 first seizure patients were followed up for 1 to 2 years; of the 68 patients without detected EAs, 21 had seizure recurrence. In the epilepsy group, EAs were detected in 144 patients (DR 57.4%), with median and maximum latencies of 300 and 720 min, respectively. The chi-square test showed a significantly lower DR in the first seizure group than in the epilepsy group (P = 0.001; Fig. 1a). The log-rank test also showed that the cumulative DR over time differed significantly between the two groups (P = 0.001). Although both groups had the same maximum latency of 720 min, the yield of EAs in the first seizure group dropped markedly beyond 300 min, whereas in the epilepsy group EAs continued to be detected up to 720 min (Fig. 1b).
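The group comparison can be reproduced from the counts reported here (41 of 109 versus 144 of 251). The Python sketch below computes the Pearson chi-square statistic for the 2 × 2 table, optionally with Yates' continuity correction, and derives the p-value from the chi-square distribution with one degree of freedom through the complementary error function. Whether the study applied the continuity correction is not stated; both versions give a p-value of roughly 0.0006 (uncorrected) or 0.0009 (corrected), consistent with the reported P = 0.001 at three decimal places.

```python
from math import erfc, sqrt


def chi_square_2x2(a: int, b: int, c: int, d: int, yates: bool = False):
    """Pearson chi-square test for the table [[a, b], [c, d]] (1 degree of freedom).

    Returns (statistic, p_value). With yates=True, Yates' continuity
    correction is applied to each cell.
    """
    n = a + b + c + d
    observed = ((a, b), (c, d))
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            diff = abs(observed[i][j] - expected)
            if yates:
                diff = max(diff - 0.5, 0.0)
            stat += diff ** 2 / expected
    # For 1 df, P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    return stat, erfc(sqrt(stat / 2))


# Rows: first seizure group, epilepsy group; columns: EA detected, not detected.
fs_detected, fs_total = 41, 109
ep_detected, ep_total = 144, 251
print(f"DR: {100 * fs_detected / fs_total:.1f}% vs {100 * ep_detected / ep_total:.1f}%")  # DR: 37.6% vs 57.4%
for yates in (False, True):
    stat, p = chi_square_2x2(fs_detected, fs_total - fs_detected,
                             ep_detected, ep_total - ep_detected, yates=yates)
    print(f"yates={yates}: chi2={stat:.2f}, p={p:.4f}")
```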

Fig. 1 (a) The detection ratio in the first seizure group is lower than that in the epilepsy group (*P = 0.001). (b) The increase in detection ratio over time differs significantly between the first seizure and epilepsy groups. In the first seizure group, the yield of epileptiform abnormalities decreases after 300 min, while in the epilepsy group, epileptiform abnormalities are detected up to 720 min.

Influencing factors and latency times in the first seizure group

Table 1 presents the DR in each subgroup. Logistic regression analysis was performed to examine factors influencing DR, including gender, age, the interval between the last seizure and EEG recording, and the use of ASM. The first three factors had no significant effect, whereas the use of ASM significantly influenced DR (P = 0.012). The DR in the subgroup without ASM was 23.5%, lower than that in the subgroup with ASM (44%), with an odds ratio (OR) of 0.33 and a 95% confidence interval (CI) of 0.12–0.93 (Fig. 2a). The increase in DR over time did not differ significantly between the subgroups without and with ASM (log-rank test). The maximum latency in the subgroup without ASM was 240 min, whereas in the subgroup with ASM the decreased cutoff point for detecting EAs was 300 min (Fig. 2b). Table 1 also shows a lower DR in subgroup S3 (EEG recorded more than 7 days after the seizure; 22.2%), but the differences among interval subgroups were not statistically significant (chi-square test).

Fig. 2 (a) Detection ratios in the subgroups without and with anti-seizure medication. (b) The increase in detection ratio over time did not differ significantly between the subgroups without and with anti-seizure medication. The maximum latency was 240 min in the subgroup without anti-seizure medication, and the decreased cutoff point for detecting epileptiform abnormalities in the subgroup with anti-seizure medication was 300 min.

Influencing factors and latency times in the epilepsy group

Logistic regression analysis showed that gender, age, interval time, and ASM had no significant effect on DR, whereas seizure frequency had a significant influence (P < 0.001; Table 2). DR varied with seizure frequency: 85.5% in subgroup F1 (daily to weekly seizures), 72.1% in subgroup F2 (one seizure a week to one a month), 46.7% in subgroup F3 (one seizure a month to one a year), and 31.5% in subgroup F4 (seizure-free > 1 year). The DR in subgroups F1 and F2 was significantly higher than in subgroup F4 (P < 0.001; F1 vs. F4: OR 10.54, 95% CI 3.91–28.42; F2 vs. F4: OR 5.91, 95% CI 2.37–14.72; Fig. 3a). The increase in DR over time differed significantly among seizure frequency subgroups by the log-rank test (Fig. 3b; P < 0.001 for F1 vs. F3 or F4 and for F2 vs. F4; P = 0.001 for F2 vs. F3), but not between subgroups F1 and F2 or between subgroups F3 and F4. The median latencies in subgroups F1 and F2 were 30 and 120 min, respectively, and the maximum latencies were 600 and 330 min. Subgroups F3 and F4 both had a maximum latency of 720 min. In subgroup F4, EAs were scarce after 240 min, whereas in the other subgroups EA detection increased gradually until the maximum latency was reached.

Fig. 3 (a) Comparison of detection ratios among seizure frequency subgroups. **P < 0.001 for subgroup F1 vs. subgroup F4 and subgroup F2 vs. subgroup F4. (b) The increase in detection ratio over time differs significantly between subgroups F1/F2 and F3/F4. In subgroup F4, epileptiform abnormalities seldom appeared after 240 min, whereas the other subgroups showed a gradual rise in detection until reaching their respective maximum latency times. Maximum latency times for subgroups F1 to F4 were 600, 330, 720, and 720 min, respectively. (c) Differences in detection ratio among intervals between the last seizure and electroencephalogram recording. #P = 0.039 subgroup S1 vs. subgroup S2; ##P < 0.001 subgroup S1 vs. subgroup S3. (d) The increase in detection ratio over time differs significantly between subgroups S1 and S3. Maximum latency times for subgroups S1 to S3 were 600, 660, and 720 min, respectively, with a decreased cutoff point for detecting epileptiform abnormalities at about 300 min.

Although the interval between the last seizure and EEG recording was not a significant factor in the logistic regression analysis, DR differed significantly among the three interval subgroups by the chi-square test (P = 0.001; Table 2). DR was 73.2% in subgroup S1 (interval ≤ 24 h), 54.1% in subgroup S2 (24 h to 7 days), and 46.3% in subgroup S3 (> 7 days). Subgroup S1 differed significantly from the other two subgroups (S1 vs. S2: P = 0.039; S1 vs. S3: P < 0.001; Fig. 3c). The maximum latencies in subgroups S1 to S3 were 600, 660, and 720 min, respectively, and the median latencies in subgroups S1 and S2 were 120 and 300 min. The increase in DR over time differed significantly between subgroups S1 and S3 (Fig. 3d; P < 0.001), mainly in the early stage of EEG monitoring. The decreased cutoff point for detecting EAs was about 300 min.
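The log-rank comparisons of cumulative DR (for example, S1 versus S3) can be sketched as follows. This is the textbook two-sample log-rank test written in plain Python, with the hypergeometric variance that handles tied detection times; it is not the SPSS implementation used in the study, and the records are hypothetical (time in minutes, `True` when an EA was detected, `False` when censored at the end of recording).

```python
from math import erfc, sqrt
from typing import List, Tuple

Record = Tuple[int, bool]  # (minutes, EA detected?)


def log_rank(group_a: List[Record], group_b: List[Record]) -> Tuple[float, float]:
    """Two-sample log-rank test; returns (chi-square statistic, p-value) with 1 df."""
    event_times = sorted({t for t, e in group_a + group_b if e})
    observed_minus_expected = 0.0
    variance = 0.0
    for t in event_times:
        n_a = sum(1 for u, _ in group_a if u >= t)  # at risk in group A
        n_b = sum(1 for u, _ in group_b if u >= t)  # at risk in group B
        d_a = sum(1 for u, e in group_a if e and u == t)  # detections at t in A
        d_b = sum(1 for u, e in group_b if e and u == t)  # detections at t in B
        n, d = n_a + n_b, d_a + d_b
        observed_minus_expected += d_a - d * n_a / n
        if n > 1:
            variance += d * (n_a / n) * (n_b / n) * (n - d) / (n - 1)
    if variance == 0:
        raise ValueError("log-rank variance is zero; groups cannot be compared")
    stat = observed_minus_expected ** 2 / variance
    return stat, erfc(sqrt(stat / 2))


# Hypothetical: early detections in group A, one late detection in group B.
early = [(t, True) for t in range(10, 90, 10)] + [(720, False)] * 2
late = [(600, True)] + [(720, False)] * 9
stat, p = log_rank(early, late)
print(f"chi2={stat:.2f}, p={p:.4f}")
```

The test weights every detection time equally, so it is most sensitive to differences that persist across the recording; a difference concentrated early in monitoring, as reported for S1 versus S3, is still detected when it is large.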

Discussion

In this study, we evaluated whole-night video-EEG recordings, which shortened daytime monitoring but covered the entire night. Because of data limitations, we could not compare them with 24-hour or longer EEG recordings. Previous studies have recommended EEG durations of at least 48 h for children and 72 h for adults and the elderly to capture typical clinical events comprehensively [27], and 24 h to detect interictal epileptiform abnormalities [28]. In our study, the detection ratios were 37.6% in the first seizure group and 57.4% in the epilepsy group. These results are comparable to those of a retrospective study that reported a cumulative yield of 39% among subjects with a single unprovoked seizure and 53% among subjects with epilepsy following the first EEG within 24 h [29]. Previous research has shown a higher incidence of epileptiform discharges during non-rapid eye movement (NREM) sleep [30], and subclinical seizures occur more frequently during sleep and at night than during wakefulness and daytime [31]. Elderly subjects (≥ 60 years) were also found to be more prone to interictal epileptiform abnormalities during stages 3–4 of NREM sleep [32]. Taken together, whole-night EEG recording, which moderately shortens daytime monitoring but provides continuous overnight observation, is an efficient approach that maintains a high detection ratio of epileptiform abnormalities.

We also examined the optimal duration for detecting epileptiform abnormalities in whole-night EEG recordings. The maximum latency of epileptiform abnormalities was 720 min (12 h) in both the first seizure and epilepsy groups. However, in the first seizure group, which had a low yield of epileptiform abnormalities, the yield dropped markedly beyond 300 min, whereas in the epilepsy group epileptiform abnormalities continued to be detected slowly up to 720 min. This pattern is consistent with prior research. In long-term video-EEG recordings spanning an average of 4 days, IEDs were identified in 134 (53%) of 255 patients, and in 125 (49%) they appeared during the first hour of sleep [33]. Another study of 24-hour ambulatory EEG, started at 12:00–1:00 p.m., found no additional yield of epileptiform discharges beyond 13 h in the epilepsy group [34].

Does the effective EEG duration differ among patients when the factors affecting the detection ratio are considered? In this study, age and gender did not significantly affect the sensitivity of EEG for detecting epileptiform abnormalities. ASM use was the only factor significantly affecting the detection ratio in the first seizure group, whereas it had no significant effect in the epilepsy group. Some investigators have suggested that patients with epilepsy or seizures who are not receiving anti-seizure drug therapy are more likely to show epileptiform abnormalities on EEG [35], whereas others have disputed the effect of ASM on epileptiform discharges in patients with focal epilepsy [36]. In our first seizure group, the detection ratio in the subgroup without ASM was below 25%, significantly lower than that in the subgroup with ASM. This difference may reflect the underlying severity of the disease rather than an effect of ASM itself on the detection ratio. The increase in detection ratio over time was similar in the two subgroups. In the subgroup without ASM, the maximum latency was 240 min, likely because of its low detection ratio. In the subgroup with ASM, although the maximum latency reached 720 min, few epileptiform abnormalities were detected beyond 300 min.

A study of a first seizure cohort reported a higher detection ratio of epileptiform discharges in EEGs recorded within 24 h after a seizure than in those recorded later (> 24 h) [22]. Another study of patients with a history of seizures found epileptiform discharges on 77% of EEGs recorded within 2 days after the last seizure, but on only 41% of EEGs recorded more than 7 days after the seizure [23]. In this study, logistic regression did not identify the interval between the last seizure and EEG recording as a significant factor affecting the detection ratio. However, in the epilepsy group, the chi-square test showed significant differences in detection ratio among interval subgroups, with the detection ratio declining gradually as the interval lengthened. In epilepsy patients, the detection ratio was significantly higher when EEG was recorded within 24 h after the seizure than when it was recorded 24 h to 7 days or more than 7 days later. For subgroups S1 to S3, the maximum latencies for detecting epileptiform abnormalities were 600, 660, and 720 min, respectively. The decreased cutoff point of the detection ratio was approximately 300 min, and the largest differences between subgroups S1 and S3 occurred early in EEG monitoring. The median latencies were 120 min for S1 and 300 min for S2. Timely EEG within 24 h after a seizure therefore improves detection efficiency in epilepsy patients, yielding a higher detection ratio in less time. For these patients, the maximum duration of overnight EEG could be reduced to 10 h, with a median latency of about 2 h.

A previous study showed that epilepsy patients with frequent seizures (> 1 per month) were more likely to exhibit epileptiform discharges on a 20–40 min EEG recording [23]. With much longer recordings, our study likewise shows the influence of seizure frequency on the incidence of epileptiform abnormalities in epilepsy patients. Compared with subgroup F4 (seizure-free for over a year), subgroups F1 (daily to weekly seizures) and F2 (weekly to monthly seizures) showed a significantly higher detection ratio of epileptiform abnormalities, whereas subgroup F3 (monthly to yearly seizures) did not. In subgroups F1 and F2, the median latencies to the first epileptiform abnormality were 30 and 120 min, respectively. To capture all detected epileptiform abnormalities, EEG durations of 600 and 330 min were required in subgroups F1 and F2, respectively, whereas the maximum latency in both subgroups F3 and F4 was 720 min. The increase in detection ratio over time did not differ between subgroups F1 and F2 or between subgroups F3 and F4. Thus, in epilepsy patients with frequent seizures (at least one per month), epileptiform abnormalities tend to be detected sooner in whole-night EEG recording, with a maximum latency of no more than 10 h and a median latency of no more than 2 h. In addition, in subgroup F4 (seizure-free for over a year), few epileptiform abnormalities were detected after 240 min, whereas in the other subgroups the detection ratio continued to rise slowly until the maximum latency.

The sample size and follow-up period of our study were limited; the advantages of whole-night EEG recording and of customized EEG durations for different patients need to be verified in large, multicenter comparative studies.

Conclusions

Whole-night EEG recordings, which include overnight sleep monitoring while shortening daytime awake recording, are highly effective for detecting epileptiform abnormalities. In both the first seizure and epilepsy groups, the maximum latency reached 720 min (12 h); however, the yield in the first seizure group dropped markedly after 300 min. In individuals with epilepsy who have frequent seizures (at least once per month) or who undergo EEG within 24 h of a seizure, the detection ratio of epileptiform abnormalities is significantly higher, the maximum latency is reduced to 10 h, and the median latency is typically 2 h or less. In patients who had been seizure-free for over a year, few epileptiform abnormalities were detected after 240 min. This study underscores the importance of tailoring EEG duration to different patient groups, an approach that is new and still exploratory.

Data availability

All data generated or analyzed during this study are included in this published article.

References

Fisher RS, van Emde Boas W, Blume W, Elger C, Genton P, Lee P, Engel J. Epileptic seizures and epilepsy: definitions proposed by the International League Against Epilepsy (ILAE) and the International Bureau for Epilepsy (IBE). Epilepsia. 2005;46(4):470–2.

Krumholz A, Wiebe S, Gronseth GS, Gloss DS, Sanchez AM, Kabir AA, Liferidge AT, Martello JP, Kanner AM, Shinnar S, et al. Evidence-based guideline: management of an unprovoked first seizure in adults. Neurology. 2015;84(16):1705–13.

Chen H, Koubeissi MZ. Electroencephalography in epilepsy evaluation. Continuum (Minneap Minn). 2019;25(2):431–53.

Chung YG, Lee W, Na SM, Kim H, Hwang H, Yun C, Kim KJ. Deep learning-based automated detection and multiclass classification of focal interictal epileptiform discharges in scalp electroencephalograms. Sci Rep. 2023;13(1):6755.

Flink R, Pedersen B, Guekht AB, Malmgren K, Michelucci R, Neville B, Pinto F, Stephani U, Özkara C. Guidelines for the use of EEG methodology in the diagnosis of epilepsy. Acta Neurol Scand. 2002;106(1):1–7.

Dash D, Dash C, Primrose S, Hernandez-Ronquillo L, Moien-Afshari F, Ladino LD, Appendino JP, Mazepa L, Elliott C, Mirsattari SM, et al. Update on minimal standards for electroencephalography in Canada: a review by the Canadian Society of Clinical Neurophysiologists. Can J Neurol Sci. 2017;44(6):631–42.

Sinha SR, Sullivan L, Sabau D, San-Juan D, Dombrowski KE, Halford JJ, Hani AJ, Drislane FW, Stecker MM. American Clinical Neurophysiology Society Guideline 1: minimum technical requirements for performing clinical electroencephalography. J Clin Neurophysiol. 2016;33(4):303–7.

Tutkavul K, Çetinkaya Y. Optimum recording time of routine electroencephalogram for adults with epilepsy. Turk J Med Sci. 2019;49(2):635–8.

Craciun L, Gardella E, Alving J, Terney D, Mindruta I, Zarubova J, Beniczky S. How long shall we record electroencephalography? Acta Neurol Scand. 2014;129(2):e9–11.

Shapiro M, Foster G. Prolonged EEGs in adult patients with a first unprovoked seizure: a prospective pilot study. Epileptic Disord. 2019;21(6):561–6.

Peltola ME, Leitinger M, Halford JJ, Vinayan KP, Kobayashi K, Pressler RM, Mindruta I, Mayor LC, Lauronen L, Beniczky S. Routine and sleep EEG: minimum recording standards of the International Federation of Clinical Neurophysiology and the International League Against Epilepsy. Epilepsia. 2023;64(3):602–18.

Baldin E, Hauser WA, Buchhalter JR, Hesdorffer DC, Ottman R. Utility of EEG activation procedures in epilepsy: a population-based study. J Clin Neurophysiol. 2017;34(6):512–9.

Haut SR, Hall CB, Masur J, Lipton RB. Seizure occurrence. Neurology. 2007;69(20):1905–10.

Foldvary-Schaefer N, Grigg-Damberger M. Sleep and epilepsy. Semin Neurol. 2009;29(4):419–28.

Fisher RS, Acevedo C, Arzimanoglou A, Bogacz A, Cross JH, Elger CE, Engel J, Forsgren L, French JA, Glynn M, et al. ILAE official report: a practical clinical definition of epilepsy. Epilepsia. 2014;55(4):475–82.

Scheffer IE, Berkovic S, Capovilla G, Connolly MB, French J, Guilhoto L, Hirsch E, Jain S, Mathern GW, Moshé SL, et al. ILAE classification of the epilepsies: position paper of the ILAE Commission for Classification and Terminology. Epilepsia. 2017;58(4):512–21.

Wiseman H, Reuber M. New insights into psychogenic nonepileptic seizures 2011–2014. Seizure. 2015;29:69–80.

Liu X. Clinical electroencephalography. People’s Medical Publishing House; 2017.

Kane N, Acharya J, Beniczky S, Caboclo L, Finnigan S, Kaplan PW, Shibasaki H, Pressler R, van Putten MJAM. Corrigendum to A revised glossary of terms most commonly used by clinical electroencephalographers and updated proposal for the report format of the EEG findings. Revision 2017 [Clin. Neurophysiol. Practice 2 (2017) 170–185]. Clin Neurophys Pract. 2019;4:133.

Beun AM, van Emde Boas W, Dekker E. Sharp transients in the sleep EEG of healthy adults: a possible pitfall in the diagnostic assessment of seizure disorders. Electroencephalogr Clin Neurophysiol Ed. 1998;106(1):44–51.

Neligan A, Adan G, Nevitt SJ, Pullen A, Sander JW, Bonnett L, Marson AG. Prognosis of adults and children following a first unprovoked seizure. COCHRANE DB SYST REV. 2023;1(1):CD013847.

King MA, Newton MR, Jackson GD, Fitt GJ, Mitchell LA, Silvapulle MJ, Berkovic SF. Epileptology of the first-seizure presentation: a clinical, electroencephalographic, and magnetic resonance imaging study of 300 consecutive patients. Lancet. 1998;352(9133):1007–11.

Sundaram M, Hogan T, Hiscock M, Pillay N. Factors affecting interictal spike discharges in adults with epilepsy. Electroencephalogr Clin Neurophysiol. 1990;75(4):358–60.

Kwan P, Arzimanoglou A, Berg AT, Brodie MJ, Allen Hauser W, Mathern G, Moshé SL, Perucca E, Wiebe S, French J. Definition of drug resistant epilepsy: Consensus proposal by the ad hoc Task Force of the ILAE Commission on Therapeutic Strategies. EPILEPSIA. 2010;51(6):1069–1077.

Al Kasab S, Dawson RA, Jaramillo JL, Halford JJ. Correlation of seizure frequency and medication down-titration rate during video-EEG monitoring. Epilepsy Behav. 2016;64:51–6.

Choi H, Hamberger MJ, Munger Clary H, Loeb R, Onchiri FM, Baker G, Hauser WA, Wong JB. Seizure frequency and patient-centered outcome assessment in epilepsy. Epilepsia. 2014;55(8):1205–12.

Klein H, Pang T, Slater J, Ramsay RE. How much time is enough? Establishing an optimal duration of recording for ambulatory video EEG. Epilepsia Open. 2021;6(3):569–78.

Kuo J, Lee-Messer C, Le S. Optimal recording duration of ambulatory EEG (aEEG). Epilepsy Res. 2019;149:9–12.

Baldin E, Hauser WA, Buchhalter JR, Hesdorffer DC, Ottman R. Yield of epileptiform electroencephalogram abnormalities in incident unprovoked seizures: a population-based study. Epilepsia. 2014;55(9):1389–98.

Seneviratne U, Boston RC, Cook M, D’Souza W. Temporal patterns of epileptiform discharges in genetic generalized epilepsies. Epilepsy Behav. 2016;64:18–25.

Jin B, Wang S, Yang L, Shen C, Ding Y, Guo Y, Wang Z, Zhu J, Wang S, Ding M. Prevalence and predictors of subclinical seizures during scalp video-EEG monitoring in patients with epilepsy. Int J Neurosci. 2016;127(8):651–8.

Arbasino C, Calorio F, Orsi D, Marchioni G, Tartara E, Marchese E, Rustioni D, Moglia V, Galimberti A. Interictal spiking in adult newly-diagnosed focal epilepsy of unknown cause: the effect of age. Clin Neurophysiol. 2015;126(8):1498–504.

Liu X, Issa NP, Rose S, Wu S, Sun T, Towle LV, Warnke PC, Tao JX. The first-hour-of-the-day sleep EEG reliably identifies interictal epileptiform discharges during long-term video-EEG monitoring. Seizure. 2018;63:48–51.

Siddiqi M, Ahmed SN. No further yield of ambulatory EEG for epileptiform discharges beyond 13 hours. Neurodiagnostic J. 2017;57(3):211–23.

Monif M, Seneviratne U. Clinical factors associated with the yield of routine outpatient scalp electroencephalograms: a retrospective analysis from a tertiary hospital. J Clin Neurosci. 2017;45:110–4.

Guida M, Iudice A, Bonanni E, Giorgi FS. Effects of antiepileptic drugs on interictal epileptiform discharges in focal epilepsies: an update on current evidence. Expert Rev Neurother. 2015;15(8):947–59.

Funding

Not applicable.

Author information

Contributions

Y-x X designed the research, analyzed the data, and wrote the manuscript; Y-x X, JW, and S-q L read the digital EEG recordings and collected data; X-q Y and Z-l F reviewed medical records and collected clinical data; FS provided advice on writing the manuscript.

Ethics declarations

Ethics approval and consent to participate

This study was approved by the ethics committee of Xing Tai People Hospital. The authors confirm that the study complies with the Declaration of Helsinki.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Xing, Y., Wang, J., Yin, X. et al. Optimizing electroencephalogram duration for efficient detection of epileptiform abnormalities in diverse patient groups: a retrospective study. BMC Neurol 24, 285 (2024). https://doi.org/10.1186/s12883-024-03796-9

DOI: https://doi.org/10.1186/s12883-024-03796-9