Abstract

In pest control, the lag of parasitic eggs, the lag effect of pesticide poisoning, and the age of releasing natural enemies can be taken as control variables. Combined with the crop fertility cycle, this leads to optimization problems for pest control models at the seedling, bud, and filling stages of crops, and research on these problems fills a gap. To this end, a generalized hybrid optimization problem involving state delays with characteristic times and parameter control is presented. An algorithm based on gradient computation is then given. Finally, two examples from an agroecological system illustrate the effectiveness of the proposed optimization algorithm.

1 Introduction

Mathematical models for the continuous control of insect pests mostly use differential equations to describe the relationship between prey and predators. Apreutesei et al. [1] and Srinivasu et al. [2] investigated the optimal parameter selection problem for pest control models. Apreutesei [3], Kar [4], Bhattacharyya [5, 6], and Molnar [7] took the external interference intensities of the systems, such as the rate of pesticide poisoning and the release rates of parasitic eggs, infertile pests, and pathogens, as control variables to study optimal pest management strategies. In these works, the authors took differential equations as state equations and a Bolza-type performance index as the objective function, and sought the optimal control by means of Pontryagin's minimum principle. These are standard optimal control problems [8].

In the last decade, research on mathematical models of chemical control, biological control, and comprehensive control has made great progress. He et al. [9], Luo et al. [10], and Feng et al. [11] investigated optimization problems for microorganism and population dynamic systems. The National Natural Science Foundation of China has funded research projects related to mathematical models for pest control. Nevertheless, some challenging problems remain worth exploring, primarily: (1) lag, hibernation, and delay phenomena, such as the development from larva to adult, are widespread in the population life cycle, so the optimal control of ecological systems with time delay is a meaningful problem; (2) taking the lag of parasitic eggs, the lag effect of pesticide poisoning, and the age of releasing natural enemies as control variables (state delays), combined with the crop fertility cycle, research on the optimization of pest control models at the seedling, bud, and filling stages of crops, which is an optimization problem with multiple characteristic times, fills a gap.

The generalized model of these problems is a hybrid optimization problem involving state delays with characteristic times and parameter control. In this paper, we first formulate a general problem with state delays and then design an optimization algorithm. Finally, two examples in the field of pest control are given to illustrate the effectiveness of the proposed optimization algorithm.

2 Problem formulation

Consider the following nonlinear time delay system:

Here \(t_{f} > 0\) is a given terminal time, \(\mathbf{y}(t) = (y_{1}(t), \ldots , y_{n}(t))^{T} \in R^{n}\) is the state vector, \(\omega_{i}\), \(i = 1,\ldots ,m\), are state delays, and \(\eta_{j}\), \(j=1,\ldots,r\), are system parameters. \(\mathbf{g}:R^{(m+1)n} \times R^{m} \times R^{r}\rightarrow R^{n}\) and \(\boldsymbol{\psi} : R \times R^{r} \rightarrow R^{n}\) are given functions. Let \(\boldsymbol{\omega} = (\omega_{1}, \ldots, \omega_{m})^{T}\) and \(\boldsymbol{\eta} = (\eta_{1}, \ldots, \eta_{r})^{T}\).

Let \(\mathcal{M}\) denote the set of all ω which are admissible state delay vectors. Let \(\mathcal{F}\) denote the set of all η which are admissible parameter vectors.
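The form of system (1)-(2) can be inferred from the signatures of \(\mathbf{g}\) and \(\boldsymbol{\psi}\) given above and from the relation \(\mathbf{y}(t) = \boldsymbol{\psi}(t,\boldsymbol{\eta})\) for \(t \leq 0\) used in the proof of Theorem 2. A form consistent with these, in which the argument order of \(\mathbf{g}\) and the equation numbering are assumptions, is:

```latex
\begin{align}
\dot{\mathbf{y}}(t) &= \mathbf{g}\bigl(\mathbf{y}(t), \mathbf{y}(t-\omega_{1}), \ldots, \mathbf{y}(t-\omega_{m}), \boldsymbol{\omega}, \boldsymbol{\eta}\bigr), \quad t \in [0, t_{f}], \tag{1}\\
\mathbf{y}(t) &= \boldsymbol{\psi}(t, \boldsymbol{\eta}), \quad t \leq 0. \tag{2}
\end{align}
```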

The following bound constraints on the state delays and system parameters are imposed:

and

where \(A_{i}\), \(B_{i}\), \(C_{j}\), and \(D_{j}\) are given constants such that \(0 \leq A_{i} \leq B_{i}\) and \(0 \leq C_{j} \leq D_{j}\).
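With the constants just introduced, the bound constraints presumably take the usual box form. The following is a sketch under that assumption; the numbering (3)-(4) is inferred from the cost function being labeled (5):

```latex
\begin{align}
A_{i} \leq \omega_{i} \leq B_{i}, \quad i = 1, \ldots, m, \tag{3}\\
C_{j} \leq \eta_{j} \leq D_{j}, \quad j = 1, \ldots, r. \tag{4}
\end{align}
```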

Our aim is to seek admissible controls ω and η that minimize the following cost function:

where \(\Theta: R^{pn} \times R^{m}\times R^{r} \rightarrow R\) is a given function and \(t_{k}\), \(k = 1, \ldots, p\) are given time points satisfying \(0 < t_{1} < \cdots< t_{p} \leq t_{f}\). Time points \(t_{k}\), \(k = 1, \ldots, p\) are called characteristic times. We define the hybrid optimization problem with state delay as follows.

Problem (P)

Choose \((\boldsymbol{\omega}, \boldsymbol{\eta}) \in\mathcal {M} \times\mathcal{F}\) to minimize the cost function (5).
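Evaluating the cost for a candidate pair \((\boldsymbol{\omega}, \boldsymbol{\eta})\) requires solving the delay system numerically. The following is a minimal sketch of one way to do this, using forward Euler with linear interpolation of the delayed states. The function name `simulate_dde`, the calling convention of `g` and `psi`, and the step size are illustrative assumptions; this is not the authors' implementation.

```python
import numpy as np

def simulate_dde(g, psi, omega, eta, t_f, h=1e-3):
    """Forward-Euler solution of y'(t) = g(y(t), [y(t - omega_i)], omega, eta)
    on [0, t_f], with history y(t) = psi(t, eta) for t <= 0."""
    omega = np.atleast_1d(np.asarray(omega, dtype=float))
    n_steps = int(round(t_f / h))
    t = h * np.arange(n_steps + 1)
    y0 = np.atleast_1d(np.asarray(psi(0.0, eta), dtype=float))
    y = np.empty((n_steps + 1, y0.size))
    y[0] = y0

    def lagged_state(k, w):
        s = t[k] - w                      # delayed time t_k - omega_i
        if s <= 0.0:                      # still inside the history segment
            return np.atleast_1d(np.asarray(psi(s, eta), dtype=float))
        j = min(int(s / h), k - 1)        # grid cell containing s
        frac = s / h - j
        return (1.0 - frac) * y[j] + frac * y[j + 1]   # linear interpolation

    for k in range(n_steps):
        lagged = [lagged_state(k, w) for w in omega]
        y[k + 1] = y[k] + h * np.asarray(g(y[k], lagged, omega, eta), dtype=float)
    return t, y

# Usage: y'(t) = -eta * y(t - omega) with history y = 1,
# for which y(t) = 1 - eta * t on [0, omega].
t, y = simulate_dde(lambda y, lag, om, eta: -eta * lag[0],
                    lambda s, eta: 1.0, omega=[1.0], eta=1.0, t_f=1.0)
```

A fixed-step Euler scheme is chosen here only for brevity; a higher-order method with the same history lookup would follow the same pattern.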

Remark

The state equation (1) and the cost function (5) depend on the decision variables both explicitly and implicitly. In addition, the cost function (5) contains an integral term, as in the problem of Lagrange. The hybrid nature of the optimization problem increases the computational complexity.
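As an illustration of a cost of this type, the sketch below evaluates a function of the states at the characteristic times plus an integral term on a precomputed trajectory. The name `hybrid_cost`, the callables `theta` and `running`, and the use of the nearest grid point and the trapezoidal rule are all assumptions made for the example; the integrand of the actual cost function (5) is not reproduced here.

```python
import numpy as np

def hybrid_cost(t, y, t_char, theta, omega, eta, running=None):
    """Cost = theta(states at characteristic times, omega, eta)
    plus the integral of a running cost along the trajectory."""
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    # State at each characteristic time, taken at the nearest grid point.
    states = [y[int(np.argmin(np.abs(t - tk)))] for tk in t_char]
    J = float(theta(states, omega, eta))
    if running is not None:
        # Trapezoidal rule for the integral (Lagrange) term over [t[0], t[-1]].
        vals = np.array([running(yk, omega, eta) for yk in y], dtype=float)
        J += float(np.sum(0.5 * (vals[1:] + vals[:-1]) * np.diff(t)))
    return J

# Usage on a synthetic trajectory y(t) = t with characteristic times 1 and 2.
t = np.linspace(0.0, 2.0, 201)
y = t[:, None]
J = hybrid_cost(t, y, t_char=[1.0, 2.0],
                theta=lambda states, om, eta: sum(float(s @ s) for s in states),
                omega=[0.5], eta=[1.0],
                running=lambda yk, om, eta: yk[0])
```

In this synthetic case the characteristic-time part contributes \(1^{2} + 2^{2}\) and the integral of \(t\) over \([0, 2]\) contributes 2.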

3 Algorithm design based on gradient computation

Define

where \(\frac{\partial}{\partial\tilde{\mathbf{y}}^{i}}\) is differentiation with respect to the ith delayed state vector.

Consider the following auxiliary impulsive system:

Let \(\boldsymbol{\lambda}(\cdot\mid\boldsymbol{\omega}, \boldsymbol{\eta})\) denote the solution of system (6)-(8) corresponding to the admissible control pair \((\boldsymbol{\omega}, \boldsymbol{\eta}) \in\mathcal{M}\times\mathcal{F}\).

The following result gives formulas for the partial derivatives of J with respect to the state delays.

Theorem 1

For each \((\boldsymbol{\omega}, \boldsymbol{\eta}) \in \mathcal{M }\times\mathcal{F}\), we obtain the following gradients with respect to time delays \(\omega_{i}\):

where \(i=1,\ldots,m\).

Proof

Let \(\boldsymbol{\varrho} : [0,\infty) \rightarrow R^{n}\) be a function that is continuous and differentiable on the intervals \((t_{k-1}, t_{k})\), \(k = 1, \ldots, p + 1\), where \(t_{0} = 0\) and \(t_{p+1} = \infty\). Assume furthermore that \(\lim_{t\rightarrow t^{+}_{0}}\boldsymbol{\varrho}(t)\) exists and that \(\lim_{t\rightarrow t^{\pm}_{k}}\boldsymbol{\varrho}(t)\) exists for each \(k = 1, \ldots, p\). We may express the cost function J as follows:

Applying integration by parts to the last integral gives

Evaluating the third term above gives

Substituting (11) into (10) yields

Let \(\delta_{li}\) be the Kronecker delta function and define

Then

Define the indicator function:

Equation (13) can then be rewritten as

Next, using (14) and differentiating (12) with respect to \(\omega_{i}\), one obtains

Therefore

The second-to-last integral term in (15) can be transformed as

Since ϱ is arbitrary and the function \(\boldsymbol{\lambda}(\cdot\mid\boldsymbol{\omega},\boldsymbol{\eta})\) satisfies all the properties required of ϱ, one may choose \(\boldsymbol{\varrho}= \boldsymbol{\lambda}(\cdot\mid\boldsymbol{\omega},\boldsymbol{\eta})\) in (17). Then, together with (7) and (8), we get

So, combined with (6), one obtains

The proof is completed. □

Next we calculate the gradients of J with respect to the system parameters.

Theorem 2

For each \((\boldsymbol{\omega}, \boldsymbol{\eta}) \in \mathcal{M }\times\mathcal{F}\), we obtain the following gradients with respect to the system parameters \(\eta_{j}\):

where \(j = 1,\ldots, r\).

Proof

Recall \(\boldsymbol{\varrho}(\cdot)\) and equation (12) in the proof of Theorem 1. Differentiating (12) with respect to \(\eta_{j}\) gives

Hence

Since \(\mathbf{y}(t) = \boldsymbol{\psi}(t,\boldsymbol{\eta})\) for \(t \leq 0\), we have

Combining (20) with (19) yields

Let \(\boldsymbol{\varrho}= \boldsymbol{\lambda}(\cdot\mid\boldsymbol{\omega},\boldsymbol{\eta})\). Together with (6)-(8), the above formula reduces to (18). This completes the proof. □
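Gradient formulas such as those in Theorems 1 and 2 can be sanity-checked numerically by comparing them with finite differences of the cost. The sketch below is a generic central-difference approximation, not part of the authors' algorithm; the name `fd_gradient` and the step rule are assumptions, and the quadratic test function stands in for a real cost \(J(\boldsymbol{\omega}, \boldsymbol{\eta})\).

```python
import numpy as np

def fd_gradient(J, z, rel_step=1e-6):
    """Central-difference approximation of the gradient of a scalar function J at z.
    z stacks the decision variables, e.g. (omega_1, ..., omega_m, eta_1, ..., eta_r)."""
    z = np.asarray(z, dtype=float)
    grad = np.zeros_like(z)
    for i in range(z.size):
        h = rel_step * max(1.0, abs(z[i]))   # step scaled to the variable's size
        e = np.zeros_like(z)
        e[i] = h
        grad[i] = (J(z + e) - J(z - e)) / (2.0 * h)
    return grad

# Usage with a stand-in cost whose gradient is known: (2*z0, 3).
approx = fd_gradient(lambda z: z[0] ** 2 + 3.0 * z[1], [1.0, 2.0])
```

For a delay system, each evaluation of `J` involves one full simulation, so this check costs two simulations per decision variable, whereas the costate-based formulas need only one forward and one backward solve.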

4 Application

Example 1

Consider a delayed epidemic model with the stage structure [12]

and

The pest population is divided into egg, susceptible, and infective classes, with sizes \(x_{1}(t)\), \(x_{2}(t)\), and \(y(t)\), respectively. The parameter τ is the constant time to hatch, which is the delay in the model. The death rate of the pest egg population is proportional to the existing egg population, with proportionality constant γ. The parameters η and ω are the death rates of the susceptible and infective pest populations, respectively. The expression \(px_{2}(t)/(q+x_{2}^{n}(t))\) is the birth rate function of the susceptible pest population. The incidence rate is given by \(\beta x_{2}(t)y(t)/(A+y(t))\), and \(u>0\) is the amount of laboratory-bred infected pests released each time in order to spread an epidemic among the target (susceptible) pests or to establish an endemic. Our aim is to find an admissible control pair \({(\tau, u )}\) that minimizes the following cost function:

In (23), the terminal time is taken to be \(T=20\), and the observation times for the susceptible pests are scheduled at \(t_{k}=5, 10, 15, 20\), \(k=1,2,3,4\). The state delay τ and the release amount u of infected pests are selected optimally so as to reach the minimum pest level J at minimal cost in problem (23).

The auxiliary impulsive system for this problem is

with jump conditions

and boundary conditions

By Theorems 1 and 2, the gradient formulas for this problem are

To minimize the cost, we seek the optimal hatching time τ of the pest egg population and the optimal release amount u of infected pests. For this purpose, we conduct the following numerical simulation.

For problem (23), take

We solved the optimization problem with a Matlab program that integrates the SQP optimization method with equations (21)-(27). Starting from the initial guesses \(\tau=2\) and \(u=3\), we obtained the optimal hatching time of the pest egg population \(\tau^{*}= 4.999\) and the optimal release amount of infected pests \(u^{*}= 0.196\), with the corresponding optimal cost value \(J^{*}= 39.597\). This is lower than the cost \(J=101.479\) obtained without the optimal control strategy. Here the initial values of the populations are taken to be \(\varphi_{1}(t)=3\), \(\varphi_{2}(t)=4\), \(\varphi_{3}(t)=1\). The bound constraints on the state delay τ and the release amount u of infected pests are \(0<\tau\leq5\) and \(0<u\leq5\).
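The workflow described above (simulate, evaluate the cost at the observation times, and pass it to an SQP-type solver with bounds) can be sketched in Python. Everything model-specific here is a stand-in: the scalar toy model, its constants `R`, `X0`, and `C_U`, and the cost are hypothetical and are not system (21)-(22) or cost (23). SciPy's SLSQP replaces the Matlab SQP routine, and gradients come from SciPy's internal finite differences rather than from the costate formulas.

```python
import numpy as np
from scipy.optimize import minimize

T, H = 20.0, 0.01                  # horizon as in the text; Euler step is assumed
T_OBS = (5.0, 10.0, 15.0, 20.0)    # observation times, as in the text
R, X0, C_U = 0.8, 4.0, 0.5         # hypothetical growth rate, history, release cost

def susceptible_path(tau, u):
    """Euler solution of the toy model x'(t) = R*x(t-tau)*(1 - x(t)/10) - u*x(t)."""
    n = int(round(T / H))
    x = np.empty(n + 1)
    x[0] = X0
    for k in range(n):
        s = k * H - tau
        if s <= 0.0:
            lag = X0                       # constant history for t <= 0
        else:
            j = min(int(s / H), k - 1)
            frac = s / H - j
            lag = (1.0 - frac) * x[j] + frac * x[j + 1]
        x[k + 1] = x[k] + H * (R * lag * (1.0 - x[k] / 10.0) - u * x[k])
    return x

def cost(z):
    """Pest level at the observation times plus a quadratic release cost."""
    tau, u = z
    x = susceptible_path(tau, u)
    idx = [int(round(t / H)) for t in T_OBS]
    return float(np.sum(x[idx]) + C_U * u ** 2)

bounds = [(0.1, 5.0), (0.0, 5.0)]   # box constraints on (tau, u); lower bounds assumed
z0 = np.array([2.0, 3.0])           # initial guesses tau = 2, u = 3, as in the text
res = minimize(cost, z0, method="SLSQP", bounds=bounds)
z_opt = np.clip(res.x, [b[0] for b in bounds], [b[1] for b in bounds])
if cost(z_opt) > cost(z0):          # safeguard: never report a point worse than the start
    z_opt = z0
print("tau*, u* =", z_opt, " J* =", cost(z_opt))
```

Supplying the analytic gradients through the `jac` argument of `minimize` would be the closer analogue of the procedure in the paper; the finite-difference default is used here only to keep the sketch short.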

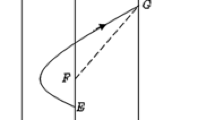

We compare the dynamic behavior under the optimal control \(\tau^{*}= 4.999\), \(u^{*}= 0.196\) with that under no optimal control, \(\tau=2\), \(u=3\) (see Figures 1(a)-(d)). Specifically, panels (a), (b), and (c) show the time series of the egg, susceptible, and infective pest populations, respectively, from the first day to the 20th day. The susceptible pest population decreases under the optimal control strategy. The infective pest population also declines, as a result of the smaller optimal release amount \(u^{*}=0.196< u=3\). The curves of the pest populations (egg, susceptible, and infective classes) are drawn together in Figure 1(d).

Example 2

Consider a competitive-predator model with stage structure and reserved area [13]:

and

where \(u_{1}(t)\) and \(u_{2}(t)\) are the densities of the prey species at time t in the unreserved area and the reserved area, respectively. The delays \(\tau_{1}\) and \(\tau_{2}\) are the times from birth to maturity of \(u_{1}(t)\) and \(u_{2}(t)\). The parameters \(\alpha_{i}\), \(\beta_{i}\) (\(i=1,2\)) have the same meanings as in [13], while \(\theta_{1}\) and \(\theta_{2}\) are the predation rates of the predator on the two prey populations. The parameters \(c_{1}\) (>0) and \(c_{2}\) (>0) are the migration rates from the unreserved area to the reserved area and from the reserved area to the unreserved area, respectively, and d is the death rate of the predator population. Let \(\boldsymbol{\tau}= (\tau_{1}\ \tau_{2} )^{T}\), \(\mathbf{c}= (c_{1}\ c_{2} )^{T}\). Our aim is to maximize the harvest of the prey and predator populations at the harvesting time T. Thus, the objective function is

Assume that the harvesting time for prey and predator populations is scheduled at \(T=30\). We will optimally select the state delays \(\tau_{1}\), \(\tau_{2}\) and the migration rates \(c_{1}\), \(c_{2}\) to obtain the maximum harvest J at the observation time in problem (31).

The auxiliary impulsive system for this problem is

with jump conditions

and boundary conditions

According to Theorems 1 and 2, the gradients of J with respect to \(\tau_{1}\), \(\tau_{2}\), \(c_{1}\), and \(c_{2}\) are

We now ask how to choose the times from birth to maturity \(\tau_{1}\), \(\tau_{2}\) and the migration rates \(c_{1}\), \(c_{2}\) optimally so as to obtain the highest population level at the harvesting time. For this purpose, we conduct the following numerical simulation.

In the same way, for problem (31), take

We solved this problem with the same Matlab program as in the previous example. Starting from the initial guesses \(\tau_{1}=1\), \(\tau_{2}=2\), \(c_{1}=0.3\), and \(c_{2}=0.4\), we obtained the optimal periods of maturity \(\tau_{1}^{*}= 3.241\), \(\tau_{2}^{*}=2.458\) and the optimal migration rates \(c_{1}^{*}=0.553\), \(c_{2}^{*}=0.411\), as well as the maximum yield \(J^{*}=4.272\). As expected, the maximum yield \(J^{*}\) is higher than the yield \(J=3.218\) obtained without the optimal control strategy. Here the initial values of the populations are taken to be \(\varphi_{1}(t)=2\), \(\varphi_{2}(t)=2\), \(\varphi_{3}(t)=3\). The bound constraints on the state delays and the migration rates are \(0<\tau_{1}, \tau_{2}\leq5\) and \(0< c_{1}, c_{2}\leq1\), respectively.

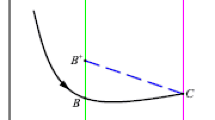

Figures 2(a)-(c) compare the dynamic behavior of the prey and predator populations under the optimal control \(\boldsymbol{\tau}^{*}= (3.241 \ 2.458 )^{T}\), \(\mathbf{c}^{*}= (0.553\ 0.411 )^{T}\) and under no optimal control, \(\boldsymbol{\tau}= (1\ 2 )^{T}\), \(\mathbf{c}= (0.3\ 0.4 )^{T}\). The prey populations in the unreserved area and the reserved area increase under the optimal control strategy. Figure 2(c) shows that the predator population also rises as time runs from the first day to the 30th day. The curves of the prey and predator populations in Figure 2(d) are consistent with these observations.

5 Conclusion

In pest control, the lag of parasitic eggs, the lag effect of pesticide poisoning, and the age of releasing natural enemies can be taken as control variables. Combined with the crop fertility cycle, this leads to optimization problems for pest control models at the seedling, bud, and filling stages of crops, and research on these problems fills a gap. To this end, a generalized hybrid optimization problem involving state delays with characteristic times and parameter control was presented. An algorithm based on gradient computation was then given. Finally, two examples from an agroecological system illustrated the effectiveness of the proposed optimization algorithm.

References

Apreutesei, NC: An optimal control problem for a prey predator system with a general functional response. Appl. Math. Lett. 22, 1062-1065 (2009)

Srinivasu, PDN, Prasad, BSRV: Time optimal control of an additional food provided predator-prey system with applications to pest management and biological conservation. J. Math. Biol. 60, 591-613 (2010)

Apreutesei, NC: An optimal control problem for a pest, predator, and plant system. Nonlinear Anal., Real World Appl. 13, 1391-1400 (2012)

Kar, TK, Ghosh, B: Sustainability and optimal control of an exploited prey predator system through provision of alternative food to predator. Biosystems 109, 220-232 (2012)

Bhattacharyya, S, Bhattacharya, DK: An improved integrated pest management model under 2-control parameters (sterile male and pesticide). Math. Biosci. 209, 256-281 (2007)

Bhattacharyya, S, Bhattacharya, DK: Pest control through viral disease: mathematical modeling and analysis. J. Theor. Biol. 238, 177-197 (2006)

Ghosh, S, Bhattacharya, DK: Optimization in microbial pest control: an integrated approach. Appl. Math. Model. 34, 1382-1395 (2010)

Li, R: Optimal Control Theory and Application of Impulsive and Switched System. The University of Electronic Science and Technology Press, Chengdu (2010) (in Chinese)

He, ZR, Liu, Y: An optimal birth control problem for a dynamical population model with size-structure. Nonlinear Anal., Real World Appl. 13, 1369-1378 (2012)

Luo, ZX, He, ZR: Optimal control for age-dependent population hybrid system in a polluted environment. Appl. Math. Comput. 228, 68-76 (2014)

Feng, EM, Xiu, ZL: Nonlinear Dynamic System of Fermentation Process - Identification, Control and Parallel Optimization. Science Press, Beijing (2012) (in Chinese)

Zhang, H, Chen, LS, Nieto, JJ: A delayed epidemic model with stage-structure and pulses for pest management strategy. Nonlinear Anal., Real World Appl. 9, 1714-1726 (2008)

Lv, YF, Yuan, R, Pei, YZ, Li, TT: Global stability of a competitive model with state-dependent delay. J. Dyn. Differ. Equ. (2015). doi:10.1007/s10884-015-9475-5

Acknowledgements

The authors thank the referees for their careful reading of the original manuscript and many valuable comments and suggestions, which greatly improved the presentation of this paper. This work was supported by National Natural Science Foundation of China (11471243, 11501409, 11501410).

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally to the manuscript and read and approved the final draft.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Chen, M., Pei, Y., Liang, X. et al. A hybrid optimization problem at characteristic times and its application in agroecological system. Adv Differ Equ 2016, 157 (2016). https://doi.org/10.1186/s13662-016-0856-9

DOI: https://doi.org/10.1186/s13662-016-0856-9