Abstract

Background

Protein-protein interactions (PPIs) are crucial in cellular processes. Since the current biological experimental techniques are time-consuming and expensive, and the results suffer from the problems of incompleteness and noise, developing computational methods and software tools to predict PPIs is necessary. Although several approaches have been proposed, the species supported are often limited and additional data like homologous interactions in other species, protein sequence and protein expression are often required. And predictive abilities of different features for different kinds of PPI data have not been studied.

Results

In this paper, we propose ppiPre, an open-source framework for PPI analysis and prediction using a combination of heterogeneous features including three GO-based semantic similarities, one KEGG-based co-pathway similarity and three topology-based similarities. It supports up to twenty species. Only the original PPI data and gold-standard PPI data are required from users. The experiments on binary and co-complex gold-standard yeast PPI data sets show that there exist big differences among the predictive abilities of different features on different kinds of PPI data sets. And the prediction performance on the two data sets shows that ppiPre is capable of handling PPI data in different kinds and sizes. ppiPre is implemented in the R language and is freely available on the CRAN (http://cran.r-project.org/web/packages/ppiPre/).

Conclusions

We applied our framework to both binary and co-complex gold-standard PPI data sets. The detailed analysis on three GO aspects suggests that different GO aspects should be used on different kinds of data sets, and that combining all the three aspects of GO often gets the best result. The analysis also shows that using only features based solely on the topology of the PPI network can get a very good result when predicting the co-complex PPI data. ppiPre provides useful functions for analysing PPI data and can be used to predict PPIs for multiple species.

Similar content being viewed by others

Background

Although different experimental methods [1, 2] have already generated a large amount of PPI for many model species in recent years [3], these existing PPI data are incomplete and contain many false positive interactions. In order to refine these PPI data, computational approaches are urgently needed.

Some recent researches have shown that PPIs can be integrated with other kinds of biological data in using supervised learning to predict PPIs [4–7]. In supervised learning, a classifier is trained using truly interacting protein pairs (positive samples) and protein pairs which are not interacting with each other (negative samples). Then the trained classifier is able to recover false negative interactions and remove false positive interactions from the PPIs input by users.

Existing studies are mainly differing in the selection of features used in the prediction framework. In these studies, different biological evidences are extracted and used as features training the classifier, including Gene Ontology (GO) functional annotations [8, 9], protein sequences [10] and co-expressed proteins [11]. For the organisms or proteins which are lack of research, biological features may don't work well, so features based on network topology are also needed to integrate [12–14].

Although some frameworks and tools have also been proposed for predicting PPIs [15–20], they have two disadvantages in general. First, most of the frameworks only support a few well studied model organisms. Second, these frameworks often need users to provide additional biological data along with the PPIs. Moreover, different species often require different features, which make these existing frameworks not very convenient to use.

In this paper, we describe ppiPre, an open-source framework for the PPI prediction problem. The framework is implemented in the R language so it can work together with other R packages dealing with biological data and network [21], which is different from other tools accessed via web services. ppiPre integrates features extracted from multiple heterogeneous data sources, including GO [22], KEGG [23] and topology of the PPI network. Users don't need to provide additional biological data other than gold-standard PPI data. ppiPre provides functions for measuring the similarity between proteins and for predicting PPIs from the existing PPI data.

Methods

Heterogeneous features are integrated in the prediction framework of ppiPre, including three GO-based semantic similarities, one KEGG-based similarity indicating the proteins are involved in the same pathways and three topology-based similarities using only the network structure of the PPI network.

We chose these three features to be integrated in our framework because they are highly available for the PPIs of different species and can be easily accessed in the R environment. Not like other methods and software tools, ppiPre did not integrate biological features that may not be available for the species or proteins which are not well studied, such as structural and domain information.

GO-based semantic similarities

Proteins are annotated by GO with terms from three aspects: biological process (BP), molecular function (MF), and cellular component (CC). Directed acyclic graphs (DAGs) are used to describe these aspects. It is known that interacting protein pairs are likely to be involved in similar biological processes or in similar cellular component compared to those non-interacting proteins [2, 24, 25]. Thus if two proteins are semantically similar based on GO annotation, the probability that they actually interact is higher than two proteins that are less similar.

Several similarity measures have been developed for evaluating the semantic similarity between two GO terms [26–28]. The information content (IC) of GO terms and the structure of the GO DAG are often used in these measures.

The IC of a term t can be defined as follows:

where p(t) is the probability of occurrence of the term t in a certain GO aspect. Two IC-based semantic similarity measures proposed recently are integrated in ppiPre, which are Topological Clustering Semantic Similarity (TCSS) [29] and IntelliGO [30].

TCSS

In TCSS, the GO DAGs are divided into subgraphs. A PPI is scored higher if the two proteins are in the same subgraph. The algorithm is made up of two major steps.

In the first step, a threshold on the ICs of all terms is used to generate multiple subgraphs. The roots of the subgraphs are the terms which are below the previously defined threshold. If roots of two subgraphs have similar IC values, these two subgraphs are merged. Overlapping subgraphs may occur because some GO terms have more than one parent terms. In order to remove overlap between subgraphs, edge removal and term duplication are processed. Transitive reduction of GO DAG is used to remove overlapping edges by generating the smallest graph that has the same transitive closure as the original subgraph. After edge removal, if a term is included in two or more subgraphs, it will be duplicated into each subgraph. More details are described in [29].

After the first step, a meta-graph is constructed by connecting all subgraphs. Then the second step called normalized scoring is processed. For two GO terms, normalized semantic similarity is calculated based on the meta-graph rather than the whole GO DAG so that more balanced semantic similarity scores can be obtained.

Using the frequency of proteins that are annotated to GO term t and its children, the information content of annotation (ICA) for a GO term t is:

where P t is the proteins that are annotated by t in aspect O and N(t) is the child terms of t.

The information content of subgraph (ICS) for term in the mth subgraph is defined as follows:

The information content of meta-graph (ICM) for a term in meta-graph Gm is defined as follows:

Finally, the similarity between two proteins i and j is defined as:

where LCA(s m ,t n ) is the common ancestor of the terms s m and t n with the highest IC. T i and T j are two sets of GO terms which annotate the two proteins i and j respectively.

IntelliGO

The IntelliGO similarity measure introduces a novel annotation vector space model. The coefficients of each GO term in the vector space consider complementary properties. The IC of a specific GO term and its evidence code (EC) [31] are used to assign this GO term to a protein. The coefficient α t given to term t is defined as follows:

where w(g, t) is the weight of the EC which indicates the annotation origin between protein g and GO term t, and IAF (Inverse Annotation Frequency) represents the frequency of term t occurred in all the proteins annotated in the aspect where t belongs.

For two proteins i and j, the IntelliGO uses their vectorial representation and to measure their similarity, which is defined as follows:

The detailed explanation of the definition can be found in [30].

Wang's method

The similarity measure proposed by Wang [32] is also implemented in the ppiPre package, which is based on the graph structure of GO DAG.

In the GO DAG, each edge has a type which is "is-a" or "part-of". In Wang's measure, a weight is given to each edge according to its type. DAG t = (t,T t ,E t ) represents the subgraph made up of term t and its ancestors, where T t is the set of the ancestor terms of t and E t is the set of edges in DAG t .

In DAG t , S t (n) measures the semantic contribution of term n to term t, which is defined as:

The similarity between two GO term m and term n is defined as:

where SV(m) is the sum of the semantic contribution of all the terms in DAG m .

The semantic similarity between two proteins i and j is defined as the maximum value of all the similarity between any term that annotate i and any term that annotate j.

KEGG-based similarity

Proteins that work together in the same KEGG pathway are likely to interact[33][34]. The KEGG-based similarity between proteins i and j is calculated using the co-pathway membership information in KEGG. The similarity is defined as:

where P(i) is the set of pathways which protein i involved in the KEGG database.

Topology-based similarities

In order to deal with the proteins that haven't got any annotations in GO or KEGG database, topology-based similarity measures are also integrated. In ppiPre, three different topological similarities are implemented.

The Jaccard similarity [35] between two proteins i and j is defined as:

where N(i) is set of all the direct neighbours of protein i in PPI network.

Adamic-Adar(AA) similarity [36] punishes the proteins with high degree by assigning more weights to the nodes with low degree in PPI network. The AA similarity between two proteins i and j is defined as:

where k n is the degree of protein n.

Resource Allocation (RA) similarity [37] is similar to AA similarity and considers the common neighbours of two nodes as resource transmitters. The RA similarity between two proteins i and j is defined as:

Prediction framework

The data of interacting protein pairs verified by experiments are very incomplete and the non-interacting protein pairs far outnumber interacting protein pairs. So the classical SVM [38] which is able to handle small and unbalanced data is chosen to integrate different features in ppiPre. We have tested different kernels in e1071 and the results showed no significant difference, so the default kernel and parameters are used in ppiPre.

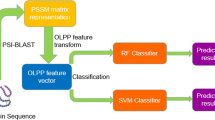

The prediction framework of ppiPre is presented in Figure 1. Heterogeneous features are calculated for the gold-standard PPI data set which is given by users, and the SVM classifier is trained by the gold-standard positive and negative data set (solid arrows). After the classifier is trained, the features are calculated from the query PPIs input by users, and the trained classifier can predict false positive and false negative PPIs from the input data (hollow arrows).

Results and discussion

Since all the features are calculated within the package, users don't need to provide additional biological data for different species. When users use ppiPre to predict the PPIs, they only need to provide both the gold-standard positive and negative training set and the test set. In this paper, we test the performance of ppiPre in yeast using two yeast gold-standard positive data sets which are a high quality binary data set provided by Yu's research [39] and the MIPS data set [40]. Self-interactions and duplicate interactions were removed previously. The detail of the two gold-standard data sets is shown in Table 1.

Non-interacting pairs were randomly selected from the proteins in gold-standard positive data sets as gold-standard negative data sets. The positive and negative data sets are set to the same size. In order to minimize the impact to the topological characteristics of the PPI network, the degree of each protein was maintained.

10-fold cross validation was used to evaluate the performance of the prediction framework.

Predictive abilities of GO-based similarities

First, the predictive abilities of the three aspects of GO on different data sets were evaluated. We analysed the prediction performance using only one of the BP, MF and CC aspects. The receiver operating characteristic (ROC) curves are shown in Figure 2 and Figure 3. In order to assess these results quantitatively, the area under the ROC curve (AUC) of each ROC curve was calculated. The result is shown in Table 2.

ROC curves for binary data set using single GO aspect. ROC evaluations of three GO aspects with three semantic similarity measures on the binary PPI data set are shown. The evaluation was performed using only one GO aspect at a time. BP shows the overall best predictive abilities in three aspects in GO.

ROC curves for co-complex data set using single GO aspect. ROC evaluations of three GO aspects with three semantic similarity measures on the MIPS co-complex data set are shown. The evaluation was performed using only one GO aspect at a time. CC shows the overall best predictive abilities in three aspects of GO.

For the binary data set, the BP aspect shows the best performance among all three aspects in ROC analysis of three GO-based semantic similarities (Figure 2, Table 2). This result is expected. The BP aspect is related to protein interaction and thus can be used to predict them.

For the co-complex data set, the CC aspect shows the best performance in ROC analysis of three GO-based semantic similarities (Figure 3, Table 2). Since the MIPS data set is composed of protein complexes, and a protein complex can only be formed if its proteins are localized within the same compartment of the cell, terms in the CC aspect correctly reflect the functional grouping of proteins in these complexes.

We then analysed the prediction performance using a combination of GO aspects. The ROC curves of a combination of two aspects are shown in Figure 4 and Figure 5. The ROC curves of combination three aspects are shown in Figure 6. The AUCs of the ROC curves are shown in Table 3. The results show that by combing more than one GO aspect, our method could get a better prediction performance than using a single aspect for both binary data set and co-complex data set. And the overall best performance was achieved by combing all the three GO aspects. So it is necessary to integrate all the three GO aspects in the prediction framework.

ROC curves for binary data set using two GO aspects. ROC evaluations of the combination of two GO aspects with three semantic similarity measures on the binary PPI data set are shown. The evaluation was performed using two of the three GO aspects at a time. In general, the prediction performance is better than that using one aspect.

ROC curves for co-complex data set using two GO aspects. ROC evaluations of the combination of two GO aspects with three semantic similarity measures on the MIPS co-complex PPI data set are shown. The evaluation was performed using two of the three GO aspects at a time. In general, the prediction performance is better than that using one aspect.

Predictive abilities of KEGG-based and topological similarities

Then, the predictive abilities of KEGG-based similarity and three topological similarities were evaluated. For binary and co-complex data sets, the performance of KEGG-based similarity shows no big difference (Figure 7, Table 4). On the contrary, three topological similarities work perfectly for co-complex data set, but show only modest effects for binary data set. This is because the MIPS co-complex data set is composed of multi-protein complexes, and the interacting pairs are all in the same complex. The co-complex data set represents several unconnected subgraphs in the corresponding PPI network, meaning that two proteins from different complexes had no common neighbours in the PPI network. So the topological similarities of two proteins from two different complexes are zero while topological similarities of two proteins from the same complexes are not.

ROC curves using KEGG-based and topological features. ROC evaluations of the KEGG-based similarity (KEGG), Jaccard similarity (Jaccard), Adamic-Adar similarity (AA) and Resource Allocation similarity (RA) on the binary and co-complex PPI data sets are shown. The result shows that topological similarities work very well for the co-complex data set.

Integration of biological and topological similarities

After analysing biological and topological features separately, we integrated these heterogeneous features together.

The ROC curves of two kinds of PPI data sets using GO-based, KEGG-based and topological similarities are shown in Figure 8. The AUC of binary and co-complex PPI data sets are 0.958 and 0.999.

ROC curves using a combination of GO-based, KEGG-based and topological features. ROC evaluations of the integration of GO-based, KEGG-based and topological similarity measures on the binary and co-complex PPI data sets are shown. The result shows that integrating heterogeneous features can improve the prediction performance.

The result shows that integrating biological and topological similarities can improve the prediction performance. So, it's necessary to integrate heterogeneous features together when dealing with the PPI prediction problem. All the features are integrated in ppiPre.

For proteins with unknown annotations in GO and KEGG, the GO-based and KEGG-based similarity measures cannot work. But the impact on these two data sets can be ignored since interactions without annotations are only 2 in the binary data set (0.19%) and 16 in MIPS data set (1.84%). However, when ppiPre is used on a large amount of proteins that are poorly annotated in GO, users should consider that the performance of ppiPre may be hampered under such situation.

Implementation and usage

The current version of ppiPre supports 20 species. The detail of the species supported and IC data used in GO-based semantic similarities are described in [41]. The annotation data of GO and KEGG are got from the packages GO.db and KEGG.db.

ppiPre has been submitted to CRAN (Comprehensive R Archive Network) and can be installed and loaded easily in the R environment. ppiPre provides functions for calculating similarities and predicting PPIs. A summary of the functions available is shown in Table 5. Detailed descriptions and examples for all the functions are contained in the manual provided within ppiPre.

Conclusions

An open-source framework ppiPre for PPI prediction is proposed in this paper. Several heterogeneous features are combined in ppiPre, including three GO-based similarities, one KEGG-based similarity and three topology-based similarities. To make the prediction, users don't need to provide additional biological data other than gold-standard PPI data.

ppiPre can be integrated into existing bioinformatics analysis pipelines in the R environment. Other features will be evaluated and integrated in future work, and the framework will be tested on PPI data of more species especially those poorly annotated in GO.

References

Gavin A-C, Bosche M, Krause R, Grandi P, Marzioch M, Bauer A, Schultz J, Rick JM, Michon A-M, Cruciat C-M, Remor M, Hofert C, Schelder M, Brajenovic M, Ruffner H, Merino A, Klein K, Hudak M, Dickson D, Rudi T, Gnau V, Bauch A, Bastuck S, Huhse B, Leutwein C, Heurtier M-A, Copley RR, Edelmann A, Querfurth E, Rybin V: Functional organization of the yeast proteome by systematic analysis of protein complexes. Nature. 2002, 415: 141-147. 10.1038/415141a.

Uetz P, Giot L, Cagney G, Mansfield TA, Judson RS, Knight JR, Lockshon D, Narayan V, Srinivasan M, Pochart P, Qureshi-Emili A, Li Y, Godwin B, Conover D, Kalbfleisch T, Vijayadamodar G, Yang M, Johnston M, Fields S, Rothberg JM: A comprehensive analysis of protein-protein interactions in Saccharomyces cerevisiae. Nature. 2000, 403: 623-627. 10.1038/35001009.

De Las Rivas J, Fontanillo C: Protein-Protein Interactions Essentials: Key Concepts to Building and Analyzing Interactome Networks. PLoS Comput Biol. 2010, 6: e1000807-10.1371/journal.pcbi.1000807.

Ben-Hur A, Noble WS: Kernel methods for predicting protein-protein interactions. Bioinformatics. 2005, 21: i38-46. 10.1093/bioinformatics/bti1016.

Chen X-W, Liu M: Prediction of protein-protein interactions using random decision forest framework. Bioinformatics. 2005, 21: 4394-4400. 10.1093/bioinformatics/bti721.

Patil A, Nakamura H: Filtering high-throughput protein-protein interaction data using a combination of genomic features. BMC Bioinformatics. 2005, 6: 100-10.1186/1471-2105-6-100.

Lin X, Liu M, Chen X: Assessing reliability of protein-protein interactions by integrative analysis of data in model organisms. BMC Bioinformatics. 2009, 10 (Suppl 4): S5-10.1186/1471-2105-10-S4-S5.

Mahdavi M, Lin Y-H: False positive reduction in protein-protein interaction predictions using gene ontology annotations. BMC Bioinformatics. 2007, 8: 262-10.1186/1471-2105-8-262.

Kuchaiev O, Rašajski M, Higham DJ, Pržulj N: Geometric De-noising of Protein-Protein Interaction Networks. PLoS Comput Biol. 2009, 5:

Wang C, Cheng J, Su S: Prediction of Interacting Protein Pairs from Sequence Using a Bayesian Method. The Protein Journal. 2009, 28: 111-115. 10.1007/s10930-009-9170-7.

Qi Y, Klein-Seetharaman J, Bar-Joseph Z: A mixture of feature experts approach for protein-protein interaction prediction. BMC Bioinformatics. 2007, 8 (Suppl 10): S6-10.1186/1471-2105-8-S10-S6.

Lü L, Zhou T: Link prediction in complex networks: A survey. Physica A: Statistical Mechanics and its Applications. 2011, 390: 1150-1170. 10.1016/j.physa.2010.11.027.

Guimerà R, Sales-Pardo M: Missing and spurious interactions and the reconstruction of complex networks. Proceedings of the National Academy of Sciences. 2009, 106: 22073-22078. 10.1073/pnas.0908366106.

Chua HN, Ning K, Sung W-K, Leong HW, Wong L: Using indirect protein-protein interactions for protein complex prediction. J Bioinform Comput Biol. 2008, 6: 435-466. 10.1142/S0219720008003497.

Kim S, Shin S-Y, Lee I-H, Kim S-J, Sriram R, Zhang B-T: PIE: an online prediction system for protein-protein interactions from text. Nucleic Acids Research. 2008, 36 (Web Server): W411-W415. 10.1093/nar/gkn281.

Guo Y, Li M, Pu X, Li G, Guang X, Xiong W, Li J: PRED_PPI: a server for predicting protein-protein interactions based on sequence data with probability assignment. BMC Research Notes. 2010, 3: 145-10.1186/1756-0500-3-145.

Li D, Liu W, Liu Z, Wang J, Liu Q, Zhu Y, He F: PRINCESS, a Protein Interaction Confidence Evaluation System with Multiple Data Sources. Mol Cell Proteomics. 2008, 7: 1043-1052. 10.1074/mcp.M700287-MCP200.

Michaut M, Kerrien S, Montecchi-Palazzi L, Chauvat F, Cassier-Chauvat C, Aude J-C, Legrain P, Hermjakob H: InteroPORC: Automated Inference of Highly Conserved Protein Interaction Networks. Bioinformatics. 2008, 24: 1625-1631. 10.1093/bioinformatics/btn249.

Pitre S, Dehne F, Chan A, Cheetham J, Duong A, Emili A, Gebbia M, Greenblatt J, Jessulat M, Krogan N, Luo X, Golshani A: PIPE: a protein-protein interaction prediction engine based on the re-occurring short polypeptide sequences between known interacting protein pairs. BMC Bioinformatics. 2006, 7: 365-10.1186/1471-2105-7-365.

McDowall MD, Scott MS, Barton GJ: PIPs: human protein-protein interaction prediction database. Nucleic Acids Research. 2009, 37 (Database): D651-D656. 10.1093/nar/gkn870.

Csárdi G, Nepusz T: The igraph software package for complex network research. InterJournal Complex Systems. 2006, 1695:

Ashburner M, Ball CA, Blake JA, Botstein D, Butler H, Cherry JM, Davis AP, Dolinski K, Dwight SS, Eppig JT, Harris MA, Hill DP, Issel-Tarver L, Kasarskis A, Lewis S, Matese JC, Richardson JE, Ringwald M, Rubin GM, Sherlock G: Gene Ontology: tool for the unification of biology. Nat Genet. 2000, 25: 25-29. 10.1038/75556.

Kanehisa M, Goto S: KEGG: Kyoto Encyclopedia of Genes and Genomes. Nucleic Acids Research. 2000, 28: 27-30. 10.1093/nar/28.1.27.

Lehner B, Fraser AG: A first-draft human protein-interaction map. Genome Biology. 2004, 5: R63-10.1186/gb-2004-5-9-r63.

Jansen R: A Bayesian Networks Approach for Predicting Protein-Protein Interactions from Genomic Data. Science. 2003, 302: 449-453. 10.1126/science.1087361.

Resnik P: Using Information Content to Evaluate Semantic Similarity in a Taxonomy. IJCAI. 1995, 448-453.

Jiang J, Conrath D: Semantic Similarity Based on Corpus Statistics and Lexical Taxonomy. International Conference Research on Computational Linguistics (ROCLING X). 1997, 9008-

Lord PW, Stevens RD, Brass A, Goble CA: Semantic similarity measures as tools for exploring the gene ontology. Pac Symp Biocomput. 2003, 601-612.

Jain S, Bader G: An improved method for scoring protein-protein interactions using semantic similarity within the gene ontology. BMC Bioinformatics. 2010, 11: 562-10.1186/1471-2105-11-562.

Benabderrahmane S, Smail-Tabbone M, Poch O, Napoli A, Devignes M-D: IntelliGO: a new vector-based semantic similarity measure including annotation origin. BMC Bioinformatics. 2010, 11: 588-10.1186/1471-2105-11-588.

Rogers MF, Ben-Hur A: The use of gene ontology evidence codes in preventing classifier assessment bias. Bioinformatics. 2009, 25: 1173-1177. 10.1093/bioinformatics/btp122.

Wang JZ, Du Z, Payattakool R, Yu PS, Chen C-F: A new method to measure the semantic similarity of GO terms. Bioinformatics. 2007, 23: 1274-1281. 10.1093/bioinformatics/btm087.

Qi Y, Bar-Joseph Z, Klein-Seetharaman J: Evaluation of different biological data and computational classification methods for use in protein interaction prediction. Proteins. 2006, 63: 490-500. 10.1002/prot.20865.

van Noort V, Snel B, Huynen MA: Exploration of the omics evidence landscape: adding qualitative labels to predicted protein-protein interactions. Genome Biology. 2007, 8: R197-10.1186/gb-2007-8-9-r197.

Jaccard P: Étude comparative de la distribution florale dans une portion des Alpes et des Jura. Bull Soc Vaud Sci Nat. 1901, 37: 541-

Adamic LA, Adar E: Friends and neighbors on the Web. Social Networks. 2003, 25: 211-230. 10.1016/S0378-8733(03)00009-1.

Zhou T, Lü L, Zhang Y-C: Predicting missing links via local information. The European Physical Journal B - Condensed Matter and Complex Systems. 2009, 71: 623-630. 10.1140/epjb/e2009-00335-8.

Vapnik VN: The Nature of Statistical Learning Theory. 2000, Springer

Yu H, Braun P, Yildirim MA, Lemmens I, Venkatesan K, Sahalie J, Hirozane-Kishikawa T, Gebreab F, Li N, Simonis N, Hao T, Rual J-F, Dricot A, Vazquez A, Murray RR, Simon C, Tardivo L, Tam S, Svrzikapa N, Fan C, de Smet A-S, Motyl A, Hudson ME, Park J, Xin X, Cusick ME, Moore T, Boone C, Snyder M, Roth FP: High-Quality Binary Protein Interaction Map of the Yeast Interactome Network. Science. 2008, 322: 104-110. 10.1126/science.1158684.

Yu H, Luscombe NM, Lu HX, Zhu X, Xia Y, Han J-DJ, Bertin N, Chung S, Vidal M, Gerstein M: Annotation Transfer Between Genomes: Protein-Protein Interologs and Protein-DNA Regulogs. Genome Research. 2004, 14: 1107-1118. 10.1101/gr.1774904.

Deng Y, Gao L: ppiPre - an R package for predicting protein-protein interactions. 2012 IEEE 6th International Conference on Systems Biology (ISB). 2012, 333-337.

Acknowledgements

We thank the anonymous reviewers for many helpful suggestions and comments. We thank Dr. Yong Wang of AMSS, CAS for insightful discussions. We also thank Rongjie Shao and Gang Wang of Xidian University for technical support. A preliminary version of this paper was published in the proceedings of IEEE ISB2012 [41].

Declarations

The publication of this article was funded by the NSFC (91130006, 60933009, 61202174) and the Fundamental Research Funds for the Central Universities (K5051223003, K5051223005).

This article has been published as part of BMC Systems Biology Volume 7 Supplement 2, 2013: Selected articles from The 6th International Conference of Computational Biology. The full contents of the supplement are available online at http://www.biomedcentral.com/bmcsystbiol/supplements/7/S2.

Author information

Authors and Affiliations

Corresponding author

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors' contributions

LG conceived of the study, supervised the project and revised the manuscript. YD implemented the framework, performed the experiments, and drafted the manuscript. BW participated in the data analysis. All authors read and approved the final manuscript.

An erratum to this article is available at http://dx.doi.org/10.1186/s12918-015-0196-5.

Rights and permissions

This article is published under an open access license. Please check the 'Copyright Information' section either on this page or in the PDF for details of this license and what re-use is permitted. If your intended use exceeds what is permitted by the license or if you are unable to locate the licence and re-use information, please contact the Rights and Permissions team.

About this article

Cite this article

Deng, Y., Gao, L. & Wang, B. ppiPre: predicting protein-protein interactions by combining heterogeneous features. BMC Syst Biol 7 (Suppl 2), S8 (2013). https://doi.org/10.1186/1752-0509-7-S2-S8

Published:

DOI: https://doi.org/10.1186/1752-0509-7-S2-S8